What Is ModelOps and How Does It Compare to MLOps

In today’s digital world, artificial intelligence (AI) and machine learning (ML) are transforming industries such as healthcare, finance, retail, and manufacturing. Despite the hype, many organizations still struggle to operationalize their AI initiatives. Because deploying and maintaining models manually is difficult, many of these initiatives never make it to production.

Data scientists and ML engineers have tools to build and train AI models, but those models deliver value only once they are deployed in production to address real-world use cases. Hence, we need a framework or methodology that reduces manual effort and streamlines the deployment of ML models. That framework is ModelOps.

This article provides an in-depth examination of ModelOps, covering its key components, capabilities, benefits, challenges, and practical applications.

Understanding ModelOps

ModelOps (Model Operations) is the practice or discipline for managing, deploying, monitoring, and governing AI and ML models across production environments in a consistent, automated, and compliant manner.

ModelOps ensures that models are not only accurate but also operationally explainable, efficient, and auditable. In contrast to MLOps (Machine Learning Operations), which focuses mainly on automating ML workflows and integrating data pipelines with model training and deployment, ModelOps has a broader scope as it extends beyond ML to include all types of analytical models.

ModelOps encompasses statistical, optimization, business rules, and AI models, emphasizing governance, lifecycle management, and business alignment. ModelOps can be regarded as the operational backbone of AI governance and scalability.

Key aspects of ModelOps

Here are the key aspects of ModelOps you should keep in mind:

- Broader Scope: ModelOps covers a wider range of AI and decision models, including rule-based engines, optimization models, and knowledge graphs. This is in contrast to MLOps, which is specific to ML models.

- End-to-End Lifecycle Management: It encompasses the complete journey of a model, from its creation and validation to its deployment, continuous monitoring, and ongoing improvement.

- Governance and Compliance: ModelOps strongly emphasizes governance and compliance, ensuring models comply with regulatory requirements, maintain version control, and remain explainable.

- Operationalization: ModelOps aims to quickly and efficiently move models from the development “lab” to a live production environment and scale them as needed.

- Continuous Improvement: ModelOps incorporates feedback loops and performance monitoring to enable continuous improvement, including updating (retraining) models with new data.

- Collaboration: It encourages collaboration among data scientists, ML engineers, and IT teams to ensure models are operationalized more quickly and efficiently.

Why Do We Need ModelOps?

As organizations scale their use of AI, the number of models in use grows rapidly. Without a structured framework to monitor and manage them, organizations may face:

- Model Sprawl: Hundreds of models may accumulate with unclear ownership or tracking.

- Performance Degradation: Models can “drift” as data changes over time, so their accuracy degrades.

- Regulatory Risks: There is a risk of non-compliance with data privacy, fairness, or explainability standards.

- Operational Barriers: Collaboration between data scientists, IT teams, and business leaders may break down.

ModelOps addresses these problems by standardizing model lifecycle management, ensuring models are:

- Validated before deployment.

- Monitored for ongoing performance.

- Updated or retrained as data evolves.

- Audited for compliance and transparency.

With ModelOps, experimental AI projects can be turned into trusted, production-grade systems that deliver measurable business value.

ModelOps vs. MLOps vs. DevOps

These three “Ops” terms are often used interchangeably, but each framework serves a distinct purpose. Here are the key differences between the three:

| Feature | ModelOps | MLOps | DevOps |
|---|---|---|---|
| Primary Focus | Governance, deployment, and lifecycle management of all AI and decision models (ML, statistical, rule-based, etc.). | ML model training, testing, and deployment. | General software application development, deployment, and IT operations. |
| Goal | Enterprise-wide AI governance, management, and transparency. | High-quality, scalable, and continuously monitored ML models in production. | Rapid, automated, and reliable software deployment. |
| Scope | The entire spectrum of AI and decision models across business processes. | ML models and the associated data science workflow. | Software code and infrastructure. |
| Primary Stakeholders | Data scientists, IT, business, and compliance teams. | Data scientists, ML engineers. | Developers, IT, Operations. |
| Lifecycle Management | Focuses on the entire lifecycle of all models, with an emphasis on governance and management from development to deployment. | Focuses on the end-to-end ML lifecycle: data gathering, model training, deployment, and monitoring. | Focuses on the continuous loop of code integration, testing, and deployment. |
| Key Differentiator | Provides a broader governance and management framework for all models, not just ML, at an enterprise level. | Addresses the unique issue of ML model performance degradation over time due to changes in the data. | Monitors and maintains the performance and reliability of code. |
| Example | Managing and monitoring an entire suite of AI models used for risk assessment, fraud detection, and quality control across an enterprise. | Deploying and monitoring a recommendation engine for an e-commerce site. | Automating the deployment of an e-commerce website’s updates. |

- DevOps automates software delivery.

- MLOps automates ML pipelines.

- ModelOps governs and operationalizes all models to deliver consistent business outcomes.

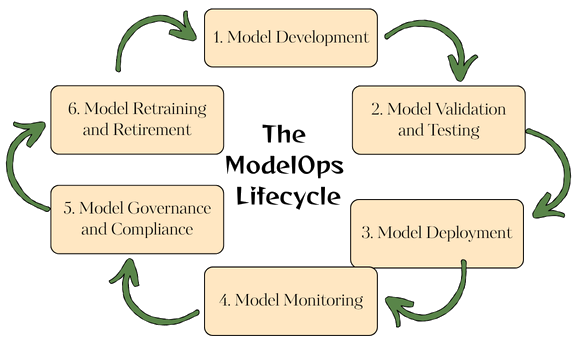

The ModelOps Lifecycle

ModelOps introduces a structured, end-to-end lifecycle for operationalizing analytical and AI models, from model creation to retirement. Its key phases include data collection, model development, testing, deployment, and continuous monitoring, which together help ensure model quality, compliance, and performance over time.

The following sequence of steps describes the ModelOps lifecycle:

1. Model Development

This phase involves collecting and preparing data, performing feature engineering, training the model, and evaluating its initial performance. In the model development phase, data scientists and analysts build models using different tools and frameworks, from Python-based ML models to R or SAS statistical models. ModelOps ensures version control, reproducibility, and standardized documentation in this phase.

2. Model Validation and Testing

The model validation and testing phase ensures the model performs as expected under real-world conditions before it is deployed. Checks in this phase typically cover:

- Accuracy and bias

- Data drift

- Compliance with policies

- Technical integration readiness
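
The accuracy and bias checks in this phase can be sketched as a simple pre-deployment gate. This is a minimal illustration, not a standard ModelOps API: the function names, the `ValidationReport` type, and both thresholds are assumptions made for the example, and the bias check uses the gap in positive-prediction rate between groups as one possible fairness measure.

```python
from dataclasses import dataclass


@dataclass
class ValidationReport:
    accuracy: float
    bias_gap: float
    passed: bool
    reasons: list


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def positive_rate_gap(y_pred, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    totals, positives = {}, {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def validate(y_true, y_pred, groups, min_accuracy=0.8, max_bias_gap=0.2):
    """Gate a model before deployment; both thresholds are illustrative."""
    acc = accuracy(y_true, y_pred)
    gap = positive_rate_gap(y_pred, groups)
    reasons = []
    if acc < min_accuracy:
        reasons.append(f"accuracy {acc:.2f} below {min_accuracy}")
    if gap > max_bias_gap:
        reasons.append(f"positive-rate gap {gap:.2f} above {max_bias_gap}")
    return ValidationReport(acc, gap, not reasons, reasons)


if __name__ == "__main__":
    labels = [1, 0, 1, 0, 1, 0, 1, 0]
    preds = [1, 0, 1, 0, 1, 0, 1, 1]
    segment = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(validate(labels, preds, segment))
```

In a real pipeline, a failed report would block promotion to production and the reasons would be stored alongside the model version for later audit.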

3. Model Deployment

The trained model is moved from the development environment to a production environment. Deployment can occur in various environments, including on-premises, in the cloud, or on edge devices. ModelOps platforms provide automated deployment pipelines to simplify and standardize this process, reducing manual errors and delays.

4. Model Monitoring

Once deployed, the model is continuously tracked on:

- Model performance metrics (accuracy, precision, recall)

- Data quality

- Prediction drift

- Resource utilization

Real-time alerts notify teams when a model deviates from expected behavior, for example through performance degradation, data drift, or potential bias.
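
As a rough sketch of how performance metrics feed alerts, the snippet below computes accuracy, precision, and recall for a binary classifier from a batch of labeled predictions and reports which metrics fall below a minimum. The function names and threshold values are assumptions for illustration; a production setup would typically rely on a monitoring platform rather than hand-written checks.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def check_alerts(metrics, thresholds):
    """Return the names of metrics that fell below their minimum, sorted."""
    return sorted(
        name for name, minimum in thresholds.items() if metrics[name] < minimum
    )


if __name__ == "__main__":
    labels = [1, 1, 1, 0, 0, 0, 1, 0]
    preds = [1, 0, 0, 0, 0, 1, 1, 0]
    metrics = classification_metrics(labels, preds)
    # Hypothetical minimums agreed with the model owner.
    minimums = {"accuracy": 0.7, "precision": 0.6, "recall": 0.6}
    print(metrics)
    print("alerts:", check_alerts(metrics, minimums))
```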

5. Model Governance and Compliance

ModelOps enforces governance rules and policies by maintaining model lineage, audit trails, and documentation to ensure compliance with regulations. This ensures traceability, a key requirement for regulated industries, including banking, healthcare, and insurance.

6. Model Retraining and Retirement

When monitoring indicates performance degradation, the model is retrained with new data or replaced with a new version. ModelOps automates retraining workflows, version updates, and model rollbacks. When a model becomes obsolete, it is retired systematically, with continuity and documentation preserved.
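
One way to picture the lifecycle described above is as a small state machine that only permits sensible transitions. The stage names and the transition table below are an illustrative reading of the six phases, not a formal ModelOps specification; monitoring is treated as ongoing while a model is deployed, and governance as a cross-cutting concern recorded here through the transition history.

```python
from enum import Enum


class Stage(Enum):
    DEVELOPMENT = "development"
    VALIDATION = "validation"
    DEPLOYED = "deployed"      # monitored continuously while in this stage
    RETRAINING = "retraining"
    RETIRED = "retired"


# Allowed transitions (an assumption made for this sketch).
ALLOWED = {
    Stage.DEVELOPMENT: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.DEVELOPMENT, Stage.DEPLOYED},
    Stage.DEPLOYED: {Stage.RETRAINING, Stage.RETIRED},
    Stage.RETRAINING: {Stage.VALIDATION, Stage.RETIRED},
    Stage.RETIRED: set(),
}


class ModelLifecycle:
    """Tracks the current stage of one model and keeps a transition history."""

    def __init__(self, name):
        self.name = name
        self.stage = Stage.DEVELOPMENT
        self.history = [Stage.DEVELOPMENT]

    def move_to(self, new_stage):
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(
                f"{self.name}: cannot move from {self.stage.value} "
                f"to {new_stage.value}"
            )
        self.stage = new_stage
        self.history.append(new_stage)


if __name__ == "__main__":
    model = ModelLifecycle("credit-risk")  # hypothetical model name
    for stage in (Stage.VALIDATION, Stage.DEPLOYED, Stage.RETRAINING,
                  Stage.VALIDATION, Stage.DEPLOYED, Stage.RETIRED):
        model.move_to(stage)
    print([s.value for s in model.history])
```

Forcing a retrained model back through validation before redeployment mirrors the idea that every version, not just the first, is validated before it reaches production.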

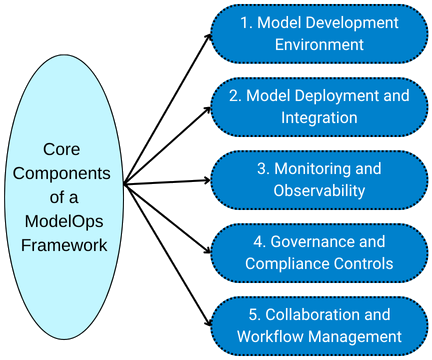

Core Components of a ModelOps Framework

A ModelOps framework comprises several core components that manage the end-to-end lifecycle of AI and decision models. The essential building blocks of ModelOps include:

1. Model Development Environment

Before a model is developed, its dataset must be prepared. This component therefore covers the tools and practices for data collection, preparation, feature engineering, model training, and validation.

A centralized repository is maintained to store model artifacts, metadata, and version history. This enables easy tracking, collaboration, and rollback capabilities.

- Data and Feature Management: Processes and tools for data versioning, quality checks, and creating reusable features (feature stores).

- Experiment Tracking: Tracking model versions, hyperparameters, code changes, and performance metrics to ensure reproducibility and comparison across experiments.
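
The repository and experiment-tracking ideas above can be illustrated with a toy in-memory registry that stores each version's hyperparameters and metrics and supports rollback. The `ModelRegistry` class and its methods are hypothetical names for this sketch; real deployments would normally use a dedicated registry or tracking service with persistent storage.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    version: int
    params: dict
    metrics: dict


class ModelRegistry:
    """Toy in-memory registry: version history, active version, rollback."""

    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion
        self._active = {}    # model name -> active version number

    def register(self, name, params, metrics):
        """Store a new version and make it the active one."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, dict(params), dict(metrics))
        versions.append(entry)
        self._active[name] = entry.version
        return entry

    def active(self, name):
        return self._versions[name][self._active[name] - 1]

    def rollback(self, name):
        """Reactivate the previous version, if one exists."""
        if self._active[name] <= 1:
            raise ValueError(f"{name}: no earlier version to roll back to")
        self._active[name] -= 1
        return self.active(name)

    def best(self, name, metric):
        """Compare experiments: the version with the highest value of metric."""
        return max(self._versions[name], key=lambda v: v.metrics[metric])


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("fraud", {"max_depth": 4}, {"auc": 0.91})
    registry.register("fraud", {"max_depth": 8}, {"auc": 0.89})
    print("active:", registry.active("fraud").version)
    print("best by auc:", registry.best("fraud", "auc").version)
    print("after rollback:", registry.rollback("fraud").version)
```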

2. Model Deployment and Integration

- Automation/CI/CD: Continuous integration/continuous delivery (CI/CD) pipelines are used to automate testing, packaging (e.g., containerization), and deployment of models and associated code.

- Model Serving: The model is exposed for predictions, often through low-latency REST APIs, with support for serving strategies such as online or batch inference.

- Scalability and Infrastructure: The underlying infrastructure, whether cloud-based, on-premises, or edge-based, handles varying workloads and scales automatically in response to demand.

3. Monitoring and Observability

Monitoring is one of the most critical aspects of ModelOps because it directly affects the model’s long-term performance.

A deployed model is continuously tracked to ensure sustained performance and value.

- Performance Monitoring: Tracking technical metrics (e.g., latency, resource use) and business KPIs.

- Drift Detection: Monitoring the model for data drift (changes in input data patterns) and concept drift (changes in the relationship between input and output variables) that can degrade model performance.

- Alerting and Automated Response: Setting up alerts for performance degradation or anomalies can trigger automated actions, such as rolling back to a previous version or initiating a retraining workflow.

- Retraining and Updating: A systematic process for updating or retraining models with new data to adapt to changing conditions.
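
Data drift on a single numeric feature can be quantified in several ways. The sketch below uses the Population Stability Index (PSI), which is one common choice and not something the lifecycle above prescribes. It bins the baseline (training-time) values, then compares the share of production values falling into each bin. The bin count, the `eps` floor for empty bins, and the sample data are assumptions; a frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but teams should set their own thresholds. Note that PSI only looks at input distributions, so it does not detect concept drift.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all baseline values identical; avoid division by zero

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = list(range(100))
    shifted = [v + 50 for v in baseline]
    print("no drift:", population_stability_index(baseline, baseline))
    print("shifted: ", population_stability_index(baseline, shifted))
```

A value above the chosen threshold could raise an alert or start the retraining workflow described in the list above.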

4. Governance and Compliance Controls

- Centralized Model Inventory/Catalog: A central repository to manage metadata, ownership, risk scores, and documentation for all models across the organization.

- Audit Trails and Versioning: Maintaining clear records of model lineage, approvals, and changes for auditability and risk management.

- Risk and Bias Monitoring: Ongoing evaluation of models for fairness, bias, and robustness to identify and mitigate potential risks.
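
To make the audit-trail idea concrete, here is a minimal sketch of an append-only log in which each entry stores the hash of the previous one, so later tampering is detectable. This hash-chaining design is an illustrative choice rather than a requirement of any regulation named in this article, and the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json

FIELDS = ("model", "action", "actor", "prev")


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log; each entry is chained to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, model, action, actor):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"model": model, "action": action, "actor": actor,
                "prev": prev_hash}
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {key: entry[key] for key in FIELDS}
            if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("credit-risk:v3", "approved", "risk-officer")
    trail.record("credit-risk:v3", "deployed", "ml-platform")
    print("intact:", trail.verify())
    trail.entries[0]["actor"] = "someone-else"  # simulate tampering
    print("after tampering:", trail.verify())
```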

5. Collaboration and Workflow Management

Effective ModelOps requires seamless collaboration among multiple teams, including data science, IT, DevOps, and business. It bridges the gap between data scientists, ML engineers, IT operations, and business stakeholders, which in turn depends on strong communication and a shared understanding of objectives and processes.

Benefits of ModelOps

- Faster Time to Market: ModelOps uses automated deployment and validation pipelines to remove bottlenecks, which can shorten the transition from development to production from months to days. This enables organizations to realize business value more quickly.

- Improved Model Performance: Models can degrade over time due to concept or data drift. ModelOps provides continuous, automated monitoring to track performance. Once an issue arises, it triggers proactive retraining or updates, ensuring models remain accurate and reliable.

- Enhanced Governance and Compliance: ModelOps provides a robust framework for managing model risk and adhering to regulatory requirements such as GDPR and HIPAA, as well as financial-sector guidance and frameworks such as SR 11-7 and Basel III, through audit trails, version control, performance logging, and bias detection mechanisms.

- Cost Efficiency: ModelOps optimizes infrastructure utilization, automates workflows, and enhances overall efficiency, thereby reducing the total cost of ownership of AI/ML systems.

- Scalability: It automates repetitive tasks, such as model testing, deployment, and retraining, which reduces manual work and enables organizations to manage and scale a large number of diverse models across business units, geographies, and environments without compromising control.

- Cross-Team Collaboration: ModelOps bridges the gap between data scientists, IT operations (DevOps), and business stakeholders by providing a common framework and shared visibility into the model lifecycle and aligning capabilities with business goals for more effective teamwork.

ModelOps Tools and Platforms

There are numerous ModelOps solutions available in the market, some of which are listed in the following table:

| Tools/Platforms | Description |
|---|---|
| ModelOp | Enterprise software focused on AI lifecycle automation and governance. It serves as a system of record for all AI assets (both internal and third-party) and enforces compliance across the entire enterprise. |
| IBM Watson OpenScale | This platform focuses on model governance, fairness, and explainability. It ensures models remain fair, explainable, and accurate over time by tracking issues such as bias and drift. |
| SAS Model Manager | Provides enterprise-level model management for analytical and ML models, with a structured environment for managing their entire lifecycle, from development and validation to deployment and monitoring. |
| DataRobot MLOps | An enterprise-grade platform that automates deployment and monitoring across cloud and on-prem environments. It automates the ML lifecycle with a strong focus on AutoML, governance, and compliance, making it accessible to users with less technical expertise. |
| Amazon SageMaker Model Monitor | Provides automated monitoring of data drift and model quality for ML models deployed in production in AWS environments. |
| Azure Machine Learning | This cloud-based platform from Microsoft offers end-to-end MLOps and ModelOps capabilities integrated with Microsoft’s ecosystem. It offers AutoML, MLOps templates for CI/CD, and robust governance features. |
| Kubeflow + MLflow | Popular open-source tools that can be integrated into a ModelOps framework for flexibility and control. |
| Databricks ML | Built on the Lakehouse architecture, it provides a unified platform for data engineering, analytics, and machine learning, with integrated MLflow for tracking and management. |
| Google Cloud Vertex AI | A unified platform on Google Cloud that brings together AutoML and custom model development, with features like a centralized feature store and built-in monitoring and explainability. |

These tools help automate workflows, provide dashboards, and enforce governance regulations, so that organizations can focus on innovation rather than maintenance.

Challenges in Implementing ModelOps

- Cultural Resistance: Moving from ad hoc data science practices to standardized operations requires a cultural shift and executive sponsorship, and communication between IT, data science, and business teams can be difficult.

- Tool Fragmentation: Organizations often use a mix of proprietary and open-source tools, which makes integration complex.

- Regulatory Complexity: Organizations in regulated industries must secure sensitive information and comply with regulations that are constantly evolving.

- Lack of Skilled Professionals: ModelOps requires expertise in data science, software engineering, ML, and IT operations, a skillset that is in short supply.

- Data and Model Drift: Even with continuous monitoring, the data or model may drift. Maintaining model accuracy over time requires robust feedback loops and retraining strategies.

Best Practices for Successful ModelOps Implementation

- Establish Clear Ownership: Determine who is responsible for each stage of the model lifecycle, from development to monitoring and decommissioning.

- Standardize Model Development: Ensure model development is consistent by encouraging the use of reusable templates, version control, and documentation standards.

- Automate Everything: Automate everything from deployment to validation to monitoring to minimize errors and accelerate delivery.

- Embed Governance Early: Incorporate compliance and governance from the start so that all rules and regulations are followed.

- Implement Continuous Monitoring: Use real-time dashboards to continuously track model performance metrics, data drift, and prediction accuracy.

- Encourage Collaboration: Use clear communication channels and shared tools so that data science, IT, and business stakeholders can work together smoothly.

- Adopt a Phased Rollout: Start small with a pilot project with one or two high-value models, refine the process, and then scale across teams and regions.

ModelOps in Action: Real-World Use Cases

Here are some real-world examples of ModelOps in action:

Banking and Financial Services

Banking and financial institutions use ModelOps to manage credit risk and fraud detection models across multiple regulatory jurisdictions. Automated governance ensures each model complies with local and global finance regulations and protects sensitive customer data.

Healthcare

Hospitals deploy AI models for diagnostic imaging and patient risk prediction. ModelOps frameworks help monitor model drift caused by demographic or technological shifts, which helps maintain prediction accuracy and patient safety.

Retail

E-commerce companies rely on recommendation engines and dynamic pricing models to drive sales. ModelOps enables continuous retraining using new data from customer behavior and seasonal trends, allowing for ongoing improvements.

Manufacturing

In predictive maintenance, ModelOps helps manage edge-based AI models that monitor machinery performance in real time and prevent failures or breakdowns.

The Future of ModelOps

- Integration with Generative AI: ModelOps will govern and deploy large language models (LLMs) and generative AI systems.

- AI Governance Automation: AI-driven automation, such as self-healing models and auto-tuning frameworks, can be expected within ModelOps.

- Edge and Hybrid Deployments: ModelOps frameworks will increasingly support decentralized environments with IoT and edge computing.

- Ethical and Responsible AI: ModelOps will be responsible for ensuring fairness, transparency, and accountability in AI systems.

- Unified “XOps” Ecosystem: ModelOps will converge with DataOps, MLOps, and DevOps, forming a unified ecosystem that manages the entire AI pipeline end-to-end.

ModelOps and testRigor

testRigor’s work aligns closely with the core principles of ModelOps, supporting AI lifecycle automation, governance, and continuous quality assurance for AI-driven systems.

In a way, testRigor serves as a ModelOps enabler, helping ensure that AI models, from generative to predictive, are validated, governed, and monitored effectively within a continuous, automated, and human-accessible testing ecosystem. It does so by:

- Automating model validation and regression testing with plain-English commands that can deal with the unpredictable nature of AI.

- Integrating AI governance and compliance checks as test cases.

- Enabling continuous feedback and retraining readiness.

- Enhancing cross-team collaboration through no-code, natural language (English) test creation.

Conclusion

ModelOps is the cornerstone of enterprise-scale AI success, ensuring that models transition seamlessly from experimentation to production while maintaining compliance, performance, and trustworthiness. By establishing a solid ModelOps foundation that integrates automation, governance, monitoring, and collaboration, businesses can harness AI as a reliable engine for growth.

As AI becomes mission-critical, organizations that fail to adopt ModelOps risk falling behind in both innovation and governance. In essence, it is not just about building models anymore; it’s about operationalizing intelligence at scale.
