Prompt Engineering in QA and Software Testing

In the age of artificial intelligence (AI) and automation, software testing has evolved considerably. One emerging practice is “prompt engineering,” which is especially relevant when testing models such as OpenAI’s. But what exactly is prompt engineering, and how does it fit within the software testing landscape? Let’s look into it, and then we’ll show you how testRigor uses its own AI model for faster, more efficient test creation.

What is Prompt Engineering?

At its core, prompt engineering involves designing, analyzing, and refining the inputs (or “prompts”) used to elicit responses from AI models, ensuring that the outputs are as desired. Just as a skilled interviewer can frame questions in various ways to get the most accurate answers from a human interviewee, prompt engineers frame their inputs to AI systems in a way that maximizes the accuracy, relevance, and clarity of the system’s outputs.

For many people, the phrase “using prompt engineering” is synonymous with “using ChatGPT”. However, this isn’t necessarily the case, as prompt engineering can be applied to a broad spectrum of models. Additionally, many companies have security concerns about using ChatGPT, which is one of the reasons why testRigor’s AI engine does not use it.

Why is Prompt Engineering Crucial in Software Testing?

- Improves Model Understanding: Different prompts shed light on the AI model’s functioning, assisting in troubleshooting and behavior refinement.

- Enhances Model Utility: Consistent and appropriate responses to a broad spectrum of user queries make models like chatbots or virtual assistants more valuable, and prompt engineering is key to achieving that consistency.

- Safety and Reliability: For AI models used in sensitive applications, it’s imperative to identify and rectify potentially problematic outputs, and testing with diverse prompts is how those outputs get surfaced.

- Real-world Consequences: Inadequately tested AI models, especially in sectors like healthcare or autonomous vehicles, can have grave implications. This emphasizes prompt engineering’s necessity.

- Contingency Measures: It’s beneficial for AI systems to have built-in mechanisms, like deferring to a human or providing generic answers, when faced with unfamiliar prompts.
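The contingency mechanism in the last point can be sketched as a thin wrapper around a model call. This is a minimal illustration under stated assumptions, not any specific product’s design: the model is a hypothetical callable that returns an answer plus a confidence score, and the 0.7 threshold is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interface: prompt in, (answer, confidence between 0 and 1) out.
Model = Callable[[str], Tuple[str, float]]

GENERIC_ANSWER = "I'm not sure I can help with that. Let me connect you with a person."


@dataclass
class Reply:
    text: str
    deferred_to_human: bool


def answer_with_fallback(model: Model, prompt: str, threshold: float = 0.7) -> Reply:
    """Return the model's answer, or a generic hand-off reply when confidence is low."""
    answer, confidence = model(prompt)
    if confidence < threshold:
        # Treat low confidence as an unfamiliar prompt: defer instead of guessing.
        return Reply(GENERIC_ANSWER, deferred_to_human=True)
    return Reply(answer, deferred_to_human=False)


def stub_model(prompt: str) -> Tuple[str, float]:
    # Stand-in for a real model: confident only about order-status questions.
    if "order status" in prompt.lower():
        return "Your order shipped yesterday.", 0.92
    return "Probably 42.", 0.31


known = answer_with_fallback(stub_model, "What is my order status?")
unfamiliar = answer_with_fallback(stub_model, "Can I pay in seashells?")
print(known)
print(unfamiliar)
```

In a test suite, the interesting assertions are of the second kind: prompts outside the model’s competence should trigger the hand-off rather than a confident wrong answer.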

Key Aspects of Prompt Engineering in Software Testing

- Diverse Inputs:

  - Examples: Testing a chatbot, for instance, requires prompts spanning different languages, colloquialisms, and cultural contexts.

  - Impact on Model Fairness: Ensuring models don’t discriminate against specific groups mandates testing with diverse demographic inputs.

- Iterative Refinement:

  - Feedback Loop Creation: Continuous improvement is realized when insights from one test cycle inspire the next set of prompts.

  - Integration with Other Testing Methods: Prompt engineering works best when integrated with other methods, like adversarial testing.

- Collaboration with Model Training:

  - Fine-tuning with Custom Prompts: Insights can guide further model refinement. If a style of prompt is consistently misinterpreted, that likely indicates a gap in the model’s training.

  - Active Learning: Challenging examples unearthed by prompt engineering can be incorporated into model retraining.
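The diverse-inputs, feedback-loop, and training-gap ideas above fit together in a small harness. This is a sketch under assumptions: `toy_model` stands in for the model under test, `check` is a simple pass/fail oracle, and `mutate` is a placeholder for whatever produces new variants (a person, a paraphrasing tool, or another model). Prompts are grouped by style so that repeated failures can be traced to a style rather than to a single prompt.

```python
from collections import Counter
from typing import Callable, Dict, List


def refine_rounds(
    seed_prompts: Dict[str, List[str]],   # prompt style -> prompts written in that style
    run_model: Callable[[str], str],      # hypothetical model under test
    check: Callable[[str, str], bool],    # (prompt, response) -> did the response pass?
    mutate: Callable[[str], List[str]],   # derives new variants from a failing prompt
    rounds: int = 3,
) -> Counter:
    """Run several test cycles; failures from one cycle seed the next set of prompts."""
    failures_by_style: Counter = Counter()
    current = seed_prompts
    for _ in range(rounds):
        next_round: Dict[str, List[str]] = {}
        for style, prompts in current.items():
            for prompt in prompts:
                if not check(prompt, run_model(prompt)):
                    failures_by_style[style] += 1
                    next_round.setdefault(style, []).extend(mutate(prompt))
        if not next_round:
            break  # everything passed, so there is nothing new to explore
        current = next_round
    return failures_by_style


def toy_model(prompt: str) -> str:
    # Stub: only recognizes help requests that start with a formal English greeting.
    return "offer_help" if prompt.lower().startswith("hello") else "unknown"


seeds = {
    "formal_en": ["Hello, I need help with my order"],
    "colloquial_en": ["yo, can u help w/ my order"],
    "spanish": ["Hola, necesito ayuda con mi pedido"],
}
failures = refine_rounds(
    seeds,
    toy_model,
    check=lambda prompt, response: response == "offer_help",
    mutate=lambda prompt: [prompt + "?"],  # placeholder for real paraphrasing
)
print(failures.most_common())
```

Styles that keep failing round after round, here the colloquial and Spanish prompts, are the consistent misinterpretations that point to a training gap, and their failing prompts are natural candidates for active-learning retraining data.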

Comparison with Traditional Testing

Traditional QA methodologies often focus on fixed scenarios with predictable outputs. In contrast, prompt engineering, tailored for AI, accepts and even expects variability. While the former might rely heavily on predefined test cases, the latter leans into adaptability and exploration, navigating the vast landscape of potential AI responses to ensure consistency and reliability.
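The difference is easiest to see in the assertion itself. In the sketch below, a hypothetical chatbot answers a question about a refund policy; the traditional check accepts exactly one string, while the AI-oriented check accepts any response that has the required properties. The specific properties (mentions a 30-day window, stays under 40 words, never says “guaranteed”) are illustrative assumptions.

```python
import re


def traditional_check(actual: str) -> bool:
    # Fixed scenario with a predictable output: exactly one string is correct.
    return actual == "Refunds are accepted within 30 days."


def property_check(actual: str) -> bool:
    # Variable output: accept any phrasing that has the required properties.
    mentions_window = re.search(r"\b30\s*(days|-day)\b", actual, re.IGNORECASE) is not None
    concise = len(actual.split()) <= 40
    no_overpromise = "guaranteed" not in actual.lower()
    return mentions_window and concise and no_overpromise


responses = [
    "Refunds are accepted within 30 days.",
    "You can return items for a refund up to 30 days after purchase.",
    "We offer a 30-day refund window.",
    "Refunds are guaranteed, no questions asked!",
]
for response in responses:
    print(f"exact={traditional_check(response)!s:5} property={property_check(response)!s:5} {response}")
```

The exact-match check rejects two perfectly acceptable paraphrases, while the property check tolerates the variability and still rejects the over-promising answer.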

Training and Skillset for QA Testers

With AI becoming central to software solutions, the required skill set for QA engineers is also evolving. In the age of prompt engineering, understanding AI behavior, linguistic intricacies, and domain knowledge is as crucial as understanding code structure. Training programs are emerging to equip QA professionals with these competencies, ensuring they are primed to navigate the challenges AI presents.

Challenges and Considerations

- Bias Mitigation: Testing prompts must be unbiased, ensuring the model’s fairness and wide applicability.

- Complexity of AI Responses: AI models, unlike traditional software, produce a broad range of responses, complicating the testing process.

- Subjectivity in Evaluating Responses: The “correctness” of AI responses can be open to interpretation, posing unique challenges.

- Scalability:

  - Automated Prompt Generation: Given the vast space of possible prompts, automated tools may be needed to generate large numbers of test prompts; AI itself can even be employed to craft challenging prompts for other AI systems.
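One simple form of automated prompt generation is combinatorial: cross a few templates with lists of slot values to get many test prompts from a small specification. The templates and slot values below are illustrative assumptions; an AI-based generator would replace or augment the fixed lists.

```python
from itertools import product
from typing import Dict, Iterator, List


def generate_prompts(templates: List[str], slots: Dict[str, List[str]]) -> Iterator[str]:
    """Yield every template filled with every combination of slot values."""
    names = list(slots)
    for template in templates:
        for values in product(*(slots[name] for name in names)):
            yield template.format(**dict(zip(names, values)))


templates = [
    "{greeting} can you track my {item}?",
    "{greeting} where is my {item}??",
]
slots = {
    "greeting": ["Hi,", "yo", "Hola,"],
    "item": ["order", "parcel", "refund"],
}
prompts = list(generate_prompts(templates, slots))
print(len(prompts))  # 2 templates x 3 greetings x 3 items = 18
print(prompts[:3])
```

The count grows multiplicatively with every slot and value added, which is exactly why scale becomes a challenge and why sampling or AI-driven generation may be needed beyond small suites.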

Prompt Engineering in Software Testing Example

Now let’s talk about how you can use prompt engineering to build your automated tests in testRigor. Before we dive into the details, here is an example of how to use prompt engineering for your test cases:

How Does Prompt Engineering in Software Testing Work?

As a prompt engineer, you copy and paste your test case into testRigor, which then breaks it down line by line. Each line is treated as a prompt and executed by the AI step by step. At each step, the system examines your screen and determines what action should be taken based on the content displayed.

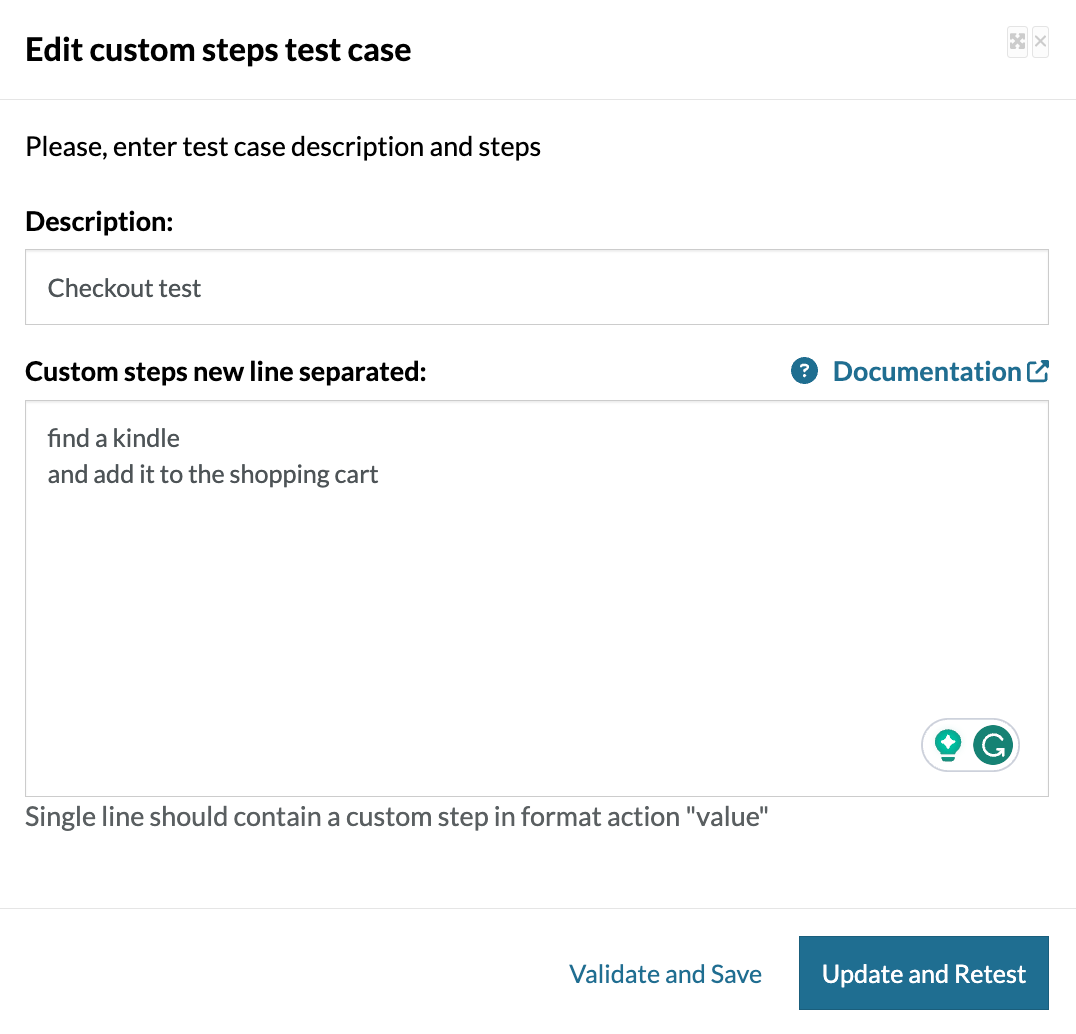
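The line-by-line loop described above can be sketched as follows. This is not testRigor’s implementation or API: `capture_screen`, `decide_action`, and `perform` are hypothetical stand-ins for reading the screen, the AI’s decision, and the browser action, stubbed with toy rules so the sketch runs on its own. The point is the structure: one prompt per line, and a fresh look at the screen before each decision.

```python
from typing import Callable, List


def split_into_prompts(test_case: str) -> List[str]:
    # Each non-empty line of the pasted test case becomes one prompt.
    return [line.strip() for line in test_case.splitlines() if line.strip()]


def run_test_case(
    test_case: str,
    capture_screen: Callable[[], str],         # hypothetical: current screen content
    decide_action: Callable[[str, str], str],  # hypothetical: (prompt, screen) -> action
    perform: Callable[[str], None],            # hypothetical: executes the chosen action
) -> List[str]:
    """Execute prompts one at a time, re-reading the screen before each decision."""
    log = []
    for prompt in split_into_prompts(test_case):
        screen = capture_screen()
        action = decide_action(prompt, screen)
        perform(action)
        log.append(f"{prompt!r} -> {action}")
    return log


# Toy environment so the sketch is self-contained.
state = {"screen": "home page with search box"}


def capture_screen() -> str:
    return state["screen"]


def decide_action(prompt: str, screen: str) -> str:
    # Rule-based stand-in for the AI decision step.
    if "search" in prompt and "search box" in screen:
        return "type 'kindle' into search box"
    if "click" in prompt:
        return "click first result"
    return "no-op"


def perform(action: str) -> None:
    # Typing a query moves the toy browser to a results page.
    if action.startswith("type"):
        state["screen"] = "search results for kindle"


test_case = """
search for kindle
click on the first result
"""
log = run_test_case(test_case, capture_screen, decide_action, perform)
for entry in log:
    print(entry)
```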

High-level approach

find a kindle and add it to the shopping cart

This is how it will look in the UI:

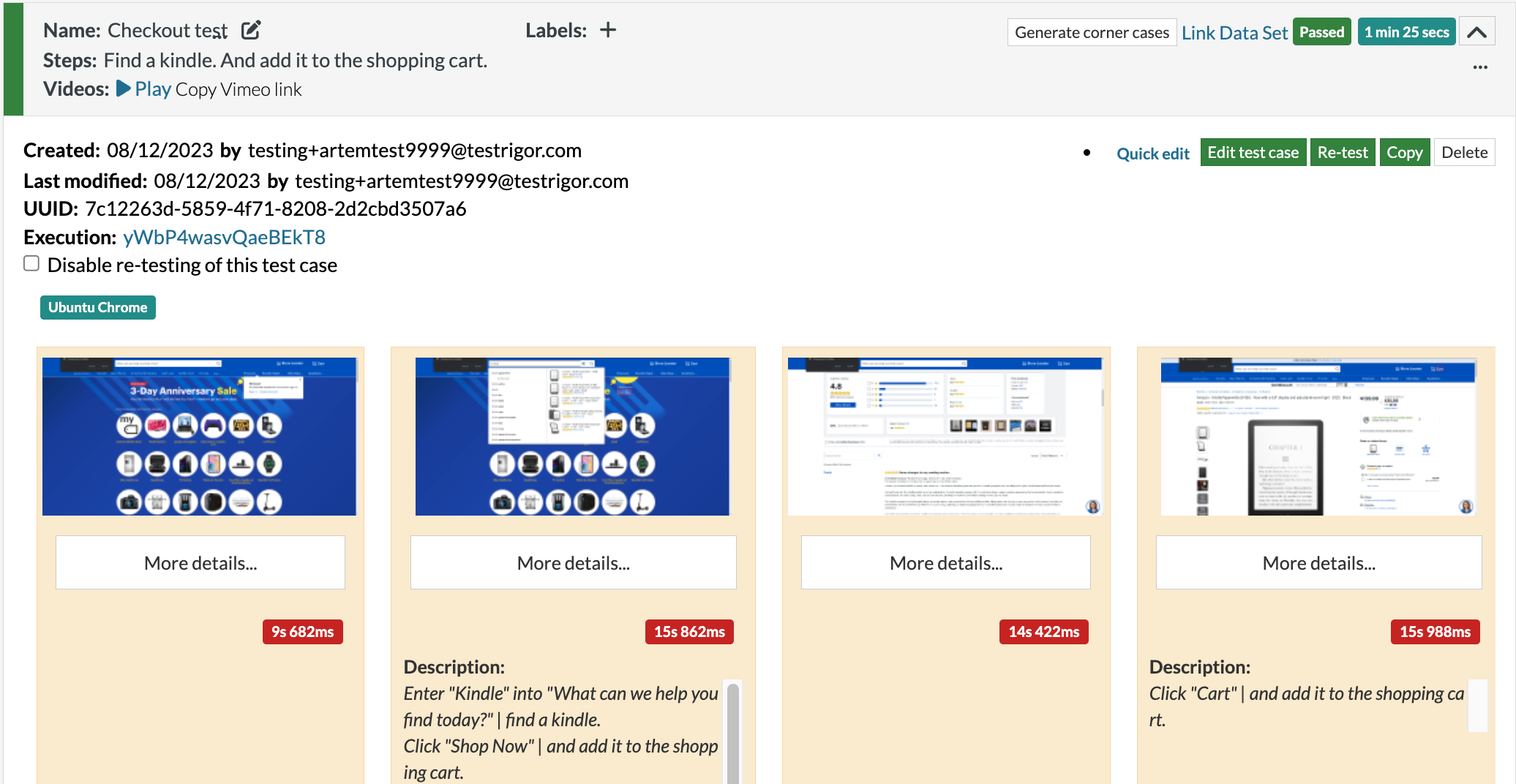

After you press Confirm, sit back and relax. The testRigor engine will create the test case based on the criteria you’ve specified. However, upon execution, you might discover that it doesn’t perform as you intended:

As illustrated in the example, since no Kindle was selected, the system wandered around trying to satisfy the second part of the prompt: add it to the shopping cart.

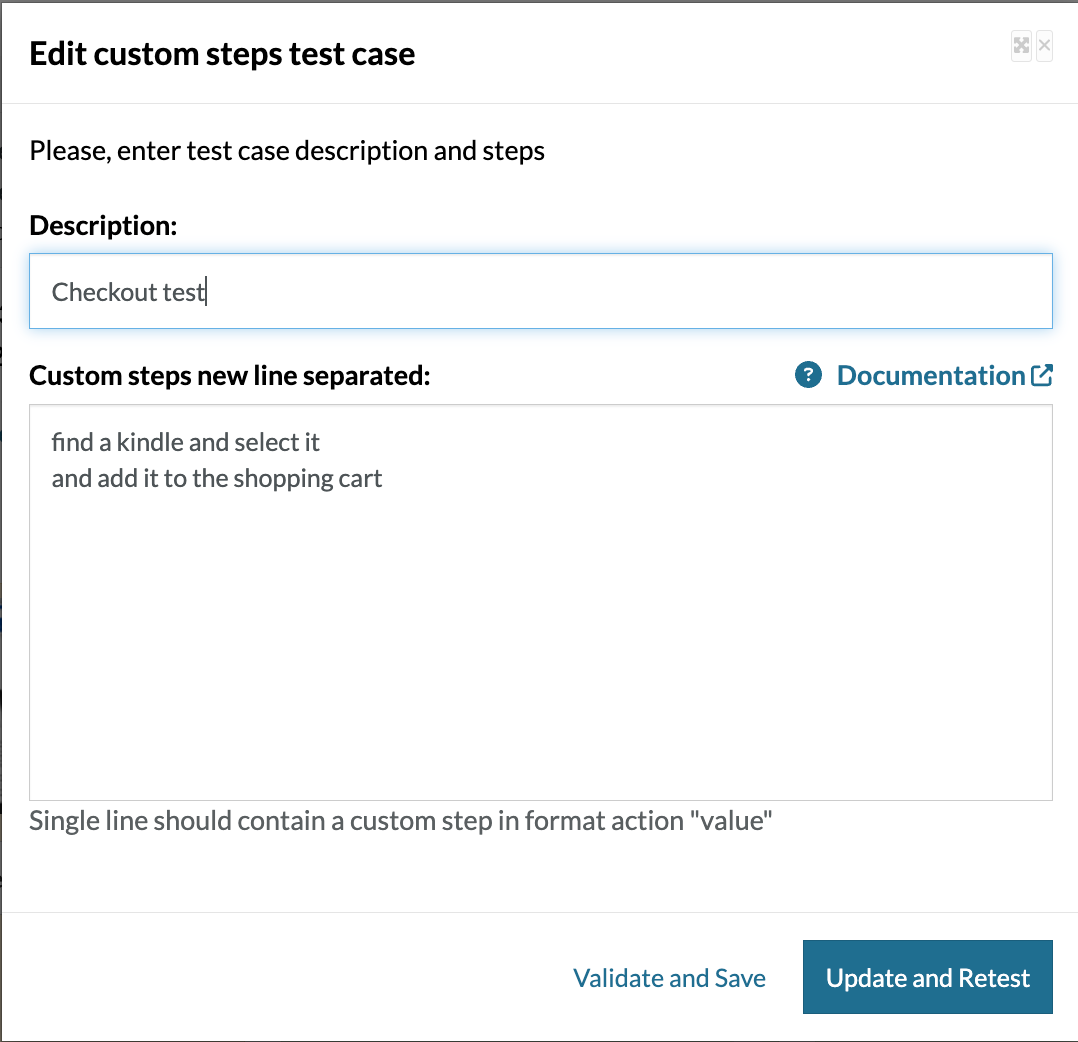

To fix this, make the selection step explicit:

find a kindle and select it and add it to the shopping cart

This is how it will look in the UI:

In essence, your primary responsibility in this scenario is to make sure the prompt is clear and unambiguous. It may need extra clarification or context to guide the system effectively and make it behave as intended.
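The lesson from the Kindle example can be turned into a crude pre-flight check for prompts. This is a hypothetical heuristic, not something testRigor is known to do: it splits an instruction on “and” and flags any clause that refers to “it” before an earlier clause has explicitly selected an item. The set of selecting verbs is an assumption chosen for this example.

```python
import re
from typing import List

# Illustrative assumption: clauses starting with these verbs make an item "current".
SELECTING_VERBS = {"select", "open", "click"}


def split_clauses(prompt: str) -> List[str]:
    # Split a compound instruction on the standalone word "and".
    return [c.strip() for c in re.split(r"\band\b", prompt) if c.strip()]


def dangling_it_clauses(prompt: str) -> List[str]:
    """Return clauses that act on "it" before any clause has explicitly selected an item."""
    selected = False
    flagged = []
    for clause in split_clauses(prompt):
        words = clause.lower().split()
        if words[0] in SELECTING_VERBS:
            selected = True
        elif "it" in words and not selected:
            flagged.append(clause)
    return flagged


print(dangling_it_clauses("find a kindle and add it to the shopping cart"))
print(dangling_it_clauses("find a kindle and select it and add it to the shopping cart"))
```

A heuristic this simple will miss many kinds of ambiguity, but it shows how a recurring prompt mistake can be caught before execution rather than by watching the system wander.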

Other Prompt Engineering techniques

Prompt engineering is a multi-faceted field comprising numerous techniques. Let’s consider the ones that are helpful in a QA environment.

Least-to-most prompting technique for QA prompts

Rooted in the principle of gradation, the ‘least-to-most’ technique guides AI systems incrementally. When an AI doesn’t behave as anticipated, one effective countermeasure drawn from this technique is to break the primary instruction into more granular, explicit steps, making it easier for the AI to understand and execute.

For example, instead of:

add a kindle to the cart

use:

find a kindle and select it and add it to the shopping cart

By using such granular instructions, we can bridge the gap between the AI’s interpretation and the desired outcome, making system interactions smoother and more predictable.
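In its general form, least-to-most prompting has two stages: first decompose a task into simpler subproblems, then solve them in order, with each subproblem’s prompt including the answers to the ones before it. The sketch below shows that structure; `decompose` and `ask_model` are hypothetical stubs standing in for a person or a real model, and the prompt layout is an assumption.

```python
from typing import Callable, List, Tuple


def least_to_most(
    task: str,
    decompose: Callable[[str], List[str]],  # stage 1: task -> ordered subproblems
    ask_model: Callable[[str], str],        # hypothetical model call
) -> List[Tuple[str, str]]:
    """Stage 2: solve subproblems in order, each prompt carrying the earlier answers."""
    solved: List[Tuple[str, str]] = []
    for sub in decompose(task):
        parts = [f"Task: {task}"]
        parts += [f"Q: {q}\nA: {a}" for q, a in solved]
        parts.append(f"Q: {sub}\nA:")
        solved.append((sub, ask_model("\n".join(parts))))
    return solved


def decompose(task: str) -> List[str]:
    # Stage 1 stub: in practice a person or a separate model prompt produces this list.
    return ["find a kindle", "select it", "add it to the shopping cart"]


def ask_model(prompt: str) -> str:
    # Stub model: "it" can only be resolved if an earlier answer is in the prompt.
    question = prompt.rsplit("Q: ", 1)[1]
    if question.startswith("find a kindle"):
        return "found Kindle result #1"
    if question.startswith("select it"):
        return "opened Kindle result #1" if "found Kindle result #1" in prompt else "nothing to select"
    if question.startswith("add it"):
        return "added Kindle result #1 to cart" if "opened Kindle result #1" in prompt else "unclear what 'it' is"
    return "unknown step"


steps = least_to_most("add a kindle to the cart", decompose, ask_model)
for question, answer in steps:
    print(f"{question} -> {answer}")
```

Because the final prompt carries the answer to “select it”, the reference in “add it to the shopping cart” resolves to a concrete item, which is the same ambiguity the more granular Kindle instruction removes.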
