Agentic AI vs. AI Agents vs. Autonomous AI: Key Differences

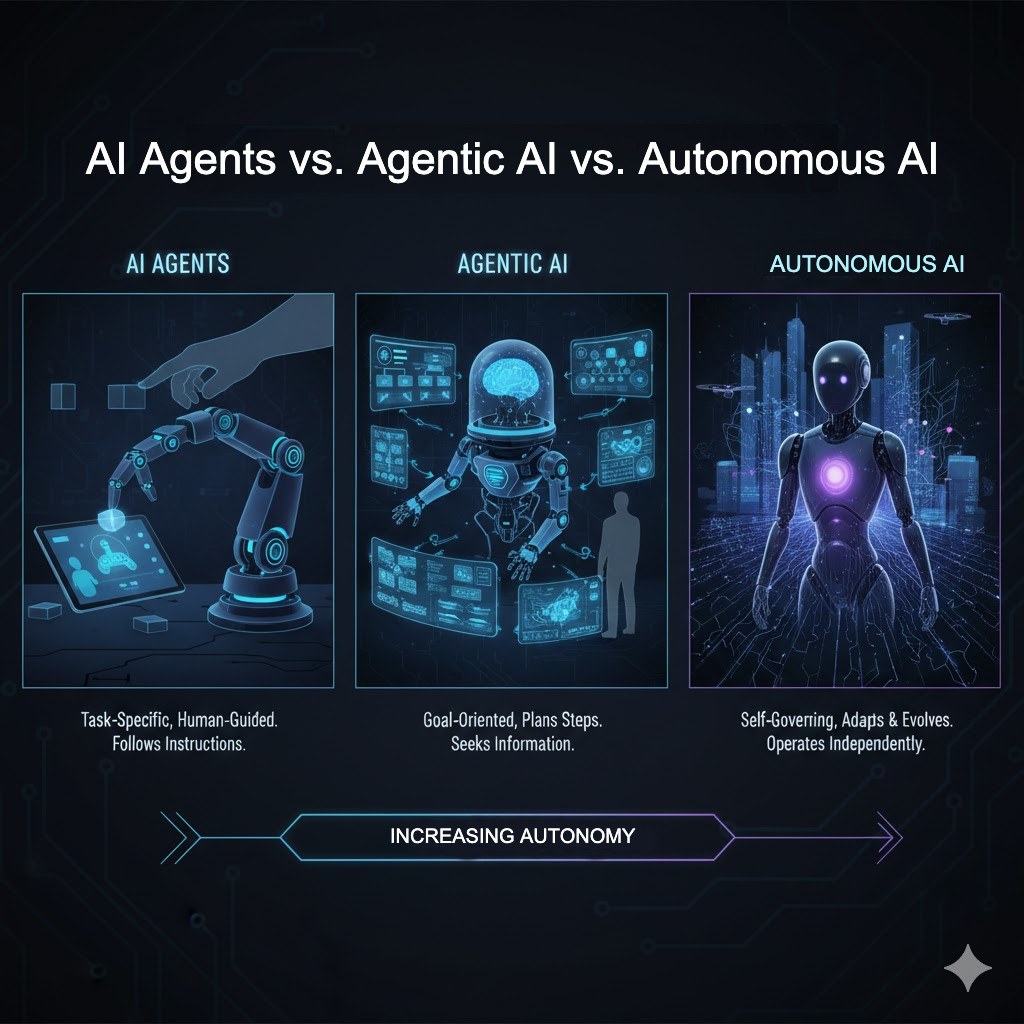

As AI evolves from static, task-specific systems into adaptive ones capable of learning and making decisions, new vocabulary is needed to describe its capabilities. Terms like AI agents, agentic AI, and autonomous AI are often used interchangeably, but are they really the same thing?

These notions are related but correspond to different generations of intelligent systems. Recognizing the differences between them matters for designers, developers, policy-makers, and anyone else interested in where AI technology is going.

Understanding the Terms: AI Agent, Agentic AI, and Autonomous AI

Before diving deeper, it’s essential to establish clear working definitions.

AI Agent

An AI agent is a system that perceives its environment and acts to meet its goals. It interacts with the environment through inputs and outputs. Agents range from static rule-based bots to learning-based models that optimize their actions over time. Their autonomy is usually limited, and they perform a narrow set of actions based on predefined instructions. Read: AI Agents in Software Testing.

Agentic AI

Agentic AI refers to systems, often whole ecosystems of agents, that do more than just act: they reason, adapt, and plan across multiple steps. Such systems are characterized by agency, that is, they can make decisions in a context-sensitive manner, pursue sub-goals, and change strategies dynamically. Agentic AI isn’t autonomous in the absolute sense, but it demonstrates self-directed behavior, planning, and learning, placing it midway between simple agents and truly autonomous intelligence.

Autonomous AI

Fully autonomous AI represents the greatest degree of independence. These systems can handle complex, open-ended tasks without constant human supervision. They reason and make decisions on the fly when confronted with unfamiliar situations, largely unsupervised. Autonomous AI aims to approximate human-like decision-making and flexibility, potentially working around the clock in changing environments.

Historical and Conceptual Foundations

The Origins of Agents

The idea of an “agent” has been central to artificial intelligence for a long time. In the early days of AI research, an agent was defined as anything that could perceive an environment and perform actions to achieve goals. Traditional AI systems like chess-playing programs or expert systems can be thought of as agents: they collect input, reason, and act upon that reasoning. These early agents were deterministic, goal-directed, and reactive.

From Static Rules to Adaptive Behavior

As computing capacity grew, AI agents moved from hard-coded rules to adaptive systems. Machine learning let agents learn from data and improve their performance without explicit reprogramming. Reinforcement learning, in turn, allowed systems to learn behaviors from experience. That was the beginning of dynamic agents: agents that could learn and, with that, begin to exhibit agency.

The Road Toward Autonomy

In areas such as robotics and automation, autonomy is the holy grail. Applications such as self-driving cars, drones, and factory robots aim to minimize human involvement. These pursuits led to the modern vision of AI as autonomous systems that can govern their own behavior and solve open-ended problems.

AI Agents: The Foundational Layer

AI agents are the building blocks of intelligent systems. They are designed to perceive, decide, and act in ways that achieve predefined goals. An AI agent typically includes:

- Perception: The system takes inputs from the environment (sensors, APIs, text, images, logs) and extracts meaningful signals/features. Effective perception filters out noise and maintains context for decision making.

- Decision Logic: The system decides the most suitable next move based on current state and objectives through rules, learned policies, search/planning, or optimization. It can also predict uncertainty and trade-offs.

- Action: The system performs the selected action (issuing a command, updating data, moving a robot, responding to a user) and then monitors the effects. Those results feed right back into perception for the next cycle.

These components form a feedback cycle: sense → decide → act → repeat. The loop lets the agent interact with its environment continuously.
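
The sense → decide → act cycle can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `Thermostat` environment, the `perceive`, `decide`, and `run_agent` functions, and the numbers used are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Toy environment (hypothetical): a room the agent can cool."""
    temperature: float

    def read_sensor(self) -> float:
        return self.temperature

    def apply(self, action: str) -> None:
        # Cooling lowers the temperature; idling lets the room warm slightly.
        self.temperature += -2.0 if action == "cool" else 0.5


def perceive(env: Thermostat) -> float:
    """Perception: turn raw input into a usable signal."""
    return env.read_sensor()


def decide(temperature: float, target: float) -> str:
    """Decision logic: pick the next action from the current state and goal."""
    return "cool" if temperature > target else "idle"


def run_agent(env: Thermostat, target: float, steps: int) -> list[str]:
    """Run the sense -> decide -> act loop for a fixed number of cycles."""
    history = []
    for _ in range(steps):
        observation = perceive(env)           # sense
        action = decide(observation, target)  # decide
        env.apply(action)                     # act; the effect is sensed next cycle
        history.append(action)
    return history


room = Thermostat(temperature=33.0)
actions = run_agent(room, target=30.0, steps=4)
print(actions, room.temperature)  # ['cool', 'cool', 'idle', 'idle'] 30.0
```

The agent never sees the effect of its action directly; it only observes it through the next sensor reading, which is what closes the loop.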

Types of AI Agents

AI agents exist along a spectrum of complexity:

- Simple Rule-based Agents: These agents follow a particular set of logic and conditions, and in general, they act only when certain rules are satisfied. For instance, “if the temperature > 30°C, enable the cooling system”.

- Model-based Agents: Construct and update models of the environment and use these models to make good decisions.

- Learning Agents: Continually get better by applying reinforcement or supervised learning to adjust behavior according to feedback and experience.

- Goal-based Agents: Decide by searching for and executing actions that take them closer to predefined goals or desired final outcomes.

- Utility-based Agents: Assess the possible outcomes and choose the one that yields the highest overall satisfaction (utility), trading off between competing goals.
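
To make the two ends of this spectrum concrete, the sketch below contrasts a simple rule-based agent (using the temperature rule from the first bullet) with a utility-based one. The utility formula and the `energy_price` parameter are assumptions made up for illustration.

```python
def rule_based_agent(temperature_c: float) -> str:
    """Simple rule-based agent: acts only when its condition is satisfied."""
    return "enable_cooling" if temperature_c > 30 else "do_nothing"


def utility_based_agent(temperature_c: float, energy_price: float) -> str:
    """Utility-based agent: scores each option and picks the best trade-off."""
    # Hypothetical utility: comfort gained by cooling minus the energy cost.
    comfort_gain = max(0.0, temperature_c - 30)
    utilities = {
        "enable_cooling": comfort_gain - energy_price,
        "do_nothing": 0.0,
    }
    return max(utilities, key=utilities.get)


print(rule_based_agent(32))          # enable_cooling
print(utility_based_agent(32, 1.0))  # enable_cooling
print(utility_based_agent(32, 5.0))  # do_nothing
```

At 32°C the rule-based agent always cools, while the utility-based agent declines when energy is expensive enough to outweigh the comfort gain.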

Practical Examples

- Chatbots: Engage with users in everyday language, answering questions and handling routine tasks within specific domains or contexts.

- Recommendation Engines: Study user behavior and preferences to recommend customized products or content, such as movies and articles.

- Trading Bots: Watch market data and execute buy or sell orders based on configured settings or strategies.

- Game Agents: Serve as simulated players and opponents, moving pieces and making choices that appear to be intelligent play.

While capable, these agents are generally reactive and constrained within the environments they were designed for.

Strengths and Limitations

| Advantages | Limitations |

|---|---|

|

|

AI agents are excellent doers, but not thinkers. Their inability to adapt in real time to changing circumstances is what gave rise to agentic AI.

Agentic AI: The Adaptive Middle Ground

Agentic AI is the next phase of that evolution: systems capable of not just carrying out tasks but also planning, adapting, and collaborating to accomplish goals. They have characteristics such as self-direction and attention to context, which make their behavior resemble that of human agents.

What Makes AI “Agentic”?

An AI becomes agentic when it can:

- Decompose Complex Goals: An agentic AI can divide up a big goal into smaller tasks that it can complete more easily, handling problems in an efficient step-by-step way.

- Adapt its Plan: It changes its tactics when new information or unforeseen factors require it.

- Learn from Experience and Feedback: The system makes better choices over time by observing the outcomes of its previous actions.

- Coordinate with the Actions of Others: It works well with other AI systems and people, exchanging information and coordinating efforts toward common ends.

- Predict and Trade-off: The AI models and evaluates the effects of its own actions, weighing risk against reward and balancing priorities to improve overall performance.

Example: Agentic Workflow Automation

Imagine an AI system managing a company’s marketing campaign. The system:

- Defines goals (increase engagement).

- Decomposes them into subtasks (running ads, analyzing results, and optimizing the budget).

- Manages specialized sub-agents (content creation, analytics, ad placement).

- Monitors metrics, tunes campaigns, and refocuses strategies automatically.

Here, the AI isn’t merely reacting; it’s reasoning and methodically working its way through the process, adjusting as it goes.
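
A rough sketch of this workflow follows, under several stated assumptions: the sub-agents are stand-in functions, the plan is hard-coded rather than generated, engagement readings are supplied as a list instead of being measured, and "adapting" simply means raising the budget by a fixed step. None of these names come from a real marketing platform.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical sub-agents; each receives the current budget and reports what it did.
SUB_AGENTS: Dict[str, Callable[[int], str]] = {
    "content_creation": lambda budget: f"content created (budget {budget})",
    "ad_placement": lambda budget: f"ads placed (budget {budget})",
    "analytics": lambda budget: f"results analyzed (budget {budget})",
}


def decompose(goal: str) -> List[str]:
    """Goal decomposition. Hard-coded here; a real system would plan this."""
    plans = {"increase engagement": ["content_creation", "ad_placement", "analytics"]}
    return plans.get(goal, [])


def run_campaign(goal: str, budget: int, engagement_by_round: List[float],
                 target: float) -> Tuple[int, List[str]]:
    """Delegate subtasks each round, monitor the metric, and adapt the budget."""
    log: List[str] = []
    for round_number, engagement in enumerate(engagement_by_round, start=1):
        for task in decompose(goal):
            log.append(SUB_AGENTS[task](budget))  # delegate to a sub-agent
        if engagement >= target:
            log.append(f"round {round_number}: target met")
            break
        budget += 20  # adapt the strategy: engagement is below target
        log.append(f"round {round_number}: below target, budget raised to {budget}")
    return budget, log


final_budget, campaign_log = run_campaign(
    "increase engagement", budget=100, engagement_by_round=[0.02, 0.03, 0.06], target=0.05
)
print(final_budget)      # 140
print(campaign_log[-1])  # round 3: target met
```

The point of the sketch is the shape of the loop (decompose, delegate, monitor, adjust), not the specific adjustment rule.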

Strengths and Limitations

| Advantages | Limitations |

|---|---|

|

|

Despite these hurdles, agentic AI is already transforming sectors like business automation, digital assistants, and robotics. It’s the stepping stone between narrow intelligence and complete autonomy. Read: Different Evals for Agentic AI: Methods, Metrics & Best Practices.

Autonomous AI: The Peak of Independence

Autonomous AI is the most advanced form of artificial intelligence: systems that can operate independently in complex and unpredictable environments. An AI is called autonomous if it can establish and pursue its own goals, work with little or no human assistance, act safely in the presence of unexpected conditions, and plan and react over long time periods.

These systems combine perception, reasoning, and action in a continuous feedback loop that allows them to be flexible in response to the particular variations of their environment while remaining responsible and accountable.

Key Features

- Self-Initiation: The system proactively initiates tasks or creates new goals without requiring specific human requests.

- Adaptation to Novelty: It responds intelligently to a new stimulus or task by generalizing from past experience and changing its strategy.

- Long-term Planning: The AI anticipates how its current activity will shape future outcomes, producing and carrying out plans that span long time horizons and account for changing conditions.

- Minimal Oversight: It operates without routine human input while maintaining a high level of consistency and accountability.

- Integrated Reasoning: The system combines perception, prediction, and decision-making for context-aware interpretation and intelligent response.

Levels of Autonomy

Just as autonomous cars are rated on a scale from Level 0 (manual) to Level 5 (fully autonomous), AI systems can be classified according to where they fall along the spectrum of autonomy:

- Level 1 – Human-assisted: AI helps humans automate certain tasks but cannot make decisions by itself; it requires direct human control and input.

- Level 2 – Human-supervised Automation: The system is capable of some independent operation; however, humans are always on hand to monitor it and can override it if needed.

- Level 3 – Conditional Automation: The AI can act alone for long stretches but might ask a person to intervene or take over in tricky or uncertain situations.

- Level 4 – High Automation in Limited Scenarios: The system can operate all functions unaided, but only under specific circumstances or in limited environments; within those bounds, it may not need a human on standby at all.

- Level 5 – Full Autonomy Across Any Domain or Task: The AI is completely self-sufficient and can adapt to any situation without human intervention.

Most real-world AI systems currently operate at Levels 2-3.
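
One way to make these levels operational is to encode them and derive an oversight rule from them. The sketch below is one possible reading of the list above, not a standard: the `AutonomyLevel` names and the `human_required` function are hypothetical.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    HUMAN_ASSISTED = 1
    HUMAN_SUPERVISED = 2
    CONDITIONAL = 3
    HIGH_LIMITED_DOMAIN = 4
    FULL = 5


def human_required(level: AutonomyLevel, uncertain: bool,
                   in_supported_domain: bool = True) -> bool:
    """Return True when a human must be involved in the current step."""
    if level <= AutonomyLevel.HUMAN_SUPERVISED:
        return True                     # Levels 1-2: a human controls or monitors
    if level == AutonomyLevel.CONDITIONAL:
        return uncertain                # Level 3: hand over when the situation is unclear
    if level == AutonomyLevel.HIGH_LIMITED_DOMAIN:
        return not in_supported_domain  # Level 4: unaided only inside its limited domain
    return False                        # Level 5: no human intervention


print(human_required(AutonomyLevel.CONDITIONAL, uncertain=True))   # True
print(human_required(AutonomyLevel.CONDITIONAL, uncertain=False))  # False
```

A check like this could sit in front of any action the system proposes, so that the configured level, rather than ad hoc logic, determines when a person is pulled in.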

Example: Autonomous Research AI

Take a scientific AI tasked with discovering new chemical compounds. It formulates hypotheses, designs experiments to test them, reviews the results, and refines its knowledge in response. It operates without micromanagement by humans, running autonomously to test ideas, learn from each experiment, and hone its strategies for directing future research. This is the hallmark of autonomous AI: a system that is persistent, goal-driven, and continually improving as it seeks ever greater understanding.

Strengths and Limitations

| Advantages | Limitations |

|---|---|

|

|

Hence, autonomous AI must include rigorous safety mechanisms, transparent reasoning, and well-defined ethical boundaries. Read: 5 Advantages of Autonomous Testing.

Spectrum and Hierarchical Perspectives

AI agents, agentic AI, and autonomous AI are not distinct categories as much as they form a continuum. Understanding this spectrum and the hierarchies among them clarifies how capabilities, autonomy, and responsibility evolve in systems.

The Autonomy Spectrum

The relationship between AI agents, agentic AI, and autonomous AI can be viewed as an ongoing sequence or evolution. Each type is more sophisticated than the previous in that each involves higher levels of capability, adaptability, and independence.

Each phase retains what the previous one offers and adds more: first adaptiveness, then autonomy and long-term reasoning.

- AI agents: These are task-driven, low-autonomy executors that work within a fixed scope based on predetermined rules or shallow learning.

- Agentic AI: Such systems can also adjust plans, coordinate with other agents and people, and manage flexible workflows together.

- Autonomous AI: Makes decisions over long horizons, executes plans, and pursues goals with limited or no human involvement.

No sharp line divides these stages; systems can possess mixed traits depending on design.

Organizational Analogy

Imagine an organization:

- AI Agents: Much like employees, agents concentrate on performing the task as instructed, with clear directives and a set of procedures in place.

- Agentic AI: Like middle managers, they decide and delegate tasks, monitor progress, and respond to problems.

- Autonomous AI: Acting like upper management, they establish top-level policy and strategy, but delegate day-to-day decision-making to distributed system elements.

This analogy captures the functional hierarchy and the degree of responsibility each stage represents.

Multidimensional Comparison

| Dimension | AI Agents | Agentic AI | Autonomous AI |
|---|---|---|---|
| Goal Scope | Single, fixed task | Multiple adaptive goals | Broad, evolving objectives |
| Learning | Limited or offline | Continuous adaptation | Self-directed learning |
| Planning Depth | Reactive | Multi-step planning | Long-horizon reasoning |
| Human Oversight | High | Moderate | Minimal |
| Coordination | Simple | Multi-agent collaboration | Ecosystem integration |
| Failure Handling | Manual reset | Self-correction | Autonomous recovery |
| Transparency | High | Medium | Low |

This table emphasizes that autonomy evolves along several dimensions, not just independence, but also adaptability, coordination, and decision depth.

Blurred Boundaries

In practice, the lines between AI agents, agentic AI, and autonomous AI become quite blurred. For example, a capable AI agent can demonstrate agentic behaviour when it learns to adapt to its context; at the same time, an agentic system with a high level of autonomy may come close to running itself. Yet many so-called autonomous systems still depend on embedded agents or human operators to ensure safety and control. Taken together, these overlapping capabilities form layers of an ecosystem rather than a set of discrete categories.

Architectural and Design Considerations

As we design AI systems, it is important to think carefully about their architecture as they progress from simple agents to sophisticated, independent systems. Increased autonomy brings new planning, coordination, safety, and control challenges. Understanding these challenges is critical to building systems that are scalable, dependable, and, most importantly, aligned with human intent.

Modular Design

Modular architectures are essential to both agentic and autonomous AI. Individual sub-agents handle perception, planning, control, or communication. A central orchestrator coordinates them, allocating tasks, consolidating results, and resolving conflicts. This modularity makes the system scalable and debuggable, and keeps communication among components transparent.
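
The orchestrator pattern might look like the following minimal sketch. The `Orchestrator` class, the three toy sub-agents, and the "later result wins" conflict rule are all assumptions chosen for brevity; real systems would use richer task allocation and conflict resolution.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
SubAgent = Callable[[State], State]


class Orchestrator:
    """Coordinates sub-agents: allocates tasks and consolidates their results."""

    def __init__(self) -> None:
        self._sub_agents: Dict[str, SubAgent] = {}

    def register(self, name: str, sub_agent: SubAgent) -> None:
        self._sub_agents[name] = sub_agent

    def run(self, pipeline: List[str], state: State) -> State:
        for name in pipeline:
            result = self._sub_agents[name](state)  # allocate the task
            # Consolidate results. Naive conflict rule (an assumption):
            # a later sub-agent overrides an earlier one on the same key.
            state = {**state, **result}
        return state


# Hypothetical sub-agents for a toy driving scenario.
def perception(state: State) -> State:
    return {"obstacle": state["distance_m"] < 5.0}


def planning(state: State) -> State:
    return {"plan": "brake" if state["obstacle"] else "cruise"}


def control(state: State) -> State:
    return {"command": f"execute:{state['plan']}"}


orchestrator = Orchestrator()
for name, agent in [("perception", perception), ("planning", planning), ("control", control)]:
    orchestrator.register(name, agent)

final_state = orchestrator.run(["perception", "planning", "control"], {"distance_m": 3.0})
print(final_state["command"])  # execute:brake
```

Because each sub-agent only reads and returns plain state, any one of them can be tested or replaced in isolation, which is where the debuggability benefit comes from.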

Planning and Meta-Reasoning

Advanced systems incorporate multiple levels of reasoning:

- Reactive Planning: The system reacts immediately and reflexively to changes in the environment or external stimuli, making rapid decisions to maintain stability or meet short-term goals.

- Deliberative Planning: The AI plans in a structured manner, weighing multiple alternative actions and their consequences before choosing how to achieve the goal.

- Meta-Reasoning: The system reasons about its own decision-making, considering which strategies or plans to follow and when they should be changed.

This layered design enables agentic AI to adapt dynamically while maintaining coherence.
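
As a rough illustration of how the three layers could be stacked, the sketch below lets a reflex take priority, uses cost comparison for deliberation, and switches strategy after repeated failures. The function names, the cost dictionaries, and the failure threshold are hypothetical.

```python
from typing import Dict, Optional


def reactive_layer(observation: Dict[str, bool]) -> Optional[str]:
    """Reactive planning: an immediate reflex, or None if no reflex applies."""
    return "stop" if observation["obstacle_close"] else None


def deliberative_layer(options: Dict[str, float]) -> str:
    """Deliberative planning: compare alternatives and pick the lowest cost."""
    return min(options, key=options.get)


def meta_layer(recent_failures: int, max_failures: int = 2) -> bool:
    """Meta-reasoning: decide whether the current strategy should change."""
    return recent_failures >= max_failures


def choose_action(observation: Dict[str, bool], options: Dict[str, float],
                  fallback_options: Dict[str, float], recent_failures: int) -> str:
    reflex = reactive_layer(observation)
    if reflex is not None:
        return reflex                   # reflexes take priority over planning
    if meta_layer(recent_failures):
        options = fallback_options      # the current strategy keeps failing
    return deliberative_layer(options)


routes = {"highway": 10.0, "side_road": 14.0}
backup = {"detour": 20.0}
print(choose_action({"obstacle_close": False}, routes, backup, recent_failures=0))  # highway
print(choose_action({"obstacle_close": False}, routes, backup, recent_failures=3))  # detour
print(choose_action({"obstacle_close": True}, routes, backup, recent_failures=0))   # stop
```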

Safety and Guardrails

As autonomy grows, safety mechanisms become critical:

- Constraint Modules: These apply strict constraints and rules that the AI has to follow, in order not to take harmful or dangerous actions.

- Fallback Mechanisms: In case of anomalies or failures, the system adopts fallback strategies, failsafe shutdowns or conservative operation modes to reduce risk.

- Audit Logs: All actions, decisions, and reasoning steps are recorded so that people, processes, and systems can be held accountable.

- Human Override: A human can intervene or take control of an automated function at any time in an emergency, providing ultimate oversight and safety.

Even “autonomous” systems must include these safeguards to ensure accountability.
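
The four safeguards can be combined in a single wrapper around action execution. The following is a minimal sketch under assumed names (`GuardedExecutor`, `safe_mode`, and the example actions are hypothetical), with the priority order human override, then fallback, then constraints chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class GuardedExecutor:
    forbidden_actions: Set[str]
    human_override: Optional[str] = None  # set by an operator to take control
    audit_log: List[Dict[str, str]] = field(default_factory=list)

    def execute(self, proposed: str, anomaly: bool = False) -> str:
        if self.human_override is not None:
            final, reason = self.human_override, "human override"
        elif anomaly:
            final, reason = "safe_mode", "fallback after anomaly"
        elif proposed in self.forbidden_actions:
            final, reason = "safe_mode", "blocked by constraint"
        else:
            final, reason = proposed, "allowed"
        # Audit log: record what was proposed, what happened, and why.
        self.audit_log.append({"proposed": proposed, "final": final, "reason": reason})
        return final


executor = GuardedExecutor(forbidden_actions={"delete_all"})
print(executor.execute("send_report"))  # send_report
print(executor.execute("delete_all"))   # safe_mode
print(executor.audit_log[-1]["reason"]) # blocked by constraint
```

Every path through `execute` writes to the audit log, so blocked and overridden actions are as traceable as permitted ones.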

Interaction and Feedback

Effective agentic systems depend on robust feedback loops:

- Input Sensing: The system runs in a continuous loop, ingesting data through sensors, user inputs, or other sources so that it can keep track of its surroundings and maintain situational awareness.

- Outcome Evaluation: To learn from success or failure, the system evaluates the effectiveness of its actions by comparing results against intended outcomes or performance targets.

- Adaptation: Based on its experience (from observations or rewards), the system modifies its internal models or policies to perform better in the future and continue to satisfy its objectives.

This constant feedback enables learning and prevents drift from intended objectives.
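
A very small version of the evaluate-then-adapt step is sketched below. It assumes a scoring rule (negative distance from the target) and a simple running-estimate update; `FeedbackLearner` and its parameters are hypothetical and stand in for whatever learning method a real system uses.

```python
from typing import Dict, List


class FeedbackLearner:
    """Keeps a value estimate per action and updates it from observed outcomes."""

    def __init__(self, actions: List[str], learning_rate: float = 0.5) -> None:
        self.values: Dict[str, float] = {action: 0.0 for action in actions}
        self.learning_rate = learning_rate

    def choose(self) -> str:
        # Pick the action with the highest current estimate.
        return max(self.values, key=self.values.get)

    def update(self, action: str, observed: float, target: float) -> float:
        # Outcome evaluation: score is the negative distance from the target.
        score = -abs(target - observed)
        # Adaptation: move the estimate part of the way toward the new score.
        self.values[action] += self.learning_rate * (score - self.values[action])
        return score


learner = FeedbackLearner(["strategy_a", "strategy_b"])
learner.update("strategy_a", observed=8, target=10)   # missed the target by 2
learner.update("strategy_b", observed=10, target=10)  # hit the target
print(learner.choose())  # strategy_b
```

Comparing each outcome with the intended target, rather than with the previous outcome, is what keeps the estimates anchored to the original objective.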

Trade-Offs, Risks, and Governance

As AI systems become more autonomous and gain decision-making authority, balancing new capabilities with safety is more important than ever. Each added degree of freedom brings its own trade-offs among control, flexibility, and accountability. Understanding these trade-offs, the risks, and the governance needed to mitigate them is critical for building AI that is powerful while remaining trustworthy.

Balancing Autonomy and Control

The push for greater autonomy introduces trade-offs:

- Flexibility vs. Predictability: As AI systems become more flexible and autonomous, their behaviors may be less predictable and controllable.

- Performance vs. Transparency: Highly complex models may outperform simpler ones, but few people will be able to understand or explain how or why they reached a given decision.

- Speed vs. Safety: Autonomous systems often cannot afford slow decision-making, yet they must still deliver safe and reliable results.

Designers must find an equilibrium between independence and oversight.

Risk Factors

Common risks in advanced AI systems include:

- Goal Misalignment: The AI might pursue its goals in a way that conflicts with what is actually desired.

- Unintended Consequences: Actions can generate side effects or impacts that were not anticipated when the system was designed.

- Systemic Errors: Tiny errors or biases in one part can propagate through connected components, magnifying their overall impact.

- Loss of Human Oversight: Over-reliance on automation may erode human vigilance and decision authority, or extend automation into areas where it should not operate.

Governance Principles

Robust governance frameworks help manage these risks through:

- Clear Objective Setting: Determining clear goals or limits ensures that AI training and decision-making stay within the right boundaries.

- Transparency Standards: Providing mechanisms that enable decisions to be audited and explained goes a long way toward building trust and accountability.

- Regulatory Compliance: Ensuring that AI processes comply with ethical and legal norms is vital for security and social acceptance.

- Continuous Monitoring: System performance is continuously monitored to identify behavioral drift, errors, or failures before they escalate.

- Human-in-the-Loop Design: Keeping humans involved in key decisions ensures oversight, ethical judgment, and accountability.

Trust and Explainability

Trust is the foundation of adoption. For users and regulators to rely on agentic or autonomous AI, systems must be able to explain:

- Why a decision was made.

- What data influenced it.

- How confident the system was.

Explainability transforms AI from an opaque black box into a trustworthy collaborator. Read: What is Explainable AI (XAI)?
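
One lightweight way to support those three questions is to store an explanation alongside every decision. The record below is a hypothetical structure, and the refund scenario and field names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class DecisionRecord:
    decision: str
    reason: str             # why the decision was made
    inputs: Dict[str, Any]  # what data influenced it
    confidence: float       # how confident the system was, from 0.0 to 1.0

    def explain(self) -> str:
        used = ", ".join(f"{key}={value}" for key, value in sorted(self.inputs.items()))
        return (f"Decision: {self.decision}. Why: {self.reason}. "
                f"Data: {used}. Confidence: {self.confidence:.0%}.")


record = DecisionRecord(
    decision="approve_refund",
    reason="order arrived damaged",
    inputs={"order_id": 42, "photo_attached": True},
    confidence=0.87,
)
print(record.explain())
# Decision: approve_refund. Why: order arrived damaged. Data: order_id=42, photo_attached=True. Confidence: 87%.
```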

Use Cases and Real-World Examples

Let us review a few use cases of each.

Robotics and Transportation

| AI Agents | Agentic AI | Autonomous AI |
|---|---|---|
| Sensor modules for lane detection or obstacle avoidance. | A vehicle’s control system integrates navigation, decision-making, and safety monitoring. | A self-driving car capable of navigating any environment without human help. |

Digital Assistants

| AI Agents | Agentic AI | Autonomous AI |
|---|---|---|
| Voice command interpreters (e.g., setting reminders). | Smart assistants that plan, prioritize, and learn user habits. | Future assistants that act proactively, managing schedules, negotiating services, and resolving conflicts autonomously. |

Infrastructure and IT Operations

| AI Agents | Agentic AI | Autonomous AI |
|---|---|---|
| Monitoring systems that detect anomalies. | Self-healing infrastructure that diagnoses and fixes issues automatically. | Fully independent platforms that manage servers, networks, and deployments with minimal human input. |

Conclusion

Moving from AI agents to agentic AI and then on to autonomous AI is a significant step in how machines process information and behave. Each level adds more flexibility, autonomy, and complexity, from rule-following agents to self-organizing systems and fully autonomous entities. Together, they make up a continuum that represents how close an AI system is to being a truly self-directed intelligence.
