AI Agency vs. Autonomy: Understanding Key Differences


Artificial Intelligence (AI) has evolved from a computational tool into a decision maker. Today it can execute tasks on its own and, in some cases, operate autonomously. Among the new concepts in this field, AI agency is one of the most discussed, both in research and in practice.

These days, many people, including seasoned practitioners, confuse agency and autonomy in AI systems. Although the two overlap, they are not identical. Understanding what it means for an AI system to possess agency is essential for addressing the ethical, technical, and practical challenges that AI poses to modern societies.


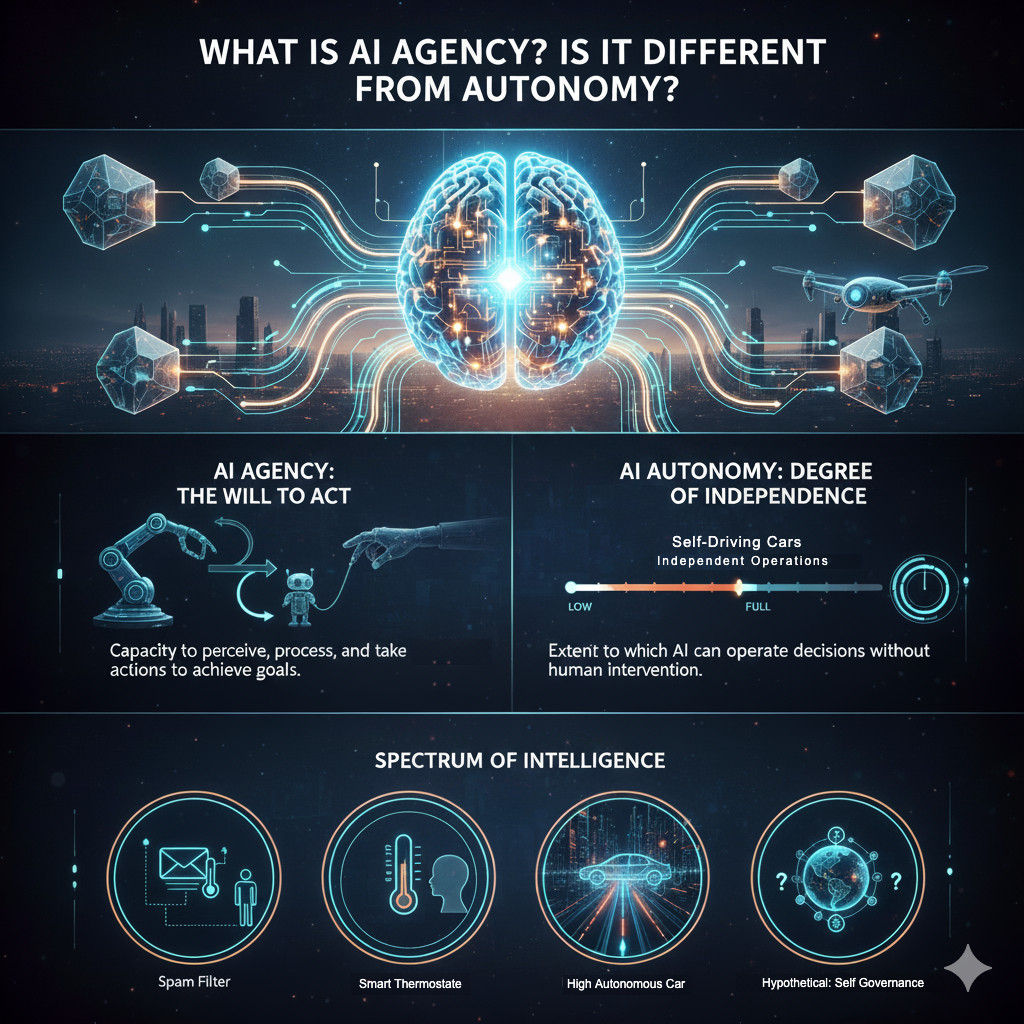

What is AI Agency?

AI agency is the capacity of an AI system to decide and act on its own, based on its internal processes, goals, or external stimuli. Unlike simple algorithms that passively react to instructions from an external environment, an agent with real agency can actively pursue objectives, adapt to new conditions, and even shape its environment. Such a system acts with purpose and can participate in the world in substantial ways.

Autonomous vehicles are one example: they must decide how to move through traffic and avoid obstacles. Financial algorithms that choose trading strategies based on market conditions are another. In these cases, the AI is not just following an existing set of rules. It takes part in forming judgments and influences outcomes in a way that appears intentional or meaningful.

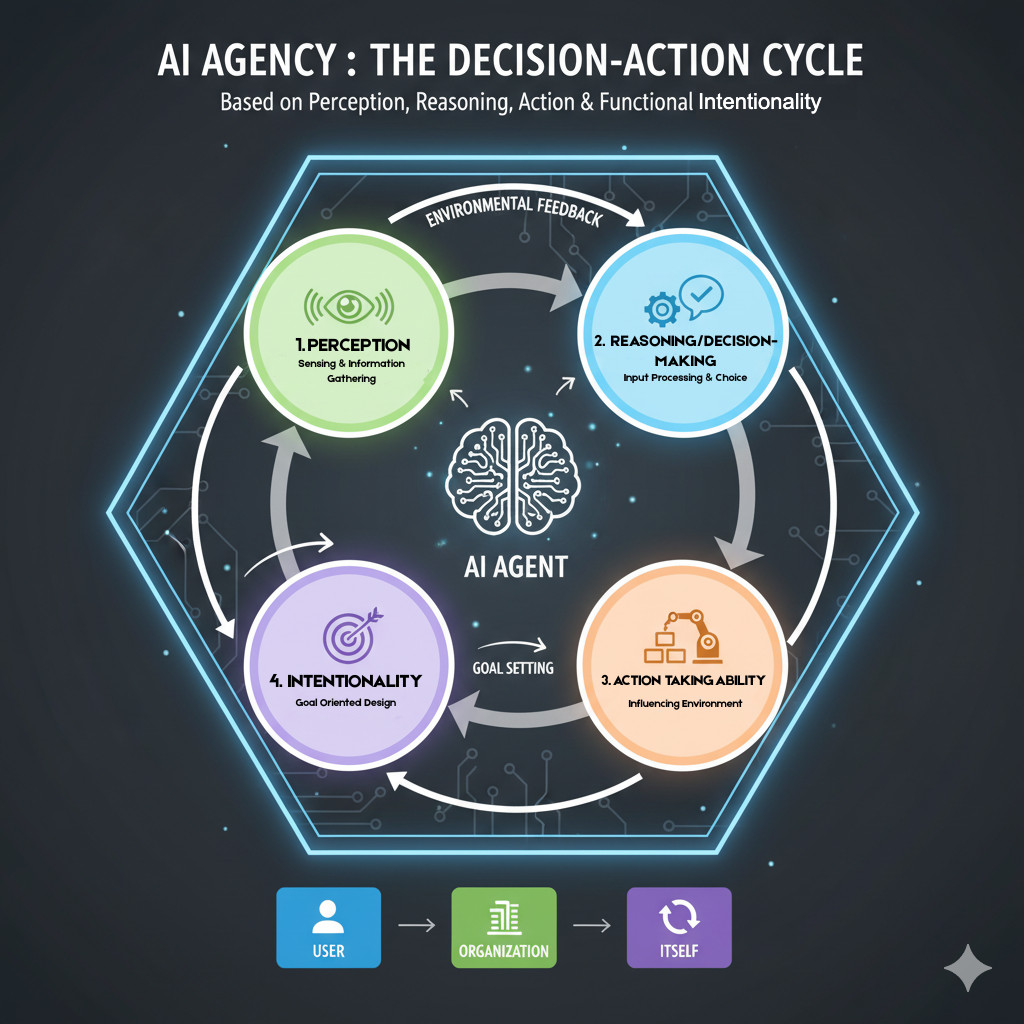

Understanding the Concept of Agency

In philosophy and the social sciences, agency is the ability of a person or thing to act willfully and make decisions that influence the world. In the context of AI, agency can be defined as the ability of an AI system to act on behalf of a user, an organization, or itself through decision-making mechanisms. The key aspects of agency are:

- Perception: The ability to sense or gather information about its surroundings.

- Reasoning or Decision-making: The ability to take inputs and decide on a course of action.

- Action-taking: The ability to affect its environment or carry out a decision.

- Intentionality (in a functional sense): AI does not have human consciousness, but it can be designed to simulate purposeful, goal-directed action.

AI agents take many different forms:

- Virtual assistants such as ChatGPT, Siri, or Alexa that respond to spoken or written instructions.

- Self-driving cars that navigate city streets with minimal human control.

- Algorithmic trading systems that buy and sell stocks based on market data.

- Robotic process automation (RPA) software that executes repetitive business operations.
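The aspects above can be illustrated with a minimal perceive-decide-act loop. This is a toy sketch built on stated assumptions. The thermostat scenario, the `ThermostatAgent` class, and the `run` helper are hypothetical and do not come from any real framework. The target temperature serves as the goal that makes the agent's behavior purposeful in the functional sense described above.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Hypothetical agent that keeps a simulated room near a target temperature."""
    target: float          # the goal that gives the agent's actions a purpose
    tolerance: float = 0.5

    def perceive(self, reading: float) -> float:
        # Perception: take in information about the environment.
        return reading

    def decide(self, temperature: float) -> str:
        # Reasoning: choose the action that moves the world toward the goal.
        if temperature < self.target - self.tolerance:
            return "heat"
        if temperature > self.target + self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, temperature: float) -> float:
        # Action: change the simulated environment by one degree per step.
        if action == "heat":
            return temperature + 1.0
        if action == "cool":
            return temperature - 1.0
        return temperature


def run(agent: ThermostatAgent, temperature: float, steps: int) -> list[str]:
    """Run the perceive-decide-act loop and record each chosen action."""
    actions = []
    for _ in range(steps):
        observed = agent.perceive(temperature)
        action = agent.decide(observed)
        temperature = agent.act(action, temperature)
        actions.append(action)
    return actions


print(run(ThermostatAgent(target=21.0), temperature=18.0, steps=5))
# ['heat', 'heat', 'heat', 'idle', 'idle']
```

The agent does not understand warmth. Its apparent intentionality comes entirely from the goal built into `decide`.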

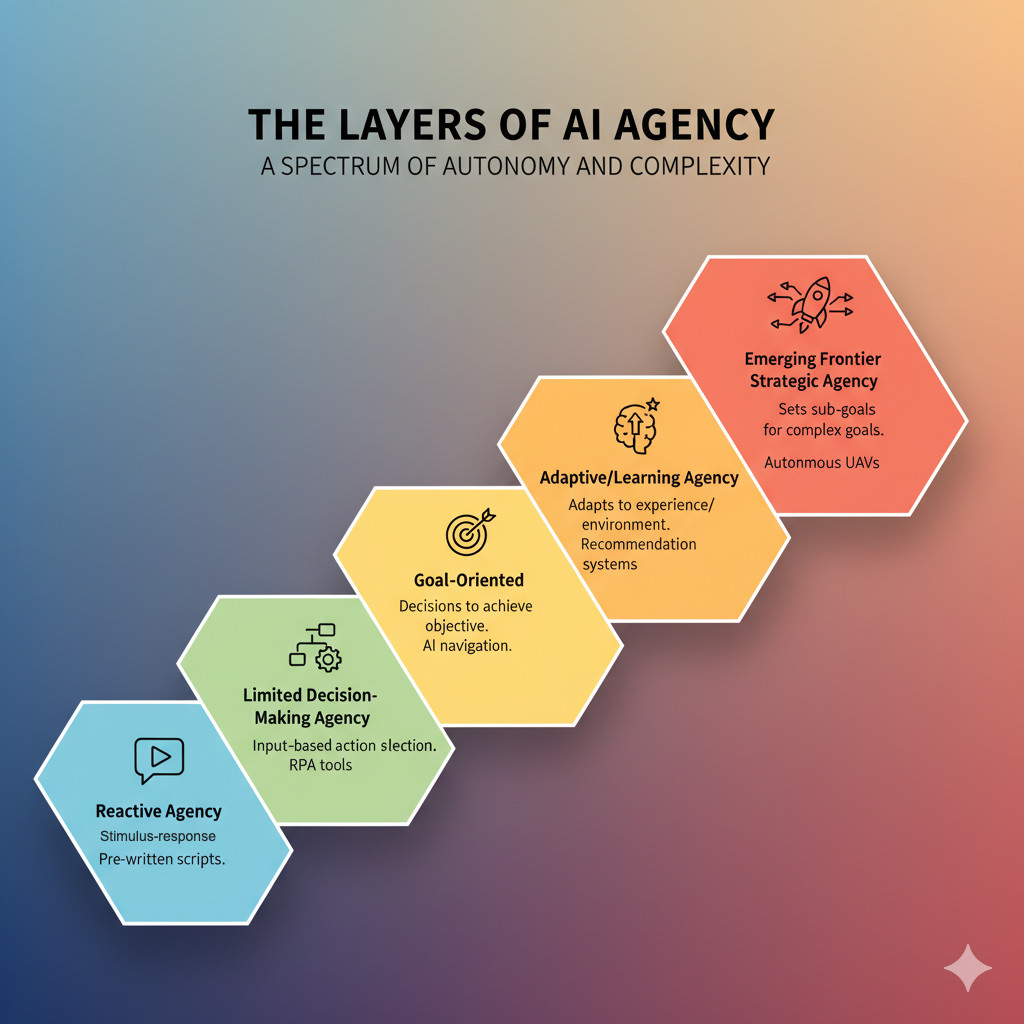

The Layers of AI Agency

AI agency rests on multiple layers, and each one adds to the system's ability to act, make decisions, and influence outcomes. These layers reflect different levels of complexity in how the AI interacts with its environment and pursues its objectives. AI agency is not binary; it is a spectrum. Its levels are distinguished by the system's capabilities and by its autonomy in making decisions.

- Reactive Agency: Systems follow a simple stimulus-response pattern.

- Example: A chatbot that replies with pre-written scripts when specific keywords appear.

- Limited Decision-Making Agency: Systems receive inputs and select from a predefined set of actions.

- Example: RPA tools that follow a predefined sequence of work steps.

- Goal-Oriented Agency: The AI makes decisions aimed at achieving a specific objective.

- Example: An AI-based navigation system that recomputes routes from real-time traffic information.

- Adaptive or Learning Agency: The AI adapts based on experience or changes in its environment.

- Example: Self-learning recommendation systems on platforms like Netflix or Amazon.

- Strategic Agency (emerging frontier): The system sets its own sub-goals in order to achieve complex goals.

- Example: Advanced UAV drones that carry out multi-step missions without step-by-step instructions.
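A few lines of code can show the difference between the reactive and goal-oriented layers. This example is illustrative and rests on assumptions. The keyword rules, the road network, and the travel times are all hypothetical. Dijkstra's shortest-path algorithm stands in for whatever a production navigation system actually uses.

```python
import heapq


def reactive_reply(message: str) -> str:
    """Reactive agency: a fixed stimulus -> response mapping (hypothetical rules)."""
    rules = {"hours": "We are open 9-5.", "price": "See our pricing page."}
    for keyword, reply in rules.items():
        if keyword in message.lower():
            return reply
    return "Sorry, I don't understand."


def shortest_route(graph: dict[str, dict[str, float]], start: str, goal: str) -> list[str]:
    """Goal-oriented agency: search for the route that best reaches the goal (Dijkstra)."""
    queue = [(0.0, start, [start])]  # (travel time so far, node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return []  # goal unreachable


roads = {"A": {"B": 5, "C": 4}, "B": {"D": 5}, "C": {"D": 8}}
print(reactive_reply("What are your hours?"))  # We are open 9-5.
print(shortest_route(roads, "A", "D"))          # ['A', 'B', 'D']
roads["B"]["D"] = 20                            # simulated real-time traffic jam
print(shortest_route(roads, "A", "D"))          # ['A', 'C', 'D']
```

The reactive function can only match inputs it was given in advance. The planner derives its action from the goal again whenever conditions change, and that is why it can respond to the simulated traffic jam.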

Why AI Agency is Not the Same as Autonomy

Agency and autonomy are often used interchangeably when discussing Artificial Intelligence (AI). Nevertheless, they are different concepts that describe different aspects of how an AI system functions. Both are essential to understanding how AI makes decisions and executes tasks, but they focus on different aspects of how these systems behave and interact with the world around them. Read: AI Glossary: Top 20 AI Terms that You Should Know.

How Is Agency Different from Autonomy?

To see the distinction, it helps to define autonomy first. Autonomy is the degree of independence a system has in performing tasks or making decisions without human involvement, and a system with a high degree of it is called autonomous AI. An autonomous system can act independently, in most cases with little or no human oversight. The key difference lies in the intentionality and purposefulness of the AI's actions.

- AI autonomy means the system is not under constant human control. It can perform tasks, such as following a route or making real-time decisions, but these are usually governed by a set of predefined rules, models, or parameters.

- AI agency, in turn, means the system can act purposefully. Its decisions do more than follow set guidelines: they rest on an assessment of the situation, they adapt to changes, and they are directed toward goals tied to the system's internal mechanics.

AI agency implies decision-making, goals, and flexibility, all of which are necessary for intelligent behavior.

- Decision-making: The ability to evaluate the available options and choose the most favorable one given the information presented.

- Goal-setting: Goals that guide the system's operation, for example, optimizing a process or achieving a particular end result.

- Flexibility: The ability to adapt to changes in the environment and to correct behavior based on new knowledge or altered circumstances.

Together, these factors make AI systems more than merely functional. They allow the systems to improve and develop, so they can handle complicated tasks.
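A small adaptive agent can combine decision-making, goals, and flexibility. The sketch below is a hypothetical example and does not describe any specific product. `AdaptiveAgent` greedily picks the option with the highest estimated payoff, which is decision-making toward a goal. It then updates its estimates from feedback using a constant step size, which gives it flexibility: it can change its choice when the environment changes. The option names and payoff values are invented for illustration.

```python
class AdaptiveAgent:
    """Hypothetical greedy learner: choose the best-looking option, then learn from feedback."""

    def __init__(self, options: list[str], initial_estimate: float = 1.0, step_size: float = 0.5):
        # Optimistic initial estimates make the agent try every option early on.
        self.estimates = {option: initial_estimate for option in options}
        self.step_size = step_size

    def choose(self) -> str:
        # Decision-making: pick the option that currently looks best for the goal.
        return max(self.estimates, key=self.estimates.get)

    def learn(self, option: str, reward: float) -> None:
        # Flexibility: move the estimate part of the way toward the observed reward.
        self.estimates[option] += self.step_size * (reward - self.estimates[option])


def simulate(agent: AdaptiveAgent, payoffs: dict[str, float], rounds: int) -> list[str]:
    """Let the agent act for a number of rounds in an environment with fixed payoffs."""
    choices = []
    for _ in range(rounds):
        option = agent.choose()
        agent.learn(option, payoffs[option])
        choices.append(option)
    return choices


agent = AdaptiveAgent(["A", "B"])
print(simulate(agent, {"A": 0.2, "B": 0.8}, 5))  # ['A', 'B', 'B', 'B', 'B']
# The environment changes: option B stops paying off.
print(simulate(agent, {"A": 0.2, "B": 0.0}, 6))  # ['B', 'A', 'B', 'A', 'A', 'A']
```

A constant step size gives recent feedback more weight than old feedback. That is why the agent abandons option "B" soon after its payoff drops. A plain running average would adapt much more slowly.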

AI Agency Without Full Autonomy

AI agency without full autonomy describes systems that can reason, process information, and take meaningful actions but still need human guidance or oversight for higher-level decisions. These systems show intelligent behavior and agency in specific contexts. Yet they lack complete independence, so humans remain responsible for critical or moral choices.

- Cognitive Systems Theory: This theory holds that intelligent systems (including humans) can behave in a reasoned manner: they process information, reach decisions, and change their environment. Nevertheless, they may require intervention by external agents (including human beings) to guide certain activities.

- Hybrid Decision-Making Systems: The hybrid human-AI decision-making framework suggests that AI systems with agency may act autonomously in certain activities but still need human involvement in high-level decisions, so that the system operates within a moral and practical context.

- Consider AI-based medical diagnosis. The system examines patient information, suggests likely diagnoses, and recommends treatment. It exhibits agency because it processes information and produces actionable results. However, it is not fully autonomous, because a human doctor makes the final decision.
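The medical example can be sketched as a human-in-the-loop pattern. Everything here is hypothetical. `ai_recommend` is a toy lookup that stands in for a real diagnostic model. `reviewer` is any callable that represents the doctor: it either approves the recommendation by returning `None` or overrides it with its own decision.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Recommendation:
    diagnosis: str
    confidence: float
    treatment: str


def ai_recommend(symptoms: set[str]) -> Recommendation:
    # Hypothetical toy "model": a rule lookup standing in for a real diagnostic system.
    if {"fever", "cough"} <= symptoms:
        return Recommendation("flu", 0.7, "rest and fluids")
    return Recommendation("unknown", 0.2, "refer to specialist")


def final_decision(rec: Recommendation,
                   reviewer: Callable[[Recommendation], Optional[str]]) -> str:
    # Agency: the AI has produced an actionable recommendation.
    # Limited autonomy: a human reviewer approves it (None) or overrides it.
    override = reviewer(rec)
    return rec.treatment if override is None else override


rec = ai_recommend({"fever", "cough"})
print(final_decision(rec, lambda r: None))                # rest and fluids
print(final_decision(rec, lambda r: "order more tests"))  # order more tests
```

The AI contributes an actionable recommendation, and that is its agency. The structure of `final_decision` always gives a human the last word, and that limits its autonomy.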

AI Autonomy as a Sub-Agency

A drone that can choose its flight route, avoid obstacles, and deliver goods exhibits both agency and autonomy. Its agency shows in its capacity to act meaningfully in the environment. Its autonomy shows in its freedom from constant human control.

Why Does the Distinction Matter?

The distinction between agency and autonomy is crucial in AI decision-making, especially for responsibility and accountability. Agency implies purposeful action by the AI while humans, whether creators or operators, stay ultimately responsible. Autonomy implies self-regulation without human interference. The difference also shapes AI design and monitoring. An emphasis on agency leaves room for oversight, while an emphasis on autonomy prioritizes independent decision-making. Ethically, agency highlights the need for AI systems to make morally sound decisions in cooperation with humans, while autonomy raises concerns about adaptability and control. Finally, in governance, highly autonomous systems, such as autonomous weapons, demand stricter regulation to ensure safety and prevent misuse.

Ethical Considerations

- Accountability: When an AI system makes an unfavorable decision, where does responsibility lie: with the people who built or operate it, or with the AI itself?

- Agency Debate: The AI's capacity to take actions with ethical consequences raises a further question of liability and of who is actually making the decision.

Legal and Regulatory Impact

- AI Governance: Highly autonomous systems are likely to be subject to specific regulations that set the limits of liability and define when their operation must be blocked. Read: AI Compliance for Software.

- Human-in-the-Loop Rules: One approach to regulating high-stakes AI (such as medical diagnosis or military drones) is to guarantee human oversight. This grants the system less autonomy while maintaining its agency.

Practical Deployment

- Control vs. Efficiency: Autonomous AI can conduct operations very efficiently. Yet without proper control, it carries a high risk of serious harm, such as ethical mistakes or unexpected results.

- Human-AI Collaboration: Systems with AI agency but limited autonomy offer a middle ground between these two extremes. The AI boosts productivity, while a human keeps its use accountable.

- Action Decision: AI can be an efficient means of managing information. Humans then bring critical analysis of that information to decisions involving ethical, legal, and social issues. This helps ensure that data is not used out of context.

- The Theory of Shared Responsibility: This theory holds that combining AI decision-making with human judgment leads to a more efficient and safer environment. The AI handles routine jobs, while humans take care of higher-level decisions and ethics.
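The division of labor in shared responsibility can be sketched as a simple routing rule. This illustration is hypothetical. The `Task` type, the 0-to-1 `risk` score, and the 0.5 threshold are assumptions chosen for the example and do not belong to any standard framework. The sketch leaves open how risk is scored.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    risk: float  # hypothetical 0..1 score of ethical, legal, or safety impact


def route(tasks: list[Task], risk_threshold: float = 0.5) -> tuple[list[str], list[str]]:
    """Send routine, low-risk tasks to the AI and escalate high-stakes ones to humans."""
    handled_by_ai, escalated_to_humans = [], []
    for task in tasks:
        if task.risk < risk_threshold:
            handled_by_ai.append(task.description)
        else:
            escalated_to_humans.append(task.description)
    return handled_by_ai, escalated_to_humans


tasks = [
    Task("sort incoming invoices", 0.1),
    Task("deny a loan application", 0.8),
    Task("summarize meeting notes", 0.2),
]
ai_tasks, human_tasks = route(tasks)
print(ai_tasks)     # ['sort incoming invoices', 'summarize meeting notes']
print(human_tasks)  # ['deny a loan application']
```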

Examples Highlighting the Difference

Agency in AI systems refers to their capacity to make decisions and act toward a particular goal or objective. Autonomy, by contrast, varies in degree and indicates how far such systems can operate without human intervention. Systems that have agency but not full autonomy still require a human to make the critical decisions. Systems that combine agency with a high degree of autonomy can perform tasks on their own and make decisions in real time.

AI Agency Without Autonomy

- Google Translate: Acts as an intelligent assistant but only translates text when a human provides it.

- Fraud Detection Systems: Flag suspicious transactions, but require human investigators to confirm.

AI Agency With Autonomy

- Autonomous Vehicles: Make real-time driving decisions without continuous human commands.

- High-Frequency Trading Bots: Execute market actions based on algorithms, often faster than human reaction time.

Minimal Agency and Low Autonomy

- Basic Automation Scripts: Perform repetitive actions but cannot adapt or make choices.
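Agency and autonomy are separate dimensions, so the examples above can be placed on two independent axes. The sketch below deliberately simplifies. The article treats agency as a spectrum, but here each axis is reduced to two hypothetical levels, and the ratings just restate the groupings above.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass(frozen=True)
class SystemProfile:
    name: str
    agency: Level    # capacity to perceive, decide, and act toward a goal
    autonomy: Level  # independence from human intervention while acting


# Labels for the three combinations discussed in the examples above.
CATEGORIES = {
    (Level.HIGH, Level.LOW): "AI agency without autonomy",
    (Level.HIGH, Level.HIGH): "AI agency with autonomy",
    (Level.LOW, Level.LOW): "minimal agency and low autonomy",
}


def categorize(profile: SystemProfile) -> str:
    # The remaining combination (low agency, high autonomy) is not covered above.
    return CATEGORIES.get((profile.agency, profile.autonomy), "not covered above")


systems = [
    SystemProfile("Fraud detection system", Level.HIGH, Level.LOW),
    SystemProfile("Autonomous vehicle", Level.HIGH, Level.HIGH),
    SystemProfile("Basic automation script", Level.LOW, Level.LOW),
]
for system in systems:
    print(f"{system.name}: {categorize(system)}")
```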

The Future of AI Agency

The future of AI agency will be shaped by AI's growing ability to make decisions, shape outcomes, and interact with the world on its own. As AI systems evolve, agency is expected to shift from rule-based behavior toward autonomous decision-making. Cognitive systems theory can help explain this development, as AI systems increasingly gain the capacity to think abstractly, generalize, and respond to changing circumstances. This may enable AI systems to act intentionally in novel and unpredictable situations.

AI may become able to process complex information and solve problems in a more human-like way by using cognitive architectures modeled on human cognition, such as ACT-R or Soar. Such architectures could also let AI systems make autonomous decisions as conditions change. However, this shift presupposes real-time autonomous decision-making, including the ability to modify the system's own behavior.

As AI evolves, it is acquiring more sophisticated forms of agency. This evolution includes multiple AI agents communicating with one another in pursuit of a shared purpose. The challenge is to strike a balance between high agency and controllable autonomy, so that AI becomes a companion rather than an unpredictable agent.

Wrapping Up

AI agency and autonomy are complementary but distinct concepts. Recognizing this distinction allows developers, regulators, and society to design safer AI, allocate responsibility clearly, and embrace AI’s potential without ceding uncontrolled power to machines.

- AI Agency focuses on the capacity to perceive, decide, and act meaningfully.

- AI Autonomy refers to the degree of independence in performing those actions without human intervention.

In essence, agency is about action; autonomy is about independence. An AI can act without deciding on its own, or it can decide and act without supervision. How much freedom we grant it determines its role in our lives.
