Gap Analysis in QA: How Do You Master It?

Gap analysis is an important tool for any team, and QA is no exception. Even so, many teams leave it out of their QA process, often because they are not confident about how to conduct one. That is a missed opportunity, because gap analysis shows you exactly what stands between your current process and the results you are aiming for. So let’s dive right in.

Why Gap Analysis is Often Ignored in QA Teams

Despite being discussed frequently in theory, gap analysis is surprisingly absent from many real-world QA processes. Teams are usually busy meeting sprint deadlines, responding to production issues, and keeping automation running. In such environments, quality becomes reactive rather than strategic.

Another common issue is that QA teams often inherit processes instead of designing them. When teams operate on autopilot, they rarely stop to ask whether the current approach is still aligned with business goals, product complexity, or release velocity. Gap analysis forces that pause, and that is precisely why it feels uncomfortable to many teams.

There is also a misconception that gap analysis is a management-only exercise. In reality, the most effective QA gap analyses are collaborative efforts involving testers, developers, product managers, and leadership.

What is Gap Analysis in QA?

In general, gap analysis is the practice of comparing the current state of your organization or team with the state you want it to reach.

In the world of QA, gap analysis usually means comparing the observed results against the expected results of the QA process.

For example, you may currently average four hotfixes per month, while your goal is no more than two. Addressing this gap between the present and the targeted state is what gap analysis is all about. You need to assess the difference between the two states and determine what can be done to get you to where you want to be.

Maybe it’s increasing test coverage in a particular area? Or improving the process in some way?
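To make the hotfix example concrete, here is a minimal sketch of how the gap could be expressed as a number. The function name `metric_gap` and the choice to report both an absolute and a relative gap are illustrative assumptions, not a standard formula.

```python
def metric_gap(current: float, target: float) -> dict:
    """Return the absolute and relative gap between the current and target values.

    Assumes a "lower is better" metric such as hotfixes per month.
    """
    absolute = current - target
    # Relative gap: the share of the current value that has to be removed.
    relative = absolute / current if current else 0.0
    return {"absolute": absolute, "relative": relative}


# Four hotfixes per month today, a target of no more than two.
hotfix_gap = metric_gap(current=4, target=2)
print(hotfix_gap)  # {'absolute': 2, 'relative': 0.5}
```

In other words, reaching the target means cutting hotfixes by half, which is a more useful starting point for planning than "we have too many hotfixes."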

Explaining the Importance of Gap Analysis in QA

If you’re working in quality assurance, it’s almost guaranteed that you’re part of a broader engineering team that will need to understand why gap analysis is essential for the QA process and the whole organization.

If you find yourself needing to explain to others why gap analysis matters for QA, here are the benefits to point to:

- Identify and remove inefficiencies to eliminate wasted time

- Speed up the test lifecycle

- Catch more errors before release

- Reduce the number of hotfixes after deployment

- Improve documentation so that fewer changes are overlooked

- Produce higher-quality test reports

With these advantages in mind, you’ll be able to explain to others in your organization why gap analysis for QA is worth the investment.

Read: Why Critical and Lateral Thinking Matter for Testers.

Essential QA Gaps to Identify

Quality gaps in modern QA organizations are no longer limited to missing test cases or late defect detection. As systems grow more complex and release cycles accelerate, QA teams must analyze multiple categories of gaps to understand where quality is breaking down and why.

- Process Gaps: Occur when essential testing activities are missing, poorly sequenced, or inconsistently executed across the delivery lifecycle. Examples include skipping exploratory testing, late involvement of QA, or over-reliance on manual workflows that slow releases and increase human error.

- Coverage Gaps: Emerge when critical business flows, integrations, or user journeys are not adequately tested. These gaps often result in production failures because edge cases, negative scenarios, or cross-system interactions were ignored during testing.

- Skill Gaps: Arise when QA teams lack expertise in areas such as test automation, AI-driven testing, performance engineering, or domain-specific knowledge. Without the right skills, teams struggle to design effective tests or adapt to modern engineering practices.

- Tooling Gaps: Occur when teams rely on fragmented tools that do not integrate well with each other or with the CI/CD pipeline. Poor tooling choices lead to duplicated effort, limited visibility, and slower feedback across the testing process.

- Environment Gaps: Happen when test environments do not accurately reflect production conditions. Differences in infrastructure, configurations, or third-party dependencies often cause issues that only surface after deployment.

- Data Gaps: Caused by unrealistic, outdated, or insufficient test data that fails to represent real user behavior. This limits the effectiveness of testing and increases the risk of defects related to data integrity, security, or scale.

- Feedback Gaps: Exist when QA teams do not systematically learn from production incidents and customer-reported defects. Without closed feedback loops, the same issues tend to reappear, preventing continuous quality improvement.
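Of the categories above, coverage gaps are probably the easiest to check mechanically. The sketch below treats it as a simple set comparison between the flows the business considers critical and the flows that currently have tests. The flow names are hypothetical placeholders; in practice both lists would come from your own requirements and test management data.

```python
# Hypothetical inventory of business-critical user flows.
critical_flows = {"sign up", "log in", "checkout", "refund", "password reset"}

# Hypothetical list of flows that currently have at least one test.
tested_flows = {"sign up", "log in", "checkout"}

# The coverage gap is whatever is critical but untested.
coverage_gaps = sorted(critical_flows - tested_flows)
coverage_ratio = len(critical_flows & tested_flows) / len(critical_flows)

print(coverage_gaps)            # ['password reset', 'refund']
print(f"{coverage_ratio:.0%}")  # 60%
```

The other gap types are harder to reduce to a single comparison, but the same idea applies: write down what should exist, write down what does exist, and look at the difference.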

Read: What is Gamma Testing?

How to Do QA Gap Analysis

While different teams may follow different processes, the steps for an effective gap analysis process in QA are typically very similar:

- First, define your ideal or expected outcome. Think about the practices your QA team should be using and whether any tools would improve how the team operates. Also decide which key metrics matter and what values they should show for your QA team.

- Next, inspect the current state of your QA process and compare it with the ideal state you identified earlier. Is your team actually adhering to the prescribed QA processes? What tools do you currently use, and do you use them effectively? What metrics do you currently have in place?

- Finally, design a solution. Having defined where the QA process needs to be and compared that with where it is today, you know where the problems are and are in a good position to come up with a plan to address them.
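The three steps above can be sketched in a few lines of code. This is only an illustration under stated assumptions: the `Metric` class, its `shortfall` method, and every number except the hotfix figures are invented for the example, and ranking gaps by relative shortfall is just one reasonable way to decide what to address first.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    target: float                  # step 1: the expected outcome
    current: float                 # step 2: the observed state
    higher_is_better: bool = True

    def shortfall(self) -> float:
        """Relative distance from the target; 0.0 means the target is met."""
        if self.higher_is_better:
            missing = self.target - self.current
        else:
            missing = self.current - self.target
        return max(missing, 0.0) / self.target if self.target else 0.0


# Illustrative values only.
metrics = [
    Metric("automated regression coverage (%)", target=80, current=60),
    Metric("hotfixes per month", target=2, current=4, higher_is_better=False),
    Metric("regression run time (min)", target=30, current=25, higher_is_better=False),
]

# Step 3: keep only the metrics that miss their target, largest gap first.
gaps = sorted(
    (m for m in metrics if m.shortfall() > 0),
    key=lambda m: m.shortfall(),
    reverse=True,
)

for m in gaps:
    print(f"{m.name}: {m.shortfall():.0%} away from target")
# hotfixes per month: 100% away from target
# automated regression coverage (%): 25% away from target
```

The run time metric drops out because it already meets its target, which leaves the plan focused on the two real gaps.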

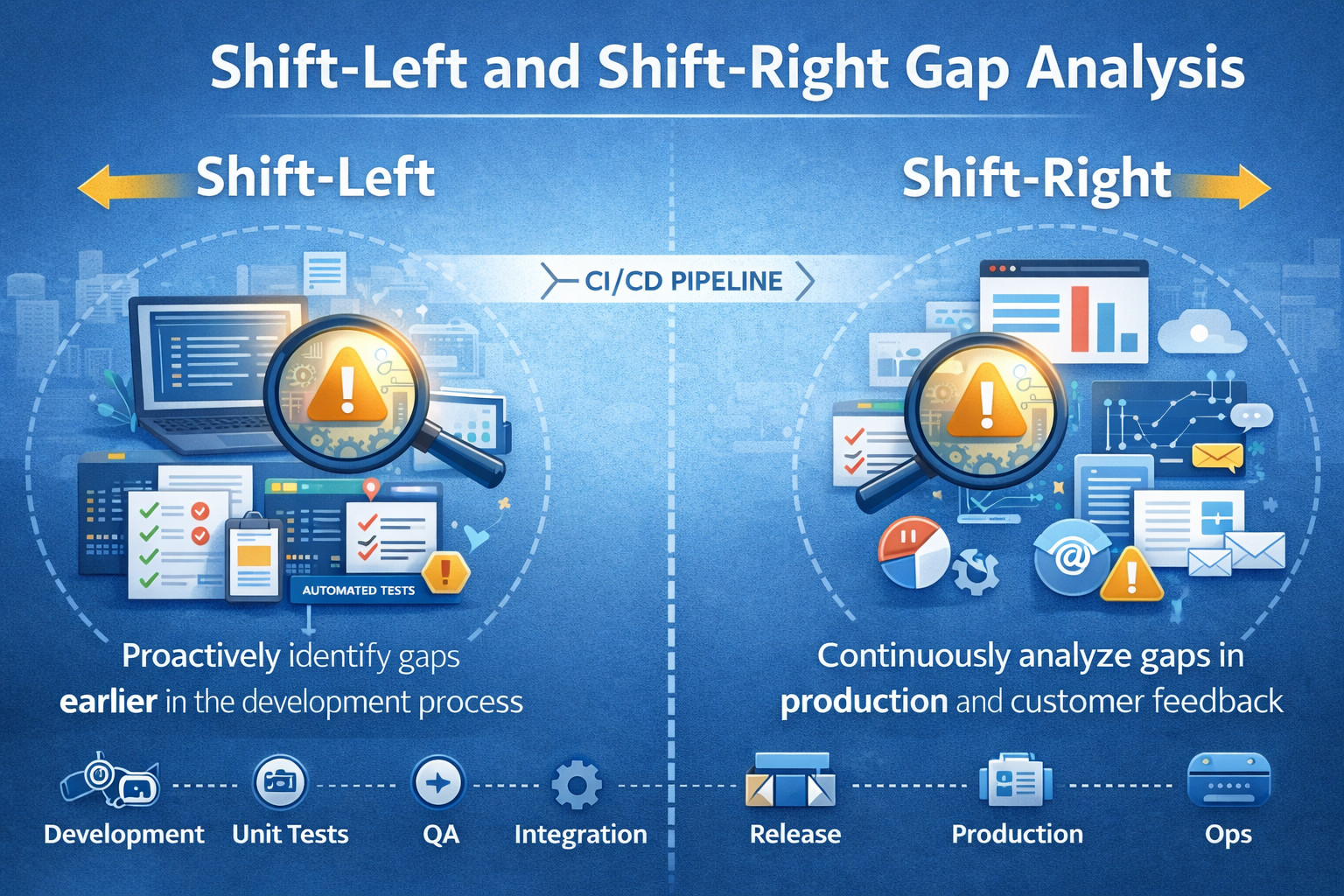

Shift-Left and Shift-Right Gap Analysis

Modern QA gap analysis must extend beyond the testing phase and cover the entire software lifecycle. By examining both shift-left and shift-right gaps, teams can identify where quality risks originate early and where learning breaks down after release.

Shift-Left Gaps

Shift-left gaps appear when quality practices are not embedded early in the lifecycle, particularly during requirements and design. When testing starts late, requirements lack clarity, and testability reviews are skipped, defects are effectively designed into the product before development even begins.

Read: Shift Left Testing – Software Testing Done Early.

Shift-Right Gaps

Shift-right gaps emerge after release when teams fail to observe how the system behaves in real-world usage. The absence of production monitoring, user behavior analysis, and post-release validation means incorrect assumptions go unchallenged, allowing hidden quality issues to persist.

Taken together, shift-left and shift-right analysis covers every stage of the lifecycle:

- Requirements: Gap analysis at the requirements stage focuses on identifying ambiguity, missing acceptance criteria, and untestable features. Without early QA involvement, teams inherit unclear expectations that propagate defects throughout development and testing.

- Development: In development, gap analysis highlights missing unit tests, weak code reviews, and poor collaboration between developers and QA. These gaps increase rework and push defect detection further down the pipeline, where fixes become more expensive.

- Testing: In this phase, gaps surface when coverage is shallow, automation is limited, or environments do not reflect production realities. At this stage, gap analysis helps determine whether testing is validating real business risk or simply checking predefined paths.

- Production: Here, gap analysis examines how well teams learn from real usage, incidents, and customer feedback. Without monitoring, observability, and feedback loops, QA becomes disconnected from reality, preventing continuous improvement across future releases.

Read: Shift-Right Testing: What, How, Types, and Tools.

AI-Powered Gap Analysis in QA

AI-powered gap analysis moves QA from reactive defect discovery to proactive quality intelligence. Instead of relying solely on human intuition and static reports, AI continuously analyzes signals across the lifecycle to surface hidden quality risks.

How AI Identifies Gaps

AI can automatically detect untested user flows by analyzing application behavior, user journeys, and historical test coverage. It also uncovers repeated defect patterns and correlates them with code changes, releases, or teams, helping identify high-risk areas before failures occur.

- Predictive Gap Analysis: Focuses on answering a critical question: what is likely to break next? By learning from past defects, production incidents, and change frequency, AI can forecast risk hotspots and highlight areas that require immediate quality attention.

- AI-Driven Recommendations: Beyond detection, AI provides actionable guidance on where to add new tests and which existing tests should be prioritized. This allows QA teams to focus their effort on maximum risk reduction rather than expanding test suites blindly.
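To show the kind of inputs a predictive approach works with, here is a deliberately simple sketch. It is not an AI model: it ranks product areas with a hand-weighted score over past defects, production incidents, and recent change frequency, whereas a real tool would learn such relationships from data. The `AreaHistory` class, the weights, and the sample numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AreaHistory:
    area: str
    past_defects: int
    production_incidents: int
    recent_changes: int


def risk_score(h: AreaHistory) -> int:
    # Hypothetical weights: incidents count most, then defects, then churn.
    return 3 * h.production_incidents + 2 * h.past_defects + h.recent_changes


# Illustrative history for three product areas.
history = [
    AreaHistory("checkout", past_defects=5, production_incidents=2, recent_changes=12),
    AreaHistory("search", past_defects=1, production_incidents=0, recent_changes=3),
    AreaHistory("profile", past_defects=3, production_incidents=1, recent_changes=1),
]

# Highest-risk areas first: these are the candidates for extra tests.
hotspots = sorted(history, key=risk_score, reverse=True)
for h in hotspots:
    print(h.area, risk_score(h))
# checkout 28
# profile 10
# search 5
```

Even a crude ranking like this makes the conversation about where to add tests more concrete; the value of an AI-based approach lies in replacing the guessed weights with patterns learned from your own history.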

Closing QA Gaps with AI-Driven, Codeless Automation

Modern QA gaps cannot be closed with traditional automation alone. testRigor is positioned as a Gen AI-driven quality platform that directly addresses the most common and costly QA gaps by combining codeless automation with built-in quality intelligence.

Closing Coverage Gaps with Natural Language Test Creation

Coverage gaps often occur when test creation is slow, brittle, or limited to a small group of specialists. Natural language test creation allows teams to define tests in plain English, focusing on user intent rather than implementation details. This lowers the barrier to automation and enables broader participation across QA and product teams. As a result, coverage can expand continuously rather than lag behind development.

Read: Natural Language Processing for Software Testing.

Eliminating Stability Gaps with Vision AI

Test instability is a costly QA gap that erodes trust in automation and slows delivery. Vision AI enables tests to interpret applications visually and contextually instead of relying on fragile locators. This makes tests resilient to UI changes, layout shifts, and dynamic elements. Fewer false failures allow teams to focus on genuine quality risks rather than maintenance noise.

Read: Vision AI and how testRigor uses it.

Closing Feedback Gaps with Integrated Defect Tracking and Analytics

Feedback gaps arise when defects are detected late or lack sufficient context for fast resolution. Integrated defect tracking and execution analytics shorten the feedback loop by capturing issues directly from test runs with supporting evidence. Over time, this data highlights recurring problem areas and high-risk workflows. Gap analysis shifts from reactive detection to proactive prevention.
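As a generic illustration of how defect data can highlight recurring problem areas, the sketch below counts defects per workflow and flags any workflow that shows up more than once. It does not use or represent any particular tool's API; the defect records, the `workflow` field, and the threshold of two are assumptions for the example.

```python
from collections import Counter

# Hypothetical defect records exported from a tracker.
defects = [
    {"id": 101, "workflow": "checkout"},
    {"id": 102, "workflow": "login"},
    {"id": 103, "workflow": "checkout"},
    {"id": 104, "workflow": "search"},
    {"id": 105, "workflow": "checkout"},
    {"id": 106, "workflow": "login"},
]

counts = Counter(d["workflow"] for d in defects)

# A workflow with two or more defects is treated as a recurring problem area.
recurring = [workflow for workflow, n in counts.most_common() if n >= 2]

print(recurring)  # ['checkout', 'login']
```

Reviewing this list at regular intervals is one lightweight way to keep the feedback loop closed between releases.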

Read: Defect Lifecycle.

Quality Intelligence: Turning Gap Analysis into Strategic Insight

Modern QA requires more than pass-fail results; it needs visibility into quality trends and risk signals over time. Quality intelligence dashboards reveal how coverage, stability, and defect patterns evolve across releases. This insight helps teams prioritize testing based on impact rather than intuition. Gap analysis becomes a continuous, data-driven capability rather than a periodic exercise.

Conclusion

Gap analysis in QA is no longer a one-time assessment but a continuous discipline that evolves with the product and delivery pace. By systematically identifying and closing coverage, stability, and feedback gaps, teams can reduce risk earlier in the lifecycle and release with greater confidence. AI-driven, codeless automation enables this shift by making quality scalable, resilient, and data-driven. As a result, QA becomes a strategic driver of predictable, high-quality software delivery rather than a last-line checkpoint.
