The Easiest Way to Automate Acceptance Testing

Acceptance Testing Automation

Acceptance testing is a critical step in the software development process that verifies whether a system meets business requirements and can be delivered to customers. It is the final stage of testing, following unit, integration, and system testing. The system is evaluated against a set of acceptance criteria to confirm that it meets the required specifications. Once the system passes acceptance testing, it can be handed over to the stakeholders or deployed to a production environment.

Acceptance testing is typically carried out in an environment that closely mimics production. Whereas integration testing checks that components work together, acceptance testing looks at the system from business, risk, contract, and user perspectives, usually through user scenarios. It is often executed as black box testing, concentrating on the end-user experience. In the initial phase, requirements are converted into test scenarios or use cases, which are then validated during acceptance testing. If a product passes acceptance testing, it meets the business requirements.

In an agile environment, a test scenario may be divided into multiple user stories. Some user stories may be developed in the current sprint, while others are left for future sprints. This means that some acceptance scenarios will fail at the end of a sprint, because the functionality they cover has not been developed yet. As a result, the same acceptance scenarios must be run again in later sprints, making acceptance testing an iterative process.

Acceptance testing is commonly divided into two types:

- Internal Acceptance Testing

- External Acceptance Testing

Internal acceptance testing, also known as alpha testing, is performed by a separate testing team within the organization, typically in a development or testing environment. The bugs identified during testing are reviewed and fixed by developers before an updated version is released. External acceptance testing, also known as beta testing or user acceptance testing, is performed by people outside the organization, such as beta testers or customers.

As previously mentioned, acceptance testing is an iterative process. Test scenarios are written and executed against the current build, and some scenarios will pass while others will fail because the features they cover are not fully developed. To improve the testing process, acceptance scenarios can be automated and added to the CI/CD pipeline. This reduces redundant work, as the manual QA team does not need to execute the same scenarios again after every sprint. Automation also frees up the manual team to focus on other types of testing, such as smoke or exploratory testing.
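One way to wire this into a pipeline is sketched below. It assumes each acceptance scenario is a Python callable and that the team records which scenarios belong to user stories that are already delivered. All names here (`Scenario`, `run_suite`, `ci_exit_code`) and the "pending" status are hypothetical and not part of any specific tool. Scenarios for stories that are not built yet are reported as pending, so the build breaks only when a delivered scenario fails.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scenario:
    name: str
    check: Callable[[], bool]   # returns True when the scenario passes
    delivered: bool             # False while the user story is still in the backlog


def run_suite(scenarios: List[Scenario]) -> Dict[str, str]:
    """Run every scenario and label it passed, failed, or pending."""
    results = {}
    for s in scenarios:
        try:
            ok = s.check()
        except Exception:
            ok = False          # an unexpected error counts as not passing
        if ok:
            results[s.name] = "passed"
        elif s.delivered:
            results[s.name] = "failed"
        else:
            results[s.name] = "pending"
    return results


def ci_exit_code(results: Dict[str, str]) -> int:
    """Return 1 (break the build) only if a delivered scenario failed."""
    return 1 if "failed" in results.values() else 0


# Hypothetical suite: checkout is planned for a later sprint.
suite = [
    Scenario("user can log in", lambda: True, delivered=True),
    Scenario("user can check out", lambda: False, delivered=False),
]
results = run_suite(suite)
print(results, "exit code:", ci_exit_code(results))
```

With this split, the same suite can run after every sprint, and a scenario moves from pending to passed once its story is delivered without anyone rewriting it.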

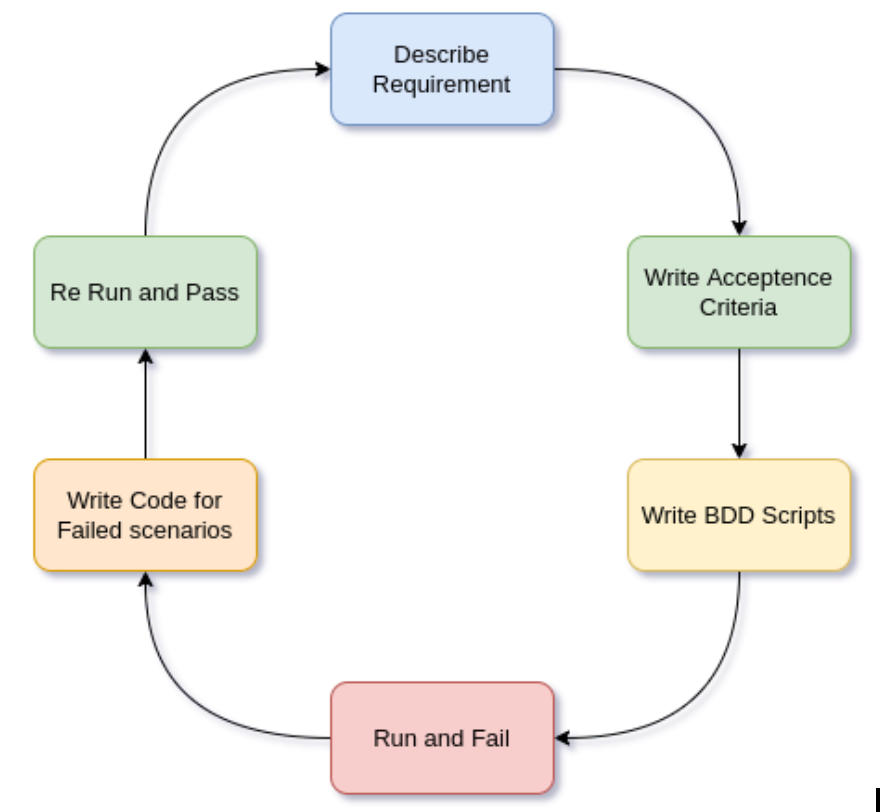

Acceptance testing can involve a variety of teams, including project managers, business associates, management, sales, and more. A development methodology widely used across the industry is Acceptance Test Driven Development (ATDD), which is similar to Behavior-Driven Development (BDD). In ATDD, for every new use case, the business associates, project managers, developers, and testers discuss and write acceptance criteria. BDD scripts are then written and executed based on these criteria. If a script fails, developers rework the failed feature or user story and the script is rerun. This process is repeated during every sprint cycle.
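As an illustration of how an acceptance criterion can become an executable script, the sketch below expresses one criterion in Given/When/Then form and runs it against a stand-in `Cart` class. The `Cart` class, the step functions, and the scenario itself are made up for this example; a real project would typically use a BDD framework rather than this hand-rolled runner.

```python
class Cart:
    """Stand-in for the system under test (hypothetical)."""

    def __init__(self):
        self.items = []

    def add(self, name, price):
        self.items.append((name, price))

    def total(self):
        return sum(price for _, price in self.items)


# Each step receives a shared context dict that carries state between steps.
def given_empty_cart(ctx):
    ctx["cart"] = Cart()


def when_two_items_added(ctx):
    ctx["cart"].add("book", 12.0)
    ctx["cart"].add("pen", 3.0)


def then_total_is_15(ctx):
    assert ctx["cart"].total() == 15.0, "cart total should be 15.0"


SCENARIO = [
    ("Given an empty cart", given_empty_cart),
    ("When the user adds a book and a pen", when_two_items_added),
    ("Then the cart total is 15.0", then_total_is_15),
]


def run_scenario(steps):
    """Run steps in order; return (passed, text of the failing step or None)."""
    ctx = {}
    for text, step in steps:
        try:
            step(ctx)
        except AssertionError:
            return False, text
    return True, None


print(run_scenario(SCENARIO))
```

Keeping the step text next to the step function lets non-technical participants read the scenario list as the agreed acceptance criteria, while a failing run reports which criterion was not met.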

Because acceptance testing involves non-technical teams, it is best to avoid legacy automation tools like Selenium. These tools can require a significant amount of time to set up the infrastructure and framework, which can cause delays. When Selenium was more widely used, the QA team often lagged at least one sprint behind due to the time required to create automation scripts. The budget and time needed to complete scenarios were also high, which resulted in lower quality output than expected. As the number of test cases increases, Selenium scripts can become overly complicated, with many complex functions and scripts. A small change in one function can cause other test cases to fail. Over time, the automation team grows frustrated, and we have seen cases where teams had to create multiple silos of the same framework to handle different types of automation.

Today, legacy tools are being replaced by no-code and low-code alternatives, which are faster and easier to set up, resulting in improved efficiency and output quality. testRigor is an example of such a tool, helping software teams write test scripts in plain English. Integrated AI technology automatically captures all possible locators, allowing users to refer to elements from a human perspective (e.g., click “Add to cart” button). The entire automated test becomes an executable specification, opening up test creation and maintenance to the whole team: business members, product managers, and so on.

Using testRigor for acceptance testing automation drastically reduces the time and effort spent on test creation, fits into the BDD process out of the box, and allows different teams to collaborate and track development progress. The tool is cloud-hosted, so you don’t need to consider setting up and maintaining any infrastructure.

So how do you automate acceptance testing with testRigor? First, identify the acceptance testing scenarios, and describe them in plain English test steps, just as you would for a manual test case. Since you don’t need to include any element locators, the tests can be written even before the desired functionality is coded. Any given test should pass once the feature becomes available on an environment that you’re testing against.
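To make the idea of locator-free steps concrete, here is a toy sketch of an interpreter that resolves elements by their visible label. It is not how testRigor works internally and does not use testRigor's API; `FakePage`, `run_steps`, and the two supported step phrasings are invented for illustration only.

```python
import re


class FakePage:
    """Toy page model: buttons are known only by their visible label."""

    def __init__(self, buttons):
        self.buttons = dict(buttons)   # label -> text shown after clicking
        self.text = ""

    def click(self, label):
        if label not in self.buttons:
            raise LookupError(f'no button labelled "{label}"')
        self.text = self.buttons[label]


def run_steps(page, steps):
    """Execute plain-English steps; return True if all of them succeed."""
    for step in steps:
        click = re.fullmatch(r'click "(.+)"', step)
        check = re.fullmatch(r'check that page contains "(.+)"', step)
        try:
            if click:
                page.click(click.group(1))
            elif check:
                if check.group(1) not in page.text:
                    return False
            else:
                raise ValueError(f"unsupported step: {step}")
        except LookupError:
            return False   # the element (and so the feature) is not there yet
    return True


# The steps are written once, before the feature exists.
steps = ['click "Add to cart"', 'check that page contains "1 item in cart"']

before = FakePage({})                                   # feature not built
after = FakePage({"Add to cart": "1 item in cart"})     # feature delivered
print(run_steps(before, steps), run_steps(after, steps))
```

Because the steps mention only what a user sees, the same script fails while the button is missing and passes unchanged once the feature is deployed to the environment under test.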

Time and effort are decisive when executing acceptance scenarios, because the prevailing market strategy is “first to market.” testRigor helps here by safeguarding quality while saving time.