How to Do API Testing in Microservices Architecture: A Beginner’s Guide


API testing is integral to the stability and quality of services in a microservices architecture, but it can become an over-engineering problem if not approached carefully. Software development has moved toward applications architected as microservices, largely for the sake of scalability. However, decoupled systems present testing challenges because of their distributed dependencies and complex interactions.


What is Over-engineering?

Over-engineering occurs when something is engineered to be more complicated than necessary. Instead of solving the problem in a simple and effective manner, products are designed with additional features, tools, or processes that contribute little value. This can result in wasted time, higher costs, and more demanding upkeep.

Why Over-Engineering Matters in Testing

In software testing, the term over-engineering applies when overly complex automated systems are built instead of focusing on the necessary test cases, or when tools are used that are not well suited to the project. This matters because:

- It decreases test and development speed.

- It adds to the maintenance and training expenditure.

Instead of building complicated custom frameworks, teams can use testRigor to cover the most critical API workflows, avoiding unnecessary overhead.

Microservices Architecture in the Modern World

A microservices architecture builds an application out of small, self-contained services. Each service handles a particular piece of functionality and communicates with other services through APIs.

Microservices are popular today because they:

- Support modular development and deployment

- Improve scalability and flexibility

- Enable team specialization

Read more: Micro-frontends Automated Testing: Is It Possible?

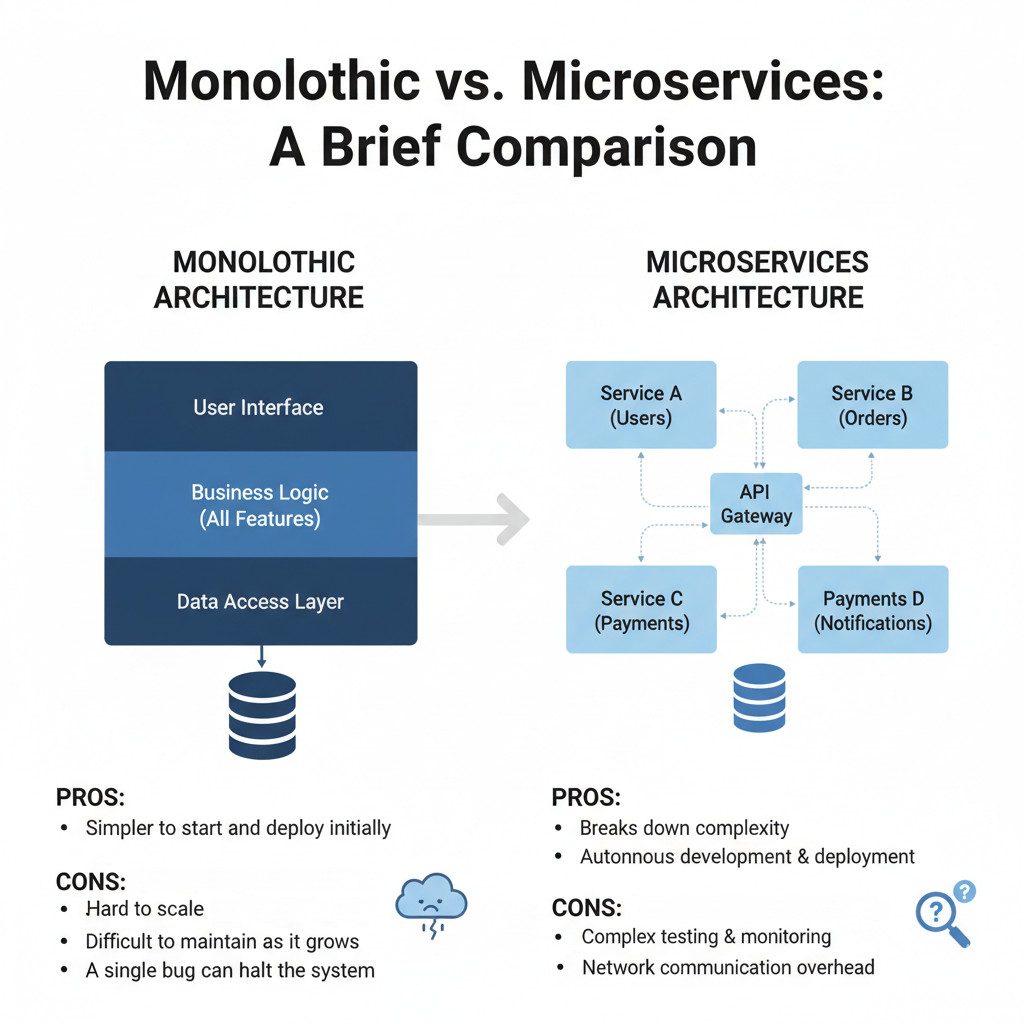

Monolithic vs. Microservices: A Brief Comparison

Monolithic and microservices architectures are two common ways to build applications. A monolithic system is built and deployed as a single unit, whereas microservices divide functionality into many autonomous services. Each approach has its advantages and disadvantages, particularly with regard to scalability, maintenance, and testing.

- Monolithic systems are simpler to begin with, but hard to scale and maintain.

- Microservices break a system into independently deployable parts and enable autonomous development of each service.

Testing Complexity in Microservices

Testing microservices is harder than testing a monolithic system because the services are dispersed and depend on one another. Unlike a single codebase, a microservices system is made up of many related parts, which makes it difficult to determine where an issue originates. Communication over the network adds further difficulty.

- The reliance on many interconnected services and dependencies makes it harder to pinpoint the root cause of issues.

- Network-based communication introduces latency and failures, which creates additional testing requirements.
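
As a minimal sketch of what testing for network failures can look like, the example below simulates a downstream call that times out and checks that the caller retries and then fails clearly. The `call_with_retry` and `make_flaky_sender` helpers are hypothetical and stand in for a real HTTP client and a real service.

```python
from typing import Callable


def call_with_retry(send: Callable[[], str], attempts: int = 3) -> str:
    """Call `send`, retrying on TimeoutError up to `attempts` times."""
    last_error = None
    for _ in range(attempts):
        try:
            return send()
        except TimeoutError as error:
            last_error = error
    raise RuntimeError("downstream service unavailable") from last_error


def make_flaky_sender(failures: int) -> Callable[[], str]:
    """Build a fake sender that times out `failures` times, then succeeds."""
    state = {"remaining": failures}

    def send() -> str:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise TimeoutError("simulated network timeout")
        return "ok"

    return send


# Two timeouts followed by a success are absorbed by the retry logic.
assert call_with_retry(make_flaky_sender(failures=2)) == "ok"

# A persistent failure surfaces as a clear error.
try:
    call_with_retry(make_flaky_sender(failures=5))
except RuntimeError as error:
    assert str(error) == "downstream service unavailable"
else:
    raise AssertionError("expected RuntimeError")
```

Because the failure is simulated in memory, the test stays fast and deterministic while still exercising the error path.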

To manage distributed dependencies effectively, testRigor can validate service-to-service communication through end-to-end scenarios without requiring brittle low-level checks.

Types of APIs and Communication Patterns in Microservices

Microservices rely on APIs (Application Programming Interfaces) to communicate with each other. The choice between synchronous and asynchronous communication patterns affects performance and reliability. Services can mix these patterns, and each pattern may need to be tested differently.
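
To illustrate why the two patterns are tested differently, here is a small sketch that contrasts a synchronous request/response call with an asynchronous, queue-based exchange. The `get_price` and `publish_order` functions, the in-memory queue, and the data are assumptions made for illustration, not part of any real service or message broker.

```python
import queue

# Hypothetical in-memory stand-in for a message broker.
order_events = queue.Queue()
processed_orders = []


def get_price(item_id: str) -> dict:
    """Synchronous pattern: the caller blocks until the response arrives."""
    prices = {"book": 12.5}
    return {"item_id": item_id, "price": prices[item_id]}


def publish_order(order_id: str) -> None:
    """Asynchronous pattern: the caller only enqueues an event and returns."""
    order_events.put({"order_id": order_id})


def consume_one_order(timeout: float = 1.0) -> None:
    """Consumer side: picks up one event and records the result."""
    event = order_events.get(timeout=timeout)
    processed_orders.append(event["order_id"])


# Synchronous test: assert directly on the response.
assert get_price("book")["price"] == 12.5

# Asynchronous test: publish, let the consumer run, then assert on the effect.
publish_order("order-1")
consume_one_order()
assert processed_orders == ["order-1"]
```

The synchronous test checks a return value, while the asynchronous test has to check a side effect that appears only after the consumer has run.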

Common Pitfalls that Lead to Over-engineering in Testing

Testing challenges like these tend to push teams toward overly complicated solutions. Although extensive testing is critical, over-engineering can slow development and reduce efficiency. It is important to balance comprehensive test planning with efficient execution.

- Writing excessive end-to-end tests is counterproductive because it slows down development and makes the build unstable.

- Neglect of contract testing usually results in service mismatches.

Instead of building bulky frameworks, you can use testRigor to capture only high-value API flows, keeping the test suite lean.

Over-engineering in API Testing: Causes and Symptoms

Over-engineering in API testing happens when teams create overly complex test designs that add little value but increase effort, cost, and delays. Instead of improving quality, it results in bloated test suites, duplicated cases, and fragile systems that are hard to maintain.

What Does Over-engineering Look Like?

Over-engineering in API testing means introducing complexity into the test design that is not required. Rather than making testing more effective, it creates additional work and delays progress.

Causes

- Trying to account for every possible scenario.

- Using excessively complicated tools or models.

- Failing to prioritize key business requirements.

Symptoms

- Very large test suites that are slow to run.

- A significant number of duplicate or trivial test cases.

- Frequent maintenance work that adds no value.

Symptoms of Over-engineering in Test Suites

The clearest sign of over-engineering is a noticeable effect on speed and reliability. These indicators make it difficult for teams to trust the test results.

Causes

- Strain to keep up with growth in testing, combined with a lack of priorities.

- Poor planning of scope and objectives.

Symptoms

- Tests take a long time to run, and feedback is delayed.

- False negatives or false positives reduce the credibility of results.

- Developers and QA engineers spend more time fixing tests than writing code.

Root Causes: Why Teams Over-engineer API Tests

Unclear plans or unrealistic goals often lead teams to over-engineer. Although they aim for quality, they end up with fragile and unreliable systems.

Causes

- Fear of leaving any critical behavior untested.

- Pressure to reach 100% coverage.

Symptoms

- Excessive focus on low-value scenarios.

- Testing that is time-consuming and resource-intensive.

- Complex test systems.

The Cost of Over-Engineering

Over-engineering introduces hidden costs that slow projects down and decrease productivity. Instead of concentrating resources on quality, it impedes delivery.

Causes

- Inefficient planning and execution of tests.

- Building frameworks larger than can be maintained and scaled efficiently.

Symptoms

- More time spent on development and maintenance.

- Higher costs that do not translate into greater assurance.

- Slower release schedules and decreased team productivity.

Read more about How to do API testing using testRigor?

How Much Testing is Enough: Avoiding Over-engineering

Determining the right amount of testing is essential to ensure quality without wasting time and resources. Too little testing leaves gaps that risk failures, while too much leads to over-engineering, delays, and fragile systems. In practice, this means keeping most tests at the unit/contract level and reserving testRigor for 5–10 essential end-to-end API scenarios that represent true business flows.

Defining Minimal Viable Testing

Minimal viable testing means running only the tests needed to confirm that the required functionality works. It delivers quality without wasting time and resources.

Key Principles

- Focus on critical paths: Test the user flows or API interactions that are most valuable to the system under test and ensure they work. This reduces both risk and the number of unnecessary test cases.

- Eliminate unnecessary scenarios: Do not test the same behavior in several different ways. Streamlining tests saves time and effort.

Balancing Test Depth and Breadth

An effective test plan neither tests too little nor goes to the extreme of checking every possible data point. Either extreme is wasteful.

Depth Considerations

- Test high-risk areas deeply: Examine more closely the services that are critical to security, business integrity, or data.

- Avoid going deep on low-risk areas: There is no need to invest effort in details that do not directly influence product quality.

Breadth Considerations

- Ensure coverage of modules: Cover each key service at least once so that nothing is left out. This maintains confidence in the stability of the system.

- Prioritize business impact: Give preference to modules that directly affect customer experience or business outcomes, rather than setting out to measure everything.

Prioritizing to Avoid Redundancy

Not all types of tests are equally valuable. Striking the right balance prevents repeated testing and keeps the test suite lean.

Recommended Focus

- Embrace unit and contract tests first: They identify most issues early, run fast, and confirm that services communicate as expected without much overhead.

- Integration testing: Use it only to validate service-to-service interactions, and keep those tests simple.

Avoiding Redundancy

- Don’t duplicate test layers: If a behavior is already covered by unit or integration tests, do not repeat it in end-to-end tests.

- Review regularly: Periodic reviews (once a year, say) help remove old or unnecessary tests and keep the suite lean.

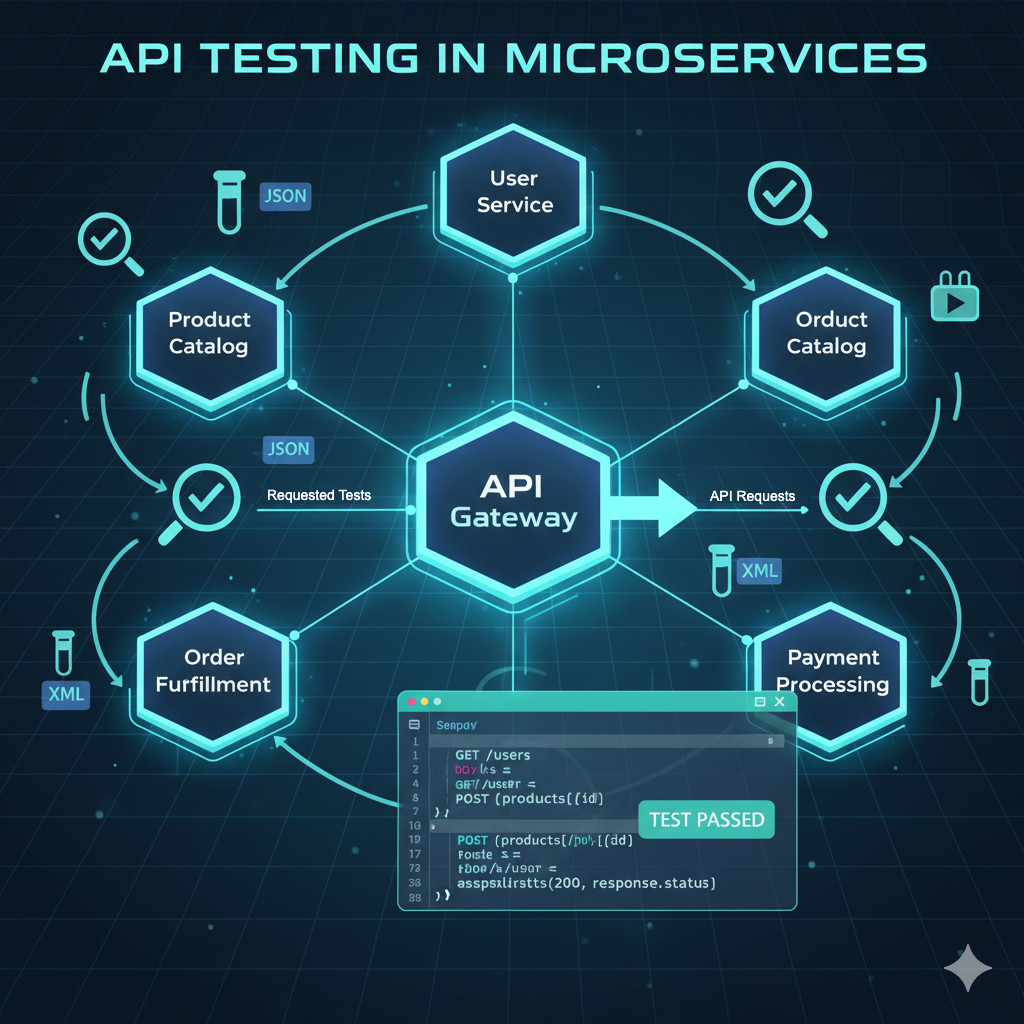

API Testing Fundamentals in Microservices

API testing is the backbone of ensuring that microservices can communicate and function reliably in a distributed environment. It not only validates that individual services work as expected but also confirms that interactions between them remain consistent and error-free. This section explores the purpose of API testing, its key goals, the different testing layers, and the importance of data contracts, schema validation, and backward compatibility. Read about The Best API Testing Tools for 2025.

Goals of API Testing: Verification vs. Validation

API testing aims to ensure that the system being developed conforms to both its requirements and business expectations. Balancing the two ensures functional coverage and quality.

- Verification of requirements: Check that the API is built according to its requirements, which helps uncover flaws as early as possible during development.

- Validation of outcomes: Test that the API produces results that meet the real needs of its customers.

Understand the difference: Verification and Validation.

Key Layers in Testing

Testing a microservice requires several layers, each with a different purpose. Together, the tests should cover entire workflows.

- Unit and component testing: Concentrates on individual services, which are verified before they are assembled into bigger systems.

- End-to-end and integration testing: Covers interactions between services and the entire system, and proves that real-world workflows work end to end.

Data Contract, Schema Validation, and Backward Compatibility

Microservice communication relies heavily on data; therefore, changes that affect data consistency need to be managed and tested. Testing keeps APIs reliable over time.

- Schema validation: Ensure that data exchanged between components has the correct structure, type, and format, which helps prevent run-time errors and incompatibilities.

- Backward compatibility: When an API is updated, make sure that consumers still using the older version continue to work and do not fail just because they have not been updated.
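
The two checks above can be sketched in a few lines. This is a simplified illustration using only the Python standard library; the `USER_SCHEMA_V1` schema, the `validate` helper, and the sample payloads are hypothetical, and a real project would more likely rely on a dedicated schema validation library.

```python
# Hypothetical schema for a "user" response: field name -> expected type.
USER_SCHEMA_V1 = {"id": int, "email": str}


def validate(payload: dict, schema: dict) -> list:
    """Return a list of schema violations; an empty list means valid."""
    errors = []
    for field, expected_type in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            errors.append(f"wrong type for {field}")
    return errors


# A v2 response that only ADDS a field still satisfies the v1 schema,
# so consumers written against v1 keep working.
v2_response = {"id": 7, "email": "a@example.com", "display_name": "Ann"}
assert validate(v2_response, USER_SCHEMA_V1) == []

# A response that renames or retypes a v1 field breaks old consumers.
breaking_response = {"id": "7", "mail": "a@example.com"}
assert validate(breaking_response, USER_SCHEMA_V1) == [
    "wrong type for id",
    "missing field: email",
]
```

Validating new responses against the old schema is one inexpensive way to catch backward-incompatible changes before they reach consumers.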

Testing Strategies to Prevent Over-engineering

A clearly defined test pyramid is essential in microservices to ensure balanced test coverage without falling into the trap of over-engineering. By strategically layering unit, integration, and end-to-end tests, teams can maintain speed, reliability, and scalability while avoiding unnecessary complexity.

Clearly Defined Test Pyramid in Microservices

An explicit test pyramid balances testing activity by prioritizing small, fast tests over large, slow ones. This prevents unnecessary testing and wasted time.

- Emphasis on unit tests: The majority of tests should be at the unit level, as they are fast, stable, and reveal problems early in development.

- Limited end-to-end tests: End-to-end tests should be few but high quality, covering only critical workflows, so that the suite does not become fragile.

Contract Testing: Consumer-Driven Contracts

Contract testing helps make sure that service providers and consumers agree on the behavior of the APIs. It helps avoid integration problems in distributed systems.

- Consumer-driven approach: Consumers define expectations for how the API should behave, and providers must deliver data and responses that meet them.

- Fewer integration failures: Mismatches are detected by validating contracts before deployment, saving time and cost.
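
The idea can be sketched without any particular contract-testing tool. In the example below, the consumer writes down what it needs, and the provider verifies its own response against that contract. The endpoint, the field names, and the `provider_get_order` and `verify_contract` functions are all assumptions made for illustration.

```python
# Contract written by the consumer: what it needs from GET /orders/{id}.
# The endpoint, field names, and handler below are hypothetical.
ORDER_CONTRACT = {
    "status": 200,
    "required_fields": {"order_id": str, "total": float},
}


def provider_get_order(order_id: str) -> tuple:
    """Stand-in for the provider's handler; returns (status, body)."""
    return 200, {"order_id": order_id, "total": 19.99, "currency": "USD"}


def verify_contract(handler, contract: dict) -> bool:
    """Provider-side check that a response meets the consumer's contract."""
    status, body = handler("order-42")
    if status != contract["status"]:
        return False
    return all(
        isinstance(body.get(name), expected)
        for name, expected in contract["required_fields"].items()
    )


# Extra fields such as "currency" are fine; missing ones are not.
assert verify_contract(provider_get_order, ORDER_CONTRACT)
```

Running a check like this in the provider's build means a breaking change fails fast, before any consumer is affected.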

Once a contract is verified in CI, a single testRigor flow can be used to confirm that the API behaves correctly in a real-world scenario, ensuring consumer expectations are met. Read more: API Contract Testing: A Step-by-Step Guide to Automation.

Mocking vs. Real Dependencies: When and Why?

Both mocks and real dependencies have a part to play in testing. Which one to use depends on the purpose of the test and the stage of development.

- Using mocks for isolation: Mocks remove the reliance on actual services when testing functionality, which isolates the code under test and makes tests faster and more reliable. Read: Mocks, Spies, and Stubs: How to Use?

- Using real dependencies for accuracy: Real services produce more realistic output, confirming that tests check actual system behavior.
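
Here is a minimal sketch of the mock-based approach using Python's standard `unittest.mock` module. The `order_total` function and the `get_lines` method on the pricing client are hypothetical names chosen for this example.

```python
from unittest.mock import Mock


def order_total(order_id: str, pricing_client) -> float:
    """Sum line prices fetched from a (possibly remote) pricing service."""
    lines = pricing_client.get_lines(order_id)
    return sum(line["price"] * line["qty"] for line in lines)


# Isolated test: the pricing service is replaced by a mock.
mock_client = Mock()
mock_client.get_lines.return_value = [
    {"price": 2.0, "qty": 3},
    {"price": 1.5, "qty": 2},
]
assert order_total("order-1", mock_client) == 9.0
mock_client.get_lines.assert_called_once_with("order-1")
```

Because the client is passed in as a parameter, the same function can be exercised with a real client in an integration environment when realistic behavior matters more than speed.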

Designing API Tests the Right Way

Writing clear and purposeful API tests ensures that each test validates a specific behavior rather than duplicating coverage. This approach improves test readability, reduces maintenance effort, and strengthens overall reliability.

Writing Clear, Purposeful Tests

Good API tests focus on one objective and are easy to understand. A clear purpose also improves readability and makes debugging easier.

- Best practice: One assertion per test.

- Make test descriptions simple and meaningful. By looking at them, the team should understand what functionality is being checked.

Naming Conventions and Documentation

Consistent naming and appropriate documentation make test suites easy to navigate.

- Keep concise notes that explain the purpose and reason for the test’s existence.

- Follow the naming rules or guidelines of the project or team for consistency.
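
As an illustration of focused, well-named tests, the sketch below checks one behavior per test and states the reason for each test in a short docstring. The `create_user` handler and its status codes are assumptions for the example, and the naming pattern shown is just one possible convention.

```python
def create_user(payload: dict) -> tuple:
    """Hypothetical handler for POST /users; returns (status, body)."""
    if "email" not in payload:
        return 400, {"error": "email is required"}
    return 201, {"id": 1, "email": payload["email"]}


def test_create_user_without_email_returns_400():
    """Guards the rule that every account must have an email address."""
    status, _ = create_user({"name": "Ann"})
    assert status == 400


def test_create_user_with_email_returns_201():
    """Confirms the happy path that sign-up depends on."""
    status, _ = create_user({"email": "ann@example.com"})
    assert status == 201


test_create_user_without_email_returns_400()
test_create_user_with_email_returns_201()
```

A name that spells out the action, the condition, and the expected result tells the team what failed without anyone opening the test body.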

Read: How to Write Maintainable Test Scripts: Tips and Tricks.

Reusable Tests and Data Setup

Reusable tests save time, effort, and cost. They also ensure consistency across test cases.

- Create standardized ways of setting up common resources, such as a database connection or an authentication token.

- Use data-driven testing so that the same test logic can run quickly against many inputs.
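
A small sketch of shared setup helpers is shown below. It uses an in-memory SQLite database from the Python standard library as a stand-in for a real test database; the `temporary_database` and `auth_headers` helpers, the table layout, and the token value are hypothetical.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def temporary_database():
    """Shared setup: an in-memory database with the schema already created."""
    connection = sqlite3.connect(":memory:")
    connection.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    try:
        yield connection
    finally:
        connection.close()


def auth_headers(token: str = "test-token") -> dict:
    """Shared setup: request headers with a (fake) bearer token."""
    return {"Authorization": f"Bearer {token}"}


# Any test can reuse the same helpers instead of repeating setup code.
with temporary_database() as db:
    db.execute("INSERT INTO users (email) VALUES (?)", ("ann@example.com",))
    count = db.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    assert count == 1

assert auth_headers() == {"Authorization": "Bearer test-token"}
```

Keeping setup in one place means a change to the schema or the authentication scheme is made once rather than in every test.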

Parameterization vs. Hard-coding

Hard-coded values make tests inflexible, whereas parameterization improves scalability. Parameterized tests are easy to extend with additional inputs.

- Run the same test with parameterized variables and varied sets of data.

- Do not hard-code values in tests; keep them in config files or variables.
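
The following sketch shows the idea with plain Python: the inputs and expected results live in one data table, and a single test body runs over all of them. The `create_user_status` function and the `STATUS_CASES` table are hypothetical; in a real project the cases might be loaded from a config file.

```python
# In a real project these cases might come from a config file or variables.
STATUS_CASES = [
    ({"email": "ann@example.com"}, 201),
    ({"email": ""}, 400),
    ({}, 400),
]


def create_user_status(payload: dict) -> int:
    """Hypothetical handler logic: 201 for a non-empty email, else 400."""
    return 201 if payload.get("email") else 400


def run_cases(cases) -> list:
    """Run one test body over every (input, expected) pair; return failures."""
    return [
        (payload, expected)
        for payload, expected in cases
        if create_user_status(payload) != expected
    ]


assert run_cases(STATUS_CASES) == []
```

Adding a new scenario is then a one-line change to the data table. Test frameworks usually offer built-in support for this style, for example `subTest` in Python's `unittest`.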

When writing testRigor scenarios, you can keep each test focused on one clear business outcome in plain English, making it easy to understand and maintain.

Common Anti-patterns and How to Avoid Them

Anti-patterns in testing emerge when well-intentioned practices inadvertently create inefficiency, fragility, or unnecessary complexity. Recognizing these pitfalls early allows teams to refine their strategies and build more effective and sustainable test suites.

Proliferation of End-to-End Tests

End-to-end tests are effective but have to be applied wisely, since they can be overly broad and slow the development cycle down. Relying on them too much leads to inefficient testing and makes it difficult to track down problems quickly. The remedy is to cover small, isolated components with unit and integration tests and to reserve end-to-end tests for complete workflows.

Over-use of Mocks

Mocks work well for isolated tests, but excessive use of mocks can produce false positives, with tests passing even though the real integration is broken. The solution is to test some cases against a real dependency to confirm that services integrate and behave correctly together.

Duplicated Tests Across Services

Cross-service duplication not only wastes time, it also adds to the maintenance overhead. It results in test redundancy, makes tests harder to maintain, and creates disconnects between services. The solution is to identify the fundamental capabilities that services share, such as authentication or authorization, and write tests for that common functionality that can be reused across service interfaces.
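
One way to picture a reusable cross-service test is a shared check that every service's handler must pass. In this sketch, `assert_requires_auth` is a hypothetical shared helper, and `orders_handler` and `billing_handler` stand in for two separate services.

```python
def assert_requires_auth(handler) -> None:
    """Shared check: any handler must reject requests without a token."""
    status = handler(headers={})
    assert status == 401, f"expected 401 without token, got {status}"


def orders_handler(headers: dict) -> int:
    """Stand-in for an orders service endpoint."""
    return 200 if headers.get("Authorization") else 401


def billing_handler(headers: dict) -> int:
    """Stand-in for a billing service endpoint."""
    return 200 if headers.get("Authorization") else 401


# One shared test is reused across service interfaces.
for handler in (orders_handler, billing_handler):
    assert_requires_auth(handler)
```

The authentication rule is written once, so a change to it does not require hunting down copies of the same test in every service's suite.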

Too Much Tooling Complexity

Testing can become confusing and inefficient when it involves too many tools.

- Use essential tools: Keep tooling minimal, and use only the tools that most effectively support your testing environment.

- Standardize tools across teams: When teams use different testing tools, efficiency drops and test results may be inconsistent.

Excessive Data Fixtures and Setup

Over-complicated data fixtures and setup can slow test execution and make tests brittle.

- Few and small data fixtures: Load only the essential data needed to test a piece of functionality, avoiding any unnecessary additions.

- Automate the data cleanup: Use automated cleanup so that the system is always in a known state before tests run.
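
Both points can be sketched with Python's standard `unittest` module. The `USERS` dictionary is a hypothetical stand-in for a shared test database, and the test class is only an illustration of a minimal fixture with automatic cleanup.

```python
import unittest

# Hypothetical shared store that tests write to (stands in for a test database).
USERS = {}


class UserApiTest(unittest.TestCase):
    def setUp(self):
        # Minimal fixture: one user is all these tests need.
        USERS["u1"] = {"email": "ann@example.com"}
        # Cleanup is registered up front, so it runs even if the test fails.
        self.addCleanup(USERS.clear)

    def test_user_can_be_read(self):
        self.assertEqual(USERS["u1"]["email"], "ann@example.com")

    def test_user_can_be_deleted(self):
        del USERS["u1"]
        self.assertNotIn("u1", USERS)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(UserApiTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
assert result.wasSuccessful()
assert USERS == {}  # the store is back in a known, empty state
```

Since every test starts from the same small fixture and leaves nothing behind, the tests can run in any order without affecting one another.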

Conclusion

API testing in a microservices architecture is about finding the right balance between coverage and simplicity. While comprehensive validation is essential, falling into the trap of over-engineering can slow teams down, increase costs, and reduce reliability. By focusing on critical business flows, prioritizing contract and unit tests, and keeping end-to-end testing lean, teams can achieve stability without unnecessary complexity. With the right approach and tools like testRigor, API testing becomes a scalable, efficient process that strengthens both quality and delivery speed.
