How to Write Test Cases from a User Story


In Agile software development, user stories are the basic unit for describing what the user wants. Each story captures a piece of functionality from the user’s point of view and bridges the gap between business requirements and technical specifications. Turning these short, informal descriptions into concrete, complete test cases is not trivial: it takes attention to detail, context, domain knowledge, and analytical skill.

Deriving test cases from user stories helps ensure that development meets business objectives, that users’ needs are satisfied, and that product quality holds up. It also lays the foundation for a strong test suite that keeps the application stable and reliable from sprint to sprint.


Understanding User Stories

Before you even consider writing a test case, you need to know what a user story is. A user story is a very high-level definition of a requirement, containing just enough information so that the developers can produce a reasonable estimate of the effort to implement it. It gives the development team a better understanding of what the user wants to achieve, the need behind the desire, and the value the feature will deliver when it’s shipped.

A user story can be formatted as follows:

“As a [user], I want [goal] so that [benefit].”

This structure emphasizes the user’s role, their desired action, and the benefit they expect to receive.

For example: “As a registered user, I want to reset my password so that I can regain access if I forget it.”
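Because the template is so regular, its three parts can be pulled out mechanically. The following is a minimal sketch, not a standard tool: the `UserStory` class, the `parse_story` function, and the regular expression are hypothetical names introduced here for illustration, and the pattern only handles stories written exactly in the “As a ..., I want ... so that ...” form.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    role: str     # who wants it
    goal: str     # what they want to do
    benefit: str  # why it matters to them


# Matches "As a/an <role>, I want [to] <goal> so that <benefit>."
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>.+?), I want (?:to )?(?P<goal>.+?) "
    r"so that (?P<benefit>.+?)\.?$",
    re.IGNORECASE,
)


def parse_story(text: str) -> UserStory:
    """Split a story written in the standard template into its three parts."""
    match = STORY_PATTERN.match(text.strip())
    if match is None:
        raise ValueError("Story does not follow the expected template")
    return UserStory(**match.groupdict())


story = parse_story(
    "As a registered user, I want to reset my password "
    "so that I can regain access if I forget it."
)
print(story.role)     # registered user
print(story.goal)     # reset my password
print(story.benefit)  # I can regain access if I forget it
```

A story that fails to parse is often a useful signal in itself: it may be missing the role or the benefit, which is worth raising before any test cases are written.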

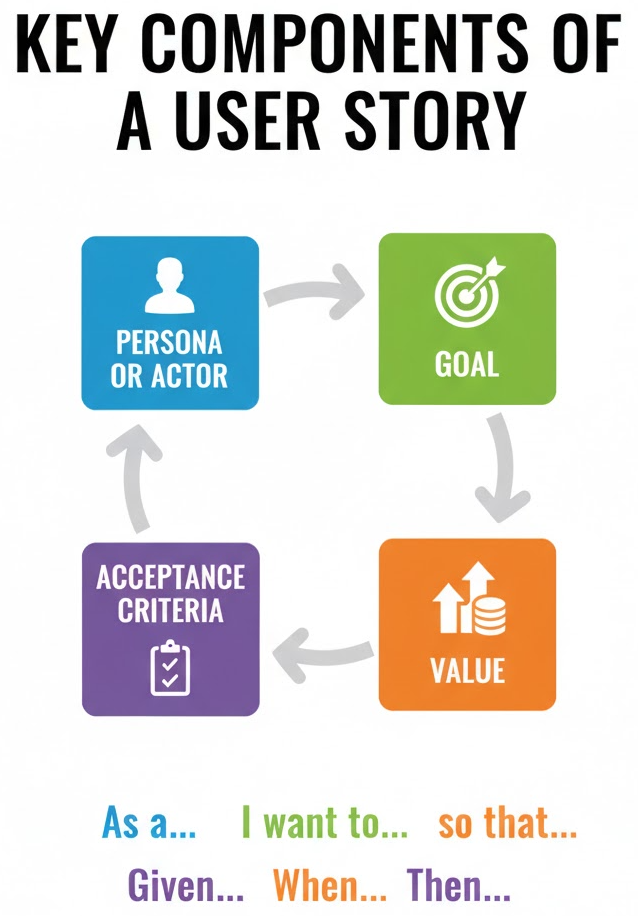

Key Components of a User Story

Each user story should contain the components below to make sure it is clear and complete:

- Persona or Actor: This describes the person, or the role that person plays, who will use the system. It anchors the story in a specific user’s point of view (e.g., “an admin,” “a registered user,” “a guest visitor”).

- Goal: This describes the specific function or behavior the user wants from the system. It is the user’s intent in using the software.

- Value: This is the benefit the user or business gains once the story is implemented. It justifies the story and aligns it with the broader business objectives.

- Acceptance Criteria: These are the specific details or requirements that need to be fulfilled in order for the story to be deemed finished. They are used as a base for writing test cases and to make sure that the implementation of the story is accurate and complete.

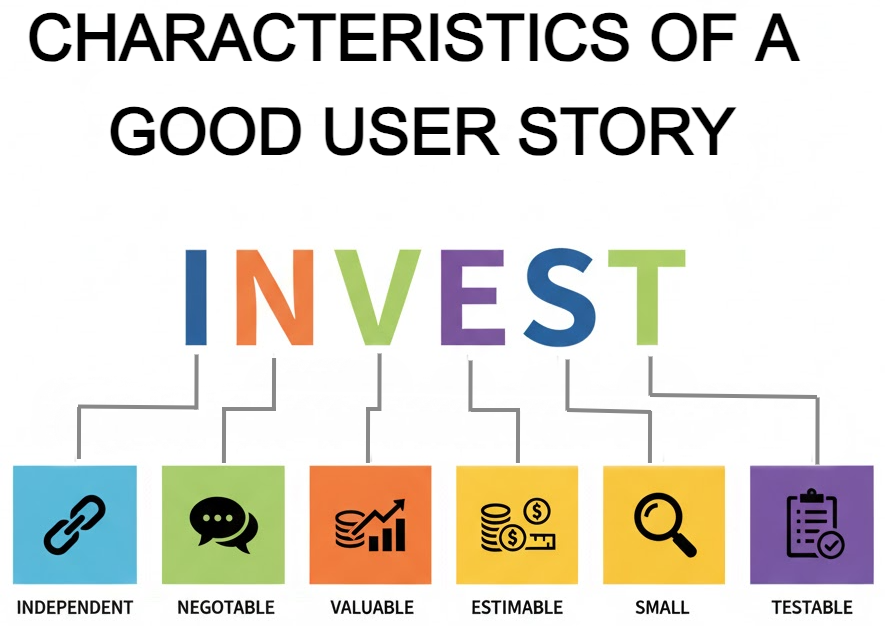

Characteristics of a Good User Story

A good user story should adhere to the INVEST model, in which each letter stands for a key quality:

- Independent: The story should be self-contained and depend on other stories as little as possible. Independence allows it to be developed, tested, and deployed on its own rather than being blocked by other work.

- Negotiable: A user story is not a contract for what gets delivered, and it is not a design spec. It should stay flexible so that details can be negotiated and re-evaluated as work progresses.

- Valuable: Each user story must bring value to the customer or end user. If it does not offer concrete benefits, it may not be worth doing.

- Estimable: The work should be defined well enough for the team to estimate how long or how much effort would be required to implement it.

- Small: A good user story should be completable within one iteration or sprint. Split a story that is too big into smaller ones.

- Testable: There should be clearly defined acceptance criteria so that the team has a standard for saying that the story has been implemented and works as expected. If a story isn’t testable, it’s impossible to say when it is “done.”

Benefits of Writing Test Cases from User Stories

Creating test cases out of user stories can connect the dots between what a user needs and how the system is verified. Doing this helps ensure testing is closely tied to business goals and encourages shared understanding across teams.

- Clarity on Requirements: Creating test cases from user stories forces QA teams and stakeholders to think in depth about the feature. This exercise can often uncover hidden assumptions and clarify what exactly needs to be built and tested.

- Better Test Coverage: Test cases derived from user stories are based on actual user behaviors and requirements, so real-world use cases are well represented. This leads to good coverage of functional paths and corner cases.

- Reduces Ambiguity: Transforming user stories into test cases exposes unclear, incomplete, or conflicting requirements, so they can be resolved early in development. This reduces the likelihood of misinterpretation during execution.

- Fosters Collaboration: It prompts testers, developers, and product owners to talk regularly about the current work. This partnership results in shared expectations for the feature and better-prioritized testing.

- Supports Agile Practices: Deriving test cases from user stories fits naturally into an Agile approach. It supports test-first methods and sprint planning, and builds trust in continually validated software.

Read: Mastering Test Design: Essential Techniques for Quality Assurance Experts

The Role of Acceptance Criteria

Acceptance criteria make a user story concrete, and defining them well is key to implementing the story as expected. They are the conditions a user story must meet to be marked as done, giving every member of the team a shared, story-specific understanding of when the work is complete. They translate user expectations into verifiable details and give validation a clear target.

Acceptance criteria describe in detail what success looks like when a feature is complete; however, they are not test cases. They are not executed as written; they are the starting point from which test cases are derived.

Example Acceptance Criteria for a Password Reset Capability:

- A password reset link is sent to the user’s registered email address.

- The password reset link should be valid for 15 minutes after it is created.

- The new password cannot be the same as one of the user’s previous three passwords.

These acceptance criteria give development and testing teams an unambiguous, shared target. By turning each criterion into one or more test cases, QA can work through a checklist and confirm that the feature behaves as specified.
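To show how a criterion becomes a check, here is a minimal sketch covering the second and third criteria above. The function names and constants are hypothetical, and the sketch assumes that a link is still valid at exactly 15 minutes; the criterion does not say, which is the kind of detail worth confirming with the product owner. The first criterion (email delivery) needs a mail fixture and is left out.

```python
from datetime import datetime, timedelta

LINK_VALIDITY = timedelta(minutes=15)  # from the acceptance criteria
PASSWORD_HISTORY_DEPTH = 3             # "previous three passwords"


def is_link_valid(created_at: datetime, now: datetime) -> bool:
    """A reset link is usable for 15 minutes after it is created.

    Assumption: the link is still valid at exactly 15 minutes.
    """
    return timedelta(0) <= now - created_at <= LINK_VALIDITY


def is_new_password_allowed(new_password: str, previous_passwords: list[str]) -> bool:
    """Reject a password that matches any of the three most recent ones.

    previous_passwords is ordered oldest to newest. Plain strings keep the
    sketch short; a real system would compare password hashes instead.
    """
    return new_password not in previous_passwords[-PASSWORD_HISTORY_DEPTH:]


# Criterion 2: the link is valid for 15 minutes.
created = datetime(2024, 1, 1, 12, 0, 0)
assert is_link_valid(created, created + timedelta(minutes=14))
assert not is_link_valid(created, created + timedelta(minutes=16))

# Criterion 3: no reuse of the previous three passwords.
history = ["old-0", "old-1", "old-2", "old-3"]
assert not is_new_password_allowed("old-3", history)
assert is_new_password_allowed("old-0", history)  # older than the last three
assert is_new_password_allowed("brand-new", history)
```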

Read: User Acceptance Testing: Manual vs. Automated Approaches

Pre-requisites for Writing Effective Test Cases

So, what does it take to write good test cases from user stories? It takes more than technical skill: it requires context, focus, and collaboration. QA engineers need certain building blocks in place to produce high-quality test cases that accurately reflect user needs and system functionality.

- Clear Understanding of the Story: QA engineers must fully understand the user story’s intent, functionality, and the value it delivers.

- Well-defined Acceptance Criteria: Test cases should be based on clearly written acceptance criteria that define when the story is complete.

- Domain Knowledge: Familiarity with the application domain helps QA anticipate user behavior and identify meaningful test scenarios.

- Collaboration with Stakeholders: Regular interaction with developers and product owners ensures clarity and alignment on feature expectations.

- Tool Access – To Test, Report, and Track Issues: QA engineers need access to testing environments and tools for test execution, defect tracking, and reporting.

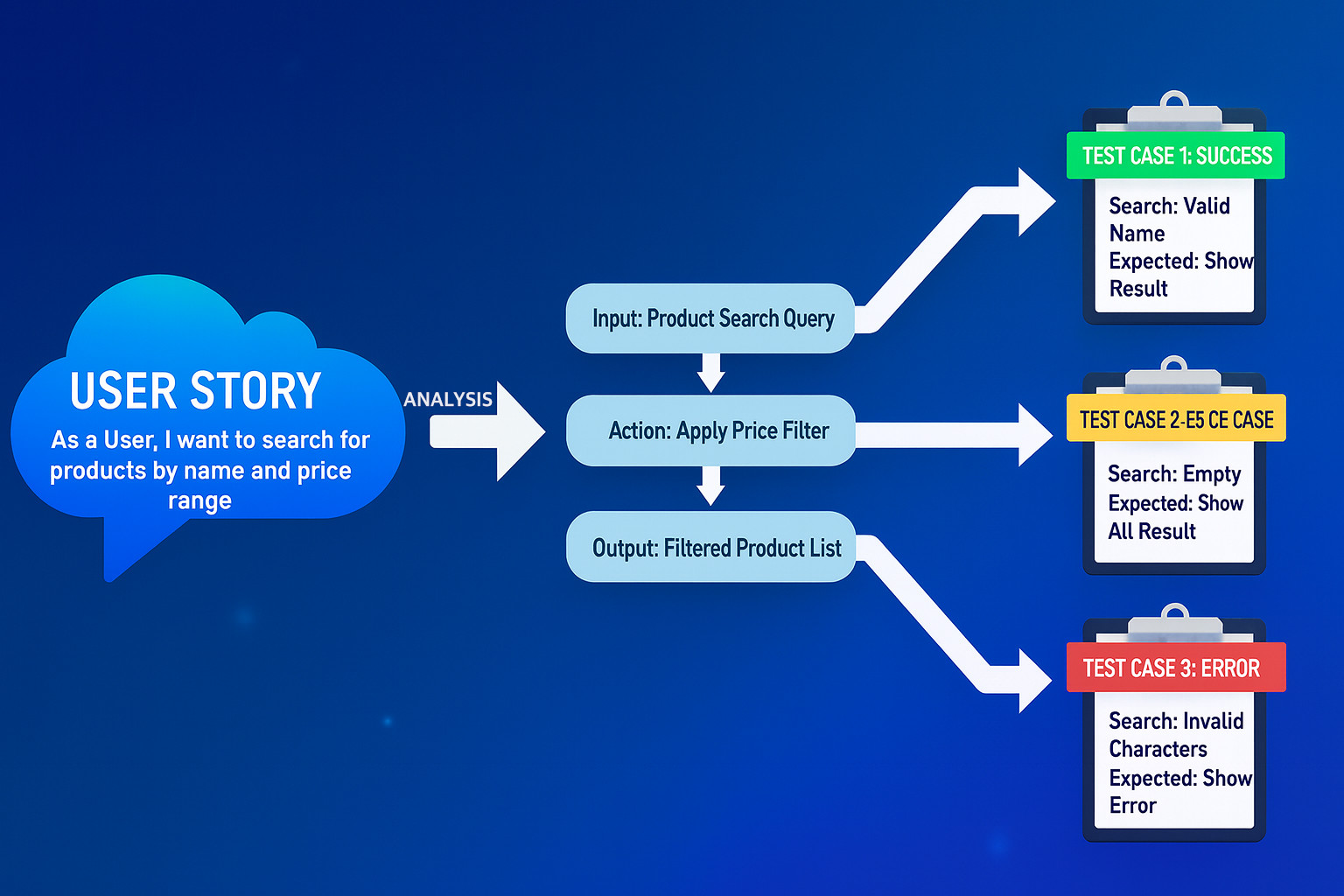

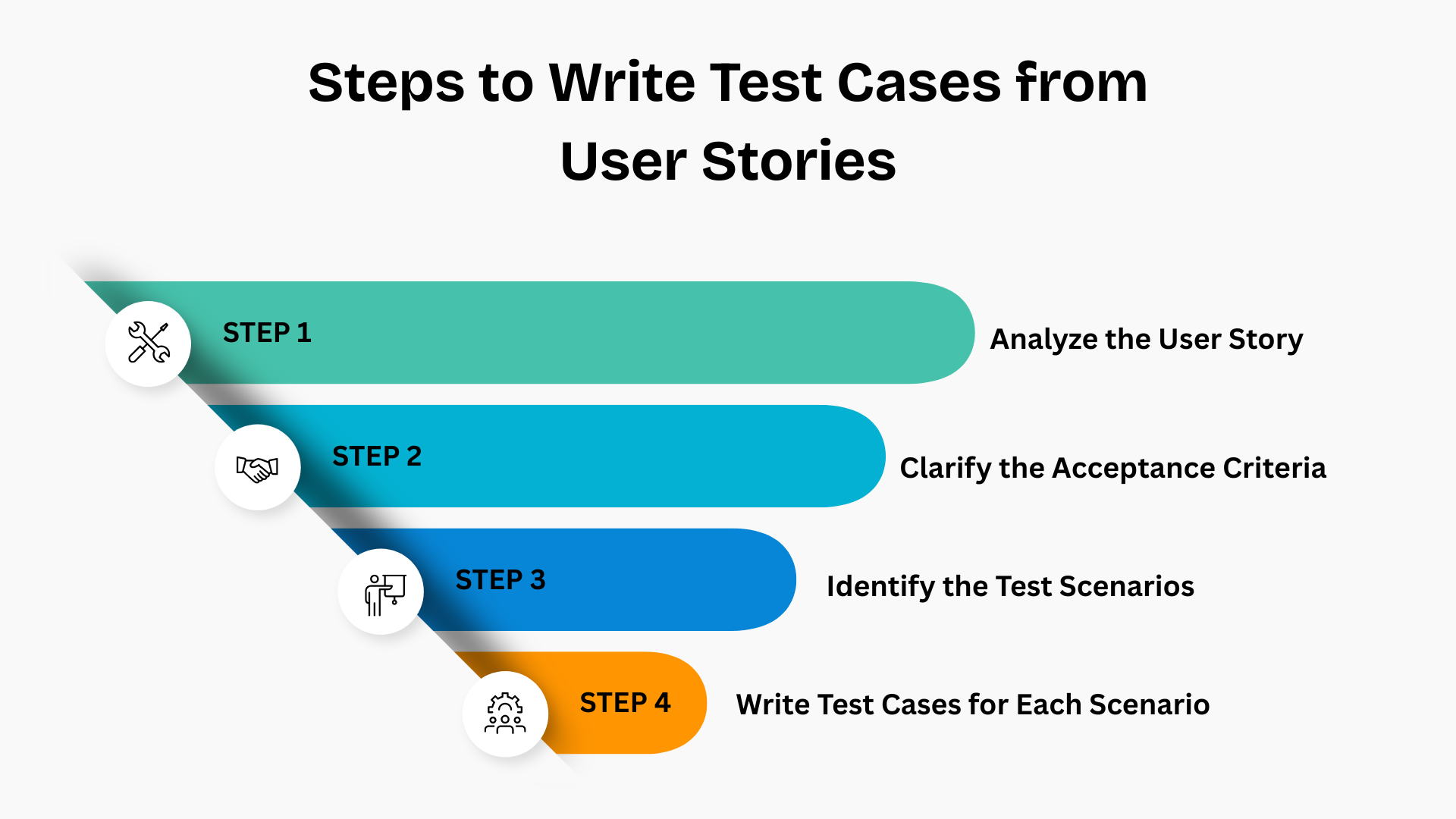

Steps to Write Test Cases from User Stories

Converting user stories into test cases works best as a repeatable process. The following steps take QA from a user story to effective, traceable test cases.

Step 1: Analyze the User Story

Generating test cases begins with an in-depth discussion and analysis of the user story. This means reading the story closely and interpreting it, not just skimming it. Without that analysis, the tester knows only WHAT to test, not WHY the functionality exists or HOW it contributes to user value. The tester needs to identify:

- The user’s role

- The action they want to perform

- The expected outcome

For example, take the story “As a customer, I want to apply a discount code at checkout so that I can reduce the total price.” It breaks down as follows:

- Actor: Customer

- Action: Apply discount code

- Outcome: Lower price

Step 2: Clarify the Acceptance Criteria

Acceptance criteria set the boundaries of what “done” means and how the feature should behave, so they need to be specific, measurable, and testable. Raising gaps with the product owner keeps ambiguity from seeping into scenarios and test cases. Every criterion should map cleanly to one or more verifiable checks.

Make sure the acceptance criteria are well defined, testable, and unambiguous. If any are missing or unclear, ask the product owner to clarify them. For the discount code story, the criteria might be:

- Only one code can be applied per order.

- The discount code must be valid and not expired.

- The discount must reflect immediately on the total.

Step 3: Identify the Test Scenarios

Express the criteria as clear, user-level scenarios that cover both the success and failure flows of the system. Together, the scenarios form a coverage map; they are technology-independent and easy to trace back to the criteria. A good scenario set covers as much behavior as possible with as little overlap as possible.

Break the story down into meaningful test scenarios. For the discount code story:

- Apply a valid discount code.

- Apply an expired discount code.

- Apply an invalid code.

- Apply two codes together.

- Remove an applied discount code.
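The idea of a coverage map can be made concrete with a small lookup that links each scenario to the criteria it exercises. This is an illustrative sketch only: the `AC1` to `AC3` identifiers and the scenario-to-criterion links are assumptions introduced here, and a team would normally record the same information in its test management tool.

```python
# Acceptance criteria from Step 2, keyed by a hypothetical ID.
CRITERIA = {
    "AC1": "Only one code can be applied per order.",
    "AC2": "The discount code must be valid and not expired.",
    "AC3": "The discount must reflect immediately on the total.",
}

# Scenarios from Step 3, each linked to the criteria it exercises.
# The links are one plausible reading of the story, not a given.
SCENARIOS = {
    "Apply a valid discount code": ["AC2", "AC3"],
    "Apply an expired discount code": ["AC2"],
    "Apply an invalid code": ["AC2"],
    "Apply two codes together": ["AC1"],
    "Remove an applied discount code": ["AC3"],
}


def uncovered_criteria(criteria: dict, scenarios: dict) -> list:
    """Return the IDs of criteria that no scenario exercises."""
    covered = {ac for linked in scenarios.values() for ac in linked}
    return sorted(set(criteria) - covered)


def unknown_links(criteria: dict, scenarios: dict) -> list:
    """Return scenario links that point at a criterion that does not exist."""
    return sorted(
        (name, ac)
        for name, linked in scenarios.items()
        for ac in linked
        if ac not in criteria
    )


print(uncovered_criteria(CRITERIA, SCENARIOS))  # []
print(unknown_links(CRITERIA, SCENARIOS))       # []
```

An empty result from both checks means every criterion has at least one scenario and every scenario points at a real criterion.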

Step 4: Write Test Cases for Each Scenario

Turn each scenario into test cases that are easy to run, with clear preconditions, test data, steps, and expected results. Cover positive, negative, and boundary cases so the feature holds up in real use. Make each case atomic so that it can be traced to a single scenario and acceptance criterion.

Each scenario can have multiple test cases for positive, negative, and edge conditions. For example, a test case for the “Apply a valid discount code” scenario has the steps below; the expected result is that the discount is reflected immediately in the total.

- Go to the checkout page.

- Enter a valid discount code.

- Click Apply.
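The same test case, plus two negative ones, might look like this when automated. This is a sketch under stated assumptions: the `Checkout` class and the `CODES` table are hypothetical stand-ins for the real system under test, and amounts are kept in cents to avoid rounding issues.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical discount codes: code -> (percent off, last valid day).
CODES = {
    "SAVE10": (10, date(2030, 12, 31)),
    "OLD20": (20, date(2020, 1, 1)),
}


@dataclass
class Checkout:
    """Hypothetical stand-in for the system under test."""
    subtotal_cents: int
    today: date
    applied_code: Optional[str] = None

    def apply_code(self, code: str) -> bool:
        if self.applied_code is not None:  # only one code per order
            return False
        if code not in CODES:              # unknown code
            return False
        _, expires = CODES[code]
        if self.today > expires:           # expired code
            return False
        self.applied_code = code
        return True

    @property
    def total_cents(self) -> int:
        if self.applied_code is None:
            return self.subtotal_cents
        percent, _ = CODES[self.applied_code]
        return self.subtotal_cents * (100 - percent) // 100


def test_valid_code_reduces_total():
    # Precondition: a customer is at checkout with a 50.00 order.
    checkout = Checkout(subtotal_cents=5000, today=date(2025, 1, 15))
    # Steps: enter a valid code and apply it.
    accepted = checkout.apply_code("SAVE10")
    # Expected result: the code is accepted and the total updates at once.
    assert accepted
    assert checkout.total_cents == 4500


def test_expired_code_is_rejected():
    checkout = Checkout(subtotal_cents=5000, today=date(2025, 1, 15))
    assert not checkout.apply_code("OLD20")
    assert checkout.total_cents == 5000


def test_second_code_is_rejected():
    checkout = Checkout(subtotal_cents=5000, today=date(2025, 1, 15))
    assert checkout.apply_code("SAVE10")
    assert not checkout.apply_code("OLD20")
    assert checkout.total_cents == 4500


if __name__ == "__main__":
    test_valid_code_reduces_total()
    test_expired_code_is_rejected()
    test_second_code_is_rejected()
```

Each function checks one behavior and states its expected result explicitly, so a failure points directly at the scenario that broke.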

Read: Test Cases and Test Suites: The Hierarchy Explained

Techniques for Deriving Test Cases from User Stories

Developing robust test cases involves more than simply reading user stories. It requires structured analysis so that you account for the different things that can happen. Test design techniques help testers produce effective, efficient test cases that verify expected behavior and expose unexpected behavior.

- Boundary Value Analysis (BVA): This technique focuses on the edges of an input range rather than the entire range. It is useful for detecting bugs that commonly occur at boundaries, that is, at and just beyond the minimum and maximum allowed values.

- Equivalence Partitioning: Equivalence partitioning divides the input data into partitions, both valid and invalid, whose members the system is expected to treat the same way. Testing one representative value from each partition gives good coverage without redundant tests.

- Error Guessing: Error guessing depends on the experience of the tester and an intuitive feeling for where the system is likely to fail. It is especially helpful in finding errors that formal design methods miss, including absent validations or uncaught exceptions.

- State Transition Testing: This approach analyzes how the system moves between states in response to user input or other events. It verifies that valid transitions take place and invalid ones are blocked.

- Decision Table Testing: Decision table testing is used when multiple input conditions combine to produce different results. It helps you systematically check every combination of conditions against its expected outcome, so all logical possibilities are covered.
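Three of these techniques can be sketched against the examples used earlier. The rules below are hypothetical: `link_is_valid` assumes the reset link is valid from 0 through 15 minutes inclusive, and `code_is_accepted` is one possible reading of the discount criteria. The point is how the test data is chosen, not the rules themselves.

```python
from itertools import product

LINK_VALIDITY_MINUTES = 15  # from the password reset acceptance criteria


def link_is_valid(age_minutes: int) -> bool:
    """Hypothetical rule: a link is valid from 0 through 15 minutes of age."""
    return 0 <= age_minutes <= LINK_VALIDITY_MINUTES


# Boundary value analysis: test at and on either side of each boundary.
BOUNDARY_CASES = {-1: False, 0: True, 1: True, 14: True, 15: True, 16: False}

# Equivalence partitioning: one representative value per partition
# (before creation, inside the window, after expiry).
PARTITION_CASES = {-30: False, 7: True, 120: False}

for age, expected in {**BOUNDARY_CASES, **PARTITION_CASES}.items():
    assert link_is_valid(age) == expected, f"unexpected result for age {age}"


def code_is_accepted(exists: bool, expired: bool, other_code_applied: bool) -> bool:
    """Hypothetical discount rule built from the acceptance criteria."""
    return exists and not expired and not other_code_applied


# Decision table: every combination of conditions with its expected outcome.
# Rows for a code that does not exist but is "expired" cannot occur in
# practice; they are listed only to keep the table complete.
DECISION_TABLE = {
    # (exists, expired, other_code_applied): accepted?
    (True, False, False): True,
    (True, False, True): False,
    (True, True, False): False,
    (True, True, True): False,
    (False, False, False): False,
    (False, False, True): False,
    (False, True, False): False,
    (False, True, True): False,
}

# The table must list all 2**3 combinations, with no gaps.
assert set(DECISION_TABLE) == set(product([True, False], repeat=3))

for conditions, expected in DECISION_TABLE.items():
    assert code_is_accepted(*conditions) == expected, conditions
```

Writing the expected outcomes by hand, rather than computing them from the rule under test, is what makes the table a real check.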

Read: Test Design Techniques: BVA, State Transition, and more

Maintaining Test Case Quality

High-quality test cases keep your tests reliable, scalable, and in step with changing requirements. Test cases should also be easy to read, quick to run, and simple to update. Good test cases promote collaboration, support long-term product health, and reduce maintenance costs across releases.

- Clear: Test cases should be simple and not have any ambiguity so that any team member can follow them.

- Atomic: Each test case should only check one thing, to keep the focus and avoid confusion.

- Traceable: Each test case should trace back to the related user story, acceptance criteria, and any defects.

- Reusable: Properly designed test cases can be reused across different cycles or environments without changes.

- Maintainable: The format and wording should make test cases easy to update when the product or its requirements change.
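Some of these qualities can be checked mechanically. The sketch below is an illustration, not an established tool: `ManualTestCase` and `quality_issues` are hypothetical names, the story and criterion IDs are made up, and the rules are rough heuristics (for example, treating a link to more than one criterion as a hint that a case may not be atomic).

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    title: str
    steps: list[str]
    expected_result: str
    story_id: str = ""                                      # traceability
    criteria_ids: list[str] = field(default_factory=list)  # traceability


def quality_issues(case: ManualTestCase) -> list[str]:
    """Return heuristic warnings; an empty list means nothing was flagged."""
    issues = []
    if not case.steps:
        issues.append("no steps")
    if not case.expected_result.strip():
        issues.append("no expected result")
    if not case.story_id or not case.criteria_ids:
        issues.append("not traceable to a story and criterion")
    if len(case.criteria_ids) > 1:
        issues.append("linked to several criteria; consider splitting")
    return issues


good = ManualTestCase(
    title="Apply a valid discount code",
    steps=["Go to the checkout page.", "Enter a valid discount code.", "Click Apply."],
    expected_result="The discount is reflected immediately in the total.",
    story_id="STORY-1",     # hypothetical ID
    criteria_ids=["AC3"],   # hypothetical ID
)
vague = ManualTestCase(title="Check discounts", steps=[], expected_result="")

print(quality_issues(good))   # []
print(quality_issues(vague))
```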

Common Mistakes to Avoid

Even well-written user stories can yield poor test cases if specific traps are not identified and avoided. Knowing what to look for helps keep test cases accurate and complete against user requirements. Avoiding these mistakes can increase test coverage and reduce the chance of defects going unnoticed.

- Ignoring Acceptance Criteria: Not adhering to acceptance criteria will lead to partial validation and lost functional coverage.

- Testing Implementation Instead of Behavior: Testing internal code and not what the user sees makes for tests that do not mimic real user interaction.

- Writing Vague Steps: Ambiguous steps lead to inconsistent, unreliable results when different testers run the same test.

- Skipping Negative Tests: Omitting negative cases leaves the system’s handling of invalid or unexpected input unverified.

- No Traceability: When test cases are not linked to user stories and acceptance criteria, regression testing becomes inefficient and can leave gaps.

Read: How to Write Test Cases? (+ Detailed Examples)

Collaborative Review Process

Cross-functional reviews help a lot, especially when the test cases are derived from the user stories. The Three Amigos technique (Product Owner + Developer + QA) creates shared understanding and reduces misunderstandings early. Read: Metrics for Director of QA

With the support of tools such as testRigor, this collaboration goes further; the described tests can even be verified in plain English and tailored to match user intention. Furthermore, testRigor keeps maintenance costs at a minimum by adjusting tests to UI changes on its own. Read: All-Inclusive Guide to Test Case Creation in testRigor

Conclusion

Writing good test cases based on user stories keeps testing in line with actual user requirements, business goals, and the system’s anticipated behavior. By tying each test to acceptance criteria and user intent, teams end up with better coverage, less ambiguity, and more robust software. This disciplined approach also encourages better collaboration between QA, developers, and product owners, because it builds a shared understanding that supports delivery.

Finally, a consistent process for converting user stories into test cases helps keep the product stable throughout Agile development.
