Over-Engineering Tests: How to Recognize the Signs and Fix Them


– Timothy Geithner.

As the quote suggests, we need to find a route between overdoing and underdoing, in life and in software development alike. Good testing practices are essential to test automation. We depend on tests to catch bugs early, release with confidence, and iterate quickly. Testers have become far more fluent in coding and test automation in recent decades. But with that power comes the potential for excess. Sometimes, with the best intentions, testing teams over-engineer their test suites. They add undue complexity, brittleness, or manual labour that compromises rather than enhances the value of automation.

Over-engineering tests is not just an academic matter. It has real-world consequences: slow tests, frequent false alarms, high maintenance costs, stress for the team, and ultimately wasted time. Knowing when automation kills clarity, when abstraction obscures intent, and when tool churn costs more than it delivers is crucial.


What is Over-Engineering in Testing?

In testing, over-engineering is the tendency to create test suites that are more complex than they need to be to accomplish their intended goal. Rather than writing simple, meaningful tests, teams develop abstract structures, automate trivial cases, or introduce complexity that adds no real value. Over-engineering may show up as test code full of redundant abstractions, or as tests so generic that they lose sight of what is actually being verified.

In its simplest form, it is when the effort spent making tests sophisticated exceeds the practical value they deliver.

Good testing balances robustness (important scenarios are covered) with simplicity (tests are easy to read, run, and maintain). Over-engineering tips that balance too far toward complexity. For example:

- Introducing several levels of abstraction into a test that only checks a single function.

- Automating rare edge cases instead of the primary user flows.

- Parameterizing tests so heavily that they become hard to read.
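The last point can be illustrated with a minimal Python sketch. The `shipping_fee` function, its threshold, and the case tuples are hypothetical and invented for this illustration; they are not taken from any real project.

```python
# Hypothetical function under test (invented for this illustration).
def shipping_fee(order_total: float) -> float:
    """Orders of 50.00 or more ship free; smaller orders pay a flat 4.99."""
    return 0.0 if order_total >= 50.0 else 4.99


# Over-parameterized: every knob is configurable, so the reader has to
# decode each tuple to learn which behavior is being checked.
OPAQUE_CASES = [
    (49.99, 4.99, True, "eq", 2),
    (50.00, 0.0, True, "eq", 2),
]


def test_shipping_fee_opaque():
    for total, expected, enabled, mode, digits in OPAQUE_CASES:
        if not enabled:
            continue
        actual = round(shipping_fee(total), digits)
        if mode == "eq":
            assert actual == expected


# Direct: the same two behaviors, readable at a glance.
def test_small_order_pays_flat_fee():
    assert shipping_fee(49.99) == 4.99


def test_order_at_threshold_ships_free():
    assert shipping_fee(50.00) == 0.0
```

Both versions check the same two behaviors. The difference is that the direct tests state the rule in their names, while the parameterized version hides it behind flags that are never varied.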

The point to keep in mind is that tests are written for humans as well as machines. A strong yet comprehensible test suite empowers teams, whereas an over-engineered one slows them down. Tools like testRigor emphasize plain-English test creation and minimize excessive abstraction. By allowing non-technical team members to write meaningful, readable tests without coding overhead, testRigor helps teams avoid over-engineering in the first place.

Consequences of Over-Engineering Tests

When tests are over-engineered, the impact is felt throughout the development cycle. The test suite itself becomes a bottleneck rather than making releases faster and safer. Teams spend more time maintaining tests and less time developing features, and confidence in the suite starts to wane. This eventually results in wasted resources, frustration, and sluggish development.

Key consequences include:

- Reduced Test Speed: Over-parameterized and complicated tests are slow to execute, which slows down feedback loops.

- Brittleness and Fragility: Tests that are too detailed or too abstract break easily when the code changes even slightly.

- Loss of Developer Trust: Engineers stop relying on the suite when failures appear random or false.

- Less Clarity: Over-abstraction makes it hard to tell what a test is actually testing.

- Testing Becomes Technical Debt: The suite consumes more maintenance effort than the value it returns.

- Slow Delivery: Tests hinder fast software releases instead of speeding up development.

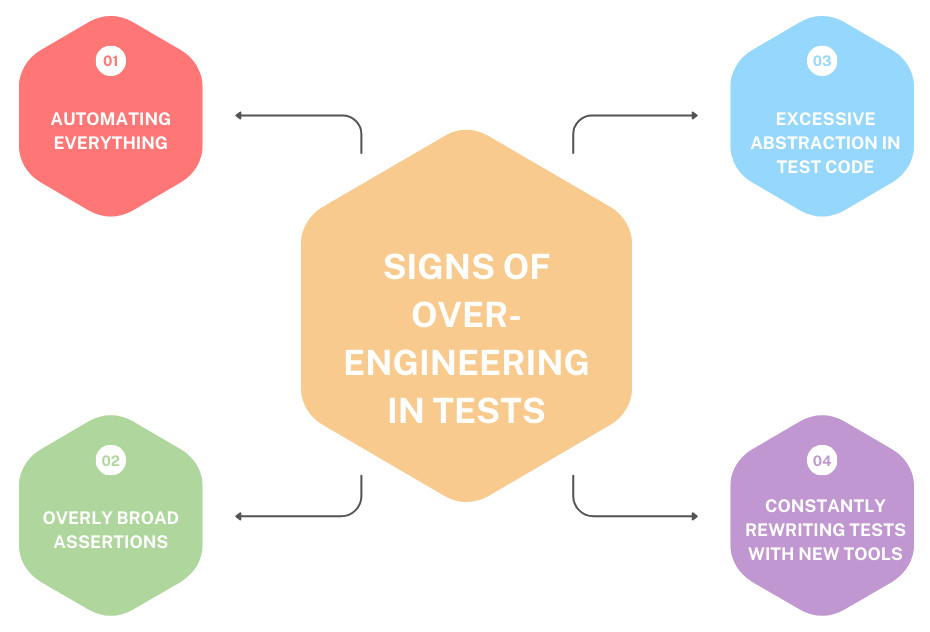

Signs of Over-Engineering in Tests

Over-engineering does not appear all at once; it accumulates over time as teams try to make their test suites more sophisticated. What starts as an effort to be comprehensive, maintainable, or future-proof often backfires and leads to bloated, confusing, and inefficient tests.

Automating Everything

Automation is powerful, but it is not free. Not every test scenario is worth the cost of automating. When teams chase 100% automation, they tend to burn energy on cases that add little value:

- Automating trivial cases such as UI alignment or cosmetic text, which can be checked manually after the fact.

- Writing complicated scripts to cover rare edge cases while ignoring high-risk user flows.

- Using automation as a substitute for exploratory testing, which loses the creativity and flexibility that humans bring.

The result is a bloated test suite that is difficult to maintain and does not provide better coverage in the areas where it is most needed.

Overly Broad Assertions

Assertions must be precise and meaningful. When a single test checks many conditions at once, it becomes weak and unclear.

- A failure does not make clear what went wrong, which leads to time-consuming investigation.

- One failure may conceal other key problems.

- Big, catch-all tests obscure the point of the test.

Excessive Abstraction in Test Code

Although abstraction can reduce duplication, excessive abstraction conceals the actual purpose of tests. Developers and testers often end up reading through layers of helper functions or frameworks simply to learn what a test does.

- Heavy use of custom frameworks makes the test suite unfriendly to newcomers.

- Readability suffers, and one of the primary purposes of tests, clear communication, is lost.

- Unnecessarily complicated abstractions waste maintenance effort.

For example, instead of wading through layers of helper methods, a test written in testRigor might simply read: click on “Login” and enter “username” into “Email”. This prevents abstraction overload and keeps test intent clear.
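In a general-purpose language the same contrast looks roughly like the sketch below. The `apply_discount` function and the `Scenario`, `ScenarioRunner`, and `ResultVerifier` classes are hypothetical names invented for this illustration, not part of any real framework.

```python
# Hypothetical function under test.
def apply_discount(price: float, percent: float) -> float:
    return round(price * (1 - percent / 100), 2)


# Over-abstracted: three layers of indirection to check one function call.
class Scenario:
    def __init__(self, **inputs):
        self.inputs = inputs


class ScenarioRunner:
    def run(self, scenario, target):
        return target(**scenario.inputs)


class ResultVerifier:
    def verify(self, actual, expected):
        assert actual == expected


def test_discount_via_framework():
    scenario = Scenario(price=200.0, percent=25)
    result = ScenarioRunner().run(scenario, apply_discount)
    ResultVerifier().verify(result, 150.0)


# Direct: input, call, and expectation sit on one line.
def test_discount_direct():
    assert apply_discount(200.0, 25) == 150.0
```

Both tests verify the same thing. The layered version only pays off if the extra classes are reused widely and remove real duplication; for a single function call they mainly add reading time.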

Constantly Rewriting Tests with New Tools

It is tempting to jump between testing tools, particularly when a new framework is touted as faster or more flexible. However, rewrites are costly:

- Teams lose the stability of historical tests that have proven themselves over time.

- Effort shifts from feature development to test code migration.

- Developers may grow frustrated when their work is thrown away with each new tool adoption.

Tool churn causes instability and denies teams the long-term advantages of a stable testing strategy.

Why These Signs Show Over-Engineering

The trends outlined above are not merely inefficient; they undermine the purpose of testing, which is to provide quick, dependable feedback with minimal friction. Here is why each sign indicates over-engineering in practice.

- Automating Everything is Not Efficient: Automation works best where it saves effort and reduces risk. By attempting to automate every possible scenario, teams end up building slow, high-maintenance tests that contribute little value. For example, automated cosmetic UI checks add overhead but rarely catch real business failures. Efficiency comes from selectively automating high-impact tests, not from pursuing 100 percent automation.

- Too Many Assertions Increase Fragility: A test with many assertions may appear comprehensive, yet it can be very fragile. The failure of any single assertion fails the entire test, which hides the results of the remaining checks and forces developers to dig through logs. This complicates diagnosis and slows debugging. Focused, atomic assertions reduce fragility and make failures easier to understand. Read: How to perform assertions using testRigor?

- Too Much Abstraction Reduces Clarity: Abstraction is useful for preventing duplication, but carried too far it obscures the purpose. Developers should not have to read through five helper methods to figure out what a test verifies. Excessive abstraction turns the test suite into a micro-framework, which lengthens onboarding and complicates debugging. Direct, expressive tests tend to age better and keep intent clear across the team.

- Tool-hopping is Not Inherently Beneficial: Replacing testing tools regularly can feel like an improvement, but it is often a distraction. Every migration takes time, introduces instability, and erodes the team's familiarity with the toolchain. Constant tool-hopping adds little real value unless the current tool is blocking progress or cannot meet urgent requirements. A stable test environment, even one with some flaws, is generally more valuable in the long term.

- Speed, Maintenance, and Meaning Trade-offs: Over-engineering typically makes poor trade-offs between meaning, maintainability, and speed. For instance, heavily parameterized tests can be slow. Excessive mocks reduce reliability and meaning because the tests no longer represent real-world use. Fragile tests require continuous upkeep, which drains the team.

Read: Decrease Test Maintenance Time by 99.5% with testRigor.

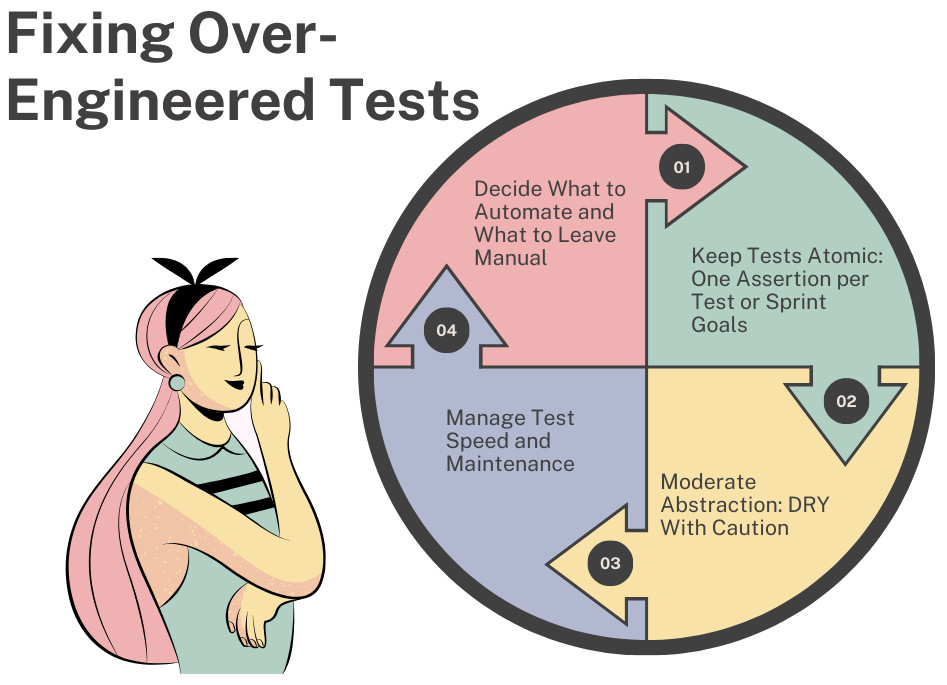

Fixing Over-Engineered Tests

Over-engineering in testing is rarely an accident; it usually grows out of good intentions. The fix, therefore, is not to abandon rigor but to restore balance. A well-organized test suite is lean, meaningful, and robust, and it helps a team release software with confidence.

Decide What to Automate and What to Leave Manual

- Risk-Based Approach: Automation is most effective where risk is high and manual repetition is costly. For example, automating login flows, checkout processes, or API calls ensures consistent validation without wasting human effort. On the other hand, automating cosmetic UI checks, such as button alignment, or one-time configuration screens spends resources for little value. Read more: Risk-based Testing: A Strategic Approach to QA.

- Exploratory Testing vs. Scripted Automation: Exploratory testing reveals usability errors, workflow gaps, and real-life edge cases that scripted automation does not detect. A mature strategy combines both: automation for stability and regression, exploratory testing for creativity and flexibility. Without this balance, teams either over-automate or lean too heavily on manual testing, and in both cases miss critical bugs. Read more here: Exploratory Testing vs. Scripted Testing: Tell Them Apart and Use Both.
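One way to make the risk-based decision concrete is a simple scoring heuristic. The sketch below is only an illustration: the `Candidate` fields, the formula, and all of the numbers are assumptions made up for this example, and a real team would calibrate its own scale.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    failure_impact: int   # 1 (cosmetic) .. 5 (revenue or data loss)
    runs_per_month: int   # how often the check would otherwise be repeated by hand
    automation_cost: int  # 1 (trivial) .. 5 (expensive to build and maintain)


def automation_score(c: Candidate) -> float:
    """Illustrative heuristic: risk times repetition, divided by cost."""
    return c.failure_impact * c.runs_per_month / c.automation_cost


# Invented example values for the scenarios named in the text.
candidates = [
    Candidate("checkout flow", failure_impact=5, runs_per_month=60, automation_cost=3),
    Candidate("login flow", failure_impact=5, runs_per_month=60, automation_cost=2),
    Candidate("button alignment", failure_impact=1, runs_per_month=4, automation_cost=3),
    Candidate("one-time config screen", failure_impact=2, runs_per_month=1, automation_cost=4),
]

# Highest score first: the best automation candidates lead the list.
ranked = sorted(candidates, key=automation_score, reverse=True)
for c in ranked:
    print(f"{c.name:24} {automation_score(c):6.1f}")
```

With these invented inputs, the login and checkout flows score 150.0 and 100.0, while button alignment and the one-time configuration screen score about 1.3 and 0.5. The low scorers are the natural candidates to leave to manual or exploratory testing.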

Keep Tests Atomic: One Assertion per Test or Sprint Goals

- Benefits of Atomic Tests: Atomic tests bring clarity. When a test fails, developers understand immediately what failed. This builds confidence in the test suite, saves debugging time, and prevents cascading failures in which one broken function triggers many false alarms.

- Structuring Small, Focused Tests: One guideline is one assertion per test, or one test per sprint goal. This ensures that every test conveys its purpose. For example, take a large test that verifies that a user can log in, maintain a session, and log out, and break it down into three smaller tests that can be verified individually.
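The login, session, and logout split could look like the following minimal sketch. `AuthService` is a hypothetical in-memory stand-in written for this example, and the user name and password are invented.

```python
# Hypothetical in-memory stand-in for an authentication service.
class AuthService:
    def __init__(self):
        self._sessions = set()

    def login(self, user: str, password: str) -> bool:
        if password != "correct-horse":
            return False
        self._sessions.add(user)
        return True

    def has_session(self, user: str) -> bool:
        return user in self._sessions

    def logout(self, user: str) -> None:
        self._sessions.discard(user)


def logged_in_service() -> AuthService:
    """Shared setup: a fresh service with one user already logged in."""
    auth = AuthService()
    auth.login("ada", "correct-horse")
    return auth


# Three atomic tests, each with one assertion and its own fresh state.
def test_login_succeeds_with_valid_password():
    assert AuthService().login("ada", "correct-horse") is True


def test_session_exists_after_login():
    assert logged_in_service().has_session("ada")


def test_logout_ends_session():
    auth = logged_in_service()
    auth.logout("ada")
    assert not auth.has_session("ada")
```

Each test builds its own state, so a bug in `logout` fails only `test_logout_ends_session` and the other two results stay meaningful.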

Moderate Abstraction: DRY With Caution

- When Abstraction Helps: Moderate abstraction eliminates duplication and simplifies configuration. Reusable utilities, such as a create-test-user helper, minimize clutter and promote uniformity throughout the suite. Instead of requiring layers of custom frameworks, testRigor abstracts standard browser, API, and mobile interactions out of the box.

- When it Hides Intent: Intent, though, gets lost in excessive abstraction. When a reader has to follow many helper functions to work out what is being tested, the test becomes hard to read. This slows onboarding and makes the test fragile during refactoring. The remedy is to abstract only repetitive boilerplate and keep business-relevant logic visible in the test.
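A minimal sketch of that boundary, assuming a hypothetical `create_test_user` helper and an invented `can_export_reports` business rule: the helper hides only the boilerplate of building a valid user, while the detail each test depends on stays visible at the call site.

```python
import itertools

_ids = itertools.count(1)


def create_test_user(**overrides) -> dict:
    """Hypothetical helper: returns a valid user and hides only boilerplate."""
    n = next(_ids)
    user = {"id": n, "email": f"user{n}@example.com", "plan": "free", "active": True}
    user.update(overrides)
    return user


# Hypothetical business rule under test.
def can_export_reports(user: dict) -> bool:
    return user["active"] and user["plan"] == "premium"


def test_premium_user_can_export():
    user = create_test_user(plan="premium")  # the relevant detail is visible
    assert can_export_reports(user)


def test_free_user_cannot_export():
    assert not can_export_reports(create_test_user(plan="free"))


def test_inactive_premium_user_cannot_export():
    user = create_test_user(plan="premium", active=False)
    assert not can_export_reports(user)
```

The helper stays a single function rather than a framework. If a test needed to pass through several such helpers before the plan or the active flag became visible, that would be the point at which abstraction starts to hide intent.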

Manage Test Speed and Maintenance

- Fast Feedback Loops: Fast tests are invaluable. Developers can tell within minutes whether their changes have broken something. A balanced suite layers tests by speed: fast unit and integration tests at the base, and slower end-to-end tests reserved for mission-critical flows. Read: How to Customize the Testing Pyramid: The Complete Guide.

- Test Reliability as Priority: A test suite full of flaky tests is little better than no test suite at all. Flaky tests waste time, erode confidence, and encourage developers to ignore the results. Achieving stability by isolating dependencies, cleaning up test data, and stabilizing environments matters because it restores confidence and reduces wasted time. By handling selectors with AI-driven recognition, testRigor eliminates fragile locators, reducing flakiness and speeding up feedback loops. Read more: testRigor Locators.
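Isolating a dependency can be as small as making the system clock injectable, since time is a common source of flakiness. The sketch below assumes a hypothetical `is_token_expired` function; the timestamps and the 30-minute lifetime are invented for the example.

```python
from datetime import datetime, timedelta, timezone


def is_token_expired(issued_at: datetime, ttl: timedelta, now=None) -> bool:
    """Hypothetical function; `now` is injectable so tests control time."""
    current = now if now is not None else datetime.now(timezone.utc)
    return current >= issued_at + ttl


# Fixed reference values keep the tests independent of the wall clock.
ISSUED = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
TTL = timedelta(minutes=30)


def test_token_valid_one_second_before_expiry():
    now = ISSUED + TTL - timedelta(seconds=1)
    assert not is_token_expired(ISSUED, TTL, now=now)


def test_token_expired_exactly_at_expiry():
    assert is_token_expired(ISSUED, TTL, now=ISSUED + TTL)
```

Because the tests pass in their own `now`, they give the same result on any machine and at any time of day, and they never need to sleep to reach the expiry boundary.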

Simplify Mocks and Fixtures

- Mock Only What’s Necessary: Mocks are useful for isolating external systems (e.g. APIs, databases), but excessive mocking leads to unrealistic tests. When everything is replaced by stubs or drivers, the test no longer represents the real world. Mocks must be explicit and meaningful. Read: Mocks, Spies, and Stubs: How to Use?

- Use Lightweight Test Data: Heavy fixtures slow tests and make them fragile. Wherever possible, generate minimal data on-the-fly or use small, focused fixtures. Read: How to generate unique test data in testRigor?
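Both points can be sketched with Python's standard `unittest.mock`. The `price_in_currency` function, the `get_rate` method on the rate client, and the `make_order` factory are hypothetical names invented for this illustration.

```python
from unittest.mock import Mock


# Hypothetical code under test: converts a price using an external rate API.
def price_in_currency(amount: float, currency: str, rate_client) -> float:
    rate = rate_client.get_rate("USD", currency)
    return round(amount * rate, 2)


def test_price_is_converted_with_current_rate():
    # Mock only the external boundary; the conversion logic runs for real.
    rate_client = Mock()
    rate_client.get_rate.return_value = 0.5

    assert price_in_currency(10.0, "EUR", rate_client) == 5.0
    rate_client.get_rate.assert_called_once_with("USD", "EUR")


def make_order(**overrides) -> dict:
    """Minimal on-the-fly data instead of a large shared fixture file."""
    order = {"id": 1, "total": 20.0, "currency": "USD"}
    order.update(overrides)
    return order


def test_order_total_converts():
    order = make_order(total=8.0)  # only the field that matters is set
    rate_client = Mock()
    rate_client.get_rate.return_value = 0.25

    assert price_in_currency(order["total"], "EUR", rate_client) == 2.0
```

Only the rate client, which stands in for a network call, is replaced. The arithmetic and rounding still execute, so the tests continue to say something about real behavior.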

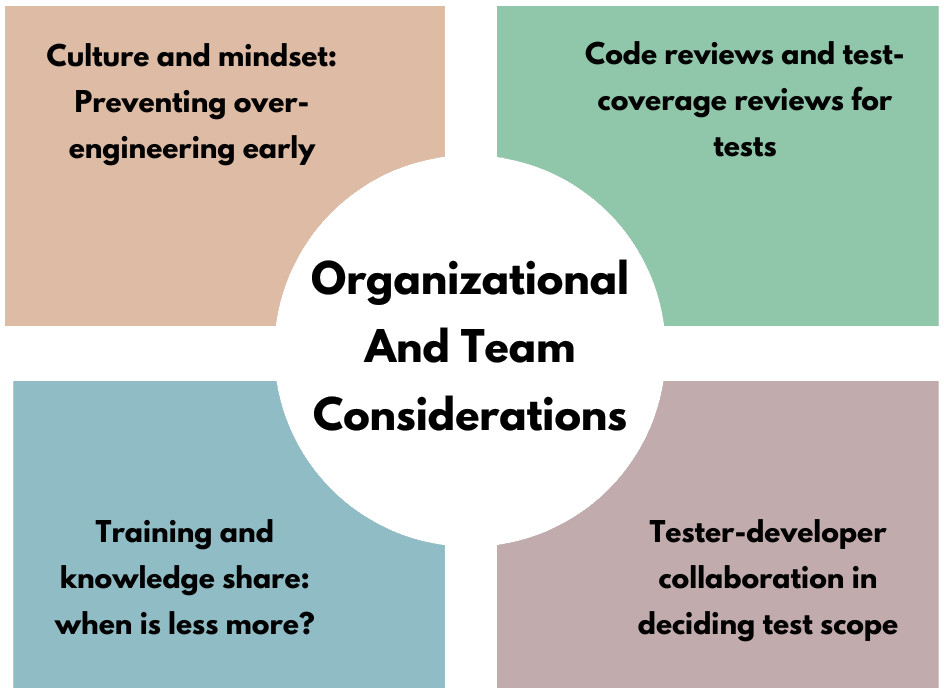

Organizational and Team Considerations

Over-engineered tests are not only a technical problem; they are often rooted in organizational habits and team culture. Avoiding them requires changing how teams think about testing, collaboration, and knowledge sharing. The primary organizational and team considerations are the following.

Culture and Mindset: Preventing Over-engineering Early

An effective testing culture does not treat complexity as an achievement; it focuses on clarity and business value. Tests should be viewed not as engineering monuments but as lightweight tools that help teams build confidence.

- Teach developers and testers to treat simplicity as a quality of a good test.

- Reward concise, readable test suites rather than applauding clever solutions that are excessively intricate.

- Foster a culture in which tests serve the product, not the other way around.

Setting these cultural expectations early prevents over-engineering from taking root and becoming institutionalized.

Code Reviews and Test-coverage Reviews for Tests

Code review has to assess the quality of tests as well as production code. Reviews frequently focus on feature logic but overlook the clarity and maintainability of the tests themselves.

- Include tests in PR review, and check that they are not over-abstracted, duplicated, or burdened with bloated fixtures.

- Use coverage metrics intelligently: aim for meaningful coverage of critical business paths rather than arbitrary percentages. Read: What is Test Coverage?

- Periodically run a health check of the test suites to identify unnecessary flakiness, slowness, or complexity.

This proactive approach keeps test suites aligned with long-term goals instead of patching up issues after the fact.
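A periodic health check can start as a small script over recorded test runs. The sketch below is an assumption-laden illustration: the `history` tuples, the test names, the definition of flaky (both a pass and a fail on the same commit), and the 5-second threshold are all invented for this example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical run history: (test name, commit, passed, duration in seconds).
history = [
    ("test_login", "abc1", True, 0.4),
    ("test_login", "abc1", True, 0.5),
    ("test_checkout", "abc1", True, 12.0),
    ("test_checkout", "abc1", False, 14.0),
    ("test_search", "abc1", False, 0.3),
    ("test_search", "abc1", False, 0.3),
]


def flaky_tests(runs):
    """Flag a test when the same commit produced both a pass and a fail."""
    outcomes = defaultdict(set)
    for name, commit, passed, _ in runs:
        outcomes[(name, commit)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


def slow_tests(runs, threshold_seconds=5.0):
    """Flag a test when its mean duration exceeds the threshold."""
    durations = defaultdict(list)
    for name, _, _, seconds in runs:
        durations[name].append(seconds)
    return sorted(n for n, d in durations.items() if mean(d) > threshold_seconds)


print("flaky:", flaky_tests(history))
print("slow:", slow_tests(history))
```

With this invented history, `test_checkout` is reported as both flaky and slow. `test_search` fails consistently, so it is not flagged as flaky: it points to a real defect or a broken test, not to instability.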

Tester+Developer Collaboration in Deciding Test Scope

Over-engineering is often caused by siloed testing responsibilities. Developers can over-automate, and testers can become too granular. The solution lies in teamwork during planning. Developers and testers should decide together which scenarios to automate and which to keep manual. Read: Test Automation Playbook.

- Pairing can clear up misunderstandings about risk, scope, and value.

- Cross-functional planning ensures that the test suite is based on actual business requirements, not technical curiosity.

This balance minimizes unnecessary or low-value testing and keeps the critical areas covered.

Training and Knowledge Share: When is Less More?

In other cases, over-engineering occurs because teams lack awareness. Training and knowledge sharing help teams recognize when less is more.

- Conduct lean test design workshops with a focus on clarity, speed, and risk-based thinking.

- Provide examples of well-written, atomic tests alongside over-engineered ones.

- Promote mentoring between senior and junior team members to spread good practices.

With testRigor, both developers and testers, even non-technical stakeholders, can contribute to the test suite. This shared ownership reduces over-engineering by keeping tests business-oriented and easy for everyone to understand.

Conclusion

Over-engineering tests may start with good intentions, but it often results in fragile, confusing, and high-maintenance suites that slow teams down. The key is to keep tests lean, clear, and business-focused, striking a balance between automation and simplicity. With tools like testRigor, teams can achieve this balance by writing plain-English, low-maintenance tests that reduce complexity and restore confidence in their testing strategy.
