Test Scenarios vs. Test Cases: Know The Difference

What is a test case?

A test case provides detailed information about the strategy, process, preconditions, and expected result. It also includes step-by-step instructions for performing the test, so the tester knows which actions to perform, in what order, and what outcome to expect. Multiple test cases can be written from each test scenario. Executing test cases helps uncover defects in the application. Test cases are mapped to requirements using a requirement traceability matrix (RTM).

What is a test scenario?

A test scenario (also known as a test possibility or test condition) describes a specific situation or set of circumstances to be tested in a software application, service, or system. It outlines the steps needed to validate specific functionality, conditions, or requirements of the product under test, and includes the inputs, expected outputs, and any other information the testing team needs to execute the test successfully. As a high-level representation of a test case, it gives a comprehensive view of the functionality being tested. Test scenarios are typically derived by test leads or business analysts.

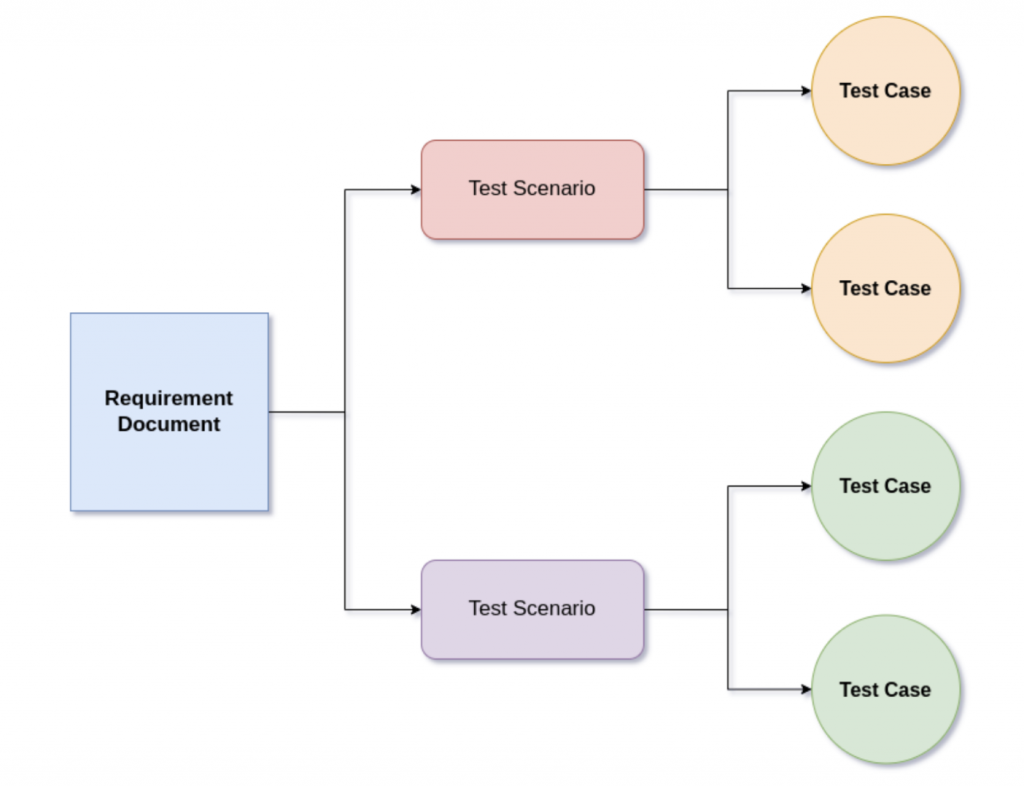

The diagram above shows the relationship between requirements documents, test scenarios, and test cases. Typically, the team derives test scenarios from the requirements documents and then creates test cases from those scenarios. In short, a test case is a document containing a set of actions to perform on the application under test to validate the expected functionality. Whether it is a test case or a test scenario, it may be written differently for manual and automated testing.
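The relationship shown in the diagram can be sketched as a small data model. This is a minimal Python illustration, not a prescribed format: the class and field names, the IDs (`REQ-LOGIN`, `TS-01`, `TC-01`, and so on), and the `traceability_matrix` helper are all hypothetical. It shows one requirement yielding one high-level scenario, that scenario expanding into several detailed test cases, and a simple traceability matrix that also exposes requirements with no test cases yet.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """Detailed, executable check: preconditions, ordered steps, expected result."""
    case_id: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str


@dataclass
class TestScenario:
    """High-level statement of what to test, derived from one requirement."""
    scenario_id: str
    requirement_id: str
    description: str
    test_cases: list[TestCase] = field(default_factory=list)


def traceability_matrix(requirement_ids, scenarios):
    """Map every requirement ID to the IDs of the test cases that cover it.

    Requirements that end up with an empty list have no test coverage yet.
    """
    matrix = {req_id: [] for req_id in requirement_ids}
    for scenario in scenarios:
        matrix.setdefault(scenario.requirement_id, []).extend(
            case.case_id for case in scenario.test_cases
        )
    return matrix


# Hypothetical example: one scenario for the login requirement, two test cases.
login = TestScenario(
    scenario_id="TS-01",
    requirement_id="REQ-LOGIN",
    description="Verify the login functionality",
    test_cases=[
        TestCase("TC-01", ["User account exists"],
                 ["Open the login page", "Enter valid credentials", "Click 'Log in'"],
                 "The dashboard is displayed"),
        TestCase("TC-02", ["User account exists"],
                 ["Open the login page", "Enter a wrong password", "Click 'Log in'"],
                 "An error message is displayed"),
    ],
)

matrix = traceability_matrix(["REQ-LOGIN", "REQ-LOGOUT"], [login])
uncovered = [req for req, cases in matrix.items() if not cases]
print(matrix)     # {'REQ-LOGIN': ['TC-01', 'TC-02'], 'REQ-LOGOUT': []}
print(uncovered)  # ['REQ-LOGOUT']
```

Listing requirements up front, rather than only those that appear in scenarios, is what lets the matrix reveal gaps such as the uncovered `REQ-LOGOUT`.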

How the traditional (old) model works

In the legacy model, manual and automation testing were handled by two separate teams. The manual team prepared and executed test scenarios or test cases; the automation team then took the same test scenarios and created separate test cases for automation. Interaction between the two teams was very limited, and they rarely peer-reviewed each other's test cases. Also, whenever functionality changed or a hotfix was released, often only the manual team was informed, while the automation team was kept in the dark. As a result, automated tests would fail, and it was unclear whether each failure indicated a real bug or an intended functionality change. Because the manual and automation teams often wrote different test cases, there was a high chance of missing scenarios, leaving gaps in coverage between the two teams. This scenario leakage let more defects slip through.

How the new model works

Fast response and feedback are crucial for meeting stakeholders' expectations. Separate manual and automation teams can slow this down because their priorities and hierarchical structures may differ. In principle, while the manual team performs exploratory or hotfix testing, the automation team runs regression tests to ensure the changes do not negatively affect other modules. However, if a new release accidentally changes the HTML element structure, automation scripts that rely on XPaths will fail, resulting in incomplete testing and a slower feedback loop.
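To see why structure-dependent locators are fragile, here is a minimal Python sketch using the standard library's `xml.etree.ElementTree`, which supports a limited subset of XPath. The two page versions, the locator strings, and the `locate` helper are hypothetical, and real test frameworks evaluate locators against a live browser DOM rather than a parsed string. Release 2 only wraps the login form in an extra `<div>`, yet a positional path to the button stops matching, while a locator keyed on the button's visible label still finds it.

```python
import xml.etree.ElementTree as ET

# Two hypothetical releases of the same login page, written as well-formed
# XHTML so the standard-library parser can read them. Release 2 only wraps
# the form in a styling <div>; what the user sees and does is unchanged.
RELEASE_1 = """
<html><body>
  <form><input name="user"/><button>Log in</button></form>
</body></html>
"""
RELEASE_2 = """
<html><body>
  <div class="card">
    <form><input name="user"/><button>Log in</button></form>
  </div>
</body></html>
"""

# A positional XPath encodes the exact nesting of elements on the page.
STRUCTURAL_LOCATOR = "./body/form/button"
# A locator keyed on the button's visible text ignores the nesting.
LABEL_LOCATOR = ".//button[.='Log in']"


def locate(page_source, locator):
    """Return True if the locator matches an element in the given page."""
    root = ET.fromstring(page_source.strip())  # root is the <html> element
    return root.find(locator) is not None


for name, page in [("release 1", RELEASE_1), ("release 2", RELEASE_2)]:
    print(name,
          "structural:", locate(page, STRUCTURAL_LOCATOR),
          "label:", locate(page, LABEL_LOCATOR))
```

Running the script should report that the positional locator finds the button only in release 1, while the label-based locator finds it in both. The label-based lookup is included only as a contrast; it is not meant to describe how any particular tool resolves elements internally.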

The latest market trend is no-code automation tools, which can address all the drawbacks described above and make the entire testing process much more straightforward. testRigor is a no-code automation tool that enables manual QA testers to write automation scripts, relying on the tool's ML and AI technology. With testRigor, there is no need for separate manual and automation teams, and manual testers no longer need to learn complex programming languages to automate tests. The entire team can use the same plain-English test cases, with slight modifications, as automation scripts in testRigor.

- The entire team can create efficient automated tests much faster than with other frameworks, because commands are written in plain English

- Tests can be created even before development is completed, since they do not rely on implementation details such as XPaths or CSS selectors

- Test maintenance becomes a minor task

The result? Every QA team member can own a piece of the automation and participate in creating and editing test cases for any given feature.