The History of Test Automation

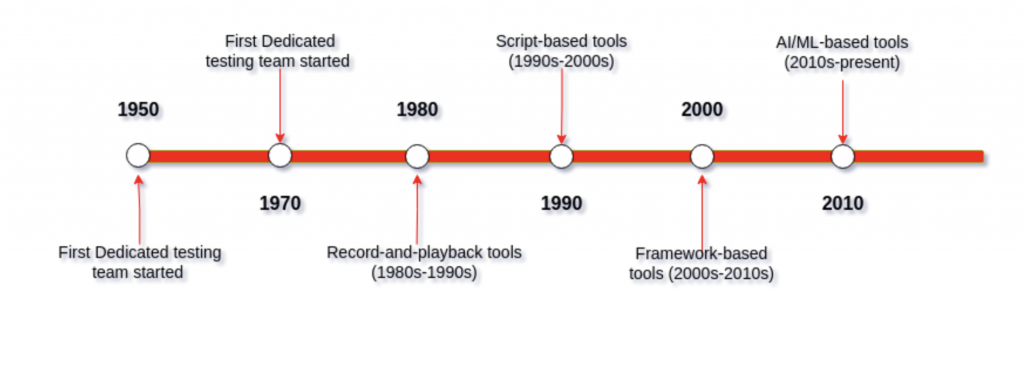

The history of software testing dates back to the 1940s and 1950s, when programmers used ad-hoc methods to manually check their code for errors. The first dedicated software testing team is commonly credited to IBM in the late 1950s. Led by computer scientist Gerald M. Weinberg, a pioneer in system testing, this team is usually associated with IBM's software work for NASA's Project Mercury.

The concept of test automation can be traced back to the early days of computing, but the first practical implementation occurred in the 1970s. One of the earliest examples of test automation is the Automated Test Engineer (ATE) system developed by IBM. The ATE system, designed to automate the testing of mainframe software applications, represented a significant breakthrough and set a precedent for future test automation systems.

Since then, test automation tools have passed through four broad, overlapping stages:

- Record-and-playback tools (1980s-1990s)

- Script-based tools (1990s-2000s)

- Framework-based tools (2000s-2010s)

- AI/ML-based tools (2010s-present)

Record-and-playback tools (1980s-1990s)

Record-and-playback tools were some of the earliest test automation tools to be developed, emerging in the 1980s and 1990s. These tools allowed testers to record interactions with a software application and then replay those interactions as a script. The scripts could be run repeatedly, allowing for the automation of repetitive testing tasks.
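The record-then-replay idea can be illustrated with a minimal sketch. Everything here is hypothetical: `ToyForm` stands in for an application under test, and `Recorder` and `replay` are illustrative names, not the API of any real tool. Commercial tools captured GUI events rather than method calls, so treat this only as a picture of the concept.

```python
from dataclasses import dataclass, field


@dataclass
class ToyForm:
    """Hypothetical stand-in for an application under test."""
    fields: dict = field(default_factory=dict)
    submitted: bool = False

    def type_text(self, name, value):
        self.fields[name] = value

    def click(self, name):
        if name == "submit":
            self.submitted = True


class Recorder:
    """Wraps an app, forwards each action, and logs it as a step."""

    def __init__(self, app):
        self.app = app
        self.steps = []  # list of (method name, args) tuples

    def __getattr__(self, method_name):
        # Only called for names not found on Recorder itself.
        target = getattr(self.app, method_name)

        def wrapper(*args):
            self.steps.append((method_name, args))
            return target(*args)

        return wrapper


def replay(steps, app):
    """Re-run recorded steps against a fresh app instance."""
    for method_name, args in steps:
        getattr(app, method_name)(*args)


# Record one manual session...
recorder = Recorder(ToyForm())
recorder.type_text("username", "alice")
recorder.click("submit")

# ...then replay it as many times as needed.
fresh = ToyForm()
replay(recorder.steps, fresh)
```

Because the recorded steps refer to elements by fixed names, renaming a field in the application would make the replay fail, which hints at why such recordings were fragile.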

One of the best-known record-and-playback tools was QuickTest, originally developed by Mercury Interactive, a company later acquired by Hewlett-Packard. QuickTest was designed to automate the testing of graphical user interfaces (GUIs) by recording a tester's interactions with the GUI and replaying them as a script. The tool quickly gained popularity among software developers and testers and set the stage for the widespread adoption of test automation.

Rational Robot (later IBM Rational Robot) was another popular record-and-playback tool that emerged in the 1990s. It was designed to automate testing for Windows-based applications and allowed testers to create automated test scripts by recording their interactions with a software application. Rational Robot also included several built-in functions for verifying application behavior, such as checking text on the screen or checking for the presence of specific windows.

Record-and-playback tools were relatively simple and required little programming knowledge, making them accessible to testers with limited technical expertise. However, they struggled with complex testing scenarios, and recorded scripts often broke when the application under test changed significantly.

Despite these limitations, record-and-playback tools were a significant step forward in the evolution of test automation. They demonstrated the potential for automating repetitive testing tasks and set the stage for the more sophisticated script-based tools that would follow in the 1990s and 2000s.

Script-based tools (1990s-2000s)

Script-based tools emerged in the 1990s and 2000s as a more robust alternative to record-and-playback tools. These tools allowed testers to write scripts in a programming language to automate testing tasks, providing greater flexibility and control. This made more complex tests possible but also raised the barrier to entry for testers.

One of the most popular script-based tools was Selenium, released in 2004. Selenium was designed to automate web-based applications and allowed testers to write scripts in various programming languages, including Java, Python, and Ruby.

Mercury LoadRunner was another popular script-based tool that emerged in the 1990s. LoadRunner was designed to test the performance of software applications by simulating user traffic and measuring system response times. It included several features for load testing, including the ability to simulate thousands of virtual users and generate detailed reports on system performance.
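The idea of simulating concurrent virtual users and measuring response times can be sketched in a few lines. This is an illustration of the concept only, not LoadRunner's scripting model: `fake_request` is a hypothetical stand-in that sleeps instead of contacting a server, and `run_load_test` is an assumed helper name.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fake_request(user_id):
    """Hypothetical stand-in for one user's request; returns elapsed seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # pretend the server takes about 10 ms
    return time.perf_counter() - start


def run_load_test(virtual_users, request_fn=fake_request):
    """Run one request per virtual user concurrently and summarize timings."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        timings = list(pool.map(request_fn, range(virtual_users)))
    return {
        "requests": len(timings),
        "mean_s": mean(timings),
        "max_s": max(timings),
    }


report = run_load_test(virtual_users=20)
```

In a real load test, `request_fn` would issue network calls against the system under test, and the summary would typically include percentiles and error rates as well.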

Although script-based tools provided more flexibility and control and could handle more complex testing scenarios, they demanded more technical expertise than record-and-playback tools: testers now needed to know a programming language.

Framework-based tools (2000s-2010s)

Framework-based tools emerged in the 2000s and 2010s, offering a more sophisticated approach to test automation. These tools provided a comprehensive testing framework, including libraries, functions, and methodologies for building and managing test automation scripts.

TestNG, released in 2004, became one of the most popular framework-based tools. Designed for Java-based applications, it provided a testing framework that included features for test configuration, data parameterization, and test grouping. TestNG also had several features for reporting and analysis, like generating detailed HTML reports and analyzing test results.
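Data parameterization and test grouping are easier to picture with a small example. TestNG itself is a Java framework; the sketch below uses Python's standard `unittest` module to show the same two ideas, so it is an analogy rather than TestNG's API. `apply_discount`, `DiscountTests`, and `smoke_group` are hypothetical names.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    # Data parameterization: one test body, many input rows.
    CASES = [
        (100.0, 0, 100.0),
        (100.0, 25, 75.0),
        (80.0, 50, 40.0),
    ]

    def test_discount_table(self):
        for price, percent, expected in self.CASES:
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(59.99, 100), 0.0)


def smoke_group():
    """Test grouping: a named subset that can be run on its own."""
    suite = unittest.TestSuite()
    suite.addTest(DiscountTests("test_full_discount_is_free"))
    return suite


# Run only the smoke group; the runner reports to stderr.
result = unittest.TextTestRunner(verbosity=0).run(smoke_group())
```

Keeping the input rows in a table means a new case is one added line, and named groups let a team run a quick subset before the full suite.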

Another popular framework-based tool was Robot Framework, which was released in 2008. This generic test automation framework could be used for various software applications, including web, desktop, and mobile applications. Robot Framework provided a library of built-in keywords for interacting with software applications and the ability to create custom keywords and libraries.
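The keyword-driven idea, where readable keywords map to underlying implementations, can be sketched as follows. This is not Robot Framework's syntax or API; `Cart`, the `KEYWORDS` table, and `run_test` are hypothetical and exist only to show how a keyword library and a table of steps fit together.

```python
class Cart:
    """Hypothetical application under test."""

    def __init__(self):
        self.items = {}

    def add(self, name, qty):
        self.items[name] = self.items.get(name, 0) + qty


def add_item(cart, name, qty):
    cart.add(name, int(qty))


def item_count_should_be(cart, name, expected):
    actual = cart.items.get(name, 0)
    if actual != int(expected):
        raise AssertionError(f"{name}: expected {expected}, got {actual}")


# Keyword library: readable names mapped to implementations.
KEYWORDS = {
    "Add Item": add_item,
    "Item Count Should Be": item_count_should_be,
}


def run_test(steps, cart):
    """Execute rows of (keyword, *arguments) against the cart."""
    for keyword, *args in steps:
        KEYWORDS[keyword](cart, *args)


steps = [
    ("Add Item", "apple", "2"),
    ("Add Item", "apple", "3"),
    ("Item Count Should Be", "apple", "5"),
]
cart = Cart()
run_test(steps, cart)
```

Adding a custom keyword in this sketch is just another entry in the `KEYWORDS` table, which loosely mirrors how keyword-driven frameworks let teams extend the vocabulary available to test authors.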

Other notable framework-based tools included Cucumber, which was designed for behavior-driven development (BDD) and provided a framework for defining, executing, and reporting on BDD tests, and JUnit, which was intended for Java-based applications and provided a framework aimed primarily at unit testing, though it was also used to drive integration and system tests.

Framework-based tools provided a more structured and standardized approach to test automation. They allowed for greater flexibility and control over testing scenarios and could be reused across different software applications. They also made test automation scripts easier to manage, reducing the time and effort required to create and maintain them.

AI/ML-based tools (2010s-present)

The rise of AI-driven tools marks a significant shift in the software testing landscape, and with it a gradual decline of script-based and framework-based tools. This decline can be attributed to several factors, including inherent limitations that became more apparent with the rise of modern software development practices. Framework tools, for instance, rely heavily on programming languages like Java or Python and require a high level of technical expertise to create and maintain test scripts.

Moreover, framework tools often lack flexibility and adaptability, making them rigid and challenging to customize. They require significant effort to modify scripts to accommodate changes in the application or environment. This inflexibility has become increasingly problematic as software development practices have evolved towards more agile and iterative methodologies.

Many traditional testing tools have struggled to keep up with the demands of modern software development practices, and some have lost vendor backing or reached the end of their life cycle. Notable examples include Cucumber Open Source and TestProject.io, a cloud-based test automation platform that was acquired by Tricentis.

Rise of AI-powered test automation

AI-driven testing tools leverage machine learning algorithms to overcome these limitations. They are intelligent enough to identify and adapt to changes in the application or environment, making them more stable and reliable. Additionally, these tools require little to no technical expertise, enabling non-technical stakeholders to contribute to the testing effort, making the entire testing process more efficient and effective.

One such example is testRigor, which was originally released in 2015. It is an AI-based, cloud-hosted, codeless automation tool made to simplify many legacy tasks associated with automation testing. It stood out from other automation tools for its ease of use, test stability, and scalability. Manual testers can create complex end-to-end tests for mobile, web, and desktop, all without any coding experience.

AI/ML-based tools represent a significant evolution in test automation, providing a more intelligent and efficient approach to testing. These tools leverage advanced technologies such as machine learning and natural language processing to automate testing tasks, improve test accuracy, and reduce the amount of manual effort required for testing. They provide a more proactive approach to testing, identifying potential issues before they become critical, allowing software developers and testers to take corrective action more quickly.

A peek into the future

Despite the advancements in AI/ML-based tools, it’s important to note that the evolution of test automation isn’t a linear progression, with each stage replacing the previous one entirely. Instead, each stage builds on the previous one, introducing new tools and practices that expand the possibilities of what can be achieved with test automation.

For example, although AI/ML-based tools represent the latest stage in the evolution of test automation, they aren’t necessarily the best choice for all testing scenarios. They can automate many testing tasks, but they can’t entirely replace human testers, who bring their own perspectives and insights to the testing process.

Looking ahead, it’s clear that the field of test automation will continue to evolve as new technologies and methodologies emerge. For example, the rise of technologies like augmented reality (AR) and virtual reality (VR) is creating new challenges and opportunities for test automation, requiring new tools and approaches. Similarly, the growing emphasis on continuous integration/continuous delivery (CI/CD) and DevOps practices is changing how and when testing is done, leading to new practices like shift-left testing and continuous testing.

Examining testRigor, it’s evident that the growing focus on generative AI is beginning to shape our understanding of the next phase in test automation. The objective is to streamline the entire testing process — encompassing all stages from test creation and editing to maintenance — to the greatest extent possible.

In conclusion, the history of test automation is a story of continuous evolution and adaptation. From the early days of record-and-playback tools to the latest AI/ML-based tools, each stage has expanded the possibilities of what can be achieved with test automation, leading to more efficient, effective, and reliable software testing. And while the future of test automation is still being written, it’s clear that it will continue to be an essential tool for ensuring the quality and reliability of software applications.