Release Notes: Function Calling Enhancements

Function Calling and Auto-Adapt Enhancements for AI-Generated Test Steps

Experience more efficient and context-aware test automation with major enhancements to function calling and auto-adapt capabilities. Function calling/MCP is now used to generate steps for AI rules in the same way it is used to generate steps for a new test case.

The auto queue can now handle and adapt multiple steps at once, not just individual commands. This improvement enables true auto-adaptive test step generation, as the system explores and understands the context of your test case prompts. testRigor’s AI analyzes the overall context, allowing it to generate, adjust, and optimize several steps simultaneously for more relevant and effective automated test scenarios.

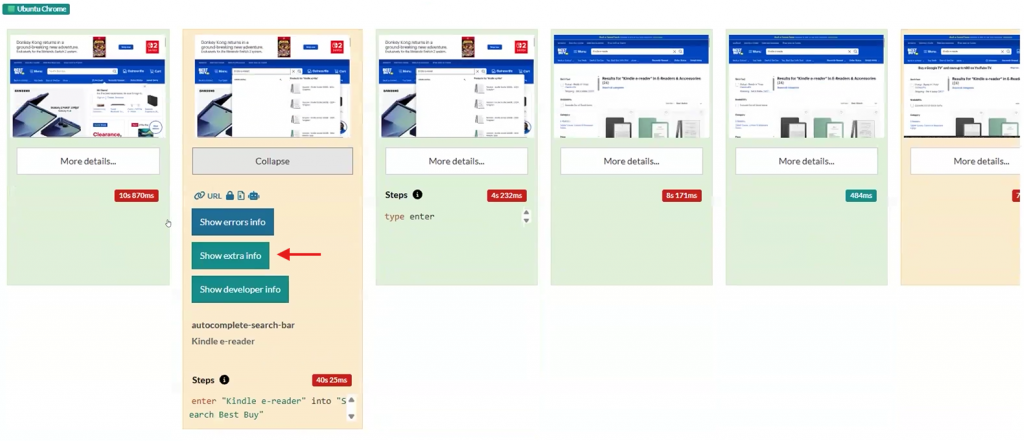

For example, in this case a step failed because of a typo, and the self-healing process started (see the images below):

Caption: Step with error and more details to click on.

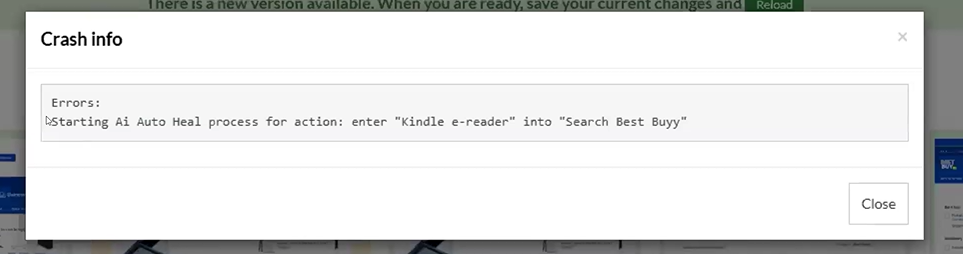

Caption: Starting auto-healing process.

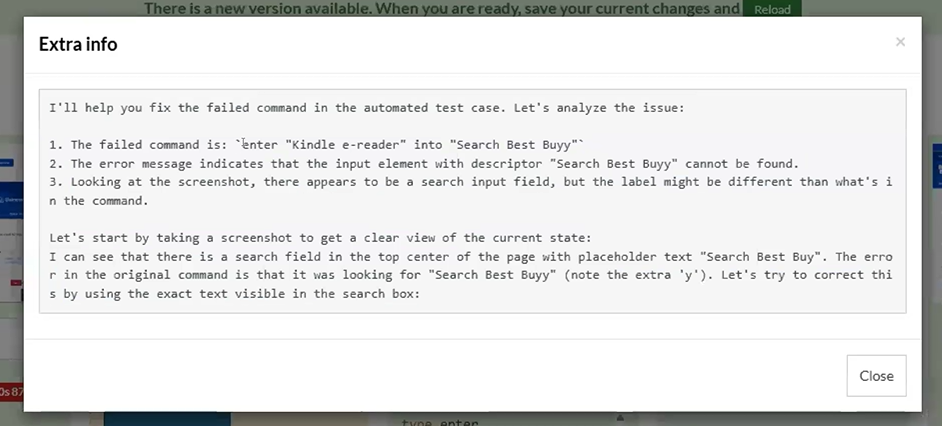

When a test case is fixed, detailed information is displayed, including the steps taken to resolve it, identified typo corrections, and the recognized goal and purpose of the test case.

Caption: AI providing more info and next steps.

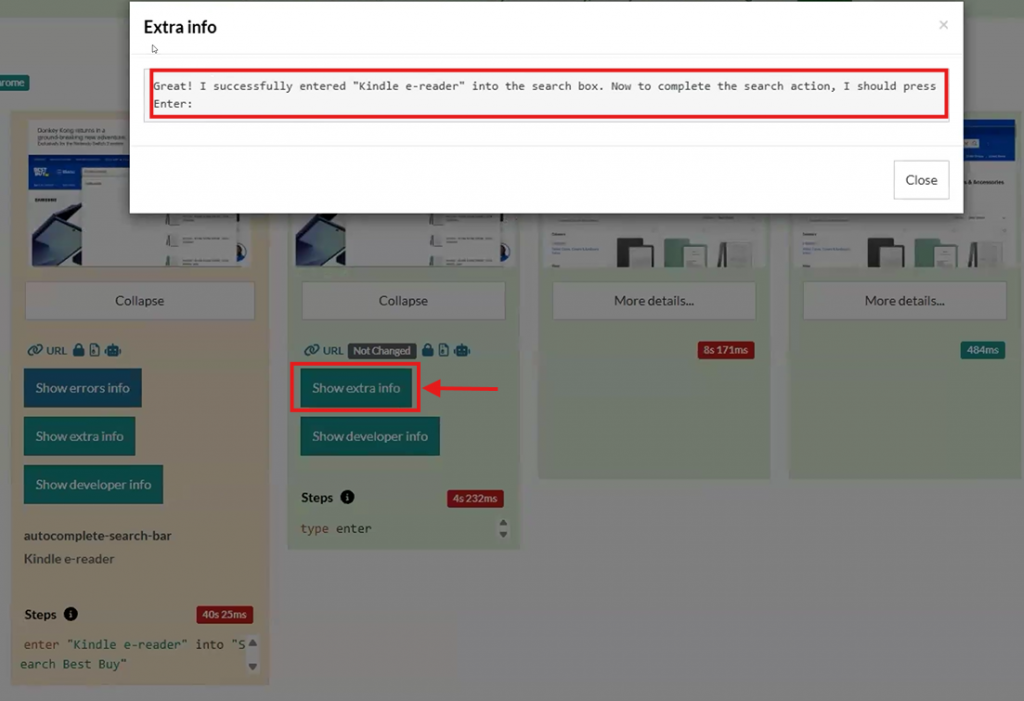

When the AI interprets a comment as a search intent, it generates actions such as type enter and notes corrections such as the extra ‘y’. It then displays the resulting steps and any corrections made during the healing process, and shows a successful result once the test case passes.

Caption: Successful step once healed.
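
The healing flow described above can be pictured with a small toy sketch in Python. This is not testRigor's implementation or API: the `heal_steps` helper, the `HealedStep` type, the step wording, and the list of known on-screen labels are all hypothetical, and simple fuzzy string matching stands in for the AI's context analysis. It only illustrates the idea of healing a whole batch of steps in one pass and reporting each correction.

```python
from dataclasses import dataclass
from difflib import get_close_matches


@dataclass
class HealedStep:
    original: str  # the step as written by the user
    healed: str    # the step after any corrections
    note: str      # description of corrections, empty if none were made


def heal_steps(steps, known_labels):
    """Hypothetical helper: heal a batch of steps in a single pass.

    Assumption: typos are detected by fuzzy-matching each quoted value
    against labels known to exist on the page. A real system would use
    far richer context than this.
    """
    healed = []
    for step in steps:
        fixed, notes = step, []
        # Every odd-indexed chunk after splitting on '"' is a quoted value.
        for quoted in step.split('"')[1::2]:
            if quoted in known_labels:
                continue
            match = get_close_matches(quoted, known_labels, n=1, cutoff=0.8)
            if match:
                fixed = fixed.replace(f'"{quoted}"', f'"{match[0]}"')
                notes.append(f'corrected "{quoted}" to "{match[0]}"')
        healed.append(HealedStep(step, fixed, "; ".join(notes)))
    return healed


# Illustrative input: the first step contains an extra 'y'.
results = heal_steps(['click "Searchy"', "type enter"], ["Search", "Cart"])
for r in results:
    print(r.healed, "|", r.note or "no change")
```

In this sketch the first step is rewritten to target "Search" and the correction is recorded, while the second step passes through unchanged. Processing the list as a whole, rather than one command at a time, mirrors the multi-step adaptation described in these notes.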

Key Benefits

- Faster Test Authoring: Quickly generate comprehensive sequences of AI-driven test steps, improving productivity for QA and development teams.

- Improved Test Accuracy: Context-aware, multi-step adaptation produces more accurate and reliable test cases.

- Greater Automation Flexibility: Effortlessly adjust test scripts as application logic evolves, reducing maintenance overhead.