Software Testability: Benefits & Implementation Guide


Let’s talk about the ease of working with software, this time not for the users but for the developers and testers.

How hard can it be to test software? Developers can write unit and integration tests, while testers can focus on other types of testing. That should do it, right? Unfortunately, no.

Quite often, developers and testers struggle with poorly built software, that is, software that was not designed with testability in mind. When application code is written to be easily testable, the result is usually higher-quality software that is also easier to scale and maintain.

Let’s explore the core benefits and actionable strategies for building highly testable software.


What is Testable Software?

Software testability is how easy it is to check if a piece of software (like an app, a website, or a system) is working correctly, find problems when it’s not, and make sure it stays reliable.

It’s about designing and building software in a way that:

- Lets You “see inside”: Can you easily observe what the software is doing? What data is it holding? What decisions is it making?

- Lets You “control it”: Can you easily tell the software to do specific things, feed it specific information, and trigger different scenarios?

- Is Built in Small, Manageable Pieces: Are the different parts of the software separate enough that you can test one part without affecting or needing the entire system?

When software is highly testable, it “wants to be tested”: it is cooperative. It makes finding bugs, ensuring quality, and making future changes much simpler and faster. When it is not testable, working with it is like trying to solve a mystery: frustrating, time-consuming, and often leading to missed issues.

The Perception Triangle: How Do We See the Need for Testability?

When you discuss testability with someone and they simply nod, verify that they are picturing the same thing you are. Their perception of testability may be altogether different.

Shallow agreement about improving testability can be dangerous, because it may conceal the fact that you are solving entirely different problems. Two people can work on the same problem in the same system and still perceive it differently.

For example, consider adding a logging module to an existing system to help detect subtle issues. Developers may see logging as an added burden that costs time and effort, and may consider it unnecessary because they believe they can catch all errors through the UI alone. Testers, however, may see logs as essential, because logs reveal problems that the UI never surfaces. To developers, a log is a tool for troubleshooting a problem that is already known, and they can do without it. To testers, logs are an invaluable and necessary means of finding problems and improving the software.

This is not necessarily a disagreement between teams; rather, each group’s perception shapes how it views the need to invest in better testability.

Factors Affecting the Need for Testability

Here are the three factors that shape different people’s perceptions of the need for testability:

- Frequency of Interaction: How often do you work with the product? Is it daily, occasionally, or rarely?

- Usage of the System: How do you use the system? When you interact with the product, whether you are developing it, testing it, or observing it, do you delve deeply into the system or just skim the surface?

- View of Testing: Do you consider testing mainly as validating known behaviors, or as exploring the application under test?

Of these three factors, the third, View of Testing, changes perception the most. Most organizations tend to view testing as a means of confirming expected behavior, mainly because that kind of testing is easy to measure.

Signs of Poor Testability in Software

Here are some symptoms that suggest software is not testable enough:

- Critical bugs tend to slip into production frequently or are discovered very late.

- An “all passed” status tends to hide the amount of effort it took to achieve it.

- Teams do the bare minimum to make tests pass, without periodic exploratory testing.

- Teams avoid testing some areas of the application because of their complexity or the time it takes.

- The test environment is difficult to set up or maintain.

- Proper logs are either not available or are hard to read.

- New team members who take over testing struggle to get by; a lot of product familiarity is needed to do even a basic round of testing.

Factors of Software Testability

Let’s look at the important characteristics that make it easier, faster, and more reliable to check for quality. It’s not just one thing, but a combination of design choices that make a big difference.

Observability: Can You See Inside?

In software, observability means the program gives you clear signals about its internal state and behavior. This includes:

- Good Logging: The software writes down what it’s doing, like a diary. This helps you trace steps, see data changes, and understand decisions.

- Meaningful Error Messages: When something goes wrong, the software tells you what happened and why, instead of just crashing silently.

- Accessible Data: You can easily inspect the data the software is holding or processing at different points.

If you can’t “see inside” your software, finding out why something isn’t working is like trying to diagnose a problem in a completely dark room: nearly impossible.
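As a minimal sketch of the logging and error-message ideas above, the code below uses Python’s standard `logging` module. The `apply_discount` function and its discount codes are hypothetical, invented for illustration: the function records each decision it makes and, instead of failing silently, raises an error that names the bad input and the accepted values.

```python
import logging

# Configure once at application start-up; the format is an illustrative choice.
logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("pricing")  # hypothetical component name

def apply_discount(total: float, code: str) -> float:
    """Hypothetical example: apply a discount code and log each decision."""
    log.info("apply_discount called with total=%.2f code=%r", total, code)
    rates = {"SAVE10": 0.10, "SAVE20": 0.20}  # illustrative codes only
    if code not in rates:
        # A meaningful error: what went wrong, which input caused it, what was expected.
        raise ValueError(f"Unknown discount code {code!r}; expected one of {sorted(rates)}")
    discounted = round(total * (1 - rates[code]), 2)
    log.info("code %r accepted, rate=%.2f, new total=%.2f", code, rates[code], discounted)
    return discounted
```

When a test fails, the log lines show which code was received and which branch was taken, which is exactly the “diary” a tester needs.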

Controllability: Can You Make It Do Things?

For software, controllability means you can easily feed it specific inputs, trigger particular functions, and set up different situations to see how it responds. This involves:

- Clear and Simple Ways to Interact: The software has well-defined “buttons” or commands that let you tell it what to do.

- Easy Test Data Setup: You can quickly provide the exact information the software needs to process for a specific test scenario.

- Isolated Functions: You can test one specific piece of logic without needing to run the entire application.

If software is hard to control, testing becomes a huge, manual effort.
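One common way to get easy test data setup together with isolated functions is a test data builder: a function that returns a valid default record and lets each test override only the fields it cares about. The sketch below assumes a hypothetical `Customer` record and `shipping_fee` rule; only `dataclasses.replace` is a real standard-library API.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Customer:
    # Hypothetical record type used only for illustration.
    name: str
    country: str
    is_vip: bool
    balance: float

def make_customer(**overrides) -> Customer:
    """Return a valid default Customer; each test overrides only what it needs."""
    default = Customer(name="Test User", country="US", is_vip=False, balance=0.0)
    return replace(default, **overrides)

def shipping_fee(customer: Customer) -> float:
    """Isolated logic that can be exercised directly, without running the whole app."""
    if customer.is_vip:
        return 0.0
    return 5.0 if customer.country == "US" else 15.0
```

A test for international shipping then reads as `shipping_fee(make_customer(country="DE"))`, with no unrelated setup in the way.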

Simplicity: Is Less More?

In software, simplicity means the code is straightforward, easy to understand, and avoids unnecessary complexity. This includes:

- Clear, Concise Code: Functions and modules do one thing and do it well.

- Minimal Features: Avoid adding features that aren’t truly needed.

- Easy to Read: The code is written in a way that makes sense to anyone looking at it, not just the original developer.

Complex software is harder to test because there are more paths, more interactions, and more potential for hidden bugs. Simple code is inherently more testable. Read: Clean Code: Key Dos and Don’ts for Successful Development.

Stability: Does It Behave Predictably?

Imagine a bridge that’s always changing its shape or location. You’d never trust it! A stable bridge stays put and reliably supports traffic.

Stability in software testability refers to how consistently the software behaves and how resistant its core parts are to unnecessary changes. This means:

- Consistent Behavior: Given the same input, the software always produces the same output.

- Stable Interfaces: The ways you interact with different parts of the software don’t change frequently or unexpectedly.

- Well-defined Requirements: The software is built based on clear, unchanging needs, reducing constant redesigns.

When the software’s behavior or its internal structure is constantly shifting, tests become unreliable, and you spend more time fixing tests than finding bugs.
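A frequent source of inconsistent behavior is hidden dependence on the current time. As a minimal sketch, assuming a hypothetical `is_store_open` rule, passing the time in (or injecting a clock) means the same input always produces the same output, so tests do not pass or fail depending on when they run.

```python
from datetime import datetime, time
from typing import Callable, Optional

def is_store_open(now: datetime) -> bool:
    """Deterministic: the result depends only on the argument."""
    return time(9, 0) <= now.time() < time(17, 0)

def is_store_open_now(clock: Optional[Callable[[], datetime]] = None) -> bool:
    """Production code uses the real clock; tests can inject a fixed one."""
    clock = clock or datetime.now
    return is_store_open(clock())
```

In a test, `is_store_open_now(lambda: datetime(2024, 1, 1, 16, 59))` always gives the same answer, whatever the wall-clock time is.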

Availability: Is It Ready to Be Tested?

Availability means the software (or the specific part you want to test) can be easily accessed and prepared for testing whenever needed. This includes:

- Quick Setup: It’s fast and easy to get the software running in a test environment.

- Minimal Dependencies: It doesn’t rely on too many other complex systems to start up or function for a test.

- Automated Deployment: The process of getting the software ready for testing is automated, not a manual chore.

If setting up your software for testing takes hours or requires complex manual steps, testing will be delayed, infrequent, and costly.
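To illustrate quick setup with minimal dependencies: if persistence sits behind a small class that receives its database connection, tests can use SQLite’s in-memory database, which needs no server and starts almost instantly. The `OrderRepository` class and its schema are hypothetical; Python’s built-in `sqlite3` module and the `":memory:"` database name are real.

```python
import sqlite3

class OrderRepository:
    """Hypothetical repository; it receives a connection instead of creating one."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, total REAL NOT NULL)"
        )

    def add(self, total: float) -> int:
        cur = self.conn.execute("INSERT INTO orders (total) VALUES (?)", (total,))
        self.conn.commit()
        return cur.lastrowid

    def total_revenue(self) -> float:
        (value,) = self.conn.execute("SELECT COALESCE(SUM(total), 0) FROM orders").fetchone()
        return float(value)

# In a test: a fresh, throwaway database with no external setup.
repo = OrderRepository(sqlite3.connect(":memory:"))
repo.add(20.0)
repo.add(5.5)
```

The same class can receive a connection to a real database in production; only the argument changes.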

Decomposability: Is It Built in Small Pieces?

Decomposability means the software is designed and built in small, independent, and self-contained modules or components. Each component ideally does one specific job. This allows you to:

- Test Parts in Isolation: You can test a single function or module without needing the entire system to be running.

- Replace or Modify Easily: If one part has a bug, you can fix or replace just that part without affecting the whole.

- Understand Easily: Smaller pieces are simpler to grasp and manage.

If software isn’t decomposable, it’s like a giant, monolithic block: if one tiny part has an issue, you might have to check or even rebuild the whole thing.
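A minimal sketch of decomposition, using a hypothetical sales report: instead of one function that reads input, calculates, and formats output, each step is a small function with one job. The calculation can then be tested with hand-made rows, without any file or console involved.

```python
import csv
import io
from typing import Iterable

def parse_rows(text: str) -> list[dict]:
    """Step 1: turn CSV text into records (no file I/O here)."""
    return list(csv.DictReader(io.StringIO(text)))

def sum_by_region(rows: Iterable[dict]) -> dict[str, float]:
    """Step 2: pure calculation, easy to test with hand-made rows."""
    totals: dict[str, float] = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + float(row["amount"])
    return totals

def format_report(totals: dict[str, float]) -> str:
    """Step 3: presentation only."""
    return "\n".join(f"{region}: {amount:.2f}" for region, amount in sorted(totals.items()))

def build_report(text: str) -> str:
    """The composed pipeline; each step above can be tested or replaced alone."""
    return format_report(sum_by_region(parse_rows(text)))
```

If the totals are wrong, a failing test on `sum_by_region` points straight at the calculation rather than at the whole report.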

Types of Software Testability

Let us review the types of software testability.

Object-Oriented Testability

Object-oriented testability is about how easy it is to test the individual, self-contained components (called “objects” or “classes” in programming) that make up the software. If your individual building blocks are well-tested and reliable, putting them together into a larger system becomes much less risky. It also helps catch bugs at the smallest level, where they’re cheapest and easiest to fix. The primary focus is on unit and integration testing, and sometimes system testing. Typical concerns include how tightly coupled the classes are, how polymorphic behavior can be verified, and how easily dependencies can be mocked.
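To make “ease of mocking” concrete: a class that receives its collaborator, rather than constructing it internally, can be tested with `unittest.mock.Mock` from Python’s standard library, checking both the result and the interaction. `InvoiceService` and the gateway’s `charge` method are hypothetical names used for illustration.

```python
from unittest.mock import Mock

class InvoiceService:
    """Hypothetical class; its payment gateway is passed in, not created inside."""

    def __init__(self, gateway):
        self.gateway = gateway

    def pay(self, invoice_id: str, amount_cents: int) -> bool:
        if amount_cents <= 0:
            return False  # nothing to charge, so the gateway is never called
        return self.gateway.charge(invoice_id, amount_cents) == "ok"

# The mock stands in for the real gateway and records how it was called.
gateway = Mock()
gateway.charge.return_value = "ok"
service = InvoiceService(gateway)
```

A test can then call `service.pay("INV-1", 500)` and use `gateway.charge.assert_called_once_with("INV-1", 500)` to confirm the interaction, without touching a real payment provider.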

Domain-Based Testability

Domain-based testability is about how well you can test whether the software correctly handles the specific “world” or “business rules” it’s designed for. Ideally, your business rules and domain objects should not be burdened with technical implementation details such as databases or web frameworks. This type of testability ensures the software is built with clear boundaries between business logic and technical implementation, which makes the business logic testable on its own.
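A minimal sketch of that separation, assuming a hypothetical loan-eligibility rule with made-up thresholds: the domain code imports nothing from a database or web framework, so the rule can be tested with plain values. Storage and HTTP handling would live in separate layers that call it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Applicant:
    # Hypothetical domain object: plain data, no ORM or framework base class.
    age: int
    annual_income: float
    existing_debt: float

def is_eligible_for_loan(applicant: Applicant, amount: float) -> bool:
    """Illustrative business rule only; the thresholds are invented."""
    if applicant.age < 18 or applicant.annual_income <= 0:
        return False
    debt_ratio = (applicant.existing_debt + amount) / applicant.annual_income
    return debt_ratio <= 0.4
```

Because the rule takes plain values, testing an edge case is a one-line call rather than a database fixture.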

Module-Based Testability

This focuses on how the software is structured into larger, independent sections or “modules.” Can you easily isolate and test one module without needing to set up or run the entire complex application? This often involves using “mock” or “fake” versions of other modules that the one you’re testing might interact with. When software is built with strong module testability, it’s much faster to find and fix problems. You don’t have to untangle a giant mess to test a small change, and a bug in one module is less likely to break others.

UI-Based Testability

If the UI is tightly coupled with business logic, it can be difficult to test. Ideally, your UI should focus only on displaying relevant information. Modern frameworks do a good job of separating concerns, which in turn helps you test components in isolation.
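One way to keep the UI focused on display is to have a plain function (often called a presenter or view model) decide everything the screen shows, leaving the UI layer to render the result. The names below are hypothetical; the point is that the display logic becomes testable without launching a browser or GUI.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CartView:
    # Everything the cart screen needs, already decided.
    item_count_label: str
    total_label: str
    checkout_enabled: bool

def present_cart(prices_cents: list[int]) -> CartView:
    """Hypothetical presenter: all display decisions happen here, not in the UI."""
    count = len(prices_cents)
    total = sum(prices_cents)
    return CartView(
        item_count_label=f"{count} item" if count == 1 else f"{count} items",
        total_label=f"${total / 100:.2f}",
        checkout_enabled=count > 0,
    )
```

The UI then only binds `CartView` fields to widgets, which leaves very little behavior that needs slow, end-to-end UI tests.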

Requirements of Software Testability

Let’s look at the features software needs to have built in to be truly testable.

Module Capabilities

Module capabilities refer to how well the software is divided into independent, self-contained pieces (we call them “modules” or “components”). Each piece should have a clear, single purpose and interact with other pieces in a well-defined way, without being overly dependent on their internal secrets.

When software has strong module capabilities, you can test each small part in isolation. This means you don’t need to set up the entire complex system just to check one small function. It makes finding problems much faster and ensures that fixing a bug in one area doesn’t accidentally break something else far away.

Testing Support Capabilities

Testing support capabilities are the features and hooks built directly into the software (or around it) that are specifically designed to help with testing. This isn’t about the software’s main purpose, but about making it “test-friendly.” This could include:

- Special Test Modes: A setting that lets the software run in a “test mode” where it might use fake data or skip certain external connections.

- Configuration Options for Testing: Easy ways to change settings (like database connections or external service URLs) specifically for testing.

- Data Setup Tools: Features that let testers quickly create or reset specific test data.
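A minimal sketch of a test mode and test-specific configuration, assuming hypothetical environment variable names `APP_MODE` and `APP_DATABASE_URL` and placeholder database URLs: settings are read in one place, so a test run can point at a throwaway database and disable real emails without code changes.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

@dataclass(frozen=True)
class Settings:
    database_url: str
    send_real_emails: bool

def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read configuration from the environment, or from an injected mapping in tests."""
    env = os.environ if env is None else env
    test_mode = env.get("APP_MODE", "production") == "test"  # hypothetical variable
    default_url = "sqlite:///:memory:" if test_mode else "postgresql://db.internal/app"  # placeholders
    return Settings(
        database_url=env.get("APP_DATABASE_URL", default_url),  # hypothetical variable
        send_real_emails=not test_mode,
    )
```

Accepting an optional mapping also makes the configuration logic itself testable without modifying the real process environment.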

Defect Disclosure Capabilities

Defect disclosure capabilities mean the software is designed to communicate clearly when something goes wrong. Instead of just crashing or behaving strangely, it provides meaningful information about errors and failures. This includes:

- Clear Error Messages: Messages that explain what went wrong, where, and potentially why.

- Error Codes: Specific codes that can be looked up in documentation for more details.

- Structured Logging of Errors: Recording detailed information about errors in a way that’s easy for developers and testers to analyze.
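A minimal sketch of these three capabilities together, assuming a hypothetical error code scheme (`ORD-001`) and a hypothetical `reserve_stock` function: the exception carries a stable code that could be documented, a human-readable message, and structured context that can be written to the log as one JSON line.

```python
import json

class AppError(Exception):
    """Hypothetical base error with a documented code and structured context."""

    def __init__(self, code: str, message: str, **context):
        super().__init__(f"[{code}] {message}")
        self.code = code
        self.context = context

    def to_log_record(self) -> str:
        # One JSON object per error: easy to search, filter, and parse.
        return json.dumps(
            {"code": self.code, "message": str(self), "context": self.context},
            sort_keys=True,
        )

def reserve_stock(sku: str, requested: int, available: int) -> int:
    if requested > available:
        raise AppError("ORD-001", "Not enough stock to reserve",
                       sku=sku, requested=requested, available=available)
    return available - requested
```

A bug report can then say “`ORD-001` for SKU `ABC`, requested 5, available 2” instead of “checkout is broken”.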

Observation Capabilities

Observation capabilities refer to the software’s ability to reveal its internal state and behavior to an outside observer (like a tester or a monitoring tool). This is about making the software’s inner workings visible without needing to stop or modify it. This covers:

- Detailed Logging: Not just errors, but also normal operations, data values, and key decision points are recorded.

- Monitoring Metrics: The software provides data points (like how much memory it’s using, how many requests it’s handling, or how long operations are taking) that can be tracked over time.

- Debug Interfaces: Special ways to peek at the software’s internal data or variables while it’s running.
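To illustrate monitoring metrics in their simplest form, the sketch below counts requests and records how long each operation takes, using only the standard library. In a real system these numbers would usually be exported to a monitoring tool; the `Metrics` class and `handle_request` function are hypothetical stand-ins, not any particular library’s API.

```python
import time
from collections import Counter, defaultdict
from contextlib import contextmanager

class Metrics:
    """Hypothetical in-process metrics store: counters and timings."""

    def __init__(self):
        self.counters = Counter()
        self.timings = defaultdict(list)  # operation name -> durations in seconds

    def increment(self, name: str, amount: int = 1) -> None:
        self.counters[name] += amount

    @contextmanager
    def timer(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the timed block raises.
            self.timings[name].append(time.perf_counter() - start)

metrics = Metrics()

def handle_request(payload: dict) -> dict:
    metrics.increment("requests_total")
    with metrics.timer("handle_request"):
        return {"echo": payload}
```

Tests can read `metrics.counters` and `metrics.timings` directly, which turns “how many requests did this handle?” into a simple assertion.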

How to Measure Software Testability?

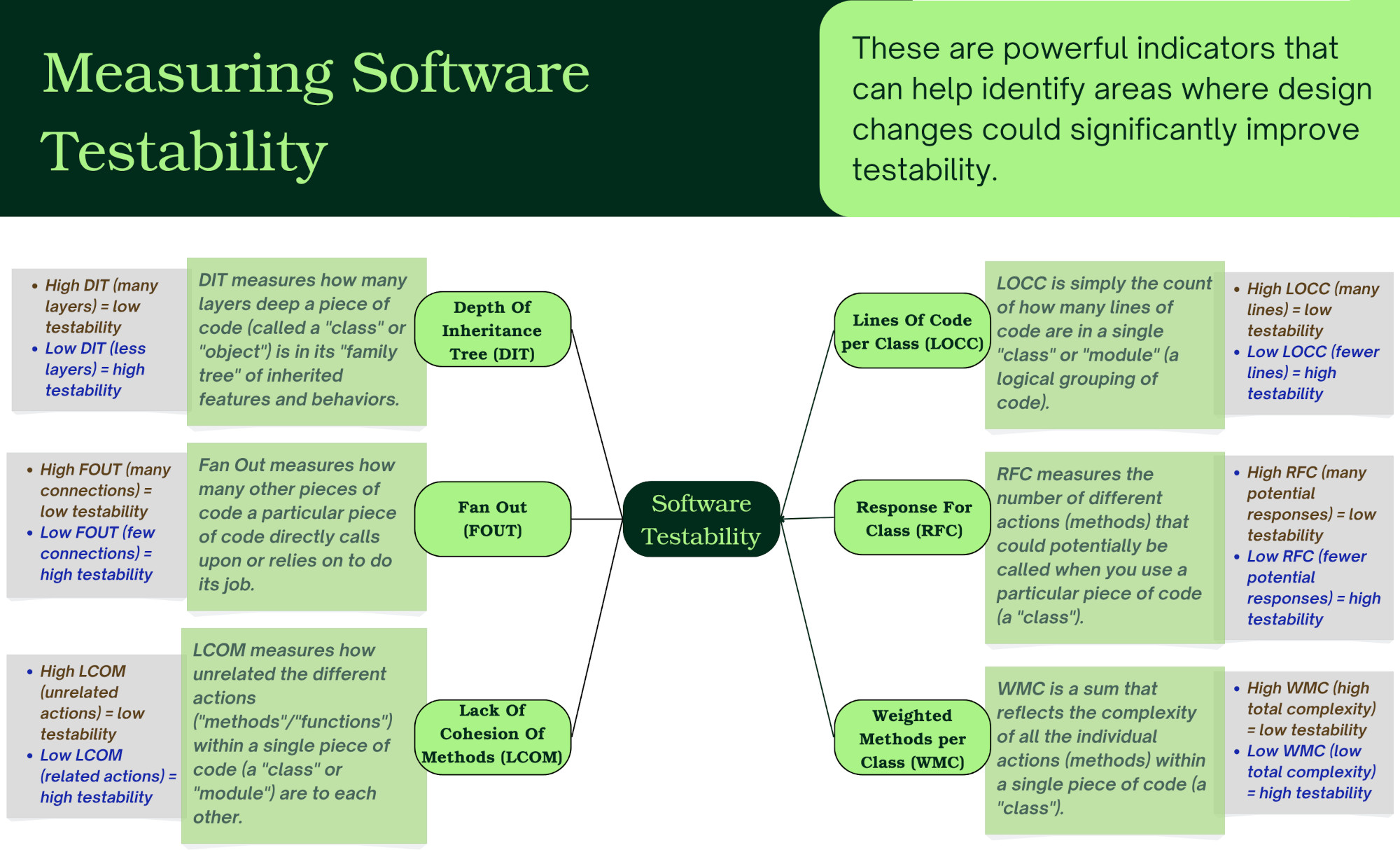

Here are some metrics used to measure software testability:

- Depth Of Inheritance Tree (DIT): In software, DIT measures how many layers deep a piece of code (called a “class” or “object”) is in its “family tree” of inherited features and behaviors.

  - High DIT (many layers): This often means a piece of code inherits a lot of complex behavior from its ancestors. It becomes harder to understand exactly what it does and to test all the possible interactions and inherited functionality. You have to consider behavior from many different levels of the “tree”.

  - Low DIT (few layers): This usually means the code is simpler and more direct in its behavior, making it easier to understand and test without worrying about deep, hidden inherited traits.

- Fan Out (FOUT): This measures how many other pieces of code a particular piece of code directly calls upon or relies on to do its job.

  - High FOUT (many connections): This indicates that a piece of code is very “chatty” or dependent on many other parts of the system. Testing it in isolation becomes difficult because you have to simulate or provide all the other pieces it talks to.

  - Low FOUT (few connections): The code is more independent and focused, making it easier to test by itself without setting up a whole web of dependencies.

- Lack Of Cohesion Of Methods (LCOM): LCOM measures how unrelated the different actions (called “methods” or “functions”) within a single piece of code (a “class” or “module”) are to each other.

  - High LCOM (unrelated actions): This is a sign that a piece of code is trying to do too many different, unrelated things, so testing it means setting up state for several unrelated responsibilities at once.

  - Low LCOM (related actions): The actions within that piece of code are all closely related and focused on a single purpose, making it much easier to test that specific responsibility thoroughly.

- Lines Of Code per Class (LOCC): LOCC is simply the number of lines of code in a single “class” or “module” (a logical grouping of code).

  - High LOCC (many lines): While not always a direct measure of complexity, a very high line count often suggests that a piece of code is doing too much. This makes it difficult to read and understand, and therefore difficult to test all its potential behaviors and interactions.

  - Low LOCC (fewer lines): Generally indicates smaller, more focused pieces of code that are easier to grasp, test, and maintain.

- Response For Class (RFC): RFC measures the number of different actions (methods) that could potentially be called when you use a particular piece of code (a “class”). This includes its own actions and any actions of other pieces of code it directly calls.

  - High RFC (many potential responses): Using this piece of code can lead to a very large number of potential execution paths and interactions throughout the system. Testing it thoroughly becomes very complex because you have to consider all those possible “ripple effects”.

  - Low RFC (fewer potential responses): Indicates a more contained and predictable piece of code, making it easier to test its direct impact without worrying about an overwhelming number of downstream consequences.

- Weighted Methods per Class (WMC): WMC is a sum that reflects the complexity of all the individual actions (methods) within a single piece of code (a “class”). More complex methods (e.g., those with many decision points or loops) get a higher “weight.”

  - High WMC (high total complexity): This suggests that a piece of code is doing a lot of inherently complex work. It’s harder to test thoroughly because there are many different internal paths and conditions to verify.

  - Low WMC (low total complexity): Generally indicates a simpler, more manageable piece of code that is easier to test comprehensively.
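These metrics are normally computed by static analysis tools. As a rough sketch of how three of them could be approximated for Python code, the block below computes DIT from a class’s `__bases__`, a simplified Chidamber–Kemerer style LCOM (method pairs sharing no `self.` attributes minus pairs sharing at least one, floored at zero, with `__init__` excluded by assumption), and a function-level fan-out (distinct names called). It relies only on the standard `ast` module; these are illustrative simplifications, not the exact definitions any particular tool uses, and the sample classes and source strings are hypothetical.

```python
import ast
import textwrap
from itertools import combinations

def depth_of_inheritance(cls: type) -> int:
    """DIT: longest path from cls up to `object` (object itself has depth 0)."""
    if cls is object:
        return 0
    return 1 + max(depth_of_inheritance(base) for base in cls.__bases__)

def _self_attributes(func: ast.FunctionDef) -> set[str]:
    """Names accessed as `self.<name>` inside one method (a simplification)."""
    return {
        node.attr
        for node in ast.walk(func)
        if isinstance(node, ast.Attribute)
        and isinstance(node.value, ast.Name)
        and node.value.id == "self"
    }

def lcom(class_source: str) -> int:
    """Simplified LCOM: pairs sharing no attributes minus pairs sharing some, floored at 0."""
    tree = ast.parse(textwrap.dedent(class_source))
    class_node = next(n for n in tree.body if isinstance(n, ast.ClassDef))
    methods = [
        n for n in class_node.body
        if isinstance(n, ast.FunctionDef) and n.name != "__init__"  # constructor excluded
    ]
    pairs = list(combinations([_self_attributes(m) for m in methods], 2))
    disjoint = sum(1 for a, b in pairs if not a & b)
    shared = len(pairs) - disjoint
    return max(disjoint - shared, 0)

def fan_out(func_source: str) -> int:
    """Function-level FOUT approximation: distinct names the code calls directly."""
    called = set()
    for node in ast.walk(ast.parse(textwrap.dedent(func_source))):
        if isinstance(node, ast.Call):
            if isinstance(node.func, ast.Name):
                called.add(node.func.id)
            elif isinstance(node.func, ast.Attribute):
                called.add(node.func.attr)
    return len(called)

# Small illustrative inputs.
class Base: ...
class Child(Base): ...
class GrandChild(Child): ...

REPORT_SOURCE = '''
class Report:
    def __init__(self, rows, title):
        self.rows = rows
        self.title = title

    def total(self):
        return sum(self.rows)

    def average(self):
        return sum(self.rows) / len(self.rows)

    def heading(self):
        return self.title.upper()
'''

HANDLER_SOURCE = '''
def handle(order):
    validated = validate(order)
    db.save(validated)
    log.info("saved %s", validated)
    return notify(validated)
'''
```

On these inputs, `depth_of_inheritance(GrandChild)` is 3, `lcom(REPORT_SOURCE)` is 1 (`heading` shares no attributes with `total` or `average`), and `fan_out(HANDLER_SOURCE)` is 4 (`validate`, `save`, `info`, `notify`).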

Benefits of Software Testability

Here are the benefits of having software testability incorporated into the product.

Reduced Testing Costs and Time

With high software testability, everything becomes more efficient:

- Faster Test Cycles: Because the software is easy to control and observe, tests run quickly. You can get through more checks in less time.

- Less Setup Hassle: Setting up test environments and preparing test data becomes a breeze. You’re not wrestling with complex configurations every time you want to run a test.

- Early Bug Detection: When software is testable, you catch problems much earlier in the development process. A bug found while it is still a small design flaw is far cheaper and easier to fix than one found right before launch or, worse, after it reaches your customers.

Enhanced Software Quality and Reliability

- More Comprehensive Testing: When it’s easy to test, you can test more aspects of the software, more deeply. This means fewer blind spots and more thorough coverage of all its features and potential interactions.

- Fewer Defects in Production: By catching more bugs early and testing more thoroughly, fewer nasty surprises slip through to your users. This means less downtime, fewer customer complaints, and a better reputation.

- Increased Confidence: When you know your software has been rigorously and easily tested, your team gains confidence in releasing new features and deploying updates.

Improved Maintainability and Debugging

- Easier Issue Isolation: When a problem does pop up, testable software helps you pinpoint exactly where it is. Clear logs and modular design mean you’re not searching for a needle in a haystack; you’re looking for a specific part that’s misbehaving.

- Clearer Code Structure: Software designed for testability often has a cleaner, more organized code structure. This makes it easier for new team members to understand, and for anyone to make future modifications without accidentally breaking existing functionality.

- Reduced Technical Debt: When software is hard to test, teams often take shortcuts or avoid making necessary changes because they’re afraid of introducing new bugs. Testability helps reduce this “technical debt”, keeping your codebase healthier over time.

Faster Development Cycles and Agility

- Quicker Iterations: Developers can make changes and additions with confidence, knowing that automated tests can quickly verify their work. This allows for rapid experimentation and iteration.

- Seamless CI/CD Integration: Testable software fits perfectly into Continuous Integration and Continuous Delivery (CI/CD) pipelines, where code changes are automatically built, tested, and deployed. This automation is only truly effective when the software is designed to be tested easily.

- Supports Agile Methodologies: Agile development thrives on quick feedback loops. Testability provides that feedback rapidly, allowing teams to adapt, learn, and deliver value continuously.

Better Collaboration Between Dev and QA Teams

- Shared Understanding: When software is transparent and well-structured, both developers and testers have a clearer, shared understanding of how it works and what it’s supposed to do.

- Streamlined Communication: Instead of vague bug reports or endless back-and-forth, clear testability features (like detailed logs and error messages) lead to precise communication and faster problem-solving. It moves from “it’s broken somewhere” to “this specific module failed when given this input.” Read: Best Practices for Creating an Issue Ticket.

Improving Software Testability

Let us review how we can improve software testability.

Design with Testing in Mind

Don’t just build software and then hope it’s testable. Plan for it from the very beginning.

- Start with the End in Mind: When you’re designing a new feature or a whole system, ask yourself: “How will we test this?” “What information will we need to see?” “How can we control its behavior for a test?”

- Keep it Modular: Break your software into small, independent “blocks” or “modules.” Each block should do one specific job and do it well. This makes it easier to test each piece in isolation. Imagine testing a car’s engine without needing the whole car assembled.

- Define Clear Interfaces: Make sure the “doors” and “windows” (the ways different parts of the software communicate) are well-defined and stable. This means one part doesn’t need to know the messy internal details of another, just how to interact with its public “face”.

Improve Observability

Software often works silently in the background, but for testing, you need it to be vocal about what it’s doing.

- Implement Good Logging: Have your software log important events, data changes, and the decisions it makes. When something goes wrong, these logs become your clues.

- Provide Clear Error Messages: Instead of cryptic error codes, make sure your software gives helpful messages that explain what went wrong, where, and why. This dramatically speeds up bug diagnosis.

- Expose Key Information but Cautiously: Sometimes, you might need to build in special “debug modes” or ways to peek at the software’s internal data. This allows testers to verify intermediate steps or data values that aren’t visible on the main screen.

Make Your Software Controllable

Testers need to be able to manipulate the software to test all possible scenarios.

- Use Dependency Injection (or similar techniques): This means designing your software so that its internal parts aren’t rigidly “glued” to external systems (like a specific database or an online payment service). Instead, you can “inject” a “fake” or “mock” version of that external system during testing. This lets you test your code in isolation, without needing the real, complex external service.

- Allow Easy Configuration: Make it simple to switch between different settings for testing. For example, a “test mode” might use a test database instead of the live one, or skip sending real emails.

- Provide Test Data Management: Have easy ways to create, load, or reset specific test data. Testers shouldn’t have to manually type in hundreds of customer records just to test one scenario.
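A minimal sketch of dependency injection with a hand-written fake, assuming a hypothetical `EmailSender` interface and `SignupService` class: the service depends only on something with a `send` method, passed into its constructor, so a test can inject a fake that records messages instead of sending real email. `typing.Protocol` is real standard-library Python; everything else is illustrative.

```python
from typing import Protocol

class EmailSender(Protocol):
    def send(self, to: str, subject: str, body: str) -> None: ...

class SignupService:
    """Hypothetical service: the email sender is injected, not hard-coded."""

    def __init__(self, sender: EmailSender):
        self.sender = sender
        self.users: set[str] = set()

    def register(self, email: str) -> bool:
        if email in self.users:
            return False  # duplicate signup: no second welcome email
        self.users.add(email)
        self.sender.send(email, "Welcome!", "Thanks for signing up.")
        return True

class FakeEmailSender:
    """Test double: records messages instead of contacting a mail server."""

    def __init__(self):
        self.sent: list[tuple[str, str, str]] = []

    def send(self, to: str, subject: str, body: str) -> None:
        self.sent.append((to, subject, body))

fake = FakeEmailSender()
service = SignupService(fake)
```

In production, the same `SignupService` would receive a real sender; the test only swaps the constructor argument.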

Keep It Simple, Stupid (KISS Principle)

Complexity is the enemy of testability (and quality).

- Avoid Over-Engineering: Don’t build features or add complexity that you don’t actually need right now. Stick to the simplest solution that solves the problem.

- Write Clear, Concise Code: Code that is easy for humans to read and understand is also easier to test. Break down large functions into smaller, more focused ones.

- Refactor Regularly: Just like cleaning your house, regularly clean up and reorganize your code. This process, called “refactoring,” doesn’t change what the software does, but it improves its internal structure, making it more testable and maintainable.

Automate Your Tests

While not directly improving testability, automation leverages it and highlights where testability is lacking.

- Write Unit Tests: These are small, fast tests that check individual pieces of code in isolation. If your code is modular and controllable, writing unit tests is much easier.

- Build Integration Tests: These check how different parts of your software work together.

- Automate UI Tests: While sometimes fragile, automated tests that interact with the user interface can be valuable. Designing your UI with clear, identifiable elements makes these tests much more reliable. However, if you use AI-based tools like testRigor, you can improve the stability of your UI tests while leveraging your existing QA team, since this tool lets you write all kinds of end-to-end, UI, API, and regression tests using simple English statements.
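A minimal sketch of a unit test written with Python’s built-in `unittest` framework, for a hypothetical `slugify` helper. Because the function is small and has no external dependencies, the tests need no setup and run in milliseconds.

```python
import re
import unittest

def slugify(title: str) -> str:
    """Hypothetical helper: turn a title into a URL-friendly slug."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")

class SlugifyTests(unittest.TestCase):
    def test_spaces_and_punctuation_become_single_hyphens(self):
        self.assertEqual(slugify("Hello, World!"), "hello-world")

    def test_leading_and_trailing_separators_are_removed(self):
        self.assertEqual(slugify("  Testability 101  "), "testability-101")

    def test_empty_title_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")
```

Saved in a module, these tests can be run with `python -m unittest` followed by the module name, which makes them easy to add to a CI pipeline.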

Read: Test Automation Pyramid Done Right

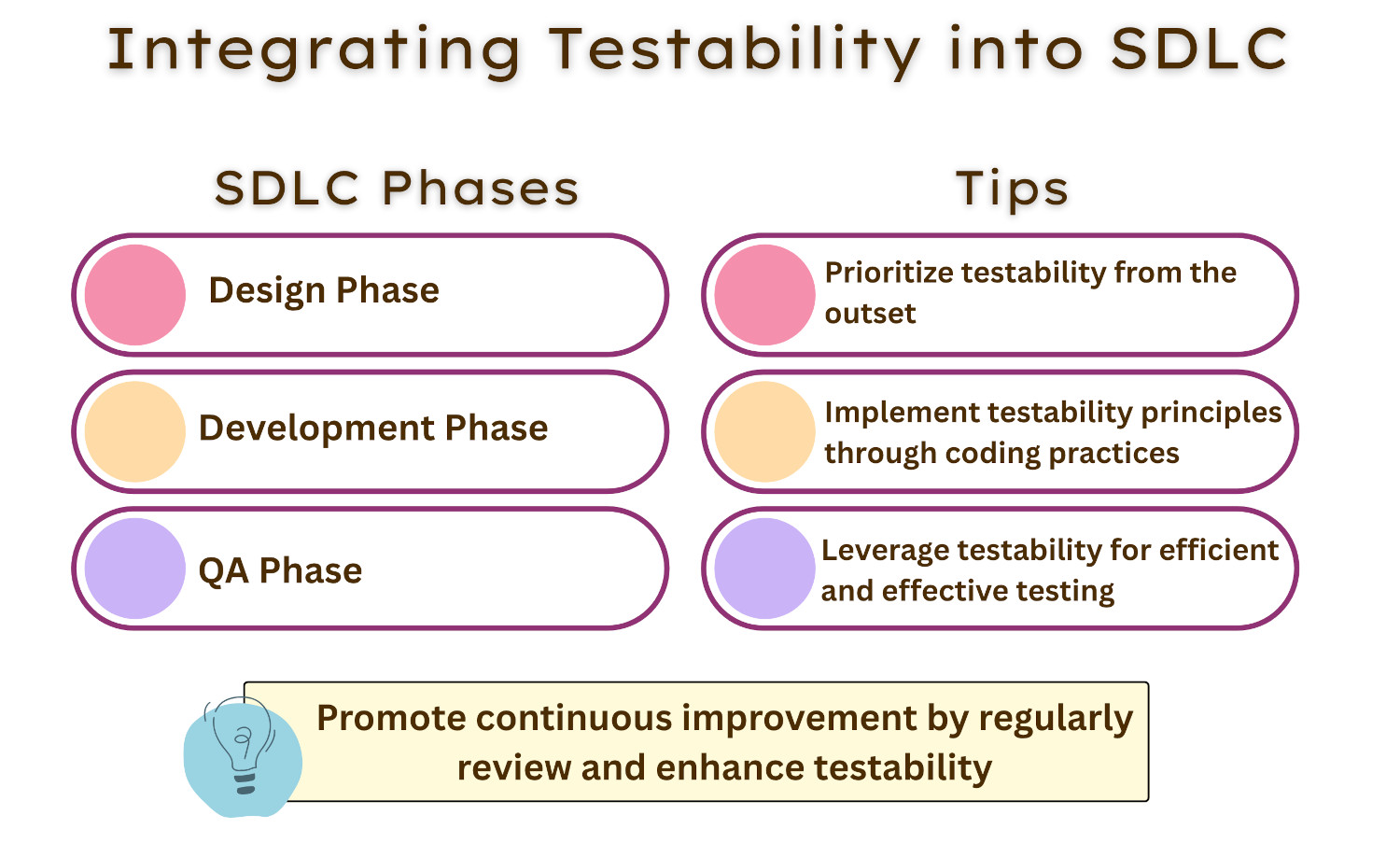

What Can People in Different Roles Do to Improve Testability?

Improving testability isn’t only about establishing standards and conventions or setting up an ideal environment. It is the responsibility of everyone involved in the software development lifecycle (SDLC), from leaders to testers. Here is a summary of how people in different roles can influence software testability:

As a Tester

A tester shares software testing results. To improve testability, however, a tester should share not only the results but also the story behind obtaining them. If getting the results was cumbersome, that is a signal that the software is not testable enough. Sharing that story reveals risks that others can’t see.

As a Developer

Developers shouldn’t focus only on making the application work; they should also consider how easily a user or tester can explore the system. It should be possible to execute different workflows, including ones that aren’t on the requirements list but are expected to work together with other modules. Supporting this kind of exploration is a good way to ensure that the software is testable.

As a Product Owner

Green dashboards may suggest that everything is working correctly, but they are not an indication of how testable or healthy the system actually is. Looking deeper, and seeking out the areas where teams struggle with the system, is a better way to encourage testable software.

Conclusion

Improving software testability is an ongoing journey, not a one-time task. It requires a mindset shift in which everyone on the team, from designers and developers to testers, thinks about how to build software that “wants to be tested.” The effort you put in up front pays off in reduced costs, higher quality, and a much happier team.
