Explainability Techniques for LLMs & AI Agents: Methods, Tools & Best Practices

|

|

For some time, the gold standard in artificial intelligence has been getting computers to predict one thing or another: the price of a stock, the onset of an illness, or critical slowdowns in software and hardware operations. The latest metric today is transparency.

Here’s the thing: modern AI, especially highly capable Large Language Models (LLMs) such as those behind generative chat applications and smart assistants, are gigantic complex neural networks. These models are black boxes in nature, with billions to trillions of parameters, acting as highly performative but fully opaque “black boxes”. We can see how inputs map to outputs, but we don’t have access to the way those mysterious internal decisions are being made.

This is where Explainable AI – or XAI for short – comes into play: it’s the branch of artificial intelligence concerned with making these systems less of a black box, supplying both developers and end-users with tools and methods to understand why an AI system came up with a particular result.

| Key Takeaways: |

|---|

|

Why is Explainability Critical for LLMs and AI Agents?

- Trust and Adoption: Just because an AI agent can route customer service calls or an LLM generates legal summaries doesn’t mean users will fully adopt it unless they trust the results. Explainability achieves that trust, particularly in high-stakes areas such as finance and medicine.

- Debugging and Performance: LLMs have a notorious tendency to “hallucinate”-produce confident but spurious output – and they are often biased in subtle ways. Explainability is the ultimate diagnostic for locating and removing brittle logic or unfair bias.

- Compliance and Regulation: More and more global regulations (e.g., GDPR from the European Union) – and guidelines from organizations such as NIST are calling for algorithmic transparency. If an automated system makes a significant decision (say, to deny a loan or flag a transaction), the user should be entitled to know why. XAI supplies that crucial audit trail to satisfy these critical governance and legal mandates.

Why’s XAI Difficult for LLMs and AI Agents?

Describing AI systems is never easy, and doing so for LLMs and AI Agents presents its own set of difficulties, which traditional Explainable AI (XAI) tools were not designed to solve. Here’s what XAI starts to look like when you go from standard ML models to the advanced systems:

General AI Explainability

The more general form of XAI for classical Machine Learning (ML) models (e.g., deep neural networks, decision trees) paved the way with techniques that are still popular nowadays:

- Model-Agnostic Methods: These treat the model as a “black box” and probe it with inputs and outputs. They are often applied to LLMs and AI Agents.

- LIME (Local Interpretable Model-agnostic Explanations): Explains individual predictions by approximating the complex model locally with a simpler, interpretable model.

- SHAP (SHapley Additive exPlanations): Based on game theory, it assigns an importance value to each input feature for a particular prediction.

- Model-Specific Methods: These leverage the internal architecture of a specific model type.

LLM Explainability

LLMs (like GPT or Claude) are so large and handle such complex data (human language) that traditional methods fall short. The focus shifts from feature importance to token flow and consistency. Challenges like the massive scale, sequential generation, hallucination, and bias need to be addressed here.

AI Agent Explainability

AI Agents (i.e., systems that employ LLMs or any other form of AI to reason, plan, and execute multi-step tasks) must meet the demand for explainability not only on a single prediction but throughout the entire decision process. You might see general challenges in explainability, like understanding multi-step autonomy, tool usage, and contextual state.

- AI Agents in Software Testing

- AI Assistants vs AI Agents: How to Test?

- Retrieval Augmented Generation (RAG) vs. AI Agents

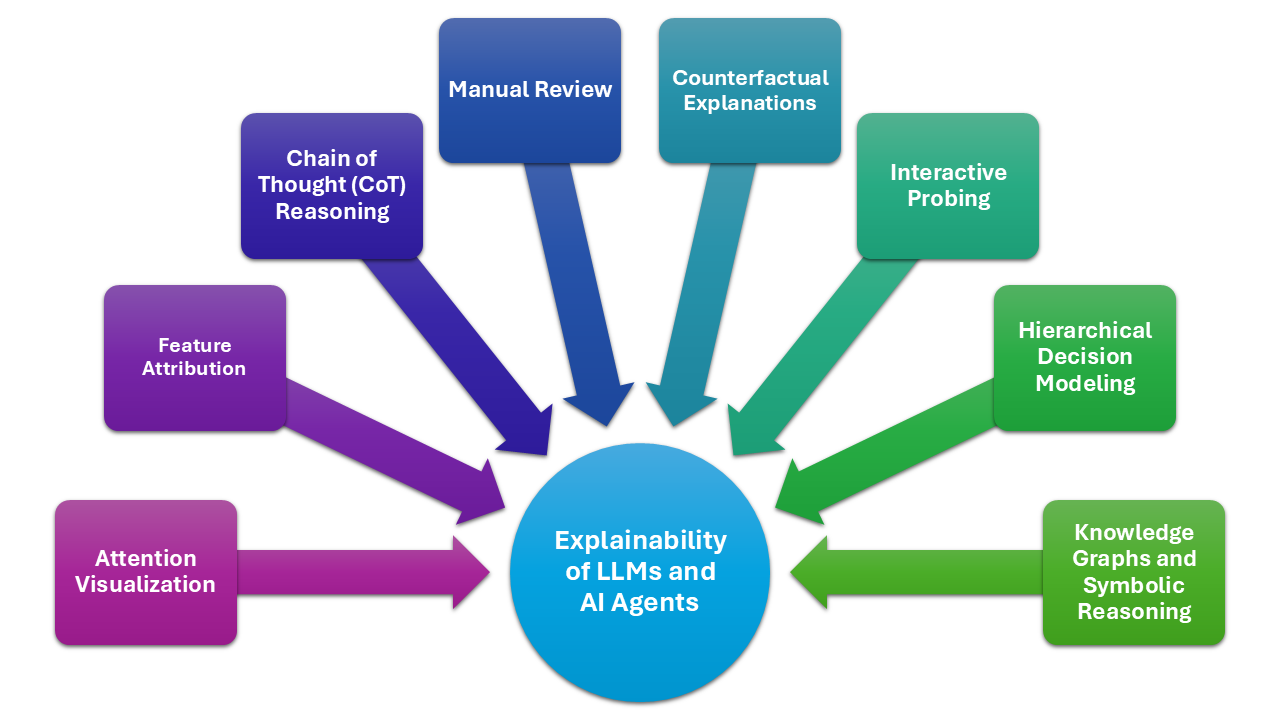

Explainability Techniques for LLMs and AI Agents

You need multiple viewpoints to get an honest peek into an LLM or Agent. These methods allow us to deconstruct the decision process, from the first tokens it sees in its input to an actual action.

Local Introspection (What Influenced This Single Output?)

These techniques focus on a specific prediction and identify the exact input components that drove that result.

- Feature Attribution (SHAP/LIME for Text):

- The Concept: This classic XAI technique is adapted for text. It assigns an importance score to every token (word or sub-word) in the input to show its contribution to the final output.

- What it Reveals: If an LLM classifies a review as “positive,” feature attribution will highlight words like “excellent” or “loved it” as the most influential factors.

- Attention Visualization:

- The Concept: LLMs use a mechanism called “attention” to decide which parts of the input context are most important when generating the next word. This technique makes that internal focus visible.

- What it Reveals: It shows you, often through heatmaps, which words the model was “looking at” or linking together in the prompt to construct its answer. For example, when the model generates the word “French”, the attention mechanism might be strongly focused on the word “Paris” in the input prompt.

Understanding Reasoning and Strategy

- Chain-of-Thought (CoT) Reasoning:

- The Concept: This is the simplest and most powerful XAI technique unique to LLMs. Instead of asking for the final answer immediately, you prompt the model to “think step-by-step”.

- What it Reveals: The model explicitly generates its intermediate steps, logic, and sub-conclusions in natural language. While the model may still occasionally invent a step, it provides a crucial, human-readable justification that can be debugged instantly.

- Counterfactual Explanations:

- The Concept: This asks a simple question: “What would have had to change in the input to get a different, desired output?”

- What it Reveals: It identifies the minimum necessary change in the input to alter the outcome. For example, if a loan was denied, the counterfactual explanation might be: “If your income was $5,000 higher, the loan would have been approved”. This is critical for fairness and regulatory compliance.

Agent-Specific Techniques (Explaining Actions)

- Hierarchical Decision Modeling:

- The Concept: Agents often break a complex task (e.g., “Plan a trip to Japan”) into smaller sub-goals (e.g., “Search flights,” “Check visa rules”). This technique maps the entire flow.

- What it Reveals: It creates a flowchart of the agent’s plan. This provides a global explanation by showing the hierarchy of goals, allowing human reviewers to understand the strategy before the agent even takes the final actions.

- Interactive Probing:

- The Concept: The system is designed not just to give an initial explanation, but to allow users (whether they are end-users, domain experts, or auditors) to request explanations for specific predictions or sub-steps and, crucially, to adapt the explanation based on feedback.

- What it Reveals: This is essential for fields that require multi-layered accountability. It ensures that explanations are useful to a non-technical audience (by starting simple) while allowing technical auditors to probe the underlying logic with deep, specific questions. It shifts XAI from being a presentation layer to being a collaborative debugging and verification tool.

- Manual Review:

- The Concept: This involves human domain experts (e.g., a data scientist, a compliance officer, or a medical professional) actively assessing the explanations that the AI system provides. The goal is to determine the explanation’s faithfulness (does the explanation truly reflect the model’s actual decision process?) and its usefulness (is the explanation clear enough to build trust and inform a real-world decision?).

- What it Reveals: Manual review ensures that the explanations meet the required ethical, safety, and regulatory bar. It validates the output of the XAI tools themselves, ensuring that they are not just plausible, but accurate and actionable for the human operator who bears the final responsibility for the outcome.

- Knowledge Graph and Symbolic Reasoning:

- The Concept: This involves supplementing the LLM’s statistical knowledge with structured, verified facts (a knowledge graph) and logical rules (symbolic reasoning).

- What it Reveals: When the agent needs to justify a fact, it can point directly to the specific, verifiable rule or data point in the external graph, rather than relying solely on the statistical correlations inside the neural network. This grounds the answer in concrete truth.

Best Practices for Explaining LLMs and AI Agents

Here are some best practices that can help you maintain accountability in your LLMs and AI agents:

- Mandate the ‘Show Your Work’ Prompt:

- Action: Apply CoT Reasoning all the time. Don’t jump to the final answer right away. Direct the LLM to record its steps, calculations, and intermediate results.

- Why it Matters: With the help of the CoT trace, you can easily decipher the black box workings of the AI system.

- Audit the Argument, Not Just the Answer:

- Action: When you are performing Manual Review, focus on the steps generated by the CoT.

- Why it Matters: An LLM can “hallucinate” a seemingly sound-sounding CoT even when it reaches the answer through some other, non-logical route. Explanation plausibility is not enough; a human must check the faithfulness (truth) of the explanation.

- Pinpoint Key Variables:

- Action: Leverage Feature Attribution tools (for example, SHAP for text) that show the words or tokens in the input prompt that had the highest impact on the output.

- Why it Matters: This is a fundamental part of bias discovery. In the case where an LLM’s hiring decision is biased toward non-essential keywords and their signals (which can be a candidate’s university over their experience), feature attribution unveils the bias.

- Enforce a Game Plan:

- Action: This creates a high-level flowchart of its goals and sub-goals.

- Why it Matters: You audit your strategy first. Imagine a complex plan has nine steps, and if the second step is flawed, you don’t execute any more of the steps in the process, save yourself time, money, and prevent all real-world errors.

- Log Everything (The Trace Log):

- Action: Instrument the Agent with robust Manual Review logging. Every decision, every internal thought, every tool input/output, and every API call must be time-stamped and recorded.

- Why it Matters: This complete audit trail – or “trace log” – is the Agent’s flight recorder. If an Agent accidentally deletes a file, the trace log is the only definitive way to pinpoint the exact sequence of thoughts and actions that led to the error.

- Ground Decisions in Fact, Not Fluency:

- Action: For all fact-based questions, require that the Agent make a call to an external tool that would query a Knowledge Graph and Symbolic Reasoning system; do not allow it to operate only from its internal LLM memory or cache.

- Why it Matters: When the Agent provides a recommendation, the explanation must link directly to the verified source. This eliminates factual “hallucinations” and makes the explanation legally auditable.

- Build the “Drill-Down” Interface:

- Action: Use Interactive Probing to design an explanation interface with multiple levels of detail. The default view should be a simple, one-sentence summary.

- Why it Matters: You avoid overwhelming the average user while still providing the required detail for experts. The user can “probe” by clicking a button that says, “Show technical details,” which then reveals the full Chain-of-Thought or the Feature Attribution heatmap.

- Prioritize Actionable “What Ifs”:

- Action: When a negative decision is made (e.g., a proposal is rejected, a task fails), provide Counterfactual Explanations that are feasible and simple.

- Why it Matters: The explanation shouldn’t just be why the AI failed, but how the user can succeed next time. A user needs to know: “If you had added a section on logistics, the proposal would have been approved,” not a general statement about the text’s tone.

Conclusion

As powerful as large language models and AI agents are, they can only earn real trust if people understand how and why they make decisions. Explainability isn’t just about building better technology – it’s about building stronger relationships between humans and the systems they use. Done right, it transforms AI from a mysterious black box into a partner you can rely on.

| Achieve More Than 90% Test Automation | |

| Step by Step Walkthroughs and Help | |

| 14 Day Free Trial, Cancel Anytime |