How to Work with Requirements as a Tester


For a tester, the ability to work with requirements is a core skill that directly affects test success. Requirements specify what the software must do, including business goals, user expectations, and technical constraints. A proper understanding of these requirements is crucial to ensure the final product actually satisfies stakeholder needs. Where requirements are ambiguous or misunderstood, major test scenarios can be missed, key functionality can be omitted, and defects may go undetected in the early stages. The result is delay, rework, and a poor user experience, which in turn increase cost and lower product quality.


The Role of Requirements in Testing

Requirements are the essence of testing: they describe what to test, and they are the baseline for success. For testers, they serve as a reference point for planning, designing, and executing tests that reflect the intended behavior and contribute to business value. A good requirement acts like a telescope, shifting testers’ attention from what the software does to why it does it and what value that behavior delivers.

Read: How Requirement Reviews Improve Your Testing Strategy.

Requirements as the Foundation of QA

Every test effort benefits from focused requirements. They describe what a system is supposed to do, how it is supposed to act, and under which conditions it should work. They help testers answer crucial questions such as:

- What should be tested?

- How can the system’s behavior be measured against expectations?

- What risks exist if certain requirements are misunderstood or unverified?

When an application is poorly specified, testers have to work in the dark, with no clear idea of the expected results, of what users should be able to do with the system, or of the conditions under which it must work. In the end, the quality of testing is only as strong as the clarity of the requirements.

The Testing Perspective on Requirements

From a test perspective, requirements serve several functions:

- Guidance: Set the limits for testing and make it clear what is and isn’t part of the test.

- Traceability: Make sure that at least one test covers every function.

- Validation: Let testers check that the product meets the needs of users.

- Compliance: Make sure that the system follows all the rules and standards that apply.

When requirements are well-written, testers can define clearer and more objective validation criteria. Poorly communicated requirements create confusion, undermine the basis of the tests, and lead to low-quality products.

Requirements as a Source of Risk

The chances of failure are much greater when requirements are incomplete or ambiguous. Industry reports suggest that 40-60% of defects result from requirements that are either poorly defined or misunderstood. Testers can help to mitigate this risk by asking questions, validating the intent of the requirement, and maintaining traceability as work is done.

Read: Risk-based Testing: A Strategic Approach to QA.

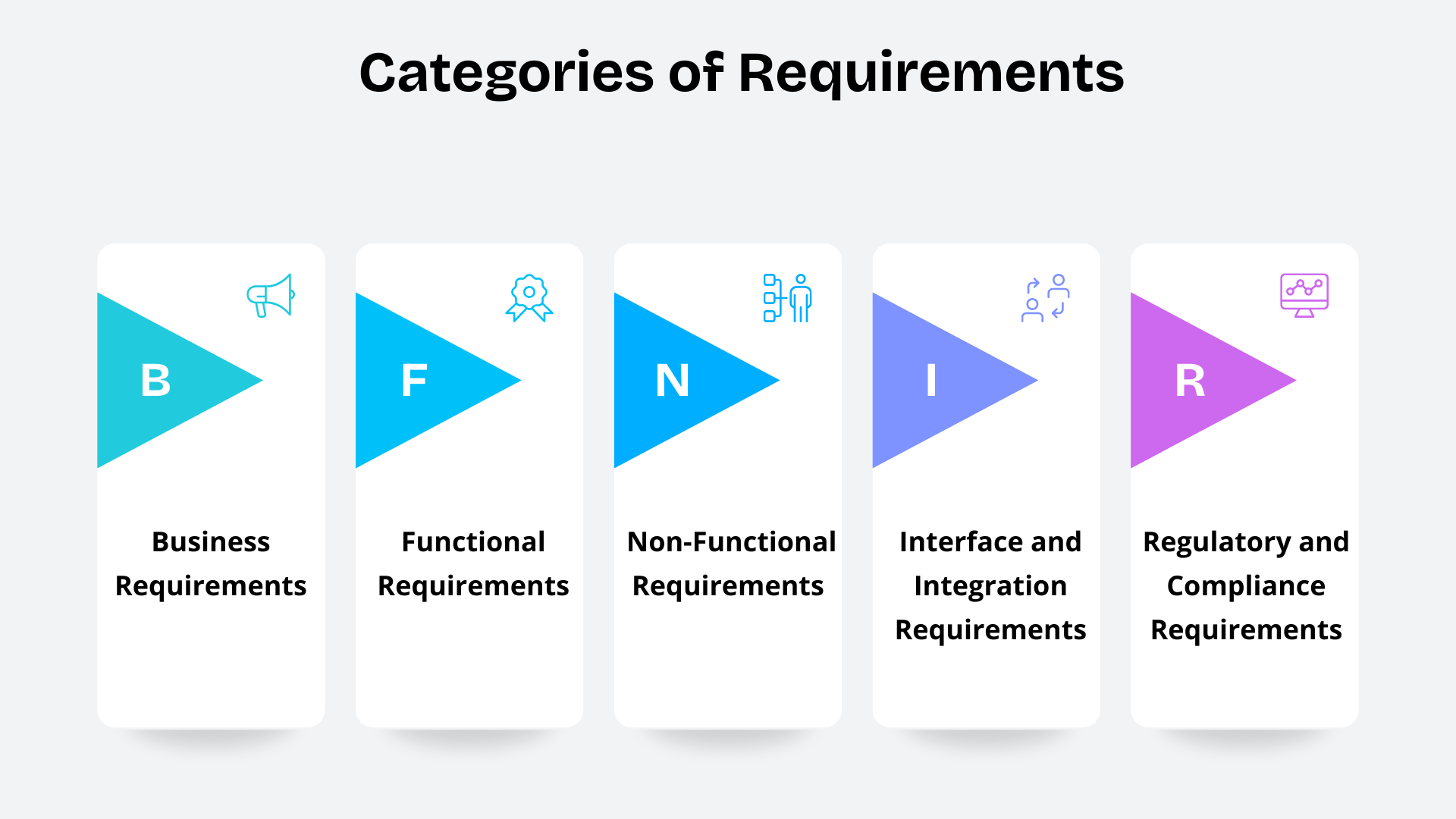

Categories of Requirements

Testers need to know the different types of requirements before they can work with them well. Different types of requirements have different effects on testing.

Business Requirements

Business requirements are the goals or reasons an initiative is undertaken. They tell us what a system is intended to do and whom it serves. Testers can align their testing with company goals when they know the business requirements. Instead of just testing for functionality, QA can check that what is being delivered will actually serve its business purpose.

For instance, when a business states that the transaction time should be reduced by 50%, a tester’s validation activity needs to focus not just on whether something is correct but also on how it performs under load.

Functional Requirements

Functional requirements describe what the system is supposed to do. They describe how features, processes, and systems interact. Testers can then test each function in terms of its inputs, processing, and outputs.

Testers write test cases and scenarios to confirm that the system produces the expected results for each functional requirement. For instance, a login requirement could state that users with valid credentials are authenticated, and that invalid credentials produce an error.

Test cases derived from the functional requirements should cover positive, negative, and boundary inputs, along with how the system should respond to each.
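
As a minimal sketch of how the login requirement above could become positive, negative, and boundary checks, the Python below uses a hypothetical `authenticate` function and an in-memory user store. Neither comes from a real system, and the rule for empty input is an assumption made for illustration.

```python
# Hypothetical in-memory user store, for illustration only.
USERS = {"user@example.com": "Password123"}


def authenticate(email: str, password: str) -> str:
    """Return "Dashboard" on success, or an error message otherwise."""
    if not email or not password:
        return "Error: email and password are required"
    if USERS.get(email) == password:
        return "Dashboard"
    return "Error: invalid credentials"


# Each case pairs inputs with the expected result taken from the requirement.
CASES = [
    ("positive: valid credentials", "user@example.com", "Password123",
     "Dashboard"),
    ("negative: wrong password", "user@example.com", "wrong",
     "Error: invalid credentials"),
    ("negative: unknown user", "nobody@example.com", "Password123",
     "Error: invalid credentials"),
    ("boundary: empty password", "user@example.com", "",
     "Error: email and password are required"),
]


def run_cases() -> list[str]:
    """Return the names of cases whose actual result differs from the expected one."""
    return [name for name, email, password, expected in CASES
            if authenticate(email, password) != expected]


print("failing cases:", run_cases())
```

Keeping the cases in a table like `CASES` makes it easy to see which class of input each test represents and to add more as the requirement is clarified.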

Non-Functional Requirements (NFRs)

Non-functional requirements describe how well a system performs rather than what the system does. They concentrate on attributes such as speed, security, reliability, and the overall end-user experience. For example, even if a login feature works correctly, it can still fail its NFRs if it loads slowly or cannot handle multiple concurrent users. These requirements set the standards for performance and behavior that make the system fit for everyday use.

Testers use specialized methods to check NFRs because functional testing alone does not cover them. Some common ways are:

- Load and stress testing to check performance

- Penetration testing for security

- Accessibility testing for usability
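
To illustrate how a performance NFR can be checked objectively, here is a small sketch that compares the 95th percentile of response times against a threshold. The sample latencies and the 500 ms limit are made-up values, and the nearest-rank percentile is just one of several common definitions.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def meets_latency_nfr(samples_ms: list[float], threshold_ms: float,
                      pct: float = 95) -> bool:
    """True when the chosen percentile of the samples is within the threshold."""
    return percentile(samples_ms, pct) <= threshold_ms


# Made-up response times in milliseconds, e.g. collected during a load test.
login_latencies_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 900]

print("p95 (ms):", percentile(login_latencies_ms, 95))
print("meets NFR:", meets_latency_nfr(login_latencies_ms, threshold_ms=500))
```

With these samples the 95th percentile is the slowest request (900 ms), so the check fails even though the average of 284.5 ms is well under the limit. That is why percentile-based criteria are often preferred over averages when wording performance requirements.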

Read: Functional Testing and Non-functional Testing – What’s the Difference?

Interface and Integration Requirements

Complex systems have to communicate with other components or services. Interface and integration requirements describe how data is exchanged between these systems to ensure seamless communication and compatibility.

Testers validate these with API testing, integration tests, and end-to-end scenarios to confirm message formats, data integrity, and error handling between modules.
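
A message-format check of this kind might look like the sketch below. The "order created" message, its field names, and the `contract_violations` helper are all hypothetical; real projects would more likely rely on a schema or contract-testing tool.

```python
# Hypothetical contract for an "order created" message exchanged between two services.
ORDER_CONTRACT = {"order_id": str, "amount": float, "currency": str}


def contract_violations(message: dict, contract: dict) -> list[str]:
    """List the ways a message deviates from the agreed field names and types."""
    problems = []
    for field, expected_type in contract.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}")
    for field in message:
        if field not in contract:
            problems.append(f"unexpected field: {field}")
    return problems


good = {"order_id": "A-100", "amount": 25.0, "currency": "USD"}
bad = {"order_id": 100, "amount": 25.0}

print(contract_violations(good, ORDER_CONTRACT))
print(contract_violations(bad, ORDER_CONTRACT))
```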

Regulatory and Compliance Requirements

Various sectors like finance, health, and e-commerce need to meet specific standards or regulations (like GDPR, HIPAA, and ISO). Such requirements typically introduce rigorous policies on data protection, auditability, and user privacy. Read: How to Achieve HIPAA Compliance?

From a tester’s point of view, this means verifying that all implemented features comply with the applicable regulations and that evidence of validation can be produced during an audit.

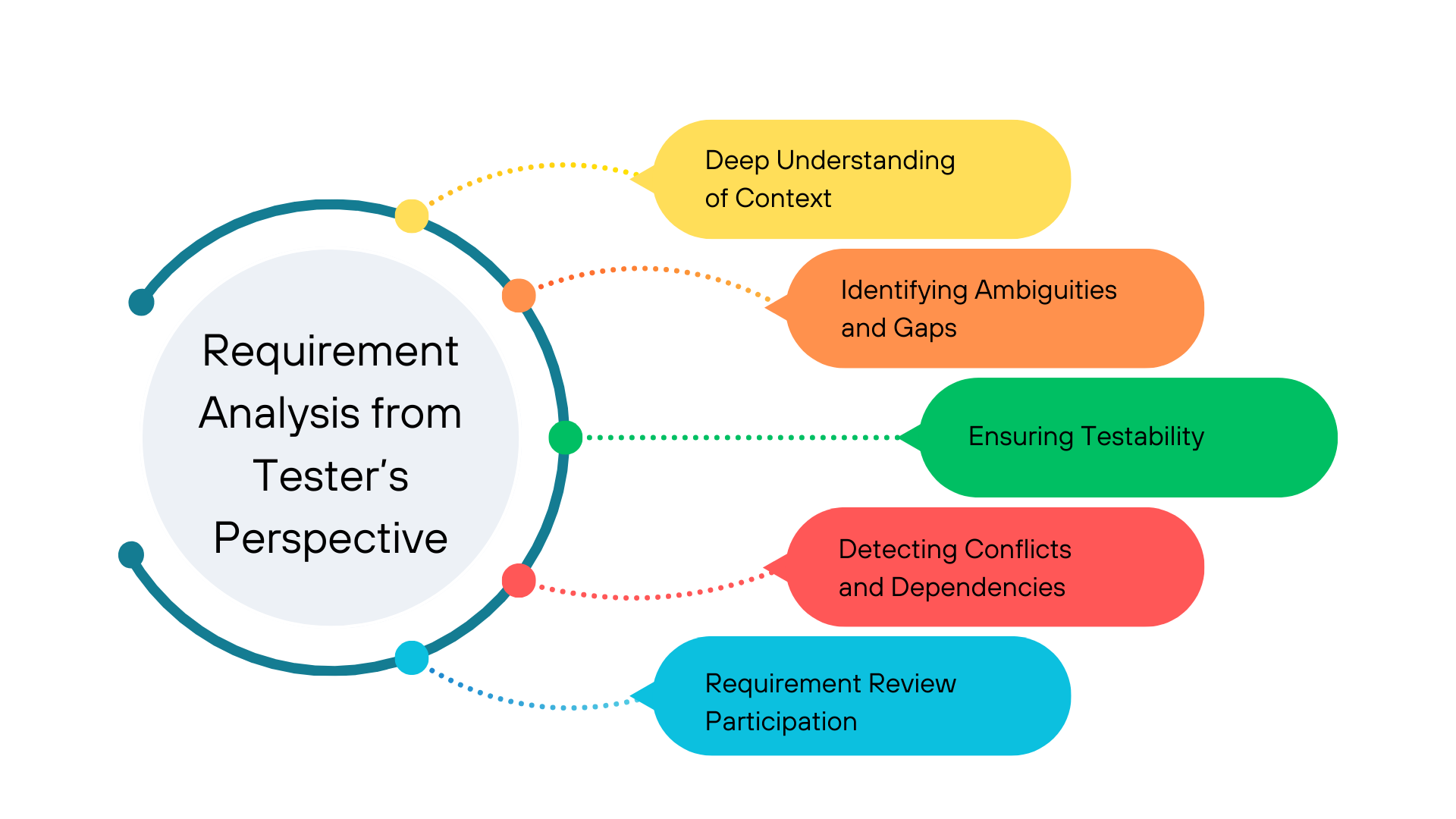

Requirement Analysis from a Tester’s Perspective

Requirements analysis is the basis of the test planning and design phase. In this stage, testers interpret, critically examine, and refine requirements to confirm that they are correct, complete, and testable in practice.

Deep Understanding of Context

Before diving into the individual features, testers should understand what the system does and who uses it. This includes analysis of the business domain, knowledge of how users work, and recognition of interdependencies between features.

By doing this, testers can anticipate real-world usage and find discrepancies much earlier. Contextual understanding turns general statements of need into concrete statements that can be tested.

Identifying Ambiguities and Gaps

One of the most important early activities a tester can perform is to draw attention to ambiguous, missing, or incorrect requirements. Ambiguity frequently takes the form of words like “fast,” “intuitive,” or “user-friendly”.

These statements have no specific criteria or quantifiable results and are impossible to confirm objectively. Testers need to challenge such terms by asking:

- How fast is “fast”?

- What does “intuitive” mean in measurable terms?

- What conditions define “secure”?

Clarifying these points before development prevents costly misunderstandings later.
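
One lightweight way to support this review is to scan requirement text for vague terms before a human reads it. The sketch below is only an aid, not a replacement for judgment; the term list extends the examples above with a few assumed additions, and the requirement IDs and wording are invented.

```python
import re

# "fast", "intuitive", "user-friendly", and "secure" come from the examples above;
# the remaining terms are assumed additions.
VAGUE_TERMS = ["fast", "intuitive", "user-friendly", "secure", "easy", "quickly"]


def find_vague_terms(requirement: str) -> list[str]:
    """Return the vague terms that appear as whole words in a requirement."""
    found = []
    for term in VAGUE_TERMS:
        pattern = rf"\b{re.escape(term)}\b"
        if re.search(pattern, requirement, flags=re.IGNORECASE):
            found.append(term)
    return found


# Invented requirements for illustration.
requirements = {
    "REQ-1": "The search page must be fast and intuitive.",
    "REQ-2": "Search results must be returned within 2 seconds for 95% of queries.",
}

for req_id, text in requirements.items():
    print(req_id, find_vague_terms(text))
```

Here REQ-1 is flagged for "fast" and "intuitive", while REQ-2 passes because it already states a measurable threshold.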

Ensuring Testability

A testable requirement is one that can be objectively verified using a set of given inputs and expected results. It must also be measurable or observable; otherwise, the tester cannot judge whether it has been satisfied. If a requirement is not testable, testers should collaborate with analysts or product owners to refine it until clear validation criteria can be set.

Detecting Conflicts and Dependencies

Requirements may interact with or depend on each other. For instance, a feature that locks an account after too many failed login attempts has to be consistent with session expiration policies and authentication rules. Testers must recognize these interdependencies to design tests that address the integrated system behavior, not only the standalone functionality.

Requirement Review Participation

Testers should participate in requirements review sessions. Their inquisitive mindset helps uncover logical gaps, missing use cases, or technical limitations. Testers who attend early discussions also gain visibility into what matters most to stakeholders, and can push for requirements that are clearer or more feasible.

Read: Software Requirements Guide: BRS vs. SRS vs. FRS.

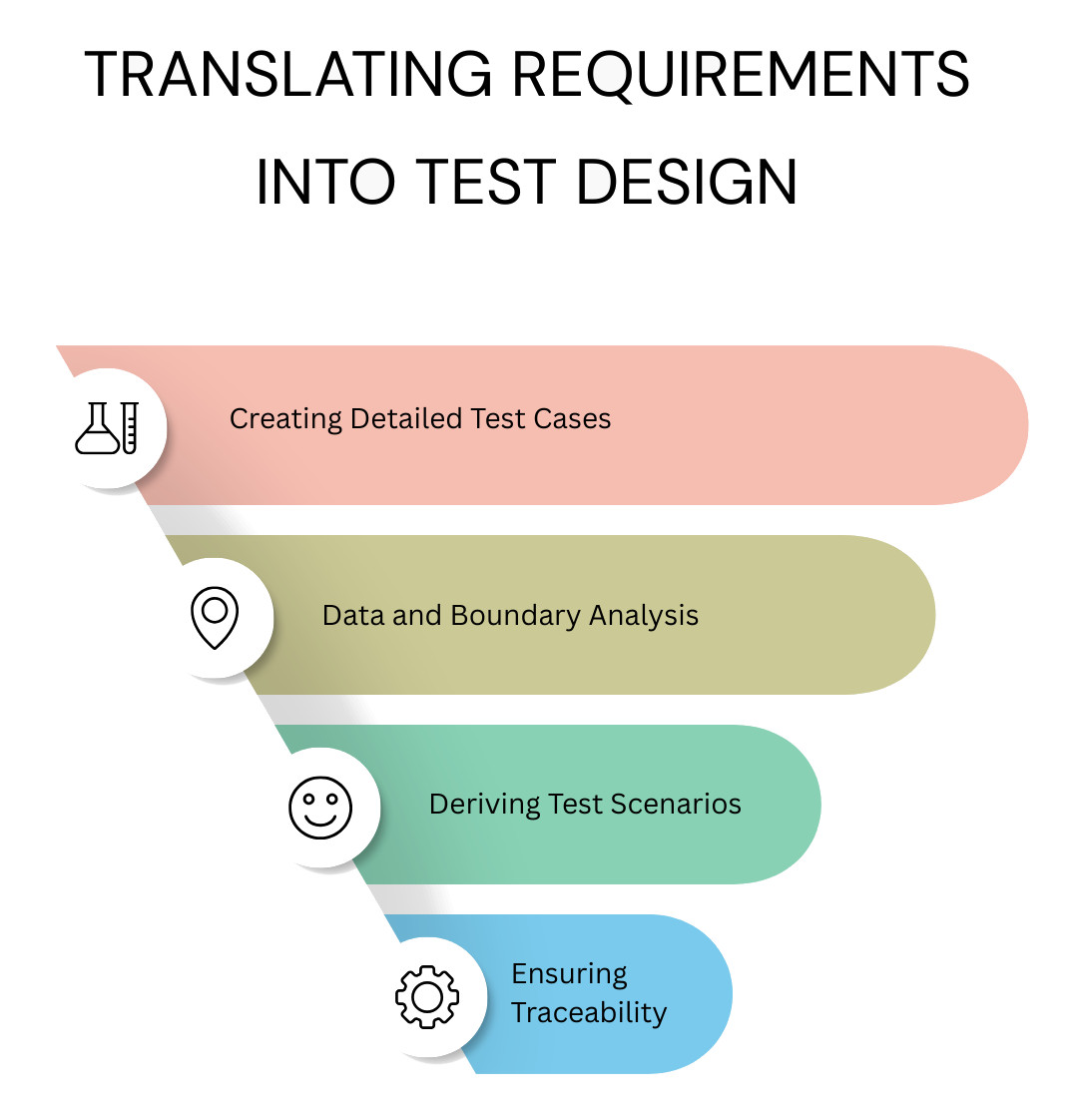

Translating Requirements into Test Design

After analysis, testers turn the clarified and validated requirements into test artifacts. This step connects the business goal with test execution.

- Deriving Test Scenarios: For each requirement, several test scenarios can be derived. A scenario is a high-level goal or purpose of testing for that feature. A proper scenario should summarize ‘what needs to be verified’ without going into the fine details. Good scenario design provides logical coverage, which includes common, uncommon, and error flows. Read: Test Scenarios vs. Test Cases: Know The Difference.

- Creating Detailed Test Cases: After the scenarios are finalized, testers write detailed test cases outlining each execution step, the test data, the preconditions, and the expected results. This converts abstract requirements into tests that can be performed again and again in a consistent manner. Each test case serves as evidence that a requirement has been met. Read: How to Write Test Cases from a User Story.

- Data and Boundary Analysis: Many requirements include some form of data constraint, such as input formats or value ranges. Testers use boundary value analysis and equivalence partitioning to create input data sets that exercise both normal and extreme cases. For instance, if a field allows values between 1 and 100, test data should include 0, 1, 100, and 101 to verify boundary behavior. Read: Test Design Techniques: BVA, State Transition, and more.

- Ensuring Traceability: Traceability ties each test case to the requirement from which it was derived. This relationship is critical for measuring coverage and conducting impact analysis when changes take place. By keeping a requirement traceability matrix (RTM), testers ensure that no feature is left untested and that all requirements are covered by validation. Read: Requirement Traceability Matrix RTM: Is it Still Relevant?
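
The boundary example above (a field that accepts 1 to 100) can be sketched in a few lines of Python. The helper names and the `is_valid` rule are hypothetical, and the offset of 10 used for the out-of-range representatives is arbitrary.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Values just outside and exactly on each edge of an inclusive integer range."""
    return [minimum - 1, minimum, maximum, maximum + 1]


def partition_representatives(minimum: int, maximum: int) -> dict[str, int]:
    """One representative value per equivalence class: below, inside, and above the range."""
    return {
        "below": minimum - 10,
        "valid": (minimum + maximum) // 2,
        "above": maximum + 10,
    }


def is_valid(value: int, minimum: int = 1, maximum: int = 100) -> bool:
    """Hypothetical rule under test: accept integers in the inclusive range."""
    return minimum <= value <= maximum


for value in boundary_values(1, 100):
    print(value, "accepted" if is_valid(value) else "rejected")

print(partition_representatives(1, 100))
```

Boundary values target the edges where off-by-one defects tend to occur, while the partition representatives confirm that each class of input is handled at least once.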

Managing Changing Requirements

In contemporary development models such as Agile and DevOps, requirements are not static; they change as teams gather user feedback, observe the market and competitors, and adopt new technology. This dynamism presents testers with both challenges and opportunities.

- Embracing Change as a Constant: Rather than resisting change, testers should prepare for it with flexible test strategies. Continuous adaptation keeps testing in sync with current goals. This approach requires coordinated work, rapid revalidation, and automation to handle frequent changes efficiently.

- Version Control and Baselines: Keeping requirement specifications under version control makes it clear what has changed, when, and why. Maintaining baselines allows teams to compare historical versions and see how a feature has evolved. For QA teams, this enables focused re-testing rather than all-encompassing regression.

- Impact Analysis: When a requirement changes, testers must assess how it affects the existing test suite by mapping all test cases linked to the modified requirement and reviewing dependencies with other functionalities. Based on this analysis, outdated tests should be updated or removed. Effective impact analysis saves time, reduces duplication, and ensures the test suite remains relevant.

- Regression Testing Strategy: Frequent requirement changes can introduce unintended regressions. A strong regression test suite validates that previously implemented features still function as required after subsequent changes. Prioritizing by risk and business impact maintains quality without the overhead of re-running every test.

- Collaboration During Requirement Evolution: Testers, developers, and product owners need to maintain a shared understanding as the requirements evolve. Testers act in a consulting capacity, confirming feasibility, raising testability concerns, and aligning acceptance criteria with what can realistically be achieved.
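
The impact analysis step described above can be sketched as a lookup over traceability links. The test case IDs, requirement IDs, and dependency map below are invented for illustration; in practice this data would come from a test management tool or an RTM.

```python
# Hypothetical traceability links: test case ID -> requirement IDs it verifies.
TEST_LINKS = {
    "TC-01": {"REQ-LOGIN"},
    "TC-02": {"REQ-LOGIN", "REQ-LOCKOUT"},
    "TC-03": {"REQ-SESSION"},
    "TC-04": {"REQ-REPORTS"},
}

# Hypothetical dependencies: requirement -> requirements that rely on it.
DEPENDENTS = {"REQ-LOGIN": {"REQ-SESSION"}}


def impacted_tests(changed: str) -> dict[str, list[str]]:
    """Split affected tests into those linked directly and those reached via a dependency."""
    direct = sorted(tc for tc, reqs in TEST_LINKS.items() if changed in reqs)
    related = DEPENDENTS.get(changed, set())
    indirect = sorted(
        tc for tc, reqs in TEST_LINKS.items()
        if reqs & related and tc not in direct
    )
    return {"review_and_update": direct, "rerun_for_regression": indirect}


print(impacted_tests("REQ-LOGIN"))
```

Separating directly linked tests from dependency-related ones reflects the two actions in the text: the first group may need rewriting, while the second mainly needs to be rerun to catch regressions.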

Read: All-Inclusive Guide to Test Case Creation in testRigor.

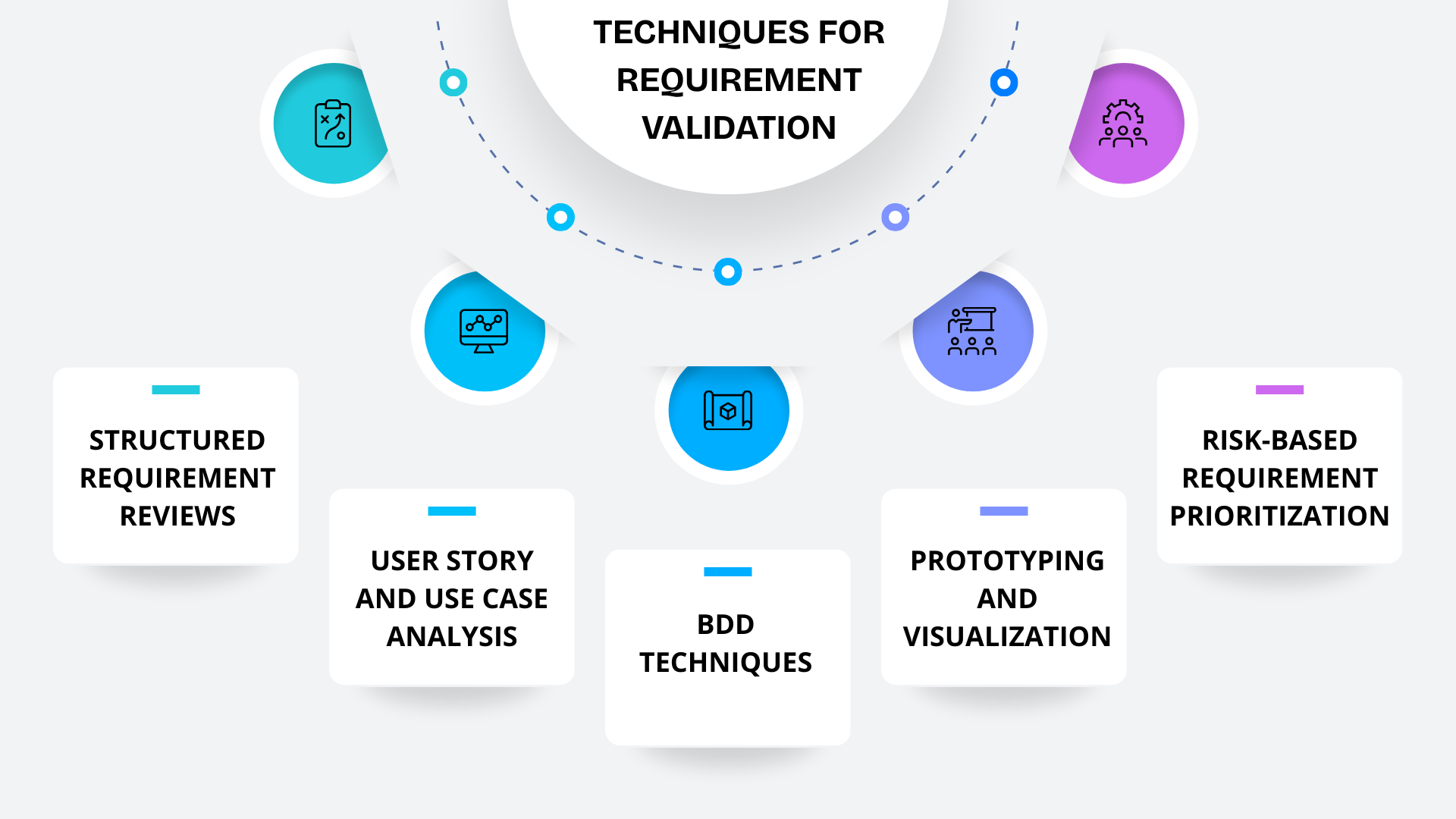

Techniques for Requirement Validation

Validation makes sure that the requirements actually represent what stakeholders want, are technically possible, and can be tested. Testers use several analytical and collaborative techniques for this.

Structured Requirement Reviews

A formal requirement review allows testers, developers, and business analysts to examine every requirement collectively. Testers evaluate:

- Coverage: All user stories and scenarios are fully described, with no details missing.

- Coherence: No two requirements in the specification conflict with each other.

- Traceability: Each requirement can be traced through to a test case that verifies it.

- Feasibility: Each requirement can actually be implemented within technical, time, and resource constraints.

This proactive approach prevents misinterpretation and reduces defects that would otherwise surface much later in the cycle.
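
The traceability check from the review list can be partly automated. Below is a minimal sketch of a requirement traceability matrix held as a dictionary; the requirement and test case IDs are hypothetical.

```python
# Hypothetical requirement IDs and traceability matrix: requirement -> linked test cases.
REQUIREMENTS = ["REQ-LOGIN", "REQ-LOCKOUT", "REQ-SESSION", "REQ-EXPORT"]
RTM = {
    "REQ-LOGIN": ["TC-01", "TC-02"],
    "REQ-LOCKOUT": ["TC-02"],
    "REQ-SESSION": [],
}


def uncovered(requirements: list[str], rtm: dict[str, list[str]]) -> list[str]:
    """Requirements with no linked test case, including ones missing from the matrix."""
    return [req for req in requirements if not rtm.get(req)]


def coverage_percent(requirements: list[str], rtm: dict[str, list[str]]) -> float:
    """Share of requirements that have at least one linked test case."""
    if not requirements:
        return 0.0
    covered = len(requirements) - len(uncovered(requirements, rtm))
    return 100.0 * covered / len(requirements)


print("uncovered:", uncovered(REQUIREMENTS, RTM))
print("coverage %:", coverage_percent(REQUIREMENTS, RTM))
```

Note that this only shows whether a link exists. Whether the linked test actually verifies the requirement still needs human review.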

User Story and Use Case Analysis

Splitting requirements into user stories or use cases gives testers a better understanding of how the system should behave from a user’s point of view. By analyzing alternate flows and exception paths, testers can anticipate corner cases that may be missed in development. This user-centered approach keeps testing consistent with customer needs and supports higher satisfaction. Read: How to Write Test Cases from a User Story.

Behavior-Driven Development (BDD) Techniques

In Behavior-Driven Development, testers, developers, and business stakeholders work together to define requirements using a structured language called Gherkin. This approach promotes shared understanding between teams and removes ambiguity by explicitly stating preconditions, actions, and expected outcomes. BDD reinforces alignment by converting requirements into living documentation that can be used for both development and testing. Read: What is Behavior Driven Development (BDD)? Everything You Should Know.
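
Gherkin scenarios are normally written in feature files and bound to step code by a BDD tool. The plain Python sketch below only mirrors the Given/When/Then structure in comments to show how preconditions, actions, and outcomes stay explicit. The `Account` class and the three-attempt lockout threshold are assumptions for illustration.

```python
from dataclasses import dataclass

MAX_FAILED_ATTEMPTS = 3  # assumed threshold, not taken from any real policy


@dataclass
class Account:
    """Hypothetical account model used only for this example."""
    failed_attempts: int = 0
    locked: bool = False

    def record_failed_login(self) -> None:
        self.failed_attempts += 1
        if self.failed_attempts >= MAX_FAILED_ATTEMPTS:
            self.locked = True


def test_account_locks_after_third_failed_attempt() -> None:
    # Given an account with two failed login attempts
    account = Account(failed_attempts=2)
    # When the user fails to log in once more
    account.record_failed_login()
    # Then the account is locked
    assert account.locked


test_account_locks_after_third_failed_attempt()
print("scenario passed")
```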

Prototyping and Visualization

Prototypes and wireframes provide visual context when text requirements are hazy. Testers can look at the mock interface to see if there are any missing workflows, inconsistencies in layout, or usability problems before implementation. Visual validation provides added comprehension and aids in accessibility and usability test planning.

Risk-Based Requirement Prioritization

Some requirements are more important than others, and testers should evaluate the risk based on the business value of the functionality, the likelihood of failure, and the potential impact of defects. By considering these factors, they make decisions about which parts of a system need deeper or earlier testing. This, in turn, ensures that effort is spent where it can be most effective and that resources are used most efficiently while providing feedback as quickly as possible.
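
As a rough sketch of this prioritization, the example below scores each requirement by multiplying the three factors named above and sorts the backlog by score. The 1 to 5 scales, the multiplicative formula, and the sample requirements are assumptions; teams often use their own weighting.

```python
from dataclasses import dataclass


@dataclass
class RequirementRisk:
    """Hypothetical risk record; each factor is rated from 1 (low) to 5 (high)."""
    req_id: str
    business_value: int
    failure_likelihood: int
    defect_impact: int

    @property
    def score(self) -> int:
        # Assumed scoring model: the product of the three factors.
        return self.business_value * self.failure_likelihood * self.defect_impact


def prioritize(items: list[RequirementRisk]) -> list[RequirementRisk]:
    """Order requirements so the highest-risk ones are tested first."""
    return sorted(items, key=lambda item: item.score, reverse=True)


backlog = [
    RequirementRisk("REQ-REPORTS", 2, 2, 2),
    RequirementRisk("REQ-PAYMENT", 5, 3, 5),
    RequirementRisk("REQ-LOGIN", 4, 2, 4),
]

for item in prioritize(backlog):
    print(item.req_id, item.score)
```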

Common Challenges in Working with Requirements

Even with well-defined processes, testers often face challenges that prevent them from achieving clarity or alignment. Typically, these arise from communication gaps, shifting priorities, and differences in how requirements are documented or understood. Identifying and overcoming these challenges early in development is necessary to ensure product quality and effective testing.

- Ambiguous Language: Vague wording leaves definition and validation open to interpretation, so testers need to ask for quantifiable, operational definitions.

- Incomplete or Missing Requirements: Crucial information, such as error handling or alternate flows, might be missing. Testers need to ask questions and make sure the resulting updates are tracked.

- Frequent Changes and Scope Creep: Constant changes can disrupt test planning, so continuous re-assessment and strong traceability are required.

- Conflicting Stakeholder Expectations: Competing priorities can affect requirement clarity, and testers must help balance these viewpoints without compromising quality.

- Lack of Communication Channels: Misunderstandings result from poor communication, so testers need to promote collaboration through documentation, reviews, and clarification sessions.

How testRigor Enhances Requirement-Based Testing

testRigor makes it easier for testers to work with requirements, reducing ambiguity, simplifying test creation, and aligning testing activities directly with business expectations. Since testRigor allows tests to be written entirely in plain English, requirement statements can be translated into automated tests almost word-for-word, minimizing the interpretation errors that typically arise when converting requirements into scripts.

    enter "[email protected]" into "Email"
    enter "Password123" into "Password"
    click "Login"
    check that page contains "Dashboard"

This approach removes the need for brittle locators, technical scripting knowledge, or framework setup. Instead, testers focus on validating the requirement itself, not managing automation code. This clarity supports stronger traceability as each requirement maps directly to a readable automated test, making audits, reviews, and regression coverage easier to maintain.

Read: testRigor Locators.

Conclusion

A solid testing process starts with well-defined, testable requirements; they influence every decision a tester makes and determine whether the end product really fulfills user and business expectations. When testers actively analyze, clarify, and validate requirements, they reduce risk, prevent defects early, and ensure complete, meaningful coverage. With tools like testRigor, organizations can transform requirements into automated tests directly and confidently, improving precision and product reliability while accelerating time to market.
