Integrating AI into DevOps Pipelines: A Practical Guide


Artificial Intelligence (AI) and DevOps are redefining the software landscape today like never before. DevOps introduced automation, velocity, and collaboration to development and operations. AI adds the elements of prediction, learning, and adaptation. Together, they constitute AI DevOps, a smarter, self-improving means of building, testing, deploying, and managing applications.

This is not just about adding a chatbot or some analytics to your pipeline. It is about teaching your DevOps system to think: to monitor logs and metrics, to forecast build failures before they happen, to automate efficient resource provisioning, and to adapt based on past patterns without a human explicitly training it.

As companies shift toward continuous everything (integration, testing, deployment, and monitoring), the pressure to ship new code faster without compromising stability increases.


Why Integrating AI into DevOps Pipelines Matters

Traditional automation can’t keep up as development cycles speed up and systems grow more complex. By adding intelligence to DevOps, companies shift from reactively executing tasks toward proactively optimizing systems that learn what to do next: predict, adapt, and improve constantly.

- Moving Beyond Static Automation: DevOps AI brings adaptive learning systems. AI doesn’t simply do what it’s told to do; it looks, listens, learns, and responds accordingly.

- Driving Predictive Operations: With AI for DevOps, your monitoring is predictive. Rather than reacting to incidents, AI spots outliers in advance and recommends preventative measures.

- Enhancing Decision-Making: AI improves the intelligence of humans in DevOps. It doesn’t replace the engineers; it equips them with information. AI discovers hidden risk in your releases, uncovers coverage gaps in your tests, and helps you prioritize the backlog item that adds the most stability to your product.

- Reducing Cognitive Load: An engineer juggling numerous environments, hundreds of metrics, and constant alerts will miss something. AI sorts through the noise, triages alerts automatically, and surfaces only what really matters. This leads to calmer, more focused DevOps teams.

Learn more about what DevOps is

Core Concepts Behind AI for DevOps

If you want to appreciate the value of AI DevOps, it is helpful to understand its underlying principles. AI doesn’t simply improve DevOps; it turns it into a system that is self-learning, predictive, and that continually gets better.

What Is AI DevOps?

AI DevOps refers to the combination of AI and ML with DevOps, resulting in smarter, data-driven automation. It gives systems the ability to examine data, learn from experience, and make predictive decisions throughout the software delivery lifecycle. When automation is combined with this kind of intelligence, teams can turn static processes into adaptive ones. For example, an AI model can forecast build failures prior to deployment and then automatically execute remediation actions.

Read more about AI In Software Testing

The Evolution Path

The journey from DevOps to intelligence has been iterative and transformational. It started with scripted automation, progressed to orchestrated pipelines, and is now at AI DevOps, where pipelines can learn, adapt, and optimize themselves through continuous feedback loops and generative techniques.

DevOps 1.0 – Scripted Automation

The initial phase of DevOps was based on predefined scripts and static workflows whose sole purpose was to perform repetitive tasks reliably, without much flexibility. These scripts could automate deployment, but they couldn’t interpret data or recover from unexpected failures.

DevOps 2.0 – Orchestrated Pipelines

The second stage introduced tools such as Jenkins, GitLab, and Azure DevOps for continuous integration and delivery. Although orchestration improved speed and uptime, it still required a high level of human intervention for fine-tuning and troubleshooting.

AI DevOps – Autonomous Pipelines

In the advanced stage, the pipeline becomes autonomous. It learns from actions and feedback, and it can leverage Generative AI DevOps to generate tests, find anomalies, and even self-heal without human intervention.

Read more about DevOps Testing Tools

Pillars of AI Integration

- Data: Data is an integral part of any AI-driven DevOps solution, as it feeds the machine learning systems and enables them to analyze and predict.

- Learning: Machine Learning algorithms learn from that data, discovering hidden patterns and relationships.

- Action: Once discoveries are made, AI systems initiate automated interventions or suggest human-vetted interventions.

- Feedback: Learning cycles close with feedback loops that send real-world results back into AI models. As more and more new data is processed, this iterative process of refinement leads to a better accuracy of the model and also allows us to improve our pipeline on an ongoing basis.

Machine Learning Foundations for DevOps AI

Machine Learning (ML) is at the core of enterprise AI DevOps, fueling intelligent automation and predictive insight. ML translates raw operational data into actionable intelligence that leads to smarter decisions throughout the pipeline. By using historical patterns to adjust its models, ML can help DevOps systems evolve from mere automation to adaptive and self-improving ecosystems.

Read more about Machine Learning Models Testing Strategies

Understanding Machine Learning in Pipelines

Machine Learning (ML) enables DevOps to learn autonomously. Rather than fixed rules, ML models learn from historical data such as builds, test results, incidents, and performance metrics. Once trained, they can be used to predict or classify future behavior.

Types of ML Used in AI DevOps

- Supervised Learning: Supervised learning is used to predict various outcomes, like a build failure, the severity of a defect, or the risk of deployment using labeled data.

- Unsupervised Learning: Unsupervised learning discovers hidden patterns without labels, for example by grouping similar errors or performance metrics into clusters.

- Reinforcement Learning: Reinforcement learning uses feedback over time to optimize resource allocation or deployment strategies.

- Natural Language Processing (NLP): NLP processing of logs, tickets, and commit messages is used to discover patterns and insights.
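To make the unsupervised case concrete, here is a minimal sketch that flags unusual build durations without any labels. It uses a plain z-score as a stand-in for a real clustering or anomaly-detection model; the function name `find_anomalies`, the threshold, and the sample data are assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, z_threshold=2.0):
    """Return the indices of values whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > z_threshold]


# Hypothetical build durations in minutes; one build is clearly slower.
build_minutes = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 31.5, 12.2]
anomalies = find_anomalies(build_minutes)
print(anomalies)
```

A production system would likely use a richer model over many metrics, but the idea is the same: learn what normal looks like from the data itself and flag what deviates.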

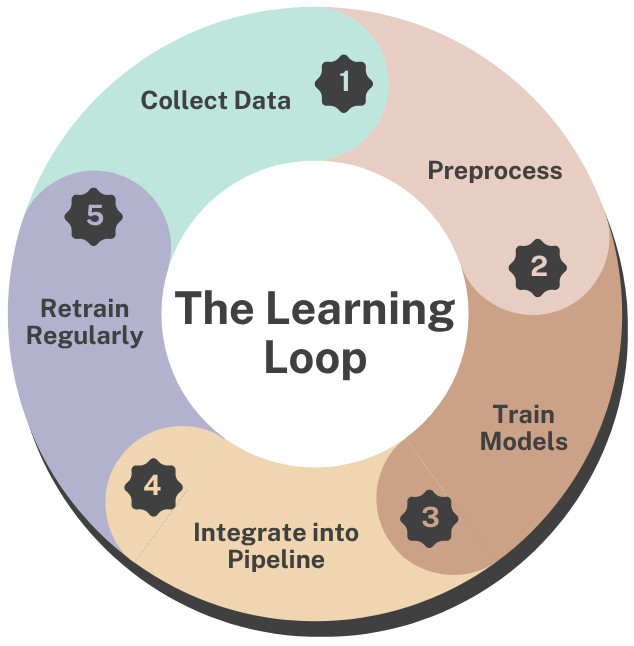

The Learning Loop

- Collect Data → Collect data from CI/CD systems, monitoring solutions, and Git commits as the basis of model training.

- Preprocess → Refine raw data through cleaning, statistical normalization, and feature extraction to prepare it for proper Machine Learning.

- Train Models → Leverage libraries such as TensorFlow and PyTorch to build models that predict, classify, or optimize outcomes for the pipeline.

- Integrate into Pipeline → Integrate trained models into tools like Jenkins, GitHub Actions, or Azure DevOps for immediate decisions.

- Retrain Regularly → Retrain models regularly to account for changes in data, reduce model drift, and ensure sustained success.

This cycle keeps the system aligned with evolving infrastructure and codebases. Read more about Machine Learning to Predict Test Failures
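The loop above can be sketched end to end with a deliberately tiny model. This is an illustration only: the record fields (`module`, `failed`), the smoothed per-module failure rate, and all function names are assumptions, and a real pipeline would use a proper ML library for the training step.

```python
from collections import defaultdict


def preprocess(records):
    """Drop incomplete records and normalize module names."""
    return [
        (r["module"].strip().lower(), bool(r["failed"]))
        for r in records
        if r.get("module") and r.get("failed") is not None
    ]


def train(samples):
    """Estimate a failure rate per module with Laplace smoothing."""
    counts = defaultdict(lambda: [0, 0])  # module -> [failures, total]
    for module, failed in samples:
        counts[module][0] += int(failed)
        counts[module][1] += 1
    return {m: (f + 1) / (n + 2) for m, (f, n) in counts.items()}


def predict(model, module, default=0.5):
    """Return the estimated failure rate, or a neutral default if unseen."""
    return model.get(module.strip().lower(), default)


# Collect: hypothetical build outcomes gathered from CI history.
history = [
    {"module": "payments", "failed": True},
    {"module": "payments", "failed": True},
    {"module": "Payments ", "failed": False},
    {"module": "search", "failed": False},
    {"module": None, "failed": True},  # incomplete record, dropped
]
model = train(preprocess(history))

# Retrain: new outcomes join the history and the model is rebuilt.
history.append({"module": "search", "failed": False})
model = train(preprocess(history))
```

Rebuilding the model from the full history on every retrain is the simplest choice; larger systems typically retrain on a schedule or when accuracy drops.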

Building the AI-Enhanced DevOps Architecture

To implement an AI-powered DevOps architecture, intelligence should be embedded at every stage of the software delivery process. Every layer of the pipeline is critical, from collecting and transforming data to training models, interpreting results, and continually improving performance. The data sources and ML tools described above, working in harmony, form the base of a self-learning, adaptable, and more efficient AI DevOps ecosystem. Read more about The Role of DevOps in QA Testing

Data Collection Layer

This forms the fundamental building block of any AI-driven pipeline – capturing data across disparate sources and stitching it together.

- Version Control Systems (Git) – commits, branches, code diffs.

- CI/CD Tools – build status, artifact metadata, logs.

- Testing Tools – results, durations, flaky tests.

- Monitoring Systems – metrics, traces, alerts.

The more unified your data lake, the more accurate your AI DevOps predictions.

Feature Engineering and Data Preparation

Once you have gathered your raw data, it needs to be put into a more organized, usable form. This includes deriving features such as:

- Build frequency, test pass rate, and code churn.

- CPU utilization trends and latency spikes.

- Text vectorization for logs and error messages.
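As a rough sketch of what this preparation step might look like, the snippet below derives two numeric features from a build record and turns a log line into a simple bag-of-words vector. The field names (`tests_passed`, `lines_added`, and so on) and the vocabulary are hypothetical.

```python
import re
from collections import Counter


def build_features(build):
    """Derive the test pass rate and code churn from one build record."""
    total = build["tests_passed"] + build["tests_failed"]
    return {
        "test_pass_rate": build["tests_passed"] / total if total else 0.0,
        "code_churn": build["lines_added"] + build["lines_deleted"],
    }


def vectorize(text, vocabulary):
    """Count how often each vocabulary word appears in the text."""
    tokens = Counter(re.findall(r"[a-z]+", text.lower()))
    return [tokens[word] for word in vocabulary]


build = {"tests_passed": 45, "tests_failed": 5, "lines_added": 120, "lines_deleted": 30}
features = build_features(build)
log_vector = vectorize("ERROR: connection timeout, retry timeout", ["error", "timeout", "oom"])
```

Counting words is the most basic form of text vectorization; TF-IDF or learned embeddings are common next steps once the basic pipeline works.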

Model Training Layer

ML models are trained for specific DevOps problems in this layer. Regression models forecast build times, classification models predict build success or failure, and clustering models uncover anomalies. The training step tunes each model’s predictions, which ultimately affects pipeline performance and reliability.
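For the classification case, a minimal sketch is a logistic regression trained with plain gradient descent. It is written in pure Python here so it runs anywhere; in practice a library such as scikit-learn would do this work. The two features (code churn in thousands of lines, fraction of tests that failed in the previous run) and the toy dataset are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression weights with batch gradient descent."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(X, y):
            score = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(score) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_failure(model, features):
    """Return the estimated probability that a build fails."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


# Hypothetical history: [churn in kLOC, previous failed-test fraction]; 1 = build failed.
X = [[0.1, 0.0], [0.2, 0.05], [0.3, 0.0], [1.5, 0.2], [2.0, 0.3], [1.8, 0.25]]
y = [0, 0, 0, 1, 1, 1]
model = train_logistic(X, y)
```

With this toy data, large changes following a shaky test run score as high risk and small, clean changes score as low risk.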

Inference Layer

The inference layer integrates trained AI models into active CI/CD pipelines, allowing for real-time decisions. For example, if a model predicts a likely build failure, the pipeline can automatically pause the process and alert the AI DevOps Engineer for review. This immediate feedback enhances operational efficiency and reduces deployment risks.
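The pause-and-alert behavior described here can be sketched as a small gate function that a pipeline step calls with the model's output. The names `GateDecision` and `gate_build` and the 0.7 threshold are illustrative assumptions, not part of any CI tool.

```python
from dataclasses import dataclass


@dataclass
class GateDecision:
    proceed: bool
    reason: str


def gate_build(failure_probability, pause_threshold=0.7):
    """Decide whether the pipeline continues or pauses for human review."""
    if not 0.0 <= failure_probability <= 1.0:
        raise ValueError("failure_probability must be between 0 and 1")
    if failure_probability >= pause_threshold:
        return GateDecision(
            proceed=False,
            reason=f"predicted failure risk {failure_probability:.0%} is at or above "
                   f"{pause_threshold:.0%}; paused for review",
        )
    return GateDecision(proceed=True, reason="predicted risk below threshold")


decision = gate_build(0.85)
print(decision.proceed, decision.reason)
```

In a CI job, a script like this would typically exit with a non-zero status when `proceed` is false, so that the pipeline stops and the existing notification rules alert the engineer.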

Feedback and Optimization

This layer makes sure the system keeps learning and improving by feeding post-deployment results back into the model. Periodic retraining and threshold updates improve accuracy, allowing the pipeline to adapt to new conditions.
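One simple way to decide when to retrain is to track how often recent predictions matched real outcomes. The sketch below keeps a rolling window of results and signals retraining when accuracy falls below a floor; the class name, window size, and accuracy floor are assumptions chosen for illustration.

```python
from collections import deque


class FeedbackMonitor:
    """Track recent prediction outcomes and flag when retraining is due."""

    def __init__(self, window=50, min_accuracy=0.8):
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted_failure, actual_failure):
        """Store whether the prediction matched what actually happened."""
        self.outcomes.append(predicted_failure == actual_failure)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        """Only judge the model once the window is full."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


monitor = FeedbackMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(True, True), (False, False), (True, True), (False, False)]:
    monitor.record(predicted, actual)
```

Waiting for a full window avoids retraining on the strength of one or two early misses.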

Step-by-Step Guide to Implementing AI in CI/CD

- Step 1: Define Business and Technical Objectives: Begin by pinpointing what you want to achieve with AI for DevOps (such as lowering failure rates, cloud cost optimization, or faster testing) and work toward short- and long-term goals. Set quantifiable KPIs such as build success rate, MTTD, MTTR, and deployment frequency to accurately measure progress.

- Step 2: Establish Data Foundations: AI performance relies on data, so make sure to centralize log and metric collection. Maintain consistency in tests and deployments, while securely storing data in accordance with company and legal obligations.

- Step 3: Choose AI Tools and Frameworks: Select tools that align with your DevOps setup for integration, such as Jenkins, GitLab CI, Azure DevOps, or GitHub Actions. Leverage Machine Learning and/or deep learning frameworks, such as TensorFlow, PyTorch, or scikit-learn, for modeling, while using tools like Elasticsearch, Prometheus, or Datadog for data ingestion.

- Step 4: Build ML Models: Build Machine Learning models around important DevOps tasks such as predictive build analysis, smart test prioritization, failure correlation, and resource utilization prediction. Each model should explicitly target a particular pipeline requirement or performance problem.

- Step 5: Integrate Models into the Pipeline: Integrate AI inference across the pipeline phases so decisions are made in real time. With Git AI, the pipeline can analyze the risk of a commit before the build, evaluate test coverage after the test run, and predict deployment risk before a release.

- Step 6: Automate Feedback Loops: Feed telemetry and outcome data back into the AI model’s training set after every release. Such continuous feedback enables the system to learn from evolving situations and become increasingly accurate.

- Step 7: Monitor and Iterate: Monitor the performance of any AI model you develop using metrics for accuracy, precision, and recall, as well as false positives and the deployment speed.
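The model-quality metrics named in Step 7 can be computed directly from predictions and real outcomes. This is a minimal sketch; the function name and the sample data are assumptions, and `True` here means "build failed".

```python
def classification_metrics(predicted, actual):
    """Compute accuracy, precision, recall, and false positives for boolean labels."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if not p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "false_positives": fp,
    }


# Hypothetical predictions versus what actually happened.
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]
metrics = classification_metrics(predicted, actual)
```

Precision tells you how often a predicted failure was real (low precision means noisy alerts), while recall tells you how many real failures the model caught.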

How Git AI Strengthens the Pipeline

Git AI adds a layer of intelligence to version control by analyzing commit practices, code complexity, and developer behavior in order to achieve better collaboration and code quality. It turns repositories from static code storage into active participants in the DevOps infrastructure, driving smarter business decisions and more resilient software.

- Predictive Code Review: Git AI finds security vulnerabilities, business logic errors, and code smells in commits before they merge, keeping your codebase clean and safe.

- Automated Pull-Request Insights: It inspects code changes in your CI/CD process to determine the execution priority of pull requests according to the impact and risk of merging.

- Commit-Based Test Selection: Git AI detects and triggers just the right tests for recent code changes, saving test execution time and resources.

- AI-Assisted Documentation: It automatically condenses your commit messages into clean summaries to improve the traceability and comprehension of your project.
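Commit-based test selection can be illustrated with a static mapping from source paths to tests. A learned model would infer this mapping from history; the hand-written prefix map, the file paths, and the function name below are assumptions used only to show the shape of the decision.

```python
def select_tests(changed_files, test_map, full_suite):
    """Pick the tests mapped to the changed paths; fall back to everything if unsure."""
    selected = set()
    for path in changed_files:
        matched = [tests for prefix, tests in test_map.items() if path.startswith(prefix)]
        if not matched:
            # Unknown file: be conservative and run the full suite.
            return sorted(full_suite)
        for tests in matched:
            selected.update(tests)
    return sorted(selected)


# Hypothetical mapping from source directories to test files.
test_map = {
    "src/payments/": ["tests/test_payments.py"],
    "src/search/": ["tests/test_search.py", "tests/test_ranking.py"],
}
full_suite = ["tests/test_payments.py", "tests/test_search.py", "tests/test_ranking.py"]

to_run = select_tests(["src/payments/api.py"], test_map, full_suite)
```

Falling back to the full suite for unmapped files trades some speed for safety, which is usually the right default until the selection logic has earned trust.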

Generative AI in DevOps

Generative AI DevOps uses LLMs and generative algorithms to create, alter, or improve different elements of the DevOps pipeline. It is the creative arm of automation. Rather than handwriting YAML or crafting intricate Terraform scripts, engineers can describe what they want in natural language. The AI then generates, tests, and even applies those configurations.

- Script Generation: Automatically creates CI/CD configs, container manifests, or monitoring queries to ease setup and deployment.

- Test Authoring: Turns natural-language descriptions into ready-to-use test scripts for your test framework, speeding up automation.

- Deployment Planning: Recommends the best rollout actions and rollback conditions to achieve smooth and dependable releases.

- Incident Summarization: Transforms complex logs into clear, prioritized incident summaries for quicker issue resolution.

Read more about Generative AI in Software Testing

Why Azure AI DevOps Matters

With services like Azure Machine Learning, Azure Pipelines, Application Insights, and Azure Monitor, organizations can infuse AI intelligence into their CI/CD lifecycle without having to integrate a handful of discrete tools separately.

Key capabilities include:

- Predictive analytics over build and release histories enables predictions on what might go wrong, advancing deployment reliability.

- Automated AI-powered anomaly detection in production telemetry quickly recognizes abnormal system behavior.

- Git AI integrations for Azure Repos add intelligent analyzers that detect risk and help developers write better code.

AI DevOps Workflow in Azure

A simplified Azure AI DevOps workflow looks like this:

- Developers commit code to Azure Repos or GitHub.

- A Git AI model analyzes commit metadata to assess change risk.

- Azure Pipelines triggers a build and runs an ML model to predict build success likelihood.

- Automated test prioritization selects test subsets using historical data.

- Azure Machine Learning re-trains and deploys updated models if performance thresholds change.

- Application Insights continuously feeds operational data back into training pipelines.

Read more about CI/CD Series: testRigor and Azure DevOps

Challenges, Risks, and Ethical Dimensions

- Data Quality: Incomplete, biased, or noisy logs can lead to unreliable models. Proper data hygiene practices (validation, labeling, and anomaly filtering) are non-negotiable.

- Model Drift and Maintenance: As codebases evolve, old training data loses relevance. Without continuous retraining, predictive accuracy decays. Incorporating automatic retraining within CI/CD (for instance, via Azure AI DevOps) helps mitigate this.

- Integration Complexity: Integrating Machine Learning into CI/CD requires multidisciplinary expertise, from Python model deployment to container orchestration. Misalignment between DevOps and data teams can delay adoption.

- Human Trust and Transparency: Engineers may resist AI recommendations if the reasoning is opaque. Hence, explainable AI should accompany DevOps pipelines, enabling teams to understand why an alert or decision was made.

- Ethical and Security Considerations: AI systems must manage bias, protect sensitive data through anonymization, and safeguard models from tampering or malicious attacks.
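As one small example of protecting sensitive data, logs can be scrubbed before they reach a training set. The sketch below masks email addresses and IPv4 addresses with regular expressions; the patterns are deliberately simple assumptions and would need extending for other identifiers such as tokens or usernames.

```python
import re

# Simplified patterns; real log scrubbing usually covers more identifier types.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def anonymize(line):
    """Replace email and IPv4 addresses in a log line with placeholders."""
    line = EMAIL.sub("<email>", line)
    return IPV4.sub("<ip>", line)


clean = anonymize("login failed for alice@example.com from 10.0.0.12")
print(clean)
```

Running this at ingestion time, before data is stored centrally, keeps raw identifiers out of both the data lake and the models trained on it.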

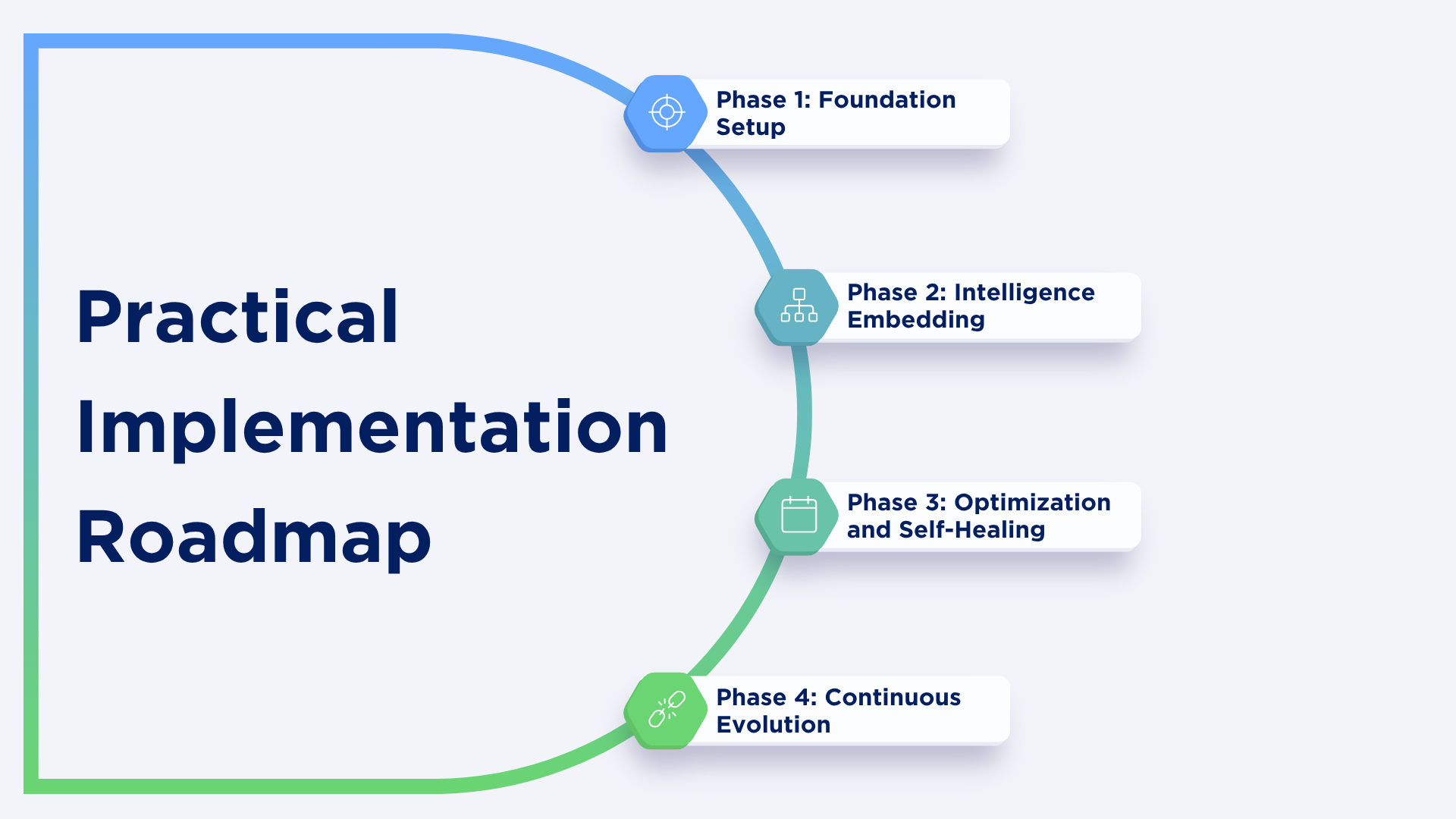

Practical Implementation Roadmap

Phase 1: Foundation Setup

Start by collecting logs, metrics, and events in a single place for centralized observability. Use cloud-native services, like Azure AI DevOps or Kubernetes-integrated AIOps, and enable Git AI for intelligent insights early in development.

Phase 2: Intelligence Embedding

Embed predictive ML models into your pipelines so you can forecast build failures or anomalies. Add Generative AI DevOps capabilities to auto-configure or generate tests and create a continuous feedback loop for model retraining.

Phase 3: Optimization and Self-Healing

Apply reinforcement learning to make pipelines self-optimizing and adaptive. Implement automated rollbacks that allow AI to identify problematic deployments and initiate a rapid recovery, and track KPIs such as MTTR and deployment velocity to confirm the improvement.
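A rollback trigger does not have to start with reinforcement learning. The sketch below uses a plain rule instead (compare the post-deployment error rate with the baseline) and computes MTTR from incident timestamps. The function names, the 2x tolerance, and the 1% floor are assumptions; a learned policy could later replace the fixed thresholds.

```python
from statistics import mean


def should_roll_back(baseline_error_rate, current_error_rate,
                     tolerance=2.0, min_error_rate=0.01):
    """Roll back when errors pass an absolute floor and a multiple of the baseline."""
    return (current_error_rate >= min_error_rate
            and current_error_rate > baseline_error_rate * tolerance)


def mean_time_to_recovery(incidents):
    """Average recovery time for (detected_minute, recovered_minute) pairs."""
    return mean(recovered - detected for detected, recovered in incidents)


# Hypothetical error rates before and after a deployment.
rollback = should_roll_back(baseline_error_rate=0.005, current_error_rate=0.04)

# Hypothetical incidents, as minutes since the start of the observation period.
mttr = mean_time_to_recovery([(0, 30), (100, 150)])
```

The absolute floor prevents rollbacks when a near-zero baseline makes any tiny error rate look like a large relative jump.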

Phase 4: Continuous Evolution

Build a shared catalog of AI models, their decision factors, and the rationale behind their outputs to increase transparency. Foster explainability through graphical dashboards, and continue to broaden AI integration into other areas, including testing (both QA and performance) as well as security compliance.

Wrapping Up

Bringing AI into DevOps pipelines represents the next generation of intelligent automation, taking systems from merely executing instructions to learning and optimizing themselves. With a combination of Machine Learning, Git AI, Generative AI DevOps, and Azure AI DevOps, businesses can achieve faster, more reliable software delivery that adapts to their specific needs. The future of DevOps is continuous, intelligent automation that is insight-driven, resilient, and always learning.
