What is Model Context Protocol (MCP)?

“AI is not just a tool; it’s a partner for human creativity” – Satya Nadella.

We have access to artificial intelligence in its different forms – voice assistants, recommendation engines, smart home devices, chatbots, search engines, facial recognition, and more. These creative ways of harnessing the different flavors of AI, be it through machine learning (ML), natural language processing (NLP), or neural networks, are possible thanks to the human mind’s access to vast stores of information. If we want our technology to be as adept as human intelligence, won’t it need access to equally large stores of information? After all, just writing algorithms for AI models won’t help unless you train them with data.

So the natural question is, “How do these AI systems access data?”

You might be wondering where MCP fits into all this. Fret not. You’ll understand these concepts in a bit.

How do AI Models Access Data?

You have probably used AI models in some capacity or another: large language models (LLMs) like ChatGPT or Gemini, computer vision models for image recognition, or robotic systems that need real-time sensor data. All these models are trained with algorithms and data to present anticipated outcomes.

In the absence of a widely adopted standard, current AI data access is handled through a patchwork of methods, often with weak security and little standardization.

- Direct Database Access: AI models or applications might directly connect to databases using database connectors or drivers. While this approach can be efficient, it poses significant security risks if not properly implemented.

- API Integration: AI models often access data through APIs provided by data sources. While APIs offer some level of control, they don’t always provide fine-grained access management.

- Data Warehouses and Data Lakes: Organizations might consolidate data into data warehouses or data lakes, which provide a centralized source for AI models. While this simplifies data access, it can also create security risks if the data warehouse is not properly protected. Data might still be scattered across different systems, which can make it difficult to integrate.

- Custom Data Access Layers: Some organizations build custom data access layers to control how AI models access data. This approach can provide more control but requires significant development effort. It also leads to a lack of standardization and maintenance overhead.

- Cloud Provider Integrations: Cloud providers offer various AI and data services that are designed to work together. While this simplifies integration within a specific cloud ecosystem, it can create vendor lock-in.

- Ad-Hoc Methods: In some cases, AI models might access data through ad-hoc methods, such as file transfers or data dumps. This approach is highly insecure and difficult to manage.

What is Model Context Protocol (MCP)?

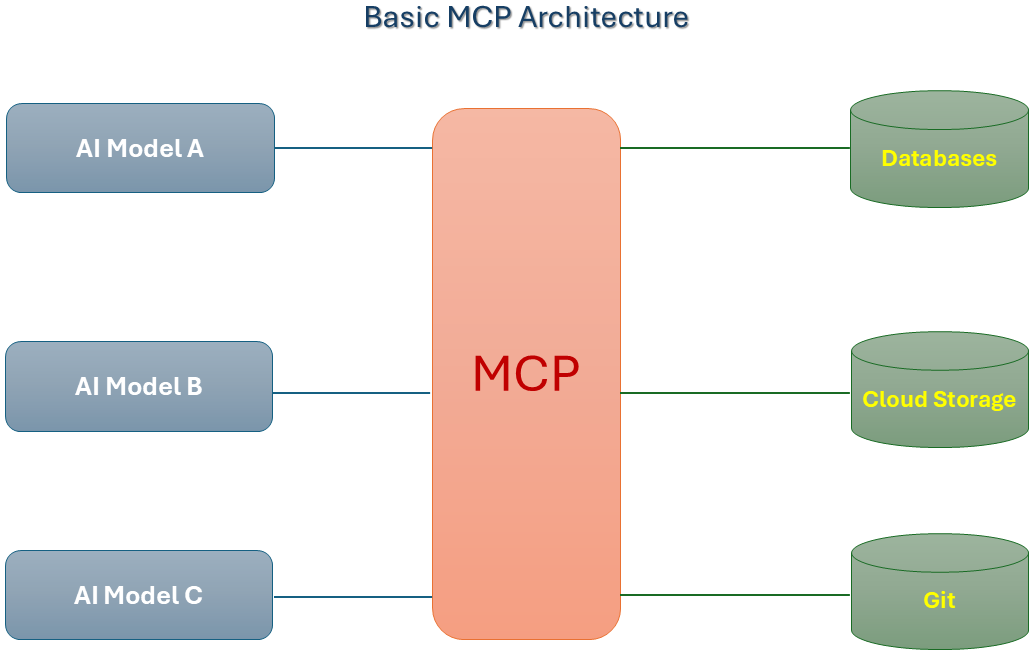

Think of the Model Context Protocol (MCP) as a set of instructions that guides the AI on what data is needed, when to use it, and how to stay within the boundaries of relevant and safe information. It helps the AI focus on the right data at the right time to make sure it produces more accurate and context-aware responses.

MCP fits into the picture of AI data access by providing a standardized and secure way to manage the flow of information between data sources and AI models, much as the well-established HTTPS protocol standardizes secure communication between browsers and web servers.

MCP’s Role

- Controlled Contextual Access: MCP is built to give AI models access to only the specific data and context they need for a particular task. This helps minimize data exposure and protect sensitive information.

- Standardized Communication: It establishes a common language and set of rules for how AI models and data sources communicate. This simplifies the integration of AI tools with various data systems.

- Security Layer: MCP provides a security layer that controls how AI models access and use data. It allows developers to define fine-grained access controls and data usage policies.

- Data Governance: MCP supports data governance by enabling organizations to enforce data usage policies and track how AI models are accessing data.

- AI Application Development: It makes it easier for developers to build AI-powered applications that rely on external data sources.

For example, imagine an AI-powered customer service chatbot. Instead of giving the chatbot direct access to the entire customer database, an MCP server can be set up. The MCP server would be configured to allow the chatbot to access only customer names and order histories. The chatbot, as an MCP client, would connect to the MCP server to retrieve this specific information.
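
The chatbot scenario above can be sketched in a few lines of Python. This is a minimal illustration of field-level filtering, not the MCP SDK: the `ALLOWED_FIELDS` allowlist, the `CUSTOMERS` records, and the `get_customer_context` function are all hypothetical names with made-up data.

```python
# Hypothetical allowlist: the only fields the chatbot may see.
ALLOWED_FIELDS = {"name", "order_history"}

# Stand-in for the full customer database (illustrative data only).
CUSTOMERS = {
    "c-100": {
        "name": "Ada Example",
        "email": "ada@example.com",
        "card_number": "0000-0000-0000-0000",
        "order_history": ["order-1", "order-2"],
    }
}


def get_customer_context(customer_id: str) -> dict:
    """Return only the allowlisted fields for one customer."""
    record = CUSTOMERS.get(customer_id)
    if record is None:
        raise KeyError(f"unknown customer: {customer_id}")
    # Drop every field that is not explicitly allowed.
    return {field: value for field, value in record.items() if field in ALLOWED_FIELDS}


print(get_customer_context("c-100"))
```

The email address and card number never leave the server side, which is the point of giving the chatbot a narrow tool instead of a database connection.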

Why Do We Need MCP?

The increasing prevalence of AI and its growing need for access to diverse data sources are driving the need for protocols like MCP.

- The AI Data Explosion: AI models, especially large language models (LLMs) and other deep learning systems, require vast amounts of data to learn and perform effectively. This data comes from various sources like databases, APIs, files, cloud storage, and even real-time streams. Simply granting AI tools unrestricted access to all this data poses significant security and privacy risks.

- Security and Privacy Concerns: Many data sources contain sensitive information, such as personal data, financial records, or intellectual property. Without proper controls, AI tools could potentially access and misuse this data. The risk of data leaks increases when AI tools are connected to multiple data sources. Moreover, regulations like GDPR and CCPA require organizations to protect sensitive data. Read: AI Compliance for Software.

- Controlled Contextual Access: AI tools often need specific context to perform tasks effectively. MCP allows developers to define precisely what data and context AI tools can access. This helps minimize the amount of data exposed to AI tools to reduce security and privacy risks.

- Interoperability and Standardization: The AI landscape is fragmented, with various tools and platforms. MCP aims to provide a standardized way for AI tools to communicate with data sources. It simplifies the integration of AI tools with existing data infrastructure.

Components of MCP

MCP uses the popular client-server architecture. Here’s what goes into it.

- MCP Server: The MCP server acts as the intermediary between data sources and AI models (MCP clients).

  - It exposes data sources through a standardized interface.

  - It enforces access control policies to make sure that only authorized AI models can access data.

  - It gives AI models just the information they need, keeping it context-specific.

  - It handles authentication and authorization.

  - It logs data access and usage for auditing purposes.

- MCP Client: This is the component within the AI model or AI-powered application that connects to the MCP server.

  - It communicates with the MCP server to request data.

  - It provides authentication credentials to the MCP server.

  - It receives data and context from the MCP server.

  - It adheres to the data usage policies enforced by the MCP server.

- Prompt: This is the instruction or query that the MCP client (AI model) sends to the MCP server to specify what data the AI model needs. The MCP server uses the prompt to understand the request and then queries the right database or API to retrieve the appropriate data. In the MCP specification, prompts can also be reusable templates that a server offers to its clients.

- Tools: These are the functions or capabilities that the MCP server makes available to the AI model. Tools allow the AI model to perform specific actions or data manipulations. They can be:

  - Database queries.

  - API calls.

  - Data transformation functions.

  - External service integrations.

- Resources: These are the actual data assets that the MCP server provides to the AI model. They can be:

  - Structured data (tables, JSON).

  - Unstructured data (text documents, images).

  - Metadata (information about the data).
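
To make these components concrete, here is a toy sketch of a server that holds tools and resources, checks permissions, and keeps an audit log. It is deliberately not the real MCP SDK: the `SketchServer` class, its method names, the `support-bot` client ID, and the sample tool and resource are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SketchServer:
    """Toy stand-in for an MCP-style server; not the real MCP SDK."""

    tools: dict[str, Callable[..., object]] = field(default_factory=dict)
    resources: dict[str, str] = field(default_factory=dict)
    permissions: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def _authorize(self, client_id: str, name: str) -> None:
        allowed = name in self.permissions.get(client_id, set())
        self.audit_log.append((client_id, name, allowed))  # log every attempt
        if not allowed:
            raise PermissionError(f"{client_id} may not use {name}")

    def call_tool(self, client_id: str, name: str, **arguments: object) -> object:
        self._authorize(client_id, name)
        return self.tools[name](**arguments)

    def read_resource(self, client_id: str, uri: str) -> str:
        self._authorize(client_id, uri)
        return self.resources[uri]


server = SketchServer()
# Hypothetical tool and resource with made-up data.
server.tools["count_orders"] = lambda customer_id: {"c-100": 2}.get(customer_id, 0)
server.resources["docs://returns-policy"] = "Returns are accepted within 30 days."
server.permissions["support-bot"] = {"count_orders"}

print(server.call_tool("support-bot", "count_orders", customer_id="c-100"))
try:
    server.read_resource("support-bot", "docs://returns-policy")
except PermissionError as error:
    print("denied:", error)
print(server.audit_log)
```

The client is allowed to call one tool but is refused the resource it was never granted, and both attempts end up in the audit log.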

How does MCP Work?

Here’s how the system works:

1. AI Model (MCP Client) Sends a Prompt: The AI model sends a prompt to the MCP server in which it specifies the data it needs.

2. MCP Server Identifies Tools: The MCP server analyzes the prompt and identifies the appropriate “tools” to use.

3. MCP Server Retrieves Resources: The MCP server uses the identified “tools” to retrieve the necessary “resources” (data) from the data source.

4. MCP Server Sends Data to Client: The MCP server sends the “resources” and any relevant metadata back to the AI model.

Thus, AI models needn’t make unique connections with every type of dataset. They just need to connect to an MCP server, and the server fetches the data for the model. In this way, MCP behaves like an intelligent memory management system for AI models such as LLMs.
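
On the wire, MCP messages are JSON-RPC 2.0. The sketch below imitates one request and response, assuming a hypothetical `get_order_status` tool with made-up data. The method names `tools/list` and `tools/call` mirror the MCP specification, but the payloads are trimmed (no input schemas, no initialization handshake), so treat it as an outline of the exchange and not a conforming server. Note that in a `tools/call` request the client names the tool directly.

```python
import json

# Hypothetical tool table: name -> (description, function).
TOOLS = {
    "get_order_status": (
        "Look up the status of an order by its ID.",
        lambda order_id: {"A-1": "shipped"}.get(order_id, "unknown"),
    )
}


def handle(raw_request: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    request = json.loads(raw_request)
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Let the client discover which tools exist.
        result = {"tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]}
    elif method == "tools/call":
        # Run the named tool with the supplied arguments.
        _, function = TOOLS[params["name"]]
        text = function(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A-1"}},
}
print(handle(json.dumps(call)))
```

Because every server answers the same small set of methods, a client written once can talk to any of them, which is where the "no unique connection per dataset" benefit comes from.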

MCP and Software Testing

MCP can make software testing smarter. When AI is involved in software testing, especially when it needs information from different places, testing can become tricky. This is where MCP helps: it gives the AI selective and useful context. With a standard protocol in place, you can use AI models like LLMs to achieve low-code and even no-code test automation. Read: Codeless Automated Testing: Low Code, No Code Testing Tools.

By simply configuring the tools’ functions within MCP, you can control what data your AI model can access. Since AI models are meant to be intelligent, you can add descriptions to the tool’s functions, which the models can read to better understand which tool to use to complete a query/prompt. For example:

- You can automate web testing by using available MCP servers that offer tools that help you navigate within browsers, click on elements, and more. You can find many MCP servers, like Microsoft’s Playwright MCP, that are written to offer pre-written tools to help you. Thus, you can simply tell the LLM or AI model what to do in plain English, and it will do it for you, without you having to write explicit test steps or code.

- You can use plain language to make the AI model fetch all kinds of data for you. It can execute SQL queries on your behalf and fetch the results, as well as test APIs and check responses.
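
The idea of attaching descriptions to tools can be sketched as follows. This is a hypothetical registry, not the API of any MCP SDK: the `tool` decorator, the `TOOL_CATALOG` dictionary, and the two placeholder tools are assumptions that only show how a description travels with a function so a model can read it.

```python
from typing import Callable

# Hypothetical catalog: tool name -> description and callable.
TOOL_CATALOG: dict[str, dict] = {}


def tool(description: str) -> Callable:
    """Register a function as a tool along with a human-readable description."""

    def register(function: Callable) -> Callable:
        TOOL_CATALOG[function.__name__] = {"description": description, "run": function}
        return function

    return register


@tool("Click the page element identified by a CSS selector.")
def click(selector: str) -> str:
    return f"clicked {selector}"  # placeholder; a real tool would drive a browser


@tool("Run a read-only SQL query and return the rows.")
def run_query(sql: str) -> str:
    return f"ran {sql}"  # placeholder; a real tool would query a database


def describe_tools() -> str:
    """Render the catalog as text that could be passed to a model."""
    return "\n".join(f"{name}: {entry['description']}" for name, entry in TOOL_CATALOG.items())


print(describe_tools())
```

Only the functions you register appear in the catalog, so the registry doubles as the control over what the model can reach.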

Let’s look at a use case here.

Let’s say you integrate an LLM like Claude into your testing process to make testing codeless (which is going to be a huge help for your QA team, by the way!). You need the LLM to be able to test your web application and also look into the local database. On its own, the LLM cannot access your browser or your local database. Hence, you first need to set up MCP servers for both: Playwright MCP for the browser, for example, and a database MCP server for your database. For each server, you add a few lines of configuration to the settings of your LLM application, and these lines are usually available in the server’s documentation. This gives the LLM access to all the pre-defined tools those servers expose. Now, if you ask the LLM to fetch customer information from the database, it will use the database server’s tools to inspect your database and table structure, formulate the SQL query, and then give you the response you want.

Similarly, if you’re using the Playwright MCP, then you can tell your LLM to do a smoke test on the login page by clicking on a few links. The LLM, by using the MCP tools, will intelligently figure out what actions are important for a smoke test and do it on your behalf.
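
The few lines of configuration mentioned above usually take the form of a JSON entry in the AI application’s settings file. The Python snippet below builds and prints such an entry. The `mcpServers` key and the `npx @playwright/mcp@latest` command reflect how Playwright MCP is commonly registered, but they are assumptions here; check the server’s documentation and your application’s settings format before relying on them.

```python
import json

# Assumed entry for Microsoft's Playwright MCP server; confirm the package
# name and arguments against its documentation before using it.
config = {
    "mcpServers": {
        "playwright": {
            "command": "npx",
            "args": ["@playwright/mcp@latest"],
        }
    }
}

# Print the entry as JSON, ready to paste into a settings file.
print(json.dumps(config, indent=2))
```

A database server would be registered the same way, as a second entry next to `playwright` with its own command and arguments.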

Thus, by configuring MCP servers for your AI model, you give it the context it needs to simplify testing for you as you build your own AI test agent. Read: AI Agents in Software Testing.

Future of MCP

Anthropic created and released MCP as a way for different AI models to talk to different databases and tools without everything getting muddled. Although it’s still in its early stages and adoption is gradually increasing, MCP has the potential to become an important part of the future of AI data management. As AI models are increasingly embedded in essential systems, we’ll see the need for secure and well-regulated data access increase.

We might see cloud companies and AI platform builders start adding it to their systems. That would make it much simpler for programmers to build AI apps that are actually safe. Plus, AI agents, programs that act independently, are becoming more common, and we need a way to keep them from going wild with our data. With data privacy laws like GDPR and CCPA, having a way to keep track of where the AI gets its information will be essential.
