Prompt Design vs Prompt Engineering: Key Differences, Use Cases & Best Practices

Generative AI tools now range from writing assistants to complex code generators. As these tools shift from novelty to everyday necessity, knowing how to communicate with them matters more and more. Simply typing in a question is no longer enough, because the quality of the output depends heavily on the quality of the input. This brings us to two key terms: Prompt Design and Prompt Engineering.

In this post, we will clarify the distinction between the art of Prompt Design and the science of Prompt Engineering, looking at specific use cases for both. We will then make the discussion concrete in the context of software testing, showing how testers who master these techniques gain a clear competitive edge.

Understanding Prompt Design

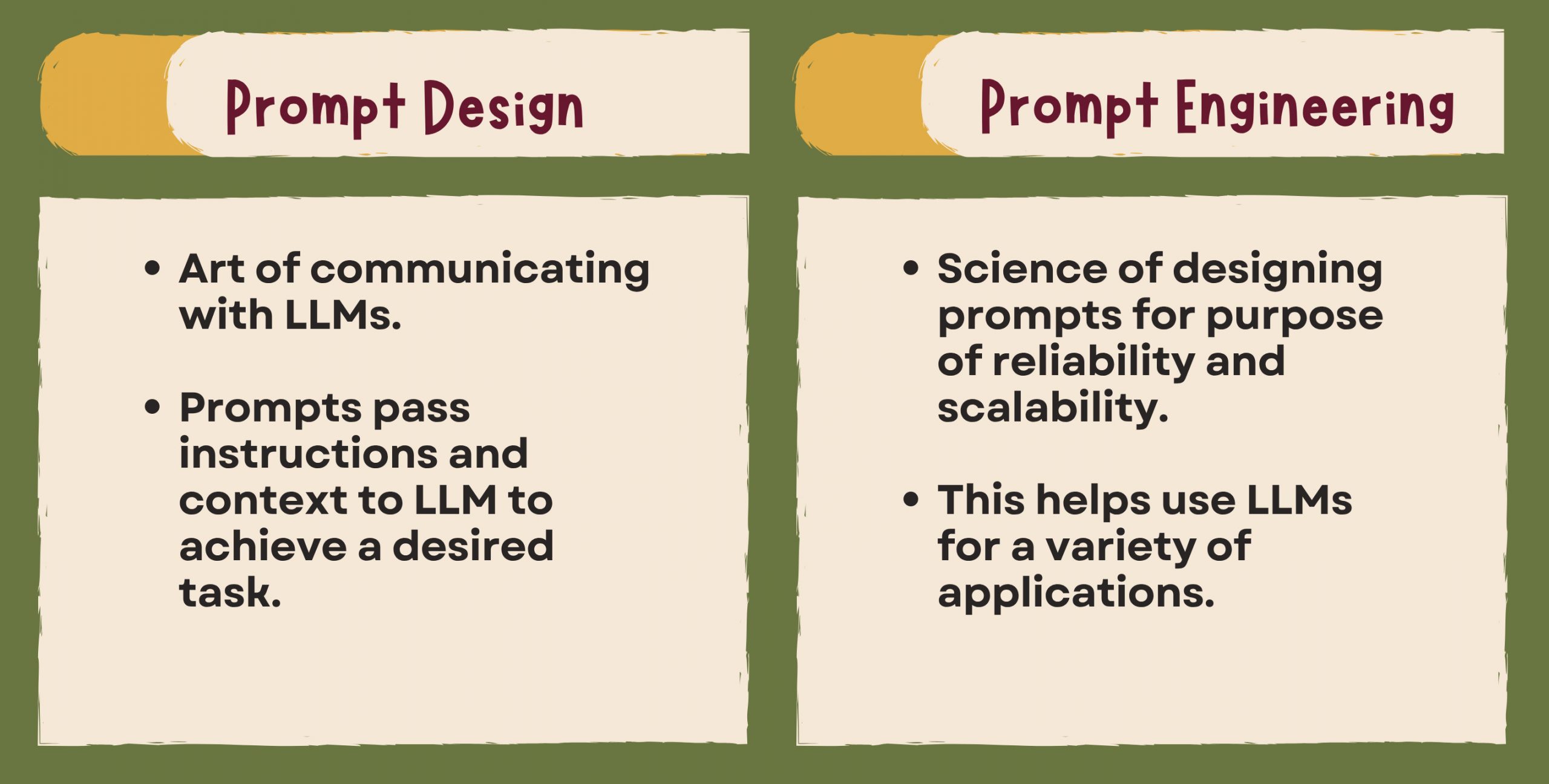

Prompt Design is essentially like chatting with an AI. It focuses on writing instructions in natural language that steer a Large Language Model (LLM) toward a clear, immediate, and desired output. It is a first pass, an early draft of the conversation that prioritizes clarity and meaning over formal structure.

Key Characteristics of Prompt Design

The main purpose of Prompt Design is to let the LLM know what you want it to do, how you expect it to perform the job, and in which format the output should be. This involves focusing on:

- Clarity and Concision: Eliminating ambiguity. A well-designed prompt leaves little room for the AI to misunderstand the core task.

- Context Setting: Giving the AI a specific role or background. For instance, asking the model to “Act as a senior DevOps engineer” immediately scopes its response and tone.

- Format Specification: Explicitly dictating the output structure, such as “List the results in a bulleted Markdown list” or “Provide the answer in a JSON object”.

- Tone: Specifying the required tone, whether it’s professional, humorous, or highly technical.

Prompt Design relies on a fast, human feedback loop: you try a prompt, examine the output, and modify the prompt until it produces an appropriate result. It works because it taps into the model’s extensive pretraining and its ability to infer what a user wants from conversational context.

Core Use Cases of Prompt Design

Prompt Design is the quick solution for small interactions and everyday tasks that do not require high-stakes precision or complex multi-step reasoning across many turns. These include:

- Generating drafts of marketing copy, emails, or summaries.

- Creating basic code snippets or function shells (e.g., “Write a Python function to parse a CSV file”).

- Quickly retrieving and summarizing information from large documents.

- Drafting internal documentation or creating first-pass content outlines.

Best Practices for Prompt Design

- Be Explicit, Not Implicit: Never assume the LLM knows what you mean. Clearly specify the goal, persona, and constraints in your prompts.

- Define the Format: Ambiguity in output format leads to inconsistent results. Always specify the structure you need. For example: “Provide the test cases as a three-column Markdown table with headers: Test ID, Description, Expected Result.”

- Provide Sufficient Context: Give the model all the necessary background information before the request. If you want a function written, include the input data format, the desired output, and the specific library it should use.

- Iterate and Refine: Treat the prompt like a draft. If the first output is inadequate, don’t just ask the same question again. Analyze what the model missed and update your prompt to address that gap directly.
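To make these practices concrete, here is a minimal Python sketch of a prompt assembled from explicit parts: role, context, task, constraints, output format, and tone. The `PromptSpec` class and the `build_prompt` function are hypothetical names invented for this illustration; they are not part of any library, and the layout of the final prompt is just one reasonable choice.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a designed prompt."""
    role: str
    task: str
    context: str = ""
    output_format: str = ""
    tone: str = ""
    constraints: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one explicit, plain-language prompt."""
    parts = [f"Act as {spec.role}."]
    if spec.context:
        parts.append(f"Context: {spec.context}")
    parts.append(f"Task: {spec.task}")
    if spec.constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in spec.constraints)
    if spec.output_format:
        parts.append(f"Output format: {spec.output_format}")
    if spec.tone:
        parts.append(f"Tone: {spec.tone}")
    return "\n".join(parts)


# Example values are illustrative only.
spec = PromptSpec(
    role="a senior QA engineer",
    task="Write test cases for the login page of a banking app.",
    context="Users sign in with an email address and a password.",
    output_format=(
        "A three-column Markdown table with headers: "
        "Test ID, Description, Expected Result."
    ),
    tone="Professional and concise.",
    constraints=["Cover at least one negative path."],
)
print(build_prompt(spec))
```

Keeping the parts separate makes iteration easier: if the output misses something, you change one field and rebuild the prompt rather than rewriting the whole thing.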

Understanding Prompt Engineering

If Prompt Design is the art of the conversation, Prompt Engineering is the science of structuring that conversation so that it is scalable, optimized, and reliable. The field extends beyond casual natural language input: it covers developing, testing, and refining prompts so that LLM responses are high-quality, reliable, and reproducible.

Key Techniques of Prompt Engineering

Prompt Engineering becomes necessary when a light, conversational prompt cannot deliver the precision or depth you need. It relies on established patterns and instruction types, so the prompt behaves like a small program for the LLM. Key techniques include:

- Chain-of-Thought (CoT) Prompting: Instead of just asking for the answer, you instruct the model to “think step-by-step” or “first outline your reasoning”. This encourages the LLM to lay out intermediate steps before answering, which often improves accuracy on complex logical, mathematical, or multi-step reasoning tasks.

- Few-Shot Learning: This technique involves providing the LLM with a small set of meticulously crafted input/output examples within the prompt itself. This primes the model by explicitly showing it the desired format and logic, leading to highly consistent results, especially when dealing with nuanced categorization or specific data transformation tasks.

- Self-Correction/Critique: The prompt is structured to not only generate an answer but then immediately evaluate its own output against a defined set of criteria. For example, “Generate the SQL query, and then check if it correctly handles null values. If not, correct it.”

- Integration with External Tools (Tool-Calling): In advanced systems, the engineered prompt defines functions or tools the LLM can use (e.g., a calculator or a database lookup). The LLM’s primary output then becomes a reasoned decision on which tool to call and with what parameters, making it an autonomous agent.
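The tool-calling idea from the list above can be sketched in a few lines of Python. This is a simplified illustration, not the API of any particular LLM provider: the two tools, the JSON reply shape (`tool` plus `arguments`), and the hard-coded reply are all assumptions made for the example.

```python
import json
from typing import Callable


# Hypothetical tools that the engineered prompt advertises to the model.
def add(a: float, b: float) -> float:
    return a + b


def lookup_order_status(order_id: str) -> str:
    # Stand-in for a database lookup; the data here is made up.
    return {"A100": "shipped", "A101": "pending"}.get(order_id, "unknown")


TOOLS: dict[str, Callable] = {
    "add": add,
    "lookup_order_status": lookup_order_status,
}


def tool_prompt(question: str) -> str:
    """Describe the available tools and the reply format we expect."""
    return (
        "You can call one of these tools: "
        + ", ".join(sorted(TOOLS))
        + '.\nReply only with JSON like {"tool": "<name>", "arguments": {...}}.\n'
        + f"Question: {question}"
    )


def dispatch(model_reply: str):
    """Parse the model's tool choice and run it; reject unknown tools."""
    call = json.loads(model_reply)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# A reply the model might plausibly produce (hard-coded, not real model output).
reply = '{"tool": "lookup_order_status", "arguments": {"order_id": "A100"}}'
print(dispatch(reply))  # prints "shipped"
```

The important design choice is that the model only decides which tool to call and with what arguments; your own code performs the call and can refuse anything outside the allowed list.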

Core Use Cases of Prompt Engineering

Prompt Engineering is crucial when integrating LLMs into production systems, handling sophisticated data processing, or building any application that demands high precision with minimal human oversight.

- Complex Data Analysis and Summarization: Systematically extracting and synthesizing key insights from vast, unstructured datasets where reliability is paramount.

- Advanced Code Generation and Refactoring: Generating complete modules, translating code between languages, or automatically identifying and fixing common anti-patterns in existing codebases.

- Building Autonomous AI Agents: Creating sophisticated instruction sets that allow the LLM to operate independently, perform complex workflows, and manage multi-stage tasks. Read: Retrieval Augmented Generation (RAG) vs. AI Agents.

- Integrating LLMs for High Consistency: Deploying AI features in enterprise applications where output must adhere strictly to predefined formats, schemas, and logic rules.
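When output must adhere to a predefined schema, a common safeguard is to validate every model reply before the rest of the system uses it. The sketch below assumes the prompt asked for a JSON object with three fields; the schema, the field names, and the `validate_output` helper are hypothetical and exist only for this illustration.

```python
import json

# Hypothetical schema: field name -> required Python type.
TEST_CASE_SCHEMA = {"test_id": str, "steps": list, "expected_result": str}


def validate_output(raw: str, schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the output conforms."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    for key, expected in schema.items():
        if key not in data:
            problems.append(f"missing field: {key}")
        elif not isinstance(data[key], expected):
            problems.append(f"wrong type for {key}: expected {expected.__name__}")
    # Flag anything the schema does not mention.
    for key in sorted(data.keys() - schema.keys()):
        problems.append(f"unexpected field: {key}")
    return problems


good = '{"test_id": "TC-1", "steps": ["Open login page"], "expected_result": "Page loads"}'
bad = '{"test_id": 1, "steps": []}'
print(validate_output(good, TEST_CASE_SCHEMA))
print(validate_output(bad, TEST_CASE_SCHEMA))
```

A reply that fails validation can be rejected, logged, or sent back to the model along with the list of problems as a correction request.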

More on Prompt Engineering:

- Talk to Chatbots: How to Become a Prompt Engineer

- How to Test Prompt Injections?

- Prompt Engineering in QA and Software Testing

- Prompt Engineering Interview Questions

Prompt Design vs. Prompt Engineering

The distinction between Prompt Design and Prompt Engineering boils down to the difference between effective communication and technical optimization. While both are necessary for working with Large Language Models (LLMs), they require distinct mindsets and skill sets.

Here is a detailed comparison:

| Feature | Prompt Design | Prompt Engineering |
|---|---|---|
| Primary Focus | Clarity, user intent, natural language fluency, conversational flow. | Reliability, optimization, sophisticated technical techniques (e.g., CoT, few-shot). |
| Skillset | Strong communication, domain knowledge, creativity, and understanding of human psychology. | Technical expertise, computational thinking, systematic experimentation, code-like structuring. |
| Approach | Iterative, conversational, quick refinement based on output quality. | Systematic, algorithmic, structured, often involving testing and metrics. |
| Complexity Handled | Simple to moderate tasks where natural language is sufficient. | Moderate to highly complex tasks requiring guaranteed, structured, and logical output. |
| Goal | Effective output now, making the model understand the request. | Optimized, repeatable, and reliable output at scale, making the model reason correctly. |
| Input Style | Conversational, instruction-based, setting the scene or persona. | Structured, often including metadata, examples, explicit reasoning steps, and error checks. |

Prompting: The New Skill in Testing

Prompt Design and Prompt Engineering are not merely tangential to testing; they are integrated into several key QA activities:

Test Case Generation

- Prompt Design is used for quick, high-level ideation. A tester uses clear language to generate a preliminary list of happy path or smoke tests. Example: “Act as a user. Give me 10 simple steps to test the login page of a banking app.”

- Prompt Engineering is used for complex, structured, and high-quality test artifacts. Example: The tester can use Chain-of-Thought (CoT) prompting, telling the AI to “First, analyze the user story for all acceptance criteria. Second, identify potential negative paths and boundary conditions. Third, generate a formal test case in a three-column Markdown table (Test ID, Steps, Expected Result) for each identified path”. This systematically ensures better test coverage and generates structured outputs that are directly usable by the QA team.
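As a rough illustration of the engineered approach, the Python sketch below wraps a user story in the three reasoning steps quoted above and then parses the Markdown table out of the reply so the test cases can be used programmatically. The function names are hypothetical, the parser handles only simple tables without escaped pipes, and the sample reply is hard-coded rather than real model output.

```python
def cot_test_case_prompt(user_story: str) -> str:
    """Wrap a user story in three explicit reasoning steps."""
    steps = [
        "First, analyze the user story for all acceptance criteria.",
        "Second, identify potential negative paths and boundary conditions.",
        "Third, generate a formal test case in a three-column Markdown table "
        "(Test ID, Steps, Expected Result) for each identified path.",
    ]
    return (
        f"User story:\n{user_story.strip()}\n\n"
        "Work through these steps in order:\n" + "\n".join(steps)
    )


def parse_markdown_table(text: str) -> list[dict[str, str]]:
    """Collect lines that look like table rows and map cells to headers."""

    def split_row(line: str) -> list[str]:
        return [cell.strip() for cell in line.strip("|").split("|")]

    lines = [ln.strip() for ln in text.splitlines() if ln.strip().startswith("|")]
    if len(lines) < 2:
        return []
    headers = split_row(lines[0])
    rows = []
    for line in lines[2:]:  # skip the header row and the |---| separator
        cells = split_row(line)
        if len(cells) == len(headers):
            rows.append(dict(zip(headers, cells)))
    return rows


# Hard-coded example of what a reply could look like.
sample_reply = """Reasoning: the story has one acceptance criterion.

| Test ID | Steps | Expected Result |
|---|---|---|
| TC-1 | Enter valid credentials | User is logged in |
| TC-2 | Enter wrong password | Error message is shown |
"""

for case in parse_markdown_table(sample_reply):
    print(case["Test ID"], "->", case["Expected Result"])
```

Parsing the table is what turns the reply into an artifact the QA team can import into a test management tool instead of copying it by hand.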

Test Data Generation

Prompt Engineering is vital for creating realistic, diverse, and compliance-aware test data, which is often difficult and time-consuming to create manually.

Example: With few-shot prompting, the tester gives the LLM a few samples of valid, structured data (e.g., a few valid customer records with specific age and location constraints) and then instructs it to generate thousands more records that adhere strictly to those patterns. This significantly reduces the time spent mocking data while increasing the realism needed to validate complex systems.
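Here is a minimal Python sketch of that workflow. The customer fields, the age range of 18 to 90, and the two-letter country code are assumptions chosen for illustration, as are the helper names. Because a model can drift from the pattern, the sketch also filters the reply and keeps only records that satisfy the constraints.

```python
import json

# Hypothetical seed records shown to the model as few-shot examples.
EXAMPLES = [
    {"name": "Ana Ruiz", "age": 34, "country": "ES"},
    {"name": "Tom Baker", "age": 52, "country": "GB"},
]


def few_shot_prompt(examples: list[dict], count: int) -> str:
    """Show the examples, then ask for more records in the same shape."""
    shots = "\n".join(json.dumps(e) for e in examples)
    return (
        "Here are valid customer records, one JSON object per line:\n"
        f"{shots}\n"
        f"Generate {count} more records in exactly the same format. "
        "Age must be between 18 and 90. "
        "Country must be a two-letter uppercase code."
    )


def is_valid(record: dict) -> bool:
    """Check one record against the assumed constraints."""
    if set(record) != {"name", "age", "country"}:
        return False
    age, country = record["age"], record["country"]
    return (
        isinstance(record["name"], str)
        and isinstance(age, int)
        and not isinstance(age, bool)
        and 18 <= age <= 90
        and isinstance(country, str)
        and len(country) == 2
        and country.isupper()
    )


def filter_valid(reply: str) -> list[dict]:
    """Keep only the lines of the reply that parse and pass validation."""
    records = []
    for line in reply.splitlines():
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(record, dict) and is_valid(record):
            records.append(record)
    return records


# Hard-coded stand-in for a model reply: one good record, two bad ones.
reply = (
    '{"name": "Li Wei", "age": 41, "country": "CN"}\n'
    '{"name": "Too Young", "age": 12, "country": "US"}\n'
    "Sorry, here is some extra text."
)
print(filter_valid(reply))
```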

Code & Vulnerability Analysis

Testers (especially SDETs) use sophisticated prompts to evaluate code quality, check for security issues, or debug.

Example: The tester might prompt the model to “Act as a security expert specializing in injection vulnerabilities. Review the following Python function and identify any unhandled user inputs that could lead to a security risk”. This provides an immediate, intelligent peer review, helping the tester find complex defects that might be missed by simple static analysis tools.

Test Automation Scripting

A key part of a tester’s job is automating tests. Prompting speeds up this process.

- Prompt Design: Used to generate basic automation code boilerplate. (“Write a Selenium script in Java to click the ‘Submit’ button”).

- Prompt Engineering: Used to enforce standards and modularity. (“Write the Playwright test using TypeScript. Ensure all page elements are defined in a separate Page Object Model class, and the test only calls methods from that class”).

Modern tools like testRigor, which use generative AI to manage test creation, execution, and maintenance, benefit from testers who know these prompting techniques. A tester who can write smart prompts can produce descriptive plain-English inputs that a tool like testRigor turns into test cases. To see how simple this process can be, read: How to Create Tests in Seconds with testRigor’s Generative AI

Essential Skills for the AI-Enabled Tester

The shift towards AI augmentation means testers need to acquire new skills that blend their traditional QA mindset with computational guidance.

| Skill | Why It Matters for Prompting |
|---|---|
| Computational Thinking | The ability to break down a complex user requirement (e.g., “The payment should fail if the card has expired”) into logical, sequential instructions for the AI (the foundation of CoT prompting). |
| Domain Expertise | Knowing the business rules, compliance requirements, and error codes ensures the tester can give the AI the correct context and critically validate the AI’s output for accuracy. Read: Why Testers Require Domain Knowledge? |
| Structured Communication | Using unambiguous language. The LLM cannot reliably infer vague intent, so a good tester words each prompt precisely. |
| Test-Driven Prompting | Treating the prompt itself like code that needs unit testing. A tester must A/B test different prompts against a known good scenario to ensure the one they deploy is reliable and consistent. |
| Critical Evaluation | Always keep in mind that the LLM can hallucinate or be mistaken. An expert tester never trusts the AI blindly; they treat it as an assistive tool, reviewing the code and verifying the AI’s conclusions. Read: What are AI Hallucinations? |
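Test-driven prompting from the table above can be sketched as a small A/B harness: run each candidate prompt several times, check every reply against a known good criterion, and compare pass rates. Everything in this Python example is an assumption made for illustration, including the `fake_model` stub that stands in for a real LLM call so the sketch runs offline.

```python
from typing import Callable


def pass_rate(prompt: str, model: Callable[[str], str],
              check: Callable[[str], bool], runs: int = 5) -> float:
    """Fraction of runs whose reply satisfies the check."""
    passed = sum(1 for _ in range(runs) if check(model(prompt)))
    return passed / runs


def pick_best(prompts: dict[str, str], model: Callable[[str], str],
              check: Callable[[str], bool], runs: int = 5) -> tuple[str, float]:
    """Score every candidate prompt and return the best name and its rate."""
    scores = {name: pass_rate(text, model, check, runs)
              for name, text in prompts.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call; it only reacts to one phrase in the prompt.
    if "Markdown table" in prompt:
        return ("| Test ID | Description | Expected Result |\n"
                "|---|---|---|\n| TC-1 | ... | ... |")
    return "Sure! Here are some ideas for tests."


def has_table(reply: str) -> bool:
    """Known good criterion: the reply starts with the expected table header."""
    return reply.lstrip().startswith("| Test ID |")


PROMPTS = {
    "A": "Give me test cases for the login page.",
    "B": ("Give me test cases for the login page as a Markdown table "
          "with headers: Test ID, Description, Expected Result."),
}

best, score = pick_best(PROMPTS, fake_model, has_table)
print(best, score)  # prints "B 1.0"
```

With a real model, replies vary between runs, so a higher `runs` value gives a more trustworthy pass rate before a prompt is deployed.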

Conclusion

In the end, the difference between Prompt Design and Prompt Engineering comes down to focus: the former is about the fundamentals of clear communication, while the latter is a rigorous technical discipline aimed at optimization and reliability. Both are necessary to unlock the promise of Generative AI.
