What is the Role of Quality Assurance in Machine Learning?


Imagine a self-driving car. It’s a marvel of modern technology, capable of navigating roads, avoiding obstacles, and even making decisions in real-time. But what happens when that car misinterprets a stop sign as a speed limit or confuses a pedestrian with a shadow? The consequences could be catastrophic. This is where the critical role of quality assurance (QA) in machine learning comes into play.

In the world of machine learning, where algorithms are trained on vast datasets to make predictions and decisions, QA can no longer be about just finding bugs. It’s about ensuring that these intelligent systems are reliable, fair, and effective in real-world scenarios.

In this blog post, we will examine the essential role of quality assurance in the machine learning lifecycle and explore how it shapes the future of intelligent systems.

Quality Assurance in Machine Learning

QA has a multifaceted role in ML. It involves a comprehensive evaluation of the entire ML lifecycle, from data collection and preparation to model training, evaluation, and deployment.

The key aspects that you need to focus on when it comes to ML are:

- Data Quality: Accuracy, completeness, and relevance of the data used to train the model.

- Model Performance: The model’s ability to make accurate predictions or decisions.

- Fairness and Bias: The model’s impartiality and its avoidance of discriminatory outcomes.

- Explainability: How the model arrives at its conclusions.

- Robustness: The model’s performance under various conditions and scenarios.

QA Differences Between Traditional and ML Systems

While traditional QA focuses primarily on functional correctness and defect detection in software systems, QA for ML systems has unique challenges due to the inherent complexity and uncertainty involved in machine learning.

| QA Factors | Traditional Systems | ML Systems |
|---|---|---|
| Focus | Code functionality and performance | Data quality, model performance, and ethical considerations |
| Data Dependency | Low; behavior is defined by code, so testing emphasizes code functionality, bugs, performance, and user experience. | High; places significant emphasis on the quality of the data used to train models. Since ML models learn patterns from data, any issues in data quality can lead to suboptimal model performance. |
| Model Learning Behavior / Determinism | Predictable outcomes; same input yields the same output | Probabilistic behavior; training involves randomness, so retraining on the same data can produce a different model, and models that sample their outputs can return different results for the same input |
| Testing Process | Mainly involves manual and automated testing of software | Involves data validation, model training/testing, and ongoing monitoring |
| Bias Consideration | Limited focus on bias and ethical concerns | Emphasis on identifying and mitigating biases in data and models |

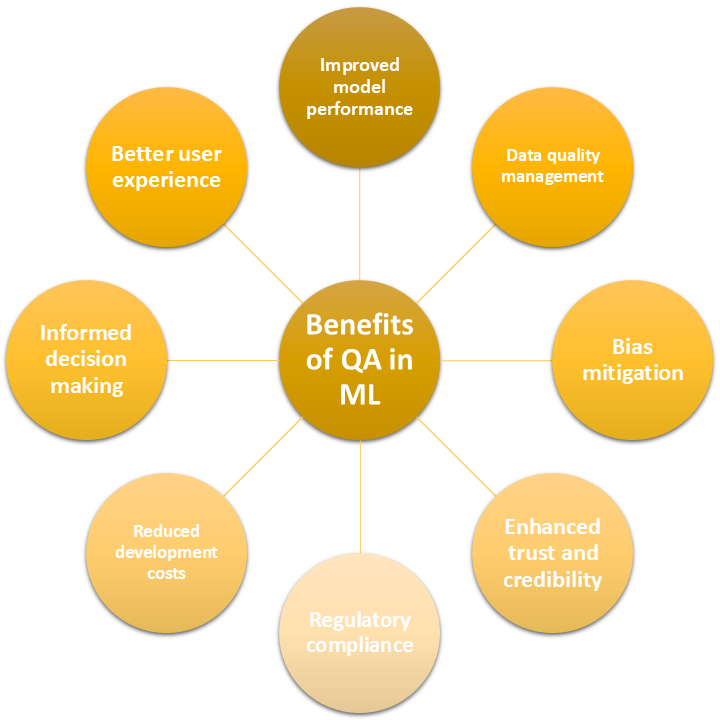

Benefits of QA in Machine Learning

QA Focus When Testing ML Systems

You need to cover the following areas while testing your ML system:

Data Quality Assurance

The following steps make up data QA for an ML system:

Data Validation: Data quality is the cornerstone of effective machine learning models. Poor-quality data can lead to inaccurate predictions, biased outcomes, and unreliable insights. QA plays a critical role in ensuring that the data used in ML processes meets high-quality standards. Here’s how QA contributes to data quality in machine learning:

- Schema Validation: It ensures that the dataset’s structure adheres to predefined formats, such as data types, ranges, and relationships. QA teams establish a schema that defines the expected data types (e.g., integers, floats, strings), constraints (e.g., non-null values), and relationships between different data fields. Automated validation scripts are run to ensure that incoming data matches this schema.

- Range and Constraint Checks: It validates that the values within the dataset fall within acceptable ranges or meet specific criteria. For example, if a dataset includes age as a feature, QA checks that all values fall within a realistic range (e.g., 0 to 120). Any values outside this range can be flagged for review.

- Cross-Referencing Data Sources: It confirms the accuracy of data by comparing it with trusted external sources. Data validation involves checking the dataset against reliable benchmarks or databases to verify its accuracy. Discrepancies can be highlighted for correction.

- Completeness Checks: It ensures that all required fields are present and populated in the dataset. QA tools are employed to assess the presence of missing values in critical fields. Missing data can significantly affect model performance, so any missing entries are flagged for further action.
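The schema, range, and completeness checks described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production validator: the `SCHEMA` layout, the field names, and the `validate_record` helper are all assumptions made for the example.

```python
from typing import Any

# Hypothetical schema: field -> (expected type, required?, optional (min, max) bounds).
SCHEMA = {
    "age": (int, True, (0, 120)),
    "country": (str, True, None),
    "income": (float, False, (0.0, None)),
}


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field, (expected_type, required, bounds) in SCHEMA.items():
        value = record.get(field)
        # Completeness check: required fields must be present and non-null.
        if value is None:
            if required:
                problems.append(f"{field}: missing required value")
            continue
        # Schema check: the value must have the expected type.
        if not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
            continue
        # Range check: flag values outside the acceptable bounds.
        if bounds is not None:
            low, high = bounds
            if (low is not None and value < low) or (high is not None and value > high):
                problems.append(f"{field}: value {value} outside allowed range")
    return problems


# Hypothetical incoming records.
records = [
    {"age": 34, "country": "USA", "income": 52000.0},
    {"age": 150, "country": "USA", "income": 41000.0},     # out of range
    {"age": None, "country": "Canada"},                    # missing required field
    {"age": "29", "country": "Mexico", "income": 1000.0},  # wrong type
]

report = {i: validate_record(r) for i, r in enumerate(records)}
flagged = {i: problems for i, problems in report.items() if problems}
```

Here `flagged` holds only the records that need review, which mirrors the idea of flagging rather than silently dropping suspicious data.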

Data Cleaning: The process of identifying and correcting errors or inconsistencies in the dataset. QA plays a key role in ensuring data cleanliness through the following methods:

- Error Detection: Identifying inaccuracies, typos, and formatting inconsistencies in the dataset. Automated scripts or manual reviews are conducted to find errors. For example, if a categorical feature like “Country” contains values like “USA” and “United States,” QA identifies this inconsistency.

- Handling Missing Values: Addressing gaps in the data where values are missing. Various strategies are employed to handle missing data:

  - Deletion: Removing records with missing values if they constitute a small percentage of the dataset.

  - Imputation: Filling in missing values using techniques such as mean, median, mode, or predictive modeling to estimate missing entries based on other available data.

- Outlier Detection and Treatment: Identifying and addressing anomalies that could distort model training. Statistical methods (e.g., z-score, IQR) are employed to detect outliers. Once identified, QA teams determine the best approach to handle them – either by removal, transformation or further investigation to understand their context.

- Standardization: Ensuring consistency in data formats and representations. Data cleaning includes standardizing formats across the dataset, such as converting all date formats to a consistent standard (e.g., YYYY-MM-DD) or ensuring uniformity in categorical variables (e.g., converting all text to lowercase).
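As a rough sketch of the cleaning steps above, the following standard-library example imputes missing values with the median, flags outliers with the IQR rule, and maps spelling variants of a category to one label. The sample values, the `CANONICAL` mapping, and the 1.5 multiplier are assumptions chosen for illustration.

```python
import statistics

# Hypothetical raw values for one numeric feature; None marks a missing entry.
ages = [23, 25, None, 27, 24, 26, 95, None, 25]

# Imputation: fill gaps with the median of the observed values.
observed = [a for a in ages if a is not None]
median_age = statistics.median(observed)
imputed = [median_age if a is None else a for a in ages]

# Outlier detection with the IQR rule: flag points more than 1.5 * IQR
# below the first quartile or above the third quartile.
q1, _, q3 = statistics.quantiles(imputed, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [a for a in imputed if a < low or a > high]

# Standardization: map spelling variants of a category to one canonical label.
CANONICAL = {
    "usa": "United States",
    "united states": "United States",
    "u.s.": "United States",
}
countries = ["USA", "United States", " usa ", "Canada"]
cleaned = [CANONICAL.get(c.strip().lower(), c.strip()) for c in countries]
```

Whether a flagged value such as 95 is removed, transformed, or investigated remains a judgment call; the code only surfaces candidates.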

Data Preprocessing: Transforms the cleaned dataset into a form suitable for machine learning algorithms. QA helps in the following ways:

- Normalization and Scaling: Adjusting the range of features to ensure they contribute equally to model training. Techniques such as Min-Max scaling or Z-score normalization are applied to transform features so that they fall within a specified range (e.g., 0 to 1) or have a mean of 0 and a standard deviation of 1. QA checks that these transformations are applied consistently across the dataset.

- Encoding Categorical Variables: Converting categorical data into numerical formats that algorithms can process. Techniques such as one-hot encoding or label encoding are employed. QA ensures that these transformations maintain the integrity of the data and that no information is lost during encoding.

- Feature Engineering: Creating new features from existing ones to improve model performance. QA teams collaborate with data scientists to identify which new features could enhance model accuracy. For example, creating interaction terms or aggregating data over time to capture trends can be beneficial. QA validates that these new features are correctly computed and relevant.

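- Train-Test Split: Dividing the dataset into training and testing subsets to evaluate model performance effectively. QA ensures that the split is done appropriately for the data (randomly for independent records, chronologically for time-ordered data) and that it prevents data leakage. In particular, scaling and encoding parameters should be fitted on the training subset only and then applied to the test subset. This helps maintain the independence of the test set, which is critical for unbiased evaluation of model performance.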
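One way to picture the interaction between splitting and scaling is the sketch below. It splits first, learns Min-Max parameters from the training subset only, and then applies them to both subsets, which is a common way to avoid leaking test-set statistics into training. The helper names, the 25% test fraction, and the seed are illustrative assumptions.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of the rows with a fixed seed, then split them."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_min_max(values):
    """Learn scaling parameters from the given (training) values."""
    return min(values), max(values)


def apply_min_max(values, params):
    """Scale values with previously fitted parameters."""
    low, high = params
    span = (high - low) or 1.0  # avoid division by zero for constant features
    return [(v - low) / span for v in values]


# Hypothetical feature values.
feature = [float(v) for v in range(1, 21)]
train, test = train_test_split(feature)

params = fit_min_max(train)                # fitted on the training subset only
train_scaled = apply_min_max(train, params)
test_scaled = apply_min_max(test, params)  # may fall slightly outside [0, 1]

# Leakage check: no row may appear in both subsets.
overlap = set(train) & set(test)
```

A QA check could assert that `overlap` is empty and that the scaling parameters were computed before the test subset was ever touched.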

Model Validation and Testing

Performance Metrics Evaluation: Performance metrics are essential for assessing how well an ML model achieves its objectives. Here’s how QA contributes to this process:

- Selection of Relevant Metrics: Choosing appropriate metrics based on the nature of the ML problem (classification, regression, etc.). QA teams collaborate with data scientists to identify the right metrics to evaluate model performance. By selecting relevant metrics, QA ensures that the evaluation reflects the model’s real-world applicability. Common metrics include:

  - Classification: Accuracy, precision, recall, F1 score, area under the ROC curve (AUC).

  - Regression: Mean absolute error (MAE), mean squared error (MSE), R-squared.

- Calculation and Reporting: Accurately calculating and presenting the performance metrics. Automated scripts are often employed to compute these metrics consistently across different runs. QA verifies that these scripts are functioning correctly and that the reported metrics accurately reflect model performance. Additionally, QA teams ensure that metrics are reported in a clear and interpretable format, making it easier for stakeholders to understand the results.

- Benchmarking: Comparing the model’s performance against predefined benchmarks or previous models. QA involves setting baseline metrics based on prior models or industry standards. This benchmarking helps assess whether the new model offers improvements in performance.
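To make the classification metrics and the benchmarking step concrete, here is a small sketch that computes accuracy, precision, recall, and F1 from raw labels and compares them with a baseline. The labels, predictions, and baseline values are invented for illustration, and in practice a metrics library would usually replace the hand-written function.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)

# Benchmarking: compare against a hypothetical baseline from a previous model.
baseline = {"accuracy": 0.70, "precision": 0.70, "recall": 0.70, "f1": 0.70}
regressions = [name for name, value in metrics.items() if value < baseline[name]]
```

An empty `regressions` list means the candidate model is at least as good as the baseline on every tracked metric.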

Robustness Testing: Evaluates how well a model performs under various conditions and inputs, including edge cases and adversarial scenarios. QA is crucial in this area through the following methods:

- Stress Testing: Evaluating model performance under extreme or unusual conditions. QA teams create test cases that include outlier data points, noise, or adversarial examples designed to exploit model weaknesses. The model’s ability to maintain performance levels in these scenarios is rigorously assessed.

- Adversarial Testing: Testing the model against inputs designed to deceive or confuse it. QA includes developing and deploying adversarial examples that challenge the model’s decision-making process. This testing helps identify vulnerabilities and provides insights into potential areas for improvement.

- Boundary Testing: Assessing model performance at the boundaries of input data. QA teams test how the model reacts to inputs that are at the edge of acceptable ranges (e.g., minimum and maximum values for a feature). This helps ensure that the model can handle a wide range of input values without degradation in performance.
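A stress test and a boundary test can be sketched against a stand-in model. The `toy_model` threshold classifier below is purely hypothetical, as are the noise level and the chosen boundary inputs; the point is the shape of the checks, not the model itself.

```python
import random


def toy_model(x: float) -> int:
    """Hypothetical stand-in for a trained model: a simple threshold classifier."""
    return 1 if x >= 50.0 else 0


def accuracy(inputs, labels):
    """Fraction of inputs for which the model output matches the label."""
    return sum(toy_model(x) == y for x, y in zip(inputs, labels)) / len(inputs)


rng = random.Random(0)
clean_inputs = [rng.uniform(0.0, 100.0) for _ in range(500)]
labels = [toy_model(x) for x in clean_inputs]  # the toy model is perfect on clean data

# Stress test: perturb inputs with Gaussian noise and measure the accuracy drop.
noisy_inputs = [x + rng.gauss(0.0, 5.0) for x in clean_inputs]
clean_acc = accuracy(clean_inputs, labels)
noisy_acc = accuracy(noisy_inputs, labels)

# Boundary test: the model should return a valid class at the edges of the
# input range and on either side of its decision threshold.
boundary_outputs = {x: toy_model(x) for x in (0.0, 49.999, 50.0, 100.0)}
```

A QA suite might then require that `noisy_acc` stays above an agreed floor for a given noise level.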

Cross-Validation: This technique is used to assess how well a model generalizes to an independent dataset. QA contributes to this process in the following ways:

- Implementation of Cross-Validation Techniques: Systematically partitioning the dataset into subsets for training and validation. QA teams ensure that appropriate cross-validation methods (e.g., k-fold, stratified k-fold) are correctly implemented. For instance, in k-fold cross-validation, the dataset is divided into k subsets, and the model is trained and validated k times, each time using a different subset for validation and the remaining for training.

- Ensuring Randomness and Independence: Maintaining the independence of training and validation sets to avoid data leakage. QA verifies that the random selection process for splitting data does not inadvertently introduce bias. Techniques such as shuffling the dataset before splitting help maintain randomness.

- Performance Aggregation: Combining performance metrics from multiple folds to obtain a single evaluation metric. QA ensures that the aggregation of results (e.g., averaging accuracy across all k folds) is conducted accurately. This gives a more reliable estimate of the model’s performance compared to a single train-test split.

- Hyperparameter Tuning: Optimizing model parameters using cross-validation results to improve performance. QA teams validate the process of tuning hyperparameters by ensuring that they are selected based on cross-validated results rather than on a single validation set. This helps avoid overfitting and enhances model generalization.
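The k-fold procedure and the aggregation step can be sketched as follows. The `evaluate` function uses a deliberately trivial majority-class "model", and the labels are made up; both are assumptions that keep the example self-contained.

```python
import random
import statistics


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once before partitioning
    folds = [indices[i::k] for i in range(k)]
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def evaluate(train_idx, val_idx, ys):
    """Hypothetical model: predict the majority label seen in training."""
    train_labels = [ys[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(ys[i] == majority for i in val_idx) / len(val_idx)


ys = [0] * 14 + [1] * 6  # hypothetical labels for 20 samples

splits = list(k_fold_indices(len(ys), k=5))
scores = [evaluate(train, val, ys) for train, val in splits]

# Performance aggregation: average the per-fold scores.
mean_score = statistics.mean(scores)
```

Two properties are worth asserting in QA: every sample is used for validation exactly once, and no fold shares indices between its training and validation sets.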

Bias Detection and Fairness

Bias Assessment: The process of identifying and analyzing biases present in the data and the model itself. QA contributes to this process through several key activities:

- Data Source Evaluation: Reviewing the sources from which data is collected to identify potential biases in representation. QA teams examine the demographics and characteristics of the data sources. For example, if a dataset primarily consists of data from a specific geographic region or demographic group, this could introduce bias. QA ensures that diverse and representative sources are included in the dataset to mitigate these issues.

- Data Distribution Analysis: Analyzing the distribution of features within the dataset to identify imbalances. QA uses statistical methods to assess the distribution of key features across different demographic groups (e.g., age, gender, ethnicity). Disparities in representation can indicate potential biases. For instance, if a model trained on data with an overrepresentation of one gender performs poorly on the underrepresented gender, it suggests bias that needs to be addressed.

- Model Output Analysis: Evaluating the predictions made by the model for different groups to identify discriminatory outcomes. QA teams conduct audits of the model’s predictions, examining how different demographic groups are affected. This may involve comparing error rates or performance metrics (e.g., precision, recall) across groups to uncover any biases in the model’s decision-making process.

- Adversarial Testing: Using adversarial examples to evaluate model robustness and detect biases. QA can implement adversarial testing techniques by generating inputs specifically designed to test the model’s handling of different demographic groups. This helps identify vulnerabilities where the model may perform unfairly against certain populations.
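The data distribution and model output analyses above can be illustrated with a small audit script. The group names, the audit rows, and the `MAX_GAP` tolerance are hypothetical; a real audit would use the project's own protected attributes and agreed thresholds.

```python
from collections import Counter, defaultdict

# Hypothetical audit rows: (group, true label, predicted label).
rows = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 1, 0),
]

# Data distribution analysis: share of each group in the dataset.
counts = Counter(group for group, _, _ in rows)
shares = {group: n / len(rows) for group, n in counts.items()}

# Model output analysis: error rate per group.
errors = defaultdict(int)
for group, y_true, y_pred in rows:
    errors[group] += int(y_true != y_pred)
error_rates = {group: errors[group] / counts[group] for group in counts}

# Flag the audit when the gap in error rates exceeds a hypothetical tolerance.
MAX_GAP = 0.10
gap = max(error_rates.values()) - min(error_rates.values())
needs_review = gap > MAX_GAP
```

In this made-up data, group B is both underrepresented and served worse by the model, which is the pattern the analysis is meant to surface.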

Fairness Evaluation Metrics: Quantitative measures used to assess the fairness of ML models. QA helps implement and validate these metrics in the following ways:

- Selection of Fairness Metrics: Choosing appropriate metrics that align with the fairness goals of the model. QA teams collaborate with data scientists and stakeholders to determine the relevant fairness metrics based on the application context. Common fairness metrics include:

  - Demographic Parity: Measures whether the positive prediction rates are similar across different demographic groups.

  - Equal Opportunity: Assesses whether true positive rates are similar across groups.

  - Disparate Impact: Examines the ratio of favorable outcomes for different groups to ensure that one group is not disproportionately disadvantaged.

- Implementation of Fairness Metrics: Integrating fairness metrics into the model evaluation process. QA teams develop automated scripts to compute fairness metrics alongside traditional performance metrics during model validation. This ensures that fairness is evaluated consistently across different models and iterations.

- Reporting and Interpretation: Presenting fairness metrics in a clear and interpretable manner. QA ensures that the results of fairness evaluations are reported transparently, highlighting any disparities or concerns. This may involve visualizing fairness metrics through charts or tables that clearly depict how different groups are impacted by the model.

- Iterative Refinement: Using fairness metrics to guide model improvements. Based on the findings from fairness evaluations, QA teams work with data scientists to refine models or adjust training data. This iterative approach helps mitigate identified biases and enhance the model’s overall fairness.
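The three fairness metrics named above reduce to simple ratios and differences, as the sketch below shows for two groups with binary predictions. The labels and predictions are invented, and the variable names are illustrative rather than taken from any particular fairness library.

```python
def positive_rate(preds):
    """Share of samples that received a positive prediction."""
    return sum(preds) / len(preds)


def true_positive_rate(labels, preds):
    """Share of truly positive samples that were predicted positive."""
    positives = [p for y, p in zip(labels, preds) if y == 1]
    return sum(positives) / len(positives)


# Hypothetical binary labels and predictions for two demographic groups.
group_a = {"labels": [1, 1, 0, 0, 1, 0, 1, 0], "preds": [1, 1, 0, 1, 1, 0, 1, 0]}
group_b = {"labels": [1, 0, 0, 1, 0, 0, 1, 0], "preds": [1, 0, 0, 0, 0, 0, 1, 0]}

rate_a = positive_rate(group_a["preds"])
rate_b = positive_rate(group_b["preds"])

# Demographic parity difference: gap in positive prediction rates.
parity_difference = rate_a - rate_b

# Disparate impact ratio: lower positive rate divided by the higher one.
disparate_impact = min(rate_a, rate_b) / max(rate_a, rate_b)

# Equal opportunity difference: gap in true positive rates.
tpr_a = true_positive_rate(group_a["labels"], group_a["preds"])
tpr_b = true_positive_rate(group_b["labels"], group_b["preds"])
equal_opportunity_difference = tpr_a - tpr_b
```

A commonly cited rule of thumb treats a disparate impact ratio below 0.8 as a signal for closer review, though the right threshold depends on the application and its legal context.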

Monitoring and Maintenance

Model Drift Detection: Model drift is the phenomenon where a model’s performance degrades over time due to changes in the underlying data distributions or relationships that the model was trained on. QA plays a crucial role in detecting and managing model drift through the following activities:

- Establishing Baselines: Setting performance benchmarks based on initial model evaluation metrics. QA teams establish baseline performance metrics (e.g., accuracy, precision, recall) during the model validation phase. These baselines serve as reference points for ongoing monitoring, allowing teams to quickly identify when performance deviates from expected levels.

- Continuous Monitoring: Implementing systems to track model performance over time. QA teams develop automated monitoring systems that regularly evaluate model performance against established baselines. This may involve setting up dashboards that visualize key performance indicators (KPIs) and alerting stakeholders when performance metrics fall below predefined thresholds.

- Statistical Tests for Drift Detection: Using statistical methods to assess changes in data distributions. QA teams apply techniques such as the Kolmogorov-Smirnov test, Chi-square test, or population stability index (PSI) to compare the distribution of incoming data with the original training data. Significant differences can indicate model drift, prompting further investigation.

- Performance Evaluation on New Data: Regularly evaluating the model’s predictions on new incoming data. QA involves assessing the model’s performance on fresh data and comparing it to the performance metrics calculated during the initial validation. A significant drop in metrics may signal the need for retraining or model adjustments.
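As one example of a statistical drift check, the population stability index (PSI) compares the binned distribution of incoming data with the training baseline. The sketch below is a minimal standard-library version; the bin edges, the sample values, and the small floor used for empty bins are assumptions for illustration.

```python
import bisect
import math


def psi(expected, actual, bin_edges):
    """Population stability index between a baseline sample and a new sample."""

    def proportions(values):
        counts = [0] * (len(bin_edges) - 1)
        for v in values:
            # Clamp so values at or beyond the outer edges land in the first or last bin.
            i = min(max(bisect.bisect_right(bin_edges, v) - 1, 0), len(counts) - 1)
            counts[i] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. two production batches.
edges = [0, 20, 40, 60, 80, 100]
training = [10, 15, 30, 35, 45, 50, 55, 65, 70, 90]
stable_batch = [12, 18, 28, 38, 42, 52, 58, 62, 75, 95]
shifted_batch = [70, 72, 75, 81, 85, 88, 90, 92, 95, 99]

psi_stable = psi(training, stable_batch, edges)
psi_shifted = psi(training, shifted_batch, edges)
```

A PSI near zero suggests a stable distribution, while larger values suggest a shift worth investigating; the exact alert thresholds are a convention that each team should set and document.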

Feedback Loops: Mechanisms that allow organizations to gather information on model performance from end users or systems, providing insights for continuous improvement. QA enhances feedback loops through the following methods:

- User Feedback Collection: Gathering insights from users regarding model predictions. QA teams implement mechanisms to collect user feedback on model outputs, such as confidence ratings, correction of incorrect predictions, or user satisfaction surveys. This feedback provides valuable information on model accuracy and areas for improvement.

- Incorporating Feedback into Model Updates: Using collected feedback to inform model retraining or adjustments. QA teams analyze user feedback to identify patterns in model errors or areas where the model consistently underperforms. This feedback is then used to inform the data collection process for retraining the model or fine-tuning existing parameters.

- Closed-Loop Systems: Creating systems that automatically integrate feedback into the model lifecycle. QA processes may include the development of automated pipelines that use incoming feedback to update datasets, retrain models, and redeploy improved versions. This ensures that the model continually evolves based on real-world performance.

- Monitoring User Interactions: Tracking how users interact with the model and its predictions. QA teams analyze user interaction data to assess how users respond to model outputs. For instance, if users frequently override model recommendations, it may indicate that the model needs refinement. Monitoring these interactions provides insights into user behavior and expectations.
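Monitoring how often users override model recommendations can be sketched with a sliding window. The `OverrideMonitor` class, its window size, and its alert threshold are hypothetical and would need tuning for a real application.

```python
from collections import deque


class OverrideMonitor:
    """Track how often users override model recommendations in a sliding window."""

    def __init__(self, window_size=100, alert_threshold=0.30):
        # Both defaults are hypothetical and would be tuned per application.
        self.events = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, model_output, user_choice):
        """Store True when the user chose something other than the model output."""
        self.events.append(model_output != user_choice)

    @property
    def override_rate(self):
        return sum(self.events) / len(self.events) if self.events else 0.0

    def needs_review(self):
        return self.override_rate > self.alert_threshold


# Hypothetical interaction log: (model recommendation, user's final choice).
monitor = OverrideMonitor(window_size=5)
for model_output, user_choice in [("A", "A"), ("B", "A"), ("A", "A"), ("C", "B"), ("B", "B")]:
    monitor.record(model_output, user_choice)
```

When `monitor.needs_review()` returns true, the overridden cases are natural candidates for error analysis and for inclusion in the next retraining dataset.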

Challenges Involved with QA in ML

QA in machine learning presents unique challenges compared to traditional software development. These challenges arise from the inherent complexity of ML models, their reliance on data, and the evolving nature of their performance.

Data Quality and Quantity

- Data Bias: ML models can inherit biases present in the training data, which can lead to unfair or inaccurate predictions.

- Data Noise: Noisy or corrupted data can degrade model performance and introduce errors.

- Data Drift: Changes in data distribution over time can cause model performance to deteriorate.

- Data Scarcity: Insufficient or imbalanced data can limit the model’s ability to generalize and learn effectively.

Model Complexity

- Black Box Problem: Many ML models, especially deep neural networks, are complex and difficult to understand, making it challenging to interpret their decisions and identify potential issues.

- Overfitting: Models can become too specialized to the training data, which can lead to poor performance on unseen data.

- Underfitting: Models may not be able to capture the underlying patterns in the data, resulting in poor performance.

Model Evaluation and Metrics

- Choosing Appropriate Metrics: Selecting the right metrics to evaluate model performance can be challenging, as different metrics may emphasize different aspects of the model’s behavior.

- Interpretability: It can be difficult to understand how a model arrives at its predictions, which can make it challenging to assess its accuracy and reliability.

Continuous Monitoring and Maintenance

- Model Drift: ML models can degrade over time due to changes in data distribution or environmental factors.

- Retraining: Regularly retraining models to maintain their performance can be resource-intensive and time-consuming.

- Error Analysis: Identifying and addressing the root causes of model errors can be challenging, especially for complex models.

Ethical Considerations

- Bias and Fairness: Ensuring that ML models are fair and unbiased is a critical ethical concern.

- Privacy: Protecting user privacy while training and deploying ML models is essential.

- Explainability: Making model decisions understandable and transparent is important for building trust and accountability.

Conclusion

In an era where data reigns supreme, machine learning (ML) has emerged as a transformative force across industries like healthcare, finance, retail, and automotive. As organizations harness the power of ML to unlock insights, streamline operations, and enhance decision-making, the stakes have gotten higher.

However, with great power comes great responsibility. Ensuring the reliability and effectiveness of ML models is paramount. This is where quality assurance (QA) steps into the spotlight. QA in machine learning is not just a safety net. It’s a critical framework that ensures models perform well, adhere to ethical standards, and deliver consistent results.
