What Are Edge Test Cases & How AI Helps

|

|

When we work on software quality, we tend to cover the scenarios that are obvious and right in front of us. Yet, there are instances when the user may use the software in a way that tests its limits. For example, a user might want to checkout 100 items through your software when only a 50 can be handled at a time. This is an instance of the user testing an edge case in your software.

| Key Takeaways: |

|---|

|

What Are Edge Test Cases?

An edge case is an extreme scenario in software operation that lies at the extreme boundaries of what is supported and expected.

It is a behavior or a condition that is rare, unusual, or outside of normal operating conditions. Edge test cases are specially designed test cases that verify the system’s behavior under extreme or boundary conditions.

- The system supports a password of up to 128 characters, but the user enters a 512-character password. This may cause the app to crash or incorrectly reject input.

- Another example is of a shopping cart. A customer may try to add 0 or 500 units of a product to the shopping cart. Although both values are technically correct, they may expose performance issues or logical errors.

Thus, if you ignore edge cases, it may lead to software crashes, security risks, data loss, or even product failure.

Why “edge”?

The term “edge” emanates from the idea of pushing one variable or condition to its minimum or maximum (or some unusual combination). Applying it to software is like sitting at the edge of what the software was designed to handle.

For example, if a function accepts values from 0 to 100, testing with ‘-1’ or ‘101’ is pushing an edge. For example, a shopping cart allows you to add a minimum of 1 and a maximum of 50 products at a time. You can push the software running the shopping cart to its edge by trying to add zero products or 55 products.

Thus, edge cases constantly challenge software with unusual scenarios that fall outside normal use.

Examples of Edge Test Cases

-

Extreme Input Values: Providing extreme inputs, such as huge numbers or excessively long usernames, can trigger software errors or even cause system crashes if not handled correctly.For an input field that accepts numeric values from 1 to 10, the edge test case will include -1, 0, 1, 10, 11, 100.

-

Date Handling: For data containing dates, an edge test case, such as February 29 in a leap year or the 31st of a month with 30 days, can be used to test the software for extreme cases.

-

Concurrent Operations: Operations such as two users simultaneously deleting the same record or accessing a register value at the same time for update constitute edge cases.

-

System Under Limits: Scenarios such as large file uploads, slow networks, or low memory can push the system to its limits. If not adequately handled, it may freeze and ultimately cause the system to crash.

-

Device-specific Bugs: These bugs are exclusive to specific devices, such as macOS machines. For example, a low battery state on macOS may highlight the importance of cross-device testing.

- Special Character Input: A social media platform usually handles special characters. However, if they are not handled properly, then entering special characters in a social media post might break functionalities.

These examples highlighting potential scenarios show the importance of testing edge cases to strengthen software reliability, enhance user experience, and reduce the risk of unexpected failures.

Why Are Edge Test Cases Important?

Testing software for edge cases is essential for the following reasons:

- Reliability and Robustness: With the help of edge cases, you can reveal flaws or vulnerabilities that are overlooked by standard tests. If these are missed, the software might behave abnormally, crash, or produce incorrect output under unusual but real-world conditions. By identifying edge cases, you ensure that the system handles varied inputs and produces the expected outputs, thereby guaranteeing its robustness and reliability.

- User Experience: Even if one in 100 users reaches the edge scenario, the impact can be disproportionate, damaging customer trust and reputation. Therefore, edge cases are critical for enhancing user experience by minimizing unexpected errors or crashes during software usage.

- Security and Compliance: Edge cases may be exploited for malicious intents and jeopardize security. Scenarios such as unhandled input, extreme values, and resource exhaustion are all typical ones that can compromise the system’s security if left unaddressed.

- Technical Debt Reduction: Addressing edge cases early or at least before deployment helps avoid accumulating hidden defects and reduces maintenance costs.

Edge Case vs. Corner Case vs. Base Case

Here is a distinction between an edge case, a corner case, and a base case.

- Base Case: This is a typical, expected scenario that application users face, such as standard inputs or typical usage of components.

- Edge Case: This refers to an input or condition at one boundary or when the application is pushed to its extreme, such as the maximum value, minimum value, or unusual user behavior.

- Corner Case: Many extreme inputs or conditions co-occur, such as maximum values across many variables combined.

How to Identify Edge Cases in Testing?

Identifying edge test cases effectively takes a methodical approach. It involves creative thinking that extends beyond normal, routine operational scenarios. In other words, it is not enough just to guess; you will want techniques and frameworks.

Focusing on edge cases during development results in a more comprehensive approach. Identifying edge cases involves elements such as test planning, creative thinking, and systematic testing, along with specific steps including identifying edge cases, developing test scenarios, and analyzing the results.

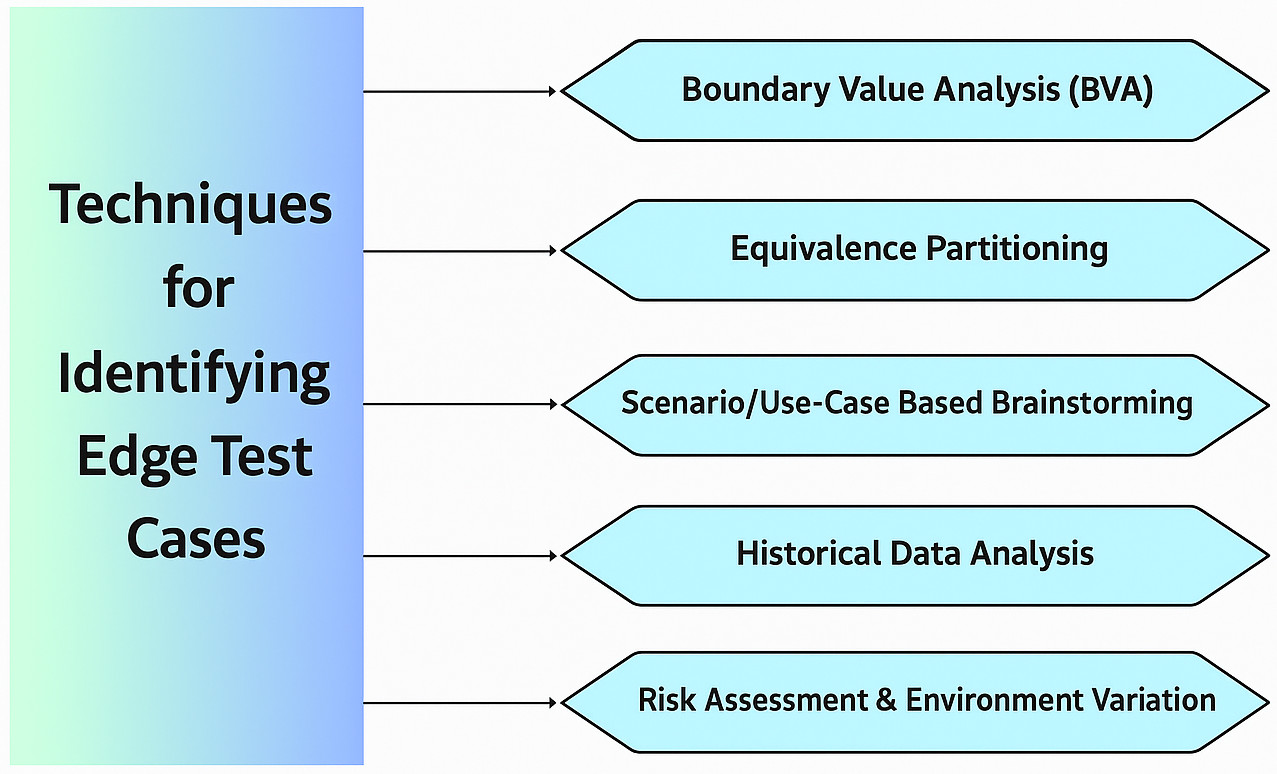

Techniques for Identifying Edge Test Cases

- Boundary Value Analysis (BVA): This is a classic technique of identifying edge cases. In this technique, focus is on examining the input value and values are tested Outside, on, and just inside the boundary. In general, this method emphasizes testing extreme values that are just below, at, and just above defined input limits to uncover potential errors. Developers can identify potential, unexpected issues occurring at the limits of input ranges by rigorously testing edge cases at these boundaries.

- Equivalence Partitioning: In this technique, the data is divided into sets or classes, and one scenario from each set is tested to efficiently identify edge cases. In equivalence partitioning, one representative value from each set is selected for testing, ensuring that various scenarios are accounted for without having to test every possible input. As it focuses on diverse input data groups, equivalence partitioning helps testers cover a wide range of scenarios with fewer tests. This technique ensures that potential edge cases are identified and addressed systematically without wasting much time.

- Scenario/Use-Case Based Brainstorming: This technique utilizes user stories to create realistic test cases that can uncover edge cases by simulating user interactions. As test cases are created to mimic the real-world scenarios, testers can uncover edge cases that are relevant to the actual usage patterns. Using this technique, the application’s functionality is thoroughly tested from the end user’s perspective. The scenario-based technique is particularly effective in identifying edge cases arising from complex user interactions, thereby contributing to the most reliable and robust product.

- Historical Data Analysis: This technique relies on historical production incidents and bug reports. Analyzing this historical data helps identify edge cases that may have originated from areas previously overlooked.

- Risk Assessment & Environment Variation: This technique considers unusual devices, browsers, network conditions, locale/language combinations, and resource constraints (such as battery and memory) to identify potential edge cases.

Read: Test Design Techniques: BVA, State Transition, and more

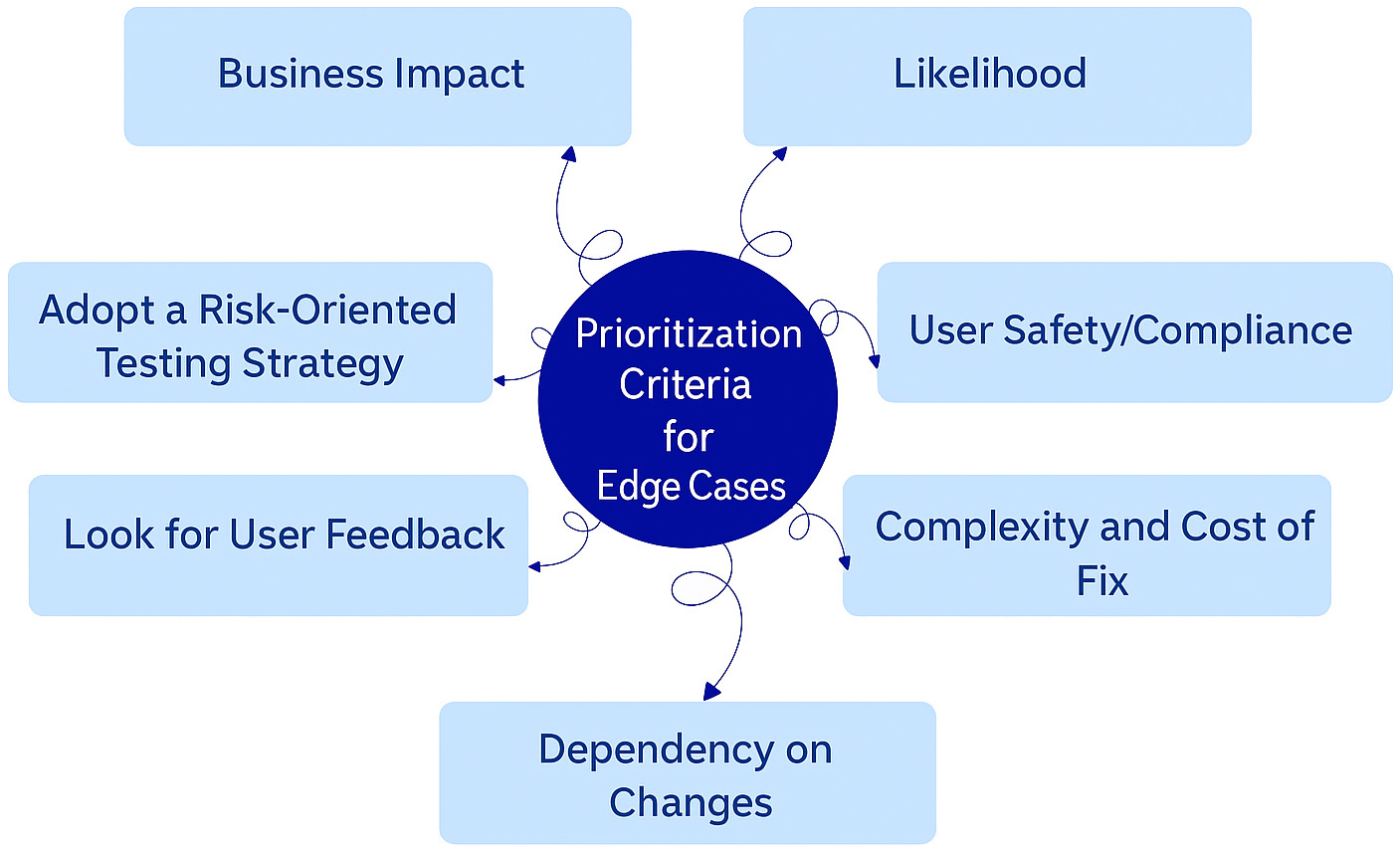

Prioritization of Edge Cases

Edge cases should be prioritized based on their potential user impact, severity of issues they may cause, and frequency of occurrence. Feedback from stakeholders and beta testers should also be considered when prioritizing edge cases.

When prioritizing edge cases, user safety, legal compliance, and business requirements are critical considerations, in addition to the percentage of affected users and the impact on revenue. Apart from these factors, the availability of potential workarounds and the complexity of fixes are also evaluated.

Prioritization Criteria for Edge Cases

In an application, there may be too many edge cases. The following is the criteria based on which the edge cases are commonly prioritized:

-

Business Impact: This criterion identifies edge cases that may cause users to lose trust, be harmful, or generate no revenue. In general, these are those edge cases that have a significant impact on the overall business.For example, a payment gateway that collapses under heavy transaction volumes would have a significant impact on the business. Read: Why Testers Require Domain Knowledge?

-

Likelihood: Even though edge cases are rare by definition, some of them would be more likely to occur in the real world. Hence, this criterion identifies edge cases based on the likelihood of their occurrence.For example, when testing the app with low bandwidth or under distraction interference, edge cases are likely to occur.

-

User Safety/Compliance: In regulated industries, such as healthcare or finance, any failure is critical. These industries must prioritize the edge cases that impact user safety or compliance.A medical app, for example, must handle incorrect or invalid data entries to prevent the dissemination of misinformation.

-

Complexity and Cost of Fix: Edge cases can be prioritized if the cost of a defect is high or if it is more complex.

-

Dependency on Changes: If upcoming code changes might affect many conditions, include related edges that depend on these changes.

-

Look for User Feedback: Based on feedback from end customers, some previously unknown scenarios may emerge in the production cycle that were not detected during the testing phase.For example, a user can complain about a bug they encountered while traveling, multitasking between apps.

- Adopt a Risk-Oriented Testing Strategy: As per this criterion, edge cases are prioritized according to both risk-based approach factors, like probable consequences and likelihood of occurrence. In this, the high-impact scenarios are considered first. Read: Risk-based Testing: A Strategic Approach to QA

Prioritization Framework

Based on the prioritization criteria discussed above, the following table shows the prioritization framework options:

|

|

|

|

Edge Case Testing Approaches

- Manual Exploratory Testing: Human testers explore the application and test unusual conditions to ensure the application works well. This approach is beneficial for UI/UX, device/locale combinations.

- Automated/Scripting: For reproducible edge cases, such as significant value inputs, performance loads, and concurrency, scripts are helpful. However, it is necessary to maintain the scripts.

- Hybrid: This approach combines automated and manual tests. Automated tests are used to test high-priority edge cases, while manual tests are performed for new/unexpected ones.

Challenges

- Infinite Possibilities: Although edge cases are rare, it is challenging to test all of them. Hence, there must be a smart selection.

- Maintenance Burden: Edge tests often involve UI changes or environmental shifts, and are brittle. Maintaining them is a burden.

- Tooling and Environment Setup: Simulating a device/network for testing edge cases is harder, as it requires setting up an extreme scenario.

- Human Imagination: Edge cases often arise from the real-world usage of an application and are difficult to anticipate.

Challenges of Traditional Edge Case Testing

- Coverage Gaps: Testers primarily focus on happy paths, time/budget constraints, and unknown conditions, which are often constrained by predefined inputs and conventional assumptions. Hence, many edge cases are simply missing from test suites.

- Time and Resource Constraints: Writing specialized edge-case test scenarios is a time-consuming process. While manual testing slows down the release, automated scripts need effort to build and maintain.

- Predictability and Re-use: Edge cases may be unfamiliar and unpredictable, as they may change with the product or environment. Consequently, test scripts may break when UI changes or logic evolves.

- Identification Difficulty: It’s challenging to imagine all extreme or rare conditions. Specific scenarios, like obscure devices, locales, or combination-based scenarios, may not be anticipated until they occur in production.

- Complexity of Real-world Environments: Real-world environments comprise distributed systems, microservices, various devices, networks, locales, and integration points; the more dimensions there are, the larger the edge surfaces. The complexity of real-world environments poses a challenge when it comes to handling edge cases.

- Maintenance and Brittleness: Edge cases mostly rely on specific conditions. Hence, when a product changes, it may fail or need an upgrade. This makes them brittle and difficult to maintain.

How AI Can Help with Edge Test Cases

So far, we have discussed edge cases in a traditional setup. You should be aware by now that the failure of a single edge-case scenario will result in significant business disruption, financial loss, and reputational damage.

Test automation has improved baseline performance and reliability, but predefined inputs and conventional assumptions limit most test suites. Due to these limitations, systems remain vulnerable to unexpected behaviors, especially under rare, unpredictable, or complex scenarios.

Edge cases, which are often excluded from traditional test coverage, are a persistent blind spot in quality assurance. Although they are rare, it does not mean they have no impact. Instead, it underlines the need to address them quickly and systematically.

Artificial intelligence (AI) is emerging as a strategic partner in this context. It augments traditional testing with machine learning (ML)-driven techniques, helping organizations to enhance their test coverage, identify defects, and ensure software performs reliably not only in normal cases but even in adverse, extreme, or unusual scenarios.

AI, when applied thoughtfully, can advance how teams generate, prioritize, execute, and maintain edge test cases.

How AI can help

-

Intelligent Test Case Generation: AI can analyze requirements and code to dynamically generate a broader range of tests for extreme or unusual scenarios, including those that are not immediately obvious to human testers. The scenarios may include:

- Entering exceptionally long queries or massive data sets.

- Simulating extreme network conditions, such as fluctuating network or low device storage conditions.

- Testing rapid, simultaneous clicks or complex sequences of actions.

- Identifying Blind Spots: AI can analyze system behavior through documents, user stories, code, and design files, such as Figma mockups, to understand system logic and data flows. This helps AI identify patterns that deviate from the norm (potential gaps) and recognize boundary conditions (minimum/maximum values, extreme input combinations), suggesting potential edge cases that would be difficult for humans to detect.

- Automating Execution and Managing Variables: AI models consistently execute tests without fatigue, bias, or error, and can manage complex environment variables (like different user settings, languages, or payment gateways) to create more realistic scenarios.

- Improving Resilience: For AI-driven applications, AI generates synthetic data to simulate challenging conditions, testing the model’s limits and making it more resilient to real-world variations.

- Adaptive Fuzz Testing: AI generates invalid or unexpected input data and observes the system’s reaction, thus enhancing traditional fuzz testing. By observing how the system handles these unusual inputs, it adapts subsequent test generation to focus on sensitive areas such as silent failures or unhandled exceptions.

- Learning from Real-World Anomalies: AI models monitor production logs and telemetry data to identify behavior that deviates from the norm. These real-world anomalies are then converted into specific, AI-generated test cases and injected into the QA process. This way, testing is based on actual user behavior and potential failure points.

- Risk-Based Prioritization: AI models analyze historical defect data and code changes to the areas where defects are most likely to emerge. QA teams can then prioritize their testing efforts on these high-risk areas, identifying critical edge cases that can be addressed early in the development cycle.

- Accelerated and Consistent Execution: AI generates thousands of test cases in minutes, compared to days or weeks manually, and executes them with high consistency and without fatigue or bias. With this speed, even the extensive edge cases can be run frequently.

AI Methodologies for Edge Case Testing

AI-based approaches to edge case testing dynamically generate test scenarios based on system behavior and data insights, unlike traditional testing. AI uses data and models to explore various combinations, anomalous inputs, and system behaviors that deviate from expected norms.

Here are some of the AI methodologies used for edge case testing:

AI-driven Test Case Generation

- Natural language processing (NLP) is used to parse requirement documents and produce test scenarios for both standard and boundary conditions.

- Machine learning (ML) is used to learn from past defects and usage data, identifying patterns of where edge failures have occurred and proposing new test cases.

AI-based Risk Prediction and Prioritization

AI models analyze past defects, usage patterns, and code change data to identify modules or components that are at higher risk and more likely to experience edge case failures. The testing team can then focus more on these areas.

Self-healing Test Automation and Maintenance

Edge test cases are brittle in nature and change when UI or logic evolves. AI-powered automation tools can adapt to changes; they can automatically update scripts or re-map locators when UI changes occur. This reduces the need for maintenance of edge-case automation.

Test Data Generation for Extreme Conditions

AI generates synthetic data sets that represent extreme values, abnormal inputs, unusual combinations, rare locales, large volume, high concurrency, etc., enabling better coverage of edge cases.

Exploratory/Unsupervised Edge Detection

A few emerging AI tools can scan an application (or logs) and autonomously identify anomalous or extreme behaviors or usage patterns that represent edge cases. AI models then trigger tests or raise alerts for these scenarios.

Benefits of Using AI for Edge Test Cases

- Improved Coverage: AI covers more edge and boundary scenarios than human/manual testers.

- Faster Test Case Generation and Execution: AI tools reduce manual effort significantly by generating and executing test suites more quickly.

- Better Prioritization: By focusing its resources on areas where defects are most likely to occur, AI facilitates more effective prioritization of edge cases.

- Reduced Maintenance Overhead: With AI’s self-healing ability and adaptive test scripts, less time is spent fixing broken tests instead of finding bugs.

- Enhanced Reliability and Risk Mitigation: AI models cover a wider range of edge conditions, reducing the risk of unexpected failures, which is especially important in mission-critical systems.

- Insights and Analytics: AI provides insights into areas where edge failures are likely, helping teams improve architecture, robustness, and design earlier.

Practical Use-Cases of AI in Edge Case Testing

- An E-commerce System: AI models analyze user behavior logs and find that on rare occasions when a customer applies both coupon A and coupon B (unsupported combo), the system enters an invalid state. This is an extreme condition, and AI generates test cases for these coupon combinations and payment-gateway concurrency.

- A Mobile App: AI generates test scenarios for extreme scenarios such as low battery, network-disconnect in the midst of syncing, abrupt locale-change during data entry, etc.

- An API/Microservice System: AI assesses code changes, metrics, and historical data or defects, to predict issues in microservices such as “file upload” that has risk for large file sizes and network dropouts. It then generates edge test cases (huge file, interrupted upload, and time-out) and triggers the automated testing pipeline accordingly.

Considerations & Implementation Steps

- Data Requirements: AI can generate meaningful edge cases only when you have fed it proper data, such as historical usage data, bug/incident logs, and test-case databases.

- Tools Selection: Choose AI tools/platforms that support test-case generation, test-data generation, and self-healing scripts. Tools like testRigor can generate self-healing test cases based on an English-like description.

- Integration in Pipeline: Your CI/CD pipelines and DevOps workflow should be properly integrated with AI to trigger edge case tests early. This way, there will be early feedback, and issues can be fixed without delay.

- Human-in-the-Loop: Although AI automates the testing process, it still needs human testers. Testers need to review AI-generated test cases, validate, refine, and interpret the results to evaluate the correctness of edge case testing.

- Governance & Traceability: In regulated industries, edge testing requires audit trails, explainability (why a particular scenario was picked), and coverage reports.

- Continuous Improvement: As systems evolve, AI models must retrain or update, data must be refreshed, and edge case definitions must be updated.

Limitations

- In case the training data is weak or biased, the edge test cases generated by AI may be irrelevant or low-value.

- There will still be some scenarios where human intuition is required.

- Setting up an AI-driven testing infrastructure has a cost overhead.

Best Practices for AI-Enhanced Edge Case Testing

- Start Small with a Pilot Project: Select a module with some complexity, historical defects, and try AI-augmented edge test generation.

- Define Edge Case Taxonomy: Prepare a shared report of edge scenarios for your organization, consisting of input extremes, resource limits, and device/locale extremes.

- Ensure Quality of Training Data: Use defect logs, test history, and usage analytics to improve the quality of training data. After all, the better the data, the better the AI suggestions.

- Combine Manual + AI: Prioritize and separate the testing approaches. Use AI for bulk generation and prioritization while assigning exploratory, novel, and UX-centric edge cases to human testers.

- Integrate with QA/DevOps Pipeline: integrate edge-case test generation, execution, and maintenance into your workflow.

- Track Metrics: Track continuously the coverage of edge cases, defect escape rate (especially from edge scenarios), maintenance cost of the test suite, and cycle time.

- Governance & Traceability: Maintain a record of audit logs of AI decisions, test-case selections, and prioritization rationale. This is especially essential in regulated industries such as healthcare and finance.

- Regular Review and Maintenance: Update edge case taxonomy and AI models regularly so that the latest edge cases and AI models are always relevant.

Conclusion

Edge test cases often fall into the blind spot of many test suites, yet ignoring them can lead to serious user impact, instability, and security issues. While traditional methods of identifying and testing edge cases remain essential, they are increasingly challenged by the complexity, pace, and scale of modern software systems.

AI can help generate edge-case test scenarios from specifications and data, prioritize where to apply them based on risk, maintain tests as the system evolves, and free testers to focus on novel exploratory work rather than solely on bulk scenario writing.

However, successful implementation of AI in edge case testing requires good data, tool integration, human oversight, governance, and continual refinement. When all factors are set, AI-backed edge-case testing can help teams move from “we hope nothing breaks in rare scenarios” to “we have structured and scalable coverage of the edges”.

| Achieve More Than 90% Test Automation | |

| Step by Step Walkthroughs and Help | |

| 14 Day Free Trial, Cancel Anytime |