What is a Neural Network? The Ultimate Guide for Beginners


“You don’t train a neural network. You let it struggle, fail, adapt, and repeat—until its failures look like intelligence” – Emmimal P. Alexander.

Neural networks have attracted more attention in the past few years than any other technology in the fast-developing field of artificial intelligence. They are considered the foundation of modern machine learning, powering everything from facial recognition and voice assistants to self-driving cars and financial forecasting.

But what exactly is a neural network, and what makes it so powerful?


This guide explains in detail what neural networks are, how they work, their common architectures, and how they differ from deep learning. We’ll also look at how neural networks relate to software testing and why AI-powered testing tools matter for keeping AI-driven applications dependable.

What is a Neural Network?

Neural networks are computational models inspired by the structure of the human brain. A neural network is built from layers of nodes, also called neurons, each of which performs a simple mathematical function. By adjusting the weights of the connections between nodes during training, the network learns to identify complex relationships in data.

Such a model is also called an artificial neural network (ANN), loosely modeled on how biological neurons communicate through synapses. Neural networks are excellent tools for pattern recognition and prediction in machine learning.

How Neural Networks Work

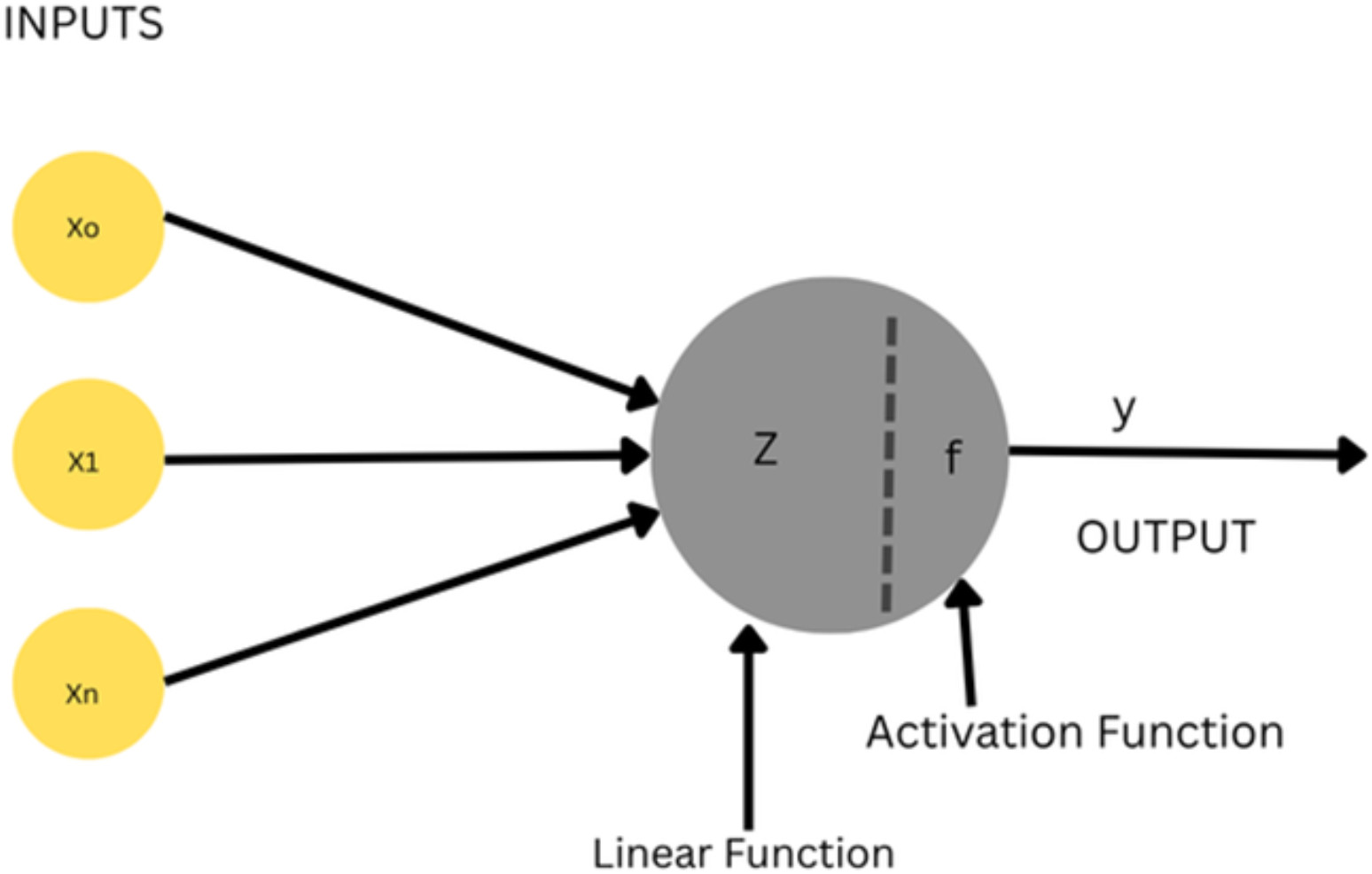

The layered structure of a neural network loosely mimics the way the human brain works. This structure has three main components: an input layer, one or more hidden layers, and an output layer. The neurons (also called nodes) that make up these layers communicate with one another through weighted connections. Raw data, such as numerical values from a dataset or the pixel values of an image, is fed into the input layer, which passes it on to the hidden layers.

In each hidden-layer neuron, every input is multiplied by the weight of its connection, the results are summed, a bias term is added, and the total is passed through an activation function. The weights are usually initialized randomly and then adjusted during training. The activation function adds non-linearity, which lets the network learn complex relationships from complex and unstructured data that a purely linear model could not capture. Popular activation functions include tanh, sigmoid, and ReLU (Rectified Linear Unit).
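To make the per-neuron computation concrete, here is a minimal Python sketch of a single neuron using each of the three activation functions named above. The inputs, weights, and bias are made-up values for illustration; in a real network the weights and bias would be learned.

```python
import math

# The three activation functions mentioned above.
def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    return math.tanh(z)

def relu(z: float) -> float:
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One neuron: weighted sum of the inputs, plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical inputs, weights, and bias, chosen only for illustration.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.1]
b = 0.1

for f in (sigmoid, tanh, relu):
    print(f.__name__, round(neuron(x, w, b, f), 4))
```

Here the weighted sum plus bias is -0.2, so ReLU outputs 0 while sigmoid and tanh still pass along a small, non-zero signal.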

This process of passing inputs forward through the network to produce a prediction is called forward propagation. Once the network produces an output, a loss function measures the error, that is, the difference between the actual and the predicted value. Popular loss functions include cross-entropy and mean squared error. The error is then reduced through backpropagation, explained in more detail later in this blog, which works out how much each weight contributed to the error. An optimization algorithm such as Adam or Stochastic Gradient Descent (SGD) then uses that information to update the network’s weights.
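As a rough illustration, the two loss functions mentioned above fit in a few lines of plain Python. This is a sketch rather than a library implementation: cross-entropy is shown in its binary form (labels of 0 or 1, predictions given as probabilities), and the small clipping constant `eps` is an assumption added to avoid taking the log of zero.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (common for regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees exactly 0
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))            # 0.25
print(round(binary_cross_entropy([1, 0], [0.9, 0.1]), 4))    # 0.1054
```

Confident, correct predictions give a small cross-entropy; a confident wrong prediction (say 0.01 for a true label of 1) would be penalized much more heavily.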

By repeating this cycle of forward propagation, loss computation, and backpropagation, neural networks learn from data and gradually improve their predictions. This is how they become more accurate over time at tasks such as speech recognition, natural language understanding, and image classification.

Neural Network Architecture: Layers, Nodes, and Connectivity

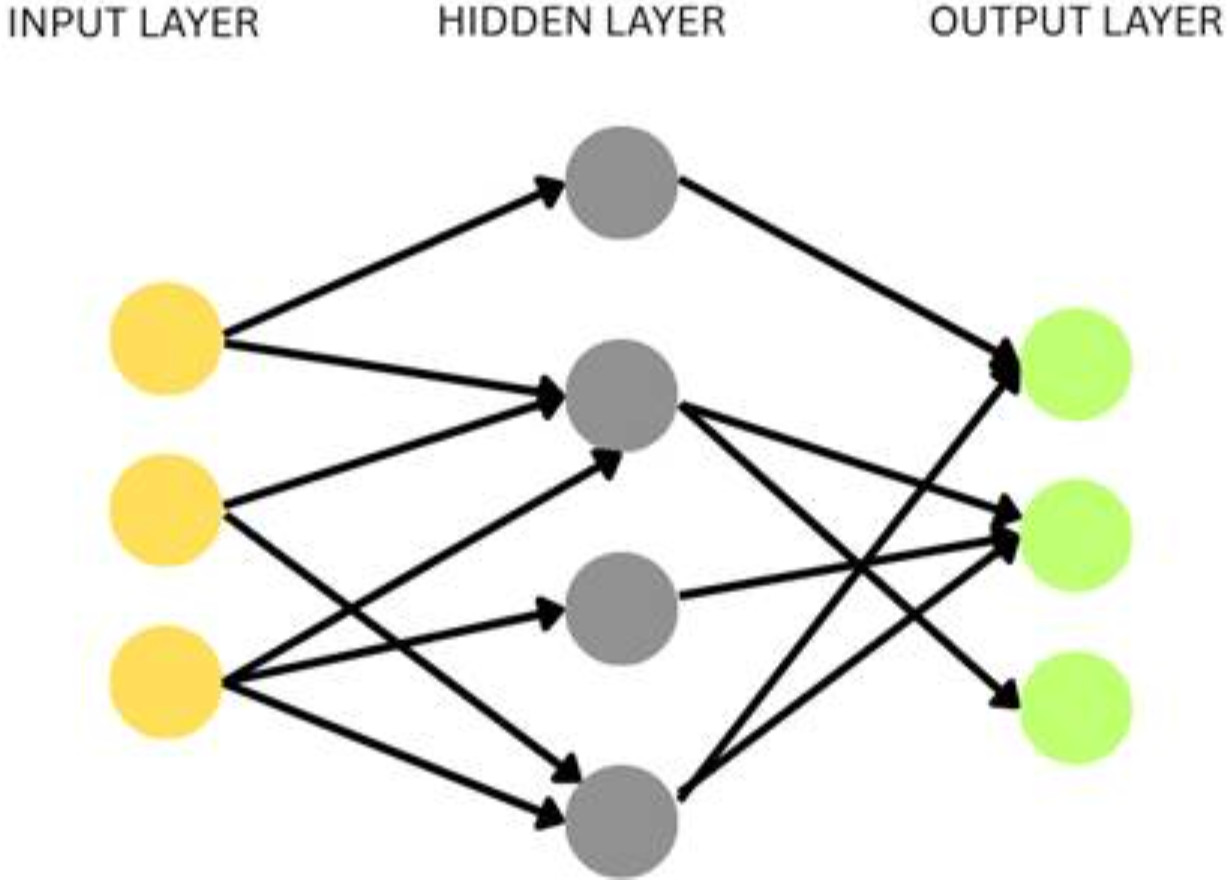

To fully grasp the potential of neural networks, you need to understand their architecture. Every neural network has three main elements: layers, nodes (or neurons), and the connections between them. The architecture starts with the input layer, where raw data enters the system. One or more hidden layers come next, where calculations transform the data step by step and extract useful features. Finally, the output layer produces the network’s prediction.

Each neuron receives input from the previous layer, computes a weighted sum of those inputs, adds a bias, and passes the result through an activation function such as ReLU (Rectified Linear Unit), tanh, or sigmoid. The weights and biases determine the strength of these connections, and adjusting them is how the network adapts and learns from data.

Deep neural networks have multiple hidden layers and increasingly complex connectivity between neurons. Layers may also incorporate techniques such as batch normalization to stabilize learning or dropout for regularization. Architecture design has a major impact on computational efficiency, generalization ability, and model performance.
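Batch normalization can be sketched for a single feature: standardize the values in a mini-batch to zero mean and unit variance, then apply a scale (`gamma`) and a shift (`beta`). This simplified version covers training-time behavior only; real implementations also learn `gamma` and `beta` per feature and keep running statistics for use at inference time.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift.

    Training-time only: real layers learn gamma and beta per feature and keep
    running averages of the mean and variance for use at inference time.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

out = batch_norm([2.0, 4.0, 6.0, 8.0])
print([round(v, 4) for v in out])  # [-1.3416, -0.4472, 0.4472, 1.3416]
```

Keeping each layer’s inputs on a consistent scale like this is what helps stabilize learning; `eps` simply guards against division by zero when a batch has no variance.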

Neural Network Types: Exploring Common Architectures

There are multiple types of neural networks, each suited to particular tasks and kinds of data. Knowing the main types makes it easier to select the right architecture for your application.

Feed Forward Neural Network

A feed forward neural network is the most basic and straightforward type of neural network. Its structure has no cycles or loops: data flows in one direction, from the input layer through the hidden layers to the output layer. These networks form the basis for more complex models and are often used for simpler classification and regression tasks.
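The one-directional flow of a feed forward network can be sketched in plain Python by chaining fully connected layers, each computing a weighted sum plus bias followed by an activation. The layer sizes, the uniform random initialization, and the choice of ReLU for hidden layers with a linear output are illustrative assumptions, not requirements.

```python
import random

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: every output neuron sees every input."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Hypothetical initializer: small uniform random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def feed_forward(x, layers):
    """Pass x through each layer in order, with no loops or feedback connections."""
    for i, (weights, biases) in enumerate(layers):
        is_output = i == len(layers) - 1
        x = dense(x, weights, biases, identity if is_output else relu)
    return x

rng = random.Random(0)
# 3 inputs -> 4 hidden -> 4 hidden -> 1 output
layers = [init_layer(3, 4, rng), init_layer(4, 4, rng), init_layer(4, 1, rng)]
print(feed_forward([1.0, 0.5, -0.2], layers))  # a list containing one prediction
```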

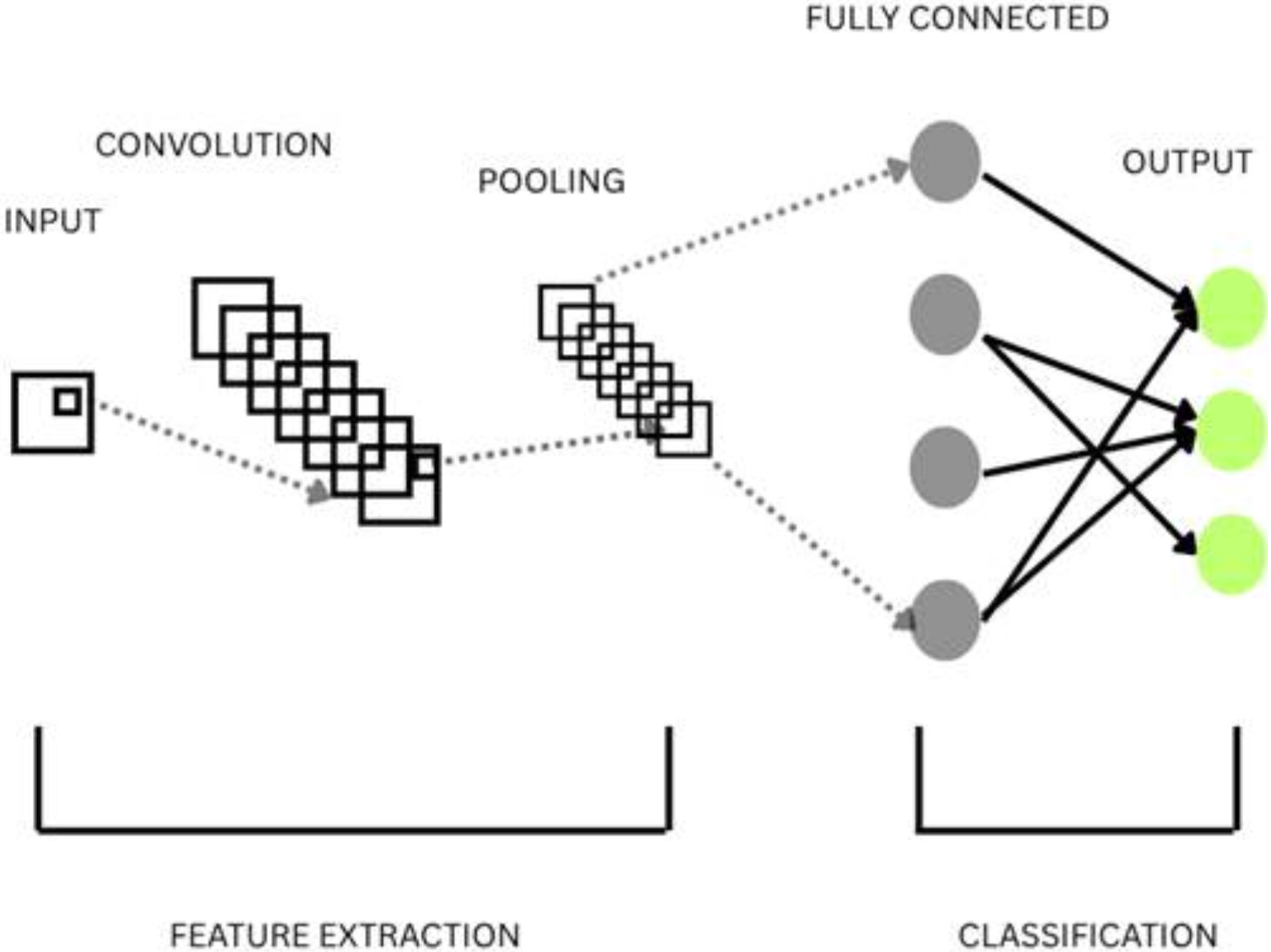

Convolutional Neural Network (CNN)

CNNs are designed to analyze and interpret grid-like data, such as images. They use convolutional layers with filters (kernels) that slide across the input to detect patterns and spatial hierarchies. As data moves through the layers, these filters capture edges, textures, shapes, and progressively higher-level features. CNNs are widely used in computer vision tasks such as facial recognition, object detection, and image classification. Read: Vision AI and how testRigor uses it.
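The sliding-filter idea can be sketched as a small 2D convolution in plain Python (no padding, stride 1). The 4x4 “image” and the 2x2 vertical-edge filter below are hand-picked for illustration; a real CNN learns its filter values during training.

```python
def conv2d(image, kernel):
    """Slide a kernel over a 2D grid (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the patch currently under the kernel.
            row.append(sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            ))
        out.append(row)
    return out

# A tiny image with a vertical edge: dark on the left, bright on the right.
image = [[0, 0, 1, 1] for _ in range(4)]
# A hand-picked vertical-edge filter (a trained CNN would learn its own).
kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter responds only in the column where the edge sits, which is the kind of local pattern early CNN layers pick up before deeper layers combine them into shapes.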

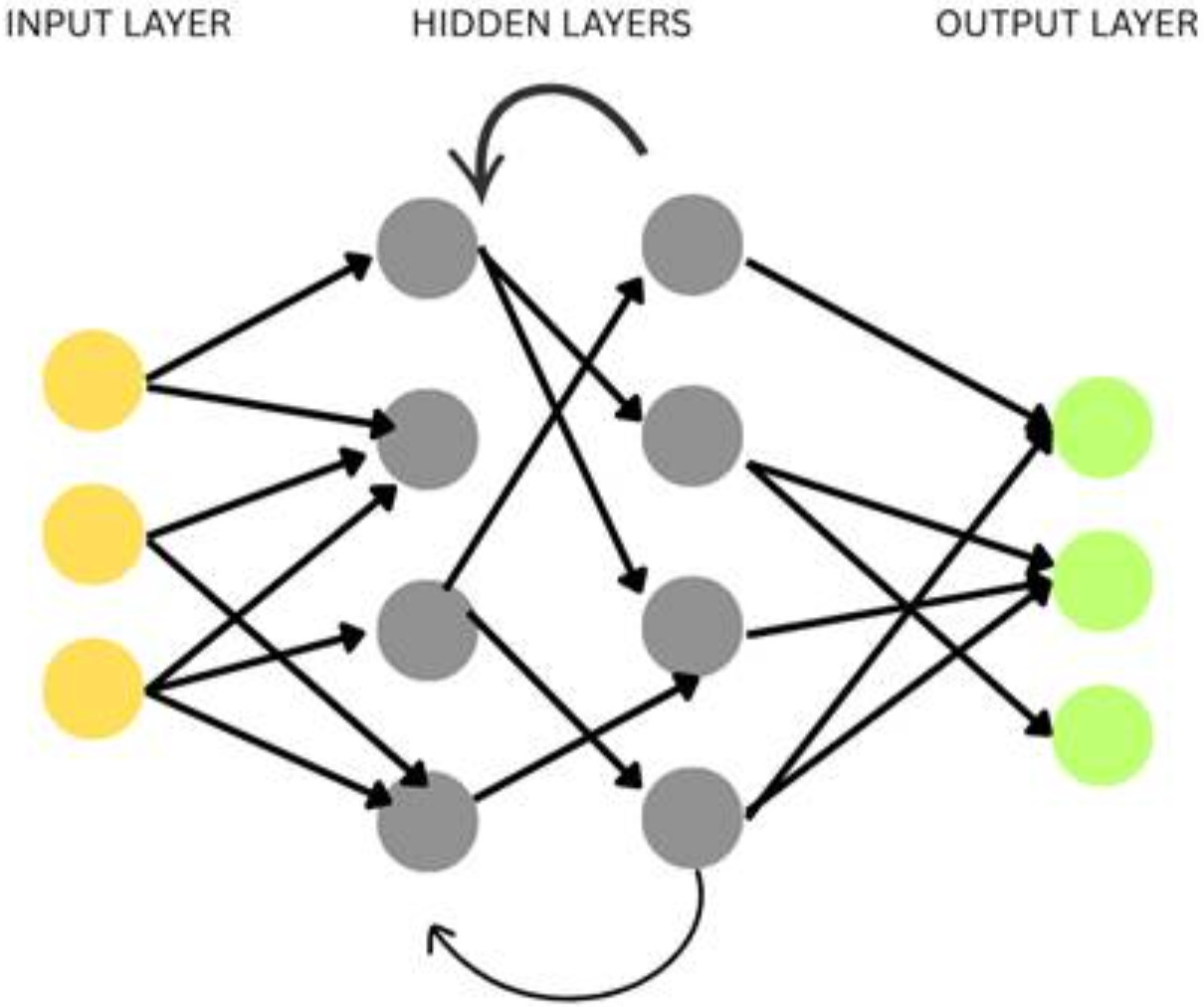

Recurrent Neural Network (RNN)

Unlike feed forward networks, recurrent neural networks use loops to carry information from one step of a sequence to the next. This makes the architecture well suited to sequential or time-series data, such as text, speech, or financial trends. Variants such as LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) were designed to overcome obstacles like vanishing gradients, which makes RNNs useful for real-world language modeling and translation tasks.

Each of these three architectures (feed forward, convolutional, and recurrent) addresses different data patterns and computational needs, which makes them effective tools across many machine learning domains.
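A minimal sketch of the recurrent loop, assuming a vanilla RNN with a single hidden unit and hand-picked weights (`w_x`, `w_h`, and `b` are hypothetical values). The same weights are applied at every step, and the hidden state carries information from earlier inputs forward, so the output depends on the order of the sequence. LSTM and GRU cells add gating on top of this basic recurrence.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Vanilla RNN with one hidden unit: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same weights are reused at every step; the hidden state h is the
    "memory" that carries information from earlier inputs to later steps.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights; a trained RNN would learn these.
early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
late = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 4) for h in early])
print([round(h, 4) for h in late])
```

In the first sequence the hidden state stays non-zero after the input returns to 0, fading with each step; that fading memory over long sequences is what LSTM and GRU gates are designed to manage.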

Simple Neural Network Example

Imagine a straightforward task: estimating the price of a home based on features such as area, location, and number of rooms. A simple neural network for this task would have:

- Three inputs (the features).

- One hidden layer with a few neurons.

- One output neuron (predicted price).

Even with this simple setup, the network can learn from example data and make increasingly accurate predictions as training proceeds.
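The house-price network described above can be sketched end to end in plain Python: three inputs, one hidden layer of ReLU neurons, and a single linear output, trained with stochastic gradient descent on mean squared error. Everything here is an assumption for illustration: the features are scaled to the range 0 to 1, location is reduced to a single numeric score, and the “true” prices come from a made-up linear rule rather than real housing data.

```python
import random

def train_price_model(data, hidden=4, lr=0.05, epochs=200, seed=0):
    """3 inputs -> `hidden` ReLU neurons -> 1 linear output.

    Trained with per-example SGD on squared error; the gradients are computed
    by backpropagation written out by hand.
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(w1, b1)]
        h = [max(0.0, zj) for zj in z]  # ReLU hidden layer
        return z, h, sum(w * hj for w, hj in zip(w2, h)) + b2

    def mse():
        return sum((forward(x)[2] - y) ** 2 for x, y in data) / len(data)

    history = [mse()]
    for _ in range(epochs):
        for x, y in data:
            z, h, y_hat = forward(x)
            d_out = 2 * (y_hat - y)  # dLoss/dy_hat for squared error
            for j in range(hidden):
                # Gradient for hidden neuron j, computed before w2[j] changes.
                d_z = d_out * w2[j] * (1.0 if z[j] > 0 else 0.0)
                w2[j] -= lr * d_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * d_z * x[i]
                b1[j] -= lr * d_z
            b2 -= lr * d_out
        history.append(mse())
    return (lambda x: forward(x)[2]), history

# Made-up training data: [area, location score, rooms], each scaled to 0..1.
rng = random.Random(1)
data = []
for _ in range(50):
    x = [rng.random() for _ in range(3)]
    y = 0.5 * x[0] + 0.3 * x[1] + 0.2 * x[2]  # hypothetical "true" price rule
    data.append((x, y))

predict_price, history = train_price_model(data)
print(f"MSE before: {history[0]:.4f}  after: {history[-1]:.4f}")
print(predict_price([0.8, 0.6, 0.5]))  # scaled price estimate for a new home
```

Comparing the error before and after training shows it dropping as the weights are adjusted; the exact numbers depend on the random seeds.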

Neural Network vs. Deep Learning: What’s the Difference?

Deep learning and neural networks are related but distinct machine learning concepts, even though the terms are often used interchangeably. A neural network is a computational framework made of layers of interconnected nodes, or neurons, designed to mimic the way the human brain processes information. This structure lets it detect trends and predict outcomes from data.

Deep learning, by contrast, refers specifically to neural networks with many hidden layers (sometimes dozens or even hundreds) between the input and output. These deep networks can learn increasingly abstract, high-level representations of data, which helps them tackle challenging tasks such as image recognition, game playing (e.g., AlphaGo), and natural language understanding.

Deep learning also benefits from high-performance computing resources and massive datasets, which help it reach higher accuracy and reduce the need for manual feature engineering. Deep learning models learn feature representations directly from data, whereas conventional neural networks may require hand-crafted features.

Put simply, not all neural networks are deep learning models, but all deep learning models are neural networks. Deep learning extends the capabilities and scope of conventional neural networks, making them well suited to large-scale problems with large datasets and high-dimensional inputs.

Learning Through Errors with Backpropagation

Backpropagation is a fundamental idea in neural network training and the foundation of supervised learning in modern deep neural networks. The algorithm determines how much each weight in the network contributed to the overall prediction error. The network then uses this information to update its weights in a way that reduces that error.

The process begins after forward propagation, when a loss function compares the network’s output with the target output. The resulting error is then propagated backward through the network, which is where the term “backpropagation” comes from. Along the way, a gradient (the partial derivative of the loss) is calculated for each weight, applying the chain rule layer by layer, to measure how much a small change in that weight would affect the overall error.
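The gradient calculation can be illustrated on a single sigmoid neuron with a squared-error loss. The sketch below applies the chain rule by hand and then checks the result against a finite-difference estimate (nudging each weight slightly and measuring the change in loss), a common way to sanity-check a backpropagation implementation. The weights, input, and target are hypothetical values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    """Single neuron: weighted sum plus bias, then sigmoid."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss(w, b, x, y):
    """Squared error on one example."""
    return (forward(w, b, x) - y) ** 2

def analytic_grad(w, b, x, y):
    """Backpropagation by hand: chain rule from the loss back to each weight."""
    a = forward(w, b, x)
    dL_da = 2 * (a - y)                 # derivative of (a - y)^2
    da_dz = a * (1 - a)                 # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    grad_w = [dL_dz * xi for xi in x]   # dz/dw_i = x_i
    grad_b = dL_dz                      # dz/db = 1
    return grad_w, grad_b

def numerical_grad_w(w, b, x, y, h=1e-6):
    """Central finite differences: nudge each weight up and down, measure the loss change."""
    grads = []
    for i in range(len(w)):
        w_up = list(w)
        w_up[i] += h
        w_down = list(w)
        w_down[i] -= h
        grads.append((loss(w_up, b, x, y) - loss(w_down, b, x, y)) / (2 * h))
    return grads

# Hypothetical weights, bias, input, and target.
w, b, x, y = [0.2, -0.4], 0.1, [1.5, 0.5], 1.0
grad_w, grad_b = analytic_grad(w, b, x, y)
print(grad_w)
print(numerical_grad_w(w, b, x, y))  # should closely match the line above
```

Both gradients are negative here because the neuron’s output is below the target of 1, so increasing either weight would reduce the error.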

To reduce the loss, the network updates its weights using an optimization algorithm such as Adam or stochastic gradient descent (SGD). This process is repeated over multiple epochs (full passes through the training data), incrementally improving the model’s performance.
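To show what an optimizer does with these gradients, here is a sketch of plain gradient descent (the deterministic core of SGD, since this toy has no data to sample) and the standard Adam update rule, both minimizing a toy one-parameter loss f(w) = (w - 3)^2. The toy loss and the hyperparameter values are illustrative assumptions; in a real network the same update is applied to every weight, using gradients from backpropagation on a mini-batch.

```python
import math

def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, which is minimized at w = 3."""
    return 2 * (w - 3)

def gradient_descent(w, lr=0.1, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)  # step against the gradient
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running average of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running average of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

print(round(gradient_descent(0.0), 4), round(adam(0.0), 4))
```

Both reach the minimum near w = 3. Adam’s per-parameter scaling by the squared-gradient average is what makes it less sensitive to the raw size of each gradient.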

Backpropagation is what allows neural networks to learn complex, multi-dimensional mappings between inputs and outputs. Without it, training deep networks would be inefficient and difficult. Efficiently working out how each of thousands or millions of weights affects the error is critical in large-scale architectures such as CNNs and RNNs.

Neural Networks in AI and Machine Learning

Neural networks are central to modern machine learning and artificial intelligence. By enabling machines to learn directly from raw data rather than relying on explicitly written rules, they have transformed traditional systems. Neural networks help AI systems drive cars, identify faces, understand language, and recommend content. In machine learning, they serve as flexible function approximators that can handle noisy datasets, nonlinear patterns, and high-dimensional inputs.

What sets neural networks apart in this field is their ability to generalize: to detect major trends in large, varied datasets and apply them to previously unseen data. Neural networks have pushed the limits of what machines can do, from basic classification tasks to innovative generative models, laying the groundwork for cutting-edge advancements in deep learning.

Neural Network Layers: Building Blocks and Complexity

Each layer of a neural network is responsible for extracting and transforming features from its input. As data moves through successive layers, the network discovers increasingly abstract, high-level representations. Layers are therefore the fundamental building blocks of deep learning models.

The fully connected (dense) layer is the most common kind: each neuron is connected to every neuron in the previous and next layers. Standard feedforward architectures are built from these layers.

Convolutional layers, the core of CNNs, learn spatial hierarchies from data such as images. Because they apply filters to small, local areas of the input, they are computationally efficient and very successful at identifying patterns.

Recurrent layers, such as those used in RNNs, have internal memory that lets the network retain information across a sequence. They are vital for temporal tasks like speech recognition and language modeling.

Pooling layers reduce the spatial size of the data, which helps manage computation and control overfitting. Dropout layers randomly deactivate neurons during training to improve generalization.
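Pooling and dropout are both simple operations. The sketch below shows 2x2 max pooling on a small grid and “inverted” dropout, a common formulation that rescales the surviving values during training so nothing needs to change at inference time. The grid values and the dropout rate of 0.5 are illustrative.

```python
import random

def max_pool2d(grid, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [max(grid[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(grid[0]) - size + 1, size)]
        for i in range(0, len(grid) - size + 1, size)
    ]

def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and scale survivors
    by 1 / (1 - p) so the expected activation is unchanged. At inference, pass through."""
    if not training:
        return list(values)
    return [0.0 if rng.random() < p else v / (1 - p) for v in values]

grid = [[1, 3, 2, 0],
        [4, 2, 1, 5],
        [0, 1, 7, 2],
        [3, 2, 1, 6]]
print(max_pool2d(grid))  # [[4, 5], [3, 7]]
print(dropout([1.0, 2.0, 3.0, 4.0], rng=random.Random(0)))
```

Pooling shrinks the 4x4 grid to 2x2 while keeping the strongest response in each region; dropout’s random zeroing stops the network from relying too heavily on any single neuron.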

As networks get deeper, with more layers stacked on top of one another, they can model extremely complex and subtle patterns. However, deeper networks also have drawbacks, such as vanishing gradients and overfitting. Normalization layers and residual connections can help address these problems.
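Why depth can cause vanishing gradients is easiest to see in a deliberately simplified model: a chain of single-unit sigmoid layers where every layer sees the same pre-activation. Backpropagation multiplies the local derivatives together, and the sigmoid’s derivative is at most 0.25, so the product shrinks quickly. With a residual connection each layer computes h + f(h), whose derivative is 1 + f'(h), so the identity path keeps the gradient from collapsing. This toy setup is an assumption for illustration, not a model of a real network.

```python
import math

def sigmoid_derivative(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # at most 0.25, reached at z = 0

def gradient_through_depth(depth, residual=False, z=0.0, w=1.0):
    """Toy chain of `depth` single-unit layers, each computing f(h) = sigmoid(w * h),
    or h + f(h) with a residual connection. Assumes every layer sees the same
    pre-activation z. Returns the product of local derivatives, which is what
    the chain rule in backpropagation computes for d(output)/d(input)."""
    local = w * sigmoid_derivative(z)  # derivative of sigmoid(w * h) with respect to h
    if residual:
        local = 1.0 + local            # d/dh [h + f(h)] = 1 + f'(h)
    return local ** depth

for depth in (1, 5, 10):
    print(depth, gradient_through_depth(depth), gradient_through_depth(depth, residual=True))
```

In this toy the residual gradient actually grows with depth, a reminder that the identity path addresses vanishing gradients but not every training problem; real architectures typically pair residual connections with normalization and careful initialization.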

Neural Networks in Software Testing: Enter testRigor

Neural networks are driving progress in artificial intelligence, but testing and validation become more complex once they are embedded in software systems. Because AI is data-driven and can behave non-deterministically, testing applications powered by neural networks is challenging. This is where testRigor and other AI-powered test automation tools become invaluable.

Why use testRigor?

testRigor is an AI agent that you can use to test LLMs, chatbots, and more. Read: Top 10 OWASP for LLMs: How to Test? You can also use it to test AI features such as sentiment analysis, positive/negative statement analysis, and true/false statements. Organizations can use testRigor to maintain high software quality while deploying complex neural network models in production. testRigor takes an AI-driven approach to test automation that is well suited to applications built on neural networks:

- No-code Test Authoring: Ideal for teams that need to rapidly test AI features.

- Intelligent Test Adaptation: Automatically adapts to changes and learns UI flows.

- Scalable Across Environments: Able to validate workflows that depend on AI/ML logic along with the interface.

- Test AI using AI: Use testRigor’s intelligence to test AI-based applications. Learn more here: How to use AI to test AI.

The Future of Neural Networks

Neural networks are no longer just a scientific curiosity; they are powerful tools that are transforming industry after industry. They play a clear role in enhancing user experiences and enabling more intelligent automation.

But to quote Uncle Ben, “With great power comes great responsibility.” Ensuring the dependability, transparency, and testability of applications powered by neural networks is vital. Our approach to software testing needs to evolve along with AI, and platforms like testRigor offer a forward-looking response to this new challenge.

Whether you are a developer, a data scientist, or a software tester, building the intelligent systems of the future demands an understanding of neural networks.
