What is Sandwich Testing?

Integration testing is where all of a system's assumptions meet: data formats, timeouts, authentication, ordering, idempotency, race conditions, missing fields, feature flags, and caching. It is also where it becomes clear that a system is really a conversation between many parts.

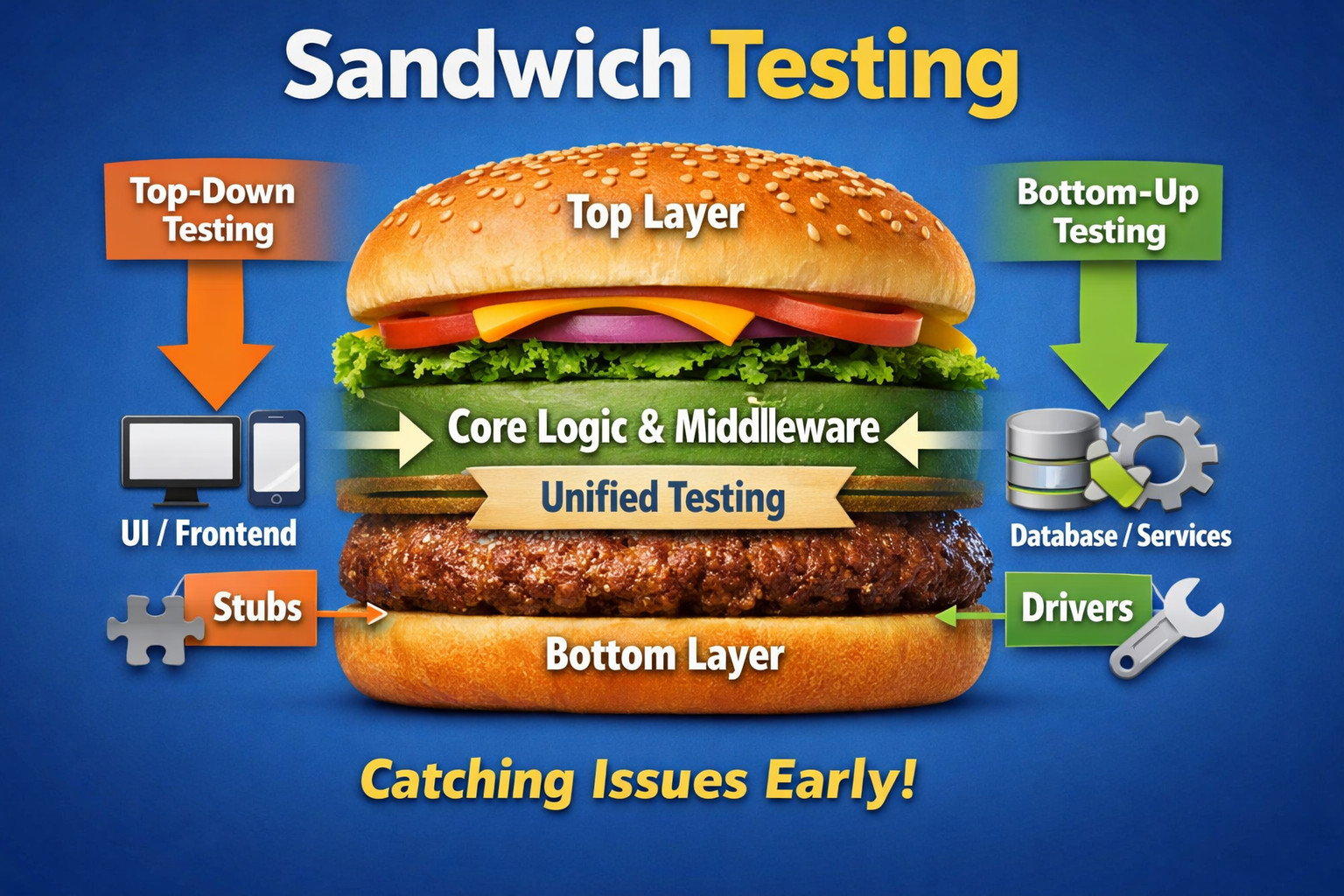

Teams typically approach it either top-down, starting from the UI or entry point and working downward with stubs, or bottom-up, starting from the low-level modules or services and working upward with drivers. Top-down uncovers end-to-end flow issues early but can hide low-level issues behind stubs, while bottom-up strengthens the foundational code piece by piece but may delay finding broken user flows until late.

That’s exactly why sandwich testing exists.

Sandwich testing is a hybrid strategy that mixes the top-down and bottom-up approaches, so you can check user-visible flows and the mechanisms at the core of the system at the same time. The two efforts meet in the middle once the “core” features are available. It is called “sandwich” testing because you test the top slice and the bottom slice together, while integration converges on the middle layer.

About Sandwich Testing or Hybrid Integration Testing

Hybrid integration testing (also called sandwich testing) is a form of integration testing in which the top-down and bottom-up approaches are used simultaneously. The upper layers are integrated downward, with stubs standing in for missing lower layers, while the lower layers are integrated upward, with drivers standing in for missing higher layers. The two integration paths converge at the intermediate (middle) layer. In other words:

- Top-down integration starts at the top layer, the entry point, and drills down through the business logic.

- Bottom-up integration starts from the base/core services and tests upward toward the orchestration layer.

- Sandwich testing runs both streams in parallel until the two branches meet, once the intermediate components are stable enough to be merged.

Why Use Sandwich Testing?

Sandwich testing is not a fancy academic concept; it is simply a way to get early feedback when the full stack is not available. Instead of waiting for the entire system to be integrated before testing it, which exposes problems late and makes them expensive to fix, you verify user-flow logic early with top-down checks and confirm the accuracy of the base data layer early with bottom-up checks.

It also removes dependency bottlenecks in large projects where one team finishes before another. The front-end and API teams can work against stubs, the data/service team can verify its components with drivers, and both can build useful integration test coverage long before a real end-to-end pipeline exists.

Most importantly, it gives balanced risk coverage rather than betting on a single direction. Top-down tends to expose broken routing, auth, request wiring, UI/API mismatches, and orchestration failures, while bottom-up catches data integrity bugs, DB constraints, transaction issues, core business rule defects, and foundational performance or latency problems.

Sandwich Testing vs. Top-Down vs. Bottom-Up

Sandwich testing sits between top-down and bottom-up integration testing by combining both directions, so you don’t have to choose between early user-flow feedback and early core-layer confidence. When you compare all three side by side, the trade-offs become obvious; each approach optimizes for a different risk area and team constraint.

| Testing type | Pros | Cons |
|---|---|---|
| Top-Down Integration Testing | Early validation of user flows and navigation; high-level architecture and wiring issues found early (routing, auth, controller-to-service orchestration) | Deep logic and data issues may be discovered late; stubs can hide real behavior because they’re often “too perfect.” |
| Bottom-Up Integration Testing | Strong validation of data access and internal APIs early; core and middle-layer correctness builds confidence upward | User flows are validated late; drivers may not represent real usage patterns well |
| Sandwich Testing (Hybrid) | Parallel progress from top and bottom; earlier defect discovery across user flows and foundational services; middle-layer integration tends to be cleaner and lower-risk | Requires coordination to maintain both stubs and drivers; needs careful test design to avoid duplicate coverage or confusion |

Sandwich Testing in Layered Systems

Sandwich testing is easiest to illustrate with a five-layer architecture. The presentation layer comprises the web UI, mobile app, desktop client, CLI, and other external clients. Below it, the service/API layer contains controllers, API gateway request validation, and auth middleware, and the business logic layer covers service orchestration, workflow coordination, and the rule engine.

The data layer includes repositories, ORM mappings, DB schemas, and caching. External dependencies include payment processors, email and SMS providers, analytics, and identity providers. In sandwich testing, the top-down stream validates the presentation layer, then the service and API layer, then the business logic layer, using stubs for what is below.

The bottom-up stream validates the data layer, then the business logic layer, then the service and API layer, using drivers for what is above. The middle layer is then integrated to connect the two streams fully. Where the middle sits depends on the architecture: in microservices it is often an orchestration service, while in monoliths it is often the domain services.

Sandwich Testing with Stubs and Drivers

Because sandwich testing is hybrid, it relies on both stubs and drivers to let testing move from the top and the bottom at the same time.

A stub is a simplified replacement for a downstream dependency so the component under test can keep moving, such as stubbing backend API responses for the UI or stubbing payment authorization results for a service.
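
To make the idea concrete, here is a minimal Python sketch of a payment-provider stub. All names (`PaymentStub`, `CheckoutService`, `AuthResult`, `authorize`) are hypothetical and not taken from any real provider SDK; the point is only that the stub returns canned results so the service above it can be exercised before the real integration exists.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class AuthResult:
    """Shape assumed for the payment provider's authorization response."""
    approved: bool
    decline_reason: Optional[str] = None


class PaymentStub:
    """Stub: replaces the downstream payment provider with canned answers."""

    def __init__(self, approve: bool = True, decline_reason: Optional[str] = None):
        self.approve = approve
        self.decline_reason = decline_reason
        self.calls: List[Tuple[str, int]] = []  # recorded so tests can inspect them

    def authorize(self, order_id: str, amount_cents: int) -> AuthResult:
        self.calls.append((order_id, amount_cents))
        if self.approve:
            return AuthResult(approved=True)
        return AuthResult(approved=False, decline_reason=self.decline_reason)


class CheckoutService:
    """Component under test: depends on a payment gateway it does not own."""

    def __init__(self, gateway):
        self.gateway = gateway

    def checkout(self, order_id: str, amount_cents: int) -> str:
        result = self.gateway.authorize(order_id, amount_cents)
        if result.approved:
            return "PAID"
        return f"DECLINED: {result.decline_reason}"


# Exercise the happy path and a decline without any real provider.
approved = CheckoutService(PaymentStub()).checkout("o-1", 4999)
declined_stub = PaymentStub(approve=False, decline_reason="insufficient_funds")
declined = CheckoutService(declined_stub).checkout("o-2", 4999)
print(approved, "|", declined)
```

Recording the calls lets a test check not only what the service returns but also what it sent downstream, which is the part of the contract that matters once the real provider replaces the stub.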

A driver is a temporary caller that invokes a lower layer that does not yet have its real upstream caller, such as calling repository methods before the service layer exists or publishing messages before the producer is ready.
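
A driver works in the opposite direction. The sketch below assumes a hypothetical `OrderRepository` backed by Python's built-in `sqlite3` in-memory database; the `service_layer_driver` function (also hypothetical) plays the role of the not-yet-written service layer by calling the repository the way that service is expected to, including a call that should be rejected by a database constraint.

```python
import sqlite3
from typing import Optional


class OrderRepository:
    """Lower-layer component under test (hypothetical), backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            " id TEXT PRIMARY KEY,"
            " amount_cents INTEGER NOT NULL CHECK (amount_cents > 0),"
            " status TEXT NOT NULL DEFAULT 'NEW')"
        )

    def save(self, order_id: str, amount_cents: int) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO orders (id, amount_cents) VALUES (?, ?)",
                (order_id, amount_cents),
            )

    def find_status(self, order_id: str) -> Optional[str]:
        row = self.conn.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0] if row else None


def service_layer_driver(repo: OrderRepository) -> dict:
    """Driver: temporary caller that imitates the missing service layer."""
    results = {}
    repo.save("o-1", 4999)
    results["saved_status"] = repo.find_status("o-1")  # default applied by the DB
    # Exercise the constraint the real service layer will rely on.
    try:
        repo.save("o-2", 0)
        results["rejected_zero_amount"] = False
    except sqlite3.IntegrityError:
        results["rejected_zero_amount"] = True
    results["missing"] = repo.find_status("o-2")  # nothing persisted after rollback
    return results


report = service_layer_driver(OrderRepository(sqlite3.connect(":memory:")))
print(report)
```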

In sandwich testing, stubs support the top-down stream and drivers support the bottom-up stream, and both are meant to be temporary until real integration replaces them. A solid sandwich testing plan includes an exit strategy that defines when stubs and drivers are removed and what becomes the real integration contract.
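
One way to make the exit strategy visible is to route every seam through a small registry that prefers the real component as soon as it exists and reports which doubles are still in use. `DependencyRegistry` and the seam names below are hypothetical; this is a sketch of the bookkeeping, not a feature of any particular test framework.

```python
from typing import Callable, Dict, List


class DependencyRegistry:
    """Hypothetical helper that tracks which seams still rely on a stub or driver."""

    def __init__(self) -> None:
        self._doubles: Dict[str, Callable[[], object]] = {}
        self._real: Dict[str, Callable[[], object]] = {}

    def register_double(self, name: str, factory: Callable[[], object]) -> None:
        self._doubles[name] = factory

    def register_real(self, name: str, factory: Callable[[], object]) -> None:
        self._real[name] = factory

    def get(self, name: str) -> object:
        # Prefer the real component as soon as it is registered.
        if name in self._real:
            return self._real[name]()
        return self._doubles[name]()

    def remaining_doubles(self) -> List[str]:
        """Seams that have not been switched to a real component yet."""
        return sorted(n for n in self._doubles if n not in self._real)


registry = DependencyRegistry()
registry.register_double("payment", lambda: "payment-stub")
registry.register_double("repository", lambda: "repository-driver")
# The real repository lands: from now on tests get it instead of the double.
registry.register_real("repository", lambda: "real-repository")

print(registry.get("repository"), registry.remaining_doubles())
```

A CI step could warn, or fail the build, when `remaining_doubles()` is still non-empty past an agreed milestone; that milestone is effectively the exit criterion.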

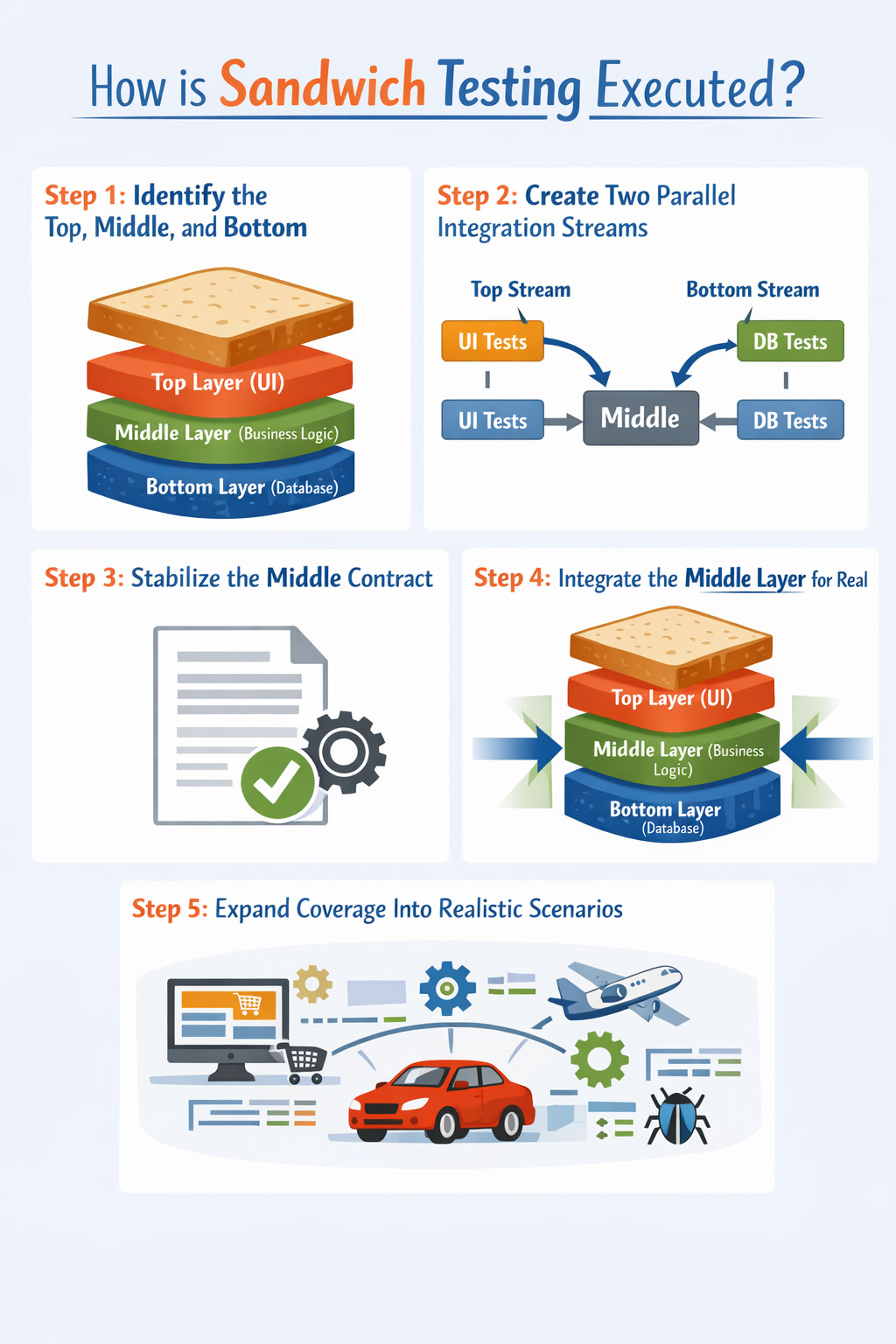

How is Sandwich Testing Executed?

Sandwich testing is executed by running two integration streams in parallel, so you can learn fast without waiting for full-stack readiness. The flow below shows how teams move from partial integrations with stubs and drivers to a real connected system.

Step 1: Identify the Top, Middle, and Bottom

First, define what counts as the top (such as the UI, API endpoints, and controllers), the bottom (such as the database, external adapters, and core utilities), and the middle (such as orchestration, business rules, and coordination). This matters because the middle is where the two streams will eventually meet.
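
One way to make this split explicit is to write the component inventory down and derive which seams need temporary doubles. The inventory and call graph below are hypothetical, and the sketch assumes each component belongs to exactly one tier.

```python
from typing import Dict, List, Tuple

# Hypothetical inventory: component -> tier.
TIERS: Dict[str, str] = {
    "web_ui": "top",
    "orders_controller": "top",
    "checkout_orchestrator": "middle",
    "pricing_rules": "middle",
    "orders_repository": "bottom",
    "payment_adapter": "bottom",
}

# Hypothetical call graph as (caller, callee) pairs.
CALLS: List[Tuple[str, str]] = [
    ("web_ui", "orders_controller"),
    ("orders_controller", "checkout_orchestrator"),
    ("checkout_orchestrator", "pricing_rules"),
    ("checkout_orchestrator", "orders_repository"),
    ("checkout_orchestrator", "payment_adapter"),
]


def plan_doubles(tiers: Dict[str, str],
                 calls: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """List the middle components the top-down stream must stub, and the
    bottom components the bottom-up stream must exercise through drivers."""
    stubs = {callee for caller, callee in calls
             if tiers[caller] == "top" and tiers[callee] == "middle"}
    driven = {callee for caller, callee in calls
              if tiers[caller] == "middle" and tiers[callee] == "bottom"}
    return {"stub_for_top_down": sorted(stubs),
            "drive_for_bottom_up": sorted(driven)}


plan = plan_doubles(TIERS, CALLS)
print(plan)
```

Every entry in the resulting plan is a seam that touches the middle tier, which is why agreeing on the tier boundaries up front matters.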

Step 2: Create Two Parallel Integration Streams

In the top-down stream, integrate from UI or controllers downward and use stubs wherever lower components are missing. In the bottom-up stream, integrate from the DB or services upward and use drivers to invoke these components until real callers exist.
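
The two streams can be expressed as two test suites. The sketch below uses Python's standard `unittest` module; every class and function in it (`OrdersController`, `StubOrchestrator`, `save_order`) is hypothetical. The top-down suite exercises a controller against a stub of the unfinished middle layer, including a downstream timeout, while in the bottom-up suite the test methods act as drivers for a SQLite-backed persistence function.

```python
import sqlite3
import unittest
from typing import Dict, Optional, Tuple


class OrdersController:
    """Top layer (hypothetical): maps a request payload to (status, body)."""

    def __init__(self, orchestrator):
        self.orchestrator = orchestrator

    def post_order(self, payload: Dict) -> Tuple[int, Dict]:
        if "amount_cents" not in payload:
            return 400, {"error": "amount_cents is required"}
        try:
            order_id = self.orchestrator.place_order(payload["amount_cents"])
        except TimeoutError:
            return 504, {"error": "checkout timed out"}
        return 201, {"order_id": order_id}


class StubOrchestrator:
    """Stub for the unfinished middle layer, used only by the top-down stream."""

    def __init__(self, fail_with: Optional[Exception] = None):
        self.fail_with = fail_with

    def place_order(self, amount_cents: int) -> str:
        if self.fail_with is not None:
            raise self.fail_with
        return "o-123"


def save_order(conn: sqlite3.Connection, order_id: str, amount_cents: int) -> None:
    """Bottom layer (hypothetical): persistence with a primary-key constraint."""
    with conn:  # commit on success, roll back on error
        conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, amount_cents))


class TopDownStream(unittest.TestCase):
    def test_created(self):
        response = OrdersController(StubOrchestrator()).post_order({"amount_cents": 500})
        self.assertEqual(response, (201, {"order_id": "o-123"}))

    def test_missing_field(self):
        status, _ = OrdersController(StubOrchestrator()).post_order({})
        self.assertEqual(status, 400)

    def test_downstream_timeout(self):
        controller = OrdersController(StubOrchestrator(fail_with=TimeoutError()))
        status, _ = controller.post_order({"amount_cents": 500})
        self.assertEqual(status, 504)


class BottomUpStream(unittest.TestCase):
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE orders (id TEXT PRIMARY KEY, amount_cents INTEGER NOT NULL)")

    def tearDown(self):
        self.conn.close()

    def test_saves_order(self):
        save_order(self.conn, "o-1", 500)  # the test itself acts as the driver
        row = self.conn.execute(
            "SELECT amount_cents FROM orders WHERE id = ?", ("o-1",)).fetchone()
        self.assertEqual(row, (500,))

    def test_duplicate_id_rejected(self):
        save_order(self.conn, "o-1", 500)
        with self.assertRaises(sqlite3.IntegrityError):
            save_order(self.conn, "o-1", 700)


# Run both streams in one pass without calling sys.exit().
loader = unittest.TestLoader()
suite = unittest.TestSuite([loader.loadTestsFromTestCase(TopDownStream),
                            loader.loadTestsFromTestCase(BottomUpStream)])
result = unittest.TextTestRunner(verbosity=1).run(suite)
```

In a real project the two suites would usually live in separate modules owned by different teams; they are combined here only to keep the sketch self-contained.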

Step 3: Stabilize the Middle Contract

While both streams run, align the data contracts and refine schemas so the two ends converge cleanly. Validate API payload fields, error models, and domain invariants so the middle layer becomes a reliable meeting point.
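
A lightweight way to stabilize the middle contract is to write it down as data and check both stub payloads and captured real payloads against it. The contract below (field names, types, optionality) is a hypothetical example, and the checker is a hand-rolled sketch; in practice a schema language such as JSON Schema or a dedicated contract-testing tool would usually fill this role.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical middle-layer contract: field -> (expected type, required?).
ORDER_CONTRACT: Dict[str, Tuple[type, bool]] = {
    "order_id": (str, True),
    "amount_cents": (int, True),
    "currency": (str, True),
    "coupon_code": (str, False),
}


def contract_violations(payload: Dict[str, Any],
                        contract: Dict[str, Tuple[type, bool]] = ORDER_CONTRACT) -> List[str]:
    """Return readable mismatches between a payload and the contract."""
    problems: List[str] = []
    for field, (expected, required) in contract.items():
        if field not in payload:
            if required:
                problems.append(f"missing required field '{field}'")
            continue
        value = payload[field]
        if value is None and not required:
            continue  # optional fields may be sent as explicit nulls
        # bool is a subclass of int in Python, so reject it for int fields explicitly.
        wrong_type = not isinstance(value, expected) or (
            expected is int and isinstance(value, bool))
        if wrong_type:
            problems.append(f"field '{field}' should be {expected.__name__}, "
                            f"got {type(value).__name__}")
    for field in payload:
        if field not in contract:
            problems.append(f"unexpected field '{field}'")
    return problems


# What the stub returns vs. a (hypothetical) response captured from the real service.
stub_payload = {"order_id": "o-1", "amount_cents": 4999, "currency": "USD"}
real_payload = {"orderId": "o-1", "amount_cents": "49.99", "currency": "USD",
                "coupon_code": None}

print(contract_violations(stub_payload))
print(contract_violations(real_payload))
```

Here the stub passes cleanly while the captured real response fails in three ways (a renamed field, a wrong type, and an unexpected field), which is exactly the kind of drift that lets overly clean stubs produce false confidence.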

Step 4: Integrate the Middle Layer for Real

Once the top and bottom are stable, remove the stubs and drivers and connect the top, middle, and bottom layers. Run integration regression tests end-to-end, but keep the scope focused on integration behavior rather than full system testing.
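
With the middle layer real, the top-level entry point can be exercised through every layer with no doubles left. This sketch wires hypothetical `OrdersController`, `CheckoutOrchestrator`, and `OrderRepository` classes together over an in-memory SQLite database (a stand-in for the real database) and checks both the response and what was actually persisted.

```python
import sqlite3
from typing import Dict, Tuple


class OrderRepository:
    """Bottom layer (hypothetical), now called by the real middle layer."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        conn.execute("CREATE TABLE orders ("
                     "id INTEGER PRIMARY KEY AUTOINCREMENT, "
                     "amount_cents INTEGER NOT NULL)")

    def insert(self, amount_cents: int) -> int:
        with self.conn:
            cur = self.conn.execute(
                "INSERT INTO orders (amount_cents) VALUES (?)", (amount_cents,))
        return cur.lastrowid


class CheckoutOrchestrator:
    """Middle layer (hypothetical): a business rule plus a persistence call."""

    def __init__(self, repo: OrderRepository):
        self.repo = repo

    def place_order(self, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return f"o-{self.repo.insert(amount_cents)}"


class OrdersController:
    """Top layer (hypothetical): unchanged when the stub is swapped for the real middle."""

    def __init__(self, orchestrator):
        self.orchestrator = orchestrator

    def post_order(self, payload: Dict) -> Tuple[int, Dict]:
        try:
            order_id = self.orchestrator.place_order(payload["amount_cents"])
        except (KeyError, ValueError) as exc:
            return 400, {"error": str(exc)}
        return 201, {"order_id": order_id}


# Real wiring: top -> middle -> bottom, no stubs or drivers.
conn = sqlite3.connect(":memory:")
controller = OrdersController(CheckoutOrchestrator(OrderRepository(conn)))

created = controller.post_order({"amount_cents": 4999})
rejected = controller.post_order({"amount_cents": 0})
stored = conn.execute("SELECT id, amount_cents FROM orders").fetchall()
print(created, rejected, stored)
```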

Step 5: Expand Coverage into Realistic Scenarios

Move from simulated dependencies to realistic environments such as actual message brokers, actual databases, and sandboxed third-party systems or contract tests. Add complex workflows and stress cases to confirm behavior under production-like conditions.

Sandwich Testing Coverage Map

Sandwich testing, also called hybrid integration testing, validates how the top layer and bottom layer integrate, often in parallel, using stubs and drivers until real middle connections are ready. It is strong for catching integration contracts and data issues early, but it does not replace full end-to-end validation with real production-like dependencies.

| What Sandwich Testing Covers | What Sandwich Testing Does Not Cover |
|---|---|
| Top-down integration behavior, where UI or API endpoints correctly call the next layer and handle responses and errors, while lower layers are simulated using stubs | Full end-to-end flows where every layer is real and connected, including the UI, backend, database, and external systems running together in the same environment |
| Bottom-up integration behavior, where database repositories, adapters, and low-level services work correctly together and can be exercised using drivers that imitate the missing upper layers | Production-like performance and scalability validation, such as peak load throughput, latency under stress, and resource saturation behavior in real infrastructure |
| Contract and interface mismatches between layers, such as missing fields, wrong field names, incorrect status codes, invalid event schemas, and inconsistent validation rules across components | Real third-party provider behavior, including sandbox differences, rate limits, intermittent failures, regional routing quirks, and true outage scenarios, unless you integrate and test against real provider environments |
| Data mapping and persistence correctness, such as serialization formats, transformations, constraints, default values, transaction boundaries, rollback behavior, and idempotency for repeated requests | Deployment and environment configuration issues, such as IAM permissions, networking, DNS, TLS certificates, secrets management, and misconfigured environment variables that only appear after deployment |
| Orchestration sequencing and error handling, such as retries, timeouts, compensating actions, partial failure recovery, and correct state transitions across multiple steps in a workflow | Comprehensive security testing, such as penetration testing, vulnerability scanning, threat modeling, and advanced authorization bypass checks, which requires dedicated security practices |

How to Automate Sandwich Testing

Automation is where sandwich testing either becomes a superpower or turns into a maintenance nightmare. The key is to automate the integration seams (contracts, orchestration outcomes, persistence, and error handling) without over-investing in fragile UI scripting. In system-style flows, tests are often triggered through the UI, but verification needs to confirm behavior across services; this is why resilient automation matters. testRigor is designed to reduce maintenance by keeping UI interactions more stable even when the frontend changes, while still letting teams validate outcomes through APIs and backend checks.

Advantages and Risks of Sandwich Testing

Sandwich testing helps teams find important integration issues earlier while enabling parallel progress across the stack. At the same time, it introduces coordination and maintenance risks that need to be managed deliberately to avoid false confidence.

| Advantages | Risks |
|---|---|
| Earlier risk discovery because you find UI and DB or provider issues early rather than late | Complexity in setup because you may maintain both stubs and drivers initially |
| Better parallelization because UI and backend or data teams can work independently and still test meaningful integration | Double maintenance cost if stubs and real services drift apart, and tests start lying |
| Reduced late-stage chaos because big bang integration causes defect storms, and sandwich testing reduces the blast radius | Requires architectural clarity and agreement on layers and interfaces, or the strategy collapses |
| More stable feedback than full end-to-end because tests can focus on API and service boundaries and reduce UI brittleness | Environment dependencies because integration testing needs stable DBs, queues, and sandbox providers |
| Better coverage of real contracts because unit tests do not catch contract mismatches, while sandwich tests do | Risk of flakiness and slow feedback if environments and dependencies are not reliable |

Best Practices for Effective Sandwich Testing

Sandwich testing works only when teams treat it as a disciplined integration strategy rather than a loose mix of partial tests. These practices keep feedback fast, contracts real, and temporary stubs and drivers from becoming permanent shortcuts.

- Keep the Middle Layer Contract First: Define the inputs and outputs for the middle layer early and treat them as the primary integration contract. When top-down and bottom-up streams converge, the middle layer becomes the handshake point that prevents mismatched assumptions.

- Don’t Overuse UI in Sandwich Tests: Avoid building your top-down stream mainly through full UI automation because it increases flakiness and slows feedback. Prefer API-level tests and controller-level integration tests, with minimal UI smoke tests only for the most critical flows.

- Use Realistic Data Shapes: Make stubs return payloads that match real services in fields, types, and optionality. If the stub is cleaner than reality, you will miss contract bugs and get false confidence.

- Test Failures Intentionally: For every integration seam, test not only for success but also for validation failures and downstream failures. Include timeout and retry behavior so your orchestration and error handling are proven, not assumed.

- Prefer API-first Sandwich Tests + Minimal UI Smoke: Keep the top stream mostly API/controller-level to reduce flakiness, and reserve UI for only the most critical entry flows. Tools like testRigor support this style well because teams can describe tests in plain English while still validating API + workflow outcomes across services.

- Make Replacement of Stubs and Drivers Continuous: Remove stubs and drivers as soon as the real component exists, ideally making at least one meaningful replacement per sprint. Otherwise, sandwich testing turns into permanent fake-world testing and stops reflecting production behavior.
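
The “Test Failures Intentionally” practice can be sketched with a stub that times out a configurable number of times before answering. Both `FlakyPaymentStub` and `authorize_with_retry` are hypothetical names, and the retry rule (a fixed number of attempts, no backoff) is a simplifying assumption; the point is that the tests pin down both the recovery path and the give-up path instead of assuming them.

```python
class FlakyPaymentStub:
    """Stub that times out a fixed number of times, then approves (hypothetical)."""

    def __init__(self, failures_before_success: int):
        self.remaining_failures = failures_before_success
        self.attempts = 0

    def authorize(self, amount_cents: int) -> str:
        self.attempts += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise TimeoutError("provider did not answer in time")
        return "approved"


def authorize_with_retry(gateway, amount_cents: int, max_attempts: int = 3) -> str:
    """Orchestration rule under test: retry timeouts, then give up with a clear state."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for _ in range(max_attempts):
        try:
            return gateway.authorize(amount_cents)
        except TimeoutError:
            continue  # a production implementation would typically back off here
    return "failed_after_retries"


# Recovery path: two timeouts, then success on the third attempt.
recovers = FlakyPaymentStub(failures_before_success=2)
recovered = authorize_with_retry(recovers, 4999)

# Give-up path: the provider never answers within the retry budget.
gives_up = FlakyPaymentStub(failures_before_success=5)
gave_up = authorize_with_retry(gives_up, 4999)
print(recovered, recovers.attempts, gave_up, gives_up.attempts)
```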

Conclusion

Sandwich testing, or hybrid integration testing, helps teams validate user-visible flows from the top while simultaneously proving the correctness of core services and data behavior from the bottom, then merging both streams at a stable middle layer. This approach reduces late-stage integration surprises by exposing contract mismatches, orchestration failures, and persistence errors early, without waiting for every component to be fully ready. With tools like testRigor, teams can keep the top stream lightweight and resilient, using minimal UI and more stable intent-based automation, while still verifying real integration outcomes across APIs and backend layers as the system converges end-to-end.
