What Is a Test Oracle in Software Testing?


If you have ever started writing a test case and suddenly stopped, thinking, “Hmm, how do I even figure out the right result?”, well done: you have just run into the question a test oracle answers.

The word “oracle” sounds mysterious, almost like a voice whispering answers, and that is not far off. In software testing, a test oracle can be a person, a document, another program, or a line of reasoning. It is whatever tells you whether your program’s result is correct. Without one, you are testing blindly.


What Is a Test Oracle? (And a Simple Test Oracle Example)

A test oracle is whatever tells you whether a test passed or failed. Let’s start with a real-life analogy.

A simple real-life analogy

Your pizza arrives and you grab the box. You notice the smell, maybe the toppings peeking through, and then you run a quick check:

- You check the order receipt.

- You remember the toppings you selected.

- You use your expectations: “Hey, I ordered a thin crust, not deep dish!”

The receipt and your memory of the order are your oracle. They let you see whether what arrived matches what you expected.

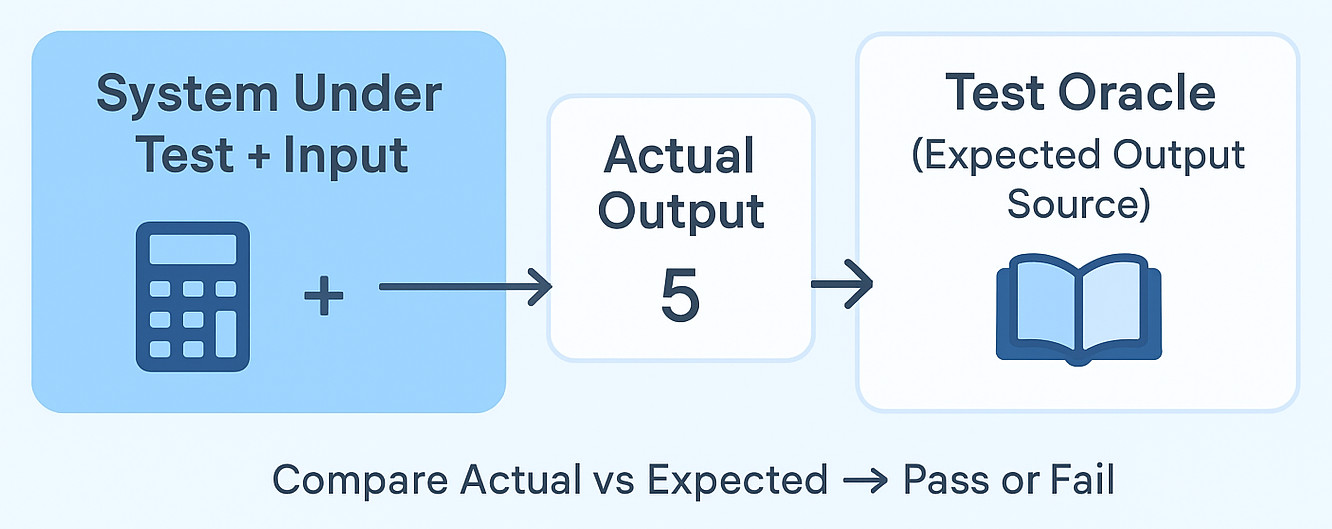

A quick test oracle example in software

Suppose you’re testing a calculator app.

Expected output: 5

Your source for that “5” is the test oracle, whether it is common knowledge, a requirements document, or the output of a trusted calculator.
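As a minimal sketch in Python, the oracle can be made explicit as the expected value in a check. The `add` function and the input `2 + 3` are assumptions chosen for illustration; the example above only states the expected output of 5.

```python
def add(a: int, b: int) -> int:
    """Hypothetical calculator function under test."""
    return a + b


def check_add() -> bool:
    # The oracle: an expected value taken from a trusted source
    # (agreed arithmetic, a requirement, or a reference calculator).
    expected = 5
    actual = add(2, 3)  # assumed input, chosen so the expected output is 5
    return actual == expected


print("pass" if check_add() else "fail")
```

The code under test produces `actual`; everything that justifies `expected` is the oracle.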

Why the “Test Oracle Problem” Matters So Much

Now scale that small calculator idea up to something major, say a system that decides who gets a loan or one that diagnoses illnesses. Knowing what result to expect quickly becomes tricky, and often it is simply unclear. Instead of clear answers, you get gray areas. Each case might depend on hidden factors that nobody has spelled out. Not every mistake shows itself immediately either; sometimes it takes months before anyone notices that something is wrong.

This issue is known as the test oracle problem.

Why is it a real challenge?

- Sometimes there is no single right answer.

- The system might behave unexpectedly because many factors interact.

- The logic can be so complex that people cannot work out the expected result by hand.

- Qualities like how easy an interface is to use often come down to personal opinion.

- Many requirements are never written down properly, or even mentioned.

Without a reliable oracle, testers usually end up on one of two paths:

- Guessing, which is risky.

- Relying on partial cues (logs, sanity checks, comparisons, etc.).

The test oracle problem is a big reason software testing gets complicated, especially when you are working with AI-powered systems that process large amounts of data and evolve fast.

Different Types of Test Oracles: A Quick Tour

Not all oracles work the same way. In fact, testers use several kinds, often without realizing it.

Let us take a quick look at the main types of test oracles.

1. Human Oracle

This could be you, your QA lead, a designer, a business analyst, or a domain specialist.

Humans decide whether a result seems right, especially when the reasoning gets tricky.

Good for:

- UX tests

- Visual testing

- Ambiguous business rules

Not good for:

- Repetition

- Precision

2. Documentation-Based Oracle

These oracles come from requirements, acceptance criteria, formal specifications, and user stories.

They work well for stable systems that come with clear documentation.

The bad news is that, in the real world, documentation often lags behind development.

3. Pseudo Oracles

You feed the same input into two independent implementations and then check whether the results match.

Example: Comparing an in-house tax calculator with a government API.
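The tax comparison can be sketched roughly as follows. Both functions, the two-bracket rates, and the sample incomes are made up for illustration, and `reference_tax` only stands in for a trusted external source such as an official API.

```python
def in_house_tax(income: int) -> int:
    """Hypothetical system under test: walks through the brackets one by one."""
    brackets = [(10_000, 10), (None, 20)]  # (upper limit, percent); made-up rates
    tax, lower = 0, 0
    for upper, percent in brackets:
        if upper is None or income < upper:
            tax += (income - lower) * percent // 100
            break
        tax += (upper - lower) * percent // 100
        lower = upper
    return tax


def reference_tax(income: int) -> int:
    """Stand-in for the trusted reference, written independently."""
    if income <= 10_000:
        return income * 10 // 100
    return 1_000 + (income - 10_000) * 20 // 100


def find_disagreements(incomes: list[int]) -> list[int]:
    # Pseudo-oracle: same input to both implementations, flag any mismatch.
    return [i for i in incomes if in_house_tax(i) != reference_tax(i)]


# An empty list means the two implementations agree on these inputs.
print(find_disagreements([0, 5_000, 10_000, 25_000]))
```

Agreement does not prove both are right, since they could share the same mistake, but a disagreement is a strong signal that at least one of them needs a closer look.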

4. Regression Oracles

You store results from a known-good version of the system. Future versions need to produce the same results unless the behavior was changed intentionally.

Great for regression testing.
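A stored baseline can be sketched in a few lines of Python. The `render_invoice` function, the JSON file name, and the sample order are hypothetical; the point is only that the recorded output of a known-good run becomes the oracle for later runs.

```python
import json
import tempfile
from pathlib import Path


def render_invoice(order: dict) -> dict:
    """Hypothetical function under test."""
    total = sum(item["price"] * item["qty"] for item in order["items"])
    return {"customer": order["customer"], "total": total}


def check_against_baseline(actual: dict, baseline_path: Path) -> bool:
    # First run: record the output of the known-good version as the baseline.
    if not baseline_path.exists():
        baseline_path.write_text(json.dumps(actual, sort_keys=True))
        return True
    # Later runs: the stored baseline is the oracle.
    expected = json.loads(baseline_path.read_text())
    return actual == expected


order = {"customer": "Ada", "items": [{"price": 5, "qty": 2}]}
with tempfile.TemporaryDirectory() as tmp:
    baseline = Path(tmp) / "invoice_baseline.json"
    recorded = check_against_baseline(render_invoice(order), baseline)
    unchanged = check_against_baseline(render_invoice(order), baseline)
    print(recorded, unchanged)
```

When a behavior change is intentional, the baseline file is deleted or regenerated so that the new output becomes the reference.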

5. Partial Oracles

Sometimes you can’t verify the whole result, but you can validate parts of it.

For example, you cannot fully predict what an ML model will output, but you can establish boundaries:

- It does not crash or fail unexpectedly.

- It responds within an acceptable time.

- It generates results in the correct range.

These are not full expected results; they are constraints.
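The three constraints above might be checked like this. `predict_risk` is a hypothetical stand-in for a real model, and the one-second budget and the 0 to 1 range are assumptions for the sketch.

```python
import time


def predict_risk(features: list[float]) -> float:
    """Hypothetical stand-in for an ML model that returns a probability."""
    score = sum(features) / (len(features) or 1)
    return min(max(score, 0.0), 1.0)


def satisfies_constraints(features: list[float], max_seconds: float = 1.0) -> bool:
    start = time.perf_counter()
    try:
        result = predict_risk(features)
    except Exception:
        return False  # constraint 1: no unexpected crash
    elapsed = time.perf_counter() - start
    in_time = elapsed <= max_seconds   # constraint 2: responds in time
    in_range = 0.0 <= result <= 1.0    # constraint 3: output in the valid range
    return in_time and in_range


print(satisfies_constraints([0.2, 0.9, 0.4]))
```

A passing check here does not say the prediction is correct, only that it is plausible, which is exactly what makes the oracle partial.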

6. Implicit Oracles

“Software should not crash.”

“Loading time for a page should be less than 3 seconds.”

They are rarely written down anywhere; they are simply taken for granted.

Such rules are common in everyday testing.

Test Techniques in Software Testing & Their Relationship With Test Oracles

When people talk about test techniques, they discuss partitioning inputs, checking boundaries, and exploring the application on the fly. Test oracles are part of every one of these, usually without being named.

Here is how they are linked.

Test techniques tell you which inputs to try.

Test oracles let you judge the results.

Take Boundary Value Analysis: it tells you which values to test, namely the ones at and around the lowest and highest limits.

The test oracle tells you what the system should do with them.

One without the other is incomplete.
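A small Python sketch shows the two halves side by side. The age rule (18 to 65 inclusive), `is_valid_age`, and the `EXPECTED` table are all assumptions for illustration: the technique picks the inputs, and the table derived from the assumed requirement is the oracle.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Technique: pick inputs at and just around the edges of the valid range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def is_valid_age(age: int) -> bool:
    """Hypothetical system under test."""
    return 18 <= age <= 65


# Oracle: expected results taken from the assumed requirement
# "ages 18 to 65 inclusive are accepted".
EXPECTED = {17: False, 18: True, 19: True, 64: True, 65: True, 66: False}

failures = [
    age for age in boundary_values(18, 65)
    if is_valid_age(age) != EXPECTED[age]
]

# An empty list means every boundary behaved as the requirement says.
print(failures)
```

Without `EXPECTED`, the boundary inputs would still run, but nothing would say whether the answers were right.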

Exploratory testing leans more on gut feeling. Testers notice odd behavior because they have seen something like it before. The moment something feels wrong, you dig deeper instead of following scripted steps. It is less about rules and more about curiosity driving what to try next. You might change direction abruptly if a strange output appears. Here, the oracle is the tester’s own experience.

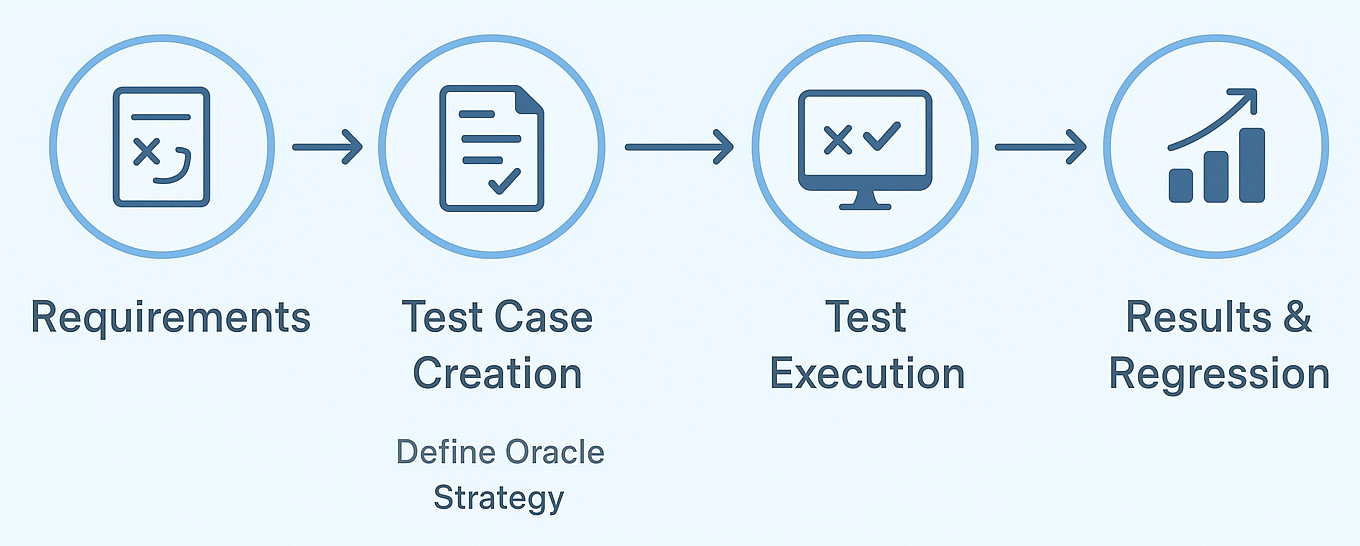

Test Planning in Software Testing: Where Do Test Oracles Fit?

When testers build a test plan, they usually write down:

- Objectives

- Scope

- Test environments

- Test data

- Test schedule

- Roles and responsibilities

Still, many teams skip building a clear oracle plan. Without one, how do you actually know whether each test passed or failed?

A solid test plan also covers:

- Which sources will decide pass or fail: people, tools, historical results, or something else?

- Which checks can be automated?

- What needs manual validation?

- What keeps expected results stable over time?

- What to do when the expected result is uncertain.

Missing this analysis usually leads to:

- Inconsistent test results.

- “False failures” when the rules aren’t clear.

- Slower test cycles.

- Too much reliance on tribal knowledge.

Defining oracles right at the start of test planning makes results more predictable. It also simplifies automation and sharpens focus during execution without adding complexity.

Test Metrics in Software Testing & How They Help Validate Oracles

Metrics show how trustworthy your oracles are and give a clearer picture over time. Useful ones include:

- Test case pass rate: Are failures caused by confusion about what is expected?

- Bug rejection rate: How often do developers reject reported issues because the expected result itself is disputed?

- Automation reliability: Do automated oracles often produce false positives?

- Oracle coverage: Which areas lack oracles or clear guidance?

These metrics help QA teams tune their processes and reduce false alarms during testing.
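These ratios are simple to compute once the counts are tracked. The sketch below is one possible way to do it in Python; the `CycleStats` fields, the metric names, and the sample numbers are assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    tests_run: int
    tests_passed: int
    bugs_reported: int
    bugs_rejected: int       # closed as "not a defect"
    automated_failures: int
    false_failures: int      # automated failures with no real defect behind them


def ratio(part: int, whole: int) -> float:
    # Avoid division by zero when nothing was counted yet.
    return part / whole if whole else 0.0


def oracle_health(stats: CycleStats) -> dict:
    return {
        "pass_rate": ratio(stats.tests_passed, stats.tests_run),
        "bug_rejection_rate": ratio(stats.bugs_rejected, stats.bugs_reported),
        "false_failure_rate": ratio(stats.false_failures, stats.automated_failures),
    }


# Made-up numbers for one test cycle.
print(oracle_health(CycleStats(200, 180, 40, 10, 20, 5)))
```

A rising rejection rate or false failure rate suggests that the expected results, rather than the product, are what need attention.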

What Is a Test Oracle in Software Testing?

To put it simply, here is a short summary:

- A test oracle is whatever tells you what should happen when you run a test.

- It can be a document, a person, a previous version of the software, another tool or device, or just a set of guidelines.

- Example: when you log in with valid credentials, landing on the dashboard is your oracle. It tells you the sign-in worked.

- Different types of test oracles suit different situations, and each serves a distinct role.

- The “test oracle problem” means it is hard to know what the right result should be, especially as systems get more complex.

With the basics covered, we can look at AI-driven oracles. AI-based test oracles can adapt as your application changes and scale along with it. This helps overcome the rigidity of many automated test oracles, where every change to the expected result has to be made manually.

How Test Oracles Support Better Collaboration Across Teams

One might wonder what test oracles have to do with team collaboration, since they seem like a code-level or test-level concern. Yet they shape how developers, QA teams, product managers, and even UI designers talk about what they are building.

When team members see the project differently, verifying the software turns into trial and error. Testers log issues only for developers to say they are not defects. Developers change features that were working fine before. The product manager may expect something totally different from what was built. Things go downhill fast.

A clear test oracle, whether it is a spec, a step-by-step user scenario in plain English, a golden master baseline, or an automated assertion, gives everyone a common language. Think of it as a small promise stating simply, “This is exactly how the app is meant to work.”

This is easier with a tool like testRigor. Since the tests are written in plain English, anyone can understand what they are supposed to do. There is no need to puzzle over scripts or dig through pages just to understand a single test. Testing becomes teamwork rather than only a QA responsibility.

Clear oracles help people understand each other better and reduce mix-ups. They let teams build software without constantly running into the same hurdles.

Modern Testing & AI: How Tools Like testRigor Help With Test Oracles

As a system gets more complicated, predicting outcomes becomes harder, and people verifying results manually cannot keep up as the product scales. A human oracle alone is not enough. You need automation to cover more ground and scale with the system. This is where testRigor helps: its AI can act as a test oracle without bypassing the final layer of human judgment. Here’s how.

Where testRigor Fits In: Reducing the Oracle Problem in Real Projects

Natural-Language Testing Makes Oracles Easier to Define

In traditional test automation, testers have to define expected results inside the code. While writing test scripts, they add explicit checkpoints, which typically rely on element locators such as XPaths and on comparison steps.

This can be painful: as the underlying application code keeps changing, these oracles become fragile and unreliable. In testRigor, the test is written in plain English instead:

click on "Login"

enter "[email protected]" into "Email"

enter "mypassword" into "Password"

check that page contains "Welcome John"

The expected result (the oracle) is stated directly in the plain English command: “check that page contains ‘Welcome John’”.

This makes oracles much easier to write, maintain, and review.
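To see why a text-level check is less brittle than one tied to element locators, here is a rough Python sketch using only the standard library. It is not how testRigor works internally; `page_contains` and the sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects every text node in the document."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []

    def handle_data(self, data: str) -> None:
        self.chunks.append(data)


def page_contains(html: str, text: str) -> bool:
    # Simplification: this also picks up text inside <script> and <style>.
    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    # Collapse whitespace so markup between words does not matter.
    visible = " ".join(" ".join(collector.chunks).split())
    return text in visible


# The check passes whatever ids, classes, or nesting the page uses.
old_page = "<div id='x7'><h1>Welcome <b>John</b></h1></div>"
new_page = "<section class='hero'><p>Welcome John</p></section>"
print(page_contains(old_page, "Welcome John"), page_contains(new_page, "Welcome John"))
```

An oracle phrased as "the page contains this text" survives a redesign that would break a check bound to `id='x7'`.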

AI Helps Identify and Adapt the Right Oracles Automatically

Certain kinds of checks, such as verifying how a dynamic element behaves on screen, are difficult to automate with traditional code-based, non-AI tools, and nobody can check them manually after every release either. A tool that can validate the oracle’s condition without causing a complete test failure is very helpful. If you know that an element or image containing a certain string is expected at the bottom of the web page, you can simply say so in plain English in testRigor. The tool will look for that element without needing exact coordinates or other code-level details from you.

testRigor uses AI to:

- detect visual changes

- determine stable user flows

- infer expected outcomes based on previous passing runs

- adapt tests when UI changes

This reduces testing headaches, since you are not stuck updating expected outcomes manually every time the software evolves.

Great for Regression Oracles

testRigor tracks how tests behave across runs. If a result changes, it raises an alert.

You don’t need to store mountains of expected output manually.

The tool helps maintain:

- Baseline behavior

- Historical comparisons

- Automatically updated expected values (when changes are intentional)

This makes regression oracles more robust and less manual.

Suitable for Both Technical and Non-Technical Team Members

Because testRigor is no-code:

- Business analysts can define oracles

- Designers can validate expected UI behavior

- Manual testers can automate tests without writing code

This lets more people help define what “normal behavior” looks like.

Reduces Flakiness by Stabilizing Expected Results

UI automation often produces unreliable tests. testRigor’s AI keeps tests stable by interacting with the application the way a human would rather than relying on brittle UI selectors. The result is:

- fewer false failures

- fewer flaky oracles

- more reliable pass/fail judgment

This improves oracle accuracy in automated testing.

Why Test Oracles Matter More Than Ever

The software landscape evolves fast, scales up, and stays messy. Even as processes get sharper and tools get smarter, one core issue remains the same.

It’s still hard to know the right answer for every test.

That is where test oracles help, especially now that intelligent tools like testRigor are available. Such tools make it easier to establish expectations, keep tests up to date when code changes, and allow technical and non-technical team members to contribute to testing without hurdles.

With solid test plans, sharp oracles, efficient techniques, and an AI tool, teams are better positioned for reliable and scalable testing.
