Why Do LLMs Need ETL Testing?

|

|

Large Language Models (LLMs) like GPT-4, Claude, and PaLM have reshaped the landscape of artificial intelligence, enabling machines to process, understand, and generate human language with astounding fluency. From powering conversational agents to summarizing documents and even writing code, LLMs are becoming central to modern enterprise solutions. But beneath the sophistication of these language models lies a vast, complex machinery of data pipelines.

At the heart of this machinery is the ETL (Extract, Transform, Load) process, an essential framework for gathering and preparing the data that trains and powers LLMs. Ensuring the reliability, correctness, and performance of this process is critical to the success of any LLM-based application. This is where ETL testing becomes indispensable.

| Key Takeaways: |

|---|

|

Understanding LLMs (Large Language Models)

Large Language Models (LLMs) are advanced machine learning models that are created to process, comprehend, and generate human language. Deep learning driven by these models has the capability of performing intricate natural language processing (NLP) applications, e.g., text generation, translation, and question-answering, in addition to text summarization. By far, the most famous LLMs are:

- GPT (Generative Pretrained Transformer): GPT is a transformer model produced by OpenAI, trained on huge text data, capable of producing human-like text. It can be used on a wide range of applications including chatbots, content creation, and coding assistant since it guesses the following word or token by using the context of the previous words.

- BERT (Bidirectional Encoder Representations from Transformers): The BERT is developed by Google that helps comprehend the context of words in the sentence taking into account the words that precede and follow the words on both sides (bidirectional). This enables BERT to perform well in activities like question addressing and sentiment analysis. As opposed to GPT, BERT models are usually applied to situations where information not only in a sentence or passage but also in its context is essential.

The models are referred to as large due to the sheer volume of parameters (in some cases billions or even trillions) that they employ to express their knowledge and require large bodies of training data in order to work effectively. Learn more: What are LLMs (Large Language Models)?

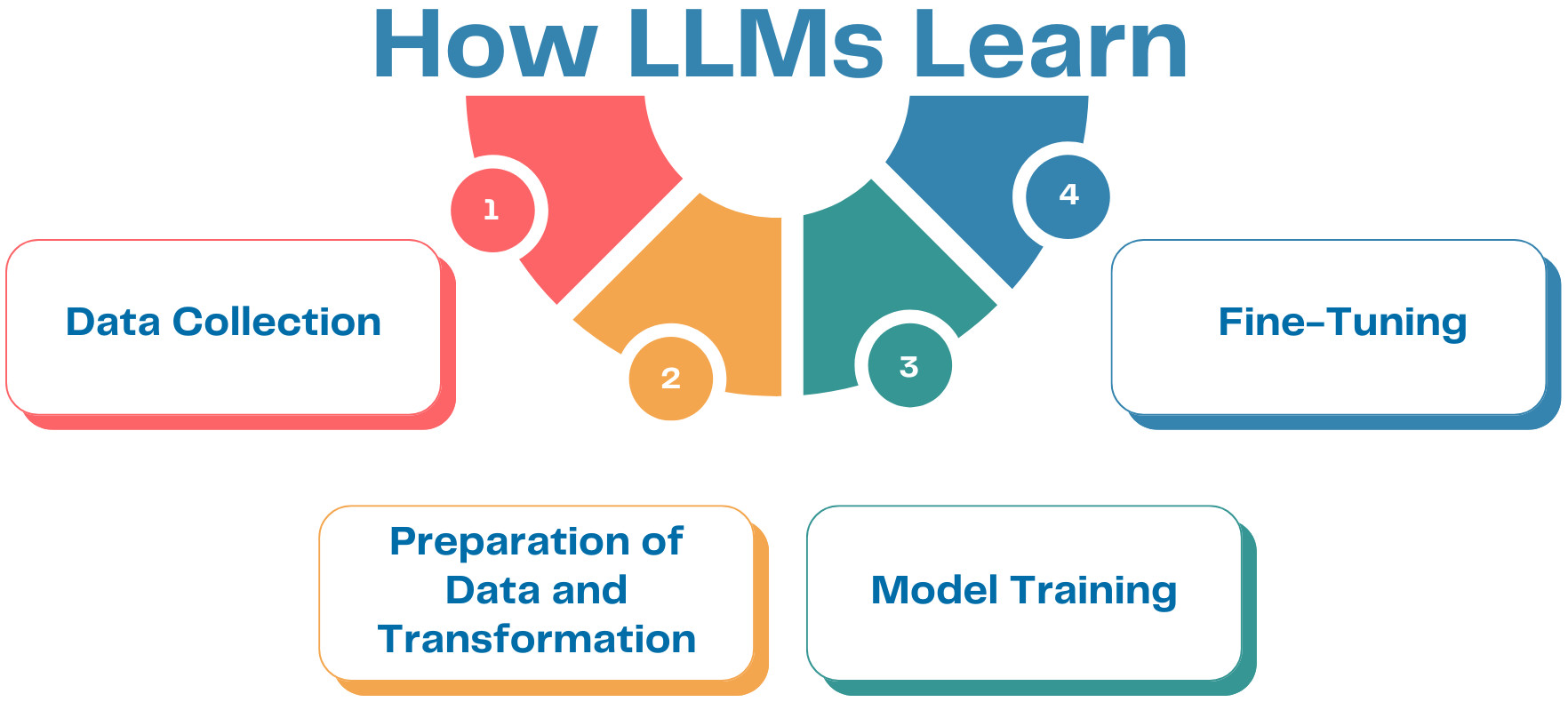

How LLMs Learn

The data that LLMs are processed on contains a multi-step pipeline. The overall procedures all entail the following steps:

- Data Collection: To begin with, there is a need to collect as much data as possible in terms of texts through diverse sources, which include books, websites, articles, social media and other written materials. Such raw data is usually unstructured and requires cleaning, as well as preprocessing to take a suitable form that is capable of training.

- Preparation of Data and Transformation: The raw data, upon being extracted is subjected through preprocessing. It includes cleaning off any unnecessary content (e.g. HTML tags or special characters), tokenizing (dividing into smaller units like words or sub words) of the text and transforming it into numbers that can be fed to the model.

- Model Training: Transformer is the most influential architecture in LLMs, and this architecture and its variants use self-attention of words or tokens in a series to phrase the relationships between them. The model acquires patterns, semantics, grammar and context in training using the large text data corpus. It does so by tuning its parameters in order to reduce the error between its guesses and the true results (e.g., to predict the next word in a sentence). Read more: Machine Learning Models Testing Strategies.

- Fine-Tuning: LLMs can be fine-tuned after pretraining on large datasets on particular tasks (or on particular domains: such as medical or legal language) using domain-specific data.

Data pipelines are very important to the smooth running of LLMs. They guarantee that the data travels through preprocessing to the training phase with the help of the initialization, efficiently, and with constant updates and enlargements to the model.

Data Sources for LLMs

The training data sources define the paramount success of an LLM regarding quality and diversity. The LLMs are normally trained with a set of data that is very huge and covers a broad range of subjects, languages, and contexts. These are some of the common data sources:

- Texts on Sites: Texts accessible on the internet, such as independent websites, books, research journals, news articles, and so forth.

- Private or Proprietary Data: Special data that could be proprietary, including technical manuals, lawful records, and even industry-related knowledge databases.

- Human-Generated Data: The data generated by humans, i.e., conversations, discussions, etc., that may contain slang, idiomatic expressions, and informal language.

Why Training Data Matters

The training set plays a significant role in the performance of the LLM in generalizing and comprehending various areas. For example:

- Data Bias: The implication is that an LLM can acquire the biases in data, in case it is trained on a predisposed set of data. It may lead to the model giving wrong or biased variables.

- Data Gaps: As long as the training data does not cover some of the topics or languages well enough, LLM will struggle on tasks where those topics or languages are required.

- Data Noise: Data such as irrelevance, poor word spelling, and misinformation may cause the model to give wrong information.

Thus, the more explicitly the data pipeline is built and the better the quality of data on which training is occurring, the more correct and reliable the results of the LLM will be. That is why ETL (Extract, Transform, Load) processes can be essential in preparing and handling the data that you will be using for training and fine-tuning LLMs. They see to it that not just the data is of high quality, but it is also the data that fits the needs of the LLM.

Understanding ETL Testing

ETL (Extract, Transform, Load) is a very important data processing exercise that is employed to transfer and transform data between systems (in this case, between a source system to an application or a data warehouse) and is a key data transformation process. The process requires three major steps:

- Extraction: The extraction step deals with drawing raw data out of different sources. This data might be databases, APIs, flat files (such as CSV or JSON) or even cloud storage. The main aim of this stage is to simply acquire all the data as it is and make the data ready to be processed, cleaned and made into a more usable form.

- Transformation: After extracting, the transformation of the data follows. This is the stage during which raw data is standardized, enriched, and put into a form to suit the demands of the target system. Transformation includes:

- Data cleaning: Eliminating duplicates, treating missing values, and reconciling inconsistencies.

- Data mapping: transforming/transforming the data into the format/structure needed by the destination system.

- Data aggregation: Pulling individual data together to obtain a synthesis or a combination of data.

- Data enrichment: Refers to addition of more information to a data set by external source or to make it more useful.

- Loading: The last step is actually loading the transformed data to the target system, which may consist of a data warehouse, a database, or some other system. This information can now be analysed, reported, or processed. Any loading process needs to be performance optimised particularly when large volumes of data have to be loaded and delivered in a very efficient manner.

Testing the Data Pipeline

It is important that the ETL pipe be tested to make sure that the data is aptly and steadily transferred, transformed, and loaded into the target system. This is done to guarantee qualities of data integrity, which specifically affect the quality of business interpretations and decision-making. There are a number of problems that can take place without appropriate testing:

- Data Mismatches: Mapping or transforming data inaccurately may cause the source to be different with target systems and hence producing inaccurate reports or data analysis.

- Data Loss: When dealing with huge chunks of data, they can be accidentally lost during transformation or while loading a batch. The loss of some valuable data could lead to disastrous outcomes, particularly in vital operations of business.

- Performance Problems: Slow data processing may result due to ineffective ETL processes, thus affecting the performance of the entire data pipeline as well as the systems utilizing this pipeline.

- Compliance Risks: In the regulated industries, compliance with data privacy laws and industry regulations depends greatly on ensuring the right processing and storage of the data. The use of ETL testing will aid in the maintenance of the necessary levels of data transformations and storage.

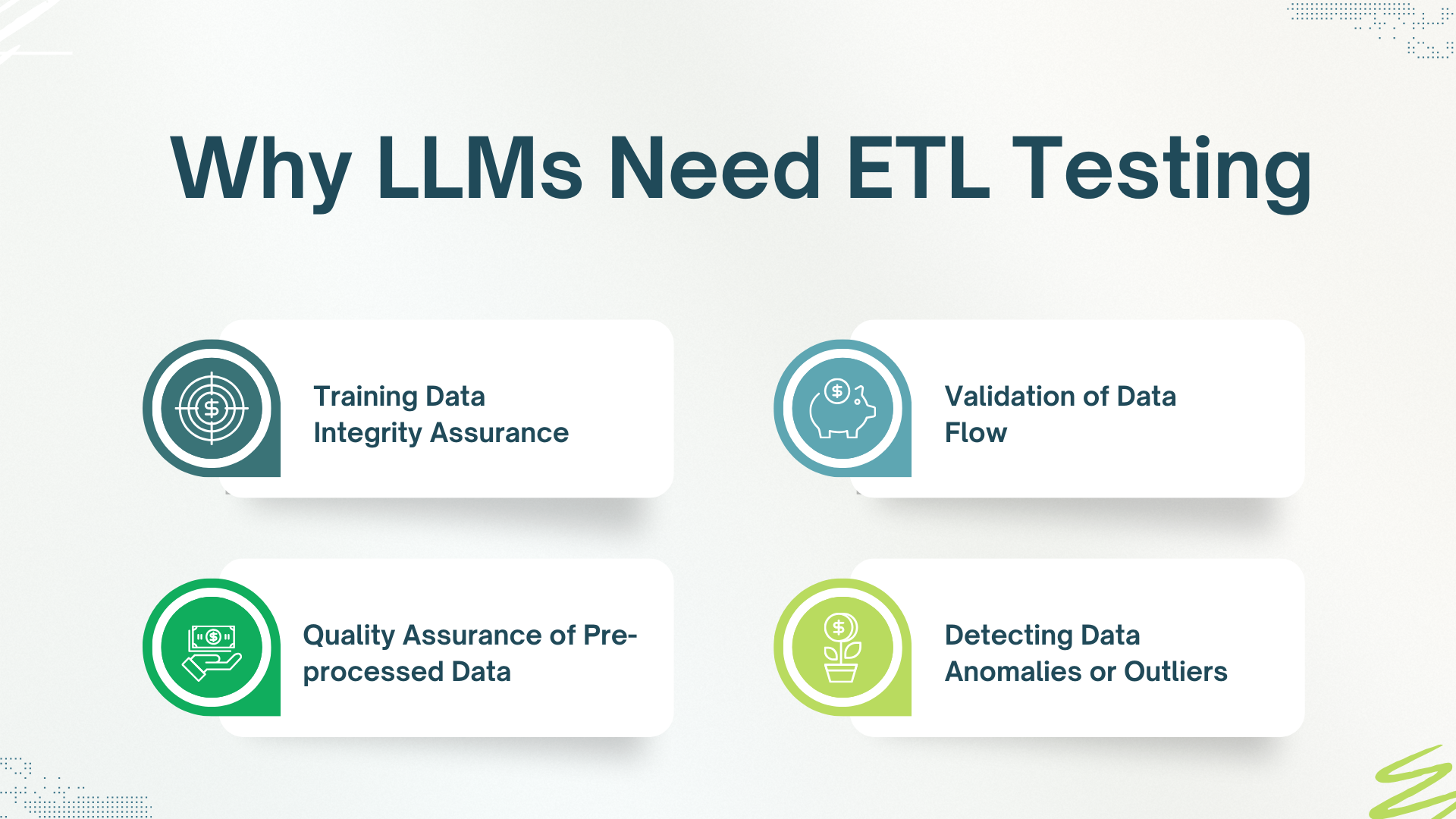

Why LLMs Need ETL Testing

Data pipeline is critical in the training and fine-tuning of Large Language Models (LLMs) like GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), etc. Such models are as good as the data with which they have been trained, and problems in the data pipeline can critically affect their performance and accuracy.

In such a way, it is necessary to apply ETL (Extract, Transform, Load) testing to control the quality and integrity of data used to train LLMs. The following are some of the main reasons why LLMs require ETL testing:

Training Data Integrity Assurance

Any machine learning model and LLMs, in particular, require data integrity. Inaccurate or garbled data may have catastrophic effects on the performance of the model, which may either result in biased model performance or inaccurate predictions and may even orphan the model. For example:

- Corrupt Data: When training with corrupt data, such as incomplete, with missing values, or improperly formatted data, the LLM may learn spurious relationships, resulting in adverse behaviour on inference. To give an example, a model trained on corrupt data (corruption of text data e.g. a mixture of incomplete sentences or jumbled characters) may be unable to output any sensible or grammatically correct utterance.

- Data Inconsistency: LLMs depend on consistent information to identify patterns and generalize, effectively. The model can get confused during training due to any mismatch in the data, which may result in an inaccurately labelled training set or other mismatched data types.

Quality Assurance of Pre-processed Data

Raw data is crucial in the pre-processing stage in order to train LLMs. Normalization, tokenization, stemming, lemmatization and text cleaning are common preprocessing actions. The steps aid in transforming raw data into a format that can easily be made comprehensible and learnt by the LLM.

- Normalization: This is the process whereby the text-based data is converted into a standardized form i.e., changing all the text to lower case, or eliminating stop words or treating punctuation consistently. The ETL testing would determine that such transformations are being done properly and that the data is pre-processed in a uniform way.

- Tokenization: It is a process of dividing text into smaller units(tokens), which may be words, characters or sub words. When tokenization becomes inaccurate it disintegrates meaningful context, and this situation has a bearing on the quality of an understanding by the model. ETL testing is done to make sure that the process of tokenization is done properly.

Validation of Data Flow

With LLMs, data is processed at various points within the ETL pipeline- extraction, transformation, and loading, among others. Proper distribution of data passing through each of these phases plays a vital role in the successful completion of training the model.

- Extraction Phase: The information can be retrieved through various channels, which include: text corpora, data, APIs, or web scraping. ETL testing is to ensure the data is rightly extracted with no missing, incomplete or incorrect data.

- Transformation Phase: In this transformation stage, data are cleaned, standardized and pre-processed. ETL testing ensures that the transformation rules (e.g. tokenization, normalization) are applied in the correct way and consistently across datasets, so as to preserve the desired structure on which to train.

- Loading Phase: The last stage is loading the refined information into the training system or model pipeline. The ETL testing that involves loading of data in the system in the proper form sufficiently and in the proper format makes the data accessible to the process of training the model to train the model.

Detecting Data Anomalies or Outliers

The data anomalies or outliers may appear in case we will execute data extraction and transformation, and they may be viewed without further testing. Such outliers may pose a serious impact on the training outcomes of LLMs.

- Outliers: This can be mislabelled data, data that falls outside the range, or data points that are drastically different from the other data points. As an example, random or nonsensical text within the training set of a language model (e.g. an entirely different language or gibberish) might bias the model towards predicting and generating coherent text.

- Effect on Training: Anomalies or outliers in data may skew the patterns as learned by the model leading to wrong outputs given by the model. The example is that in case of some rare words or phrases, they are not correctly filtered, the LLM can begin to learn similar words as a part of the language model, and this neighbouring language can be dissimilar.

ETL Testing Challenges in LLMs

Although ETL testing fundamentals are important to ensure robust, clean, and reliable data pipelines for LLM, its operation introduces complexities. These challenges are compounded by the scale, diversity, and dynamism of the data that fuels models such as GPT, Claude, and PaLM. The following are some of the biggest challenges to overcome while performing ETL testing in LLM ecosystems:

- Big Data Scale: LLMs are trained on the order of petabytes, and full validation is impractical with typical ETL testing. Without creating sampling bias that might overlook an anomaly, testing frameworks should be able to scale to massive amounts of data.

- Variety and Heterogeneity of Data: Training information can take various forms such as CSV, JSON, HTML, PDFs, as well as be in several languages. And harmonising ETL across such disparate sources is complex and error-prone.

- Bias and Fairness Risks: The ETL process can inadvertently support social biases or allow inappropriate content to remain. In the absence of proper investigation, this bias can affect the fairness and ethical behavior of LLMs.

- Detection of Semantic Anomalies: Existing ETL checks only target structure, but LLM data requires semantic validation as well. Finding gibberish, mislabelled text, and more subtly bad text is something that is not common when building LLM pipelines.

- Compliance and Privacy: Many LLMs work with sensitive information, making it necessary to have stringent tests for anonymization and to ensure secure storage. ETL testing needs to cover the ground on regulations such as GDPR and HIPAA, among others, which might bring legal and reputation risk.

Conclusion

Large Language Models may represent the cutting edge of AI, but they rest on an older, more foundational pillar: Data Integrity. Ensuring that the data moving through ETL pipelines is accurate, complete, and consistent is the only way to ensure that LLMs behave as expected, especially in high-stakes domains like healthcare, finance, customer service, and law.

ETL testing is not just a technical task. It’s a gatekeeper of trust, a validator of intelligence, and the cornerstone of safe, ethical AI. By rigorously applying ETL testing to your LLM pipelines, you not only improve model performance but also reduce risk, cut costs, accelerate development, and build solutions that users can depend on.

| Achieve More Than 90% Test Automation | |

| Step by Step Walkthroughs and Help | |

| 14 Day Free Trial, Cancel Anytime |