Machine Learning Models Testing Strategies

“Machine learning is the next internet” – Tony Tether.

We can all see this happening today. Just as the Internet did, ML is rapidly changing technology and our lives.

Think about a self-driving car. It has to be extremely accurate to keep us safe, doesn’t it? But how can we be sure it is reliable? That’s why testing machine learning models correctly is so important. Just as we thoroughly test a car’s brakes and steering, we need to carefully test these models to make sure they make the right decisions and avoid unexpected errors.

In this blog, we will discuss some important strategies for testing machine learning models.

What is Machine Learning (ML)?

Machine learning is like teaching a computer to think for itself. Instead of telling it exactly what to do, you show it lots of examples and let it figure things out. That is machine learning in a nutshell!

It is like teaching a kid to recognize fruits. You do not give them a list of rules. You just show them lots of bananas, apples, and oranges. Pretty soon, they can tell them apart! Machine Learning is like that but with computers learning from the information they’re given.

What is a Machine Learning Model?

So, what is an ML model? Think of an ML model as the “brain” of the computer, built by learning from examples. It’s a set of mathematical rules or patterns that the computer creates to make decisions or predictions. For example:

- You give a computer lots of data about houses (size, location, number of rooms) and their prices.

- The computer learns patterns from this data (e.g., bigger houses tend to cost more).

- This “learning” results in a model.

- Later, when you give the model details about a new house, it uses its “brain” to predict the price.

So, an ML model is essentially the computer’s way of understanding and applying knowledge from data to solve problems like predicting prices, recognizing images, translating languages, or even recommending your favorite movies.

Why Machine Learning Testing is Critical

Testing ML models is incredibly important because ML is different from traditional software. Unlike regular software that follows explicit instructions, ML models learn patterns from data, which makes their behavior harder to predict and sometimes unreliable. Here’s why you should be extra diligent when testing ML models:

Need for Accurate Predictions: We use ML models to make decisions, such as predicting the weather, recommending products, or detecting fraud. If the model isn’t tested properly:

- It might give wrong answers.

- A weather prediction app might say it’s sunny when it’s raining.

Testing makes sure that the model gives accurate and reliable results in real-world scenarios.

Deal with Unseen Data: ML models learn from the data you give them (training data). But in real life, they often see new and unexpected situations. For example:

- A spam filter might see a type of email it wasn’t trained on.

Testing helps you check how well the model handles these “unseen” situations.

Avoid Bias: ML models learn from the data you provide, so if that data is biased, the model will be biased too. For example:

- If a hiring model is trained on past hiring data that favors one group, it might unfairly reject candidates from other groups.

Testing will help you identify and fix these biases and make the model fair for everyone.

Detect Overfitting: Sometimes, ML models get too good at memorizing the training data but fail to work well on new data. This is called overfitting. It’s like a student who memorizes answers to practice questions but doesn’t actually understand the topic. Testing will help you check whether the model can generalize its learning to work on new, real-world data.

Ensure Interpretability: Many ML models are like black boxes: they give you answers without explaining why. For example:

- A model might reject a loan application without saying why.

It’s important to test if the model’s decisions can be understood and explained, especially in critical areas like healthcare or finance.

Handle Changing Data: The world changes over time, and so does the data that ML models see. For example:

- An e-commerce model might not perform well during holiday sales because buying patterns are different.

Testing will help you ensure that the model is robust enough to handle changing data or at least alert you when it needs to be updated.

Identify Edge Cases: ML models can behave strangely with unusual inputs or edge cases. For example:

- A chatbot might give nonsensical answers to certain questions.

- A facial recognition system might fail with blurry or partially hidden faces.

Testing helps find these edge cases so they can be addressed.

Build Trust: What if the ML model gives you dodgy, unexplainable answers that aren’t what you’re looking for? Will you trust it? For example:

- A healthcare provider won’t rely on an untested diagnosis model.

- Customers won’t trust a recommendation system that frequently gets things wrong.

Testing builds confidence that the model works as expected and can be trusted.

Reduce Risks: Errors in ML models can have serious consequences, such as:

- Financial losses (e.g., a stock trading model making bad decisions).

- Safety issues (e.g., a self-driving car model making wrong turns).

Testing minimizes these risks by ensuring the model performs well and avoids critical mistakes.

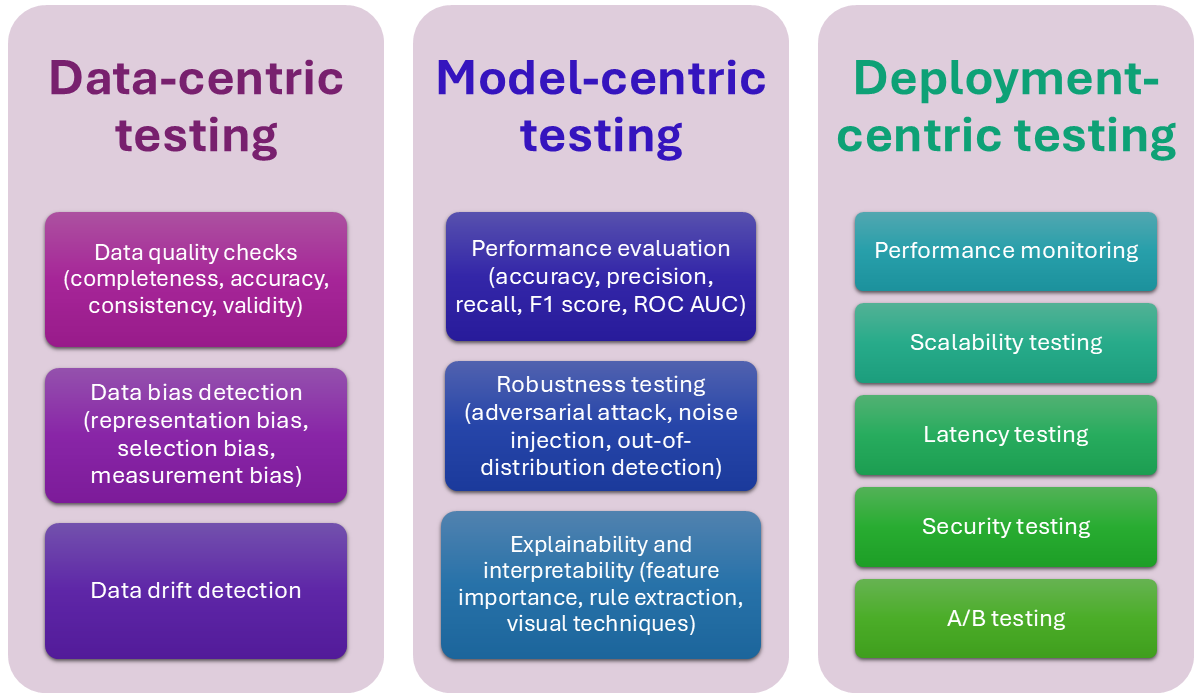

Types of Testing for ML Models

Because ML models learn from data, they need specific types of tests to ensure they work as expected. Each type of testing focuses on a specific aspect of the ML model’s lifecycle, from ensuring the data is clean to monitoring the model after deployment. Together, these tests ensure the model is accurate, reliable, fair, and ready for real-world use. Let’s look at some of the common types of testing used for ML models:

Dataset Validation

This is the first step in testing ML models. It involves checking whether the data used for training and testing the model is correct and reliable.

Why It’s Important: If the data is bad (e.g., contains errors, is incomplete, or has biases), the model will learn the wrong things.

- Check for missing or incorrect values in the data.

- Ensure the data is diverse and represents all possible scenarios (e.g., different age groups, genders, regions).

- Split the data properly into training, validation, and test sets so you can detect overfitting, and make sure no examples leak from one set into another.
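To make these checks concrete, here is a minimal sketch in plain Python. The record fields, the `min_share` threshold, and the helper names are hypothetical; in practice you would run equivalent checks with your own data tooling.

```python
from collections import Counter

def find_missing(rows, required_fields):
    """Return (row_index, field) pairs where a required field is absent or None."""
    problems = []
    for i, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append((i, field))
    return problems

def class_shares(labels):
    """Return each label's share of the dataset."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}

def is_imbalanced(labels, min_share=0.1):
    """Flag the dataset if any class makes up less than min_share of the examples."""
    return any(share < min_share for share in class_shares(labels).values())

def leaked_ids(train_ids, test_ids):
    """IDs present in both splits; any overlap leaks test data into training."""
    return set(train_ids) & set(test_ids)

# Hypothetical loan-application records, for illustration only.
rows = [
    {"id": 1, "age": 34, "income": 52000, "label": "approve"},
    {"id": 2, "age": None, "income": 61000, "label": "approve"},
    {"id": 3, "age": 45, "income": None, "label": "reject"},
    {"id": 4, "age": 29, "income": 48000, "label": "approve"},
]
labels = [row["label"] for row in rows]
print(find_missing(rows, ["age", "income"]))  # [(1, 'age'), (2, 'income')]
print(class_shares(labels))                   # {'approve': 0.75, 'reject': 0.25}
print(is_imbalanced(labels, min_share=0.3))   # True
print(leaked_ids([1, 2, 3], [3, 4]))          # {3}
```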

Unit Testing

This is about testing individual components or steps of the ML pipeline, like data preprocessing, feature extraction, or a specific function.

Why It’s Important: Ensures that each part of the pipeline is working correctly before looking at the model as a whole.

- Test if a data-cleaning step correctly removes duplicates.

- Verify that a feature extraction step calculates the right values.
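As a sketch of what such unit tests can look like, the example below tests two hypothetical pipeline steps, a de-duplication step and a price-per-square-foot feature. The tests are plain `test_` functions, which a runner such as pytest can discover and run automatically.

```python
def remove_duplicates(records):
    """Hypothetical cleaning step: drop exact duplicate records, keeping the first copy."""
    seen = set()
    cleaned = []
    for record in records:
        key = tuple(sorted(record.items()))  # order-independent, hashable fingerprint
        if key not in seen:
            seen.add(key)
            cleaned.append(record)
    return cleaned

def price_per_sqft(price, size_sqft):
    """Hypothetical feature-extraction step: price per square foot."""
    if size_sqft <= 0:
        raise ValueError("size_sqft must be positive")
    return price / size_sqft

def test_remove_duplicates_drops_exact_copies():
    # The second record has the same fields in a different order, so it is a duplicate.
    records = [{"id": 1, "size": 900}, {"size": 900, "id": 1}, {"id": 2, "size": 1200}]
    assert remove_duplicates(records) == [{"id": 1, "size": 900}, {"id": 2, "size": 1200}]

def test_price_per_sqft_computes_expected_value():
    assert abs(price_per_sqft(300_000, 1_500) - 200.0) < 1e-9

def test_price_per_sqft_rejects_non_positive_size():
    try:
        price_per_sqft(300_000, 0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a zero size")
```

Each test isolates one step, so a failure points straight at the component that broke.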

Read more: Unit Testing: Best Practices for Efficient Code Validation.

Integration Testing

This tests how well different components of the ML system work together as a whole.

Why It’s Important: Ensures the end-to-end ML pipeline works seamlessly.

- Check if the model correctly uses preprocessed data for predictions.

- Ensure the output of one step is correctly passed to the next.
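An integration test exercises the steps together rather than one at a time. In this minimal sketch, `preprocess`, `LinearPriceModel`, and `predict_price` are hypothetical stand-ins for a real pipeline. The test checks that raw input flows through preprocessing into the model and produces the expected price, and that a mismatch between the two steps fails loudly instead of silently.

```python
def preprocess(raw):
    """Hypothetical preprocessing: size in thousands of sq ft plus a one-hot region encoding."""
    regions = ["north", "south"]
    features = [raw["size_sqft"] / 1000.0]
    features += [1.0 if raw["region"] == region else 0.0 for region in regions]
    return features

class LinearPriceModel:
    """Hypothetical stand-in for a trained model: a fixed linear function of the features."""
    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def predict(self, features):
        if len(features) != len(self.weights):
            raise ValueError(f"expected {len(self.weights)} features, got {len(features)}")
        return self.bias + sum(w * x for w, x in zip(self.weights, features))

def predict_price(raw, model):
    """The pipeline under test: raw input -> preprocessing -> model."""
    return model.predict(preprocess(raw))

def test_pipeline_end_to_end():
    model = LinearPriceModel(weights=[100_000.0, 20_000.0, 0.0], bias=50_000.0)
    price = predict_price({"size_sqft": 1500, "region": "north"}, model)
    # 50,000 + 100,000 * 1.5 + 20,000 * 1 = 220,000
    assert abs(price - 220_000.0) < 1e-6

def test_pipeline_rejects_feature_mismatch():
    model = LinearPriceModel(weights=[100_000.0, 20_000.0], bias=0.0)  # expects 2 features
    try:
        predict_price({"size_sqft": 1500, "region": "south"}, model)
    except ValueError:
        return
    raise AssertionError("pipeline should fail when preprocessing and model disagree")
```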

Here is more about it: Integration Testing: Definition, Types, Tools, and Best Practices.

Model Validation

Here, you focus on how well the model performs during training and validation.

Why It’s Important: It helps ensure the model is learning properly and generalizes well to unseen data.

- Use techniques like k-fold cross-validation or train-test splits.

- Check for overfitting (too good on training data but poor on new data).

- Use performance metrics like accuracy, precision, recall, F1 score, and AUC-ROC.
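Here is a minimal sketch of k-fold cross-validation with an overfitting check. A 1-nearest-neighbour classifier is used purely for illustration because it memorises its training data, so it always scores 100% on the data it was trained on; the real question is how it does on the held-out fold. The data and the 0.1 gap tolerance are hypothetical.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 once, then deal them into k folds of near-equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_1nn(X, y):
    """1-nearest-neighbour 'training' just memorises the data, which makes it prone to overfitting."""
    return list(zip(X, y))

def accuracy_1nn(model, X, y):
    """Predict each point's label from its closest stored training point."""
    correct = 0
    for x, label in zip(X, y):
        _, predicted = min(model, key=lambda pair: abs(pair[0] - x))
        correct += predicted == label
    return correct / len(y)

def cross_validate(X, y, k, fit, score):
    """Return (mean training score, mean validation score) across k folds."""
    train_scores, val_scores = [], []
    for val_idx in k_fold_indices(len(X), k):
        val_set = set(val_idx)
        train_idx = [i for i in range(len(X)) if i not in val_set]
        X_tr, y_tr = [X[i] for i in train_idx], [y[i] for i in train_idx]
        X_val, y_val = [X[i] for i in val_idx], [y[i] for i in val_idx]
        model = fit(X_tr, y_tr)
        train_scores.append(score(model, X_tr, y_tr))
        val_scores.append(score(model, X_val, y_val))
    return sum(train_scores) / k, sum(val_scores) / k

# Hypothetical 1-D data with noisy labels.
X = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
y = [0, 0, 1, 0, 0, 1, 1, 0, 1, 1]
train_acc, val_acc = cross_validate(X, y, k=5, fit=fit_1nn, score=accuracy_1nn)
if train_acc - val_acc > 0.1:  # hypothetical tolerance for the train/validation gap
    print(f"Possible overfitting: train={train_acc:.2f}, validation={val_acc:.2f}")
```

Averaging over several folds gives a steadier estimate than a single train-test split, at the cost of training the model k times.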

Explainability Testing

Here, you try to understand why the model is making specific predictions.

Why It’s Important: It helps build trust and ensures the model isn’t relying on wrong or irrelevant patterns.

- Check if important features (like age or income) are driving decisions instead of irrelevant ones (like user ID).
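One common way to run this check is permutation importance: shuffle one feature’s values across rows and measure how much accuracy drops. Libraries offer this (for example, scikit-learn’s `sklearn.inspection.permutation_importance`); the plain-Python sketch below shows the idea using a hypothetical loan model and data. An irrelevant feature like `user_id` should score zero.

```python
import random

def accuracy(predict, rows, labels):
    return sum(predict(row) == label for row, label in zip(rows, labels)) / len(labels)

def permutation_importance(predict, rows, labels, feature, n_repeats=5, seed=0):
    """Average accuracy drop when one feature's values are shuffled across rows.

    A feature the model ignores scores 0; a large drop means the model relies on it."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        shuffled = [{**row, feature: value} for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(predict, shuffled, labels))
    return sum(drops) / n_repeats

# Hypothetical loan model that approves applicants with income above 50,000.
predict = lambda row: int(row["income"] > 50_000)
rows = [
    {"user_id": 101, "age": 25, "income": 30_000},
    {"user_id": 102, "age": 41, "income": 80_000},
    {"user_id": 103, "age": 35, "income": 45_000},
    {"user_id": 104, "age": 52, "income": 95_000},
    {"user_id": 105, "age": 29, "income": 60_000},
    {"user_id": 106, "age": 47, "income": 20_000},
]
labels = [0, 1, 0, 1, 1, 0]
for feature in ("income", "age", "user_id"):
    print(feature, permutation_importance(predict, rows, labels, feature))
```

If `user_id` ever showed a large importance on a real model, that would be a red flag that the model has latched onto an irrelevant pattern.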

Performance Testing

This tests how well the model performs overall on unseen data (the test set).

Why It’s Important: It helps evaluate the model’s effectiveness in the real world.

- Test how well a spam detection model identifies spam emails.

- Measure performance under different scenarios (e.g., edge cases or noisy data).
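To measure performance on noisy data, one simple approach is to add random noise to the test inputs and watch how accuracy changes as the noise grows. The sketch below assumes numeric features and Gaussian noise; the spam scorer, its 0.5 cut-off, and the noise levels are hypothetical.

```python
import random

def accuracy(predict, X, y):
    """Fraction of examples the model labels correctly."""
    return sum(predict(x) == label for x, label in zip(X, y)) / len(y)

def add_gaussian_noise(X, scale, seed=0):
    """Copy of X with zero-mean Gaussian noise (std dev = scale) added to every feature."""
    rng = random.Random(seed)
    return [[value + rng.gauss(0.0, scale) for value in row] for row in X]

def noise_robustness(predict, X, y, scales=(0.0, 0.05, 0.2), seed=0):
    """Map each noise level to the model's accuracy on the perturbed test set."""
    return {scale: accuracy(predict, add_gaussian_noise(X, scale, seed), y) for scale in scales}

# Hypothetical spam scorer: flags an email when its single spam score exceeds 0.5.
predict = lambda features: int(features[0] > 0.5)
X_test = [[0.1], [0.45], [0.55], [0.9]]
y_test = [0, 0, 1, 1]
report = noise_robustness(predict, X_test, y_test)
print(report)
```

A sharp drop at small noise levels suggests the model is fragile near its decision boundary.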

Bias and Fairness Testing

This checks whether the model’s predictions are fair and unbiased for all groups.

Why It’s Important: Prevents discriminatory behavior in sensitive applications like hiring or credit scoring.

- Check if a hiring model favors one gender over another.

- Test if a healthcare model performs equally well for different ethnic groups.
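A basic version of this check compares the model’s accuracy for each group and flags large gaps. In this sketch, the labels, predictions, and group names are hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += truth == prediction
    return {group: correct[group] / total[group] for group in total}

def max_accuracy_gap(y_true, y_pred, groups):
    """Difference between the best- and worst-served groups."""
    scores = accuracy_by_group(y_true, y_pred, groups).values()
    return max(scores) - min(scores)

# Hypothetical predictions for two groups of patients.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1]
groups = ["A", "A", "A", "B", "B", "B"]
print(accuracy_by_group(y_true, y_pred, groups))  # group A: 1.0, group B: about 0.33
print(max_accuracy_gap(y_true, y_pred, groups))   # about 0.67, a gap worth investigating
```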

Regression Testing

Apart from testing new features, you also need to make sure that existing functionality is still in shipshape. Regression testing is one of the best ways to do this.

Why It’s Important: Ensures the model’s performance doesn’t degrade after updates.

- Ensure a new version of the model doesn’t perform worse than the old one.
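A simple way to automate this is to score the old and new model versions on the same fixed test set and fail the build if any metric drops by more than a small tolerance. This sketch assumes higher is better for every metric; the metric values and the 0.01 tolerance are hypothetical.

```python
def find_regressions(old_metrics, new_metrics, tolerance=0.01):
    """Return {metric: (old, new)} for every metric where the new model is worse by more
    than `tolerance` (or missing). Assumes higher is better, as for accuracy or recall."""
    return {
        name: (old_value, new_metrics.get(name))
        for name, old_value in old_metrics.items()
        if name not in new_metrics or new_metrics[name] < old_value - tolerance
    }

# Hypothetical scores of both versions on the same, fixed test set.
old = {"accuracy": 0.91, "recall": 0.84}
new = {"accuracy": 0.92, "recall": 0.80}
regressions = find_regressions(old, new)
print(regressions)  # {'recall': (0.84, 0.8)}
```

In a CI pipeline, an `assert not regressions` step would block this release, even though overall accuracy improved.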

Read: What is Regression Testing?

End-to-End Testing

Think like your user. What operations would they expect out of your application? That is precisely what you check in end-to-end testing.

Why It’s Important: Ensures the system works as expected once deployed.

- Check if a deployed fraud detection system processes transactions in real time.

Use intelligent testing tools that can handle many types of testing in one place, like testRigor. Because this tool uses generative AI, it lets you create, generate, or record test cases in plain English. It also gives you stable test runs because it does not depend on implementation details of UI elements, such as XPaths and CSS selectors. testRigor offers a strong set of commands for automating end-to-end, functional, regression, API, and UI test scenarios across different platforms: web, mobile (hybrid and native), and desktop.

Monitoring and Maintenance

This involves tracking the model’s performance after deployment to ensure it remains effective.

Why It’s Important: Models can degrade over time due to changes in the input data (data drift), changes in the relationship between inputs and outcomes (concept drift), or other factors.

- Monitor a language model for accuracy as new slang or terms emerge.

- Check if a pricing prediction model adapts to changing market trends.
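One widely used drift signal is the population stability index (PSI), which compares how a feature’s values are distributed across bins at training time versus in production. Below is a minimal sketch. The bin edges and price data are hypothetical, and the 0.25 alert level is a common rule of thumb rather than a universal standard.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted `edges`; eps avoids log(0) for empty bins."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    return [max(count / len(values), eps) for count in counts]

def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual_share - expected_share) * ln(actual_share / expected_share)."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

# Hypothetical house prices (in $1,000s) seen at training time vs. in production.
training_prices = [180, 210, 240, 260, 300, 320, 350, 400]
production_prices = [380, 410, 420, 450, 470, 500, 520, 560]
edges = [250, 300, 350, 400]
psi = population_stability_index(training_prices, production_prices, edges)
if psi > 0.25:  # rule of thumb: PSI above 0.25 signals a significant shift
    print(f"Significant drift detected (PSI = {psi:.2f}); consider retraining.")
```

PSI only tells you that the inputs have shifted. To confirm that accuracy has actually dropped, you still need fresh labeled data.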

Here’s a detailed list of what you can test when working with ML models:

Strategies for Testing ML Models

By now, you must have guessed that testing ML models is trickier than testing regular software. You can’t just check if it works. You need to make sure it works right in the real world. Here are some ideas to help you:

Test the Data, Not Just the Model

Data is the foundation of any ML model, and issues in data can lead to flawed models. Testing strategies should start with rigorous data validation.

- Verify the integrity, accuracy, and completeness of the training and test datasets.

- Check for data imbalances (e.g., too many examples of one class compared to others).

- Use exploratory data analysis tools to identify anomalies or patterns that could bias the model.

- Ensure data distributions in training, validation, and test sets are consistent with real-world data.

Define Clear Success Metrics

Select evaluation metrics that align with the business goals and model objectives.

- For classification problems, consider precision, recall, F1 score, or AUC-ROC, depending on the use case.

- For regression problems, use metrics like mean absolute error (MAE) or root mean squared error (RMSE).

- Use domain-specific metrics where applicable (e.g., the concordance index for time-to-event predictions in healthcare).

- Set thresholds for acceptable performance and incorporate these into automated tests.
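Here is a minimal sketch of these metrics, computed from scratch, along with a threshold check that an automated test can fail on. The predictions and threshold values are hypothetical; in a real project, you would agree on the thresholds with the business.

```python
import math

def precision_recall_f1(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def mae(y_true, y_pred):
    """Mean absolute error for regression problems."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error; penalises large errors more heavily than MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def failing_metrics(metrics, thresholds):
    """Names of metrics that fall below their minimum acceptable value."""
    return [name for name, minimum in thresholds.items() if metrics[name] < minimum]

# Hypothetical classifier output and acceptance thresholds.
scores = precision_recall_f1([1, 1, 1, 0, 0, 1], [1, 1, 0, 0, 1, 1])
print(scores)  # {'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
print(failing_metrics(scores, {"precision": 0.8, "recall": 0.7}))  # ['precision']
print(mae([3, 5], [2, 7]), rmse([3, 5], [2, 7]))
```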

Perform Iterative Validation

Rather than testing the entire model at once, validate in small, incremental steps.

- Validate each stage of the ML pipeline separately (e.g., data preprocessing, feature engineering).

- Start with small, simple models (baseline models) and test their performance before progressing to complex ones.

- Use holdout validation and cross-validation to assess model performance iteratively.
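The simplest baseline for a classifier is one that always predicts the most common training label. Any candidate model should clearly beat it. In this sketch, the labels and the 0.02 margin are hypothetical.

```python
from collections import Counter

def majority_baseline_accuracy(y_train, y_test):
    """Accuracy of always predicting the most common training label."""
    majority = Counter(y_train).most_common(1)[0][0]
    return sum(label == majority for label in y_test) / len(y_test)

def beats_baseline(model_accuracy, y_train, y_test, margin=0.02):
    """Hypothetical gate: the candidate must beat the baseline by at least `margin`."""
    return model_accuracy >= majority_baseline_accuracy(y_train, y_test) + margin

y_train = [0, 0, 0, 1, 0, 1, 0, 0]
y_test = [0, 1, 0, 0]
print(majority_baseline_accuracy(y_train, y_test))  # 0.75
print(beats_baseline(0.75, y_train, y_test))  # False: no better than always predicting 0
```

On imbalanced data, this comparison quickly reveals when an impressive-looking accuracy number is really just the class ratio.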

Incorporate Real-World Scenarios

Test the model with scenarios that mimic real-world conditions to ensure robustness.

- Use stress testing to evaluate how the model performs under edge cases or noisy data.

- Simulate changing data distributions or concept drift to see how the model handles new environments.

- Test the model’s behavior with adversarial examples, i.e., slightly modified inputs designed to confuse it.

Ensure Explainability and Transparency

Adopt strategies to test the interpretability and explainability of the model’s predictions.

- Use explainability tools, such as SHAP or LIME, to identify the factors driving the model’s decisions.

- Test if predictions align with domain knowledge and avoid reliance on irrelevant features.

- Incorporate explainability as part of user-facing applications to build trust with stakeholders.

Test for Bias and Fairness

Bias in models can lead to unfair or harmful outcomes. Testing for fairness should be a key strategy.

- Analyze the model’s performance across different demographic groups to ensure consistency.

- Use fairness metrics like disparate impact, equalized odds, or demographic parity.

- Regularly audit datasets and models for potential sources of bias.
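The fairness metrics named above can be computed from a model’s predictions with only a few lines of code. This sketch compares a hypothetical "privileged" and "unprivileged" group. Disparate impact is the ratio of selection rates, and a common rule of thumb (the "four-fifths rule") flags values below 0.8. The equalized odds gap here is the larger of the true-positive-rate and false-positive-rate differences. All data is hypothetical.

```python
def rate(numerator, denominator):
    return numerator / denominator if denominator else 0.0

def group_rates(y_true, y_pred, groups, group):
    """Selection rate, true-positive rate, and false-positive rate for one group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    positives = [i for i in idx if y_true[i] == 1]
    negatives = [i for i in idx if y_true[i] == 0]
    return {
        "selection_rate": rate(sum(y_pred[i] == 1 for i in idx), len(idx)),
        "tpr": rate(sum(y_pred[i] == 1 for i in positives), len(positives)),
        "fpr": rate(sum(y_pred[i] == 1 for i in negatives), len(negatives)),
    }

def fairness_report(y_true, y_pred, groups, privileged, unprivileged):
    p = group_rates(y_true, y_pred, groups, privileged)
    u = group_rates(y_true, y_pred, groups, unprivileged)
    return {
        "demographic_parity_difference": u["selection_rate"] - p["selection_rate"],
        "disparate_impact": rate(u["selection_rate"], p["selection_rate"]),
        "equalized_odds_gap": max(abs(u["tpr"] - p["tpr"]), abs(u["fpr"] - p["fpr"])),
    }

# Hypothetical hiring predictions (1 = advance to interview) for groups A and B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
report = fairness_report(y_true, y_pred, groups, privileged="A", unprivileged="B")
print(report)
if report["disparate_impact"] < 0.8:
    print("Disparate impact below the four-fifths guideline; investigate before release.")
```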

Automate Testing and Monitoring

Automate as much of the testing and validation process as possible to ensure consistency and efficiency.

- Use frameworks to automate data validation, performance testing, and drift detection.

- Integrate testing into CI/CD pipelines for continuous validation during development and deployment.

- Set up real-time monitoring to track model performance in production.

Plan for Continuous Improvement

ML models often require updates to maintain performance over time. Plan strategies to support ongoing improvements.

- Set up mechanisms to collect feedback from production environments (e.g., user interactions, new data).

- Schedule regular retraining with updated data to combat concept drift.

- Maintain a version control system for data, code, and models to enable easy rollback and comparison.

Use Ensemble Testing

For complex use cases, consider testing ensembles of models rather than relying on a single model.

- Combine predictions from multiple models to reduce variance and improve robustness.

- Test each individual model within the ensemble as well as the combined output.

- Use voting or averaging mechanisms to combine the models’ predictions, and evaluate the combined result.
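The sketch below shows both combination styles: majority (hard) voting over predicted labels and averaging (soft voting) over predicted probabilities. It scores each model on its own and then scores the ensemble. The three models’ predictions are hypothetical.

```python
from collections import Counter

def majority_vote(predictions_per_model):
    """predictions_per_model[m][i] is model m's label for example i; ties go to the first-seen label."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions_per_model)]

def average_probabilities(probabilities_per_model):
    """Soft voting: average each example's predicted probability across models."""
    return [sum(ps) / len(ps) for ps in zip(*probabilities_per_model)]

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical predictions from three models on four examples.
y_true = [1, 0, 1, 1]
model_preds = [[1, 0, 0, 1], [1, 1, 1, 1], [0, 0, 1, 0]]
for i, preds in enumerate(model_preds):
    print(f"model {i}: {accuracy(y_true, preds):.2f}")  # test each member on its own
ensemble = majority_vote(model_preds)
print("ensemble:", accuracy(y_true, ensemble))          # then test the combined output
```

Here, each model makes different mistakes, so the vote corrects them. If all members made the same errors, the ensemble would not help, which is why you should test the members individually too.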

Involve Domain Experts

Incorporate feedback and testing inputs from domain experts to validate model behavior.

- Collaborate with stakeholders to define edge cases and critical test scenarios.

- Use domain expertise to evaluate the relevance and correctness of predictions.

- Conduct user acceptance testing (UAT) where end-users validate the model in real-world conditions.

Monitor and Adapt Post-Deployment

Testing doesn’t end when the model is deployed. Continuous monitoring and adaptation are essential.

- Track performance metrics in production to detect degradation or drift.

- Use shadow testing (running a new model on live traffic alongside the current one, without serving its predictions to users) before replacing the current model.

- Implement alert systems to notify teams of significant drops in accuracy or other issues.
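Here is a minimal sketch of shadow testing and a simple accuracy alert. Users only ever receive the live model’s answer. The shadow model sees the same input, and any disagreements are logged for review. The models, thresholds, and the 0.05 allowed drop are hypothetical; a production setup would also isolate the shadow model so that its failures cannot affect live traffic.

```python
def serve_with_shadow(requests, live_predict, shadow_predict, disagreement_log):
    """Return live predictions; run the shadow model on the same inputs and log disagreements."""
    responses = []
    for request in requests:
        live = live_predict(request)
        shadow = shadow_predict(request)  # never returned to the user
        if shadow != live:
            disagreement_log.append({"request": request, "live": live, "shadow": shadow})
        responses.append(live)
    return responses

def should_alert(baseline_accuracy, current_accuracy, max_drop=0.05):
    """Alert when production accuracy falls more than max_drop below the accepted baseline."""
    return baseline_accuracy - current_accuracy > max_drop

# Hypothetical fraud scorers: the candidate uses a lower threshold than the live model.
live_model = lambda score: score > 0.5
shadow_model = lambda score: score > 0.4
log = []
responses = serve_with_shadow([0.3, 0.45, 0.7], live_model, shadow_model, log)
print(responses)  # [False, False, True]
print(log)        # one disagreement, for the 0.45 request
print(should_alert(0.92, 0.85))  # True: a 7-point drop exceeds the 5-point limit
```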

Maintain Ethical Oversight

Ethical considerations are a big part of working with AI and ML. You need to make sure that the testing strategies align with ethical guidelines and regulatory requirements.

- Review the impact of predictions on different groups and individuals.

- Ensure compliance with privacy and data protection regulations.

- Incorporate ethical considerations into the testing and monitoring process.

Challenges in ML Testing

Here are some of the reasons why ML testing is challenging:

- Dependency on Data: ML models are only as good as the data they have learned from. So, the quality of the training data matters a lot. If the data is messy, incomplete, or biased, the model won’t perform well. For example, if a model learns from a biased dataset (like hiring data that favors men), it will make biased decisions.

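- Non-Deterministic Behavior: Unlike traditional software, ML systems don’t always produce the same result from the same inputs, especially during training. Random weight initialization and data shuffling mean that training twice on the same data can yield two different models. Testing becomes tricky because you can’t always predict or replicate the results.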

- Difficulty in Explaining Predictions: ML models, especially complex ones like deep learning, often work like “black boxes” where it’s hard to understand how they make decisions. If you can’t explain why a model makes a certain prediction, it’s tough to trust or test it.

- Testing for Bias: Models can inherit biases from their training data, and identifying and fixing those biases can be difficult, especially when they’re hidden. For example, a facial recognition system might perform poorly for darker skin tones if it wasn’t trained on diverse images.

- Assessing Performance: It’s not always clear how to measure an ML model’s success because different scenarios require different metrics. If you choose the wrong metric, you get misleading information and an unrealistic picture of performance. For example, a fraud model that labels every transaction as legitimate scores 99% accuracy when only 1% of transactions are fraudulent, yet it catches no fraud at all.

- Dealing with Edge Cases: ML models struggle with rare or unusual inputs that they haven’t seen before, and these edge cases can cause them to behave unpredictably.

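- Integration with Real-World Systems: ML models usually aren’t used on their own; they’re part of bigger systems, such as apps or websites. Testing therefore has to cover how the model interacts with the data pipelines, services, and interfaces around it, not just the model in isolation.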
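One practical way to tame non-deterministic training in tests is to control the randomness explicitly. This sketch trains a tiny linear model with stochastic gradient descent, where both the initial weight and the example order are random, and shows that fixing the seed makes two runs identical. The model and data are hypothetical. Real frameworks have their own random number generators (and sometimes nondeterministic hardware kernels) that need to be seeded or configured separately.

```python
import random

def train_sgd(xs, ys, epochs=20, lr=0.01, seed=None):
    """Fit y = w*x + b with SGD; random initialisation and shuffling make training stochastic."""
    rng = random.Random(seed)         # a single seeded generator drives all randomness
    w, b = rng.uniform(-1.0, 1.0), 0.0
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)             # example order changes every epoch
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b

xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
run_a = train_sgd(xs, ys, seed=42)
run_b = train_sgd(xs, ys, seed=42)
print(run_a == run_b)  # True: same seed, same model
```

Seeding makes failures reproducible. However, it is also worth testing across several seeds to make sure good results are not a lucky draw.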

Conclusion

So, when testing ML models, you need to be very careful. As this article shows, your testing strategies need to account for the probabilistic nature of ML. Create an approach that suits your project’s or organization’s needs and is practical to carry out. And don’t forget to use intelligent, supportive testing tools to test your ML models thoroughly.
