Why DevOps Needs a ‘PromptOps’ Layer in 2026


In 2026, DevOps is no longer the same as it was at its inception.

Breaking down silos between developers and operations was the main focus of DevOps when it first emerged more than a decade ago. Next came Continuous Delivery, Infrastructure as Code (IaC), and ChatOps, the last of which brought workflows and commands into chat tools such as Microsoft Teams or Slack. Over time, automation grew from straightforward scripts into complex CI/CD pipelines.

AI-powered operations are now spreading across the IT world. With the support of protocols such as MCP (Model Context Protocol), AI agents and large language models (LLMs) can read logs, analyze issues, write code, and even execute operational fixes without step-by-step human supervision. However, this evolution also introduces new risks.

PromptOps can help with this.

PromptOps treats prompts much like code or infrastructure definitions: as operational assets that are governed, versioned, tested, and monitored. Without it, the use of AI in DevOps can become a source of unsupervised changes, unpredictable outputs, and security risks.


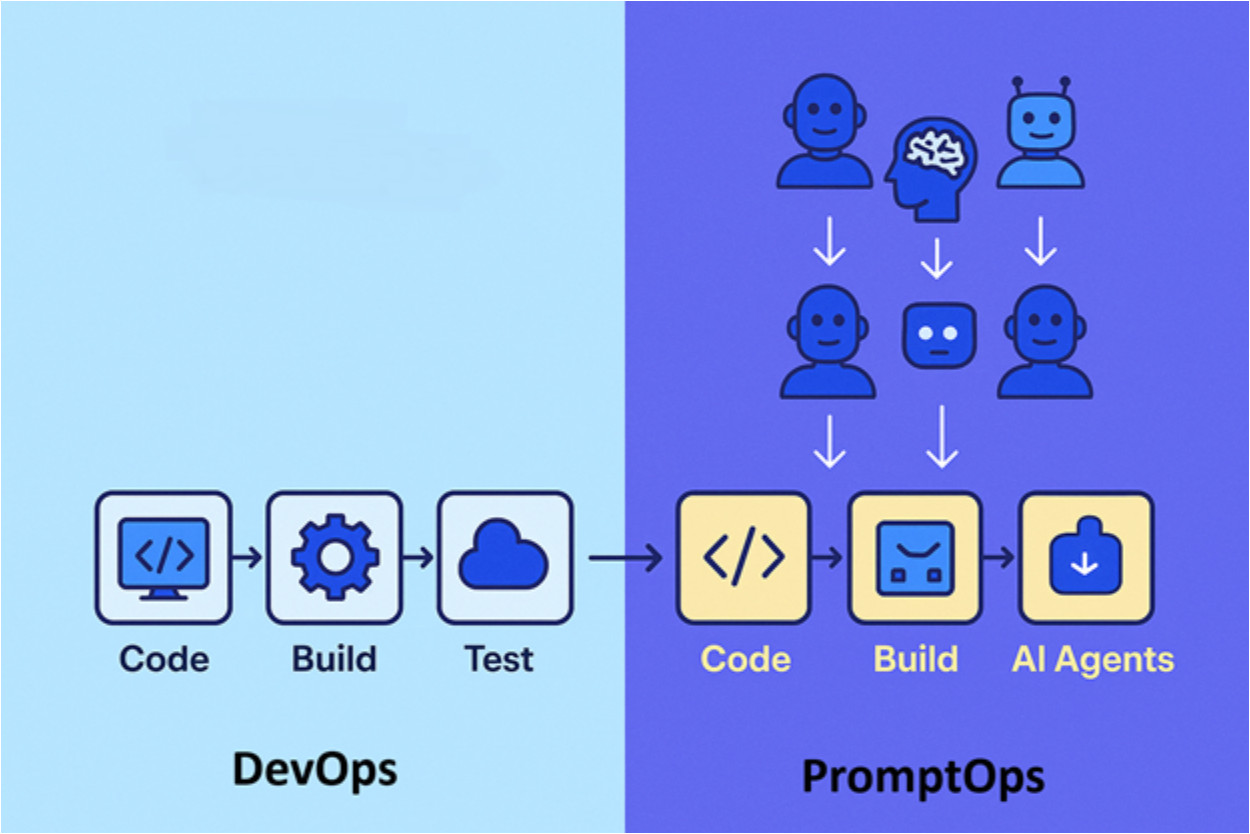

What is PromptOps?

Automation in conventional DevOps depends on explicit commands, such as Ansible playbooks, Terraform modules, and shell scripts. In AI-assisted workflows, automation often happens when LLMs or AI agents are given natural language instructions.

These prompts or instructions could:

- Request a deployment strategy.

- Request summaries of error logs.

- Initiate a scale-up for Kubernetes.

- Develop templates for Terraform infrastructure.

- Make code modification suggestions.

The systematic handling of these prompts is called PromptOps. It typically includes:

- Prompt Registry: A central, searchable hub for the prompts used in operational workflows.

- Version Control: Using history, diffs, and approvals to treat prompts as code.

- Automated Testing: Checking that prompts keep delivering the intended outcomes.

- Observability: Monitoring prompt performance and detecting drift.

- Governance: Rules that define who may modify prompts, how changes are approved, and how prompts are protected.

Why it is essential: Without PromptOps, prompts tend to become ad hoc snippets buried in personal notes or Slack conversations. Inconsistent results, security issues, and compliance headaches then become much more likely.
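To make the registry and versioning ideas concrete, here is a minimal in-memory sketch. The `PromptEntry` schema and the `PromptRegistry` class are hypothetical names chosen for illustration and do not correspond to any specific product; a real registry would likely sit on top of Git or a database.

```python
from dataclasses import dataclass, replace
from typing import Optional
import hashlib


@dataclass(frozen=True)
class PromptEntry:
    """One immutable version of an operational prompt (hypothetical schema)."""
    name: str
    version: int
    text: str
    owner: str
    approved_by: Optional[str] = None  # None means "not yet approved"
    tags: tuple = ()

    @property
    def checksum(self) -> str:
        # A content hash makes silent edits to the prompt text detectable.
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]


class PromptRegistry:
    """In-memory registry that keeps every version of every prompt."""

    def __init__(self) -> None:
        self._entries: dict[str, list[PromptEntry]] = {}

    def publish(self, name: str, text: str, owner: str, tags: tuple = ()) -> PromptEntry:
        # Each publish creates a new version; older versions are never overwritten.
        versions = self._entries.setdefault(name, [])
        entry = PromptEntry(name, len(versions) + 1, text, owner, tags=tags)
        versions.append(entry)
        return entry

    def approve(self, name: str, version: int, approver: str) -> PromptEntry:
        versions = self._entries[name]
        approved = replace(versions[version - 1], approved_by=approver)
        versions[version - 1] = approved
        return approved

    def latest_approved(self, name: str) -> PromptEntry:
        # Agents should only ever receive a version that went through approval.
        for entry in reversed(self._entries.get(name, [])):
            if entry.approved_by is not None:
                return entry
        raise LookupError(f"no approved version of prompt {name!r}")
```

In this sketch, publishing a second, unapproved version does not change what `latest_approved` returns, which is the behavior a governance workflow would usually want.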

PromptOps vs. ChatOps vs. AIOps vs. MLOps

A quick comparison shows where PromptOps fits in the DevOps ecosystem:

| Practice | Core Focus | Example Use Case |
|---|---|---|
| ChatOps | Chat-based command execution | Deploy staging in Slack |
| AIOps | AI/ML to identify anomalies and offer insights | Forecasting disk failures before they happen |
| MLOps | Handling the ML model lifecycle efficiently | Training, testing, and deploying predictive models |
| PromptOps | Managing LLM prompts for operational workflows | Using a stored, tested prompt to generate an incident remediation plan |

The four differ in focus. ChatOps is about how people trigger automation through chat. AIOps aims to identify operational issues using AI. MLOps treats models as deployable assets. PromptOps focuses on the prompts that connect people, AI agents, and DevOps automation.

Why Traditional DevOps Practices Fall Short

It may seem that existing DevOps practices already cover this, since they handle configurations, pipelines, and automation. However, modern AI-driven systems introduce challenges that traditional DevOps tooling was not designed to address. Some of these challenges are:

- Non-deterministic Behavior: CI pipelines behave deterministically. For example, tests pass or fail, and builds succeed or fail. Prompt-driven systems offer no such guarantee: the same prompt can produce different outputs on different runs. Evaluating an agent therefore means assessing its quality, safety, relevance, and consistency across many executions.

- Behavioral Regressions instead of Functional Failures: A prompt change might make an agent overly verbose, miss edge cases, take unsafe actions, or hallucinate more often. Traditional pass/fail checks are not designed to catch these regressions.

- Hidden Coupling to Model Versions: The same prompt may behave differently when the model is upgraded. This change needs to be tracked and evaluated explicitly; otherwise, it is difficult to understand why the agent's behavior suddenly shifted.

- New Attack Surfaces: Traditional security tooling was not designed to cover prompt injection, data leakage, and tool misuse. New monitoring strategies and defensive patterns are needed for these.

- Human-AI Interaction Loops: Traditional DevOps automation is largely system-to-system, whereas PromptOps involves humans in the loop, such as developers, operators, and support agents. Their trust and efficiency depend on AI reliability. Read: How to Keep Human In The Loop (HITL) During Gen AI Testing?

PromptOps addresses these gaps directly when it is integrated with DevOps.
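The non-determinism point can be illustrated with a small sketch: instead of a single pass/fail run, the same prompt is executed many times and the fraction of acceptable outputs is compared with a threshold. The `make_fake_model` function is a stand-in for a real LLM call, and the 0.8 threshold is an assumed quality gate, not a recommended value.

```python
import random
from typing import Callable


def pass_rate(generate: Callable[[str], str],
              prompt: str,
              check: Callable[[str], bool],
              runs: int = 20) -> float:
    """Run the same prompt `runs` times and return the fraction of outputs that pass `check`."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passed = sum(1 for _ in range(runs) if check(generate(prompt)))
    return passed / runs


def make_fake_model(seed: int) -> Callable[[str], str]:
    """Stand-in for an LLM call: usually a useful answer, occasionally an unhelpful one."""
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        if rng.random() < 0.1:
            return "I am not sure."
        return "Root cause: disk full. Action: rotate logs."

    return generate


def mentions_root_cause(output: str) -> bool:
    # A deliberately simple quality check used only for this illustration.
    return output.startswith("Root cause:")


rate = pass_rate(make_fake_model(seed=7), "Triage this incident.", mentions_root_cause, runs=50)
release_ok = rate >= 0.8  # assumed threshold for promoting the prompt
```

Recording the model version next to each measured rate would also make the hidden coupling to model upgrades visible over time.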

Why does DevOps need PromptOps now, in 2026?

The rise of agentic DevOps best explains this.

In 2026, AI agents in DevOps are no longer futuristic; they are becoming increasingly commonplace. With MCP, an AI agent can access CI/CD pipeline data, assess deployment logs, open pull requests, and automatically execute runbook steps.

As automation shifts from scripted to agent-driven, a considerable portion of operational decision-making is now steered by prompts.

The Risk of Not Using PromptOps

Without an effective PromptOps framework:

- Prompts Get Lost: Inconsistencies emerge because there is no single source of truth.

- Security Issues: Prompt injection attacks can trick AI into performing risky actions.

- No Auditability: It is difficult to reconstruct why an AI made a specific choice.

- Quality Drift: LLM updates can change prompt behavior unexpectedly.

- Noncompliance: Untracked prompts may not meet industry regulations.

The Benefits of Including a PromptOps Layer

- Governance: Approval and control workflows are centralized.

- Reliability: Validated prompts behave more consistently.

- Security: Prompts are scanned for potentially risky patterns.

- Observability: Metrics track prompt performance and failures.

- Collaboration: Devs, Ops, and Security teams can access a shared registry.

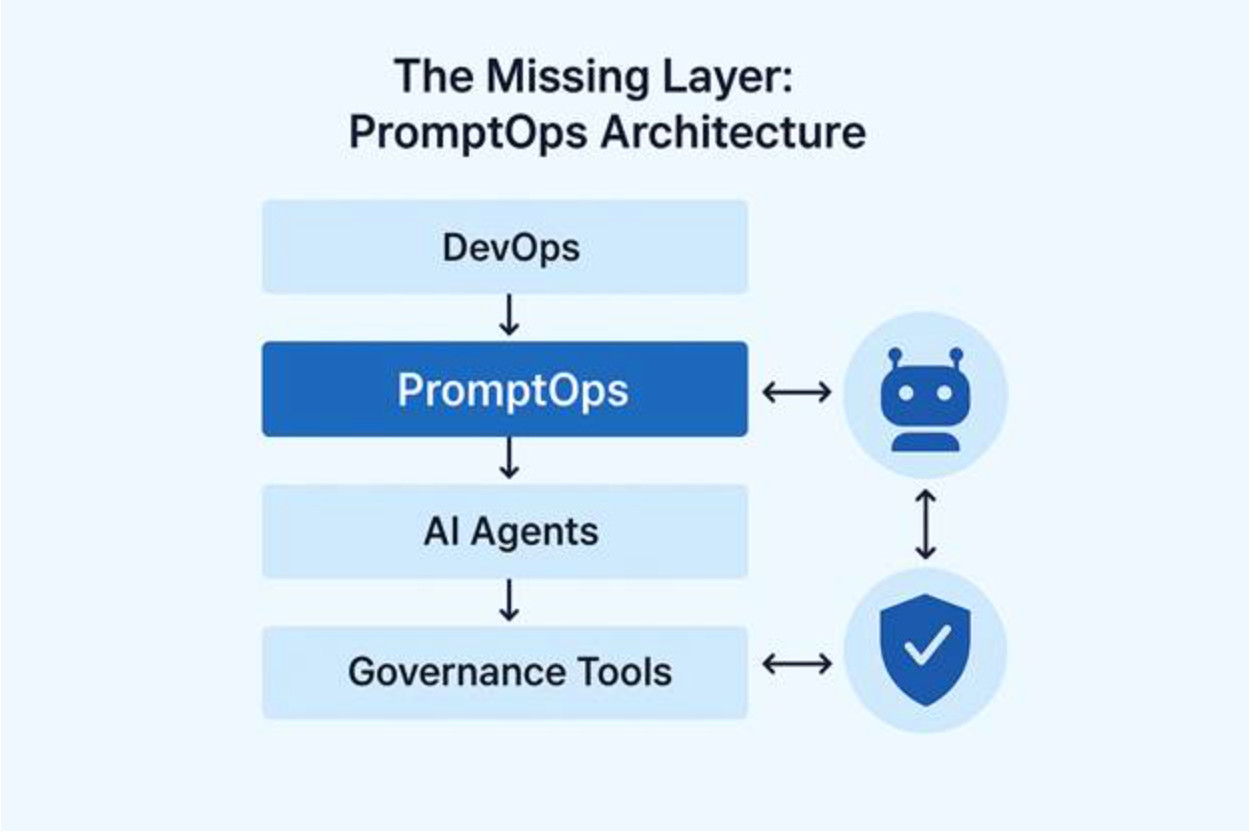

The PromptOps Architecture

For AI-driven DevOps, a PromptOps architecture provides a structured governance and operational framework that ensures prompts are managed as first-class operational assets rather than ad hoc text snippets.

Important Components of PromptOps Architecture

- Prompt Registry: The prompt registry supports discoverability and consistency across teams by working as a centralized database that stores all operational prompts currently in use, along with descriptions, tags, and ownership information.

- Version Control: When prompts are stored in Git or another VCS, every prompt change is tracked in the commit history. This allows for reviews, transparent approval procedures, and rollbacks that closely mirror software development.

- Testing Framework: Before prompt edits or LLM changes reach production environments, an automated system validates prompt outputs against expected results to detect regressions. Read: AI Features Testing: A Comprehensive Guide to Automation.

- Observability: The metadata recorded with each prompt execution, including time, user, execution latency, and success or failure, makes trend analysis and performance baselining possible.

- Security and Governance: Role-based permissions limit who is authorized to edit, approve, or deploy prompts, while automated scanning identifies risky instructions or patterns that could lead to security incidents.

- Integration Layer: This layer connects the prompt framework directly to DevOps tools such as Jenkins, GitHub Actions, Kubernetes, or Terraform, so that orchestration does not require manual reconfiguration.

Without these layers, prompts are scattered, unmonitored, and often risky, which can lead to inconsistent outputs or automation failures in production.
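As an illustration of the observability component, the sketch below records one entry per prompt execution and aggregates success rate and latency per prompt version and model. The `PromptExecution` fields are an assumed schema based on the metadata listed above, not a standard format.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PromptExecution:
    """Metadata captured for a single prompt run (hypothetical schema)."""
    prompt_name: str
    prompt_version: int
    model: str
    user: str
    latency_ms: float
    success: bool


def summarize(executions: list) -> dict:
    """Group runs by (prompt, version, model) and compute run count, success rate, and mean latency."""
    groups: dict = {}
    for ex in executions:
        key = (ex.prompt_name, ex.prompt_version, ex.model)
        groups.setdefault(key, []).append(ex)
    return {
        key: {
            "runs": len(items),
            "success_rate": sum(e.success for e in items) / len(items),
            "mean_latency_ms": mean(e.latency_ms for e in items),
        }
        for key, items in groups.items()
    }
```

Keying the summary on the model as well as the prompt version is a design choice: it lets a drop in success rate be attributed either to a prompt edit or to a model upgrade.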

MCP and AI Agents in PromptOps

The Model Context Protocol (MCP) provides the basis for interaction between AI agents and operational systems, replacing free-form, error-prone text exchanges with structured, context-aware interactions.

In a PromptOps setup:

- MCP enables AI agents to fetch structured operational data, such as logs, metrics, or configuration files, without exposing sensitive systems to unauthorized access.

- Prompts can carry contextual rules that restrict agents to authorized actions, helping avoid unintended system changes or data exposure.

- All agent executions are recorded in detailed logs, allowing incident reconstruction, debugging, and compliance audits when questions about AI-driven changes arise.

- Agents fetch prompts directly from the controlled registry, so every automation step uses vetted, pre-approved operational language.

- By integrating prompt-based decision-making into existing CI/CD pipelines, MCP helps move AI from an advisory role toward a dependable operational partner.

By tightly coupling MCP with PromptOps governance, organizations gain both autonomy and oversight: AI can take decisive steps without bypassing human accountability.
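The combination of "authorized actions only" and "every execution is logged" can be sketched in plain Python, without any MCP library. Everything here is hypothetical, including `ALLOWED_ACTIONS`, `run_agent_action`, and the action names; in a real deployment this kind of check would more likely be enforced at the MCP server or tool layer.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: which actions each approved prompt may trigger.
ALLOWED_ACTIONS = {
    "incident-triage": {"read_logs", "update_ticket"},
    "scale-service": {"read_metrics", "scale_deployment"},
}

# In-memory audit trail; a real system would write to durable storage.
audit_log: list = []


def run_agent_action(prompt_name: str, action: str, actor: str) -> bool:
    """Allow the action only if the prompt's policy lists it, and log every attempt."""
    allowed = action in ALLOWED_ACTIONS.get(prompt_name, set())
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt_name,
        "action": action,
        "actor": actor,
        "allowed": allowed,
    }))
    return allowed
```

Denied attempts are logged as well as allowed ones, since a refused action is often the most useful entry when reconstructing an incident.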

Security and Governance in PromptOps

AI-driven workflows often use natural language to execute actions, which can be more susceptible to manipulation or oversight gaps than scripted code. This creates new governance challenges that PromptOps must address:

- Prompt Injection: Using malicious instructions inserted into input data, attackers can trick AI into executing unsafe commands or exposing sensitive system data without permission. Read: How to Test Prompt Injections?

- Data Leakage: Poorly designed prompts may unintentionally request or display private information, exposing logs, customer data, or credentials to unauthorized parties.

- Shadow Changes: Without version control, team members might make ad hoc changes to prompts, skirting approval processes and introducing unpredictable operational risk.

Best Practices for PromptOps

- Store all prompts in a secure version control system that records every change, to simplify rollback and post-incident forensic auditing.

- To reduce the risk of insider threats, implement role-based access control so that only authorized individuals can create, edit, or approve prompts for production use.

- Use static prompt analysis tools to identify malicious phrases, dangerous commands, or excessive permissions before prompts reach deployment pipelines.

- Keep detailed execution audit logs that document who executed a prompt, when it was run, the inputs used, and the outputs obtained.

With these security controls in place, PromptOps shifts from an operational layer that aids productivity to one that also supports compliance with demanding industry standards.
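Static prompt analysis can be as simple as matching prompt text against a list of known-risky patterns before it is merged. The sketch below assumes a small, illustrative rule set; the pattern names and regular expressions are examples only and would need to be broader and tuned for a real environment.

```python
import re

# Illustrative patterns only; not a complete or authoritative rule set.
RISKY_PATTERNS = {
    "destructive shell command": re.compile(r"\brm\s+-rf\b", re.IGNORECASE),
    "instruction override": re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    "possible embedded secret": re.compile(r"(api[_-]?key|password|secret)\s*[:=]\s*\S+", re.IGNORECASE),
    "wildcard permission": re.compile(r'"Action"\s*:\s*"\*"'),
}


def scan_prompt(text: str) -> list:
    """Return the names of all risky patterns found in the prompt text."""
    return [name for name, pattern in RISKY_PATTERNS.items() if pattern.search(text)]


findings = scan_prompt("Clean up with rm -rf /tmp/cache and use password=hunter2")
# findings == ["destructive shell command", "possible embedded secret"]
```

Pattern matching like this catches only obvious cases, so it complements rather than replaces review, access control, and audit logging.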

Use Cases for PromptOps in DevOps

Here are some common use cases of PromptOps in DevOps:

- Incident Management:

  - AI agents fetch an established “incident triage” prompt.

  - They collate logs, examine symptoms, and suggest solutions.

  - They automatically update incident tickets.

- On-Call Efficiency:

  - AI summarizes several alerts into actionable steps.

  - It suggests relevant runbook entries.

- Self-Repair Runbooks: To fix common issues without human assistance, agents run pre-approved prompts.

- Cost Optimization: AI analyzes cloud utilization and suggests scaling modifications.

- Security Patching: The agent monitors CVE feeds and suggests PRs for resolutions.

Where Software Testing fits into PromptOps

The effectiveness of PromptOps depends on the quality of its prompts. This is where AI-driven test automation comes in.

Software testing is necessary because:

- LLM updates can modify output behavior.

- Minor wording changes can impact workflows.

- Prompt regressions can lead to incidents.
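One generic way to catch such regressions is a set of golden cases that run whenever the prompt or the model changes. The sketch below is tool-agnostic and is not testRigor's API; `GoldenCase`, `run_regression`, and `stub_model` are hypothetical names, and `stub_model` stands in for a real LLM call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    """An input plus phrases the output must and must not contain."""
    name: str
    user_input: str
    must_contain: list
    must_not_contain: list


def run_regression(generate: Callable[[str, str], str], prompt: str, cases: list) -> list:
    """Return the names of cases whose output violates its expectations."""
    failures = []
    for case in cases:
        output = generate(prompt, case.user_input).lower()
        missing = [s for s in case.must_contain if s.lower() not in output]
        forbidden = [s for s in case.must_not_contain if s.lower() in output]
        if missing or forbidden:
            failures.append(case.name)
    return failures


def stub_model(prompt: str, user_input: str) -> str:
    # Stand-in for a real model call (assumption for this sketch).
    if "disk" in user_input:
        return "Root cause: disk full. Suggested action: rotate logs."
    return "Unable to determine the cause. Escalate to on-call."


CASES = [
    GoldenCase("disk-full", "disk usage at 99%", ["root cause", "rotate logs"], ["rm -rf"]),
    GoldenCase("unknown-error", "service returns 500", ["escalate"], ["restart production"]),
]

failures = run_regression(stub_model, "You are an incident triage assistant.", CASES)
```

Substring checks are a coarse signal; because outputs vary between runs, a team would typically combine them with repeated executions or a more semantic comparison.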

How testRigor Enables PromptOps Testing

With its Gen AI-powered, no-code testing platform, testRigor can support:

- Automated prompt testing in CI/CD.

- Regression detection in response to model or prompt changes.

- Testing edge cases by mimicking real-world inputs.

- Integrating with version control to prevent merges in situations where tests fail.

- Testing end-to-end, web, mobile, and API workflows for AI agents.

This helps build stability and trust in AI-powered automation. Read: How to use AI to test AI.

Step-by-Step Guide to PromptOps Implementation

- Examine AI usage: Map all AI workflows currently in use and identify where prompts directly drive operational decisions.

- Define governance guidelines: All production-bound prompts need explicit approval, security, and version control policies.

- Build a prompt registry: For ease of retrieval, centralize each prompt together with its ownership, usage, and description metadata.

- Integrate with DevOps pipelines: Build prompt execution and prompt updates directly into CI/CD processes for seamless deployment.

- Include testing frameworks: Minimize errors by using automated tools to validate prompt outputs before the production rollout.

- Enable observability: For auditing, troubleshooting, and optimization, record each prompt execution along with performance metrics.

- Train teams: Educate developers, operators, and testers on PromptOps best practices and operational guidelines.
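The governance and pipeline-integration steps can be tied together with a small check that runs in CI and fails the build when a prompt lacks required metadata. This is a sketch under assumptions: `REQUIRED_FIELDS` and the metadata layout are hypothetical and should be replaced with whatever your own policy requires.

```python
# Hypothetical required metadata; adjust to your own governance policy.
REQUIRED_FIELDS = ("owner", "description", "approved_by", "test_suite")


def governance_violations(prompts: dict) -> dict:
    """Map each prompt name to the required fields it is missing or leaves empty."""
    problems: dict = {}
    for name, meta in prompts.items():
        missing = [f for f in REQUIRED_FIELDS if not meta.get(f)]
        if missing:
            problems[name] = missing
    return problems


def ci_exit_code(prompts: dict) -> int:
    """Return 0 when every prompt passes the policy and 1 otherwise, for use as a CI step result."""
    problems = governance_violations(prompts)
    for name, missing in problems.items():
        print(f"{name}: missing {', '.join(missing)}")
    return 1 if problems else 0
```

A pipeline step could load the prompt metadata from the repository, call `ci_exit_code`, and pass the result to `sys.exit` so that unapproved or untested prompts block the merge.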

The ROI of a PromptOps Layer

Implementing PromptOps can provide compounding returns in quality, productivity, and operational risk reduction. Its value is not restricted to technical governance. Here are the key ROI drivers.

- Reduced Incident Expenses: PromptOps reduces AI-driven errors by ensuring that prompts are version-controlled and validated, which over time lowers incident resolution costs and related downtime penalties.

- Faster Adoption of AI: Standardized prompt processes and governance frameworks speed up enterprise AI rollout, lower the barriers to experimentation, and shorten the path from proof of concept to production.

- Improved Developer Productivity: Consistent, pre-approved prompt libraries that work reliably in production let developers and DevOps engineers spend more time innovating and less time troubleshooting unexpected AI behaviors.

- Decreased Risk of Noncompliance: Through integrated security checks, audit trails, and role-based approvals, PromptOps lowers the risk of regulatory violations that might lead to penalties or reputational damage.

- Higher Automation Dependability: By helping AI-driven processes deliver reliable results, PromptOps frameworks reduce rework, failed deployments, and operational uncertainty, all of which erode trust in automation systems.

- Optimized Resource Utilization: With automated prompt testing and performance monitoring, teams can identify inefficient prompts proactively, which reduces unnecessary compute expenses and avoids wasteful resource allocation.

Build vs Buy: Tools for PromptOps in 2026

- Prompt registries (SaaS/OSS).

- MCP servers from providers such as AWS, Azure, and GitHub.

- Observability platforms with AI logging.

- Testing tools such as testRigor.

How can DevOps take Advantage of AI?

DevOps has always focused on improving productivity, reducing risk, and enabling teams to deliver more with less effort. The rise of LLM-driven workflows and AI-powered agents in 2026 represents a turning point, bringing both new operational vulnerabilities and previously unanticipated potential. PromptOps helps teams navigate this new frontier safely and successfully.

Organizations can use AI with the same level of rigor as infrastructure-as-code or CI/CD pipelines by viewing prompts as operational assets that are versioned, tested, governed, and monitored. Combined with resilient testing tools like testRigor, this gives organizations greater confidence that AI will behave consistently, securely, and in alignment with their goals.

PromptOps is not just a best practice; it is the operational guardrail for AI in DevOps. While the DevOps environment evolves swiftly, one rule is timeless: automation should be as reliable as it is powerful. PromptOps helps ensure that this standard is met in the age of AI-driven operations.

The Future: PromptOps as Standard Practice

PromptOps is likely to become as standard as CI/CD pipelines or infrastructure as code. Tooling will mature, patterns will stabilize, and best practices will be widely shared.

However, the underlying reason for using PromptOps will remain the same: Systems must be managed with discipline when they become powerful enough to shape outcomes.

In 2026, AI is no longer optional. It is embedded in how software is built, deployed, and operated. Prompts and agents are part of the production surface area. Ignoring that reality creates hidden fragility.
