Battle of the Testers: Technical vs. Non-Technical in the AI Era


The rapid development of AI has opened up revolutionary opportunities within the software development process. Testing, which has traditionally been split between technical and non-technical skills, is undergoing one of its most significant transformations. Categorising testers by coding skill, tooling familiarity, or manual testing competency no longer adequately describes the roles, functions, and contributions of today's QA practitioners.

Let's discuss what AI means for existing definitions of a tester, how technical and non-technical roles are being redefined, and where the discussion in the testing industry is heading. This article aims to give organisations, leaders, and practitioners a holistic approach to transforming teams, processes, and skills so that they can keep pace with these changes.

The objective is not to decide which type of tester "wins" but rather how each type will need to change: which skill sets will shape tomorrow's QA teams, and what the new balance between human and machine input will look like.


Understanding the Traditional Division

The distinction between technical and non-technical testers emerged from necessity, at a time when functional precision and technical scalability needed to be reconciled. The two groups' strengths have long complemented each other.

The Technical Tester

Technical software testers, otherwise known as test automation engineers, SDETs (Software Development Engineers in Test), or quality engineers, typically possess the following characteristics:

- Strong background in programming or scripting languages

- Ability to create, run, and maintain automated test cases

- Understanding of testing frameworks, CI/CD systems, and software architecture

- Comfort with debugging, reviewing logs, and analyzing system-level behavior

- Experience in performance, API, integration, and system testing

Their work provides consistency, speed, and scalability in situations where automation is needed for repetitive regression coverage or continuous deployment pipelines.

The Non-Technical Tester

Non-technical testers, otherwise called manual or domain-focused testers, usually bring strengths in the following areas:

- Exploratory testing

- Business logic validation

- Requirements analysis and clarity

- UX and usability evaluation

- User acceptance testing

- Domain-specific risk assessment

These testers excel at work that requires human judgment, domain knowledge, or a nuanced understanding of how people behave.

Why the Division Persisted

The division persisted because both areas were needed. Technical testers drove automation and efficiency, whilst non-technical testers provided insight into accuracy, relevance, focus, and user-centred quality. However, the split also produced unintended consequences:

- Non-technical testers being perceived as less valuable

- Divergent career trajectories and pay structures

- Friction and silos between automation and manual QA teams

- Unrealistic expectations that automation alone could ensure quality

AI challenges the relevance of this historical division.

Read: AI QA Tester vs. Traditional QA Tester: What’s the Difference?

Traditional Technical vs. Non-Technical Testers

The historical split between technical and non-technical testers reflected a trade-off between automation productivity and human validation. The following table summarizes how the two roles have historically contributed to software quality through different skills, responsibilities, and strengths.

| Category | Technical Tester | Non-Technical Tester |
|---|---|---|
| Primary Focus | Automation creation, scripting, framework maintenance | Manual testing, exploratory testing, and business validation |
| Core Skills | Programming, scripting, debugging, CI/CD tools | Requirements analysis, domain knowledge, usability evaluation |
| Test Activities | Writing automated tests, integrating them into pipelines, API and performance testing | Executing test cases, identifying UX/usability issues, and validating user workflows |
| Strengths | Scalability, speed, consistency, repeatability | Human judgment, contextual awareness, and user empathy |
| Approach to Testing | Structured, tool-driven, code-based validation | Intuitive, exploratory, scenario-driven validation |
| Knowledge Areas | Software architecture, APIs, databases, and system behavior | Business logic, customer workflows, product rules |
| Value Contribution | Increases test coverage and reduces manual effort | Ensures relevance, real-world accuracy, and human-centered quality |
| Limitations | High script maintenance; requires strong coding skills | Limited scalability; lacks automated regression coverage |
| Common Responsibilities | Building automation suites, maintaining locators, and integrating tests with CI/CD | Exploratory testing, test case execution, defect reporting, and acceptance testing |

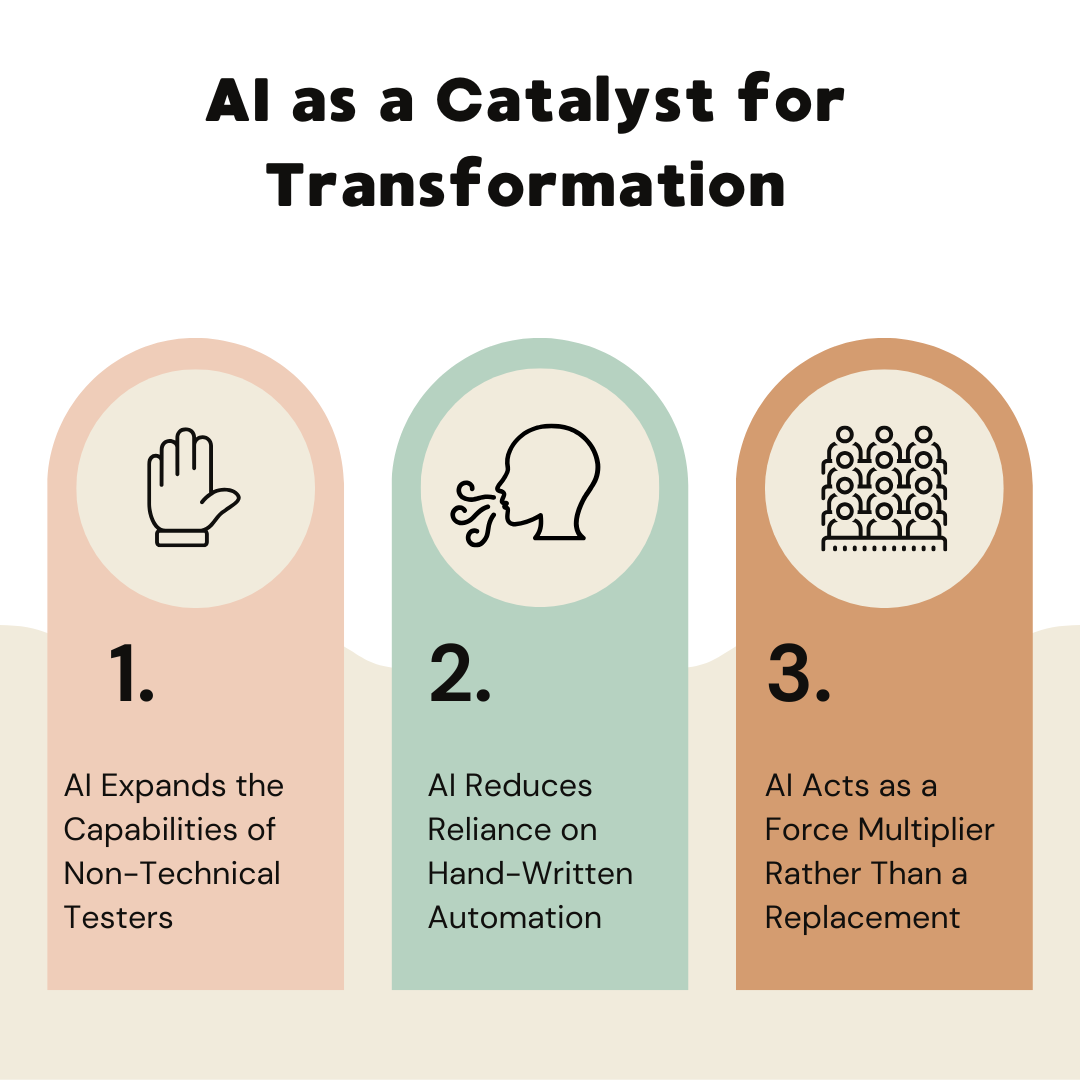

AI as a Catalyst for Transformation

AI introduces capabilities that fundamentally challenge the assumptions underlying the two traditional categories. It not only speeds up decision-making but also transforms how teams think about and work with data. By moving beyond automation into analysis and reasoning, AI is reshaping how businesses have always worked and what it means to be efficient.

AI Expands the Capabilities of Non-Technical Testers

Historically, non-technical testers either executed tests manually or used tools that did not support automation. AI-powered testing platforms change this by enabling the following:

- Test creation through natural language instructions

- Automated test maintenance through self-healing features

- Automated detection of UI, functional, or behavioral changes

- Analytical insights based on user behavior or defect patterns

- Conversion of business requirements into executable tests

These platforms remove the need for scripting skills and let testers contribute at a much more strategic level. Teams can thus democratize test automation and empower everyone, including those with less technical knowledge, to take an active part in quality assurance.

AI Reduces Reliance on Hand-Written Automation

Technical testers built careers around writing and maintaining automated scripts. AI introduces the following shifts:

- Automated generation of test cases

- Intelligent selection of tests based on impact analysis

- Reduced need for element locator maintenance

- AI-generated assertions

- Automated regression selection
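To make "intelligent selection of tests based on impact analysis" more concrete, here is a minimal sketch in Python. It assumes a hypothetical coverage map from each test to the source files it exercises (in practice this might be derived from a coverage tool) and selects only the tests that touch the files changed in a commit. AI-driven platforms may use far richer signals, so treat this as an illustration of the underlying idea rather than how any particular tool works.

```python
from typing import Dict, List, Set


def select_impacted_tests(coverage_map: Dict[str, Set[str]], changed_files: Set[str]) -> List[str]:
    """Return the tests whose covered files overlap the changed files, sorted by name.

    coverage_map: hypothetical mapping of test name -> source files the test exercises.
    changed_files: source files modified in the current change set.
    """
    impacted = [
        test
        for test, files in coverage_map.items()
        if files & changed_files  # any overlap means the change may affect this test
    ]
    return sorted(impacted)


if __name__ == "__main__":
    # Hypothetical example data for illustration only.
    coverage = {
        "test_login": {"auth.py", "session.py"},
        "test_checkout": {"cart.py", "payment.py"},
        "test_profile": {"session.py", "profile.py"},
    }
    print(select_impacted_tests(coverage, {"session.py"}))  # prints ['test_login', 'test_profile']
```

A change to `session.py` selects only the two tests that exercise it, while `test_checkout` is skipped. The trade-off is that the selection is only as good as the coverage map, which is one reason human review of impact analysis still matters.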

Automation engineers are increasingly measured not by the number of lines of code they write, but by their ability to monitor systems, validate AI-generated signals, and plan testing approaches at a higher level of abstraction.

Read: Test Automation with AI.

AI Acts as a Force Multiplier Rather Than a Replacement

AI speeds up delivery, broadens the scope of testing, and cuts down on low-level repetition, but testing still requires human expertise. The role of humans changes from execution to validation, risk assessment, strategic oversight, and supervision. Rather than replacing human judgment, AI enhances it, allowing testers to concentrate on complex cases where intuition and domain knowledge are needed. This combination of human judgment and machine scale produces better results than either could achieve alone.

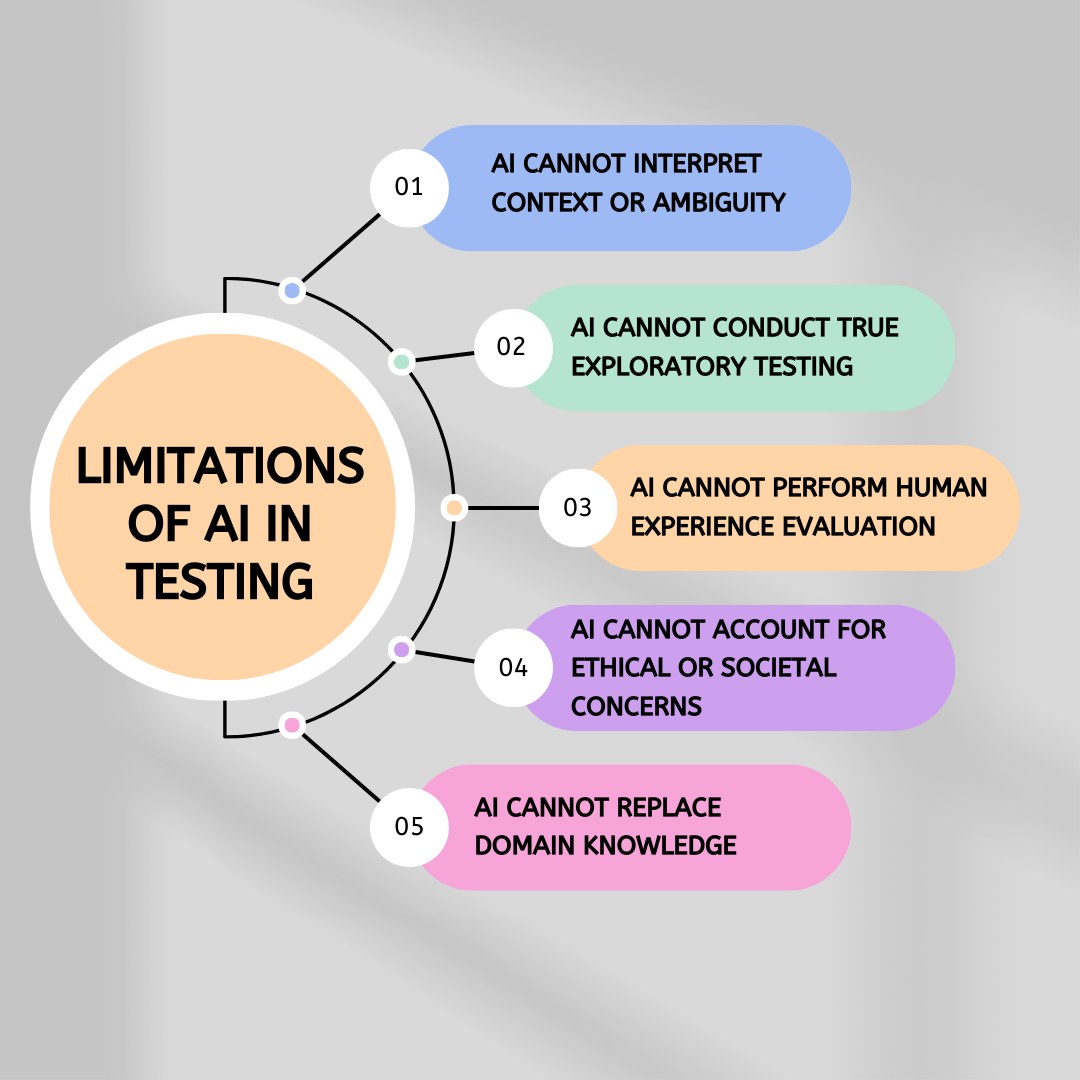

Limitations of AI in Testing

Understanding AI's limitations is critical for deciding where human judgment cannot be replicated in testing. AI enables automation and accelerates coverage, but there is only so much it can assess well. Awareness of these limits helps businesses distribute responsibilities effectively between human testers and AI-based tools.

AI can NOT Interpret Context or Ambiguity

AI relies on statistical patterns, not genuine comprehension. Ambiguity, conflicting interpretations, and domain-specific nuances in requirements must be interpreted by humans, and AI output can itself contain hallucinations. These constraints underscore the need for human judgment to establish meaning and validity before automation is implemented. AI cannot reliably:

- Identify subtle contradictions in requirements

- Evaluate business alignment

- Understand regulatory or compliance implications

- Recognize missing requirements

AI can NOT Conduct True Exploratory Testing

Exploratory testing requires human domain knowledge, creativity, and inductive reasoning. AI has no genuine curiosity or situational awareness, and it cannot shift the focus of testing based on intuition or experience. As a result, AI-enabled exploration is limited to predefined paths and lacks truly exploratory behavior.

AI can NOT Perform Human Experience Evaluation

Usability, accessibility, intuitiveness, and overall user experience are matters of subjective human perception. Countless nuances of emotional response, cognitive effort, and user comfort shape how people interact with a product, and AI cannot evaluate these accurately. AI cannot reliably judge:

- If a workflow is confusing

- If a button label is misleading

- If a chatbot response sounds inappropriate

- If the visual hierarchy is aligned with human expectations

AI can NOT Account for Ethical or Societal Concerns

AI components embedded in applications can suffer from bias, fairness issues, and flawed decision-making. Human judgment is needed to ensure that AI-driven behavior is compatible with ethical and social norms and with the values of the organization. Testers must evaluate:

- AI decision boundaries

- Potential discrimination

- Bias in training data

- Ethical implications

AI cannot evaluate itself for ethical soundness.

AI can NOT Replace Domain Knowledge

Testing in areas such as healthcare, finance, insurance, logistics, and compliance requires domain expertise. Experienced domain testers know undocumented rules, historical constraints, and client expectations that AI cannot deduce.

Read: Top Challenges in AI-Driven Quality Assurance.

Skills Required in the AI-Driven Testing Era

AI-driven software testing demands a wider and more diverse range of capabilities than ever before. The old distinction between technical and non-technical testers is breaking down as both take on overlapping responsibilities. In this ecosystem, a set of core competencies determines a tester's effectiveness and long-term relevance.

Read: Top QA Tester’s Skills in 2025.

AI Fluency

AI fluency demands that testers be active, tactical users of AI tools rather than passive consumers. This involves writing clear natural-language instructions, checking AI-generated outputs, and pinpointing mistakes or misunderstandings. Testers also need to understand the risks and limitations of AI and to incorporate AI-provided insights effectively into their overall quality strategy.

Data Literacy

With Machine Learning (ML)-powered analytics increasingly influencing quality-related decisions, data literacy is a necessity. Testers need to know how to read a dashboard, understand impact analysis, and notice patterns or deviations. This skill also depends on distinguishing genuine signals from false positives and misleading indicators.
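One common way to separate genuine signals from false positives is to look for flaky tests. The sketch below is a simplified heuristic, not a feature of any specific analytics tool: it assumes a hypothetical list of `(test_name, commit_id, passed)` records and flags a test as flaky when it both passed and failed on the same commit, since the code did not change between those runs.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical result record: (test_name, commit_id, passed).
Result = Tuple[str, str, bool]


def find_flaky_tests(results: Iterable[Result]) -> List[str]:
    """Return the names of tests that both passed and failed on the same commit.

    A mixed outcome on unchanged code suggests the failure is noise
    (a likely false positive) rather than a genuine regression signal.
    """
    # Collect the set of outcomes seen for each (test, commit) pair.
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in results:
        outcomes[(test, commit)].add(passed)

    # Two distinct outcomes means both True and False were observed.
    flaky = {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)
```

For example, a test that fails consistently on one commit is reported as a real signal (not flaky), while one that flips between pass and fail on the same commit is flagged for investigation. Note that a test passing on one commit and failing on the next is deliberately not flagged, because that pattern may point to a genuine regression.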

Enhanced Communication and Collaboration

Today, QA must be fully integrated with many different teams, including developers, product managers, UI/UX designers, data scientists, and security specialists. Testers should communicate clearly and build a shared understanding among all stakeholders. This involves stating assumptions explicitly and explaining the rationale behind testing decisions to support informed, cross-functional alignment.

Domain Specialization

As AI takes on general or shallow testing tasks, domain specialization will grow in importance. Testers who understand industry regulations, business workflows, and customer pain points can uncover risks that AI cannot foresee. This knowledge enables more rigorous validation of real-world edge cases and helps ensure alignment with industry standards.

Strategic and Analytical Thinking

Testing is transitioning from primarily execution-based work to roles based on strategy and informed decision-making. This requires testers to be skilled at test prioritization, risk-based testing, and root-cause analysis. A solid grasp of system architecture and quality governance further strengthens their ability to lead testing efforts effectively.

Adaptability

AI and testing tools are maturing rapidly, changing both how work is done and what is expected. Testers need to be avid learners, eager to adopt new methods and emerging technologies. Adaptability is essential, because success depends on a growing capacity to move quickly into new workstreams and responsibilities.

Read: AI Engineer: The Skills and Qualifications Needed.

The Evolving Role of Technical Testers

Technical testing is not going away, but technical testers need to move beyond scripting and framework building. Their duties now also include monitoring AI-based automation and verifying complex system behavior to resolve problems that AI tools cannot accurately interpret.

They also need to work closely with development and architecture teams on integration, performance, and reliability issues. As testing becomes more intelligent and self-directed, technical testers are expected to operate at a higher level of strategy and engineering.

Read: The Future of Testing.

Shift Toward Higher-Level Engineering Work

Technical testers are increasingly asked to take on engineering-level activities, such as verifying that AI-driven automation behaves as intended and debugging complex failures that automated systems cannot diagnose on their own. Their job now also includes building specialized test harnesses, working closely with developers on API and performance testing, and ensuring quality across distributed or microservices architectures.

In addition, platforms such as testRigor, which reduce the need for script-heavy automation, continue to shift technical testers toward strategic oversight, complex debugging, and engineering design rather than conventional script building.

Focus on System-Level Quality

Technical testers need to focus more on system-level characteristics such as scalability, performance, reliability, security, and robustness. Their duties also involve evaluating the efficiency of CI/CD systems to ensure that quality is built into automated delivery pipelines. These responsibilities require a strong understanding of software architecture, as they involve analyzing complex interactions across distributed systems.

AI Oversight Becomes a Core Responsibility

AI supervision has become a key task for technical testers, who validate the correctness and coverage of AI-generated test cases. They also need to make sure that the logic behind AI-driven operations matches the application structure, and to test the robustness of AI-driven predictions under varied scenarios. As a result, the technical skill set shifts away from implementing automation and toward monitoring and verifying AI-based test systems.

The Strengthened Role of Non-Technical Testers

Non-technical testers become increasingly important as AI reaches its limits in assessing the human aspects of software quality. Their expertise in user behavior, business context, and real-world workflows enables them to catch issues that automated systems miss.

This perspective may become even more important as AI takes over more mundane execution steps, since it is what keeps products relevant and usable.

Increased Influence on Automated Coverage

Natural-language test generation and AI-assisted workflows allow testers with no coding skills to turn their domain knowledge into automated tests. Solutions like testRigor take this a step further by letting testers write and maintain automated tests purely in natural language, without learning a scripting language or writing code. This means that testers who focus on exploratory, domain-driven work can capture real-world user flows as automated tests and expand regression coverage.

Read: Decrease Test Maintenance Time by 99.5% with testRigor.

Expanded Focus on Exploratory and Contextual Testing

Non-technical testers play a major role in exploratory and contextual testing, which rely on human judgment to uncover issues that automation cannot find. Their ability to find usability issues, confirm business logic, and validate workflows under realistic scenarios provides critical insight into product quality. This user-centred perspective ensures that even minor inconsistencies and usability issues are captured and communicated.

Critical Involvement in AI System Testing

With AI being included in more products, non-technical testers are also essential for testing fairness, bias, and real-world behavioral effects. Their participation is crucial for evaluating user perception, validating non-functional behavior, and ensuring that AI-driven features behave responsibly. These tasks currently require human involvement and cannot be handed off entirely to automation.

New Roles Emerging in the AI Era

As AI transforms testing routines, new roles are emerging that combine technical depth, analytics, and human-centered competencies. These roles address gaps in traditional testing, especially in AI governance, ethical review, and data-informed decision-making. Together, they form a blueprint for future QA teams and signal the increasingly interdisciplinary skill set that will be required.

Read: How to Keep Human In The Loop (HITL) During Gen AI Testing?

- AI-Augmented Tester: Testers leverage AI tools as part of their day-to-day work, blending human intuition with machine automation to quickly increase coverage and efficiency. They are the link between domain expertise and AI-based automation.

- Quality Strategist / Test Architect: These roles will define the overall testing strategy that blends AI automation, exploratory testing, analytics, and risk-based approaches. They work at the strategic level to ensure testing is aligned with business requirements and system design.

- AI Quality Supervisor: This role is responsible for evaluating AI-generated test assets, confirming model behavior, and ensuring that AI-based testing components function reliably. Supervisors track AI maintenance logic and evaluate its alignment with changing application architectures.

- UX and Accessibility Quality Specialist: Experts in this area will make sure digital products meet accessibility requirements and provide an inclusive experience for all users. Their work focuses on usability, clarity, cognitive load, and adherence to accessibility guidelines.

- AI Model Tester: These testers evaluate ML components for fairness, bias, accuracy, stability, and security, helping ensure that AI-based capabilities behave ethically and work reliably in various real-life situations.

- Data-Driven Test Analyst: These analysts interpret AI-generated insights, investigate anomaly patterns, and make quality risks visible through data analysis. Their findings support informed decision-making and continuous improvement throughout the testing life cycle.

Organizational Challenges and Required Adaptation

The adoption of AI brings both structural and cultural challenges that organizations need to navigate carefully. Beyond tooling changes, teams must address shifts in workflows, expectations, roles, and responsibilities to integrate AI successfully into quality processes. Building a mature AI-empowered testing environment also requires intentional transformation, supportive leadership, and transparent governance.

- Resistance to Change: Teams might resist integrating AI for multiple reasons, such as fear of job loss, limited knowledge of AI capabilities, reliance on established automation methods, or inadequate training and support.

- Quality as a Shared Responsibility: Organizations need to reiterate constantly that quality cannot be the sole responsibility of QA teams; it requires collaboration across design, development, product, data, and engineering functions.

- Governance and Oversight: AI-enabled testing demands a robust governance framework that includes human validation, accountability mechanisms, documentation of AI’s decisions, and persistent monitoring for unintended consequences.

The Decline of the Technical vs. Non-Technical Divide

The distinction between technical and non-technical testers becomes increasingly irrelevant as testing practices evolve. In a world of AI-driven tools and cross-functional collaboration, analytical thinking, strategic insight, and human-centered evaluation are essential skills for every tester. As a result, future testers will be defined less by their position on a technical continuum and more by their ability to adapt and solve problems.

Key Future Characteristics of Testers

Future testers will be expected to:

- Understand the role and limitations of AI in the testing lifecycle

- Contribute to automated test coverage, even without traditional coding skills

- Execute strategic, analytical, and exploratory testing responsibilities

- Collaborate effectively across engineering, product, UX, data, and AI/ML teams

- Evaluate quality holistically, considering functional, non-functional, ethical, and user-experience dimensions

The future of testing prioritizes adaptability, intelligence, and critical reasoning over rigid labels.

Wrapping Up

In a world where AI-driven testing is the next frontier for software quality, the long-standing barrier between technical and non-technical testers becomes obsolete. What ultimately counts is a tester's capacity to think critically, understand context, work strategically with AI, and contribute meaningful insights that machines cannot provide. As the role of testing shifts from execution to intelligence, the best testers will be those who can evolve, keep learning, and combine human judgment with AI capabilities. The outcome is a QA organization that is more cohesive, collaborative, and future-proof, where humans and machines strengthen each other.
