Feature Flags: How to Test?

Feature flags are specialized mechanisms in software development that let teams ship code safely, quickly, and with greater flexibility. However, this is only possible if they are tested well. Poorly tested feature flags can lead to hidden bugs, unusual edge cases, and the dreaded “it only breaks in production” moments.


This is a practical, in-depth guide to testing feature flags end to end, from unit tests to production monitoring.

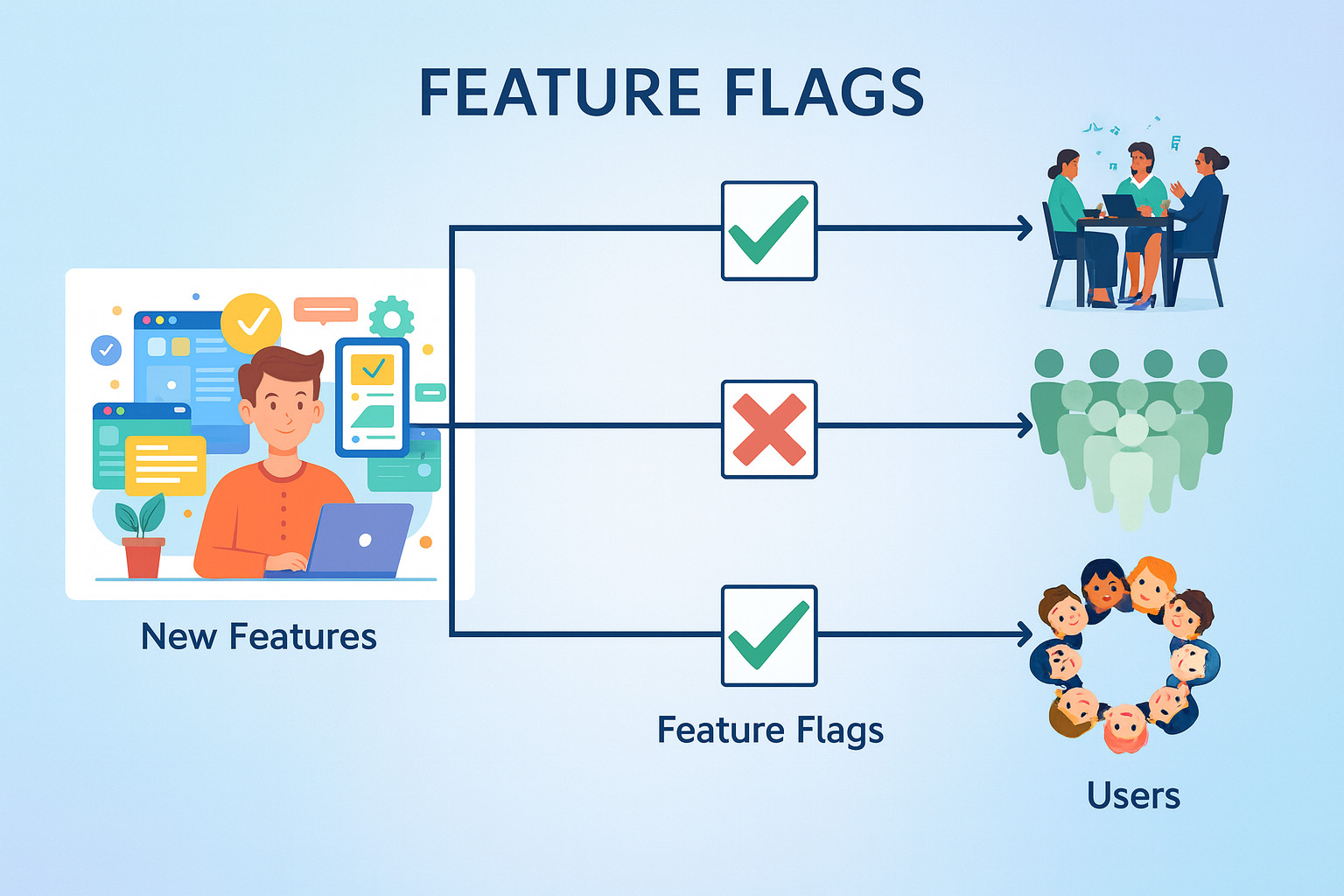

What Are Feature Flags?

Feature flags (also called feature toggles) are a software development mechanism for managing features in a controlled way. They let you:

- Turn a feature on or off without redeploying.

- Gradually roll out features to a subset of users.

- Run experiments / A/B tests for the application.

- Control kill switches for risky functionality.

Using feature flags substantially increases the scope and complexity of testing. Each flag introduces new permutations of system state that must be rigorously tested to ensure stability and performance, and this overhead can stretch resources and extend timelines.

To keep the overhead manageable, organizations adopt automated testing frameworks that can exercise multiple flag states. It is also advisable to clearly define the testing scope and objectives for each feature flag based on its type and purpose.

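In code, a flag usually boils down to a conditional branch:

```javascript
// Branch on the flag value: new path when on, existing path when off
if (flags.newBehaviorEnabled) {
  testNewBehavior();
} else {
  testOldBehavior();
}
```

Even this single flag creates two paths that both need tests:

- Flag off → existing behavior

- Flag on → new behavior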

The code above becomes more complex as you add more flags and nest or combine them, so you need a deliberate strategy for testing feature flags.
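To see how quickly this grows, here is a minimal Python sketch (the flag names are hypothetical) that enumerates every on/off combination for a set of boolean flags. With n independent boolean flags there are 2^n combinations, so three flags already produce eight states.

```python
from itertools import product

def flag_combinations(flag_names):
    """Return every on/off assignment for the given boolean flags."""
    # product((False, True), repeat=n) yields all 2**n tuples of booleans
    return [dict(zip(flag_names, values))
            for values in product((False, True), repeat=len(flag_names))]

# Hypothetical flag names, for illustration only
flags = ["new_checkout", "new_pricing", "dark_mode"]
combos = flag_combinations(flags)
print(len(combos))  # 8
print(combos[0])    # {'new_checkout': False, 'new_pricing': False, 'dark_mode': False}
```

Exhaustively testing every combination is rarely practical beyond a handful of flags, which is one more reason to keep flags few and mostly independent.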

The best way to keep feature flags testable and maintainable is to keep their number to the bare minimum needed to meet the system’s goals.

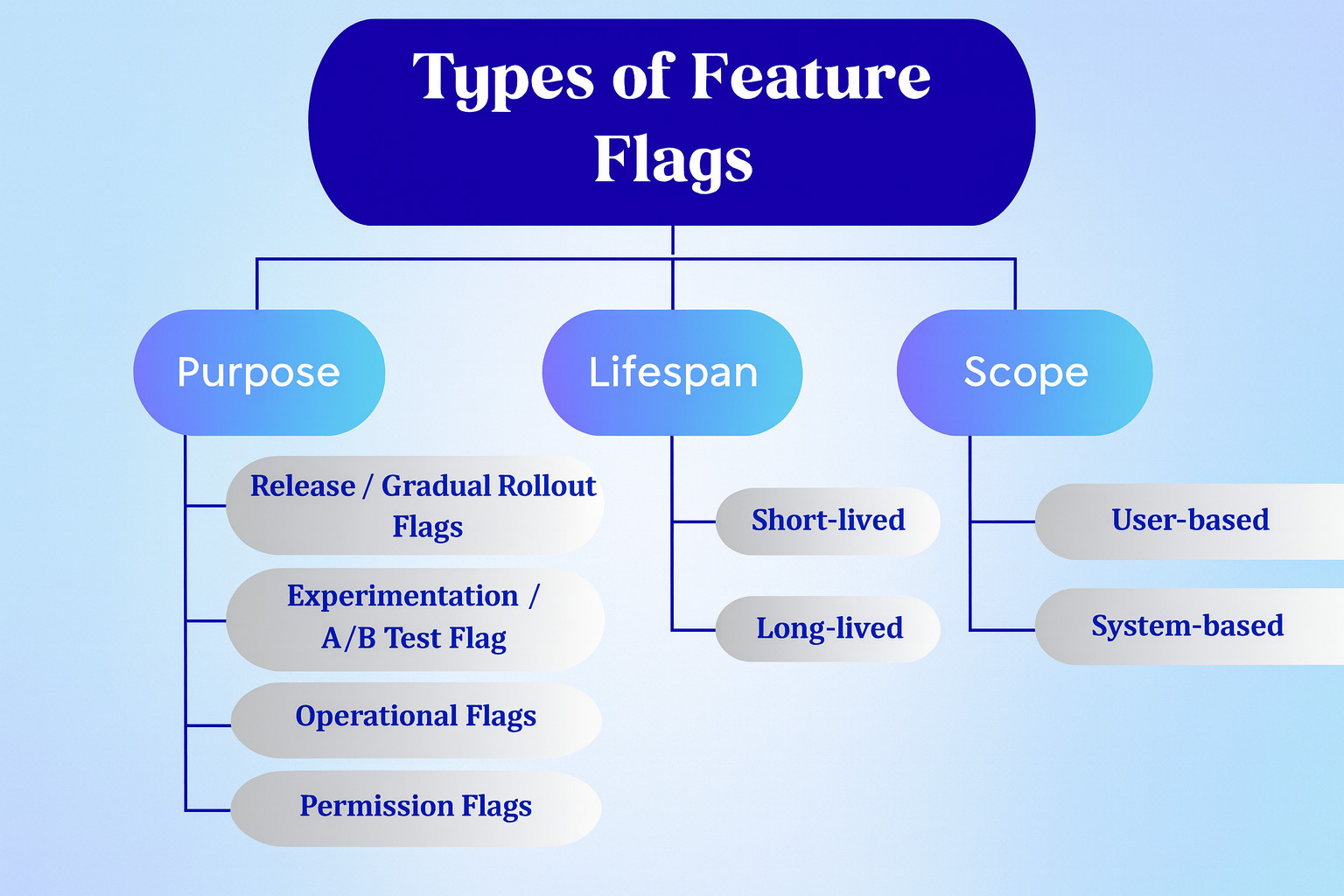

Types of Feature Flags

Feature flags are mainly classified by purpose into four types. The following table summarizes them:

| Flag Type | Objectives | Key Testing Focus | Dependencies | Compliance Considerations |
|---|---|---|---|---|
| Release | Controlled feature rollout | Functional testing | User segments, application versions | Release management procedures |
| Experimentation | User experience metrics | A/B testing | A/B testing capabilities, user data | Data privacy regulations |
| Operational | Performance and system stability | Performance and resilience testing | System resources, backend services | Operational standards and regulations |
| Permission | Feature availability and user access | Security testing | Security protocols, user roles | Data access and security |

Release / Gradual Rollout Flags

Release flags let you ship code “dark” (deployed but hidden) and enable it for a subset of users. They are usually temporary and are removed once the rollout is complete. Testing focuses on both the old and new code paths (backward compatibility) and on the internal users who see the feature first. It also covers the full rollout, including making sure that turning the flag off is safe even after a partial rollout has produced data.
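Gradual rollouts are commonly implemented by hashing a stable user ID into a bucket from 0 to 99 and enabling the flag when the bucket falls below the rollout percentage. The sketch below assumes that approach (the hashing scheme and function names are illustrative, not any particular vendor's implementation). It checks two properties worth testing: a user's decision is stable across calls, and raising the percentage never turns the feature off for someone who already had it.

```python
import hashlib

def rollout_bucket(flag_key: str, user_id: str) -> int:
    """Map (flag, user) deterministically to a bucket in 0..99 (hypothetical scheme)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100

def is_enabled(flag_key: str, user_id: str, percentage: int) -> bool:
    # Users whose bucket is below the rollout percentage get the feature
    return rollout_bucket(flag_key, user_id) < percentage

users = [f"user-{i}" for i in range(1000)]

# Stability: the same user always gets the same answer
assert all(is_enabled("new_checkout", u, 20) == is_enabled("new_checkout", u, 20)
           for u in users)

# Monotonicity: everyone enabled at 10% is still enabled at 50%
at_10 = {u for u in users if is_enabled("new_checkout", u, 10)}
at_50 = {u for u in users if is_enabled("new_checkout", u, 50)}
assert at_10 <= at_50
```

Because the bucket depends only on the flag key and user ID, widening the rollout adds users without reshuffling those already enabled.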

Experimentation / A/B Test Flags

Experimentation flags run controlled experiments with multiple variants (for example, “control”, “treatmentA”, and “treatmentB”) and split traffic according to specific rules.

Testing focuses primarily on correct assignment logic, ensuring each user consistently gets the same variant. It also verifies that metrics and events are recorded correctly for each variant and that no variant disrupts core journeys such as login or purchase.
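Assignment logic can be tested directly. This minimal sketch assumes variants are assigned by hashing the experiment key and user ID (a common approach, though your flag platform may use a different algorithm). The checks confirm that assignment is sticky and that each variant receives a reasonable share of traffic.

```python
import hashlib
from collections import Counter

VARIANTS = ["control", "treatmentA", "treatmentB"]

def assign_variant(experiment: str, user_id: str) -> str:
    """Deterministically assign a user to one variant (hypothetical scheme)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # Interpret the first 8 bytes as an unsigned integer to pick a variant
    index = int.from_bytes(digest[:8], "big") % len(VARIANTS)
    return VARIANTS[index]

users = [f"user-{i}" for i in range(3000)]

# Sticky: repeated evaluations give the same variant
for u in users[:100]:
    assert assign_variant("checkout_test", u) == assign_variant("checkout_test", u)

# Split: each variant should get roughly a third of 3,000 users
counts = Counter(assign_variant("checkout_test", u) for u in users)
assert set(counts) == set(VARIANTS)
assert all(800 < n < 1200 for n in counts.values())
```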

Operational Flags

Operational flags are used to control the operational aspects of a system. System behavior during different conditions or incidents is managed using these flags.

They are system-based and affect the entire application, and they play an essential role when migrating from one back end to another or adopting a new type of service.

Examples of operational flags include “Maintenance mode”, “Disable payments”, and “Use backup provider”.

Testing should confirm that toggling the flag on or off is reliable under stress and that switching states does not corrupt data.
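A kill switch test should check both states and make sure the “on” path leaves data consistent. In this hypothetical sketch, a `disable_payments` flag makes order placement park the order as pending instead of charging. The flag name, the `Order` type, and the payment service are illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class Order:
    order_id: str
    amount: int
    status: str = "new"

@dataclass
class PaymentService:
    """Hypothetical payment client that records which orders were charged."""
    charged: list = field(default_factory=list)

    def charge(self, order: Order) -> None:
        self.charged.append(order.order_id)

def place_order(order: Order, flags: dict, payments: PaymentService) -> Order:
    # Kill switch: when payments are disabled, park the order instead of
    # attempting a charge, so no order is left half-processed
    if flags.get("disable_payments", False):
        order.status = "pending_payment"
        return order
    payments.charge(order)
    order.status = "paid"
    return order

# Flag off: the normal path charges and marks the order paid
payments = PaymentService()
paid = place_order(Order("o-1", 500), {"disable_payments": False}, payments)
assert paid.status == "paid" and payments.charged == ["o-1"]

# Flag on: no charge is attempted and the order is clearly marked
parked = place_order(Order("o-2", 700), {"disable_payments": True}, payments)
assert parked.status == "pending_payment" and payments.charged == ["o-1"]
```

This only covers the two steady states; a fuller suite would also flip the flag mid-run and under load.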

Permission Flags

Permission flags are used to control feature access based on user segmentation or roles, allowing fine-grained control over access.

For example, early adopters might receive a new product experience while VIP customers get a different deposit process. With permission flags, engineers can test in production without affecting the general user population.

Testing permission flags focuses on regression coverage for every supported configuration and on ensuring that unsupported combinations fail fast or are blocked.
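One way to make unsupported combinations fail fast is to declare the supported ones explicitly and reject everything else. The roles, feature names, and `UnsupportedFlagCombination` exception below are hypothetical. Known roles without access are simply blocked (`False`), while unknown roles or features raise immediately instead of silently defaulting.

```python
class UnsupportedFlagCombination(Exception):
    """Raised when a role/feature pair is not part of any supported configuration."""

KNOWN_FEATURES = {"new_product_page", "vip_deposit_flow"}

# Explicit allow-list: role -> features that role may use
SUPPORTED = {
    "early_adopter": {"new_product_page"},
    "vip": {"new_product_page", "vip_deposit_flow"},
    "standard": set(),
}

def can_use(role: str, feature: str) -> bool:
    """Return True/False for supported inputs; fail fast on anything else."""
    if role not in SUPPORTED or feature not in KNOWN_FEATURES:
        raise UnsupportedFlagCombination(f"unsupported: role={role!r}, feature={feature!r}")
    return feature in SUPPORTED[role]

# Regression coverage: every supported (role, feature) pair
for role, features in SUPPORTED.items():
    for feature in KNOWN_FEATURES:
        assert can_use(role, feature) == (feature in features)

# Unsupported input fails fast
try:
    can_use("guest", "vip_deposit_flow")
except UnsupportedFlagCombination:
    pass
else:
    raise AssertionError("expected UnsupportedFlagCombination")
```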

Flags can also be classified by lifespan and scope:

- Short-lived: These flags exist only for a single release or experiment cycle.

- Long-lived: These flags persist across multiple release cycles, though still for a limited number of them.

- User-based: These flags directly affect the user experience and control what users see.

- System-based: These flags control operational aspects of the application.

What Exactly Should You Test?

Before going further, decide exactly what you are trying to test and why. Are you testing how your app interacts with feature flags, or whether the flagging system itself works? Are you testing compatibility or performance? Settle this before moving on to other aspects.

The main dimensions to consider are:

- Functional Behavior: Each flag state (on/off or each variant) behaves as expected. Read: Functional Testing Types

- State Transitions: Flipping the flag at different times doesn’t break anything, and the transition is smooth. Read: What is State Transition Testing?

- User Targeting: The right users see the right behavior.

- Data and Compatibility: Data written in one state can be safely read in another.

- Performance: Flag evaluation doesn’t degrade performance or cause latency or memory issues. Read: What is Performance Testing

- Resilience: The application keeps working when the flag service is down or slow. Read: Reliability Testing

Whichever testing strategies you use (unit, integration, E2E, and so on), cover these dimensions across environments.
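For the resilience dimension, a common pattern is to wrap flag evaluation so that any failure of the flag service falls back to a safe default. The sketch below assumes a provider object with an `is_enabled(name)` method (a hypothetical interface, not a specific SDK) and tests the fallback with a test double that simulates an outage.

```python
import logging

logger = logging.getLogger("flags")

class SafeFlagClient:
    """Wraps a flag provider and returns a safe default when it fails."""

    def __init__(self, provider, defaults):
        self.provider = provider
        self.defaults = defaults  # safe value per flag, used on failure

    def is_enabled(self, name: str) -> bool:
        try:
            return bool(self.provider.is_enabled(name))
        except Exception as exc:  # network errors, timeouts, bad payloads
            logger.warning("flag %s unavailable, using default: %s", name, exc)
            return self.defaults.get(name, False)

class DownProvider:
    """Test double that simulates an unreachable flag service."""
    def is_enabled(self, name):
        raise ConnectionError("flag service unreachable")

class UpProvider:
    """Test double that answers from a fixed dict."""
    def __init__(self, values):
        self.values = values

    def is_enabled(self, name):
        return self.values[name]

outage = SafeFlagClient(DownProvider(), defaults={"new_checkout": False})
assert outage.is_enabled("new_checkout") is False

healthy = SafeFlagClient(UpProvider({"new_checkout": True}), defaults={"new_checkout": False})
assert healthy.is_enabled("new_checkout") is True
```

The broad `except` is deliberate so the app never crashes on flag evaluation. Handling a slow service additionally requires a timeout in the provider, which this sketch does not show.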

Next, we will discuss various testing strategies for feature flag testing.

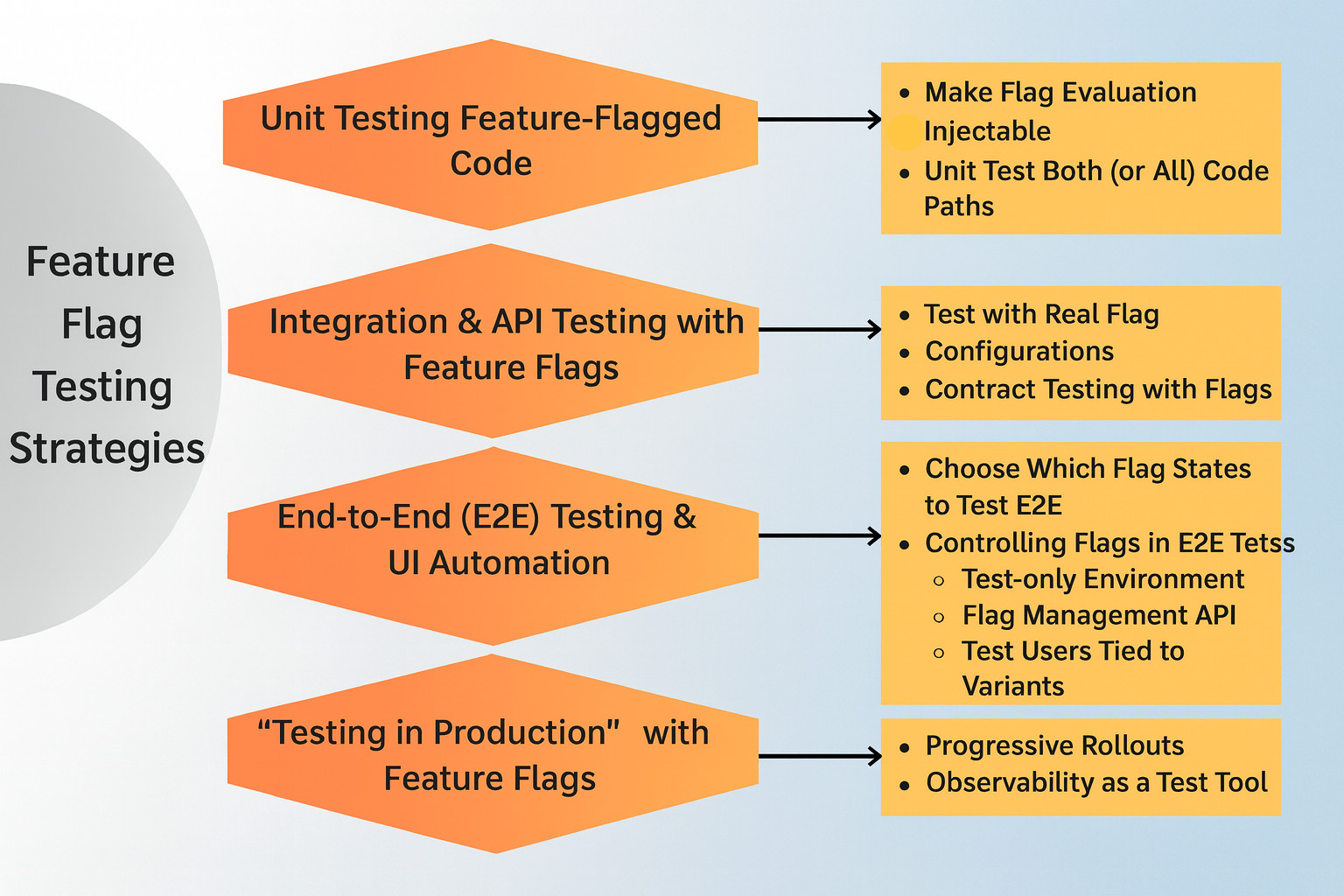

Unit Testing Feature-Flagged Code

Unit testing is the simplest part of testing feature-flagged code. Unit tests validate individual pieces of functionality, including those wrapped in feature flags. Write unit tests as usual for every piece of code in the application and test each piece independently.

Common strategies for unit testing flag-dependent code are:

Make Flag Evaluation Injectable

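Pass the flag client into your code as a parameter instead of reading it from a global, so tests can substitute a fake. Here `FakeFlags` and the two checkout functions are minimal stand-ins so the example runs on its own:

```python
from types import SimpleNamespace

class FakeFlags:
    """Test double: answers is_enabled() from a plain dict."""
    def __init__(self, values):
        self.values = values

    def is_enabled(self, name):
        return self.values.get(name, False)

# Hypothetical implementations of the two checkout flows
def new_checkout(total):
    return SimpleNamespace(payment_screen="new", total=total)

def old_checkout(total):
    return SimpleNamespace(payment_screen="old", total=total)

def checkout(total, flags):
    # The flag client is injected, so tests decide which path runs
    if flags.is_enabled("new_checkout"):
        return new_checkout(total)
    return old_checkout(total)

# In tests, pass a fake flags object instead of the real client
def test_checkout_new_flow_enabled():
    flags = FakeFlags({"new_checkout": True})
    result = checkout(100, flags)
    assert result.payment_screen == "new"
```

Each test can then pin the flag to a known value and exercise exactly one code path.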

Unit Test Both (or All) Code Paths

- Flag off: Test the existing behavior when the flag is off. It should remain unchanged.

- Flag on: Test the new version when the flag is on and validate the new logic.

- Multi-variant: If the flag has several variants, test each one separately.
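A table-driven test keeps every path visible in one place. This sketch uses a hypothetical multi-variant `checkout_layout` flag with a fake flag source; the variant names and page titles are illustrative.

```python
class FakeVariants:
    """Test double returning a fixed variant per flag (defaults to 'off')."""
    def __init__(self, values):
        self.values = values

    def variant(self, name):
        return self.values.get(name, "off")

def render_checkout(flags):
    # One branch per variant, plus the existing behavior for "off"
    layout = flags.variant("checkout_layout")
    if layout == "one_page":
        return "One-page checkout"
    if layout == "two_step":
        return "Two-step checkout"
    return "Classic checkout"

# Every state of the flag, including "off", gets an explicit expectation
CASES = {
    "off": "Classic checkout",
    "one_page": "One-page checkout",
    "two_step": "Two-step checkout",
}

def test_all_checkout_paths():
    for variant, expected in CASES.items():
        flags = FakeVariants({"checkout_layout": variant})
        assert render_checkout(flags) == expected, variant

test_all_checkout_paths()
```

With pytest, the same `CASES` table could feed `@pytest.mark.parametrize`, so each variant is reported as a separate test.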

Integration and API Testing with Feature Flags

Unit tests cannot show whether services collaborate correctly when flags are toggled; that requires integration testing. The following techniques are used for integration testing with feature flags:

Test with Real Flag Configurations

Run integration tests against realistic flag configurations, such as:

- All flags off (baseline case).

- New feature on for a specific user segment.

- Mixed flags, especially when they interact with one another (e.g., new checkout + new pricing).

Run these configurations in environments such as:

- Local environments (e.g., Docker Compose).

- Shared integration environments with a dedicated test flag configuration.
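Rather than all 2^n combinations, integration suites usually run a short list of named, realistic configurations. In this sketch the pricing rules, flag names, and expected totals are invented for illustration; in a real integration suite each scenario would configure the flag service and call the deployed services instead of a local function.

```python
def order_total(subtotal: float, flags: dict) -> float:
    """Hypothetical pricing that two flags influence."""
    total = subtotal
    if flags.get("new_pricing"):
        total *= 0.9   # illustrative: new pricing applies a 10% discount
    if flags.get("new_checkout"):
        total += 2.0   # illustrative: new checkout adds a flat handling fee
    return round(total, 2)

# Named scenarios: baseline, each flag alone, and the interaction
SCENARIOS = {
    "baseline": ({}, 100.0),
    "new_pricing_only": ({"new_pricing": True}, 90.0),
    "new_checkout_only": ({"new_checkout": True}, 102.0),
    "checkout_plus_pricing": ({"new_checkout": True, "new_pricing": True}, 92.0),
}

for name, (flags, expected) in SCENARIOS.items():
    actual = order_total(100.0, flags)
    assert actual == expected, f"{name}: expected {expected}, got {actual}"
```

The combined scenario matters most: it catches ordering bugs (discount before or after the fee) that neither single-flag scenario would reveal.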

Contract Testing with Flags

In every flag state, contract tests should check that the API keeps its agreed:

- Response shapes

- Error formats

- Required fields

They should also confirm that:

- Existing consumers still work when the flag is on.

- The new behavior conforms to a documented contract.

- Backward-incompatible changes either:

  - Use versioned APIs, or

  - Are only enabled when all consumers are ready.

Read: API Contract Testing: A Step-by-Step Guide to Automation
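A lightweight way to check backward compatibility is to assert that every field in the old contract is still present, with the same type, when the flag is on. The response payload and field names below are hypothetical; a real suite would more likely use a schema validator or consumer-driven contract testing.

```python
# Fields (and types) that existing consumers rely on
OLD_CONTRACT = {"order_id": str, "total": float, "status": str}

def get_order_response(flags: dict) -> dict:
    """Hypothetical handler: the new flow adds a field but keeps the old ones."""
    response = {"order_id": "o-1", "total": 42.0, "status": "paid"}
    if flags.get("new_checkout"):
        response["payment_screen"] = "new"  # additive, backward compatible
    return response

def contract_violations(response: dict, contract: dict) -> list:
    """List every required field that is missing or has the wrong type."""
    problems = []
    for field_name, field_type in contract.items():
        if field_name not in response:
            problems.append(f"missing {field_name}")
        elif not isinstance(response[field_name], field_type):
            problems.append(f"wrong type for {field_name}")
    return problems

# The old contract must hold in both flag states
for state in (False, True):
    resp = get_order_response({"new_checkout": state})
    assert contract_violations(resp, OLD_CONTRACT) == [], state
```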

End-to-End (E2E) Testing and UI Automation

E2E-testing every flag combination is usually impractical, but you should cover all critical paths. Use the following approaches for E2E testing:

Choose Which Flag States to Test E2E

Prioritize E2E coverage for:

- High-impact user workflows such as signup, checkout, and payments.

- Flags that change UI workflows.

- Flags that interact with external systems, such as payment and identity providers.

At a minimum, include:

- At least one E2E test with the flag off (baseline case).

- At least one E2E test with the flag on, covering the new behavior.

- For experiments, one test per variant that materially changes the flow.

Controlling Flags in E2E Tests

- Test-only Environment: Flags are hardcoded or configured to known values per test suite.

- Flag Management API: Flags are set before test runs and reset afterward using the feature flag platform’s API. Make sure everything is cleaned up after the tests.

- Test Users Tied to Variants: Test users are created so that their IDs are guaranteed to fall into specific variants, for example, by choosing IDs whose hash maps to the desired variant.
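When assignment is hash-based, you can search for test user IDs that are guaranteed to land in a given variant. This sketch uses a hypothetical hash scheme; in practice you must reuse your flag platform's actual bucketing algorithm, or the IDs will not map as expected.

```python
import hashlib

VARIANTS = ["control", "treatmentA", "treatmentB"]

def assign_variant(experiment: str, user_id: str) -> str:
    # Hypothetical bucketing; replace with your flag platform's algorithm
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return VARIANTS[int.from_bytes(digest[:8], "big") % len(VARIANTS)]

def find_test_user(experiment: str, variant: str, prefix: str = "e2e-user-",
                   max_tries: int = 10_000) -> str:
    """Return the first candidate ID that hashes into the requested variant."""
    for i in range(max_tries):
        candidate = f"{prefix}{i}"
        if assign_variant(experiment, candidate) == variant:
            return candidate
    raise LookupError(f"no user found for {variant} in {max_tries} tries")

# One pinned test user per variant, computed once and reused by E2E tests
test_users = {v: find_test_user("checkout_test", v) for v in VARIANTS}
```

Because the search is deterministic, the same IDs come back on every run, so they can be created once as fixtures.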

Automating Feature Flag Testing

- Automate Tests in English: Because flag states multiply the number of scenarios, there is a lot to cover. With testRigor, you can write tests for these scenarios as plain English statements, without writing any code. This saves time and makes testing more inclusive: non-technical team members such as product owners and manual testers, who often know the product best, can automate tests directly.

- Reduced Test Maintenance: Test maintenance can become a challenge with such scenario-intensive testing, but with testRigor, that is the least of your worries. The tool adapts to UI changes and updates tests accordingly, because it does not rely on code-level details of the UI elements mentioned in your test cases. For example, if a test step says ‘click on “login”’, testRigor looks for the login button rather than the XPath or CSS selector associated with that element.

- Leverage Gen AI: With testRigor, you can use generative AI to create test cases for you and to test complex UI elements or modern website features such as AI features (chatbots, LLMs, etc.), while test maintenance is taken care of.

- Integrate with CI/CD: Promote continuous testing by integrating these test cases into your CI/CD pipelines to ensure that your feature flags are always working.

“Testing in Production” with Feature Flags

Even with great pre-release tests, some issues will inevitably surface only in production. Feature flags let you test in production with controlled exposure. The following approaches help:

Progressive Rollouts

- Starting with a small section of users, such as internal users/staff only.

- Rolling out the release to 1% of users.

- Monitoring key metrics, including errors, latency, and conversion.

- Slowly increasing the percentage if everything is healthy and functional.
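The progressive rollout steps above can be expressed as a simple health gate. In this sketch the stage percentages, the 1% error-rate threshold, and the `next_percentage` function are illustrative assumptions, not recommended values.

```python
STAGES = [0, 1, 5, 25, 50, 100]  # percent of users; 0 = internal users only
MAX_ERROR_RATE = 0.01            # roll back if more than 1% of requests fail

def next_percentage(current: int, errors: int, requests: int) -> int:
    """Advance one stage if healthy, hold without data, roll back if unhealthy."""
    if requests == 0:
        return current           # no traffic observed yet: hold
    if errors / requests > MAX_ERROR_RATE:
        return 0                 # unhealthy: back to internal users only
    higher = [s for s in STAGES if s > current]
    return higher[0] if higher else current

assert next_percentage(1, errors=2, requests=1000) == 5      # 0.2%: advance
assert next_percentage(25, errors=50, requests=1000) == 0    # 5%: roll back
assert next_percentage(100, errors=0, requests=1000) == 100  # fully rolled out
```

Keeping the gate a pure function makes the rollout policy itself easy to unit-test, separately from the metrics pipeline that feeds it.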

Observability as a Test Tool

- Logs: Include flag states in log entries.

- Metrics and Dashboards: Monitor errors and response times broken down by flag state or variant.

- Tracing: Record which flags were active for a given trace.
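If each log entry records the active flag states, error rates can be broken down per variant. This sketch assumes structured JSON log lines with hypothetical `flags` and `status` fields.

```python
import json
from collections import defaultdict

def log_line(path: str, status: int, flags: dict) -> str:
    """Emit one structured log entry that includes the evaluated flag states."""
    return json.dumps({"path": path, "status": status, "flags": flags})

def error_rate_by_variant(lines, flag: str) -> dict:
    """Group requests by one flag's value and compute the 5xx error rate."""
    totals, errors = defaultdict(int), defaultdict(int)
    for line in lines:
        entry = json.loads(line)
        variant = entry["flags"].get(flag, "unknown")
        totals[variant] += 1
        if entry["status"] >= 500:
            errors[variant] += 1
    return {v: errors[v] / totals[v] for v in totals}

logs = [
    log_line("/checkout", 200, {"checkout_layout": "control"}),
    log_line("/checkout", 200, {"checkout_layout": "control"}),
    log_line("/checkout", 500, {"checkout_layout": "one_page"}),
    log_line("/checkout", 200, {"checkout_layout": "one_page"}),
]
print(error_rate_by_variant(logs, "checkout_layout"))
# {'control': 0.0, 'one_page': 0.5}
```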

Testing Feature Flags in CI/CD Pipelines

To truly benefit from feature flags, it is essential to integrate testing into your delivery workflow. Testing feature flags within CI/CD pipelines ensures the stability and functionality of your software, regardless of which features are enabled or disabled. Approach this as follows:

Recommended Pipeline Flow

- Pre-merge (PR) stage

  - Execute unit tests for both flag states (ON/OFF).

  - Run fast integration tests with baseline configs.

- Post-merge / main branch

  - Run broader integration tests with selected flag combinations to cover the main functionality.

  - Run E2E tests for critical flows (with flags on/off).

- Before rollout/release

  - Perform smoke tests in a staging environment with production-like flags.

  - Run sanity checks on metrics and logs (no error spikes with the feature enabled).
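To run the same suite under different flag states in separate pipeline jobs, the test setup can read overrides from the environment. The `FLAG_OVERRIDES` variable name and its JSON format are assumptions for this sketch, not a standard.

```python
import json
import os

def load_flag_overrides(env=None) -> dict:
    """Read flag states for this CI job from FLAG_OVERRIDES (a JSON object)."""
    env = os.environ if env is None else env
    raw = env.get("FLAG_OVERRIDES", "{}")
    overrides = json.loads(raw)
    if not isinstance(overrides, dict) or not all(
            isinstance(v, bool) for v in overrides.values()):
        # Fail loudly: a malformed value would silently test the wrong state
        raise ValueError(f"FLAG_OVERRIDES must map flag names to booleans: {raw}")
    return overrides

# e.g. one job sets FLAG_OVERRIDES='{"new_checkout": false}', another sets true
assert load_flag_overrides({}) == {}
assert load_flag_overrides({"FLAG_OVERRIDES": '{"new_checkout": true}'}) == {"new_checkout": True}
```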

Configuration as Code

Storing flag configurations as code has several benefits:

- Versioned configuration is easier to review and reproduce.

- It supports repeatable test runs.

- It reduces “it worked last week, why is staging different now?” moments.
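Keeping flag definitions in a versioned file also lets the pipeline enforce lifecycle rules. This sketch assumes a hypothetical JSON format in which every flag declares a default, an owner, and a removal date, and it reports flags that are overdue for cleanup so the build can fail on them.

```python
import json
from datetime import date

# Hypothetical flag definitions, as they might be stored in the repository
FLAGS_JSON = """
{
  "new_checkout": {"default": false, "owner": "payments", "remove_by": "2030-01-31"},
  "old_banner":   {"default": true,  "owner": "web",      "remove_by": "2020-06-30"}
}
"""

REQUIRED_KEYS = {"default", "owner", "remove_by"}

def lint_flags(config: dict, today: date) -> list:
    """Return human-readable problems; an empty list means the config passes."""
    problems = []
    for name, spec in config.items():
        missing = REQUIRED_KEYS - set(spec)
        if missing:
            problems.append(f"{name}: missing {sorted(missing)}")
            continue
        if date.fromisoformat(spec["remove_by"]) < today:
            problems.append(f"{name}: past its remove_by date {spec['remove_by']}")
    return problems

problems = lint_flags(json.loads(FLAGS_JSON), today=date(2025, 1, 1))
print(problems)  # ['old_banner: past its remove_by date 2020-06-30']
```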

Common Testing Pitfalls with Feature Flags

- Forgetting the “Flag Off” Path: Teams mainly focus on the new behavior and forget the old path, which may then go untested and become stale. Keeping regression tests for the off state for as long as the flag exists mitigates this.

- Long-Lived Flags: Flags that live on and never get removed increase complexity, cause test-case sprawl, and confuse new team members. To avoid this, treat flags like code with a lifecycle, add tickets to remove flags once a feature is fully rolled out, and clean up tests for outdated paths.

- Hidden Test Dependencies on Flag State: Test environments may share flag configurations with other teams, making test runs flaky: tests pass or fail depending on who last changed a flag.

  To fix this, make test flag configurations explicit and controlled by the test suite, and reset flag state before and after each test run.
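One way to make flag state explicit and always reset it is a context manager that sets test values and restores the previous ones even if the test fails. The in-memory `FlagStore` is hypothetical; it stands in for whatever test configuration or management API your platform provides.

```python
from contextlib import contextmanager

class FlagStore:
    """Hypothetical in-memory flag store used by the test environment."""
    def __init__(self, values=None):
        self.values = dict(values or {})

    def get(self, name, default=False):
        return self.values.get(name, default)

_MISSING = object()  # marks flags that did not exist before the override

@contextmanager
def flag_overrides(store: FlagStore, **overrides):
    """Temporarily set flags, then restore the exact previous state."""
    previous = {name: store.values.get(name, _MISSING) for name in overrides}
    store.values.update(overrides)
    try:
        yield store
    finally:
        for name, old in previous.items():
            if old is _MISSING:
                store.values.pop(name, None)  # flag did not exist before
            else:
                store.values[name] = old

store = FlagStore({"new_checkout": False})
with flag_overrides(store, new_checkout=True, new_pricing=True):
    assert store.get("new_checkout") is True
assert store.values == {"new_checkout": False}  # restored; new_pricing removed
```

The `finally` block is what prevents one failing test from leaking its flag state into the next.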

Feature Flag Best Practices

As discussed in this article, feature flags offer significant advantages for software development when implemented effectively. By adhering to certain best practices, teams can maximize the benefits of feature flags, enable faster iterations and safer deployments, and maintain control over feature releases.

- Plan and Define Scope: Before you begin incorporating feature flags in your project, clearly define the purpose and lifecycle of each feature flag. You should understand which parts of the codebase will be affected and how the flag will interact with existing features. Try to avoid adding overly broad or complex flags.

- Consistent Naming Conventions: Establish clear and consistent naming conventions for your feature flags to improve readability, maintainability, and make it easier for team members to understand the flag’s purpose and status.

- Manage Flag Lifecycles: A feature flag lifecycle has the following steps:

  - Introduce: Implement flags in your project and ensure they are initially off or target only a small internal group.

  - Roll Out Gradually: Start with a small group of users and expand using progressive rollout strategies (e.g., internal testers, beta users, then full production) to minimize risk and gather feedback.

  - Clean Up: Remove obsolete flags to reduce technical debt and keep the codebase clean. Automate the process where possible.

- Testing in Production: Use feature flags for safe testing in production environments. Specific user segments or internal users can be used to validate functionality and performance without affecting the entire user base.

- Robust Monitoring and Logging: Follow the steps below for effective monitoring and logging:

  - Monitor Performance: Track how feature flags impact application performance.

  - Log Changes: Record all feature flag changes, including the user who made the changes and the date and time. This is useful for debugging and auditing.

  - Integrate with Analytics: Utilize analytics and support systems to leverage flag usage data and gain insights into feature adoption and user experience.

- Access Control and Security: Implement strict access controls to manage the creation, update, or deletion of feature flags. Regularly review and audit access logs.

- Avoid Flag Dependencies: Minimize or eliminate dependencies between feature flags to prevent unexpected behavior and simplify management. Document and monitor dependencies as necessary.

- Automate Flag Management: Integrate flag cleanup and other lifecycle management activities into your CI/CD pipeline to reduce manual effort and ensure consistency across your organization.

- Consider Abstractions for Complex Features: In larger projects, encapsulate flag logic in objects or services instead of scattering if/else conditions throughout the codebase.
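As a sketch of that idea, the flag check can live in a single factory that returns a strategy object, so the rest of the code never branches on the flag. The `PricingStrategy` protocol, both implementations, and the `new_pricing` flag are hypothetical.

```python
from typing import Protocol

class PricingStrategy(Protocol):
    def price(self, subtotal: float) -> float: ...

class LegacyPricing:
    def price(self, subtotal: float) -> float:
        return subtotal

class NewPricing:
    def price(self, subtotal: float) -> float:
        return round(subtotal * 0.9, 2)  # illustrative 10% discount

def pricing_for(flags: dict) -> PricingStrategy:
    """The only place that reads the 'new_pricing' flag."""
    return NewPricing() if flags.get("new_pricing") else LegacyPricing()

# Callers depend on the strategy, not on the flag
def checkout_total(subtotal: float, pricing: PricingStrategy) -> float:
    return pricing.price(subtotal)
```

Each strategy can be unit-tested on its own, and removing the flag later means deleting `LegacyPricing` and one line in `pricing_for`.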

Conclusion

Feature flags are used to manage features in a controlled environment. They introduce multiple possible behaviors, so testing has to be intentional. All relevant permutations and combinations should be considered during feature flag testing. Prioritize testing tasks by risk, and use a mix of unit, integration, E2E, and production monitoring to cover functional, data, performance, targeting, and resilience aspects.

Both sides of the flags and all their variants should be tested. Additionally, flags should be kept as short-lived as possible and cleaned up once they are obsolete.

In a nutshell, flag states and configurations should be treated as part of your testable system and not as an afterthought.
