How to Test in a Microservices Architecture?


The software landscape has dramatically changed over the past decade, and so has modern application architecture. Conventional monolithic designs (which dominated due to their simple architecture and centralized control) have been largely replaced by microservices, a decentralized philosophy of breaking code into smaller independent components that are designed to be modular, scalable, and resilient. Companies such as Netflix, Amazon, and Uber have adopted microservices to speed up their development cycles, increase fault tolerance, and allow for self-contained deployment.

However, this architectural freedom comes at a price: increased complexity in testing. In a microservices architecture, tens or hundreds of small, decoupled services communicate with each other through APIs, message queues, and event buses. This leads to challenges around integration, data integrity, versioning, and observability. Testing is now more about ensuring the reliability of a distributed ecosystem than simply validating functionality.

Whether you’re a QA engineer, developer, or test architect, understanding how to effectively test microservices is essential to delivering robust, scalable software.


Understanding Microservices Architecture

Before discussing testing in more detail, we need a clear understanding of the model we are going to test.

A microservices architecture is an architectural style that focuses on small, loosely coupled yet independently deployable services. Each service:

- Implements a specific business capability (e.g., Orders, Payments, Inventory).

- Maintains its own codebase and often its own database.

- Interacts with other services using APIs or messages (e.g., HTTP/REST, gRPC, events, queues).

Instead of a monolithic deployment, you have many small deployables, each developed and owned by (usually) a small team.

Key Characteristics of Microservices

Most microservices-driven systems have these in common:

- Independent Deployability: Every service can be deployed independently; there is no need to redeploy the whole system. This is great for rapid iteration and team independence, but challenging for compatibility and testing.

- Loose Coupling, Tight Boundaries: Services speak only through well-defined contracts (APIs, schemas, message bodies). Internals can change, but contracts have to be stable.

- Polyglotism in Technology: Each team can select the technology stack it wants to use for a service, including language, framework, and data store requirements. That’s empowering, but the testing and tooling also have to play nicely across multiple stacks.

- Distributed Infrastructure: Services are commonly operated in containers, scheduled by platforms such as Kubernetes, and run on clusters, regions or clouds. Networking, discovery, load balancing, and resiliency are all part of the architecture.

Read: Micro-frontends Automated Testing: Is It Possible?

Why Microservices Exist?

Microservices exist because they let smaller teams deliver faster and independently, and because you can scale only the part of the system that carries a heavy load. They offer better fault isolation and are easier to align with business domains using well-defined bounded contexts. However, those advantages push complexity into deployment pipelines, infrastructure, communication, versioning, and observability. This complexity has a concrete impact on testing, as each service boundary becomes a network boundary with its own failure modes and latency.

When testing a service, we need to be clear about where its boundary lies and what counts as an external dependency. We also need to decide which levels of testing to apply (service-level, contract, integration, or end-to-end tests) and accept that some problems will surface only in production-like environments.

What Makes Microservices Testing Different: Challenges

Before going into the “how,” it is important to know why microservices need a different testing strategy from monolithic applications. Some of the core challenges:

- Service Boundaries Over the Network: Services communicate using network calls (HTTP / APIs / messaging) and not via plain in-process method calls. This adds latency, serialization or deserialization, partial failure, timeouts, network partitions, and so on.

- Independent Deployability and Versioning: Each microservice is developed and released on its own schedule. API contracts, data schemas, and communication protocols can therefore change at any time, and an unmanaged change can break consumers.

- Distributed Data Ownership: With microservices, each service commonly owns its database instead of sharing a single monolithic one. This isolation complicates data consistency, transactions that span services, and test scenarios that need data from several services.

- Complex Interactions & Dependencies: A single user request can trigger a chain of calls across multiple services, some of them asynchronous and routed through queues, retries, or event-driven messaging. Exhaustively testing every possible path is combinatorially expensive.

- Infrastructure Dependencies: Services may rely on external resources such as databases, message brokers, caches, third-party services, environment variables, and configurations. Exercising these realistically often requires orchestrating a full test environment.

- Scaling and Performance Concerns: Inter-service communication overhead, data store contention, network latency, and cascading failures or timeouts can all cause problems under load that are not visible in single-service tests.

- Resilience and Fault Tolerance: In distributed systems, things fail: a service goes down, the network hiccups, calls time out and are retried. Tests must verify that resilience mechanisms (fallbacks, circuit breakers, retries) work properly under failure.

As a result, naive testing strategies (for example, relying only on unit tests per service) frequently miss many bugs. However, attempting to “end-to-end test everything under production-like conditions” becomes infeasible, fragile, and slow. The art is in the balance between coverage, speed, maintainability, and realism.
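
To make the resilience challenge above concrete, here is a minimal sketch of testing a circuit breaker without a real network. The `CircuitBreaker` class, its parameters, and the `flaky_inventory` dependency are all hypothetical names invented for illustration; a real project would more likely test the breaker provided by its resilience library.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the dependency."""


class CircuitBreaker:
    """Illustrative breaker: opens after `max_failures` consecutive failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so tests never have to sleep
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency presumed down; failing fast")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def test_breaker_opens_then_recovers():
    now = [0.0]  # fake clock controlled by the test
    breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])
    calls = []

    def flaky_inventory():  # hypothetical downstream dependency
        calls.append(1)
        raise TimeoutError("inventory service timed out")

    for _ in range(2):
        try:
            breaker.call(flaky_inventory)
        except TimeoutError:
            pass

    # The third call fails fast: the dependency is not contacted again.
    try:
        breaker.call(flaky_inventory)
        raise AssertionError("expected CircuitOpenError")
    except CircuitOpenError:
        pass
    assert len(calls) == 2

    # After the reset window, a healthy dependency closes the breaker.
    now[0] = 11.0
    assert breaker.call(lambda: "in stock") == "in stock"
    assert breaker.failures == 0
```

Injecting the clock is the key design choice here: the test controls time directly, so it stays fast and deterministic instead of sleeping through the reset window.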

Read: API Testing Checklist.

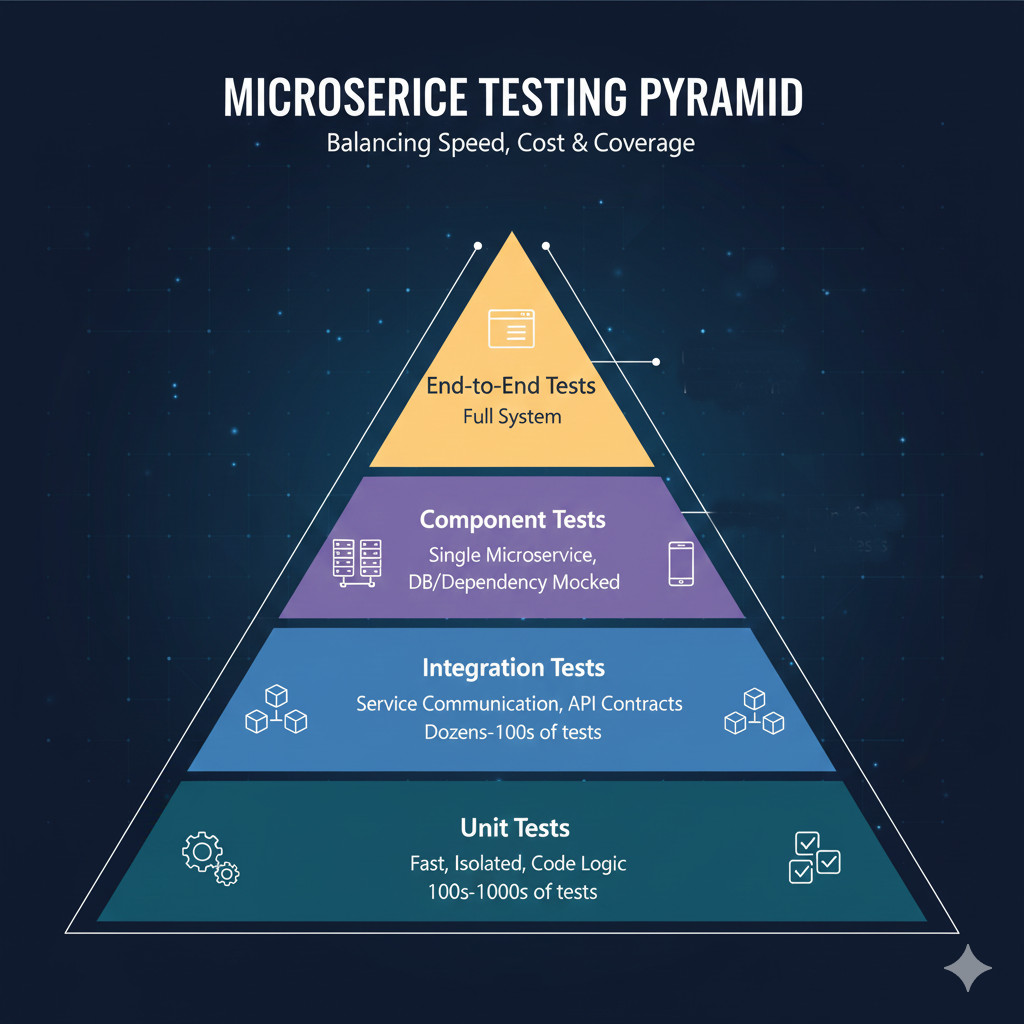

The Testing Pyramid (and Why It Still Matters)

A traditional mental model for test organization is the Testing Pyramid: lots of inexpensive, fast unit tests at the bottom, fewer integration tests in the middle, and only a handful of expensive (slow) end-to-end or UI tests at the top. With microservices, this is still useful, but the traditional boundaries between test types become less clear.

Questions arise, such as what constitutes a “unit” when the smallest meaningful entity might be a whole service, and what “integration” means when services communicate over APIs or message queues. The basic idea still holds: most testing should remain fast, deterministic, and low-cost at the service level, with only a limited set of tests covering the entire system end-to-end.

- Advantage: Early feedback, fast developer feedback loops, ability to catch regressions quickly.

- Risk: Missing cross-service interactions, real-world failures, or environment-related issues.

The trick is to maintain the philosophy while changing the definitions from “method-level unit tests” to “service-level (unit or shallow integration) tests,” and from UI-level E2E to “system-level or workflow-level E2E.”

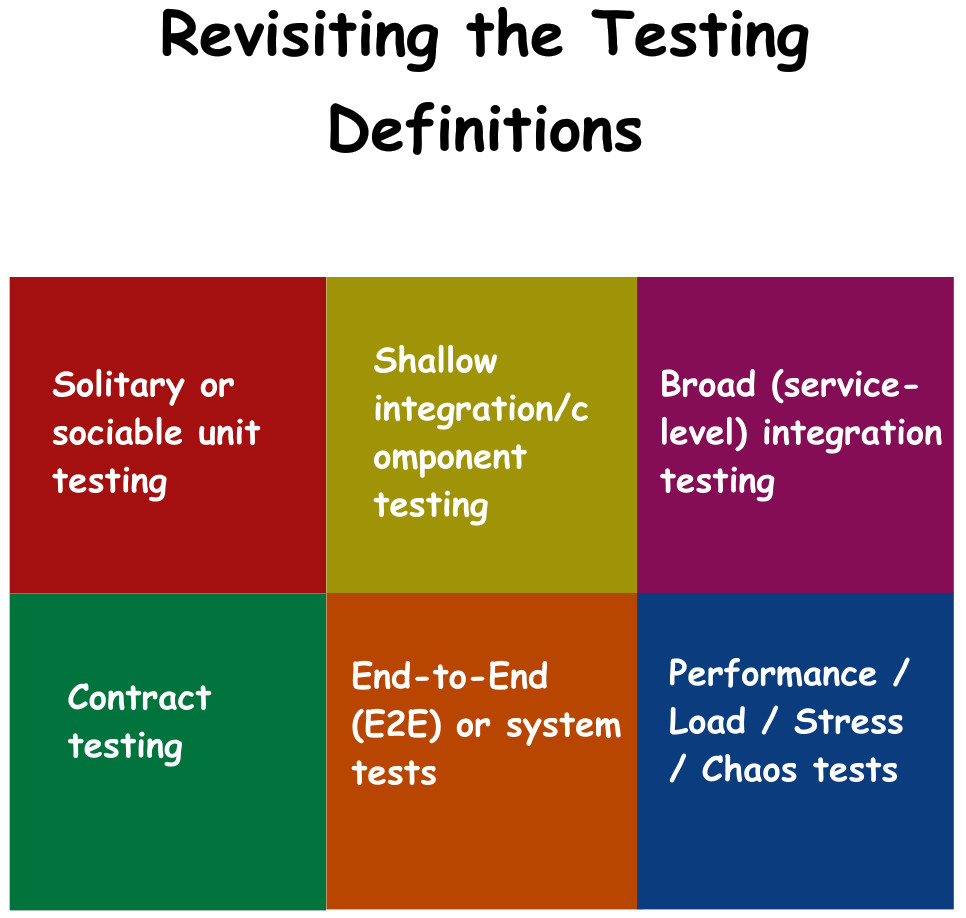

Revisiting the Testing Definitions

Since microservices are distributed and decoupled, traditional test definitions often blur. For instance, there may be little value in testing every getter and setter, and it is unclear whether one class calling another counts as an integration test once interactions exist both within a service and between services. Likewise, an API endpoint tested with a mock database is a unit test to some and a shallow or narrow integration test to others.

To bring more clarity, practitioners often adopt more granular classifications, for example:

- Solitary or Sociable Unit Testing: These tests focus on logic inside a single service. Solitary tests replace collaborators with mocks to keep the scope small and deterministic, while sociable tests let the unit run with its real in-process collaborators.

- Shallow Integration/Component Testing: These tests exercise multiple layers of a service, like the controller and the service layer, using an in-memory or stubbed database to avoid external dependencies.

- Broad (Service-level) Integration Testing: These tests exercise the interaction between two or more services, potentially using real API calls or message production in a controlled, staging-like environment.

- Contract Testing: These tests verify that services adhere to established API or message contracts, validating request/response shapes, message schemas, and version compatibility.

- End-to-End (E2E) or System Tests: These tests drive a complete workflow through multiple services and the UI, simulating real users in a production-like environment.

- Performance / Load / Stress / Chaos Tests: These tests check how services behave under high traffic, injected failures, and added latency. They confirm that the system scales to its expected load and degrades gracefully rather than collapsing.

Understanding and naming these clearly helps avoid confusion, avoid over-testing, and build a balanced test architecture.
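
The difference between the first two categories is easiest to see side by side. The following is a small sketch using Python's standard `unittest.mock`; the `OrderPricer` and `TaxCalculator` classes are hypothetical examples, not part of any real service.

```python
from unittest.mock import Mock


class TaxCalculator:
    """Real in-process collaborator with no external dependencies."""

    def tax_for(self, amount_cents, rate_percent):
        return amount_cents * rate_percent // 100


class OrderPricer:
    """Unit under test: adds tax to an order amount."""

    def __init__(self, tax_calculator):
        self.tax_calculator = tax_calculator

    def total(self, amount_cents, rate_percent):
        return amount_cents + self.tax_calculator.tax_for(amount_cents, rate_percent)


def test_total_solitary():
    # Solitary: the collaborator is a mock, so only OrderPricer's logic runs.
    calculator = Mock()
    calculator.tax_for.return_value = 200
    assert OrderPricer(calculator).total(1000, 20) == 1200
    calculator.tax_for.assert_called_once_with(1000, 20)


def test_total_sociable():
    # Sociable: the real collaborator runs too, still entirely in-process.
    assert OrderPricer(TaxCalculator()).total(1000, 20) == 1200
```

Both tests stay inside one service and need no network or database. The solitary version pins down the interaction with the collaborator, while the sociable version also catches mistakes in the collaborator itself.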

A Practical Microservices Testing Strategy

An effective testing strategy for microservices is layered, consisting of tests that serve different and complementary purposes. By layering them well, teams can get strong coverage without depending too heavily on slow and brittle system tests. This approach matches what many experienced engineering teams use in real microservice deployments.

Service-level Tests (Unit / Shallow-integration / Component)

This first testing layer is meant to give quick, deterministic feedback that catches regressions early and validates the core logic within a single service. It focuses on business rules and avoids real databases, networks, file systems, or any other external dependency. By keeping tests small and isolated, a team can identify an issue before it spirals out of control.

To achieve this, teams employ unit tests with mocks or shallow integration tests against lightweight in-memory dependencies. These run continuously in development and CI, providing extremely rapid feedback loops. Though they do not address cross-service interactions or environment-level concerns, they provide a baseline that prevents simple defects from escaping to slower, more challenging test layers.
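
As an illustration of a shallow integration (component) test, the sketch below runs a controller-style function and a service layer together against an in-memory repository. Every name here (`InMemoryOrderRepository`, `OrderService`, `handle_create_order`, the payload shape) is an assumption made for the example.

```python
class InMemoryOrderRepository:
    """Stand-in for the service's real database layer."""

    def __init__(self):
        self._orders = {}

    def save(self, order_id, order):
        self._orders[order_id] = order

    def get(self, order_id):
        return self._orders.get(order_id)


class OrderService:
    """Business logic layer of a hypothetical Orders service."""

    def __init__(self, repository):
        self.repository = repository

    def place_order(self, order_id, items):
        if not items:
            raise ValueError("an order needs at least one item")
        total = sum(item["price_cents"] * item["quantity"] for item in items)
        order = {"id": order_id, "items": items, "total_cents": total, "status": "PLACED"}
        self.repository.save(order_id, order)
        return order


def handle_create_order(service, payload):
    """Controller-style function mapping a request payload to (status, body)."""
    try:
        order = service.place_order(payload["id"], payload.get("items", []))
    except ValueError as exc:
        return 400, {"error": str(exc)}
    return 201, order


def test_create_order_persists_and_totals():
    repo = InMemoryOrderRepository()
    payload = {"id": "o-1", "items": [{"price_cents": 250, "quantity": 2}]}
    status, body = handle_create_order(OrderService(repo), payload)
    assert status == 201
    assert body["total_cents"] == 500
    assert repo.get("o-1")["status"] == "PLACED"


def test_create_order_rejects_empty_order():
    repo = InMemoryOrderRepository()
    status, body = handle_create_order(OrderService(repo), {"id": "o-2", "items": []})
    assert status == 400
    assert repo.get("o-2") is None  # nothing was persisted
```

Because the repository is a plain dictionary, these tests run in milliseconds while still covering the wiring between the controller, the service layer, and persistence.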

Tools like testRigor can help at this layer by enabling teams to create API-level tests using plain English, eliminating the need for complex frameworks. This is particularly valuable when multiple services expose REST or gRPC APIs, because teams can quickly validate business logic at the service boundary while keeping tests readable and easy to maintain across evolving microservices.

Contract Testing

Contract testing verifies that one service can talk to another over an API or messaging connection using agreed request and response structures, so that breaking changes do not slip in by accident as services evolve independently. These tests concentrate on payload structure, version compatibility, and the message schemas used in asynchronous communication. By enforcing these rules, teams preserve reliability between independently deployed services.

Synchronous APIs typically use consumer-driven contract tests, while messaging systems rely on schema validation to keep publishers and consumers aligned. Contract tests run in CI whenever an interface changes, giving early warning before a breaking change reaches production. Their limitation is that they assert only the shape of an integration, not its full semantic behavior or the outcome of a workflow.
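
The core idea of a consumer-driven contract can be sketched in a few lines. This is a hand-rolled illustration, not the API of any contract-testing framework; `ORDER_CONTRACT`, `contract_violations`, and the sample payloads are all hypothetical.

```python
# Hypothetical contract: the fields (and types) one consumer reads
# from an Orders API response.
ORDER_CONTRACT = {
    "id": str,
    "status": str,
    "total_cents": int,
}


def contract_violations(contract, response_body):
    """Return human-readable violations; an empty list means compatible."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response_body:
            violations.append(f"missing field: {field}")
        elif not isinstance(response_body[field], expected_type):
            actual = type(response_body[field]).__name__
            violations.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return violations


def test_provider_honours_contract():
    # Extra fields are fine: the consumer ignores what it does not read.
    provider_response = {"id": "o-1", "status": "PLACED", "total_cents": 500, "currency": "USD"}
    assert contract_violations(ORDER_CONTRACT, provider_response) == []


def test_renamed_field_is_detected():
    # The provider renamed total_cents to total, which breaks this consumer.
    provider_response = {"id": "o-1", "status": "PLACED", "total": "5.00"}
    assert contract_violations(ORDER_CONTRACT, provider_response) == ["missing field: total_cents"]
```

Note what the check does not cover: it would accept a response whose `status` is a nonsensical string, which is the shape-versus-semantics limitation described above.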

While contract-testing frameworks (like Pact) are the industry standard, some teams also extend contract validation using testRigor’s API testing steps. This helps ensure that API changes do not break consumer expectations while keeping tests accessible to non-developers.

Read: API Contract Testing: A Step-by-Step Guide to Automation.

Integration Testing (Broad / Cross-Service)

Broad integration tests make sure that multiple services work well together, covering data exchange, API calls, messages, and joint workflows. They also validate data integrity across services and system failure modes such as timeouts, retries, and partial failures. By exercising real communication, they find problems that individual service tests may miss.

These tests are usually carried out in staging-like environments or in dynamically provisioned test environments that run many services with few mocks. They run in CI before changes that affect multiple services are merged, and often in nightly builds or pre-release pipelines. While very good at catching integration errors, they are also slower, more brittle, and harder to write than service-level tests.
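
A scaled-down version of this idea fits in a single file: the sketch below starts a real HTTP server on a local port, makes it fail a configurable number of times, and checks that a retrying client copes. It uses only the Python standard library. The endpoint path, the payload, and the helper names (`make_flaky_handler`, `fetch_with_retries`, `run_against_flaky_service`) are assumptions for illustration; a real suite would point at actual services in a test environment.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Ignore any proxy settings so requests always reach the local test server.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def make_flaky_handler(failures_before_success):
    """Build a handler that answers 503 a fixed number of times, then 200."""
    state = {"remaining": failures_before_success, "hits": 0}

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            state["hits"] += 1
            if state["remaining"] > 0:
                state["remaining"] -= 1
                self.send_response(503)
                self.send_header("Content-Length", "0")
                self.end_headers()
                return
            body = json.dumps({"sku": "abc", "in_stock": True}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep test output quiet

    return Handler, state


def fetch_with_retries(url, attempts=3, timeout=2.0):
    """Client under test: retries 5xx responses, re-raises everything else."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            with _OPENER.open(url, timeout=timeout) as response:
                return json.loads(response.read())
        except urllib.error.HTTPError as exc:
            if exc.code < 500:
                raise
            last_error = exc
    raise last_error


def run_against_flaky_service(failures_before_success, attempts):
    """Start the stub service, call it, and return (body, request_count)."""
    handler, state = make_flaky_handler(failures_before_success)
    server = HTTPServer(("127.0.0.1", 0), handler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/stock/abc"
        return fetch_with_retries(url, attempts=attempts), state["hits"]
    finally:
        server.shutdown()
        server.server_close()
```

For example, `run_against_flaky_service(2, 3)` should return the stock payload after exactly three requests, while `run_against_flaky_service(5, 2)` should raise an `HTTPError` with code 503. Unlike a mocked call, this exercises real sockets, serialization, and status handling.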

For multi-service integration flows, tools like testRigor provide a significant advantage because they allow you to orchestrate cross-service workflows entirely in English. This means a single test can call one service’s API, validate the database state of another, trigger an event, and verify the downstream consumer’s behavior, all without writing code or maintaining brittle test harnesses.

End-to-End (E2E) / System Testing

End-to-end testing is the process of validating entire user workflows by testing the whole system, from beginning to end, covering all systems and interlocking dependencies. It ensures that user flows work as expected in the real world, and that data remains valid across services along the entire processing chain. Such testing also surfaces problems with authentication, configuration, service discovery, and real-world latency or failure events.

Such tests involve running the entire stack against a production-like environment, then driving actions as real users would do via UI or APIs using automated tools. They are generally run as pre-release checks, within nightly runs or as scheduled synthetic probes to verify system-wide integrity.

Read: End-to-end Testing.

A number of teams rely on tools like testRigor for end-to-end tests in microservices environments. Since the tool allows test creation using plain English, it becomes much easier to validate entire user workflows that span multiple services, UIs, APIs, queues, and asynchronous interactions. testRigor’s autonomous element detection significantly reduces maintenance in highly dynamic microservice-backed UIs, where selectors and APIs frequently change. This makes E2E testing more resilient and scalable across fast-moving distributed architectures.

Production Monitoring, Observability & Regression Detection

Production monitoring and observability aim to confirm that a deployed system stays healthy, remains resilient in the face of failure, and is free from regressions under real user load. This includes monitoring logs, error rates, latency, throughput, resource usage, and other metrics that may expose anomalies or performance degradation. You may also use synthetic tests or scheduled health-check workflows to validate that critical user flows work in production.

Read: Iteration Regression Testing vs. Full Regression Testing.

Dashboards, alerts, and regression detection mechanisms help teams catch issues early by comparing performance and reliability across deployments. This layer is essential because even the strongest pre-release test suite cannot predict every real-world failure scenario, especially in distributed microservice environments. However, maintaining effective observability comes with tradeoffs, such as infrastructure overhead, noisy alerts, and ensuring that monitoring captures the right information without harming performance or privacy.
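
One simple form of regression detection compares a latency percentile between the previous deployment and the new one. The sketch below is a minimal illustration under stated assumptions: the nearest-rank percentile method, the 95th percentile, and the 10% tolerance are arbitrary example choices, and the function names are hypothetical.

```python
def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list; `pct` is an integer 1..100."""
    ordered = sorted(samples)
    # Integer ceiling of pct/100 * n, avoiding floating-point rounding issues.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def latency_regressed(baseline_ms, candidate_ms, pct=95, tolerance=0.10):
    """True when the candidate percentile exceeds the baseline by more than `tolerance`."""
    base = percentile(baseline_ms, pct)
    cand = percentile(candidate_ms, pct)
    return cand > base * (1 + tolerance)


# Example with made-up latency samples (milliseconds).
baseline = list(range(100, 200))                # previous deployment
slightly_slower = [ms + 5 for ms in baseline]   # within tolerance
much_slower = [ms * 1.5 for ms in baseline]     # clear regression

assert latency_regressed(baseline, slightly_slower) is False
assert latency_regressed(baseline, much_slower) is True
```

A tolerance band like this is one way to reduce the noisy alerts mentioned above, at the cost of missing small but real slowdowns.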

Microservices: Strategy and Test Automation

An effective test strategy for microservices covers not just what to test but also how to manage tests, environments, and automation. Some key architectural considerations:

- CI/CD Integration: The tests (service-level, contract, integration) should be executed automatically on each commit or pull request. This is a way to get early feedback and avoid regressions. Read: What Is CI/CD?

- Isolated Test Environments: Use ephemeral (on-demand) environments for integration and E2E tests. This keeps test traffic away from production and avoids carrying “dirty state” from one test run to the next. Some companies do this through Kubernetes with dynamic environment provisioning.

- Test Data Management: Use dedicated test databases, synthetic datasets, or seed data, and avoid production data. For stateful tests, start from a known state (such as a clean database) or run tear-down scripts afterwards.

- Test Doubles / Mocks / Stubs vs Real Dependencies: Use mocks or stubs for service-level tests. In integration tests, prefer real dependencies or minimal realistic substitutes. For third-party services, consider contract testing or sandbox/staging endpoints. Read: Mocks, Spies, and Stubs: How to Use?

- Test Parallelization & Isolation: Parallelize tests whenever possible, which matters most for slow integration and E2E suites. Isolate state so that one test cannot interfere with another’s results, and clean up any residual state between runs. Read: Parallel Testing: A Quick Guide to Speed in Testing.

- Versioning & Backwards Compatibility: When you evolve your APIs, maintaining contract tests and making backward-compatible changes becomes important. Deprecate old contracts responsibly once the consumers have migrated.

- Observability & Logging in Tests: Tests should emit logs, metrics, and traces, especially integration/E2E. This helps diagnose failures, identify performance regressions, and follow interactions across services.
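
The test data and isolation points in the list above can be combined in one small pattern: every test gets a private database with a known seed, and the database is discarded afterwards. This sketch uses an in-memory SQLite database from the Python standard library; the `customers` table and the seed rows are invented for the example.

```python
import contextlib
import sqlite3

SEED_CUSTOMERS = [("c-1", "Ada"), ("c-2", "Grace")]  # hypothetical seed data


@contextlib.contextmanager
def fresh_database():
    """Yield a private, seeded database and discard it afterwards."""
    connection = sqlite3.connect(":memory:")
    try:
        connection.execute(
            "CREATE TABLE customers (id TEXT PRIMARY KEY, name TEXT NOT NULL)"
        )
        connection.executemany("INSERT INTO customers VALUES (?, ?)", SEED_CUSTOMERS)
        connection.commit()
        yield connection
    finally:
        connection.close()  # tear-down: nothing leaks into the next test


def count_customers(connection):
    return connection.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


def test_writes_do_not_leak_between_tests():
    with fresh_database() as db:
        db.execute("INSERT INTO customers VALUES (?, ?)", ("c-3", "Linus"))
        assert count_customers(db) == 3
    # A second test starts again from the known seed state.
    with fresh_database() as db:
        assert count_customers(db) == 2
```

Because no state is shared, tests written this way can also run in parallel without interfering with each other.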

Tools like testRigor integrate seamlessly into CI/CD pipelines, making it possible to run UI, API, and workflow tests automatically on every deployment. Because testRigor does not require code-based test frameworks, teams can quickly adapt tests as services evolve and deploy frequently in a microservice environment.

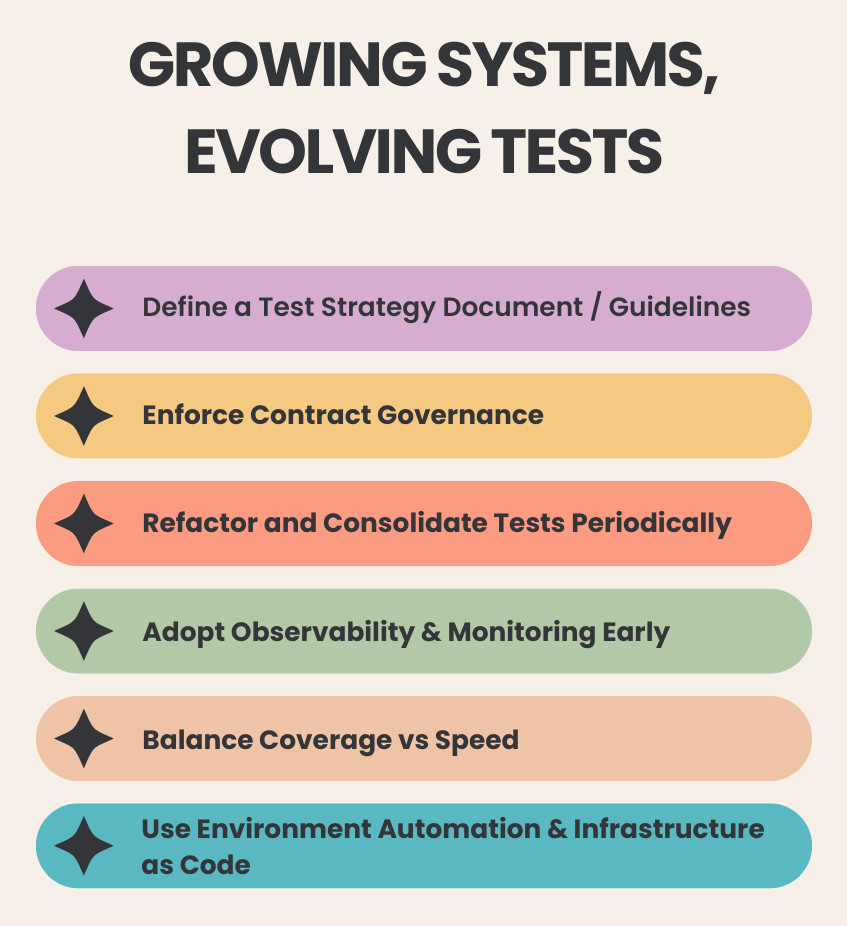

Growing Systems, Evolving Tests

Frequently, microservice architectures are the result of a long-term evolution: services are gradually added, teams expand, APIs change, and features pile up, while legacy services endure. Quality can only be preserved if the test strategy evolves along with the system.

- Define a Test Strategy Document / Guidelines: Keep a living document with guidelines on which types of tests each service should have (unit, contract, API integration, E2E), when to run them, who owns them, and what coverage is expected. It serves as a reference and is useful for onboarding new team members. Read: Test Strategy Template.

- Enforce Contract Governance: As services are updated, API or message contracts might be modified. Utilize versioning (v1, v2, backward-compatible changes), deprecation paths, contract test automation, and cross-team communications to limit breaking integrations.

- Refactor and Consolidate Tests Periodically: Tests need refinement as the codebase evolves. Some can be removed or replaced with better approaches, and slow tests should be refactored. Treat test maintenance as a form of technical debt and plan for it.

- Adopt Observability & Monitoring Early: As your services multiply, understanding behavior across them becomes challenging. Invest early in distributed tracing, structured logging, monitoring, and dashboards. Make test suites (especially integration / E2E) produce metrics/traces to supplement monitoring.

- Balance Coverage vs. Speed: In a small system, most tests can live at the service layer and run fast. As the application grows, more integration or E2E tests become necessary, but too many slow tests will ultimately slow development. Continuously tune which tests are essential.

- Use Environment Automation & IaC (Infrastructure as Code): When you run many services, maintain reproducible environments using tools like Docker, Kubernetes manifests, Terraform, or Helm, so that test environments can be spun up and torn down predictably. Read: What is Infrastructure as Code (IaC)?
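
Contract governance from the list above can be partly automated by comparing two versions of a response schema and flagging changes that would break existing consumers. The sketch below assumes a deliberately simple, hypothetical schema format (`{field: {"type": ..., "required": ...}}`); real projects would typically compare OpenAPI, Protobuf, or Avro definitions with dedicated tooling.

```python
def breaking_changes(old_schema, new_schema):
    """List changes to a response schema that can break existing consumers."""
    problems = []
    for field, old in old_schema.items():
        new = new_schema.get(field)
        if new is None:
            problems.append(f"removed field: {field}")
        elif new["type"] != old["type"]:
            problems.append(f"type changed: {field} ({old['type']} -> {new['type']})")
        elif old["required"] and not new["required"]:
            problems.append(f"no longer guaranteed: {field}")
    return problems  # fields that only exist in new_schema are additive and safe


ORDER_V1 = {
    "id": {"type": "string", "required": True},
    "total_cents": {"type": "integer", "required": True},
}

# Backward-compatible evolution: only adds an optional field.
ORDER_V2_COMPATIBLE = dict(ORDER_V1, currency={"type": "string", "required": False})

# Breaking evolution: changes a type and drops a field.
ORDER_V2_BREAKING = {"id": {"type": "integer", "required": True}}

assert breaking_changes(ORDER_V1, ORDER_V2_COMPATIBLE) == []
assert breaking_changes(ORDER_V1, ORDER_V2_BREAKING) == [
    "type changed: id (string -> integer)",
    "removed field: total_cents",
]
```

Run as a CI gate, a check like this can force a new API version (v2) or a deprecation path whenever the list of problems is not empty.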

As microservices grow, E2E tests often become brittle because service boundaries and UI elements change. Tools like testRigor help reduce this maintenance burden through their autonomous element detection and natural language test authoring, allowing large distributed teams to maintain stable tests even as services evolve independently.

Read: How to Do API Testing in Microservices Architecture: A Beginner’s Guide.

Conclusion

Microservices provide scale, speed, and independence, but at the same time they bring significant test complexity that results from distributed communication, independent releases, and changing service boundaries. A reliable testing strategy is a balanced one: a mix of rapid service-level tests, rigorous contract validation, focused integration checks, and lightweight but high-value end-to-end workflows, all supported by strong observability. This is further reinforced by tools like testRigor that provide stable, codeless API and UI tests described in plain English, making it easier to maintain high-confidence test coverage while microservices grow and change quickly.
