Production Testing: What’s the Best Approach?


When it comes to software testing, more is usually better. That is why many companies now employ continuous testing practices to ensure they provide their users with the highest-quality products. Today, we are going to talk about one stage of that process: testing in production, the actual live environment after release. We will cover the main challenges and considerations so that you can develop the best strategy for your company and your software project.

At the beginning of a new iteration, lower testing environments are typically used to verify changes during the development process. Next is the staging environment, meant to be as close to production as possible – which helps to eliminate most potential issues. However, getting an exact 100% match of the production environment is an almost impossible task. Even if we achieve this exact parity, some issues might still get introduced during deployment to production.

After all, no one cares if any of the lower environments were bug-free. What matters is that the actual production environment is bug-free. Therefore, testing has to be done during this stage as well.

Some people think testing in production is a sign of poor testing quality, because they equate it with shipping poorly tested applications to end users. However, this is not the right way to employ the technique. We test in production not because earlier stages were skipped or rushed, but because it is the last line of defense against bugs that only show up under live conditions. Another reason is that some types of testing simply have to be done with real users.

Benefits of Testing in Production

Testing in production has many advantages, and it has become essential to software development in recent years. Although risky when done without caution, it allows organizations to test software under actual conditions, where stability, responsiveness, and alignment with real user needs can be observed.

The main advantages of testing in production are:

- Real User Data: Production testing lets teams see how an application performs with actual users, traffic patterns, and data. This gives a more accurate picture of how the software behaves in the real world, often exposing problems that would not be detected in staging or testing environments.

- Faster Issue Discovery: As production includes real usage, testing in production helps in the quicker detection of bugs and performance bottlenecks that may not be visible in test environments. Read: Minimizing Risks: The Impact of Late Bug Detection.

- Real User Feedback: Testing in production means you get to hear from users right away. With techniques like A/B testing, product teams can observe how users react to changes and iterate based on what users actually want.

- Automated Rollbacks: Many production testing setups support automatic rollback. When an issue is detected, the system reverts to its previous state to avoid widespread disruption and shorten recovery time.

- Accelerated Feedback Loops: Continuous feedback is possible with production testing. This minimizes the time taken from identifying an issue to patching it, which is vital when it comes to agile and DevOps-centric workflows focused on quick iterations.

- Enhanced Customer Trust: Software that performs steadily in production is software customers can trust. It also serves customer needs better, which tends to show up as higher retention and satisfaction rates.

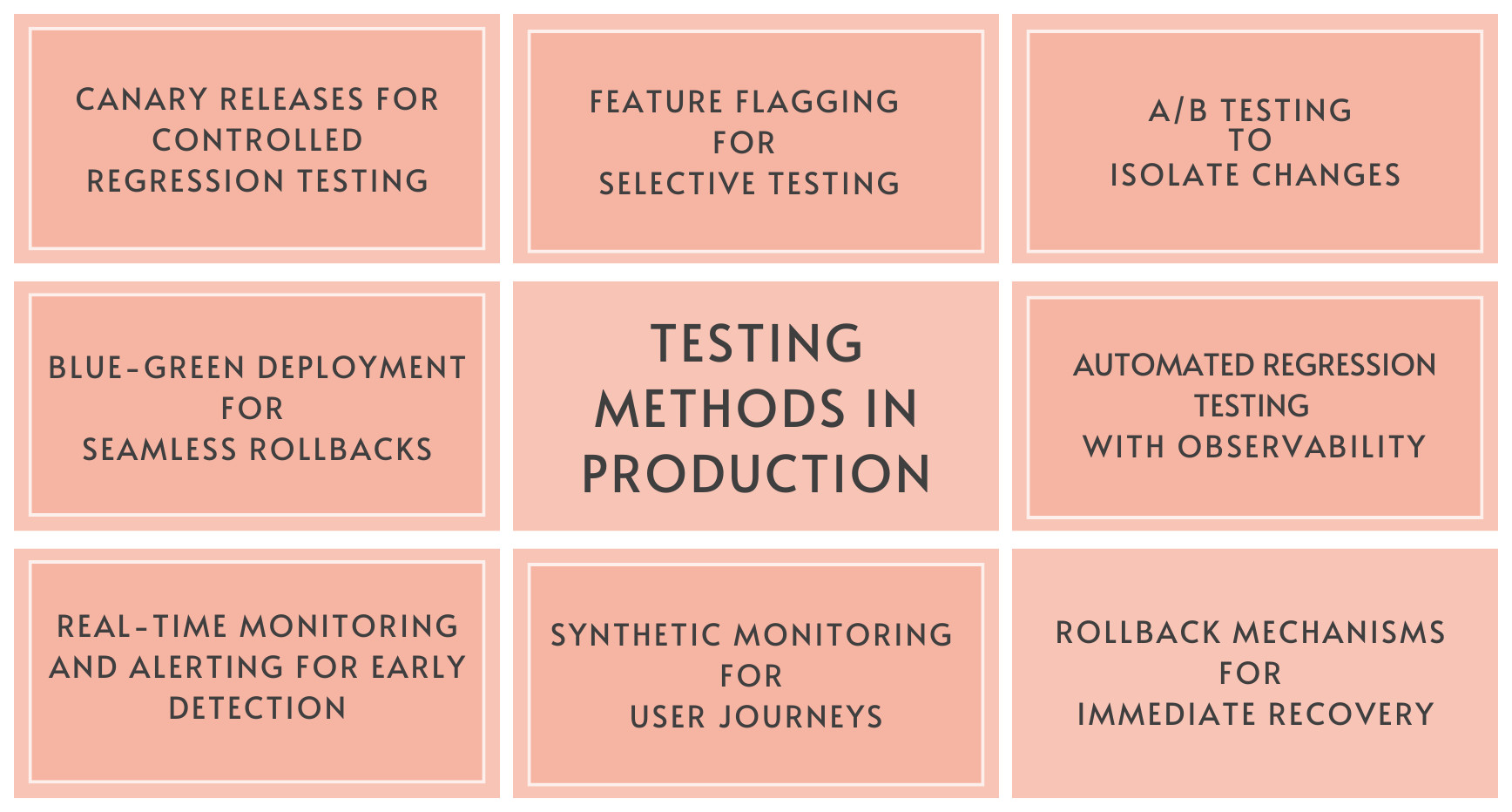

Testing Methods in Production

Let’s explore each production testing technique with added depth to understand how they contribute to safe and effective testing.

Canary Releases for Controlled Regression Testing

In a canary release, a new version of software is deployed to a small, isolated subset of users or servers before rolling it out to the entire user base. This staged rollout allows teams to monitor the system’s performance and quickly catch issues before they impact everyone.

Benefits: By testing changes on a smaller scale, canary releases mitigate risk and provide an early warning system for detecting potential issues.

Use Cases: Ideal for testing major updates or critical new features where teams want early feedback without affecting the broader user base.

How to Use: Identify a small, diverse subset of users or servers to receive the new update. Monitor performance, stability, and user feedback, then expand the rollout if no regression issues are detected.

Best Practices:

- Choose diverse user segments to get comprehensive feedback.

- Use automated monitoring tools to detect anomalies during the canary phase.

- Ensure quick rollback mechanisms in case issues are detected.
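One common way to pick a canary group is to hash each user ID into a stable bucket, so the same user always lands on the same side of the split and raising the percentage only adds users. The sketch below assumes string user IDs; the function names and the salt value are hypothetical, not part of any particular tool.

```python
import hashlib


def canary_bucket(user_id: str, salt: str = "release-salt") -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_canary(user_id: str, percent: int, salt: str = "release-salt") -> bool:
    """Return True if this user should receive the canary version."""
    return canary_bucket(user_id, salt) < percent


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(10_000)]
    exposed = sum(in_canary(u, percent=5) for u in users)
    print(f"{exposed} of {len(users)} users routed to the canary")
```

Because the bucket depends only on the salt and the user ID, expanding from 5% to 20% keeps the original canary users in the group rather than reshuffling everyone.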

Feature Flagging for Selective Testing

Feature flags, or toggles, allow teams to enable or disable specific features in production dynamically. This approach provides control over which users see which features, making it possible to conduct live experiments without redeployment.

Benefits: Teams can test new features with real users without risking the entire production environment, and rollback is as simple as turning off the flag.

Use Cases: Feature flagging is useful for testing features under development, A/B testing, or rolling out changes to specific user segments (like beta testers).

Best Practices:

- Maintain a clear strategy for enabling, disabling, and removing flags to avoid “flag debt.”

- Monitor feature performance and behavior under flagging conditions.

- Use flags as a fallback for quick rollback during incidents.

How to Use: For features with potential impacts on existing functionality, use feature flags to gradually enable them for certain users. Monitor metrics for regressions (e.g., increased error rates or latency) and disable the feature immediately if issues arise.
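To make the idea concrete, here is a minimal in-memory sketch of a flag with a kill switch, an allow list for beta testers, and a percentage rollout. The `Flag` and `FlagStore` names are hypothetical; a real setup would usually rely on a flag management service or a shared config store instead of a Python dictionary.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Flag:
    enabled: bool = False        # global kill switch
    rollout_percent: int = 0     # share of users who see the feature
    allow_list: set = field(default_factory=set)  # e.g., beta testers


class FlagStore:
    def __init__(self):
        self._flags = {}

    def set_flag(self, name: str, flag: Flag) -> None:
        self._flags[name] = flag

    def is_enabled(self, name: str, user_id: str) -> bool:
        flag = self._flags.get(name)
        if flag is None or not flag.enabled:
            return False  # unknown or switched-off flags default to off
        if user_id in flag.allow_list:
            return True
        # Stable per-user bucket, so a user does not flip between variants.
        digest = hashlib.sha256(f"{name}:{user_id}".encode("utf-8")).hexdigest()
        return int(digest, 16) % 100 < flag.rollout_percent

    def kill(self, name: str) -> None:
        """Turn the feature off for everyone without redeploying."""
        if name in self._flags:
            self._flags[name].enabled = False
```

Defaulting unknown flags to "off" is a deliberate choice here: a typo in a flag name should hide a feature, not expose it.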

A/B Testing to Isolate Changes

A/B testing involves deploying two versions of a feature (e.g., A and B) to different user groups to determine which performs better. This allows teams to compare versions and make data-driven decisions about the best user experience.

Benefits: A/B testing provides clear insights into user behavior and preferences, helping teams fine-tune features based on real feedback.

Use Cases: Frequently used to test UX/UI elements, call-to-action buttons, or algorithm variations that affect user engagement.

Best Practices:

- Identify clear metrics to measure the success of each version.

- Ensure statistical significance by testing with a sufficient user base.

- Monitor operational metrics (e.g., latency, error rates) alongside user engagement to avoid performance regressions.

How to Use: Run A/B tests focusing on operational metrics (e.g., response times, error rates) and user behavior to validate that the new release doesn’t degrade existing functionality or negatively impact user experience.
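The "statistical significance" point can be checked with a two-proportion z-test, one standard option when the metric is a conversion rate. The sketch below uses only the standard library; the function name and the sample numbers are illustrative assumptions, and other metrics (such as latency) would need a different test.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so nothing to distinguish
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 10% vs. 13% conversion with 2,000 users per variant.
    z, p = two_proportion_z_test(200, 2000, 260, 2000)
    print(f"z={z:.2f}, p={p:.4f}")
```

The same 10% vs. 13% split with only 100 users per variant would not reach significance at the usual 0.05 level, which is why the sample size matters as much as the observed difference.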

Blue-Green Deployment for Seamless Rollbacks

In blue-green deployment, two production environments (e.g., blue and green) are maintained. When a new version is deployed to one environment (say, green), the other (blue) remains active. After successful validation, traffic is switched to the updated environment. Read about Continuous Deployment with Test Automation.

Benefits: This method provides a nearly seamless rollback option by switching back to the previous environment if issues arise, thus minimizing downtime.

Use Cases: Blue-green deployments are ideal for high-availability systems where downtime must be minimized and user impact needs to be limited.

Best Practices:

- Ensure both environments are identical to avoid environment-specific issues.

- Use real-time monitoring to assess performance before fully switching traffic.

- Automate the traffic switch and rollback mechanisms to expedite responses to detected issues.

How to Use: Deploy the new version in the green environment, then conduct regression tests while monitoring for any issues. Only route full traffic to the green environment once regression tests pass without issues.
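The switch itself can be modeled as a pointer to the live environment that only moves after every check passes on the idle one. This is a simplified sketch: `BlueGreenRouter` and the check functions are hypothetical, and in practice the "pointer" is usually a load balancer or DNS setting.

```python
from typing import Callable, Dict


class BlueGreenRouter:
    def __init__(self, live: str = "blue"):
        self.live = live

    @property
    def idle(self) -> str:
        """The environment that is not receiving user traffic."""
        return "green" if self.live == "blue" else "blue"

    def promote(self, checks: Dict[str, Callable[[str], bool]]) -> bool:
        """Switch traffic to the idle environment only if all checks pass."""
        candidate = self.idle
        failed = [name for name, check in checks.items() if not check(candidate)]
        if failed:
            return False  # traffic stays on the current live environment
        self.live = candidate
        return True

    def rollback(self) -> None:
        """Point traffic back at the previous environment."""
        self.live = self.idle
```

Rollback is just the same switch in the other direction, which is what makes this approach attractive for high-availability systems.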

Automated Regression Testing with Observability

Regression testing verifies that recent changes don’t negatively impact existing features. Automated regression suites can be run periodically or triggered by new deployments to validate system stability.

Benefits: Ensures that recent code changes don’t break or degrade the performance of previously functioning features.

Use Cases: Essential for complex systems with frequent updates, especially those with mission-critical functionality that must remain stable.

Best Practices:

- Focus on automating regression tests for the most critical functionalities.

- Schedule tests during low-traffic periods to minimize user impact.

- Use real-time monitoring alongside regression testing to identify performance regressions.

How to Use: Schedule automated tests for critical functionalities (e.g., login, transactions). You can use observability tools like Datadog, Prometheus, or Grafana to monitor metrics like error rates and response times for any anomalies that might indicate regressions.
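As an illustration of pairing regression checks with metrics, the sketch below compares the 95th-percentile latency before and after a deployment. It assumes the latency samples have already been exported from your observability tool as plain lists of milliseconds; the function names and the 20% tolerance are hypothetical choices.

```python
import statistics


def p95(samples):
    """95th percentile of a list of latency samples (needs 2+ values)."""
    return statistics.quantiles(samples, n=100)[94]


def latency_regressed(baseline_ms, current_ms, tolerance=0.20):
    """Flag a regression when p95 latency grows by more than `tolerance`."""
    return p95(current_ms) > p95(baseline_ms) * (1 + tolerance)


if __name__ == "__main__":
    baseline = [100] * 100
    after_deploy = [100] * 90 + [500] * 10  # a slow tail appears
    print("regressed:", latency_regressed(baseline, after_deploy))
```

Comparing a tail percentile rather than the average helps catch regressions that affect only a minority of requests.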

Real-Time Monitoring and Alerting for Early Detection

Automated monitoring and observability tools track metrics such as error rates, response times, and resource utilization. These tools provide insights into system health and performance in real-time.

Benefits: Continuous monitoring allows teams to detect issues early, often before they impact users, enabling proactive resolution.

Use Cases: Essential for all production environments, especially those with complex, distributed architectures where issues can be difficult to trace manually.

Best Practices:

- Set up alerts for critical thresholds on metrics like latency, error rates, and memory usage.

- Use distributed tracing to track user interactions across services.

- Use machine learning-based anomaly detection to identify unexpected changes in system behavior.

How to Use: Set up alerts for critical thresholds on metrics that indicate regressions, such as increased error rates or degraded performance. Configure automated alerts to notify the team when anomalies are detected, enabling quick responses to issues.
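A threshold alert of this kind can be sketched as a sliding window over recent request outcomes. The class name, window size, and threshold below are assumptions for illustration; monitoring platforms typically provide this logic as configuration rather than code.

```python
from collections import deque


class ErrorRateAlert:
    def __init__(self, window_size=100, threshold=0.05, min_samples=20):
        self.results = deque(maxlen=window_size)  # True means the request failed
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, is_error: bool) -> bool:
        """Record one request outcome; return True if the alert should fire."""
        self.results.append(is_error)
        if len(self.results) < self.min_samples:
            return False  # too little data to judge the error rate
        return sum(self.results) / len(self.results) > self.threshold
```

The `min_samples` guard avoids paging the team because the first request after a deployment happened to fail.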

Read: Understanding Test Monitoring and Test Control.

Synthetic Monitoring for User Journeys

Synthetic monitoring involves simulating user actions within the production environment to test critical workflows (e.g., login, checkout, or payment processing).

Benefits: This proactive approach ensures that essential features remain functional and perform well, even when no actual users are interacting with them at a given time.

Use Cases: Ideal for validating core user journeys in production to ensure a smooth experience and detect regressions early.

Best Practices:

- Identify and monitor the most common and critical user flows.

- Run tests regularly and automate synthetic interactions to capture performance changes over time.

- Use synthetic monitoring in conjunction with real-user monitoring for comprehensive coverage.

How to Use: Define essential user journeys and set up synthetic monitoring to simulate these flows in production. This helps detect regressions in functionality that could impact the user experience, even if no real users have yet encountered the issue.
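A synthetic check boils down to running a scripted journey step by step, recording where it fails, and comparing the total time to a budget. In the sketch below the steps are plain callables and all names are hypothetical; in a real setup each step would drive a browser or call an API.

```python
import time
from typing import Callable, List, Tuple


def run_journey(name: str,
                steps: List[Tuple[str, Callable[[], None]]],
                budget_seconds: float) -> dict:
    """Run each step in order; report the first failure or a timing breach."""
    started = time.perf_counter()
    for step_name, action in steps:
        try:
            action()
        except Exception as exc:
            return {"journey": name, "ok": False,
                    "failed_step": step_name, "error": str(exc)}
    elapsed = time.perf_counter() - started
    # A journey that completes but exceeds its time budget is also reported as not ok.
    return {"journey": name, "ok": elapsed <= budget_seconds,
            "failed_step": None, "error": None, "elapsed": elapsed}
```

Running this on a schedule and feeding the result into your alerting gives an early signal even when no real user has hit the broken flow yet.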

Rollback Mechanisms for Immediate Recovery

Incremental rollouts gradually deploy updates across different servers or user groups, allowing for monitoring and rollback at each stage if issues are detected.

Benefits: Limits the exposure of new changes, allowing for quick intervention, and reducing the risk of large-scale issues.

Use Cases: Useful for complex updates where the potential for regression is high and continuous monitoring is needed.

Best Practices:

- Deploy to a small subset of servers or regions first and expand gradually.

- Automate the rollback process to reduce the impact of potential failures.

- Monitor user feedback and performance metrics at each increment to gauge impact.

How to Use: Incorporate a rollback mechanism into the deployment pipeline, which can be triggered manually or automatically. Continuous monitoring helps ensure any major issues are swiftly identified, allowing an automated or quick manual rollback.
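The rollout-then-rollback flow can be sketched as a loop over stages that stops at the first unhealthy one and undoes what it has deployed so far. The `deploy`, `healthy`, and `rollback` callables are hypothetical hooks standing in for whatever your pipeline actually provides.

```python
def staged_rollout(stages, deploy, healthy, rollback) -> bool:
    """Deploy stage by stage; roll back everything if a stage is unhealthy."""
    completed = []
    for stage in stages:
        deploy(stage)
        completed.append(stage)
        if not healthy(stage):
            # Undo in reverse order, most recent stage first.
            for done in reversed(completed):
                rollback(done)
            return False
    return True


if __name__ == "__main__":
    ok = staged_rollout(
        ["canary", "region-a", "region-b"],
        deploy=lambda s: print("deploy", s),
        healthy=lambda s: s != "region-a",  # simulate a failure in region-a
        rollback=lambda s: print("rollback", s),
    )
    print("rollout succeeded:", ok)
```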

Solutions to Challenges in Testing in Production

Testing in production introduces certain challenges unique to live environments. Here are some strategies for addressing these challenges.

Ensure Minimal User Impact

Controlled rollouts are essential to minimize user impact. Using canary releases, feature flags, and blue-green deployments, we can isolate changes to smaller user segments. This lowers the risk of disrupting the entire user base.

Maintain Data Privacy and Compliance

Handling real user data raises privacy and regulatory challenges. When designing tests, teams must adhere to privacy regulations like the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). Read about AI Compliance for Software.

Techniques for Privacy Preservation:

- Data Masking: Use obfuscation techniques to mask personal information in production logs.

- Anonymization: Remove identifiable information to prevent unauthorized access to personal data.

- Access Controls: Limit access to sensitive production data, ensuring that only authorized personnel can view it.
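As a small illustration of data masking, the sketch below redacts email addresses and long digit runs that look like card numbers before a line is written to a log. The patterns are deliberately simple assumptions: they will miss some formats and may also redact other long numbers, so treat them as a starting point rather than a compliance control.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")  # 13-16 digits, optional separators


def mask_log_line(line: str) -> str:
    """Replace emails and card-like numbers with fixed placeholders."""
    line = EMAIL_RE.sub("[email redacted]", line)
    line = CARD_RE.sub("[card redacted]", line)
    return line


if __name__ == "__main__":
    print(mask_log_line("payment failed for jane.doe@example.com"))
    print(mask_log_line("card 4111 1111 1111 1111 declined"))
```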

Managing Costs with Scalable Infrastructure

Production testing requires infrastructure flexibility to support blue-green deployments, additional logging, or A/B tests. Using cloud environments with auto-scaling features can help optimize infrastructure costs by scaling up resources when needed and scaling them down during low-demand periods. Know How to Save Budget on QA.

Training and Building a Culture of Continuous Learning

Effective testing in production requires a skilled team proficient in monitoring tools, feature management, and observability practices. Organizations should encourage continuous learning and cross-functional collaboration to strengthen production testing capabilities.

Testing in Production: Best Practices

Now, let us look at some best practices to follow when testing in production, which can help avoid the risks described above.

Stress Testing

Since production testing aims to identify bugs in the real world, it needs to happen under the most challenging real-world conditions. Stress testing the application with high traffic shows how it behaves when load suddenly increases. That way, we can verify that error recovery processes work under such extreme conditions.
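A very small load generator can be built from a thread pool that calls a target many times in parallel and counts failures. In this sketch `target` is a hypothetical callable standing in for a real request; dedicated load-testing tools are a better fit for serious stress tests, especially against production.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(target, total_requests: int, concurrency: int) -> dict:
    """Call `target` total_requests times using `concurrency` worker threads."""
    def one_call(_):
        started = time.perf_counter()
        try:
            target()
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - started

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(one_call, range(total_requests)))

    errors = sum(1 for ok, _ in results if not ok)
    slowest = max(duration for _, duration in results)
    return {"requests": total_requests, "errors": errors,
            "slowest_seconds": slowest}
```

Raising `concurrency` step by step while watching the error count and slowest response is a simple way to find the point where the application starts to degrade.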

Forced Failure Testing

Deploy a tool such as Chaos Monkey to introduce random failures, like a system crash, so that recovery mechanisms are exercised regularly and become stronger. It is similar to building immunity: repeated, controlled exposure to harsh conditions makes the system more resilient to them.
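The same principle can be tried at a much smaller scale inside a single process. The sketch below is not Chaos Monkey itself: it is a hypothetical wrapper that makes a function fail at a chosen rate, paired with a simple retry helper so you can see whether the recovery path copes.

```python
import random


class InjectedFailure(Exception):
    """Raised on purpose to simulate a crash or outage."""


def with_chaos(func, failure_rate: float, rng: random.Random):
    """Wrap func so that a fraction of calls fail before reaching it."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFailure("chaos: injected failure")
        return func(*args, **kwargs)
    return wrapper


def call_with_retry(func, attempts: int = 3):
    """Recovery mechanism under test: retry a few times, then give up."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFailure as exc:
            last_error = exc
    raise last_error


if __name__ == "__main__":
    flaky = with_chaos(lambda: "ok", failure_rate=0.3, rng=random.Random())
    print(call_with_retry(flaky, attempts=5))
```

Passing in the random generator makes the experiment repeatable: seeding it reproduces the same sequence of injected failures.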

Timely Monitoring

Run your sanity or regression tests during business hours rather than off-hours, so that the team is on hand to monitor key performance indicators closely.

Finally, production testing helps create a better user experience with a stronger risk mitigation structure in place, which in turn protects business revenue. Nowadays, with proper DevOps infrastructure and continuous integration and deployment in place, it is quite easy to make production testing part of the software testing life cycle (STLC).

How Can testRigor Help?

If you’ve been following us for some time, you might already know that testRigor is the optimal solution for automated end-to-end UI testing across web, desktop, and mobile platforms – all with the convenience of plain English commands.

Key Features of testRigor:

- Plain English Test Creation: Users can write tests in natural language, eliminating the need for coding skills and making test creation accessible to everyone on the team.

- Generative AI for Testing: testRigor utilizes generative AI to generate tests based on documented test cases, accelerating the test creation process.

- Cross-Platform Support: The platform supports web, mobile, and desktop applications, allowing for comprehensive testing across various platforms.

- Reduced Test Maintenance: By focusing on the end-user perspective and minimizing reliance on locators, testRigor reduces the time spent on test maintenance, especially for rapidly changing products. Read: Decrease Test Maintenance Time by 99.5% with testRigor.

- Integration Capabilities: testRigor integrates with tools like TestRail for test case import and supports CI/CD pipelines, facilitating seamless incorporation into existing workflows.

- Scalability: testRigor’s efficient test creation and maintenance processes allow teams to scale their test automation efforts without being hindered by maintenance challenges.

And if you don’t have a testRigor account yet, you can now register for one for free and start automating right away in plain English. Explore the possibilities on how to strengthen your automated testing.
