What is Minimum Viable Testing?


As engineering teams sprint to keep pace with product feature requests, the challenge isn’t just building features; it’s delivering them fast without sacrificing quality. Agile workflows, DevOps pipelines, and continuous delivery practices have made quick releases the norm, but they’ve also turned testing efficiency into a mission-critical requirement.

Among current testing strategies, one stands out for balancing speed and reliability: Minimum Viable Testing (MVT).

MVT is not about cutting corners or eliminating quality checks. Instead, it concentrates on pinning down the least amount of testing required to have confidence that a release is stable, valuable, and reliable. By focusing on what matters most, teams can keep up with the demands of modern-day development without sacrificing user trust and business reputation.


Concept of Minimum Viable Testing

To understand MVT, it helps to step back and look at the Minimum Viable Product (MVP). As outlined by Eric Ries in The Lean Startup, an MVP is a strategy for checking that your assumptions about customer value are correct without investing too much up front. Instead of building an entire product, teams release the smallest version that works and can prove or disprove a hypothesis.

Alignment with Minimum Viable Product (MVP)

The principles behind MVP deeply influenced modern engineering strategies:

- Reduce waste

- Validate early

- Learn continuously

- Iterate rapidly

Minimum Viable Product (MVP) adapted these same lean concepts to the product world, and Minimum Viable Testing (MVT) does exactly the same, but for software quality practices.

- MVP: What’s the smallest thing we can build to test an idea?

- MVT: What’s the least amount of testing that you can do to release with confidence and start getting real-world insight?

Instead of treating testing as a fixed checklist to be completed at a set stage, MVT treats it as a flexible, lean activity focused on learning fast and reducing risk early.

Read: Mastering MVP Testing: Implementing Proven Strategies for Success.

How testRigor Aligns with MVT

testRigor directly supports the philosophy behind MVT by allowing teams to automate only the most valuable tests quickly and with minimal maintenance overhead. Because tests are written in plain English and backed by Gen AI, teams can create reliable coverage much faster than with traditional automation frameworks. This naturally supports lean, iterative cycles where the goal is rapid learning with stable and high-confidence releases.

The Core Question Behind MVT

MVT is based on a seemingly obvious question: What’s the least possible amount of testing we can get away with before shipping a feature or product?

This requires understanding:

- What users value most

- What could break and cause significant harm

- What is stable versus what is experimental

- Where the highest risks lie

- What feedback is most urgent

Behind this question is a broader shift in thinking: testing should not be about reaching completeness; it should be about gaining learning and confidence.

Read: What is POC (Proof of Concept)?

MVT as a Risk-Management Strategy

Traditional testing tends to treat all defects alike, but in practice some defects matter far more than others. MVT treats testing as risk mitigation rather than risk elimination: it aims to prevent catastrophic failures, accepts minor ones, and relies on monitoring for real-world validation. This makes MVT a pragmatic approach to product validation in high-velocity, iterative development environments.

Read: Technology Risk Management: A Leader’s Guide.

The Evolution of Testing Toward MVT

Contemporary software development practices require us to deliver faster and test smarter, driving teams away from traditional regression testing approaches towards leaner, risk-based ones. This transition opened the doors to Minimum Viable Testing as a pragmatic and cost-effective approach.

From Waterfall to Agile: A Shift Toward Faster Validation

Waterfall’s sequential, document-centric approach resulted in lengthy release cycles, late discovery of defects, and high costs associated with last-stage fixes. Agile changed all that with continuous testing, faster feedback loops, and rapid iteration, creating the need for a more strategic testing approach aligned with MVT.

In Agile environments:

- Features are smaller

- There are more releases

- Feedback loops are shorter

- Testing needs to change to keep up with faster cycles

This change made teams more likely to focus on delivering value early instead of waiting for all the features to be ready.

Read: What is WAgile? Understanding the Hybrid Waterfall-Agile Approach.

How CI/CD Influenced Testing Priorities

CI/CD pipelines dramatically accelerated development, and they demand test suites that are fast, reliable, automated, and highly selective. Because large test suites became a bottleneck, teams were forced to focus on high-value tests and remove unnecessary ones, which makes MVT a natural fit for continuous delivery. This evolution reinforced the importance of testing only what truly affects release confidence.

Goals of Minimum Viable Testing

Minimum Viable Testing is designed to help teams deliver high-quality software faster by doing only the testing required for confidence. It takes a strategic, risk-driven approach rather than the exhaustive approach of traditional methods, and it adapts well to modern agile development environments. Ultimately, the aim is fast delivery backed by meaningful quality assurance.

Accelerating Release Cycles

MVT reduces the time needed to test a release by eliminating unnecessary test execution. This allows teams to release features more often and adapt to market needs more quickly. It also supports quick iteration, where developers get real feedback faster and adjust functionality in smaller increments.

Reducing Testing Overhead

Traditional testing can be heavily manual and often includes duplicated test cases and large regression suites, all of which slow teams down. MVT identifies and prunes lower-value tests and keeps automation lean and easier to maintain. As a result, QA teams can spend their time on high-impact areas instead of checking the same thing over and over.

Using testRigor, teams can drastically reduce overhead because tests are much faster to create and are almost maintenance-free. This lets QA teams focus only on high-value testing while the platform handles element identification, dynamic locators, and cross-browser/device execution automatically.

Read: Decrease Test Maintenance Time by 99.5% with testRigor.

Supporting Continuous Delivery

Fast, reliable, and scalable test automation is indispensable for continuous deployment. MVT ensures that only essential tests are integrated into CI/CD pipelines, avoiding delays caused by an excessive number of test cases. This makes for smoother, more predictable releases with high confidence in build stability.

Balancing Speed and Quality

MVT isn’t about testing less for the sake of speed, but about testing smarter. By focusing on high-risk and high-value areas, teams can get the most coverage per test without hampering the pace of development. This balance ensures that fast releases don’t degrade the experience or reliability for users.

Enabling Faster User Feedback

By reducing the amount of pre-release testing, teams can ship features faster and get real-world feedback from users. It speeds up learning and makes it easier to validate product decisions. It also helps ensure that iterative refinements are based on real user behavior, not guesswork.

Read: Quality vs Speed: The True Cost of “Ship Now, Fix Later”.

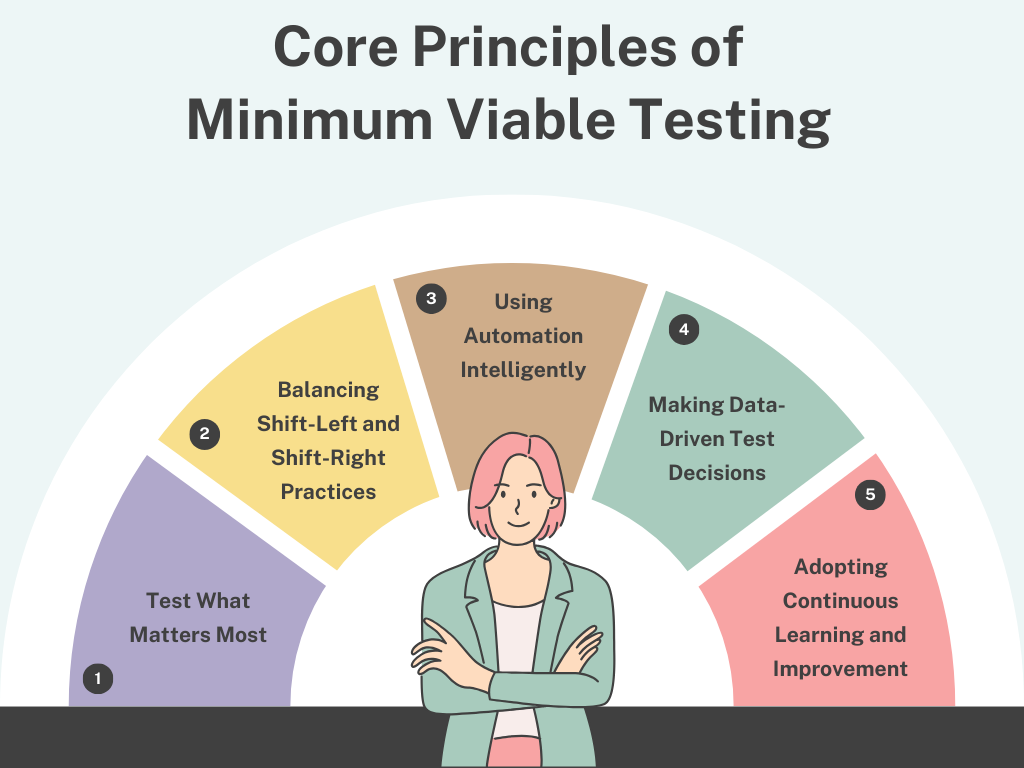

Core Principles of Minimum Viable Testing

The core principles of Minimum Viable Testing keep teams focused on leaner, smarter, and more effective testing. They emphasize value, waste reduction, and the use of data and automation to support fast, confident releases. Together, they form the basis of a testing strategy designed for modern, iterative workflows.

Test What Matters Most

Minimum Viable Testing starts by identifying the areas with the most business and user impact. Rather than evenly distributing testing effort across all features, teams concentrate on key workflows and high-risk components. This protects critical value while spending less effort on less critical areas. By focusing on what really matters, teams ship sooner while maintaining core quality.

Balancing Shift-Left and Shift-Right Practices

MVT balances early-stage prevention (shift-left) with real-world validation (shift-right). Shift-left approaches identify bugs early, while shift-right methods verify behavior in real-world use. This combination lets teams test less before release and still have high confidence in product stability. It also extends learning beyond development.

Read: Shift Everywhere in Software Testing: The Future with AI and DevOps.

Using Automation Intelligently

Instead of seeking to automate as much as possible, MVT encourages automating only the most valuable and reliable tests. This avoids the maintenance overhead of a big, fragile automation suite. A well-curated suite of automated tests offers fast, dependable feedback without becoming a bottleneck in CI/CD pipelines. Smart automation gives teams maximum value with minimum overhead.

testRigor embodies the MVT principle of automating only what matters most. Because test creation is simple and stable, teams don’t need to build large, fragile automation suites. Instead, they can automate just the critical workflows using English-based steps, drastically cutting maintenance while ensuring reliable regression coverage.

Read: AI-Based Self-Healing for Test Automation.

Making Data-Driven Test Decisions

Data is a key component of MVT: teams decide what to test based on real usage patterns, risk factors, and past defects. They can iteratively refine what they test using telemetry, customer pathways, and data from previous releases. This minimizes guesswork and keeps the testing effort aligned with product requirements. Fact-based decision-making keeps the testing strategy lean yet impactful.
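As a rough illustration of ranking test candidates from usage and defect data, here is a minimal Python sketch. The `Journey` fields, the sample numbers, and the scoring formula (usage share weighted by defect history) are all assumptions made for this example, not a prescribed MVT formula.

```python
from dataclasses import dataclass


@dataclass
class Journey:
    name: str
    usage_share: float  # assumed: fraction of sessions that touch this journey (0..1)
    past_defects: int   # assumed: defects logged against it in recent releases


def prioritize(journeys, top_n):
    """Rank journeys by usage weighted by defect history; return the top_n names."""
    ranked = sorted(
        journeys,
        key=lambda j: j.usage_share * (1 + j.past_defects),
        reverse=True,
    )
    return [j.name for j in ranked[:top_n]]


# Made-up telemetry for a hypothetical shopping app.
journeys = [
    Journey("checkout", 0.60, 4),
    Journey("search", 0.80, 0),
    Journey("profile_settings", 0.10, 1),
    Journey("coupon_recommendations", 0.05, 0),
]

print(prioritize(journeys, 2))  # ['checkout', 'search']
```

In practice, the weights would come from your own telemetry and defect tracker, and the team would revisit them after each release.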

Adopting Continuous Learning and Improvement

MVT isn’t a static framework; it evolves as products, users, and risks change. Teams regularly reassess the necessary test coverage, reprioritize, and adapt to production behavior. This iterative approach keeps testing effective and efficient over the long haul. By embracing continuous learning, teams improve both quality assurance and delivery velocity with each release.

Minimum Viable Testing vs. Traditional Testing

Minimum Viable Testing is often misunderstood as simply “doing less testing.” In reality, it is a strategic shift away from traditional testing paradigms. The differences become clear when comparing MVT with traditional approaches across goals, scope, speed, and feedback loops.

| Minimum Viable Testing (MVT) | Traditional Testing |
|---|---|
| Tests only the most critical and high-risk areas. | Tests a wide range of features, workflows, and scenarios for completeness. |
| Aims to validate value quickly and enable fast releases. | Aims to eliminate as many defects as possible before release. |
| Supports rapid, iterative deployments with minimal testing overhead. | Slower release cycles due to extensive testing phases. |
| Relies on production monitoring and real user feedback. | Relies heavily on pre-release QA phases for defect discovery. |
| Uses lean, maintainable automation suites. | Requires large automation suites and significant manual effort. |
| Accepts low-impact, manageable risks to maintain speed. | Seeks to minimize risk by testing broadly and deeply. |
| Focuses on continuous learning and quick adjustments post-release. | Focuses on ensuring readiness before release, with limited post-release iteration. |

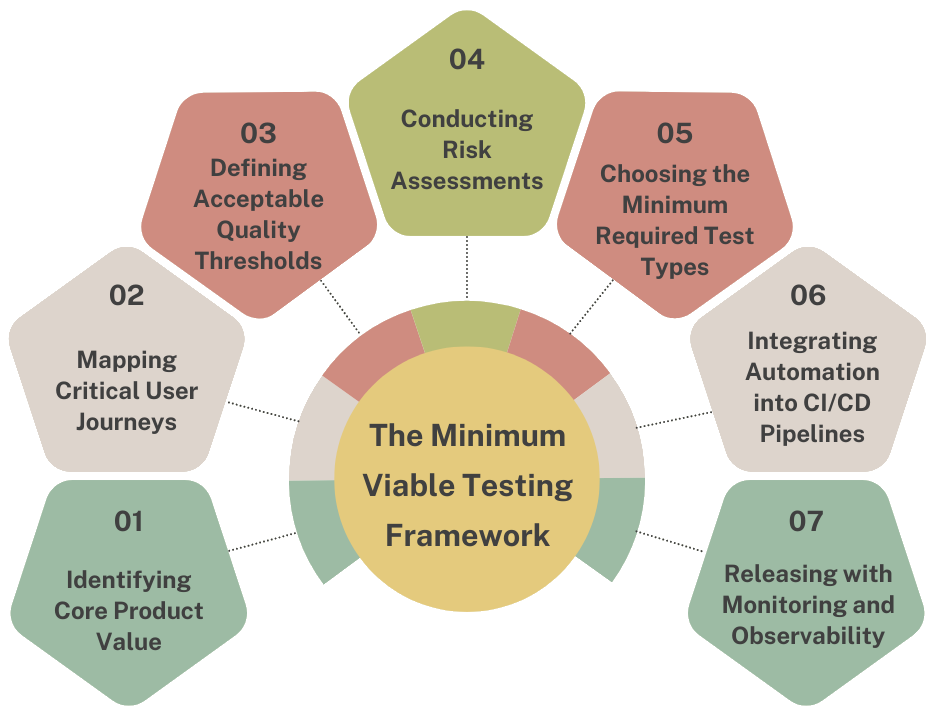

The Minimum Viable Testing Framework

Minimum Viable Testing applies lean testing to the entire development lifecycle in a structured way. It helps teams determine what actually needs to be tested before release and what can be validated through monitoring or user feedback after release. Following this model helps maintain quality and delivery speed while reducing testing effort. The MVT framework consists of several interdependent phases:

Identifying Core Product Value

The first step in MVT is determining the core value users gain from a product or feature. Teams should be clear about what problem the feature addresses and which parts of the workflow are crucial for delivering that value. This clarity helps identify where failure is absolutely unacceptable.

Example: For a food delivery app, the core value is likely placing an order. Features such as social media login or coupon recommendations add some extra value, but the checkout flow is what users come for, so it can be considered the product’s core value.

Mapping Critical User Journeys

After the core value is defined, teams map out the user journeys that lead to it. These journeys are the actual paths users take to accomplish their goals, and they often determine whether the product feels functional and trustworthy. Testing these flows helps ensure that the most pivotal experiences in the product hold up even if secondary features have minor problems.

Example: In an online banking application, critical journeys might include transferring funds, checking account balances, or depositing a check via mobile capture.

Defining Acceptable Quality Thresholds

MVT requires a clear definition of the level of quality that is acceptable for a release. Not every feature has to be perfect; some can tolerate cosmetic or low-impact issues, particularly in early stages. Thresholds also prevent arbitrary release decisions and ensure that engineering, QA, and product share a common understanding.

Example: A team might define that no critical or high-severity defects are allowed in the payment system, but minor UI inconsistencies in non-core pages are acceptable for the initial release.
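A threshold like the one in this example can be written down as a simple release gate. The sketch below is only illustrative: the area names, severity labels, and limits are hypothetical, and area/severity pairs without an agreed limit are ignored here, which a real team may prefer to treat as blocking.

```python
# Hypothetical thresholds: maximum open defects allowed per (area, severity).
THRESHOLDS = {
    ("payments", "critical"): 0,
    ("payments", "high"): 0,
    ("non_core_ui", "minor"): 5,
}


def release_blockers(open_defects, thresholds=THRESHOLDS):
    """Return the (area, severity) pairs whose open-defect count exceeds its limit.

    Pairs with no configured limit are skipped in this sketch.
    """
    blockers = []
    for key, count in open_defects.items():
        limit = thresholds.get(key)
        if limit is not None and count > limit:
            blockers.append(key)
    return sorted(blockers)


# One high-severity payment defect blocks the release; three minor UI issues do not.
current = {("payments", "high"): 1, ("non_core_ui", "minor"): 3}
print(release_blockers(current))  # [('payments', 'high')]
```

Keeping the thresholds in one shared structure gives engineering, QA, and product a single place to agree on what "good enough" means.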

Conducting Risk Assessments

Risk assessments pinpoint which features pose the greatest threat to functionality, user confidence, and business stability. By weighing technical complexity, dependencies, and change frequency, teams decide where testing should be deeper or more cautious. This step is central to MVT, which depends heavily on knowing which failures are expensive and which are not.

Example: A new API integration with a third-party payment provider carries a higher risk than updating text labels on a dashboard, and therefore requires more focused testing.
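One common way to make such an assessment comparable across features is a likelihood-times-impact score. The sketch below assumes 1 to 5 ratings for each factor and averages the three likelihood factors named above; the scale, the averaging, and the sample ratings are assumptions for illustration only.

```python
def risk_score(complexity, dependencies, change_frequency, impact):
    """Likelihood (mean of three 1-5 factors) multiplied by impact (1-5)."""
    likelihood = (complexity + dependencies + change_frequency) / 3
    return likelihood * impact


# Hypothetical ratings for the two changes in the example above.
scores = {
    "payment_provider_api": risk_score(4, 5, 3, 5),
    "dashboard_text_labels": risk_score(1, 1, 2, 1),
}

# Test the riskiest items first.
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

The absolute numbers matter less than the ordering: the score exists to show where deeper testing is justified.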

Choosing the Minimum Required Test Types

The objective is not to minimize testing, but to select the minimal set of test types that adequately validate core flows and address the highest risks. In general, teams use a combination of unit testing, API testing, integration testing, and selected end-to-end tests based on the complexity of the features. Exploratory testing may also be part of the mix to catch what automation misses. The result is a lean but balanced test suite.

Example: For a microservices-based feature, a team might rely heavily on API tests and a single end-to-end scenario for the critical flow, rather than dozens of UI-based E2E tests.

Integrating Automation into CI/CD Pipelines

Automation is critical to achieving MVT at scale, particularly in rapid development cycles. Only the most critical and stable tests are promoted to CI/CD for fast, reliable feedback. This keeps the pipeline from getting bogged down by bloated test suites or flaky automation. With minimal but effective automation, teams support continuous delivery without creating obstacles.

Example: A CI pipeline may run unit tests on every commit, API tests on every build, and just two or three end-to-end tests before deployment to staging.
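The staging described in this example can be captured as a small mapping from pipeline stage to test tier. This is a sketch with hypothetical stage and tier names, not the configuration format of any particular CI tool; the end-to-end budget of three is an assumption based on the "two or three" above.

```python
# Hypothetical stage and tier names; each pipeline stage runs one test tier.
STAGE_TIERS = {
    "commit": ["unit"],
    "build": ["api"],
    "pre_staging": ["e2e_critical"],
}

E2E_BUDGET = 3  # assumed cap on end-to-end tests


def tiers_for(stage):
    """Return the test tiers to run at a given pipeline stage."""
    if stage not in STAGE_TIERS:
        raise ValueError(f"unknown pipeline stage: {stage}")
    return STAGE_TIERS[stage]


def within_e2e_budget(e2e_tests, budget=E2E_BUDGET):
    """True if the end-to-end tier has not grown past its agreed size."""
    return len(e2e_tests) <= budget


print(tiers_for("commit"))                                    # ['unit']
print(within_e2e_budget(["place_order", "login", "refund"]))  # True
```

A budget check like this is one way to stop the end-to-end tier from quietly growing back into a bottleneck.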

Releasing with Monitoring and Observability

Because MVT encourages limited pre-release testing, strong observability serves as a critical safety net. With monitoring, a team can quickly detect problems that arise after deployment and intervene before they affect a large percentage of users.

Example: Dashboards tracking error rates, latency, and user behavior help detect if a new feature release causes increased checkout failures or login timeouts.
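A post-release check of this kind often boils down to comparing the current error rate against a pre-release baseline. The sketch below is a simplified illustration: the function names, the 2x ratio, and the 1% floor are assumptions, and a real setup would normally rely on the alerting rules of its monitoring platform.

```python
def error_rate(errors, requests):
    """Fraction of failed requests; 0.0 when there is no traffic."""
    return errors / requests if requests else 0.0


def should_alert(baseline_rate, current_rate, max_ratio=2.0, min_rate=0.01):
    """Alert when the current rate is at least min_rate and more than
    max_ratio times the baseline. The floor avoids alerts on tiny rates."""
    return current_rate >= min_rate and current_rate > baseline_rate * max_ratio


# Hypothetical checkout traffic before and after a release.
baseline = error_rate(5, 1000)   # 0.5% failures before the release
current = error_rate(30, 1000)   # 3% failures after the release

print(should_alert(baseline, current))  # True
```

When a check like this fires shortly after a deployment, the team can roll back or disable the feature before most users are affected.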

Read: How to Build a Test Automation Framework.

Challenges in Implementing Minimum Viable Testing

Introducing MVT can be hard because it forces people to reconsider what good testing is. Organizations must move from validating everything to strategic, risk-based testing, which takes both cultural and technical maturity. These challenges need to be managed carefully to make MVT effective and sustainable.

- Defining the “Minimum” Testing Scope: Deciding how much testing is really required can be subjective and depends on the feature, its risk, and the stage of the product. Teams constantly grapple with the balance between safety and speed when choosing what to test and what to skip.

- Overcoming Cultural Resistance: Many teams are accustomed to testing everything and may fear that testing less introduces unacceptable risk. Shifting this mindset requires trust, education, and evidence showing that lean testing can still maintain quality.

- Relying on Strong Automation Foundations: MVT relies on automation that is stable, fast, and reliable. Without it, cutting down manual testing becomes dangerous. MVT is hard to implement for teams with brittle or partial automation suites.

- Ensuring Adequate Monitoring Post-Release: Because MVT reduces pre-release testing, robust monitoring and observability are essential for discovering production issues quickly. Without them, minor bugs can escalate before teams have enough telemetry to notice.

- Addressing Communication Gaps Across Teams: MVT needs close collaboration between QA, development, product, and DevOps teams, who together define risks, priorities, and acceptance criteria. Any confusion can lead to misunderstandings about release readiness or acceptable risk levels.

How testRigor Helps Overcome This Challenge

Many organizations struggle with MVT because their existing automation is flaky or code-heavy. testRigor solves this by providing an extremely stable, no-code automation platform where tests self-heal and require little upkeep. This gives teams the confidence to lean into MVT without compromising quality.

Read: What are Flaky Tests in Software Testing? Causes, Impacts, and Solutions.

When Not to Use Minimum Viable Testing

Although Minimum Viable Testing offers gains in speed and efficiency, it is not suitable for every type of product and environment. In some industry sectors, validation processes must be significantly more stringent because of safety, regulatory, or operational concerns. In these circumstances, reducing testing to a minimal level could have unacceptable consequences.

- Safety-Critical Environments: Systems affecting human safety, like medical devices, aviation software, and automotive controls, need to be thoroughly tested. MVT is not appropriate for them, because even small failures can have life-threatening consequences.

- Regulatory or Compliance-Heavy Industries: Highly regulated industries require thorough testing, documentation, and audit trails. MVT cannot replace the depth of validation required for compliance in finance, healthcare, or government.

- Low Automation Maturity: Organizations with weak, flaky, or incomplete automation cannot safely adopt MVT. Without reliable automated coverage, minimizing manual testing significantly increases the risk of undetected defects.

- No Monitoring or Telemetry Systems: Since MVT depends heavily on post-release monitoring, it will fail without strong observability. Without real-time logging, alerting, or error tracking, teams can’t see problems until they affect hundreds of users.

Conclusion

Minimum Viable Testing is a realistic, contemporary approach to delivering high-quality software in today’s fast-moving engineering environment. By concentrating on what’s really important, such as core value, critical journeys, and the highest-risk areas, teams can move faster without sacrificing user trust or product stability. Tools like testRigor make Minimum Viable Testing far more achievable by providing fast, resilient, and highly maintainable test automation.

MVT is not about testing less but about testing smarter, with tighter and better automation, good monitoring, and continuous learning. When applied thoughtfully and in appropriate contexts, it’s a strong enabler of agility, efficiency, and sustained product excellence.
