What is Performance Testing: Types and Examples


As technology advances, application performance has become one of the key factors that determine user satisfaction and business success. Performance testing is an essential tool for meeting this bar, because it evaluates how software behaves under defined workloads. Its methods help teams discover bottlenecks, assess system reliability, and optimize resource utilization.

In this article, we cover what performance testing is, its main types, and examples from software engineering that show why it matters.

What is Performance Testing?

Performance testing is a specialized area of software testing that assesses the responsiveness, speed, stability, scalability, and resource usage of an app under a specified workload. Functional testing confirms the software does what it is supposed to do, whereas performance testing validates how well an application performs in terms of responsiveness and stability under a particular workload (normal usage, high load, prolonged activity, etc.).

Performance testing is a key part of the software development lifecycle (SDLC) as it ensures that applications are performing up to expectations, delivering a seamless user experience, and ultimately aligning with business goals.

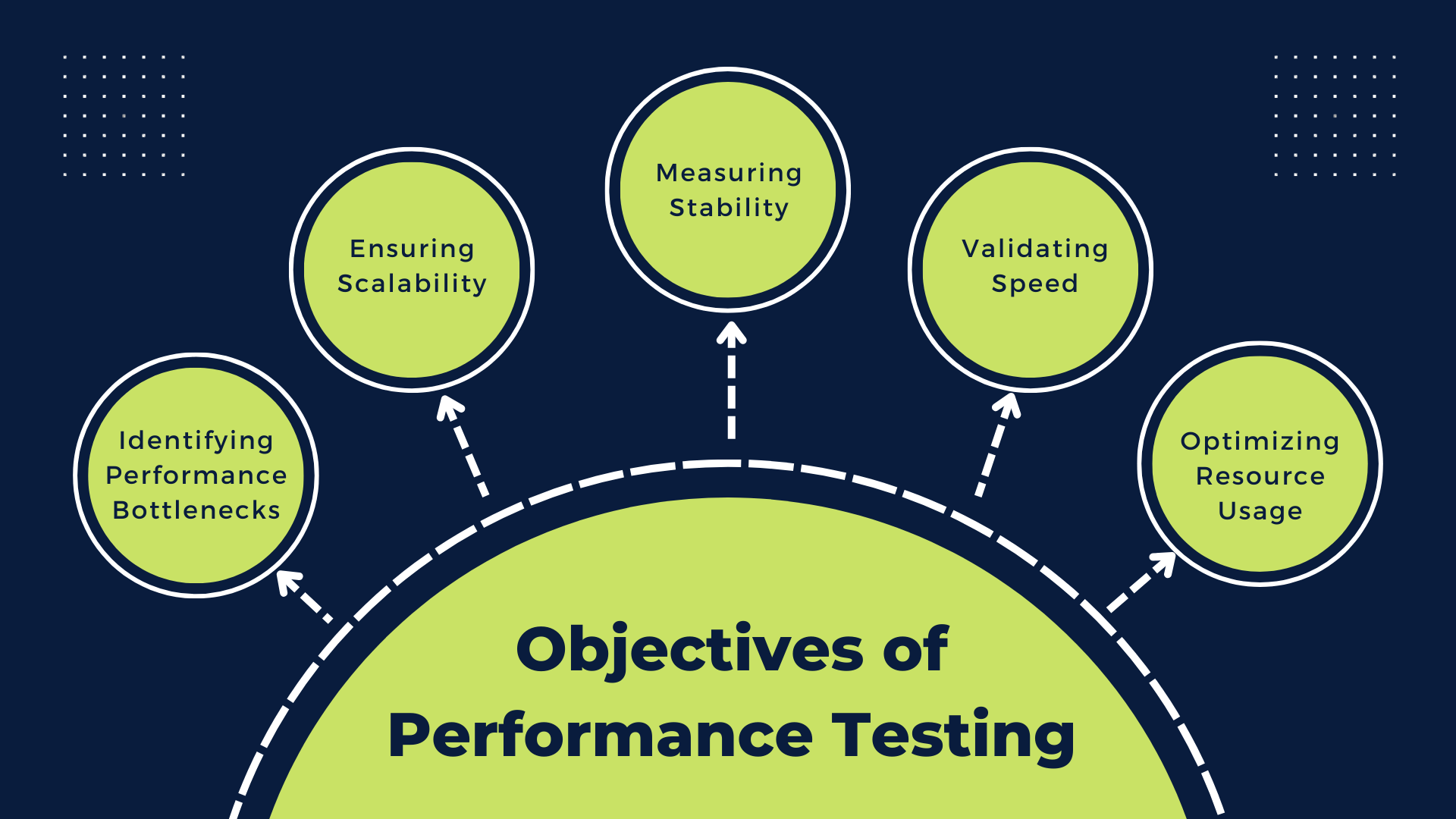

Objectives of Performance Testing

Software performance testing verifies that an application meets its required performance levels under a variety of conditions. Let’s discuss the primary objectives of performance testing in detail:

Identifying Performance Bottlenecks

The main goal of performance testing is to figure out what areas degrade performance when the application is under load. Some examples include inefficient code, costly database queries, server misconfigurations, or hardware constraints. Organizations can then solve these issues proactively through targeted optimizations, effectively boosting the overall performance of an application.

Ensuring Scalability

Scalability refers to an application’s capacity to handle growing workloads while maintaining acceptable performance. A performance test checks whether the system can scale up (for more users, transactions, or data) or scale down without losing efficiency. Scalability is essential if your application is expected to grow or must deal with unpredictable traffic.

Measuring Stability

Stability refers to the application’s continued reliability and performance during extended use or under stressful conditions. Performance testing examines whether the system keeps functioning correctly over a long period without crashing, leaking memory, or slowing down. Stability testing is essential for real-time applications such as online tools and for other business-critical IT systems.

Validating Speed

Speed has a huge impact on user experience. Performance testing ensures that the response times of user actions, API calls, and system processing meet the defined business and user requirements. It emphasizes metrics such as response time, transaction time, and page load time, confirming that the system runs quickly and efficiently under expected workloads.

Optimizing Resource Usage

Proper utilization of system resources such as CPU, memory, network bandwidth, and storage is an essential aspect of performance testing. Measuring resource usage helps teams catch misconfigurations, reduce operating costs, and keep the system stable under heavy loads.

Why is Performance Testing Important?

Performance testing is an essential part of the software development lifecycle, ensuring that applications provide the expected level of performance across various conditions. Its importance comes from its direct effect on user experience, business continuity, and operational reliability. Let’s look at the main reasons why performance testing matters:

User Experience

Performance testing is key to delivering a smooth and responsive UI. When applications are slow, laggy, or unresponsive, users become frustrated and dissatisfied. In today’s digital world, users expect near-instant responses and a smooth experience from software applications. Read: UX Testing: What, Why, How, with Examples.

Brand Reputation

Recurring performance issues can tarnish a company’s reputation. The effect is even stronger in highly competitive industries, where users have plenty of alternatives. A single instance of downtime or poor performance during a major event can result in negative press coverage and damage customer trust.

Revenue Impact

For many businesses, application performance correlates directly with revenue. If a critical system does not perform properly, the financial consequences can be severe, especially for systems that depend on live user transactions or viewer engagement.

Prevent Failures

Detecting and correcting performance problems early in the development process will prevent production failures. Performance testing helps you identify bottlenecks and resource constraints to avoid crashes or outages under load conditions.

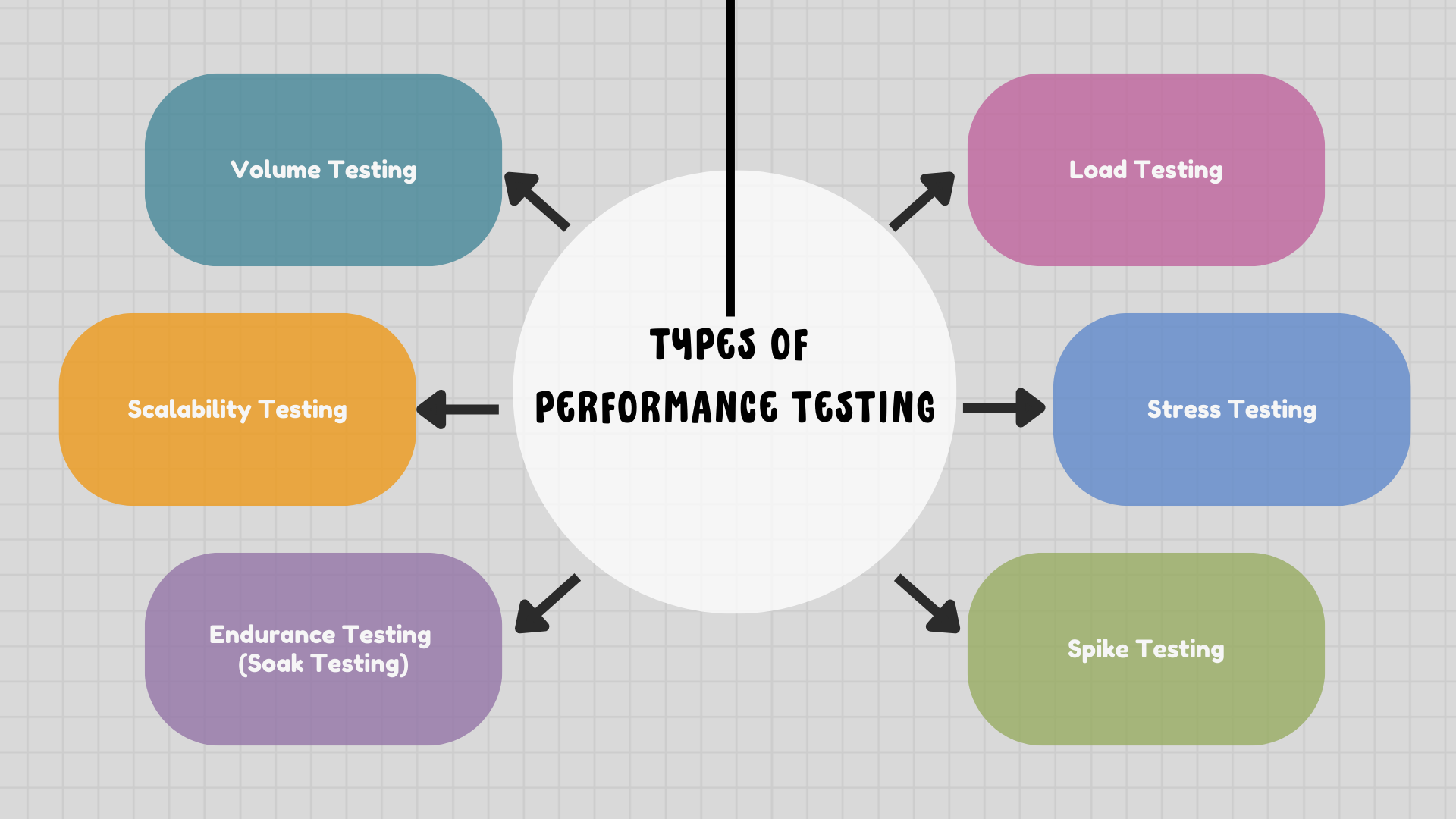

Types of Performance Testing

Performance testing encompasses various methodologies, each tailored to a different aspect of system performance. Each type targets specific performance challenges to ensure that the application operates well under varying conditions. Below is a detailed explanation of the key types of performance testing:

Load Testing

Objective: To confirm that the system can handle anticipated user activity without performance degradation, ensuring smooth operations.

Load testing is used to see how a system behaves under normal or expected load. This type of test verifies that the application functions as expected under normal levels of traffic or transactions.

Imagine an e-commerce website preparing for daily operations. Load testing simulates 10,000 concurrent users browsing products, adding items to carts, and completing purchases. Response times are tracked throughout the test, and the results show that search queries take more than 2 seconds because of improper indexing in the database. Fixing the indexing optimizes regular operations for the best user experience.

Key Focus Areas:

- Response Time: How quickly the application responds to user actions.

- Throughput: The number of requests the system can process in a given time frame.

- Resource Utilization: Monitoring CPU, memory, and network usage during the test.
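The first two focus areas above can be measured with a very small harness. The following Python sketch is illustrative only: `handle_request` and `run_load_test` are hypothetical names, and the 10 ms sleep stands in for a real call to the system under test. It runs a fixed number of concurrent virtual users, then reports average and maximum response time and overall throughput. A test at the scale of the 10,000-user example would normally use a dedicated load-testing tool rather than one thread per user, and resource utilization would be monitored on the server side.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> float:
    """Hypothetical stand-in for one user action (e.g., a product search).

    A real test would send an HTTP request here; this sketch sleeps for
    10 ms to simulate server work. Returns the response time in seconds.
    """
    start = time.perf_counter()
    time.sleep(0.01)  # simulated server processing time
    return time.perf_counter() - start


def run_load_test(concurrent_users: int, requests_per_user: int) -> dict:
    """Drive an expected (not extreme) load and summarize the results."""

    def user_session(user_id: int) -> list:
        # Each virtual user issues its requests one after another.
        return [handle_request(user_id) for _ in range(requests_per_user)]

    wall_start = time.perf_counter()
    # One worker thread per virtual user so all sessions run concurrently.
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = list(pool.map(user_session, range(concurrent_users)))
    elapsed = time.perf_counter() - wall_start

    timings = [t for session in sessions for t in session]
    return {
        "requests": len(timings),
        "avg_response_s": sum(timings) / len(timings),
        "max_response_s": max(timings),
        "throughput_rps": len(timings) / elapsed,
    }


if __name__ == "__main__":
    print(run_load_test(concurrent_users=50, requests_per_user=5))
```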

Stress Testing

Objective: To discover the boundaries of the system’s performance ranges and make certain that it is able to recover gracefully after extreme loads.

Stress testing analyzes how the system performs in extreme or adverse conditions. It also determines the application’s breaking point and measures its ability to recover after failure.

A ticket-booking platform expects a surge in activity when a flash sale goes live for a popular concert. The stress test simulates half a million simultaneous ticket-purchasing users, 10 times the expected traffic. The test shows that the payment gateway crashes once load exceeds 400,000 users. The team then makes the system more resilient so it can handle such situations more effectively in the future.

Key Focus Areas:

- Maximum Load Capacity: Determining the system’s threshold for traffic or transactions.

- System Recovery: Observing how the system behaves and recovers after exceeding its limits.

- Failure Behavior: Ensuring that failure modes (e.g., error messages) are handled gracefully.
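A common way to find the breaking point is to raise the load in steps until the error rate crosses a threshold. The sketch below assumes a toy model of the system (`simulated_gateway`, hypothetical) that starts failing once load exceeds a fixed capacity; the 400,000 capacity and 5% threshold echo the ticket-booking example and are assumptions, not recommendations. In a real stress test, each step would drive actual traffic, and the team would also watch how the system recovers once load is reduced.

```python
import random
from typing import Optional


def simulated_gateway(users: int, capacity: int, rng: random.Random) -> bool:
    """Hypothetical model of the system under test.

    Every request succeeds while load stays at or below `capacity`; beyond
    it, the failure probability grows with the size of the overload.
    Returns True if the simulated request succeeded.
    """
    if users <= capacity:
        return True
    overload = (users - capacity) / capacity
    return rng.random() >= min(1.0, overload)


def find_breaking_point(start: int, step: int, max_users: int, capacity: int,
                        error_threshold: float = 0.05,
                        samples: int = 1000) -> Optional[int]:
    """Raise the load step by step; return the first level whose error rate
    exceeds `error_threshold`, or None if no level up to `max_users` does."""
    rng = random.Random(42)  # fixed seed keeps runs reproducible
    users = start
    while users <= max_users:
        failures = sum(not simulated_gateway(users, capacity, rng)
                       for _ in range(samples))
        error_rate = failures / samples
        print(f"{users:>9,} users -> error rate {error_rate:.1%}")
        if error_rate > error_threshold:
            return users
        users += step
    return None


if __name__ == "__main__":
    # Mirrors the ticket-booking example: capacity of 400,000, pushed to 500,000.
    print("Breaking point:", find_breaking_point(100_000, 100_000, 500_000, 400_000))
```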

Spike Testing

Objective: To make sure that the system handles unpredictable, bursty traffic (i.e., sharp increases in traffic over a short period of time) gracefully.

Spike testing is an important subset of stress testing that concentrates on sudden and significant load increases. It assesses the system’s ability to handle sudden traffic spikes and return to normal once the spike dissipates.

A viral marketing campaign is launched on a social media platform, and traffic spikes 500% within a few minutes. Spike testing simulates this scenario and shows that the server takes too long to allocate resources during the spike. After the appropriate adjustments, the platform scales quickly enough that sudden surges no longer cause delays or crashes.

Key Focus Areas:

- Scalability: How quickly the system scales up to manage sudden load spikes.

- Recovery Time: The speed at which the application stabilizes after the spike.

- Error Handling: Observing whether the system provides meaningful error messages during overload.
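Spike tests are usually driven by a load profile that holds a baseline, jumps sharply, and then drops back. The Python sketch below builds such a profile second by second and computes a simple recovery time from per-second latency measurements. The function names, the 200 ms threshold, and all numbers are hypothetical; note that `spike_multiplier=6.0` corresponds to a 500% increase over the baseline.

```python
from typing import List, Optional


def spike_profile(baseline: int, spike_multiplier: float, duration_s: int,
                  spike_start_s: int, spike_length_s: int) -> List[int]:
    """Target number of virtual users for each second of the test."""
    profile = []
    for second in range(duration_s):
        in_spike = spike_start_s <= second < spike_start_s + spike_length_s
        profile.append(round(baseline * spike_multiplier) if in_spike else baseline)
    return profile


def recovery_time(latencies_ms: List[float], spike_end_s: int,
                  threshold_ms: float) -> Optional[int]:
    """Seconds after the spike ends until per-second latency first drops back
    to `threshold_ms` or below; None if it never recovers within the data."""
    for second in range(spike_end_s, len(latencies_ms)):
        if latencies_ms[second] <= threshold_ms:
            return second - spike_end_s
    return None


if __name__ == "__main__":
    # 100 baseline users, spiking to 600 (a 500% increase) for seconds 3 and 4.
    print(spike_profile(baseline=100, spike_multiplier=6.0, duration_s=10,
                        spike_start_s=3, spike_length_s=2))
    # Illustrative latencies: the spike ends at second 5 (3 + 2).
    print(recovery_time([100, 100, 100, 900, 950, 700, 300, 120, 110, 100],
                        spike_end_s=5, threshold_ms=200))
```

The spike ends at second `spike_start_s + spike_length_s`, which is the value to pass as `spike_end_s`.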

Endurance Testing (Soak Testing)

Objective: To evaluate the reliability and efficacy of the system with prolonged use.

Endurance testing examines how the application behaves under a constant load over a long period of time. It is useful for diagnosing long-term problems such as memory leaks or gradual performance degradation.

A streaming service runs an endurance test that simulates 72 hours of continuous video playback for 50,000 simultaneous users. The test detects a memory leak in the streaming server that degrades performance 24 hours into the test. Fixing the leak allows the platform to remain stable over extended periods of use. Read: Automated Testing for Real-Time Streaming Applications.

Key Focus Areas:

- Stability Over Time: Ensuring the application remains reliable during prolonged use.

- Memory Usage: Detecting memory leaks or inefficient memory allocation.

- Resource Efficiency: Monitoring CPU, disk, and network usage over time.
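One practical way to spot a leak in soak-test data is to fit a trend line to periodic memory samples and flag a sustained upward slope. The sketch below assumes memory is sampled in megabytes at regular intervals; `looks_like_leak` and its 5 MB-per-hour limit are hypothetical choices that a team would tune to its own system.

```python
from typing import Sequence


def linear_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def looks_like_leak(hours: Sequence[float], memory_mb: Sequence[float],
                    max_growth_mb_per_hour: float = 5.0) -> bool:
    """Flag a probable leak when memory trends upward faster than allowed.

    Fitting a trend line, instead of comparing only the first and last
    samples, makes the check less sensitive to garbage-collection sawtooth.
    """
    return linear_slope(hours, memory_mb) > max_growth_mb_per_hour


if __name__ == "__main__":
    hours = list(range(0, 73, 6))  # one sample every 6 hours over 72 hours
    steady = [2048 + (i % 2) * 10 for i in range(len(hours))]  # GC-like wobble
    leaking = [2048 + 20 * h for h in hours]  # grows 20 MB per hour
    print(looks_like_leak(hours, steady), looks_like_leak(hours, leaking))
```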

Scalability Testing

Objective: To get the application ready for future growth without requiring excessive redesign or performance loss.

Scalability testing analyzes the application’s behavior as the workload increases or decreases. It makes sure that the system can scale up (that is, handle more users or data) and scale down (use fewer resources efficiently) when required.

A cloud-based customer relationship management (CRM) platform plans to grow its user base from 1,000 to 50,000 users within 12 months. Scalability testing that simulates this growth reveals that database query performance begins to degrade at around 30,000 users. To prevent performance issues as the system scales, the team uses database sharding to partition its data.

Key Focus Areas:

- Horizontal Scaling: Adding more servers or nodes to distribute the workload.

- Vertical Scaling: Increasing hardware resources (e.g., more memory or CPU) to improve performance.

- Performance Consistency: Verifying that response times and throughput remain stable as the workload scales.
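For horizontal scaling, a simple consistency check is scaling efficiency: the throughput gained when adding nodes, divided by the ideal linear gain. The sketch below computes it from (node count, transactions per second) pairs; the function names, the 70% threshold, and the sample numbers are illustrative assumptions rather than measured results.

```python
from typing import List, Tuple


def scaling_efficiency(base_nodes: int, base_tps: float,
                       nodes: int, tps: float) -> float:
    """Measured speedup divided by ideal linear speedup (1.0 = perfect)."""
    speedup = tps / base_tps
    ideal_speedup = nodes / base_nodes
    return speedup / ideal_speedup


def flag_poor_scaling(measurements: List[Tuple[int, float]],
                      min_efficiency: float = 0.7) -> List[int]:
    """Return node counts whose efficiency falls below `min_efficiency`.

    `measurements` holds (node_count, measured_tps) pairs; the first pair is
    treated as the baseline.
    """
    base_nodes, base_tps = measurements[0]
    flagged = []
    for nodes, tps in measurements:
        eff = scaling_efficiency(base_nodes, base_tps, nodes, tps)
        print(f"{nodes} nodes: {tps:.0f} TPS, efficiency {eff:.0%}")
        if eff < min_efficiency:
            flagged.append(nodes)
    return flagged


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from a real system.
    print(flag_poor_scaling([(1, 500), (2, 980), (4, 1600), (8, 2400)]))
```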

Volume Testing

Objective: To ensure the system is scalable and does not have any severe performance bottlenecks with large datasets.

Volume testing measures how the system deals with large volumes of data. It checks whether the application stores and retrieves data efficiently without compromising performance.

An analytics platform analyzes a dataset with billions of records to return insights to users. Performance testing shows that some analytics database queries take more than 10 minutes to run, indicating unoptimized database joins. Indexing and partitioning the data brings query response times down considerably, so end users get insights much faster.

Key Focus Areas:

- Database Performance: Measuring query response times with large datasets.

- Storage Management: Ensuring efficient use of storage resources.

- Data Handling Logic: Verifying that application logic functions correctly with high data volumes.
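The effect of indexing on a large table can be reproduced on a small scale. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in for the analytics store; the `events` table, its columns, and the row counts are hypothetical, and absolute timings will vary by machine. It compares the query plan and time for the same lookup before and after adding an index. It covers indexing only, not the partitioning mentioned in the example.

```python
import random
import sqlite3
import time


def build_events_table(conn: sqlite3.Connection, rows: int) -> None:
    """Fill a hypothetical `events` table with synthetic rows."""
    conn.execute(
        "CREATE TABLE events (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    rng = random.Random(7)  # fixed seed for repeatable data
    conn.executemany(
        "INSERT INTO events (customer_id, amount) VALUES (?, ?)",
        ((rng.randrange(10_000), rng.random() * 100) for _ in range(rows)),
    )
    conn.commit()


def timed_query(conn: sqlite3.Connection, customer_id: int):
    """Return (elapsed seconds, SQLite query plan text, query result)."""
    sql = "SELECT COUNT(*), SUM(amount) FROM events WHERE customer_id = ?"
    plan_rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (customer_id,)).fetchall()
    plan = " ".join(row[3] for row in plan_rows)  # column 3 holds the plan detail
    start = time.perf_counter()
    result = conn.execute(sql, (customer_id,)).fetchone()
    return time.perf_counter() - start, plan, result


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_events_table(conn, rows=200_000)
    before_s, before_plan, _ = timed_query(conn, 42)
    conn.execute("CREATE INDEX idx_events_customer ON events (customer_id)")
    after_s, after_plan, _ = timed_query(conn, 42)
    print(f"without index: {before_s * 1000:.2f} ms ({before_plan})")
    print(f"with index:    {after_s * 1000:.2f} ms ({after_plan})")
```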

Software Performance Testing Metrics

Performance testing involves measuring various metrics to evaluate how well a system performs under specific workloads. Each metric provides unique insights into the application’s behavior, helping identify potential bottlenecks and inefficiencies. Below is a detailed explanation of the key performance testing metrics:

Response Time

Response time is the duration between a user sending a request and receiving a response from the system. It is one of the most critical metrics as it directly impacts user experience. Applications with fast response times ensure smoother interactions, while slow response times can lead to frustration and user churn. Response time is typically measured in milliseconds or seconds and is closely monitored to meet user expectations and service level agreements (SLAs). For example, in e-commerce platforms, a delay of even a few seconds in page response can significantly impact sales and conversions.
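Because averages hide slow outliers, response-time targets in SLAs are often stated as percentiles such as p95 or p99. The sketch below uses the nearest-rank method; the function names, the p95 limit, and the sample data are hypothetical. Other percentile definitions (for example, interpolating between samples) give slightly different values on small datasets.

```python
import math
from typing import Sequence


def percentile(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    `pct` percent of all samples are less than or equal to it."""
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_sla(samples_ms: Sequence[float], p95_limit_ms: float) -> bool:
    """True if the 95th-percentile response time is within the limit."""
    return percentile(samples_ms, 95) <= p95_limit_ms


if __name__ == "__main__":
    samples = [120, 135, 110, 980, 140, 125, 130, 115, 145, 2100]  # illustrative
    print("p50:", percentile(samples, 50), "p95:", percentile(samples, 95))
    print("mean:", sum(samples) / len(samples))
    print("meets 500 ms p95 SLA:", meets_sla(samples, 500))
```

In the sample data, two slow requests pull the mean far above the median, which is why percentiles are usually reported alongside or instead of the average.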

Throughput

Throughput refers to the number of requests or transactions the system can process in a given time frame, usually measured in requests per second (RPS) or transactions per second (TPS). It provides an understanding of the system’s capacity to handle workloads. High throughput indicates that the application can efficiently process a large volume of user requests, making it essential for systems with high traffic demands, such as streaming platforms or online banking services. Throughput is often analyzed alongside response time to ensure the system maintains performance as workload increases.
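Throughput, response time, and concurrency are linked. For a closed workload, where each virtual user waits for a response and then pauses for a think time, the interactive response time law (a form of Little’s Law) gives X = N / (R + Z), where X is throughput, N is the number of concurrent users, R is the average response time, and Z is the average think time. The helpers below are hypothetical names wrapping that formula, useful for sanity-checking whether a planned test can reach a target throughput at all.

```python
import math


def expected_throughput(users: int, response_s: float, think_s: float) -> float:
    """Steady-state requests per second for a closed workload: X = N / (R + Z)."""
    return users / (response_s + think_s)


def users_needed(target_rps: float, response_s: float, think_s: float) -> int:
    """Virtual users required to sustain `target_rps`: N = X * (R + Z), rounded up."""
    return math.ceil(target_rps * (response_s + think_s))


if __name__ == "__main__":
    # Illustrative inputs: 0.5 s responses and 4.5 s think time per request.
    print(expected_throughput(1000, 0.5, 4.5))
    print(users_needed(200, 0.5, 4.5))
```

For a fixed number of users, any increase in response time lowers the achievable throughput, which is one reason the two metrics are analyzed together. The formula assumes a steady state, so it describes the middle of a test rather than ramp-up or ramp-down.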

Error Rate

Error rate is the percentage of requests that result in errors during a test. This metric is crucial for assessing the reliability of the application under load. Errors can include server-side issues (e.g., HTTP 500 errors), client-side errors (e.g., HTTP 400 errors), or timeout issues. A high error rate indicates that the application is failing to handle the workload effectively, which can lead to user dissatisfaction and operational problems. Monitoring and reducing the error rate ensures that the system is reliable and meets the expected performance benchmarks.
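The sketch below computes an error rate from a list of results and breaks errors down by the categories named above. It assumes each result is an HTTP status code, with `None` representing a timeout; the function names are hypothetical. It counts every 4xx response as an error, which a team might relax when some 4xx responses are expected (for example, in negative tests).

```python
from collections import Counter
from typing import Dict, Optional, Sequence


def classify(status: Optional[int]) -> str:
    """Map one result to a category; None means the request timed out."""
    if status is None:
        return "timeout"
    if 500 <= status <= 599:
        return "server_error"
    if 400 <= status <= 499:
        return "client_error"
    return "ok"


def error_report(results: Sequence[Optional[int]]) -> Dict[str, object]:
    """Overall error rate plus a per-category breakdown."""
    counts = Counter(classify(r) for r in results)
    errors = len(results) - counts["ok"]
    return {"error_rate": errors / len(results), "breakdown": dict(counts)}


if __name__ == "__main__":
    # Illustrative run: 95 successes, three 500s, one 404, one timeout.
    print(error_report([200] * 95 + [500] * 3 + [404] + [None]))
```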

CPU and Memory Utilization

CPU and memory utilization measure the percentage of system resources consumed during testing. High utilization levels may indicate that the system is struggling to handle the workload and may lead to performance degradation or crashes. By analyzing resource usage, teams can identify inefficiencies in code, database queries, or server configurations. For instance, excessive CPU usage might point to poorly optimized algorithms, while high memory consumption could indicate memory leaks. Maintaining optimal resource utilization ensures the application runs smoothly without overburdening the infrastructure.
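For a single code path, CPU time and peak memory can be measured in-process with the Python standard library, as in the sketch below. `profile_call` and `build_squares` are hypothetical names. `tracemalloc` only tracks memory allocated through Python, not the whole process, and server-wide CPU and memory during a load test would normally come from operating-system or monitoring tools instead.

```python
import time
import tracemalloc
from typing import Any, Callable, Dict, Tuple


def profile_call(fn: Callable[..., Any], *args: Any) -> Tuple[Any, Dict[str, float]]:
    """Run fn(*args) and report wall time, CPU time, and peak Python memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # user + system CPU time of this process
    try:
        result = fn(*args)
    finally:
        cpu_s = time.process_time() - cpu_start
        wall_s = time.perf_counter() - wall_start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    stats = {
        "wall_s": wall_s,
        "cpu_s": cpu_s,
        # Near 1.0: CPU-bound on one core. Near 0: mostly waiting (I/O, sleeps).
        # Can exceed 1.0 if other threads in the process use other cores.
        "cpu_utilization": cpu_s / wall_s if wall_s > 0 else 0.0,
        "peak_python_mem_bytes": float(peak_bytes),
    }
    return result, stats


def build_squares(n: int) -> int:
    """Hypothetical workload that deliberately materializes a large list."""
    squares = [i * i for i in range(n)]
    return sum(squares)


if __name__ == "__main__":
    total, stats = profile_call(build_squares, 1_000_000)
    print(stats)
```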

Latency

Latency is the delay between a user’s request and the system beginning to process it. It is a key factor in real-time applications where low latency is critical, such as gaming, video conferencing, or stock trading platforms. Unlike response time, which measures the entire duration of a request cycle, latency focuses on the initial delay before the system processes the request. High latency can indicate network issues, server overload, or inefficient system architecture. Reducing latency is vital for delivering a responsive user experience.
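In practice, latency is often approximated as the time until the first byte (or chunk) of a response arrives, while response time runs until the last one. The sketch below uses a hypothetical generator, `streaming_endpoint`, with made-up delays to show the difference. Time to first byte also includes some server processing, so it is only an approximation of the definition above.

```python
import time
from typing import Callable, Iterator, Optional, Tuple


def streaming_endpoint(chunks: int, initial_delay_s: float,
                       per_chunk_delay_s: float) -> Iterator[bytes]:
    """Hypothetical server: waits before the first chunk, then streams the rest."""
    time.sleep(initial_delay_s)  # e.g., queueing plus connection setup
    for i in range(chunks):
        if i > 0:
            time.sleep(per_chunk_delay_s)  # time to produce each later chunk
        yield b"x" * 1024


def measure(open_stream: Callable[[], Iterator[bytes]]) -> Tuple[Optional[float], float]:
    """Return (latency, response time): time to the first chunk vs. the last one."""
    start = time.perf_counter()
    latency = None
    for _ in open_stream():
        if latency is None:
            latency = time.perf_counter() - start
    return latency, time.perf_counter() - start


if __name__ == "__main__":
    latency_s, response_s = measure(lambda: streaming_endpoint(5, 0.05, 0.02))
    print(f"latency {latency_s * 1000:.0f} ms, response time {response_s * 1000:.0f} ms")
```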

Transaction Time

Transaction time measures the total time taken for a specific business transaction to complete. This metric is especially important for applications involving multi-step processes, such as placing an order on an e-commerce site or transferring funds in a banking application. Transaction time includes all individual steps, such as database interactions, server-side processing, and client-side rendering. Monitoring transaction time helps ensure that critical workflows meet performance expectations, providing a seamless experience for users during complex operations.
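A transaction timer that records both the end-to-end duration and each step makes it easy to see which part of a multi-step workflow dominates. The sketch below is a minimal, hypothetical `TransactionTimer` built on the standard library; the checkout step names are illustrative, and the sleeps stand in for real database, server, or rendering work.

```python
import time
from contextlib import contextmanager
from typing import Iterator, List, Optional, Tuple


class TransactionTimer:
    """Times one business transaction end to end and each of its steps."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.steps: List[Tuple[str, float]] = []
        self.total_s: Optional[float] = None

    def __enter__(self) -> "TransactionTimer":
        self._start = time.perf_counter()
        return self

    def __exit__(self, *exc_info) -> bool:
        self.total_s = time.perf_counter() - self._start
        return False  # never swallow exceptions raised inside the transaction

    @contextmanager
    def step(self, label: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.steps.append((label, time.perf_counter() - start))


if __name__ == "__main__":
    # Hypothetical checkout flow; sleeps stand in for real work.
    with TransactionTimer("checkout") as tx:
        with tx.step("validate_cart"):
            time.sleep(0.01)
        with tx.step("charge_payment"):
            time.sleep(0.03)
        with tx.step("render_confirmation"):
            time.sleep(0.005)
    for label, seconds in tx.steps:
        print(f"{label:<20} {seconds * 1000:6.1f} ms")
    print(f"{'total':<20} {tx.total_s * 1000:6.1f} ms")
```

The end-to-end total is measured separately from the steps, so any gap between steps (for example, client-side waiting) still shows up in the transaction time.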

Read more about project-related metrics: Why Project-Related Metrics Matter: A Guide to Results-Driven QA.

Glossary of Terms Used in Performance Testing

Below is a glossary of common terms and concepts used in performance testing to help you understand and communicate effectively about this domain:

- Baseline: The initial set of performance metrics collected to compare against future test results.

- Benchmarking: Comparing the application’s performance against predefined standards or competitors.

- Capacity Planning: The process of determining the infrastructure needed to handle the expected user load.

- CPU Utilization: The percentage of CPU resources used by the application during the test.

- Data Throughput: The volume of data processed by the system in a specified timeframe, usually measured in MB/s.

- Disk I/O: Input/output operations on the disk, reflecting the system’s ability to read and write data efficiently.

- Error Codes: Specific codes returned by the system to indicate issues, such as HTTP 404 (Not Found) or 500 (Internal Server Error).

- Garbage Collection: The process in which the system reclaims memory occupied by objects no longer in use.

- Hits Per Second: The number of HTTP requests sent to the server per second during the test.

- Jitter: Variability in response times over multiple test iterations.

- Load Balancer: A device or software that distributes traffic across multiple servers to ensure reliability and performance.

- Memory Leak: A condition where the application fails to release unused memory, leading to increased memory consumption over time.

- Network Latency: The time it takes for data to travel between the client and server over a network.

- Pacing: The interval or delay between successive transactions performed by virtual users.

- Profiling: The process of analyzing and identifying performance bottlenecks in an application.

- Queue Time: The time spent by a request waiting in a queue before being processed.

- Resource Utilization: The percentage of system resources (CPU, memory, disk, etc.) consumed during a performance test.

- RPS (Requests Per Second): The number of requests a server processes per second, which is used to measure server load.

- Scalability Testing: A test to evaluate the system’s ability to handle increasing workloads effectively.

- Session: A series of interactions between a user and the application during a single connection.

- Session Timeout: The time limit after which an inactive user session is automatically terminated.

- System Under Test (SUT): The application or system being evaluated during performance testing.

- Think Time: The delay or pause simulated by virtual users between consecutive requests.

- TPS (Transactions Per Second): The number of complete transactions processed by the application per second.

- Utilization: A measure of how much of a resource’s capacity (CPU, memory, network, etc.) is in use.

- Warm-Up Period: The initial time allocated for the system to reach a steady state before actual measurements begin.

- Workload Model: A representation of the expected user behavior, including concurrent users, transaction types, and data volume.
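Several of these terms (think time, pacing, warm-up period) interact inside each virtual user’s loop. The sketch below shows one way they might fit together; `run_virtual_user` and its parameters are hypothetical, and real load-testing tools expose these settings in their own ways.

```python
import time
from typing import Callable, List, Sequence


def run_virtual_user(steps: Sequence[Callable[[], None]], iterations: int,
                     pacing_s: float, think_time_s: float,
                     warmup_iterations: int) -> List[float]:
    """Run one virtual user and return response times measured after warm-up.

    - Think time: a pause after each step, mimicking a user reading the page.
    - Pacing: the target start-to-start interval between iterations.
    - Warm-up: the first `warmup_iterations` are executed but not recorded.
    """
    measured: List[float] = []
    for i in range(iterations):
        iteration_start = time.perf_counter()
        for step in steps:
            t0 = time.perf_counter()
            step()
            elapsed = time.perf_counter() - t0
            if i >= warmup_iterations:
                measured.append(elapsed)
            time.sleep(think_time_s)
        # Wait out the rest of the pacing interval; if the iteration ran long,
        # start the next one immediately.
        remaining = pacing_s - (time.perf_counter() - iteration_start)
        if remaining > 0:
            time.sleep(remaining)
    return measured


if __name__ == "__main__":
    fake_request = lambda: time.sleep(0.01)  # stand-in for a real request
    samples = run_virtual_user([fake_request, fake_request], iterations=5,
                               pacing_s=0.1, think_time_s=0.02,
                               warmup_iterations=1)
    print(f"{len(samples)} samples recorded after warm-up")
```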

Challenges in Performance Testing

Performance testing is an essential instrument for ensuring the reliability and scalability of an application, yet it comes with challenges that need to be addressed. These challenges usually arise from the difficulty of simulating real-world scenarios, controlling test environments, and interpreting results accurately. Let’s discuss the key challenges in performance testing in more detail.

- Environment Setup: Creating a testing environment similar to production can be expensive, requiring hardware, software, and network resources configured to emulate real-world conditions. In the absence of the proper setup, test results can misrepresent your production performance.

- Complex Workloads: Real-world user behavior is hard to simulate because applications are used in many different ways, with varied traffic patterns, device types, and network conditions. An unrealistic workload can cause teams to miss real problems or to optimize unnecessarily.

- Monitoring: Analyzing performance metrics and identifying bottlenecks requires expertise and comprehensive monitoring tools. Interpreting large volumes of data, such as response times and resource utilization, is critical to pinpointing performance issues.

- Scalability: Scalability testing of distributed systems often involves simulating large-scale traffic scenarios and managing dependencies between multiple components. Without sufficient scalability testing, systems may struggle to keep up as user demand ramps up.

Conclusion

Performance testing is a crucial part of the software development process that ensures apps perform reliably and meet users’ expectations. By understanding the available types of performance testing, using the right tools, and following performance testing best practices, organizations can build reliable systems that perform well under the conditions they are likely to face.

Performance testing ensures that a business delivers a reliable, quick, and cost-effective experience to users.
