Prompt Engineering in QA and Software Testing


In the age of artificial intelligence (AI) and automation, software testing has evolved considerably. One emerging practice is “prompt engineering,” which is especially relevant when testing AI models such as OpenAI’s. But what exactly is prompt engineering, and how does it fit within the software testing landscape? Let’s look into it, and then see how testRigor uses its model for faster and more efficient test creation.


What is Prompt Engineering?

At its core, prompt engineering involves designing, analyzing, and refining the inputs (or “prompts”) used to elicit responses from AI models, ensuring that the outputs are as desired. Just as a skilled interviewer can frame questions in various ways to get the most accurate answers from a human interviewee, prompt engineers frame their inputs to AI systems in a way that maximizes the accuracy, relevance, and clarity of the system’s outputs.

For many people, the phrase “using prompt engineering” is synonymous with “using ChatGPT”. However, this isn’t necessarily the case, as prompt engineering can be applied to a broad spectrum of models. Additionally, many companies have security concerns about using ChatGPT, which is one of the reasons why testRigor’s AI engine does not use it.

Read: How to Test Prompt Injections?

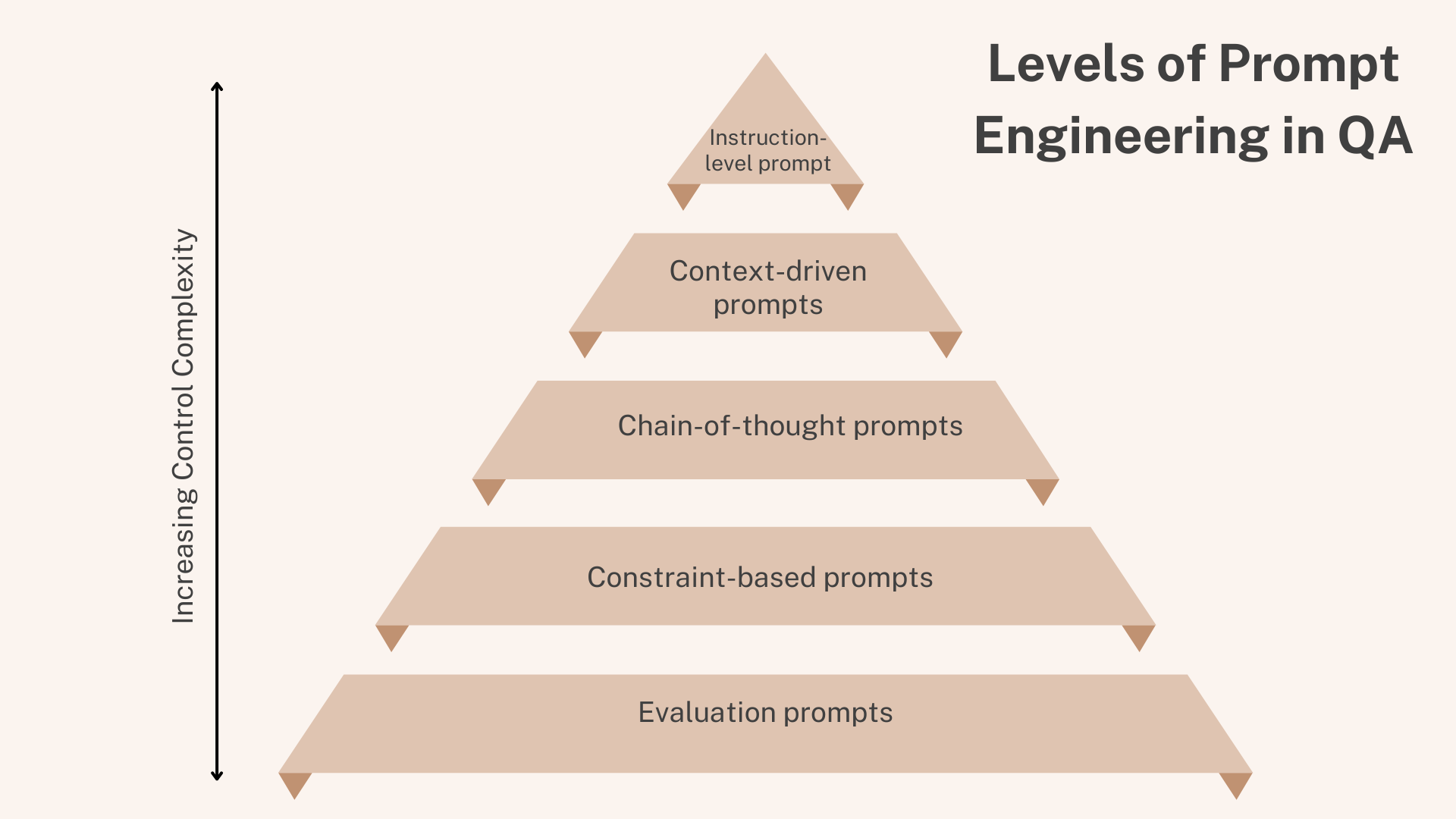

Levels of Prompt Engineering in QA

Prompt engineering in QA progresses from basic task instructions to structured control over how AI reasons, applies context, and evaluates correctness. Recognizing these levels enables QA professionals to design reliable, repeatable, and context-aware AI-driven testing workflows instead of relying on ad-hoc prompts.

- Instruction-level Prompts: Instruction prompts tell the AI exactly what action to perform, such as generating test cases or analyzing requirements. They are useful for simple, well-defined QA tasks but provide limited control over relevance and depth.

- Context-driven Prompts: Context prompts supply background such as application type, user roles, workflows, and data states. This ensures the AI produces test scenarios that align with real-world usage and system behavior.

- Chain-of-thought Prompts: Chain-of-thought prompts guide the AI to reason step by step before producing output. They help uncover complex logic paths, dependencies, and edge cases in multi-step QA scenarios.

- Constraint-based Prompts: Constraint prompts define boundaries by explicitly stating what the AI must not include or assume. They prevent scope creep, invalid test cases, and violations of compliance or testing rules.

- Evaluation Prompts: Evaluation prompts instruct the AI to assess correctness, coverage, or risk instead of generating new content. They enable AI to act as a reviewer, identifying gaps, inconsistencies, or missing test scenarios.
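The levels above can be layered into a single prompt. The following is a minimal Python sketch, not part of testRigor or any specific library: the `build_prompt` helper, its parameters, and the section labels are hypothetical and only illustrate one way the pieces might fit together.

```python
def build_prompt(instruction, context=None, constraints=None,
                 evaluation=None, step_by_step=False):
    """Assemble a layered QA prompt; all section labels are illustrative."""
    sections = [f"Instruction: {instruction}"]
    if context:
        # Context-driven level: application, user role, data state, and so on.
        sections.append("Context:\n" + "\n".join(f"- {k}: {v}" for k, v in context.items()))
    if step_by_step:
        # Chain-of-thought level: ask for reasoning before the final output.
        sections.append("Reasoning: Work through the flow step by step before answering.")
    if constraints:
        # Constraint level: state what must not be included or assumed.
        sections.append("Constraints:\n" + "\n".join(f"- Do not {c}" for c in constraints))
    if evaluation:
        # Evaluation level: ask the AI to review instead of only generating.
        sections.append("Evaluation:\n" + "\n".join(f"- {e}" for e in evaluation))
    return "\n\n".join(sections)


prompt = build_prompt(
    instruction="Generate test cases for the checkout flow.",
    context={"application": "e-commerce web app", "user role": "guest shopper"},
    constraints=["assume the user is logged in", "include payment provider internals"],
    evaluation=["Flag any checkout step that has no negative test."],
    step_by_step=True,
)
print(prompt)
```

Keeping each level in its own labeled section makes it easier to see which part of a prompt to adjust when the output is off.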

Why is Prompt Engineering Crucial in Software Testing?

- Improves Model Understanding: Different prompts shed light on the AI model’s functioning, assisting in troubleshooting and behavior refinement. Read: What are LLMs (Large Language Models)?

- Enhances Model Utility: Consistent and appropriate responses to a broad spectrum of user queries make models like chatbots or virtual assistants more valuable. Prompt engineering is the key.

- Safety and Reliability: It’s imperative to identify and rectify potential problematic outputs for AI models in sensitive applications. Diverse prompts play a pivotal role.

- Real-world Consequences: Inadequately tested AI models, especially in sectors like healthcare or autonomous vehicles, can have grave implications. This emphasizes the necessity of prompt engineering.

- Contingency Measures: It’s beneficial for AI systems to have built-in mechanisms, like deferring to a human or providing generic answers, when faced with unfamiliar prompts.

- Modern AI Failure Modes Addressed by Prompt Engineering: As AI adoption has accelerated, new failure modes have emerged that require deliberate prompt design to control and mitigate.

  - Hallucinations: Constrained prompts, explicit data boundaries, and evaluation criteria help contain hallucinated outputs by limiting what the AI is allowed to infer or invent. Read: What are AI Hallucinations? How to Test?

  - Overgeneralization: Context-specific prompts prevent AI models from applying broad assumptions across unrelated scenarios, which is critical when testing domain-specific applications.

  - Context Drift: Context anchoring within prompts ensures the AI retains earlier decisions, assumptions, and system states throughout multi-step test flows, reducing logical inconsistency. Read: AI Context Explained: Why Context Matters in Artificial Intelligence.

  - Vision Misinterpretation in UI-Based AI: In vision-driven testing, precise prompts help resolve UI ambiguity by clarifying which elements, states, or visual cues the AI should prioritize, minimizing false positives and missed defects. Read: How to do visual testing using testRigor?

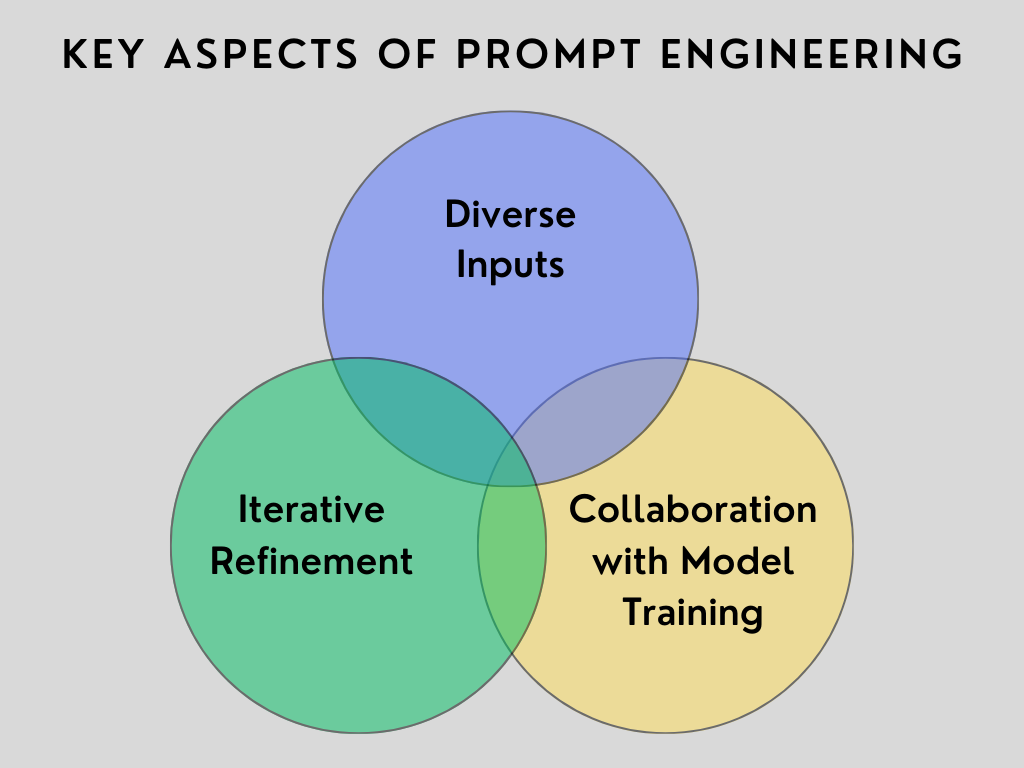

Key Aspects of Prompt Engineering in Software Testing

- Diverse Inputs:

  - Examples: Testing a chatbot requires prompts in different languages, with colloquialisms, and from varied cultural contexts.

  - Impact on Model Fairness: Ensuring models don’t discriminate against specific groups requires testing with diverse demographic inputs.

- Iterative Refinement:

  - Feedback Loop Creation: Continuous improvement is realized when insights from one test cycle inspire the next set of prompts.

  - Integration with Other Testing Methods: Prompt engineering works best when integrated with other methods, like adversarial testing.

- Collaboration with Model Training:

  - Fine-tuning with Custom Prompts: Insights can guide further model refinement. If a style of prompt is consistently misinterpreted, it indicates a training gap.

  - Active Learning: Challenging examples unearthed by prompt engineering can be incorporated into model retraining.
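The feedback loop described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `next_cycle_prompts` helper, the pass/fail dictionary, and the rephrasing templates are all hypothetical, and a real setup would record results from an actual model run.

```python
def next_cycle_prompts(results, rephrasings):
    """Return follow-up prompts for every prompt that failed in the last cycle.

    results: dict mapping prompt text -> True (passed) or False (failed).
    rephrasings: templates containing a `{prompt}` placeholder.
    """
    failed = [p for p, passed in results.items() if not passed]
    return [t.format(prompt=p) for p in failed for t in rephrasings]


# Made-up results from a first test cycle against a support chatbot.
cycle_1 = {
    "What is your refund policy?": True,
    "can i get my money back??": False,
}
rephrasings = [
    "{prompt} (answer in one sentence)",
    "As a first-time customer: {prompt}",
]

cycle_2 = next_cycle_prompts(cycle_1, rephrasings)
for p in cycle_2:
    print(p)
```

Only the colloquial prompt failed, so the second cycle probes that phrasing style more deeply instead of repeating prompts that already pass.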

Comparison with Traditional Testing

Traditional QA methodologies often focus on fixed scenarios with predictable outputs. In contrast, prompt engineering, tailored for AI, accepts and even expects variability. While the former might rely heavily on predefined test cases, the latter leans into adaptability and exploration, navigating the vast landscape of potential AI responses to ensure consistency and reliability.
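One way to accommodate that variability is to assert on properties of a response instead of an exact string. The sketch below assumes a hypothetical `check_response` helper and made-up sample responses; it is an illustration of the idea, not a prescribed method.

```python
def check_response(response, must_include=(), must_not_include=()):
    """Return a list of violated expectations; an empty list means the response passes."""
    text = response.lower()
    problems = [f"missing: {term}" for term in must_include if term.lower() not in text]
    problems += [f"forbidden: {term}" for term in must_not_include if term.lower() in text]
    return problems


# Two differently worded answers to the same question (made-up sample data).
responses = [
    "You can return items within 30 days for a full refund.",
    "Refunds are available for 30 days after purchase.",
]
for r in responses:
    print(check_response(r, must_include=["30 days", "refund"], must_not_include=["guarantee"]))
```

Both answers pass even though their wording differs, while a response that omits the refund window or adds a forbidden claim would be reported.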

Prompt Engineering vs. Traditional Test Case Design

Traditional test case design focuses on defining steps, data, and expected results for a system to be validated by humans or automation frameworks. Prompt engineering serves the same purpose, but expresses test intent in a structured natural language format that an AI system can execute, interpret, and adapt.

In this sense, prompts act as executable test intent, where clarity, structure, and scope determine how accurately the AI performs validation. When prompts are designed with instruction, context, constraints, and evaluation criteria, natural language does not introduce ambiguity. Instead, it replaces brittle scripts with resilient, intent-driven test definitions.

| Traditional Test Case Design | Prompt Engineering |
|---|---|
| Test case defines what to validate | Prompt defines what the AI should validate |
| Written as steps and expected results | Written as structured natural language intent |
| Preconditions describe the system state | Context prompts describe application, user, and data state |
| Test steps control execution flow | Chain-of-thought prompts control reasoning flow |
| Test data supplied explicitly | Data embedded as contextual input |
| Expected results define pass/fail | Evaluation prompts define correctness criteria |
| Negative tests written as separate cases | Constraints specify what must not happen |
| Scope controlled by test plan | Scope controlled by prompt constraints |
| Stability depends on script accuracy | Stability depends on prompt clarity and structure |
| Maintenance requires script updates | Maintenance requires prompt refinement |
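To make the mapping in the table concrete, here is a small Python sketch that translates a traditional test case into structured natural-language intent. The `ManualTestCase` class, the `to_prompt` function, and the line prefixes are hypothetical choices for illustration and do not reflect any particular tool's format.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    title: str
    preconditions: list = field(default_factory=list)
    steps: list = field(default_factory=list)
    expected: list = field(default_factory=list)
    negative: list = field(default_factory=list)


def to_prompt(tc):
    """Translate each test-case field into its prompt counterpart from the table."""
    lines = [f"Validate: {tc.title}"]
    lines += [f"Context: {p}" for p in tc.preconditions]           # preconditions -> context
    lines += [f"Step {i}: {s}" for i, s in enumerate(tc.steps, start=1)]
    lines += [f"Must not happen: {n}" for n in tc.negative]        # negative tests -> constraints
    lines += [f"Pass if: {e}" for e in tc.expected]                # expected results -> evaluation
    return "\n".join(lines)


tc = ManualTestCase(
    title="Guest checkout",
    preconditions=["Cart contains one item"],
    steps=["Open the cart", "Proceed to checkout"],
    expected=["Order confirmation is shown"],
    negative=["User is asked to log in"],
)
print(to_prompt(tc))
```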

Training and Skillset for QA Testers

With AI becoming central to software solutions, the required skill set for QA engineers is also evolving. In the age of prompt engineering, understanding AI behavior, linguistic intricacies, and domain knowledge is as crucial as understanding code structure. Training programs are emerging to equip QA professionals with these competencies, ensuring they are primed to navigate the challenges AI presents.

Challenges and Considerations

- Bias Mitigation: Testing prompts must be unbiased, ensuring the model’s fairness and wide applicability. Read: AI Model Bias: How to Detect and Mitigate.

- Complexity of AI Responses: AI models, unlike traditional software, produce a broad range of responses, complicating the testing process. Read: What is Explainable AI (XAI)?

- Subjectivity in Evaluating Responses: The “correctness” of AI responses can be open to interpretation, posing unique challenges.

- Scalability:

  - Automated Prompt Generation: Given the vast space of potential prompts, automated tools can generate large sets of test prompts, and AI itself can be used to craft challenging prompts for other AI systems.
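Automated prompt generation can start as simply as filling a template with every combination of a few variables. The following Python sketch uses the standard library's `itertools.product`; the `generate_prompts` helper, the template, and the slot values are assumptions made for illustration.

```python
from itertools import product


def generate_prompts(template, **slots):
    """Fill `template` with every combination of the slot values."""
    names = list(slots)
    return [
        template.format(**dict(zip(names, combo)))
        for combo in product(*slots.values())
    ]


prompts = generate_prompts(
    "Reply in {language} to a {tone} customer asking about {topic}.",
    language=["English", "Spanish"],
    tone=["polite", "frustrated"],
    topic=["refunds", "shipping delays", "account deletion"],
)
print(len(prompts))  # 2 * 2 * 3 = 12
print(prompts[0])
```

Because the number of prompts grows multiplicatively with each added slot, it is worth deciding up front which combinations actually matter for the application under test.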

Prompt Engineering in Software Testing Example

Now, let’s look at how you can use prompt engineering to build your automated tests in testRigor. Before going through test creation step by step, it helps to understand how prompts behave in vision-based testing.

Prompt Engineering for Vision-Based Testing

Vision-based testing introduces a fundamentally different challenge compared to traditional DOM or locator-driven automation. Instead of interacting with fixed identifiers, AI must interpret visual elements, layouts, labels, and intent, making prompt engineering critical for accuracy and resilience. Read: Vision AI and how testRigor uses it.

Handling Ambiguous UI Elements

Modern user interfaces often contain visually similar elements such as multiple buttons, icons without labels, or repeated controls across sections. Well-crafted prompts disambiguate intent by describing purpose and context rather than relying on visual appearance alone.

For example, instead of referencing position or color, prompts can specify function, user goal, or screen state, guiding the AI toward the correct element even in visually dense interfaces.

Visual Similarity vs. Semantic Meaning

Two UI elements may look identical while serving entirely different purposes, such as “Save,” “Submit,” and “Continue” buttons styled the same way. Prompt engineering allows testers to emphasize semantic meaning over visual similarity, ensuring the AI selects elements based on user intent rather than shape or style.

This distinction is essential in enterprise and SaaS applications where design systems prioritize consistency, but functionality varies widely.

Prompting for Resilience Against UI Changes

UI layouts evolve frequently due to redesigns, responsive behavior, or A/B testing, often breaking traditional automation. Vision-based prompting enables testers to describe what the user is trying to accomplish rather than where or how an element appears.

Instead of prompting “click Submit,” testers can prompt “click the primary action button used to complete the form,” allowing the AI to adapt automatically even if labels, placement, or styling change. This approach dramatically reduces test fragility and maintenance effort.
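A team could back this guideline with a lightweight check that flags prompts leaning on position or color. The Python sketch below is a rough heuristic under stated assumptions: the `appearance_terms` function and its word list are hypothetical and would need tuning for a real UI vocabulary.

```python
import re

# Illustrative word list only; a real project would tune this to its own UI vocabulary.
APPEARANCE_TERMS = {
    "left", "right", "top", "bottom",
    "red", "green", "blue",
    "first", "second", "third",
}


def appearance_terms(prompt):
    """Return the position or color words found in a prompt, in sorted order."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return sorted(words & APPEARANCE_TERMS)


print(appearance_terms("click the blue button in the top right corner"))
print(appearance_terms("click the primary action button used to complete the form"))
```

The first prompt is flagged for three appearance words, while the intent-based prompt produces an empty list.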

How Does Prompt Engineering in Software Testing Work?

As a prompt engineer, you copy and paste your test case into testRigor, which then breaks it down line by line. Each line is treated as a prompt and executed step by step by the AI. The system examines your screen at each step and determines what action should be taken based on the content displayed. For all the ways to create or generate tests in testRigor, read: All-Inclusive Guide to Test Case Creation in testRigor.
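The line-by-line idea can be pictured with a short Python sketch. This is not testRigor's implementation: `split_into_prompts`, `run`, and the `execute_step` callback are hypothetical names, and the callback stands in for whatever component inspects the screen and performs the action.

```python
def split_into_prompts(test_case):
    """Split a pasted test case into one prompt per non-empty line."""
    return [line.strip() for line in test_case.splitlines() if line.strip()]


def run(test_case, execute_step):
    """Feed each prompt to `execute_step` in order, stopping at the first failure."""
    for number, prompt in enumerate(split_into_prompts(test_case), start=1):
        if not execute_step(prompt):
            return f"failed at step {number}: {prompt}"
    return "passed"


test_case = """
find a kindle
select it
add it to the shopping cart
"""

print(split_into_prompts(test_case))
# A stub executor that accepts every step, used here only to exercise the loop.
print(run(test_case, execute_step=lambda prompt: True))
```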

High-level approach

find a kindle and add it to the shopping cart

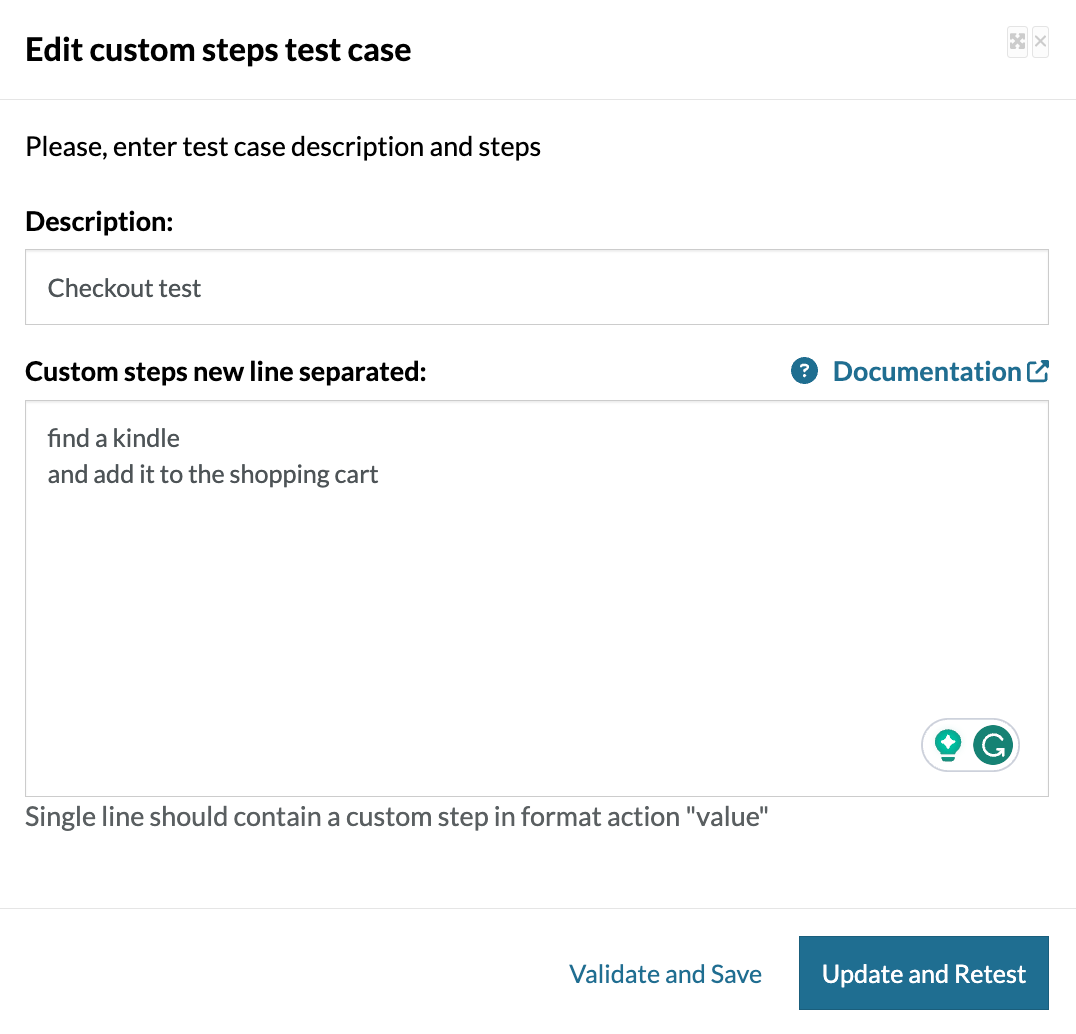

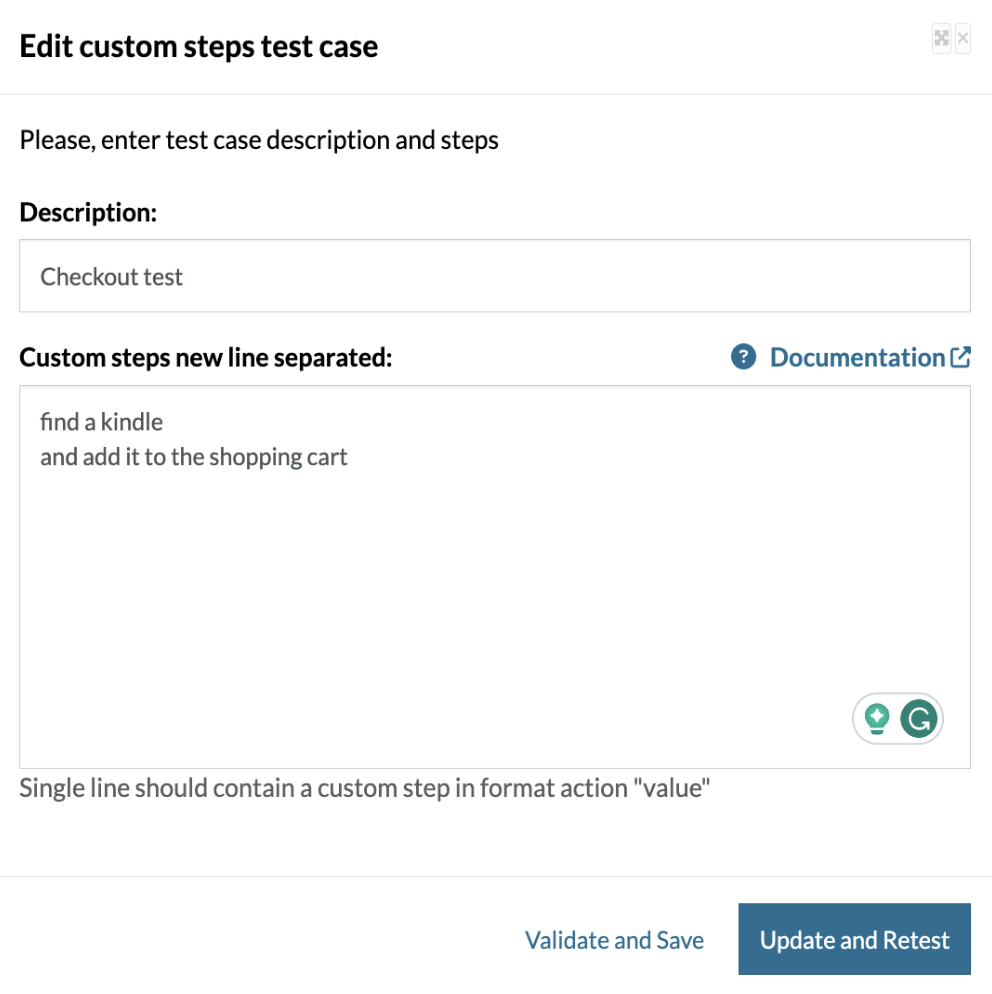

This is how it will look in the UI:

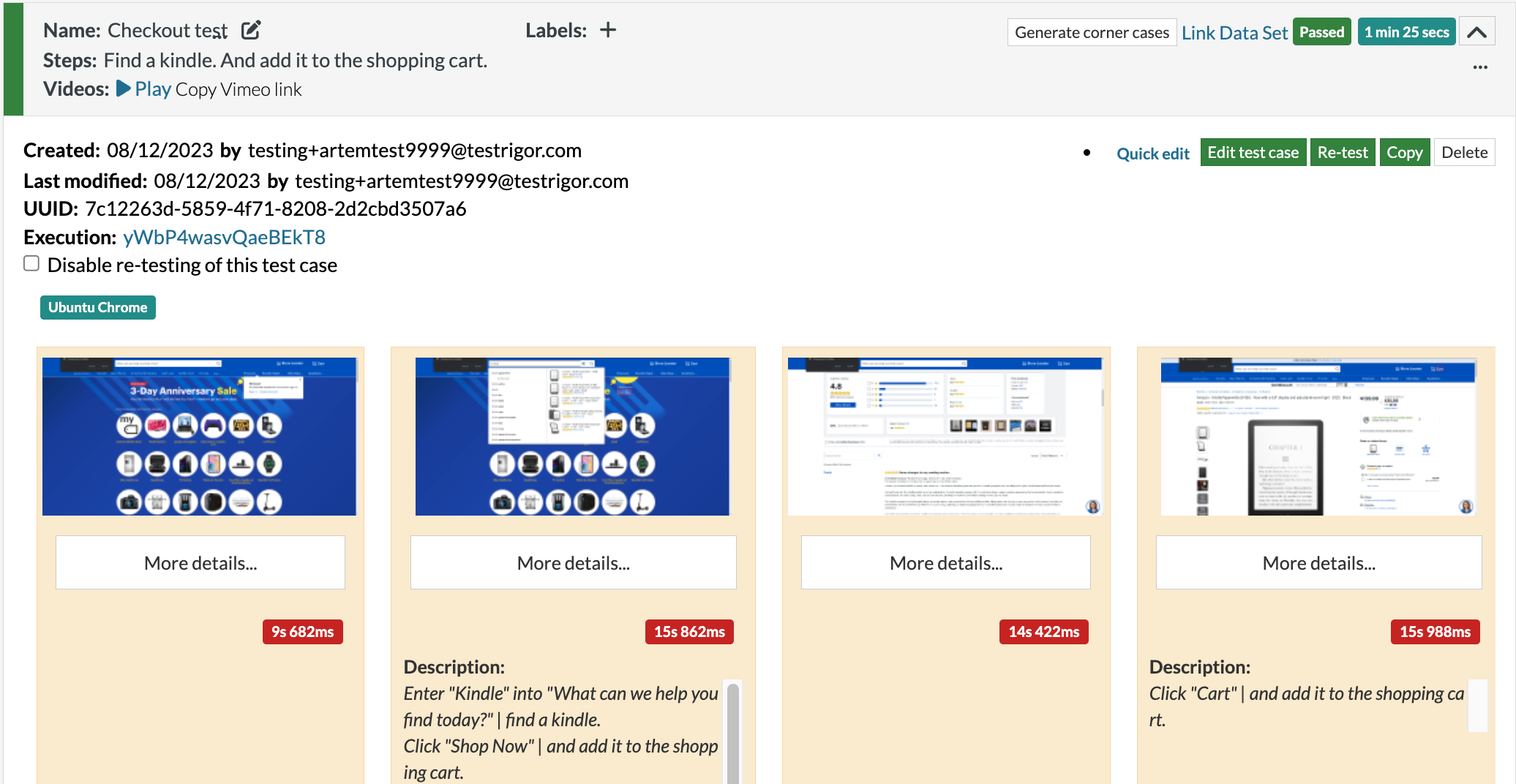

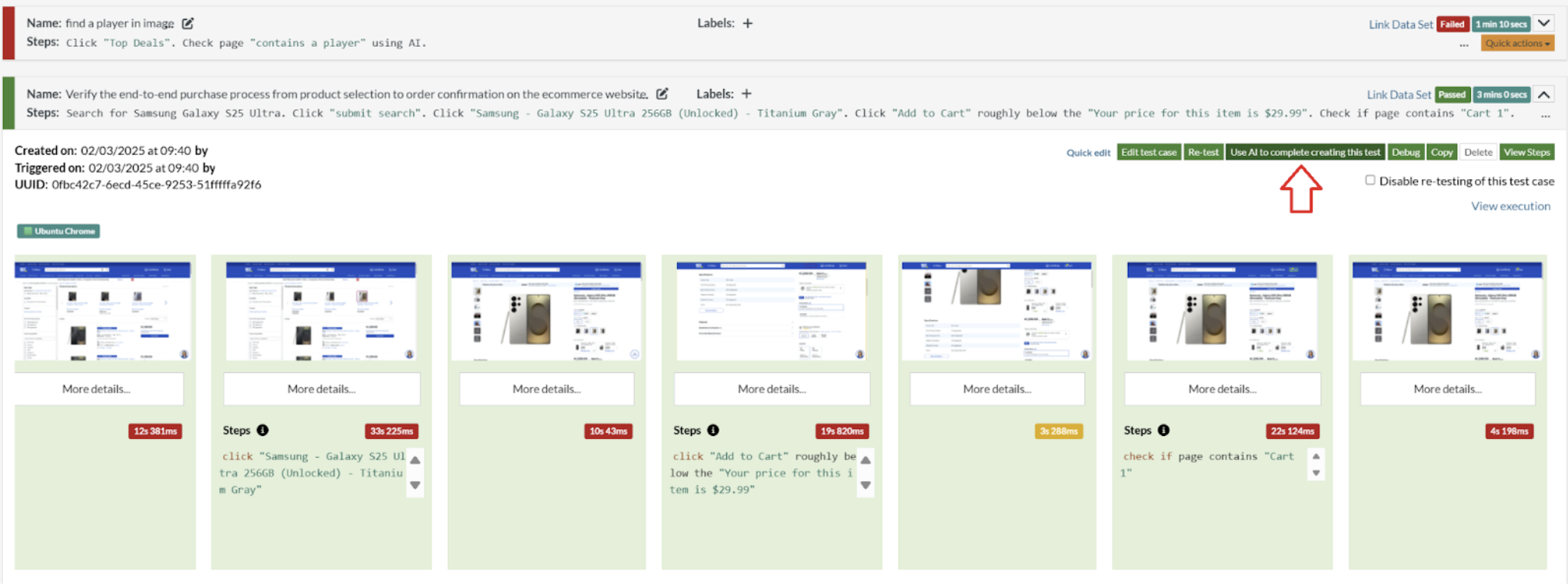

After pressing confirm, sit back and relax. The testRigor engine will create the test case based on the criteria you’ve specified. However, upon execution, you might discover that it doesn’t perform as you intended:

As illustrated in the example, since no Kindle was selected, the system wandered around trying to satisfy the second prompt: add it to the shopping cart. Adding an explicit selection step to the prompt addresses this:

find a kindle and select it and add it to the shopping cart

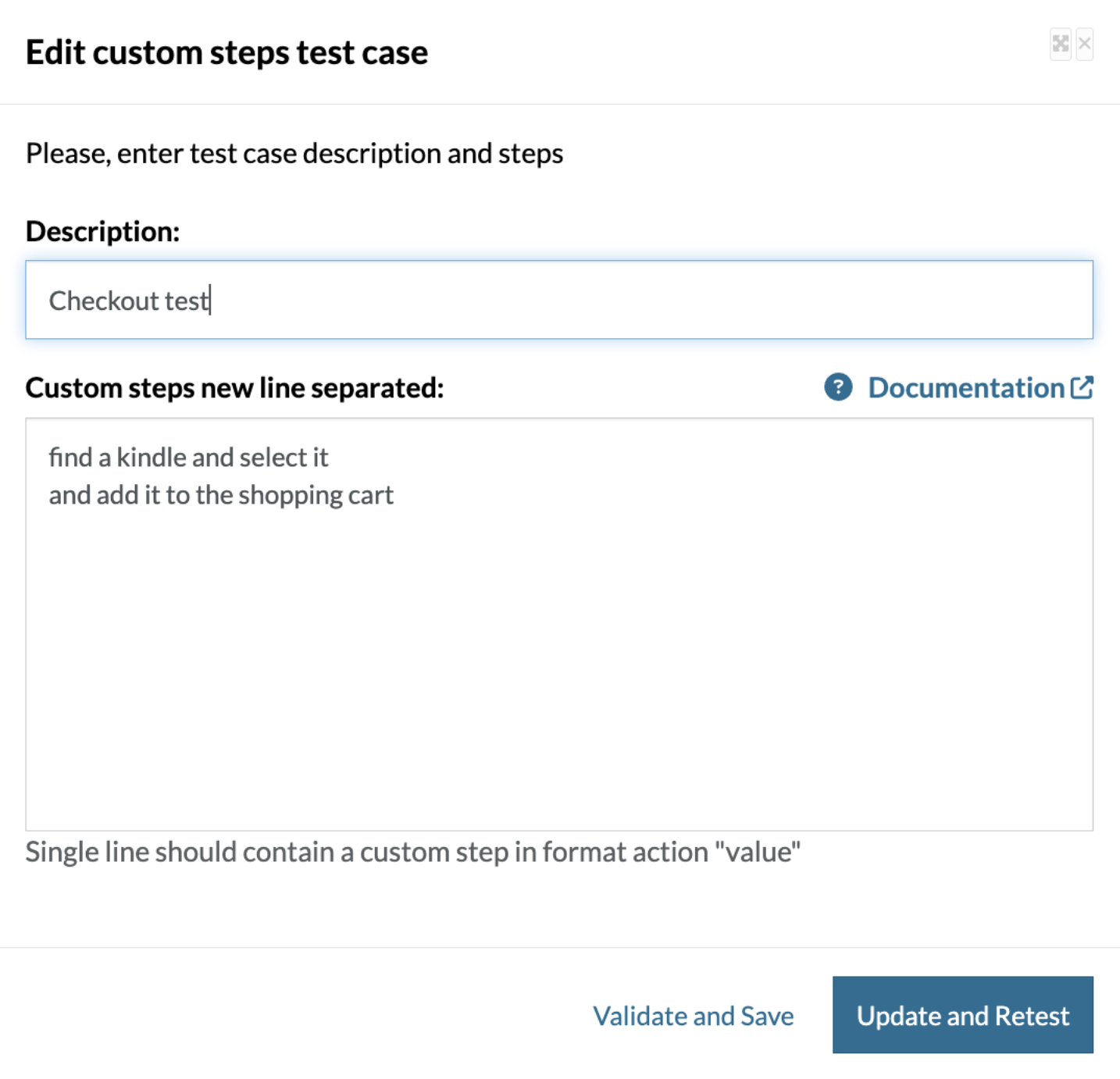

This is how it will look in the UI:

In essence, your primary responsibility in this scenario is to make the prompt clear and unambiguous. It may need additional clarification or context to guide the system effectively and ensure it operates as intended. You can follow this 3-step process to make sure that your prompting works as expected.

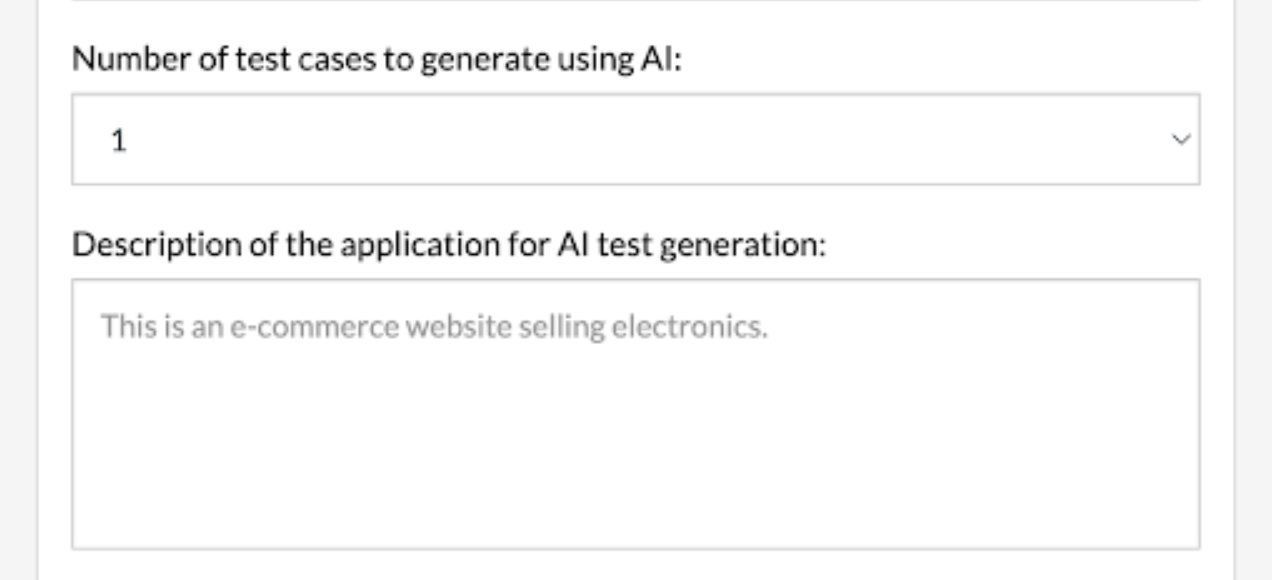

Step 1: Provide a Detailed Description of the AUT: testRigor generates tests based on the description you provide of the application under test (AUT). Make sure it is detailed enough to give the AI clear context, so that the AI-generated tests are relevant. For example, this is a good description for the Salesforce application: “This is a full-featured web and mobile based CRM system. As a user you can create Contacts and Deals, set up associations between those and other objects, and much more. You can also build your custom forms backed by the built-in Apex programming language, and search types of available objects.”

Step 2: Provide a Non-ambiguous Test Case Description: testRigor also generates test steps based on the test case description. Provide a non-ambiguous description to help the AI generate relevant test steps. You can also select ‘AI Context’ to get more meaningful test steps. For example, use “Find, Select, and Add Kindle to Cart” as the Test Case Description instead of “Checkout test”, because it tells the AI which steps the test must cover.

Step 3: Provide Manual Inputs When Needed: The AI may sometimes get stuck while building a test case, even with clear instructions. When this happens, step in and guide it manually by adding the specific steps needed to overcome the hurdle. After providing this help, click “Use AI to complete creating this test” so the AI can resume and finish the process from where you left off.

Other Prompt Engineering Techniques

Prompt engineering is a multifaceted field comprising numerous techniques. Let’s consider the ones that would be helpful in a QA environment.

Least-to-most prompting technique for QA prompts

Rooted in the principle of gradation, the ‘least-to-most’ technique guides AI systems incrementally. When an AI doesn’t behave as anticipated, an effective countermeasure is to break the primary instruction down into more granular, explicit steps, making it easier for the AI to understand and execute. For example, the first prompt below can be replaced by the more explicit second one:

add a kindle to the cart

find a kindle and select it and add it to the shopping cart

By employing such granular instructions, we can bridge the gap between the AI’s interpretation and the desired outcome, ensuring smoother and more predictable system interactions. For more examples, dos and don’ts, read: How to use AI effectively in QA.
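In a programmatic setting, least-to-most prompting is often run as a sequence where each sub-step sees the results of the earlier ones. The Python sketch below illustrates that pattern under assumptions: `least_to_most`, the `ask_model` callback, and the `Done:`/`Next:` prompt layout are hypothetical, and the stub model simply answers "ok".

```python
def least_to_most(sub_steps, ask_model):
    """Run sub-steps in order, passing earlier results along as context."""
    history = []
    for step in sub_steps:
        context = "\n".join(f"Done: {s} -> {r}" for s, r in history)
        prompt = f"{context}\nNext: {step}" if context else f"Next: {step}"
        history.append((step, ask_model(prompt)))
    return history


# Stub model that records each prompt it receives and always answers "ok".
seen = []

def fake_model(prompt):
    seen.append(prompt)
    return "ok"


sub_steps = ["find a kindle", "select it", "add it to the shopping cart"]
history = least_to_most(sub_steps, fake_model)
print(seen[-1])
```

The last prompt printed contains the two completed steps followed by the remaining one, which is what lets a model resolve "it" in "add it to the shopping cart".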
