Data-driven Testing Use Cases


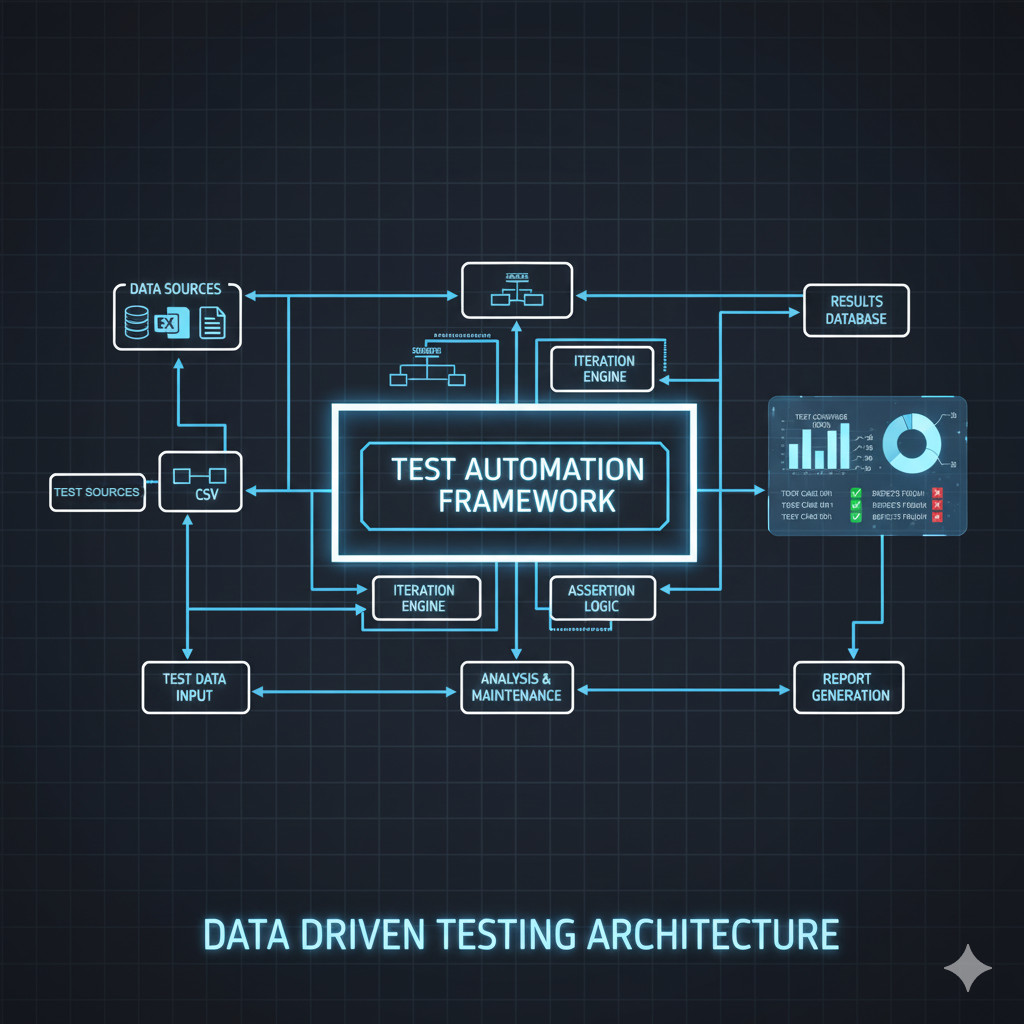

In software testing, data-driven testing (DDT) has become one of the most promising approaches for achieving reliability, scalability, and functional correctness of applications. Contemporary software must handle a wide variety of input data, from numbers and strings to complex data structures, under many different conditions. Hard-coding test data limits test coverage and makes it harder to add new scenarios. That’s where data-driven testing has an immense impact.

Data-driven testing separates test logic from test data and lets you run the same test case with varying input values. Instead of writing a separate test case for every data scenario, you need only a single script that programmatically reads its inputs from external sources such as CSV files, Excel spreadsheets, XML or JSON documents, databases, or APIs.


Understanding Data-Driven Testing

Before delving into use cases, it’s crucial to grasp what data-driven testing truly means and why it’s essential in modern QA practices.

The Logic

In data-driven testing, the test parameters (input data) and the expected output are isolated from the test logic itself. Test scripts are configured to pull data from outside sources and repeat the same course of action for each dataset.

This makes it easy to test different input/output pairs without rewriting the test code, which keeps the suite flexible and efficient. It is especially useful in automated testing frameworks, where scripts can iterate over external datasets. Read more about Data-driven Testing: How to Bring Your QA Process to the Next Level?

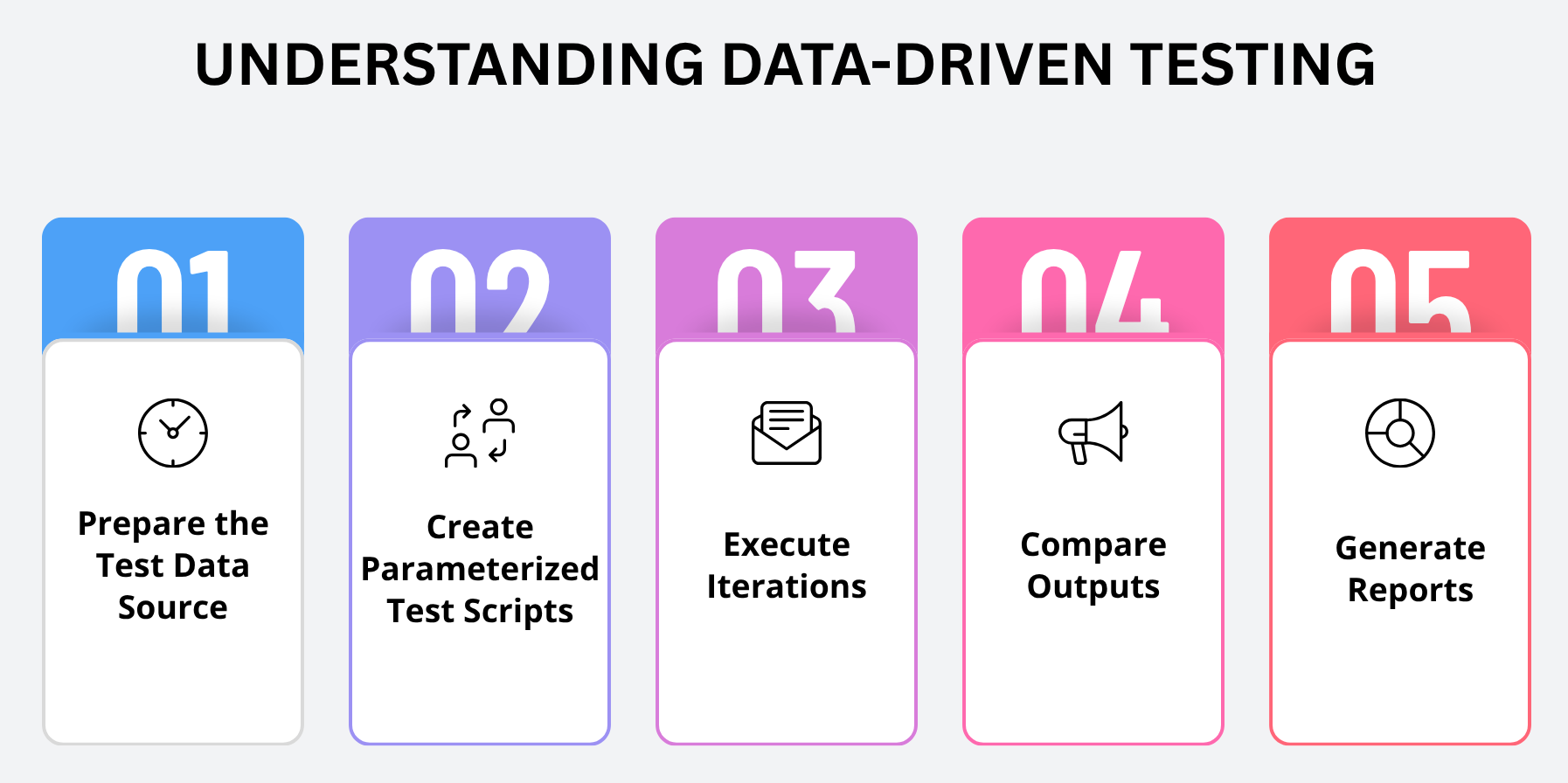

How it Works

- Prepare the Test Data Source: The test data resides externally within files (Excel, CSV, JSON, etc) or in a connected database. This separation ensures that if input data changes, the test script does not require any modification.

- Create Parameterized Test Scripts: Test scripts are written with variables or placeholders that are filled with input values at runtime. This lets the same test logic run efficiently across multiple datasets.

- Execute Iterations: The test framework automatically iterates through each row or record in the data source. Each row represents a single test run with its own combination of data.

- Compare Outputs: In each iteration, the framework compares the actual output with the expected output recorded in the dataset. This gives consistent, repeatable validation across all input variations.

- Generate Reports: After the run, the report shows which datasets passed and which failed. Testers can see exactly which combination of data caused a problem, which simplifies debugging.

By externalizing data, testers can quickly modify or add new scenarios without altering the underlying test logic, a massive advantage for agile and CI/CD environments.
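The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than any particular framework's API: the `add` function stands in for the system under test, the CSV content is inlined so the sketch runs on its own (in practice it would live in an external file), and the last row is deliberately wrong to show how a failing dataset appears in the report.

```python
import csv
import io

# Hypothetical data source. In a real suite this would be an external CSV file;
# it is inlined here so the sketch is self-contained.
TEST_DATA = """a,b,expected
2,3,5
-1,1,0
10,5,15
0,0,1
"""


def add(a, b):
    """Stand-in for the system under test (an assumption for this sketch)."""
    return a + b


def run_data_driven(csv_text):
    """Run the same check once per data row and collect a per-row report."""
    report = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        expected = int(row["expected"])
        actual = add(int(row["a"]), int(row["b"]))
        report.append({"row": dict(row), "actual": actual, "passed": actual == expected})
    return report


results = run_data_driven(TEST_DATA)
for result in results:
    status = "PASS" if result["passed"] else "FAIL"
    print(status, result["row"], "actual =", result["actual"])
```

Adding a scenario means adding a row to the data; `run_data_driven` itself does not change.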

Why Data-Driven Testing Matters

Data-driven testing is not just a technique; it is a strategic mechanism that helps QA teams sustain continuous testing across fast-changing applications. By separating data from test logic, teams gain the flexibility and scale they need to work effectively in data-rich environments.

- Enhanced Test Coverage: Data-driven testing lets teams test with hundreds or thousands of input combinations with little extra effort. This helps verify that the application responds properly to different data types, covering both typical and edge-case scenarios.

- Reduced Maintenance: Because test logic and data are separated, changes to the test data require no code changes. This substantially reduces maintenance effort and lets the suite adapt more quickly to changing requirements or business rules.

- Increased Reusability: A single well-written test script can verify many scenarios by iterating through different datasets. This reusability speeds up the iteration cycle and encourages consistency between environments.

- Faster Execution: Automation frameworks can run data-driven tests in parallel across large datasets, which saves considerable time. This makes DDT well suited to CI/CD pipelines, where fast feedback matters most.

Read more about Dynamic Data in Test Automation: Guide to Best Practices.

In modern CI/CD environments, tools like testRigor make it easy to integrate data-driven tests into pipelines. Each data variation can automatically execute in parallel, generating detailed reports and identifying data-specific failures without script-level maintenance.

Data-Driven vs. Keyword-Driven Approaches

Although Data-Driven Testing (DDT) and Keyword-Driven Testing (KDT) both aim to improve the efficiency and quality of test automation, they differ in focus and method. Data-driven testing runs a single test script against many data inputs. Keyword-driven testing captures test steps as separate, reusable actions (keywords) that can be parameterized and executed with different sets of data.

DDT fundamentally addresses data variation, while KDT focuses on action abstraction.

| Aspect | Data-Driven Testing (DDT) | Keyword-Driven Testing (KDT) |

|---|---|---|

| Primary Focus | Testing multiple input and output combinations | Reusing predefined actions through keywords |

| Core Idea | Separate test data from test scripts | Separate test logic from underlying code |

| Data Source | External files (Excel, CSV, JSON, Database) | Keyword tables, spreadsheets, or keyword libraries |

| Test Design | One test script runs with many data sets | Test steps defined by high-level keywords |

| Maintenance Effort | Low — only test data changes | Moderate — keyword library needs updates |

| Required Skill Level | Usually requires scripting knowledge | Can be created by non-technical users |

| Best Suited For | Validating large data combinations (e.g., forms, APIs) | Building readable, reusable automation flows |

| Execution Approach | Same logic, different data inputs | Same actions, different keyword sequences |

| Output Focus | Data accuracy and coverage | Test flow clarity and modularity |

In summary, Data-Driven Testing focuses on varying inputs, while Keyword-Driven Testing focuses on varying actions, both complementing each other in achieving scalable and maintainable automation.

Interestingly, testRigor supports both data-driven and keyword-driven methodologies. Users can define reusable actions (keywords) while parameterizing test inputs from external datasets, bridging the advantages of both approaches. Read more about Test Data Generation Automation.

Core Use Cases for Data-Driven Testing

Let’s explore the major practical applications where data-driven testing proves indispensable.

Use Case 1: Testing Form Validations and Input Fields

One of the typical scenarios for data-driven testing is testing input fields in web and mobile apps. Forms such as sign-up forms, payment forms, and survey applications will often need lots of data validation. For example, consider a registration form with fields for name, email, password, date of birth, and phone number. So, instead of writing a separate test case for each scenario, you can just create a single test script and then feed the script with multiple data sets stored in any external file. Read: How to Test Form Filling Using AI.

Example Data Set:

| Scenario | Input | Expected Output |

|---|---|---|

| Valid email | “[email protected]” | Accepted |

| Invalid email | “john.doe@” | Error message |

| Missing name | “” | Error message |

| Weak password | “12345” | Password strength warning |

This guarantees that the form functions properly for all possible combinations of valid and invalid inputs, a level of coverage you can’t achieve with manual or static tests alone.
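As a rough sketch of the table above, the snippet below drives one validation routine from a list of rows. The `validate_field` function and its rules (a simple e-mail pattern, an eight-character password with letters and digits) are assumptions made for illustration, not the rules of any real form, and `user@example.com` is a placeholder address.

```python
import re

# Deliberately simple e-mail pattern, assumed for this sketch only.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_field(field, value):
    """Hypothetical form validator returning the message the UI would show."""
    if field == "email":
        return "Accepted" if EMAIL_RE.match(value) else "Error message"
    if field == "name":
        return "Accepted" if value.strip() else "Error message"
    if field == "password":
        strong = (
            len(value) >= 8
            and any(c.isalpha() for c in value)
            and any(c.isdigit() for c in value)
        )
        return "Accepted" if strong else "Password strength warning"
    raise ValueError(f"unknown field: {field}")


# Each row: scenario, field, input, expected output.
CASES = [
    ("Valid email", "email", "user@example.com", "Accepted"),
    ("Invalid email", "email", "john.doe@", "Error message"),
    ("Missing name", "name", "", "Error message"),
    ("Weak password", "password", "12345", "Password strength warning"),
]

# One loop covers every scenario; failures lists the scenarios that did not match.
failures = [
    scenario
    for scenario, field, value, expected in CASES
    if validate_field(field, value) != expected
]
print("failed scenarios:", failures)
```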

Use Case 2: Verifying Login and Authentication Systems

Login is a fundamental part of most applications. It must handle every kind of credential set: valid, invalid, expired, locked out, or disabled accounts. A data-driven approach lets QA teams run through these combinations at scale in very little time.

Example Data Set:

| Username | Password | Expected Result |

|---|---|---|

| user1 | “pass123” | Login Successful |

| user2 | Wrong password | Invalid Credentials |

| user3 | Expired password | Account Locked |

| admin | “admin@123” | Login Successful |

With this methodology, testers can verify not just functional correctness, but also the security enforcement, such as account lockout after multiple failures. This helps to:

- Prevent unauthorized access vulnerabilities.

- Validate password policies and account lockouts.

- Verify that session handling and redirects behave consistently.

With data-driven login testing, application reliability is greatly enhanced without increasing the overhead of manually developing duplicate test scripts.
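The lockout behavior mentioned above can also be expressed as data. The sketch below uses a hypothetical in-memory `FakeAuthService` with an assumed limit of three failed attempts; the rows are ordered because each attempt changes the account state, and the `correct-horse` password is an invented value.

```python
from dataclasses import dataclass

MAX_FAILED_ATTEMPTS = 3  # assumed lockout threshold for this sketch


@dataclass
class Account:
    password: str
    failed_attempts: int = 0
    locked: bool = False


class FakeAuthService:
    """Hypothetical stand-in for the application's login endpoint."""

    def __init__(self, accounts):
        self.accounts = accounts

    def login(self, username, password):
        account = self.accounts.get(username)
        if account is None:
            return "Invalid Credentials"
        if account.locked:
            return "Account Locked"
        if password == account.password:
            account.failed_attempts = 0
            return "Login Successful"
        account.failed_attempts += 1
        if account.failed_attempts >= MAX_FAILED_ATTEMPTS:
            account.locked = True
            return "Account Locked"
        return "Invalid Credentials"


service = FakeAuthService({
    "user1": Account("pass123"),
    "user2": Account("correct-horse"),
    "admin": Account("admin@123"),
})

# Ordered rows: username, password, expected result.
CASES = [
    ("user1", "pass123", "Login Successful"),
    ("user2", "wrong", "Invalid Credentials"),
    ("user2", "wrong", "Invalid Credentials"),
    ("user2", "wrong", "Account Locked"),
    ("user2", "correct-horse", "Account Locked"),
    ("admin", "admin@123", "Login Successful"),
]

login_results = [service.login(user, pw) == expected for user, pw, expected in CASES]
print(login_results)
```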

Use Case 3: Testing E-Commerce Checkout Workflows

For e-commerce, the application must manage diverse product categories, a variety of quantities, prices, payment methods, and types of discounts. Manually testing each of these combinations is infeasible, which is why data-driven tests have been, and continue to be, a game-changer. Read: E-Commerce Testing: Ensuring Seamless Shopping Experiences with AI.

Example Data Set:

| Product ID | Quantity | Payment Type | Discount Code | Expected Result |

|---|---|---|---|---|

| P1001 | 1 | Credit Card | SAVE10 | Order Success |

| P2002 | 2 | PayPal | – | Order Success |

| P3003 | 1 | Debit Card | INVALID | Error Message |

| P4004 | 5 | Credit Card | BULKBUY | Order Success |

This allows testers to script the complete checkout process with a single test logic that iterates through these data combinations.

Benefits:

- Identifies pricing or tax calculation mistakes.

- Validates coupon logic under multiple conditions.

- Tests payment gateway integration robustness.

- Ensures order confirmation and notifications are consistent.
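A simplified sketch of the checkout table is shown below. The prices, discount rates, and the `checkout` function are invented for illustration, and the payment type column is left out to keep the example short. `Decimal` is used so that totals compare exactly.

```python
from decimal import Decimal

# Hypothetical catalog and discount rules; none of these values come from a real store.
PRICES = {
    "P1001": Decimal("20.00"),
    "P2002": Decimal("15.50"),
    "P3003": Decimal("9.99"),
    "P4004": Decimal("4.00"),
}
DISCOUNTS = {"SAVE10": Decimal("0.10"), "BULKBUY": Decimal("0.20")}


def checkout(product_id, quantity, discount_code):
    """Stand-in checkout: returns (result, total); total is None on error."""
    subtotal = PRICES[product_id] * quantity
    if discount_code is None:
        return "Order Success", subtotal
    if discount_code not in DISCOUNTS:
        return "Error Message", None
    total = subtotal * (1 - DISCOUNTS[discount_code])
    return "Order Success", total.quantize(Decimal("0.01"))


# Rows: product ID, quantity, discount code, expected result, expected total.
CASES = [
    ("P1001", 1, "SAVE10", "Order Success", Decimal("18.00")),
    ("P2002", 2, None, "Order Success", Decimal("31.00")),
    ("P3003", 1, "INVALID", "Error Message", None),
    ("P4004", 5, "BULKBUY", "Order Success", Decimal("16.00")),
]

checkout_failures = [
    case for case in CASES if checkout(case[0], case[1], case[2]) != (case[3], case[4])
]
print("mismatching rows:", checkout_failures)
```

Including the expected total in each row is what lets the same loop catch pricing mistakes as well as wrong status messages.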

Use Case 4: Database Validation Testing

Applications that interact heavily with databases, such as banking, inventory management, or CRM systems, depend on data integrity. Data-driven testing helps verify that data entered through the UI or an API correctly updates the corresponding database records.

For a banking app:

- Test deposits, withdrawals, and transfers with various data combinations.

- Validate database entries reflect accurate balance updates.

- Confirm transaction IDs and timestamps are generated correctly.

QA teams perform end-to-end validation by comparing the expected values held in an external data source with the actual database values after every test execution.

Benefits:

- Finds corrupt and incorrectly linked information.

- Validates CRUD (Create, Read, Update, Delete) operations.

- Ensures referential integrity across linked tables.
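The banking example can be sketched with Python's built-in `sqlite3` module and an in-memory database. The schema, the `apply_transaction` helper, and the opening balance of 100 are assumptions for illustration. Each data row carries the balance expected after the operation, and the check reads the value back from the database rather than trusting the function's return value.

```python
import sqlite3


def apply_transaction(conn, account_id, kind, amount):
    """Hypothetical application code: update the balance and log the transaction."""
    (balance,) = conn.execute(
        "SELECT balance FROM accounts WHERE id = ?", (account_id,)
    ).fetchone()
    delta = amount if kind == "deposit" else -amount
    if balance + delta < 0:
        return False  # rejected, nothing is written
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (delta, account_id)
        )
        conn.execute(
            "INSERT INTO transactions (account_id, kind, amount) VALUES (?, ?, ?)",
            (account_id, kind, amount),
        )
    return True


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.execute(
    "CREATE TABLE transactions ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "account_id INTEGER REFERENCES accounts(id), kind TEXT, amount INTEGER)"
)
conn.execute("INSERT INTO accounts VALUES (1, 100)")
conn.commit()

# Rows: operation, amount, balance expected in the database afterwards.
CASES = [("deposit", 50, 150), ("withdrawal", 30, 120), ("withdrawal", 500, 120)]

mismatches = []
for kind, amount, expected_balance in CASES:
    apply_transaction(conn, 1, kind, amount)
    (actual,) = conn.execute("SELECT balance FROM accounts WHERE id = 1").fetchone()
    if actual != expected_balance:
        mismatches.append((kind, amount, expected_balance, actual))

tx_count = conn.execute("SELECT COUNT(*) FROM transactions").fetchone()[0]
print("mismatches:", mismatches, "logged transactions:", tx_count)
```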

Use Case 5: API Testing with Variable Payloads

Modern applications are built on APIs. API endpoints add complexity because most accept multiple parameters of different data types, all of which must be tested. Even a small change in the input data can expose inconsistent service behavior, so validating an API's robustness and reliability requires testing with comprehensive data coverage across its use cases. Read: How to do API testing using testRigor?

For a POST /user API endpoint:

- A JSON data set can have multiple user requests with different attributes.

- The same test logic sends each request and validates the status code, response time, and response message.

Example Data Set:

| Name | Email | Role | Expected Status |

|---|---|---|---|

| John | [email protected] | Admin | 201 Created |

| Mike | invalidemail | User | 400 Bad Request |

| Jane | [email protected] | Guest | 201 Created |

Benefits:

- By employing reusable parameterized data, development teams can rapidly validate that new code modifications have not broken any existing API functionality, without rewriting test scripts.

- Automated data iteration validates many input combinations in a single run, giving broad coverage with little manual intervention.

- Testing extreme, invalid, and unexpected data inputs helps uncover vulnerabilities and confirm that the API responds gracefully to all scenarios.
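The sketch below shows the idea with a JSON dataset and an in-process stand-in for the endpoint, so it runs without a network. The `post_user` function, its validation rules, and the `example.com` addresses are assumptions; against a real service the same loop would send each payload with an HTTP client and compare the returned status code.

```python
import json
import re

# Hypothetical dataset; the e-mail addresses are placeholders.
DATASET = json.loads("""
[
  {"name": "John", "email": "john@example.com", "role": "Admin", "expected_status": 201},
  {"name": "Mike", "email": "invalidemail", "role": "User", "expected_status": 400},
  {"name": "Jane", "email": "jane@example.com", "role": "Guest", "expected_status": 201}
]
""")

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
ALLOWED_ROLES = {"Admin", "User", "Guest"}


def post_user(payload):
    """In-process stand-in for POST /user; returns (status_code, body)."""
    if not payload.get("name"):
        return 400, {"message": "name is required"}
    if not EMAIL_RE.match(payload.get("email", "")):
        return 400, {"message": "email is invalid"}
    if payload.get("role") not in ALLOWED_ROLES:
        return 400, {"message": "role is invalid"}
    return 201, {"message": "created", "name": payload["name"]}


def run_cases(dataset):
    """Send every payload through the same logic and record the outcome."""
    results = []
    for case in dataset:
        payload = {k: v for k, v in case.items() if k != "expected_status"}
        status, _body = post_user(payload)
        results.append((case["name"], status, status == case["expected_status"]))
    return results


api_results = run_cases(DATASET)
for name, status, passed in api_results:
    print(name, status, "PASS" if passed else "FAIL")
```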

Use Case 6: Financial and Banking Transaction Validation

In financial use cases, precision and robustness are non-negotiable. Data-driven testing can simulate thousands of transaction combinations to validate system stability and correctness. Testers can validate complex business rules, identify calculation anomalies sooner, and ensure that financial transactions are processed uniformly across different scenarios.

Example Data Set:

| Account No | Transaction Type | Amount | Currency | Expected Result |

|---|---|---|---|---|

| 100123 | Deposit | 500 | USD | Success |

| 100123 | Withdraw | 1000 | USD | Insufficient Funds |

| 200456 | Transfer | 250 | EUR | Success |

| 300789 | Withdraw | -10 | USD | Invalid Amount |

This level of data coverage ensures compliance with business rules and helps prevent financial inconsistencies or losses. Read: Automated Testing in the Financial Sector: Challenges and Solutions.
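The table above maps directly onto a rule check like the following sketch. The `process` function and the opening balances are assumptions chosen so that the rows produce the expected results; currency is carried in the data but ignored by this simplified logic, and a transfer only debits the source account.

```python
from decimal import Decimal

# Assumed opening balances for this sketch.
balances = {
    "100123": Decimal("0"),
    "200456": Decimal("1000"),
    "300789": Decimal("50"),
}


def process(balances, account, tx_type, amount):
    """Hypothetical transaction rules returning the result label."""
    if amount <= 0:
        return "Invalid Amount"
    if tx_type == "Deposit":
        balances[account] += amount
        return "Success"
    if tx_type in ("Withdraw", "Transfer"):
        if balances[account] < amount:
            return "Insufficient Funds"
        balances[account] -= amount
        return "Success"
    return "Unsupported Transaction"


# Rows: account, transaction type, amount, currency, expected result.
CASES = [
    ("100123", "Deposit", Decimal("500"), "USD", "Success"),
    ("100123", "Withdraw", Decimal("1000"), "USD", "Insufficient Funds"),
    ("200456", "Transfer", Decimal("250"), "EUR", "Success"),
    ("300789", "Withdraw", Decimal("-10"), "USD", "Invalid Amount"),
]

outcomes = [
    process(balances, account, tx_type, amount)
    for account, tx_type, amount, _currency, _expected in CASES
]
expected_outcomes = [case[4] for case in CASES]
print(outcomes == expected_outcomes)
```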

Use Case 7: Cross-Browser and Device Compatibility Testing

Modern users reach applications through different browsers, devices, and screens, with display resolutions that vary widely. These devices run different operating systems with different settings. With data-driven testing, you can parameterize these combinations and automate them effectively.

Example Data Set:

| Browser | OS | Resolution | Expected Behavior |

|---|---|---|---|

| Chrome | Windows 10 | 1920×1080 | Layout correct |

| Safari | macOS | 1440×900 | Layout correct |

| Firefox | Ubuntu | 1366×768 | Layout correct |

| Edge | Windows 11 | 2560×1440 | Layout correct |

Automating these combinations reduces manual repetition and guarantees a consistent user experience across platforms. More about Cross-browser Testing.
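One way to produce such a dataset is to generate it rather than type it by hand. The sketch below builds a configuration matrix with `itertools.product` and filters out combinations that cannot exist. The support rules are an assumption for illustration (only the Safari restriction is modeled), and running each configuration against a real browser is left out.

```python
from itertools import product

BROWSERS = ["Chrome", "Safari", "Firefox", "Edge"]
OPERATING_SYSTEMS = ["Windows 10", "Windows 11", "macOS", "Ubuntu"]
RESOLUTIONS = [(1920, 1080), (1366, 768)]

# Assumed support rule for this sketch: desktop Safari is only paired with macOS.
RESTRICTED = {"Safari": {"macOS"}}


def build_matrix():
    """Return one configuration dict per valid browser/OS/resolution combination."""
    matrix = []
    for browser, os_name, (width, height) in product(
        BROWSERS, OPERATING_SYSTEMS, RESOLUTIONS
    ):
        allowed = RESTRICTED.get(browser)
        if allowed is not None and os_name not in allowed:
            continue
        matrix.append(
            {
                "id": f"{browser}-{os_name}-{width}x{height}",
                "browser": browser,
                "os": os_name,
                "resolution": (width, height),
            }
        )
    return matrix


configs = build_matrix()
print(len(configs), "configurations, first:", configs[0]["id"])
```

Each dictionary then becomes one iteration of the same layout test.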

Use Case 8: Localization and Internationalization Testing

Globally deployed applications must handle different languages, date and number formats, and currencies. Data-driven testing lets testers verify localized content with little extra effort. As a result, users receive accurate translations, culturally appropriate formats, and consistent features regardless of location.

Example Data Set:

| Language | Currency | Date Format | Expected Label |

|---|---|---|---|

| English | USD | MM/DD/YYYY | “Total” |

| German | EUR | DD.MM.YYYY | “Gesamt” |

| Japanese | JPY | YYYY/MM/DD | “合計” |

Data-driven scripts can dynamically switch locale configurations and validate the correct rendering of translations and formats. Read: Localization vs. Internationalization Testing Guide.
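A small sketch of this locale switching follows. The `LOCALE_CONFIG` dictionary and `render` function stand in for the application under test and are assumptions; the expected labels and date layouts come from the table above, applied to an arbitrary sample date.

```python
from datetime import date

# Hypothetical application-side locale configuration (the code under test).
LOCALE_CONFIG = {
    "English": {"total_label": "Total", "date_pattern": "%m/%d/%Y"},
    "German": {"total_label": "Gesamt", "date_pattern": "%d.%m.%Y"},
    "Japanese": {"total_label": "合計", "date_pattern": "%Y/%m/%d"},
}


def render(language, when):
    """Stand-in for rendering a localized order summary."""
    config = LOCALE_CONFIG[language]
    return {
        "label": config["total_label"],
        "date": when.strftime(config["date_pattern"]),
    }


SAMPLE_DATE = date(2024, 3, 5)

# Rows: language, expected label, expected rendering of SAMPLE_DATE.
CASES = [
    ("English", "Total", "03/05/2024"),
    ("German", "Gesamt", "05.03.2024"),
    ("Japanese", "合計", "2024/03/05"),
]

locale_failures = []
for language, expected_label, expected_date in CASES:
    rendered = render(language, SAMPLE_DATE)
    if rendered != {"label": expected_label, "date": expected_date}:
        locale_failures.append((language, rendered))

print("locale failures:", locale_failures)
```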

Use Case 9: Performance Testing with Variable Loads

Data-driven testing can simulate performance conditions by feeding test tools such as JMeter or LoadRunner with different user loads, transaction sizes, or session data. These tools let testers measure the system under test under realistic and peak load conditions, verifying that it scales and performs acceptably before it goes into production.

Different datasets can represent users with distinct behaviors:

- 10 concurrent users performing basic searches.

- 100 users submitting forms simultaneously.

- 1000 users executing transactions of various sizes.

By parameterizing data, testers can ensure system scalability under real-world conditions. Read: What is Performance Testing: Types and Examples.

Use Case 10: Testing Data Migration or ETL Pipelines

For large organizations, data migration and ETL operations must guarantee end-to-end consistency of data from source to target systems. Data-driven testing verifies that the data arrives in the target system transformed as expected.

Example Data Set:

| Source Table | Target Table | Transformation Rule | Expected Match |

|---|---|---|---|

| users_old | users_new | Uppercase email | Yes |

| orders_old | orders_new | Currency conversion | Yes |

| addresses_old | addresses_new | Merge city/state | Yes |

By automating data comparisons across systems, QA teams minimize risks in high-stakes data transitions.
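Such a comparison can be sketched by naming each transformation rule in the data and applying it to the source rows before comparing them with the target rows. The rule functions, the sample records, and the third (deliberately mismatching) case are assumptions for illustration; the currency conversion rule is omitted because it would need an exchange rate.

```python
def uppercase_email(row):
    """Transformation rule: the target stores e-mail addresses in upper case."""
    return {**row, "email": row["email"].upper()}


def merge_city_state(row):
    """Transformation rule: city and state are merged into one location field."""
    return {"id": row["id"], "location": f"{row['city']}, {row['state']}"}


RULES = {"Uppercase email": uppercase_email, "Merge city/state": merge_city_state}


def migration_matches(source_rows, target_rows, rule_name):
    """Apply the named rule to the source rows and compare with the target by id."""
    transform = RULES[rule_name]
    expected = {row["id"]: transform(row) for row in source_rows}
    actual = {row["id"]: row for row in target_rows}
    return expected == actual


# Hypothetical sample records; the last case models a migration that skipped the rule.
CASES = [
    {
        "rule": "Uppercase email",
        "source": [{"id": 1, "email": "ann@example.com"}],
        "target": [{"id": 1, "email": "ANN@EXAMPLE.COM"}],
        "expected_match": True,
    },
    {
        "rule": "Merge city/state",
        "source": [{"id": 7, "city": "Austin", "state": "TX"}],
        "target": [{"id": 7, "location": "Austin, TX"}],
        "expected_match": True,
    },
    {
        "rule": "Uppercase email",
        "source": [{"id": 2, "email": "bob@example.com"}],
        "target": [{"id": 2, "email": "bob@example.com"}],
        "expected_match": False,
    },
]

etl_results = [
    migration_matches(c["source"], c["target"], c["rule"]) == c["expected_match"]
    for c in CASES
]
print(etl_results)
```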

Use Case 11: Insurance, Healthcare, and Policy Testing

In domains such as insurance or health care, the number of input parameters (e.g., patient information, policy types, and premium calculations) is very large. Data-driven testing accommodates these large volumes of test data. It verifies that sophisticated business logic and computational models deliver consistent results across all data combinations, avoiding expensive errors in mission-critical environments.

Example Data Set:

| Policy Type | Age | Health Condition | Expected Premium |

|---|---|---|---|

| Life | 25 | Healthy | $100 |

| Health | 45 | Diabetes | $250 |

| Auto | 30 | Accident History | $300 |

By combining these datasets, testers ensure systems compute premiums, co-pays, or reimbursements correctly under different profiles.

Benefits of Data-Driven Testing in Modern QA Pipelines

Efficiency, flexibility, and scalability are key in today’s QA pipelines. Data-driven testing (DDT) supports these goals by letting teams run the same test logic against multiple datasets, covering more ground with less repetition. It keeps QA engineers flexible without bogging them down in frequent script changes.

- Scalability: Teams can scale test coverage simply by adding new datasets. New test cases can be added without writing new scripts or copying existing ones.

- Maintainability: Test cases are kept separate from data, so data can be updated or extended without changing any test logic. This decoupling reduces script maintenance and leaves room to adapt as requirements change.

- Consistency: Each test iteration follows the same predefined logic, so validation is consistent across datasets. This consistency makes results more reliable and removes the variation introduced by manual execution.

- Efficiency: Reusing the same script for multiple datasets removes redundancy and shortens test time. This greatly decreases the time spent on regression and functional testing cycles.

- Traceability: Every data record is tied to a particular test iteration, so you can identify the exact data state and conditions that resulted in a failure. This enables accurate debugging and speeds up defect fixing.

- Traceability: Every data record is tied to a particular test iteration, ensuring you can identify the exact data state and conditions that resulted in a failure. It enables accurate debugging and accelerates the process of fixing defects.

In essence, DDT transforms how testing scales in agile organizations that demand rapid feedback and constant deployment confidence.

testRigor operationalizes data-driven testing by allowing teams to create data-driven tables and iterate over complex datasets, all without writing a single line of code. This approach reduces maintenance overhead and ensures comprehensive test coverage across diverse environments. Read more about How to do data-driven testing in testRigor (using testRigor UI).

Conclusion

Data-driven testing has changed how QA teams approach automation, emphasizing flexibility, scalability, and coverage of real-world scenarios. It separates data from logic, allowing testers to cover a wide range of situations without additional scripting effort. When paired with no-code solutions such as testRigor, teams can roll out large numbers of data iterations quickly while keeping the automation suite clean, which leads to faster and more reliable releases. Put simply, DDT enables continuous validation of next-generation, data-intensive applications while keeping automation simple and adaptable.
