ELC 2025 Takeaways: Getting Value from Using AI for Code Generation


Artificial Intelligence has become the buzzword of every engineering conference, boardroom meeting, and product roadmap. But at this year’s ELC Annual Conference, the conversation took a refreshingly practical turn. Led by Artem Golubev, Co-founder & CEO of testRigor, the roundtable “Getting Value from Using AI for Code Generation” brought together engineering leaders who’ve lived the reality, which includes the good, the frustrating, and the transformative.

The central question discussed was: “Are we actually building better software with AI or just building faster chaos?”


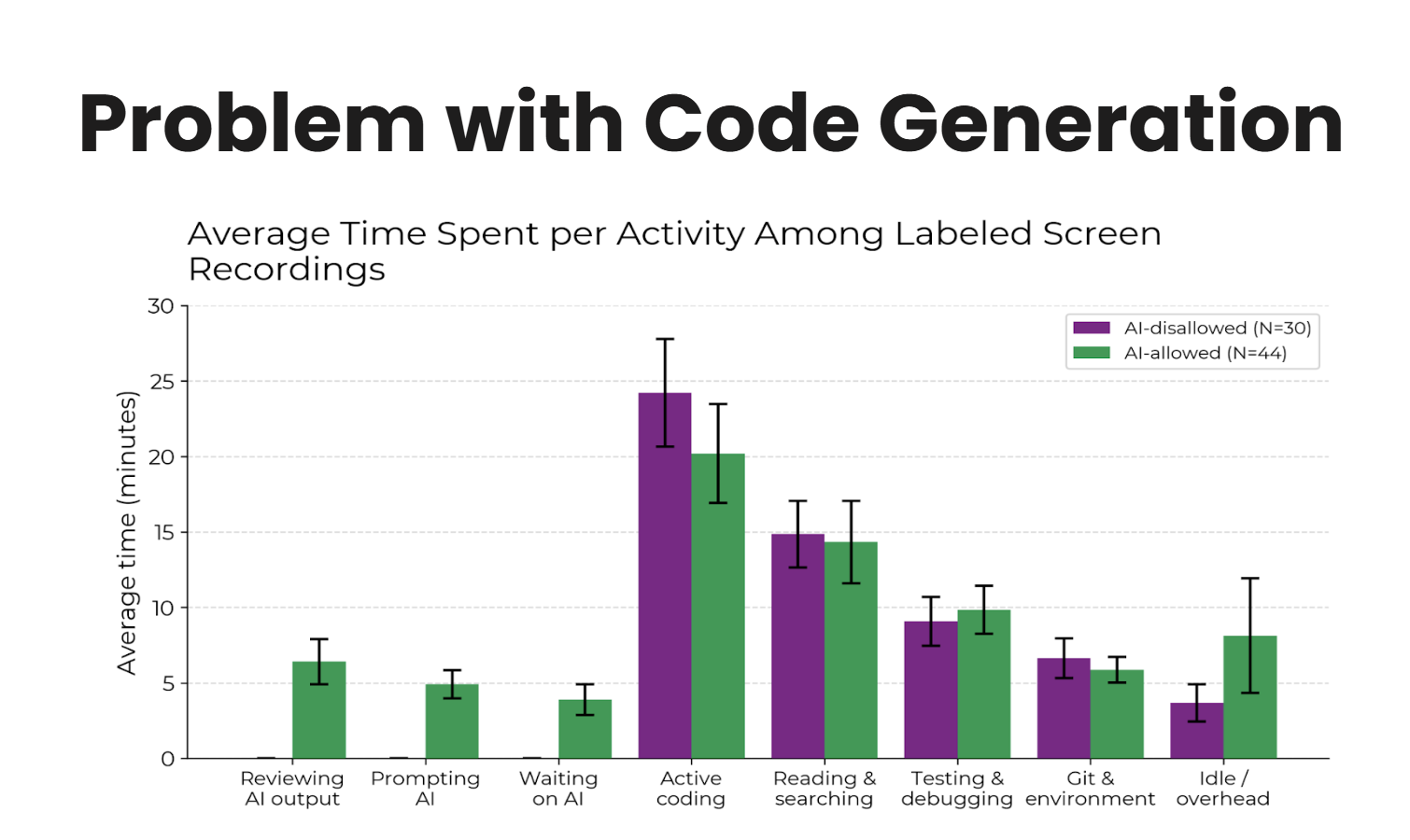

Why Faster is not Always Better

The promise of AI-generated code boosting productivity by 10x sounds exciting, but reality tells a different story. Developers expect AI to speed them up, yet in a study by Model Evaluation and Threat Research (METR) it actually slowed them down by 19%. Engineering leaders at the roundtable reported that the real productivity gain is around 10–20%, and that it sometimes dips into negative numbers during adoption.

So, why is that?

Because AI writes code fast, but it doesn’t guarantee correctness, clarity, or maintainability. Hallucinations are a real problem. In that sense, AI is like a brilliant intern: you go faster only if you already know exactly what you want.

Takeaway: Speed without structure is a liability, not a win.

Mindset Shift to We Own the Quality

The session also addressed an ever-present problem: accountability gaps between development and QA. Several leaders shared how they broke down silos by introducing shared ownership models. One example is on-call rotations for production incidents. Once developers become responsible for broken tests, their priorities shift from “Did it ship?” to “Does it actually work?” At testRigor, we’ve seen this shift firsthand. Teams that adopt shared quality ownership consistently do better than those that treat QA as just a checkpoint. Read: How to use AI effectively in QA.

Takeaway: If you don’t own outcomes, you’re just shipping artifacts.

Why Good Prompts Start with Great Requirements

The biggest takeaway from the discussion was this: AI doesn’t eliminate the need for good requirements. Rather, it magnifies their importance. When teams feed vague prompts to AI, the output mirrors exactly that. But when they define detailed acceptance criteria, AI tools like Copilot or Cursor deliver more reliable code. AI is not a magic wand. It is a mirror that reflects your clarity (or lack thereof). Read more about the role of Prompt Engineering in QA and Software Testing.

Takeaway: Prompting is only as good as your planning.

Why Tests Bring True AI Efficiency

Artem made a point that resonates deeply with our philosophy at testRigor: the true multiplier isn’t AI, it’s testing. The modern SDLC process is fundamentally broken. Despite great advancements in tooling, teams still struggle with issues that eat into engineering time and slow delivery. Engineers are often pulled into non-engineering tasks, which leaves less time for actual innovation. What is even worse? Unclear or misunderstood specs often lead to costly rework. Teams are forced to rebuild features that should have worked right the first time.

Automated testing, powered by natural language tests, transforms validation. When teams start with clear acceptance criteria, as in acceptance test-driven development (ATDD), AI can actually perform at its best. In ATDD, the tests come first and the code is written to make them pass. The result is code that is in sync with user intent and business outcomes. But again, you cannot achieve success with tooling alone. The real challenge is cultural. Most engineers still treat testing as a side quest rather than a priority.
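To make the ATDD idea concrete, here is a minimal Python sketch. The feature (an order discount), the function name `order_total`, and the three acceptance criteria are all invented for illustration; they are not from the roundtable or from testRigor. The point is only the order of work: the criteria become tests first, and the implementation, whether written by a person or an AI tool, is accepted only once those tests pass.

```python
# Hypothetical acceptance criteria for an illustrative "order discount" feature:
#   1. Orders of 100.00 or more get 10% off.
#   2. Orders below 100.00 are charged in full.
#   3. A negative subtotal is rejected.


def order_total(subtotal: float) -> float:
    """Implementation written after the tests, with the goal of making them pass."""
    if subtotal < 0:
        raise ValueError("subtotal must not be negative")
    if subtotal >= 100.0:
        return round(subtotal * 0.9, 2)
    return round(subtotal, 2)


# Each test maps to exactly one acceptance criterion above.
def test_discount_applies_at_threshold():
    assert order_total(100.0) == 90.0


def test_no_discount_below_threshold():
    assert order_total(99.99) == 99.99


def test_negative_subtotal_is_rejected():
    try:
        order_total(-1.0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative subtotal")


if __name__ == "__main__":
    test_discount_applies_at_threshold()
    test_no_discount_below_threshold()
    test_negative_subtotal_is_rejected()
    print("all acceptance tests passed")
```

Because every test traces back to one criterion, a failing test points at a specific requirement rather than at a vague sense that the generated code "looks off".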

Takeaway: Without tests, AI simply amplifies your mistakes.

Developers are now Reading, NOT Writing

When using AI for code generation, developers’ focus is shifting from writing code to reviewing and validating it. Many leaders now treat AI output like a pull request from a junior teammate: they review it carefully, merge only what seems correct, and refactor it for readability. As mentioned earlier, this mindset shift isn’t just tactical, it’s cultural. It redefines engineering excellence around critical thinking, validation, and maintainability.

Takeaway: Developers are becoming curators rather than creators.

Is Smart Code a Silent Risk?

AI’s code often looks smart, but sometimes, that’s the actual problem. Several attendees warned of AI-generated logic that’s over-engineered, abstracted beyond reason, or inconsistent with existing standards. Great engineering isn’t about sophistication, it’s about clarity and reliability.

Takeaway: Your code can be boring, but bulletproof.

Trust but also Verify

Another recurring theme was AI’s overconfidence problem. Large language models rarely admit uncertainty. They generate ‘believable’ code, even when it’s wrong. Developers must learn to verify outputs systematically, rather than instinctively trusting them. Studies show that engineers often feel faster when using AI, yet end up slower overall because of hidden rework.
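One way to verify systematically rather than by eye is a differential check: run the generated function and a slow but obviously correct reference on many random inputs and look for any disagreement. The sketch below is an illustration only. The function names are hypothetical, and `generated_median` is a hand-written stand-in for plausible-looking AI output with a deliberate bug.

```python
import random


def reference_median(values):
    """Slow but easy-to-trust reference: sort, then take the middle."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def generated_median(values):
    """Stand-in for AI-generated code: reads fine, but mishandles even-length input."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def find_counterexample(candidate, reference, trials=1000, seed=0):
    """Return the first random input where the two functions disagree, else None."""
    rng = random.Random(seed)  # fixed seed keeps any failure reproducible
    for _ in range(trials):
        data = [rng.randint(-50, 50) for _ in range(rng.randint(1, 8))]
        if candidate(data) != reference(data):
            return data
    return None


if __name__ == "__main__":
    print("counterexample:", find_counterexample(generated_median, reference_median))
```

A check like this does not prove the generated code correct, but it replaces "it looks right" with a concrete failing input that can be turned into a regression test.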

Confidence ≠ Correctness.

Read: Top 10 OWASP for LLMs: How to Test?

Takeaway: Confidence must always be grounded in verification through testing, reviews, and accountability.

A Reality Check of AI Engineering

Participants agreed that AI code generation will follow the familiar path of past test automation waves. It will go through consolidation, maturity, and then realism. Its near-term value lies not in replacing developers but in enhancing discipline, clarity, and testing efficiency. As AI tools continue to evolve, one thing remains constant: clarity, testing, and culture will always be the foundation of sustainable innovation. If your team is exploring AI code generation, start by strengthening your test coverage and requirement clarity because that’s where true productivity begins. Read: Gartner Hype-Cycle for AI 2025: What the Future Holds in 2026?

At testRigor, we believe the future of software engineering lies in synergy between AI and automation testing. Our platform already helps teams express complex test scenarios in plain English, ensuring that AI-generated code doesn’t outpace validation. Read: All-Inclusive Guide to Test Case Creation in testRigor. We performed a case study to test the success of this approach:

Step 1: The Product Manager created a detailed PRD

Step 2: QA interns created detailed test cases to match the PRD (with AI help, of course)

Step 3: QA interns generated code until it passed the test(s)

Step 4: The Engineer provided a code review, which was then addressed by AI

Step 5: Released in PRODUCTION!!! (with minimal rework and maximum clarity)

Result: We saved around 45% of the time spent on simple tickets and on addressing code reviews. This sped up overall delivery time by 20%, and we are still improving.
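Steps 2 and 3 of the case study amount to a loop: keep generating code until a candidate passes every test derived from the PRD, and hand the ticket to a person if none does within a small budget. The Python sketch below shows one possible shape for that loop; it is not the tooling used in the case study. The PRD line, the test cases, and the three lambda "attempts" are hypothetical stand-ins for what a code-generation tool might return on successive tries.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Candidate = Callable[[str], str]
Case = Tuple[str, str]  # (input, expected output)


def passes_all(candidate: Candidate, cases: List[Case]) -> bool:
    """A candidate is accepted only if every case passes; a crash counts as a failure."""
    for raw, expected in cases:
        try:
            if candidate(raw) != expected:
                return False
        except Exception:
            return False
    return True


def first_passing(
    candidates: Iterable[Candidate], cases: List[Case], max_attempts: int = 5
) -> Optional[Candidate]:
    """Try candidates in order; stop at the first that passes or when the budget runs out."""
    for attempt, candidate in enumerate(candidates, start=1):
        if attempt > max_attempts:
            break
        if passes_all(candidate, cases):
            return candidate
    return None  # no candidate passed: escalate to a human


# Cases derived from a hypothetical PRD line: "usernames are trimmed and lowercased".
CASES = [("  Alice ", "alice"), ("BOB", "bob"), ("carol", "carol")]

# Stand-ins for successive generation attempts.
ATTEMPTS = [
    lambda s: s.lower(),          # forgets to trim
    lambda s: s.strip(),          # forgets to lowercase
    lambda s: s.strip().lower(),  # satisfies every case
]

accepted = first_passing(ATTEMPTS, CASES)
```

The attempt budget matters in a loop like this: without it, a vague or contradictory PRD can keep the generator retrying indefinitely instead of surfacing the requirements problem.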

Takeaway: AI is the new compiler. It won’t write your system, but it will penalize bad design more quickly.

Conclusion

The biggest insight from the ELC discussion wasn’t about AI at all: It was about people.

AI magnifies whatever your engineering culture is built on. If your team values testing, clarity, and ownership, AI accelerates progress. If not, it amplifies noise and risk. AI isn’t replacing developers. It is revealing how disciplined your engineering culture really is.
