Types of AI and Their Usage


AI has evolved from a research dream into the heartbeat of ongoing digital transformation. It is the technology behind self-driving cars, chatbots, recommendation systems, fraud detection models, medical diagnostics, and countless other applications across industries. But beneath the single word "AI" lies a stack of technologies, each with its own architecture, capabilities, and use cases.

AI fluency is a necessity not only for engineers but also for business decision-makers, QA strategists, and architects of intelligent systems. AI isn't one thing; it is a range of disciplines, including Machine Learning (ML), Deep Learning, Natural Language Processing (NLP), Generative AI, Neural Networks, Computer Vision, and Cognitive Computing. Each discipline addresses its own set of problems and use cases, although they frequently overlap.


The Evolution of Artificial Intelligence

Before examining the different types, it is worth tracing how AI evolved: from rule-based reasoning to adaptive and, eventually, generative intelligence.

The Symbolic Era (1950s-1980s)

The earliest AI systems relied on symbolic logic: human knowledge represented as explicit rules. Expert systems such as MYCIN (medical diagnosis) encoded that knowledge as if-then rules.

- Benefit: This approach ensures that outcomes remain consistent and easy to interpret. Since the system follows clear, rule-based logic, users can anticipate the same results given the same inputs, making the process transparent and reliable.

- Limitation: A major drawback is its rigidity when faced with uncertainty or imperfect information. The system struggles to make sense of vague, noisy, or missing data, leading to errors or the inability to produce meaningful outcomes in such situations.
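The if-then style of these early expert systems can be sketched in a few lines of Python. This is a minimal forward-chaining sketch; the rules and fact names are hypothetical and are not taken from MYCIN, which used a much larger rule base with certainty factors.

```python
# Hypothetical rules for illustration only; these are NOT MYCIN's actual rules.
# Each rule: (set of facts that must all be known, fact to conclude).
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "chest_pain"}, "refer_to_specialist"),
    ({"rash"}, "skin_condition"),
]

def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only when every one of its conditions is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

derived = forward_chain({"fever", "cough", "chest_pain"}, RULES)
print(sorted(derived - {"fever", "cough", "chest_pain"}))
# ['refer_to_specialist', 'respiratory_infection']

# Rigidity: a differently worded fact matches no rule, so nothing is derived.
print(sorted(forward_chain({"high_temperature", "cough"}, RULES)))
# ['cough', 'high_temperature']
```

The same facts always produce the same conclusions (the consistency benefit), but an input phrased differently, such as `high_temperature` instead of `fever`, matches no rule at all (the rigidity limitation).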

The Statistical and Machine Learning Era (1990s-2010s)

As computing power increased, AI moved away from rule-based systems toward data-driven learning. Instead of following fixed logic, systems learned patterns from data.

- Machine Learning introduced algorithms that find hidden patterns and relationships in data and use them to make predictions. By learning from large datasets, these algorithms let systems make intelligent decisions and predictions without explicitly programmed rules.

- Deep Learning transformed AI with multi-layered neural networks, loosely inspired by the human brain, that can process images, text, and speech. This progress enabled machines to interpret unstructured data, including images, natural language, and audio, with unprecedented accuracy.

The Generative and Cognitive Era (2010s-Present)

AI has now moved beyond classification and prediction: it generates content, reasons about context, and communicates.

- Generative AI focuses on producing entirely new content by learning from large amounts of data. It can write convincing text and code, compose music, and create lifelike images, simulating aspects of human creativity with the machine acting as a co-creator.

- Cognitive AI goes beyond pattern recognition by adding logic, perspective, and situational understanding. This allows it to understand context, interpret intent, and act in ways that closely mimic human reasoning and problem-solving.

The current AI ecosystem is therefore a collection of interacting paradigms, each representing a step in this evolution.

The Hierarchy of AI Types

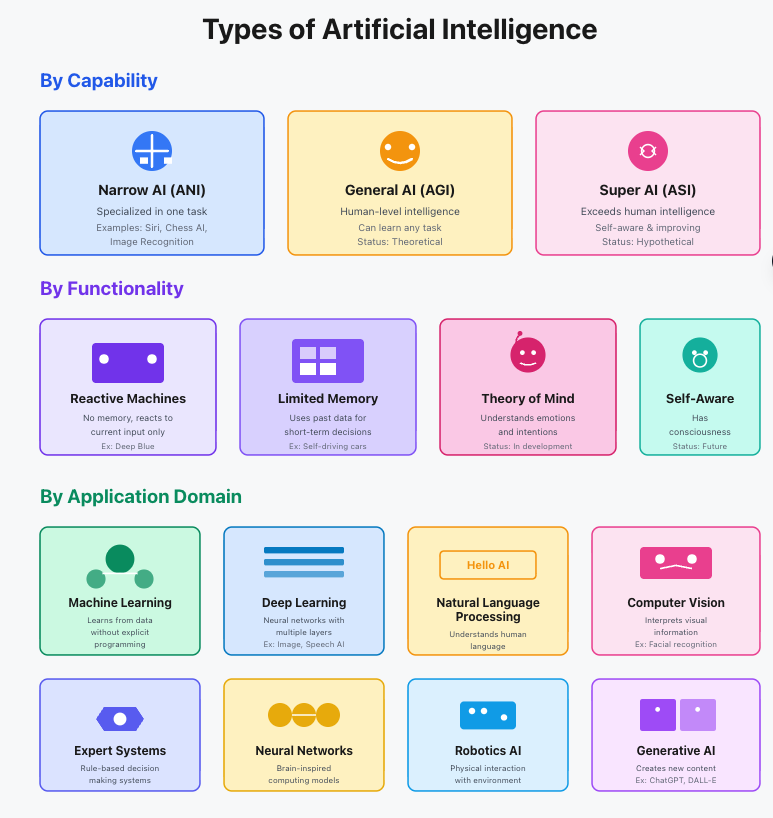

AI can generally be classified along two dimensions: capability (how intelligent it is) and function (what it does). Read more about Test Automation with AI.

Capability-based Classification

AI can be classified by its degree of intelligence and the range of tasks it can perform. This distinguishes systems that perform specific tasks, often extremely well, from systems that aim to replicate or even exceed human cognitive abilities. The taxonomy is a useful tool for making sense of the current state and future of AI.

| Type | Description | Example |
|---|---|---|
| Narrow AI (Weak AI) | Built to perform a specific task or operate in a specific domain. | Chatbots, recommendation systems. |
| General AI (Strong AI) | Hypothetical AI capable of performing any cognitive task a human can. | Still under research. |
| Superintelligent AI | Hypothetical AI that exceeds human intelligence and can reason and innovate independently. | Theoretical future stage. |

Virtually all AI systems in use today are Narrow AI, optimized for specific tasks. Within those boundaries, however, their capabilities can be profound and transformative.

Function-based Classification

AI can also be classified by function, that is, by the kind of problem a system solves or the capability it provides. The main functional types are:

- Machine Learning (ML)

- Deep Learning (DL)

- Natural Language Processing (NLP)

- Generative AI

- Neural Networks

- Computer Vision

- Cognitive Computing

Let’s explore these in depth.

Machine Learning (ML)

Machine Learning is the science of enabling computers to learn without being explicitly programmed. Instead of following a fixed set of rules, an ML model learns patterns and relationships from input data and uses them to make predictions or decisions on new data. During training, ML algorithms adjust their internal parameters to minimize an error (loss) function, which is how they learn and improve over time.
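To make "minimizing an error function" concrete, here is a minimal supervised-learning sketch that fits a straight line to made-up house data using plain gradient descent on mean squared error. The data, learning rate, and epoch count are illustrative assumptions; real projects would typically use a library such as scikit-learn.

```python
# Toy, made-up data: house size (hundreds of m²) -> price (hundreds of thousands).
sizes = [1.0, 2.0, 3.0, 4.0]
prices = [3.0, 5.0, 7.0, 9.0]

def train_linear_model(xs, ys, lr=0.05, epochs=2000):
    """Fit price = w * size + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # internal parameters, initially uninformed
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of MSE = mean(error^2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train_linear_model(sizes, prices)
print(f"w={w:.3f}, b={b:.3f}")  # approaches w=2.000, b=1.000
print(f"predicted price for size 5: {w * 5 + b:.2f}")
```

Nothing in the code states the rule "price = 2 × size + 1"; the parameters are learned from the examples by repeatedly reducing the error.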

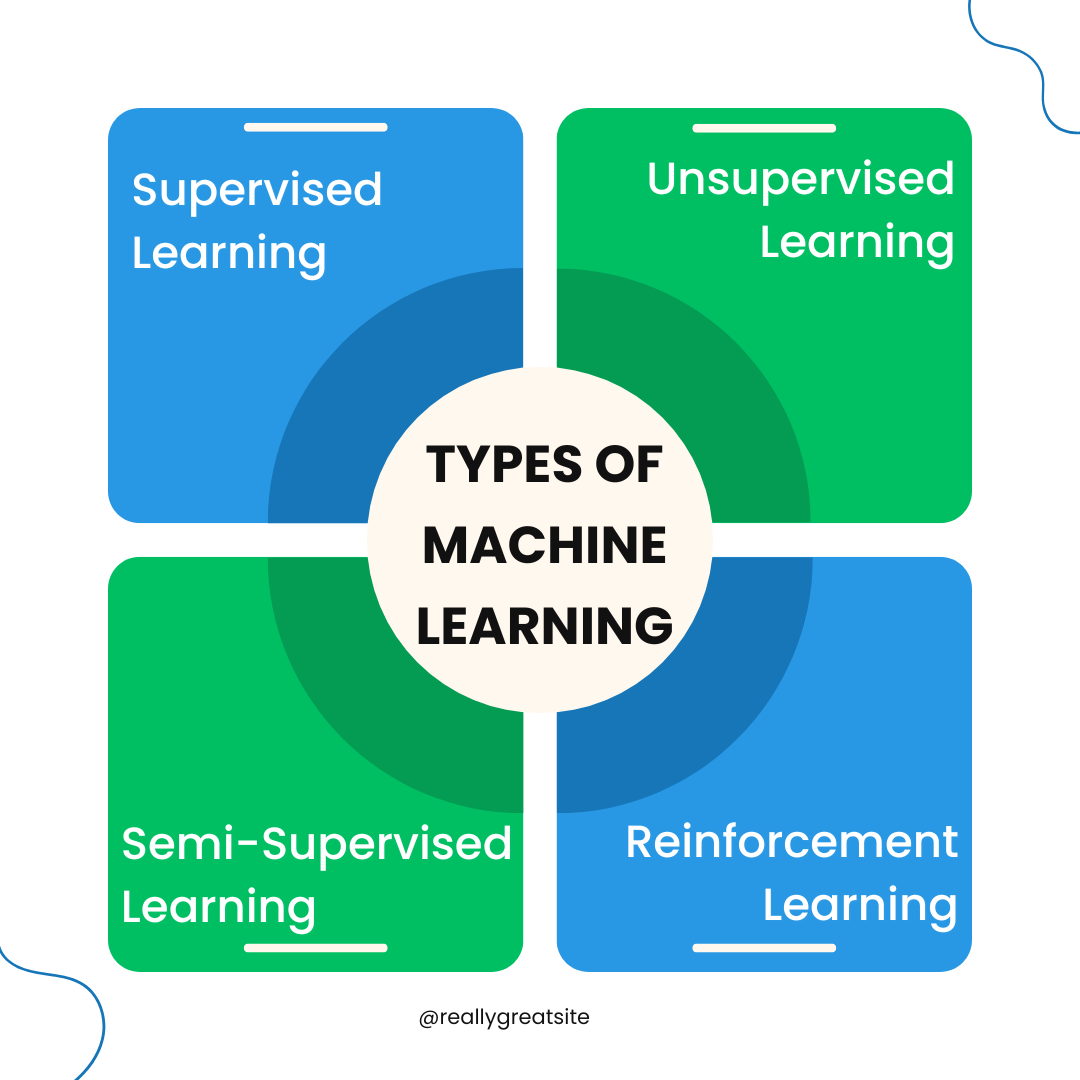

Types of Machine Learning

- Supervised Learning: The model is trained with labeled datasets in which inputs and outputs are known, allowing it to predict responses for new data.

- Example: Predicting house prices based on features like size, location, and number of rooms.

- Unsupervised Learning: The model discovers hidden patterns or clusters in datasets that have no known outputs.

- Example: Segmenting customers into clusters of buyers with similar behavior.

- Semi-Supervised Learning: The model is trained on a small amount of labeled data combined with a large amount of unlabeled data to improve accuracy.

- Example: Detecting fraudulent transactions when only a few transactions have been labeled as fraud.

- Reinforcement Learning: The model learns from experience, getting rewards for good actions and penalties for bad ones.

- Example: Teaching robots to walk, or training AI agents to play chess, by maximizing cumulative reward.
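Unsupervised customer segmentation is often done with k-means clustering. The sketch below is a minimal, dependency-free version under simplifying assumptions: two made-up features per customer (monthly spend and visits per month), a fixed number of iterations, and the first k points used as initial centroids (production implementations usually use smarter initialization such as k-means++). It needs Python 3.8+ for `math.dist`.

```python
import math

def kmeans(points, k, iterations=10):
    """Minimal k-means: assign each point to its nearest centroid, then move centroids."""
    # Simplifying assumption: use the first k points as the initial centroids.
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster is empty
                # New centroid = mean of each feature across the cluster's members.
                centroids[i] = [sum(values) / len(members) for values in zip(*members)]
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Made-up customers: (monthly spend, visits per month). No labels are given.
customers = [(20, 2), (210, 14), (25, 3), (200, 15), (30, 1), (190, 12)]
centroids, labels = kmeans(customers, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The algorithm finds the low-spend and high-spend segments on its own. In practice, features are usually scaled first so that one feature (here, spend) does not dominate the distance.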

Usage of Machine Learning

Most recent AI applications use Machine Learning (ML) to process data, learn patterns, and make decisions.

- Predictive Financial and Health Analytics: Machine learning models forecast stock market trends or patients' risk of disease.

- E-commerce and Streaming Recommendations: Amazon or Netflix, for example, recommend products and shows based on your previous picks.

- Email Spam Filtering and Anomaly Detection: ML algorithms identify outlier data points; email spam filters are a well-known example.

- Credit Scoring and Churn Reduction: Banks use ML to assess a client's creditworthiness, and companies use it to identify customers who are likely to stop using their services.
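Spam filtering is a classic supervised classification task. One well-known technique for it is naive Bayes; the sketch below assumes whitespace tokenization, add-one (Laplace) smoothing, and a tiny made-up training set, so it illustrates the idea rather than a production filter.

```python
import math
from collections import Counter

def train_naive_bayes(messages):
    """messages: list of (text, label) pairs, where label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in messages:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab

def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: how common this class is in the training data.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so an unseen word does not zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

training = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team tomorrow", "ham"),
]
model = train_naive_bayes(training)
print(classify("free prize money", model))       # spam
print(classify("team meeting tomorrow", model))  # ham
```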

ML is the computational foundation of contemporary AI, transforming raw data into actionable knowledge. Read more about Machine Learning to Predict Test Failures.

Deep Learning (DL)

Deep Learning (DL) is a subset of ML that uses multi-layered neural networks to model complex, hierarchical patterns in data. Each layer builds higher-level features from the output of the layer below it, so the network discovers useful features automatically instead of relying on hand-crafted ones. Deep models typically need large amounts of data to make robust predictions and decisions. Typical feature hierarchies are:

- Pixels to edges to shapes to objects (image recognition).

- Letters to words to sentences to meaning (language processing).

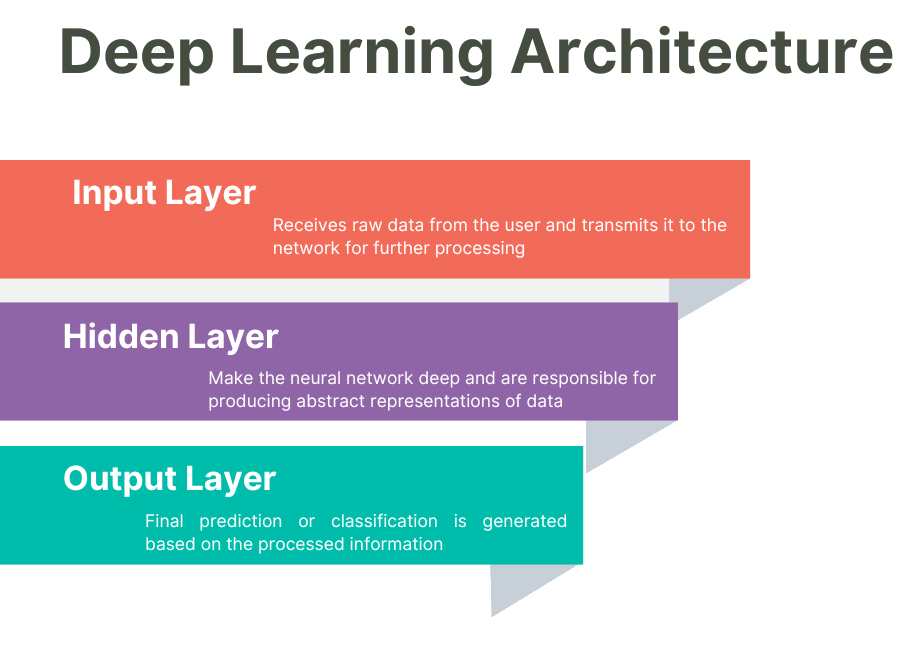

Architecture and Mechanics

Deep Learning is built on Artificial Neural Networks (ANNs), which are loosely inspired by the structure and function of biological neurons in the human brain. These networks consist of interconnected nodes (neurons) that pass data through successive layers of computation, enabling machines to learn patterns and associations from large amounts of data.

- Input Layer: Receives the raw data, such as the pixel values of an image, and passes it into the network for processing. The data must first be encoded as numeric values that the model can work with.

- Hidden Layers: These layers make the network "deep" and produce increasingly abstract representations of the data, such as edges, textures, or particular shapes in an image. The number of hidden layers (the network's depth) strongly affects how well a model can capture complex relationships, which is why deeper networks can tackle more complex problems.

- Output Layer: Produces the final prediction or classification from the processed information (e.g., whether an image shows a cat or a dog). It turns the learned features into the network's final numerical output, which is then interpreted as a human-understandable result.

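Training adjusts the network's weights to reduce its prediction error: backpropagation computes how much each weight contributed to the error (its gradient), and gradient descent uses those gradients to update the weights.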
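The sketch below shows the three layer types and the training loop described above in plain Python: a tiny network with 2 inputs, 3 hidden neurons, and 1 output learns the logical OR function. The layer sizes, learning rate, epoch count, and use of sigmoid activations with a cross-entropy-style output error are illustrative assumptions; real deep learning work relies on frameworks such as PyTorch or TensorFlow, which compute gradients automatically.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

random.seed(0)
# 2 inputs -> 3 hidden neurons -> 1 output neuron, small random initial weights.
N_IN, N_HIDDEN = 2, 3
w_hidden = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b_hidden = [0.0] * N_HIDDEN
w_out = [random.uniform(-1, 1) for _ in range(N_HIDDEN)]
b_out = 0.0

def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

# Training data: logical OR.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
lr = 0.5
for epoch in range(5000):
    for x, y in data:
        hidden, out = forward(x)
        # Backpropagation: with a sigmoid output and cross-entropy loss,
        # the error signal at the output neuron is simply (out - y).
        delta_out = out - y
        # Propagate the error back through w_out to each hidden neuron.
        delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                        for j in range(N_HIDDEN)]
        # Gradient descent: move every weight against its gradient.
        for j in range(N_HIDDEN):
            w_out[j] -= lr * delta_out * hidden[j]
            for i in range(N_IN):
                w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_hidden[j] -= lr * delta_hidden[j]
        b_out -= lr * delta_out

for x, y in data:
    print(x, "target:", y, "prediction:", round(forward(x)[1], 3))
```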

Usage of Deep Learning

Deep Learning drives:

- Speech recognition in voice assistants such as Alexa and Siri.

- Biometric authentication and facial recognition.

- Healthcare diagnostic image classification.

- Natural language generation (GPT-based systems).

- Self-driving car perception systems.

Deep Learning allows machines to interpret complex, unstructured data and forms the basis of more advanced AI fields such as Generative AI and NLP.

Neural Networks

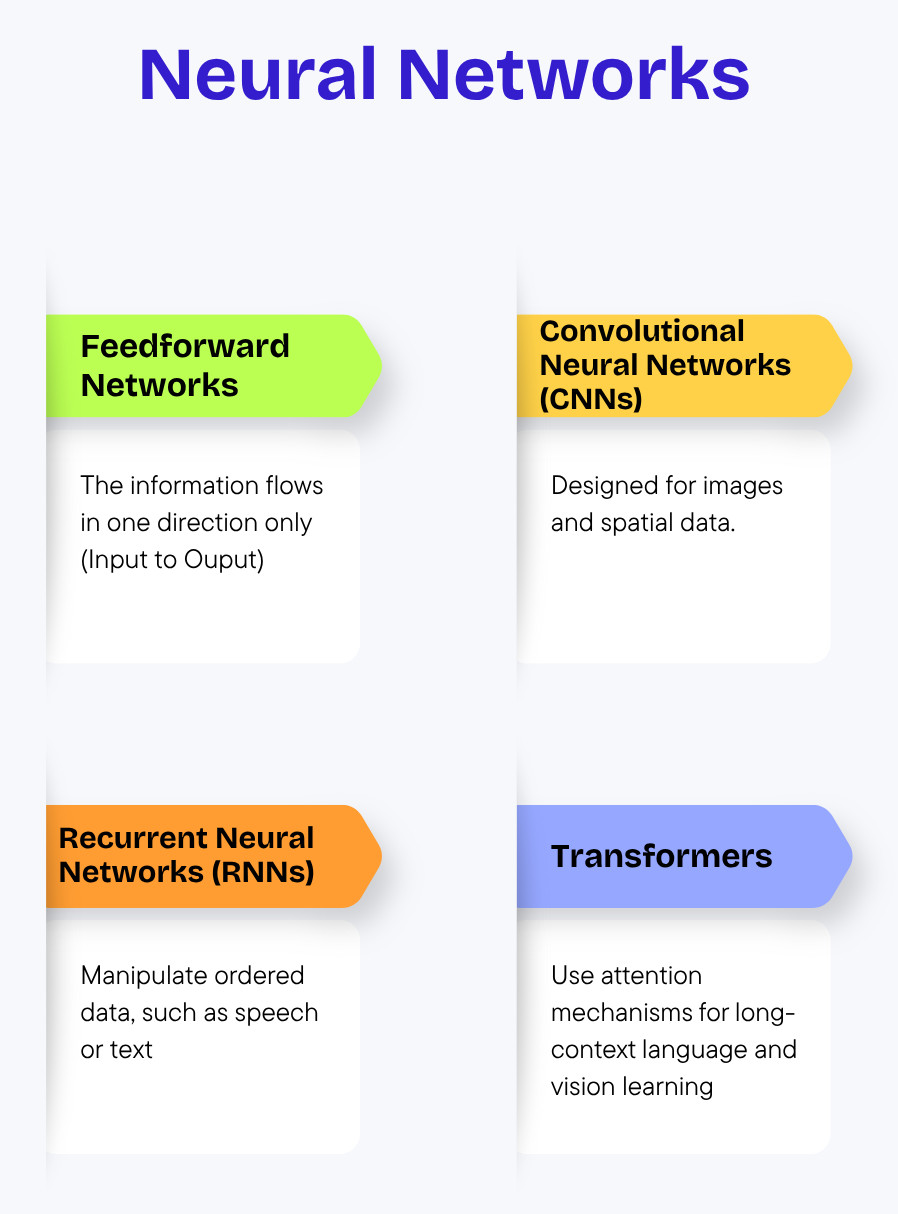

Neural Networks are computing systems whose design is inspired by the human brain. They consist of nodes (neurons) connected by weighted links that collectively process information. Neural networks power much of modern Artificial Intelligence. Read more about What is a Neural Network? The Ultimate Guide for Beginners.

Variants include:

- Feedforward Networks: Information flows in one direction only, from input to output. These are the simplest neural networks and are widely used for classification and regression on fixed-size inputs such as tabular data.

- Convolutional Neural Networks (CNNs): Designed for images and other spatial data. Their convolutional layers automatically learn local patterns such as edges, textures, and object parts, which makes them a mainstay of computer vision.

- Recurrent Neural Networks (RNNs): Process sequential data, such as speech or text. They maintain a memory of prior inputs, which lets them capture temporal dependencies.

- Transformers: Use attention mechanisms to model long-range context in language and vision. They process all elements of a sequence in parallel, learn global dependencies between them, and underpin models such as GPT and BERT.
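The core operation of a CNN layer is sliding a small kernel over the image and computing a weighted sum at each position. In this minimal sketch the kernel values are hand-picked to respond to a vertical dark-to-bright edge; in a real CNN they would be learned during training, and many kernels would run in parallel.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (technically cross-correlation),
    with no padding and a stride of 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = sum(kernel[i][j] * image[r + i][c + j]
                        for i in range(kh) for j in range(kw))
            row.append(total)
        output.append(row)
    return output

# A 4x5 grayscale image: dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# Hand-crafted vertical-edge kernel; a CNN would learn values like these.
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)  # [0, 18, 0, 0]
```

The large value marks exactly where the dark-to-bright edge sits; flat regions produce 0.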

Usage of Neural Networks

- CNNs power visual recognition tasks, such as detecting patterns in images and surveillance video and perceiving the road in autonomous driving.

- RNNs model sequences of data for tasks such as speech-to-text, text generation, and time-series prediction.

- Transformers power models such as GPT and BERT, as well as newer AI systems that combine language with images and voice.
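The attention mechanism behind Transformers can be written as softmax(QKᵀ/√d)V: each token's query is compared with every key, the scores are normalized into weights, and the output is a weighted average of the values. This is a minimal single-head sketch on made-up 2-dimensional vectors; real Transformers learn separate projection matrices for queries, keys, and values and stack many heads and layers.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, on lists of vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three tokens with made-up 2-dimensional embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention simplified: Q, K, and V are the inputs themselves (no learned projections).
outputs, weights = attention(x, x, x)
for w in weights:
    print([round(v, 3) for v in w])
```

Every token attends to every other token in a single step, which is what lets Transformers process a sequence in parallel and capture global dependencies.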

Neural networks enable machines to approximate human perception and cognition by learning complex, nonlinear relationships that traditional algorithms cannot capture. Read Neural Networks: Benefits in Software Testing.

Natural Language Processing (NLP)

NLP enables machines to understand, process, and generate human language, bridging human communication and machine computation. Modern NLP combines linguistics, deep learning, and probabilistic models to extract meaning, context, and sentiment from text. Thanks to recent breakthroughs, NLP now powers applications such as chatbots, translation tools, and sentiment analysis with remarkable accuracy.

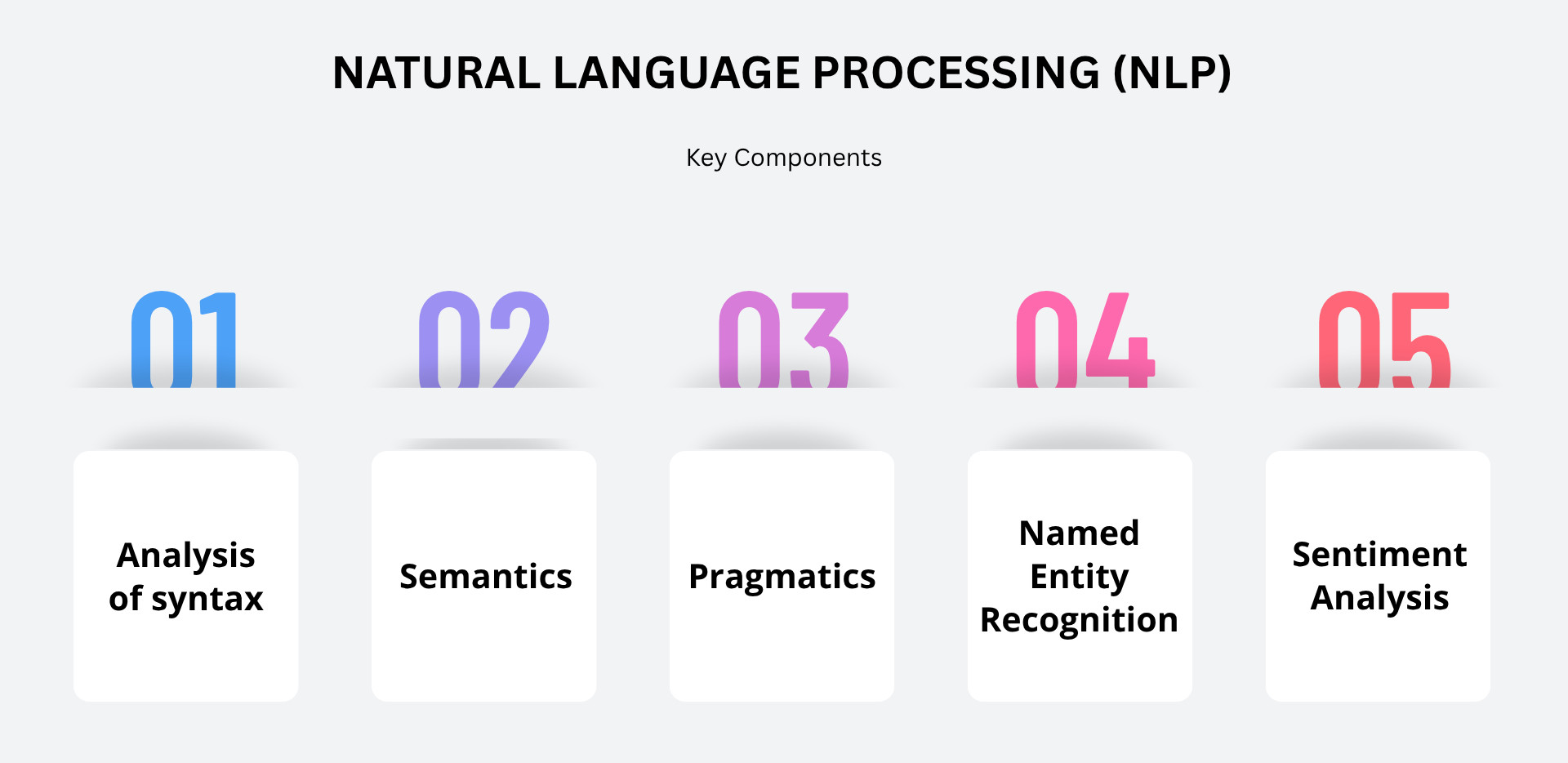

Key Components

- Syntax Analysis: Examines how words are ordered in a sentence and how they relate to one another grammatically.

- Semantics: This is where the actual meaning of words and sentences is interpreted or extracted.

- Pragmatics: Captures the context, intent, or conversational nuance that goes beyond the literal meaning.

- Named Entity Recognition (NER): Recognizes and categorizes entities such as persons, dates, organizations, and locations in text.

- Sentiment Analysis: Identifies positive, negative, or neutral sentiment expressed in a text.
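Sentiment analysis can be illustrated with a simple lexicon-based scorer. This is a deliberately simplified technique with a tiny hypothetical word list and a one-word negation rule; the modern systems described in this section instead learn sentiment from data with deep learning models.

```python
import re

# Tiny hypothetical sentiment lexicon; real systems learn these signals from data.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}

def sentiment(text):
    """Classify text as positive, negative, or neutral by summing word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Crude context handling: flip polarity after a negation ("not good").
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this product, it is great!"))  # positive
print(sentiment("The service was not good."))          # negative
print(sentiment("The package arrived on Tuesday."))    # neutral
```

The negation rule hints at why semantics and pragmatics matter: "good" alone is positive, but "not good" is not, and sarcasm or wider context would defeat a word list entirely.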

Usage of NLP

- Chatbots and virtual assistants rely on NLP to interpret user questions and deliver human-like responses instantly.

- Machine translation tools such as Google Translate use NLP to translate text from one language to another while preserving meaning and context.

- Sentiment analysis and summarization use NLP to identify the emotional tone of a text or to condense long content into a shorter version.

- In the legal and medical fields, NLP analyzes documents to extract key points and support research and decision-making.

- Voice interfaces in smart homes use NLP not only to understand spoken commands but also to hold natural conversations and complete tasks.

Current NLP systems, such as GPT-5, PaLM, and LLaMA3, are based on transformer architectures. They can capture subtle linguistic nuances and respond in a human-like manner. Read more about Natural Language Processing for Software Testing.

Generative AI

Generative AI is about creating new content, such as text, code, audio, images, and designs, rather than reproducing the data it was trained on. Its goal is not to classify or predict but to produce new artifacts. It is typically built on foundation models trained on large datasets, which learn general patterns that can then be applied to specific tasks.

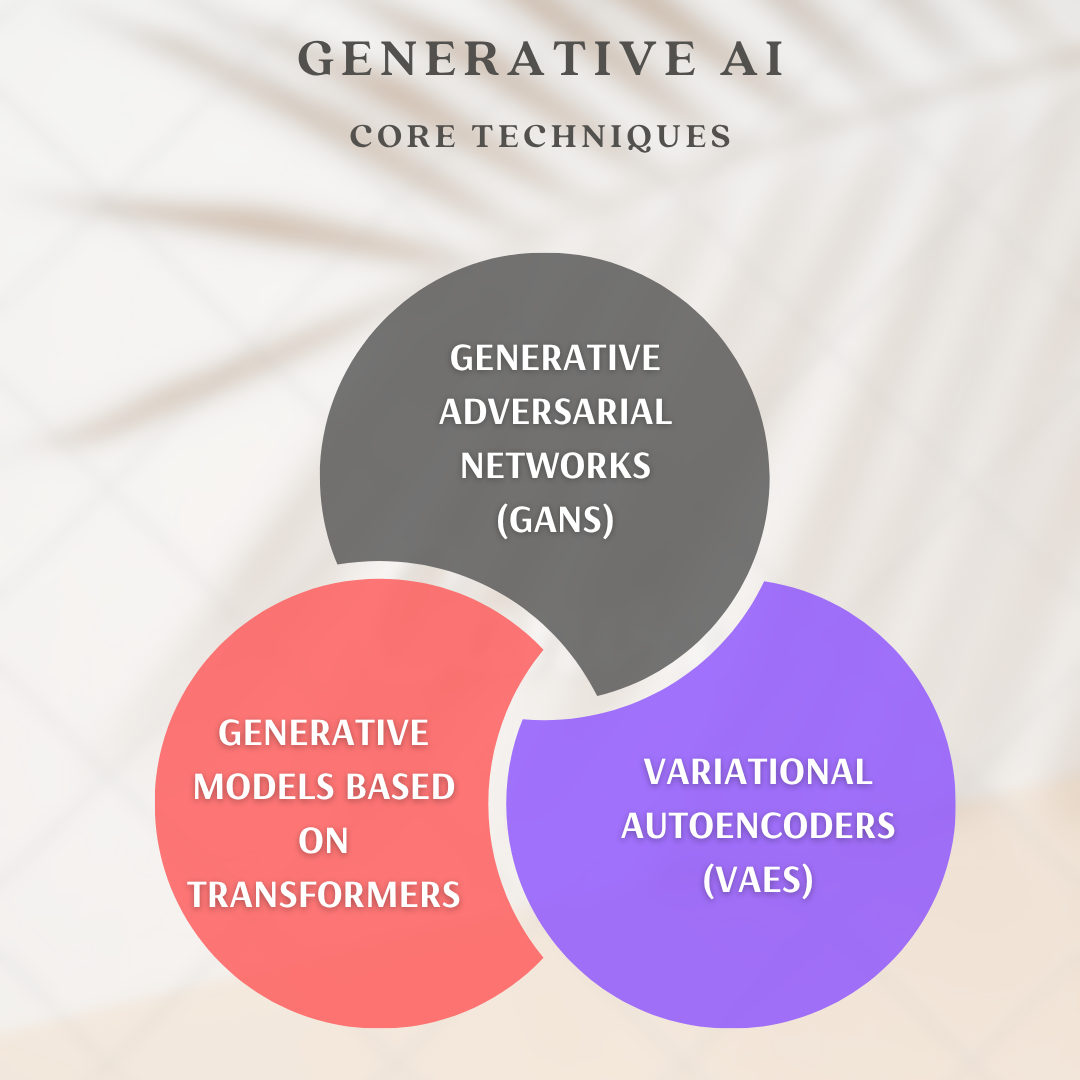

Core Techniques

- Generative Adversarial Networks (GANs): Two neural networks compete with each other: a generator produces synthetic data, and a discriminator tries to distinguish real data from generated data.

- Variational Autoencoders (VAEs): Encode data into a compressed latent representation and decode samples from that latent space to generate new data.

- Transformer-based and Other Large Generative Models: Large Language Models (LLMs) such as GPT, and image generators such as DALL·E or Midjourney.
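Transformer-based LLMs generate text autoregressively: they predict the next token from the preceding ones, append it, and repeat. The sketch below illustrates only that generation loop, using a drastically simplified stand-in: a bigram word-count model trained on three made-up sentences, with greedy decoding. It is not a GAN, VAE, or Transformer.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count how often each word follows another (a tiny statistical language model)."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(model, max_words=10):
    """Generate one word at a time, each conditioned on the previous word."""
    word, output = "<s>", []
    for _ in range(max_words):
        if not model[word]:
            break
        # Greedy decoding: always pick the most frequent next word.
        word = model[word].most_common(1)[0][0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

corpus = ["the model writes code", "the model writes tests", "the team reviews code"]
model = train_bigram_model(corpus)
print(generate(model))  # the model writes code
```

Real LLMs condition on long contexts rather than one previous word, and usually sample from the predicted distribution (with settings such as temperature) instead of always taking the top choice, which is why greedy decoding here always yields the same sentence.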

Usage of Generative AI

- Code completion, story writing, and general text generation all rely on generative models that produce coherent output on demand.

- The entertainment and advertising industries use generative AI for image and video synthesis, producing photorealistic multimedia.

- In drug discovery, AI models predict molecular interactions, helping to accelerate research.

- AI-enabled prototyping and design automation help teams develop innovative designs faster.

- In QA automation, generative AI produces synthetic test data, which helps broaden test coverage and reduces manual testing effort.

Generative AI is creative intelligence: it marks AI's transition from analysis to imagination. Read more about Generative AI in Software Testing.

Computer Vision

Computer Vision enables machines to interpret visual information, including images, videos, and 3D objects. It analyzes pixels to detect patterns and reason about spatial relationships, allowing systems to recognize objects and monitor activity in real time by "seeing" shapes, textures, and movement. The technology is critical for applications ranging from facial recognition and autonomous driving to medical image analysis.

Techniques

- Object detection and shape recognition identify objects in an image or video and segment their contours.

- Optical Character Recognition (OCR) converts printed or handwritten text in images into machine-readable text.

- 3D reconstruction and motion tracking build 3D models of a scene and follow objects across frames, for applications such as AR, VR, and robotics.

- Vision Transformers (ViTs) enable high-level scene understanding by modeling complex contexts and relationships across an entire image.
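Segmentation means grouping pixels into distinct objects. As a minimal illustration, the sketch below labels connected regions in an already-thresholded binary image using a breadth-first flood fill. This is a classical image-processing technique chosen for simplicity; modern computer vision systems typically use learned models such as CNNs or ViTs for detection and segmentation.

```python
from collections import deque

def label_objects(binary_image):
    """Label connected regions of 1-pixels (4-connectivity) using BFS flood fill."""
    rows, cols = len(binary_image), len(binary_image[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if binary_image[r][c] == 1 and labels[r][c] == 0:
                next_label += 1  # found a new, not-yet-labeled object
                labels[r][c] = next_label
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    # Visit the four direct neighbors (up, down, left, right).
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary_image[ny][nx] == 1 and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return next_label, labels

# Thresholded image: 1 = foreground pixel, 0 = background.
image = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
]
count, labels = label_objects(image)
print(count)  # 2
for row in labels:
    print(row)
```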

Usage

- In diagnostic and medical imaging, computer vision is used to find anomalies, helping doctors make accurate assessments of patients.

- Autonomous vehicles and drones use computer vision to see their environment, detect obstacles, and travel safely.

- Robotics and industrial inspection employ computer vision for defect detection, quality control, and precision automation.

- AR and surveillance systems use computer vision to detect objects, augment real-world views, and intelligently monitor their surroundings.

Computer vision bridges perception and decision-making by converting visual input into data. Read Vision AI and how testRigor uses it.

Cognitive Computing

Cognitive computing aims to replicate human reasoning, combining perception, learning, and decision-making to solve ambiguous problems. Whereas ML focuses on prediction, cognitive systems focus on understanding and reasoning. To achieve this, they combine several AI techniques:

- Machine Learning for pattern discovery.

- NLP for language understanding.

- Knowledge graphs for reasoning and inference.
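The knowledge-graph reasoning mentioned above can be sketched with facts stored as (subject, relation, object) triples and a couple of inference rules applied until no new facts appear. The triples, relation names (`is_a`, `has_property`), and rules here are hypothetical examples; real cognitive systems use much larger graphs and richer reasoning engines.

```python
# Hypothetical knowledge graph as (subject, relation, object) triples.
TRIPLES = {
    ("golden_retriever", "is_a", "dog"),
    ("dog", "is_a", "mammal"),
    ("mammal", "has_property", "warm_blooded"),
}

def infer(triples):
    """Derive new facts with two rules until nothing changes:
    1. is_a is transitive: (A is_a B) and (B is_a C) => (A is_a C)
    2. properties are inherited: (A is_a B) and (B has_property P) => (A has_property P)
    """
    facts = set(triples)
    while True:
        new = set()
        for (a, r1, b) in facts:
            if r1 != "is_a":
                continue
            for (b2, r2, c) in facts:
                if b2 == b and r2 in ("is_a", "has_property"):
                    new.add((a, r2, c))
        if new <= facts:  # no new knowledge: reasoning has converged
            return facts
        facts |= new

facts = infer(TRIPLES)
print(("golden_retriever", "has_property", "warm_blooded") in facts)  # True
```

The conclusion that a golden retriever is warm-blooded is never stored explicitly; it is reasoned from the relationships in the graph.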

Usage of Cognitive Computing

- Healthcare and financial decision-support systems.

- Contextual recommendations in customer service.

- Conversational systems that recognize user intent and emotion.

- Personalized adaptive learning platforms.

Cognitive computing blurs the line between artificial and natural thought, turning AI from a reactive tool into a proactive partner.

The Interconnected Ecosystem of AI Types

No field of AI stands alone. Today's intelligent systems integrate several types of AI to deliver holistic performance. For example:

- Self-driving vehicles combine Computer Vision (object recognition), Machine Learning (forecasting), and Reinforcement Learning (decision-making).

- Virtual assistants combine NLP (understanding), Speech Recognition (listening), and Cognitive AI (context reasoning).

- Healthcare diagnostics combine Deep Learning (image classification) and Knowledge Graphs (clinical reasoning).

This convergence points to the future of AI: multimodal intelligence, in which models process text, vision, sound, and action simultaneously. Read more about Cognitive Computing in Test Automation.

The Strategic Value of Understanding AI Types

Understanding the differences between types of AI is not merely an academic exercise; it is a strategic one.

- Engineers: It informs architectural design and algorithm selection.

- QA Leaders: It defines the scope of testing, data requirements, and success metrics.

- Business Executives: It clarifies AI's potential impact, constraints, and ROI.

Companies that understand how these types of AI complement one another will be able to innovate more and scale more responsibly. For instance:

- Intelligent Automation: NLP + Generative AI.

- Robotics: Computer Vision + Reinforcement Learning.

- Strategic Decision Systems: Cognitive AI + ML analytics.

Knowledge of AI typologies, therefore, is knowledge of digital evolution itself.

Conclusion

Artificial Intelligence is a constellation of connected fields that allow machines to replicate different forms of perception, learning, and creation. Together, these fields (Machine Learning, Deep Learning, Neural Networks, NLP, Generative AI, Computer Vision, and Cognitive Computing) form the intelligence architecture that powers our world today. Ultimately, AI is not just about automation and efficiency; it is about extending human capabilities, accelerating understanding, and building systems that can learn and reason.
