Cybersecurity Testing in 2025: Impact of AI

The scope of cybersecurity changes constantly: new threats, vulnerabilities, and attack vectors keep appearing. As more businesses and industries undergo digital transformation, cybersecurity has become a major concern. In 2025, Artificial Intelligence (AI) is playing a key role in transforming the field of cybersecurity testing. This article investigates how AI changes cybersecurity testing, discusses the benefits it brings, considers the problems it may create, and features real-world examples to illustrate its impact.

Introduction to Cybersecurity Testing

The purpose of cybersecurity testing is to find and repair security weaknesses in an organization’s networks, systems, and applications that attackers might exploit. It seeks to identify vulnerabilities before they can be taken advantage of and so prevent systems from being compromised.

Cybersecurity testing encompasses different types of assessments, such as:

- Penetration Testing: Simulated cyberattacks are used to identify exploitable vulnerabilities.

- Vulnerability Scanning: Automated tools scan networks and applications for known vulnerabilities.

- Security Audits: These audits assess an organization’s security policies, procedures, and practices.

- Risk Assessments: These identify potential security risks and evaluate their impact.

You can go through this blog to have a detailed understanding of Security Testing.

The traditional approach to cybersecurity testing relies heavily on manual human effort, and as security attacks grow rapidly in number, this approach becomes insufficient. AI-powered cybersecurity tools are now making a significant impact and enhancing the security of applications and infrastructure.

The Role of AI in Cybersecurity Testing

AI can reshape cybersecurity testing by taking over repetitive tasks from manual testers, analyzing large data sets, and identifying patterns that human testers overlook. Let’s look at the technologies impacting cybersecurity testing in 2025:

- Machine Learning (ML): Machine Learning algorithms learn from the data to detect patterns and anomalies in real-time.

- Natural Language Processing (NLP): Using NLP, you can analyze unstructured data such as emails, social media, and chat logs to identify phishing attempts or insider threats.

- Generative AI: Generative AI is used to simulate realistic attack scenarios so that system response can be tested.

- Deep Learning: Deep neural networks can recognize deeply hidden patterns in data to uncover advanced persistent threats (APTs).

- Reinforcement Learning: It allows AI systems to learn from their interactions and improve their performance over time. This technique is particularly useful when an AI-controlled agent has to cope with changing attack environments.

AI in cybersecurity testing enhances threat detection as well as threat analysis and containment. It can not only handle tasks such as vulnerability detection, penetration testing, and risk assessments but also help predict and prevent future attacks.

Read: Top 10 OWASP for LLMs: How to Test?

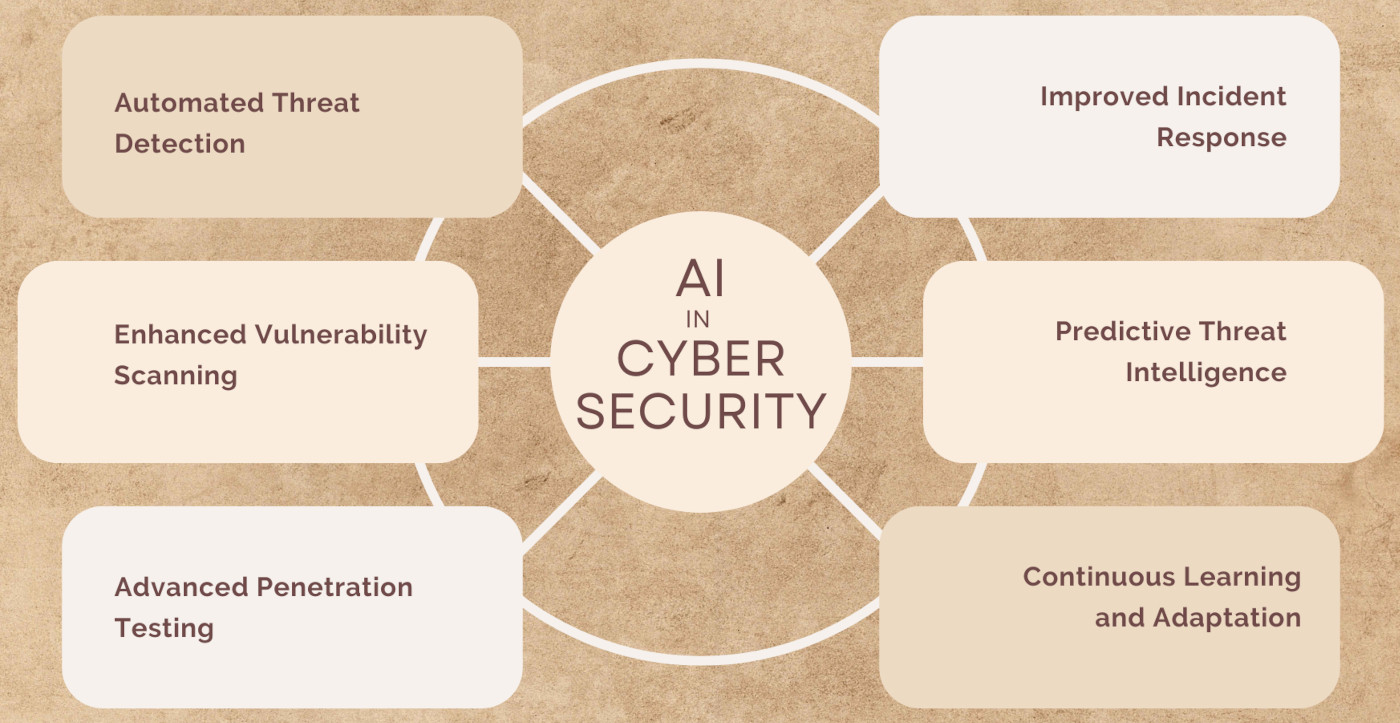

The Benefits of AI in Cybersecurity Testing

The impact of AI on cybersecurity testing is multifaceted, offering numerous benefits that enhance security posture, streamline processes, and reduce human error.

Automated Threat Detection

AI-powered systems can automate the detection of threats by continuously monitoring networks, applications, and user behavior. Machine learning algorithms can identify unusual patterns or deviations from normal behavior, which may indicate a potential breach or cyberattack. This proactive approach enables organizations to detect threats in real-time and respond quickly.

Example: In 2025, advanced AI-powered platforms like Darktrace’s Antigena are widely used to detect threats in real-time by learning the normal patterns of behavior in a network. When an anomaly is detected, the AI system triggers alerts, allowing cybersecurity teams to take preemptive action. This autonomous threat detection and response system helps businesses secure their environments from advanced persistent threats (APTs), zero-day exploits, and insider threats without constant human intervention.
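
The idea of learning a baseline and flagging deviations can be illustrated with a very small sketch. This is not how any commercial platform works internally; it is a simple z-score check, and the traffic figures, the `find_anomalies` helper, and the threshold of 3 standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev

def find_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value, z_score) for observations far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    anomalies = []
    for i, value in enumerate(observations):
        # z-score: how many standard deviations the value is from the baseline mean
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > threshold:
            anomalies.append((i, value, round(z, 2)))
    return anomalies

# Hypothetical hourly outbound traffic (MB) for one host during normal operation.
baseline_mb = [120, 135, 128, 110, 140, 125, 132, 118, 127, 130]
# New observations; the fourth value simulates a large, unusual transfer.
new_mb = [122, 138, 115, 900, 129]

alerts = find_anomalies(baseline_mb, new_mb)
for index, value, z in alerts:
    print(f"ALERT: observation {index} = {value} MB (z-score {z})")
```

Only the 900 MB observation is flagged here. A production system would model many signals at once and update its baseline over time, but the principle of comparing new behavior against learned normal behavior is the same.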

Enhanced Vulnerability Scanning

Traditional vulnerability scanning tools rely on predefined signatures and rules to detect known vulnerabilities. However, they cannot detect zero-day vulnerabilities or emerging attack vectors that have not been recorded yet. AI-powered vulnerability scanners, on the other hand, can collect data from different sources such as social media, hacker forums, and dark-web markets to spot new threats.

AI might also be able to predict vulnerabilities by analyzing the software’s source code and finding patterns that are likely to weaken security. This proactive approach makes it more likely that organizations will fix vulnerabilities before attackers find them.

Example: Imagine a major financial organization using AI to check its applications for weaknesses. Machine learning models in the AI engine recognize code patterns associated with potential problems, such as SQL injection or cross-site scripting (XSS) vulnerabilities, so that they can be fixed. By regularly inspecting changes in the codebase or in user behavior, AI can flag concerns even before the vulnerabilities appear in public threat databases.
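
To make "patterns in code" more concrete, here is a minimal sketch of the kind of signal such a scanner might look for. It is a hand-written rule, not a machine learning model: it uses Python's standard `ast` module to flag `.execute(...)` calls whose query string is built dynamically, a common source of SQL injection. The `find_risky_sql_calls` helper and the sample code are hypothetical.

```python
import ast

def find_risky_sql_calls(source: str):
    """Return line numbers of .execute(...) calls whose query is built dynamically."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if not (isinstance(func, ast.Attribute) and func.attr == "execute"):
            continue
        if not node.args:
            continue
        query = node.args[0]
        # f-string, e.g. f"... {value}"
        is_fstring = isinstance(query, ast.JoinedStr)
        # "..." + value  or  "... %s" % value
        is_concat_or_percent = isinstance(query, ast.BinOp) and isinstance(
            query.op, (ast.Add, ast.Mod)
        )
        # "... {}".format(value)
        is_format_call = (
            isinstance(query, ast.Call)
            and isinstance(query.func, ast.Attribute)
            and query.func.attr == "format"
        )
        if is_fstring or is_concat_or_percent or is_format_call:
            findings.append(node.lineno)
    return sorted(findings)

# Hypothetical application code to scan.
SAMPLE = '''
def get_user(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = " + user_id)

def get_user_safe(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = %s", (user_id,))

def get_order(cursor, order_id):
    cursor.execute(f"SELECT * FROM orders WHERE id = {order_id}")
'''

risky_lines = find_risky_sql_calls(SAMPLE)
print(risky_lines)
```

The parameterized query in `get_user_safe` is not flagged, while the concatenated and f-string queries are. An ML-based scanner would learn such patterns from labeled code instead of having them written by hand, and would need to handle far more variation than this rule does.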

Advanced Penetration Testing

Penetration testing, also known as ethical hacking, is an important part of cybersecurity testing. It begins with a reconnaissance phase in which systems are scanned for potential vulnerabilities, and AI can improve penetration testing by automating this phase. Furthermore, AI-driven tools are used to simulate attacks that incorporate the tactics, techniques, and procedures (TTPs) employed in real-world cybercrime.

Example: Cobalt.io is one of the many penetration testing platforms that use AI to improve the process. These platforms use AI to map possible attack paths and then automatically simulate a sequence of attacks. The system can monitor the response from the target infrastructure and learn from these interactions to refine future tests. An AI-based system can run a wider range of attack scenarios than a human could in the same period of time, offering more comprehensive coverage of possible weaknesses.

Improved Incident Response

When a security breach occurs, the key is swift response and containment. AI can shorten incident response times by analyzing security log files and tracing the activity back to the point of intrusion where the breach originated. Using machine learning to sift through the data, it can establish the key details of an attack, such as its timeline, how it originated, and which systems were affected. Using historical data and best practices as a basis for action, AI-powered incident response platforms can also recommend remediation steps.
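
The log-tracing step can be sketched in a few lines. The example below assumes a hypothetical log format of `<ISO timestamp> <host> <message>` and an indicator of compromise (here an IP address from a documentation range) that is already known; a real platform would have to discover the indicator and correlate many log sources.

```python
from datetime import datetime

def build_timeline(log_lines, indicator):
    """Return time-ordered (timestamp, host, message) events that mention the indicator."""
    events = []
    for line in log_lines:
        timestamp_text, host, message = line.split(" ", 2)
        if indicator in message:
            events.append((datetime.fromisoformat(timestamp_text), host, message))
    return sorted(events)

# Hypothetical log lines, deliberately out of order.
LOGS = [
    "2025-03-01T10:42:10 db01 connection from 203.0.113.7 accepted",
    "2025-03-01T10:15:00 web01 failed login from 203.0.113.7",
    "2025-03-01T10:20:30 web01 backup job finished",
    "2025-03-01T10:17:45 web01 successful login from 203.0.113.7",
]

timeline = build_timeline(LOGS, indicator="203.0.113.7")
entry_time, entry_host, _ = timeline[0]           # earliest matching event
affected_hosts = sorted({host for _, host, _ in timeline})

print(f"First seen: {entry_time.isoformat()} on {entry_host}")
print(f"Affected hosts: {affected_hosts}")
```

Sorting the matching events gives the attack timeline, the earliest event points to the likely entry point, and the set of hosts shows which systems were touched.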

Example: In 2025, AI-powered security orchestration, automation and response (SOAR) systems are increasingly being used to respond directly. For instance, when an AI system detects ransomware spreading on a company’s internal network, it can automatically disconnect the affected machines from the network, stop the suspicious activity running on them, and arrange for the encrypted files to be restored from backups. This immediate response limits the damage and stops the ransomware from spreading to other systems.
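
The response sequence in this example can be written down as a simple playbook. The sketch below only simulates the steps on in-memory objects; the `Host` class and `ransomware_playbook` function are hypothetical and do not correspond to any particular SOAR product's API.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    name: str
    connected: bool = True
    suspicious_processes: list = field(default_factory=list)
    encrypted_files: list = field(default_factory=list)

def ransomware_playbook(hosts, infected_names):
    """Apply containment steps to infected hosts and return an ordered action log."""
    actions = []
    for host in hosts:
        if host.name not in infected_names:
            continue
        # Step 1: isolate the machine from the network.
        host.connected = False
        actions.append(f"isolate {host.name}")
        # Step 2: stop the suspicious processes.
        for proc in host.suspicious_processes:
            actions.append(f"kill {proc} on {host.name}")
        host.suspicious_processes.clear()
        # Step 3: queue encrypted files for restoration from backup.
        for path in host.encrypted_files:
            actions.append(f"restore {path} from backup on {host.name}")
        host.encrypted_files.clear()
    return actions

# Hypothetical environment: one infected workstation, one clean one.
hosts = [
    Host("ws-01", suspicious_processes=["encryptor.exe"], encrypted_files=["report.docx"]),
    Host("ws-02"),
]
actions = ransomware_playbook(hosts, infected_names={"ws-01"})
for action in actions:
    print(action)
```

Isolation comes first so that the ransomware cannot spread while the remaining steps run. In a real deployment each step would call out to network, endpoint, and backup tooling, and would typically be logged for later review by the security team.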

Predictive Threat Intelligence

AI can be used to produce predictive threat intelligence by analyzing historical data and detecting patterns that point to future threats. Based on machine learning (ML) models, AI can even predict potential attack vectors and propose steps to take. For example, if an AI system recognizes a rise in ransomware attacks on certain sectors, it can alert organizations in those sectors to take extra precautions.

Organizations can also use predictive threat intelligence to stay ahead of cybercriminals by identifying new threats as they emerge, not after damage has been done. This can significantly lower the risk of a successful attack.

Example: A large retail company uses AI-driven predictive analytics to monitor customer transactions and network traffic. The AI system may pick up a pattern of fraudulent credit card transactions coming from particular regions. With early detection of these patterns, the company can add security controls, such as requiring multi-factor authentication for transactions originating from those regions, reducing instances of fraud.
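
A stripped-down version of this retail scenario might look like the sketch below. A simple fraud-rate threshold stands in for the predictive model, and the region names, transaction data, and both thresholds are assumptions made up for the example.

```python
from collections import Counter

def regions_requiring_mfa(transactions, min_transactions=5, max_fraud_rate=0.2):
    """Return regions whose observed fraud rate exceeds the allowed maximum.

    transactions: iterable of (region, is_fraud) pairs.
    Regions with too few transactions are skipped to avoid acting on noise.
    """
    totals = Counter()
    frauds = Counter()
    for region, is_fraud in transactions:
        totals[region] += 1
        if is_fraud:
            frauds[region] += 1
    return sorted(
        region
        for region, count in totals.items()
        if count >= min_transactions and frauds[region] / count > max_fraud_rate
    )

# Hypothetical labeled transactions: (region, was the transaction fraudulent?)
transactions = (
    [("north", False)] * 9 + [("north", True)]      # 10% fraud
    + [("east", True)] * 3 + [("east", False)] * 3  # 50% fraud
    + [("west", True)] * 2                          # too few transactions to judge
)

flagged = regions_requiring_mfa(transactions)
print(flagged)
```

Only the "east" region is flagged for multi-factor authentication. The minimum-transaction guard matters: without it, a region with two transactions would trigger extra controls on very thin evidence.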

Continuous Learning and Adaptation

One of the important advantages of using AI in cybersecurity testing is its capacity to learn and adapt. Traditional security solutions, which rely on static rules and signatures, become outdated very quickly. AI-powered systems, on the other hand, can adapt their models to detect emerging threats by learning from new data.

For example, AI systems can learn from recent attack strategies and techniques and update their models to identify such attacks in the future. Being able to learn from real-world attacks helps AI-powered cybersecurity solutions keep pace with even the most adaptable malicious actors as their tactics evolve.

Challenges for AI in Cybersecurity Testing

While AI offers significant benefits in cybersecurity testing, it also introduces new challenges. These challenges must be addressed to fully realize the potential of AI in securing digital systems.

AI Bias and False Positives

An AI algorithm is only as good as the data on which it was trained. An AI that is trained on biased or incomplete data may produce incorrect outputs. For instance, an AI-powered intrusion detection system could produce a high false positive rate, flagging normal operations as threats. This can inundate security teams with hundreds of alerts, leading to alert fatigue that causes real threats to go unnoticed.

To mitigate this challenge, AI systems need to be trained on a broad spectrum of datasets and consistently updated with newly acquired data.
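
A short calculation shows why even a small false positive rate leads to alert fatigue. The event counts below are invented for illustration, and `alert_quality` is a hypothetical helper that computes the standard false positive rate, precision, and recall.

```python
def alert_quality(true_positives, false_positives, true_negatives, false_negatives):
    """Compute false positive rate, precision, and recall from detection counts."""
    false_positive_rate = false_positives / (false_positives + true_negatives)
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return false_positive_rate, precision, recall

# Hypothetical day of traffic: 10,000 benign events and 50 malicious ones.
fpr, precision, recall = alert_quality(
    true_positives=45,     # malicious events correctly flagged
    false_positives=200,   # benign events wrongly flagged
    true_negatives=9800,   # benign events correctly ignored
    false_negatives=5,     # malicious events missed
)

print(f"False positive rate: {fpr:.1%}")
print(f"Precision: {precision:.1%}")
print(f"Recall: {recall:.1%}")
```

With these numbers the false positive rate is only 2%, yet fewer than one in five alerts is a real threat, because benign events vastly outnumber malicious ones. This is the imbalance that makes tuning and retraining so important.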

Adversarial Attacks on AI Systems

Cybercriminals are becoming more sophisticated and are increasingly focused on exploiting weaknesses in AI technologies themselves. Manipulating input data to mislead an AI algorithm into a decision that favors the attacker is called an adversarial attack. For instance, an attacker could add subtle noise to an image so that an AI-based facial recognition system misclassifies a person.

As a countermeasure, AI systems should be designed with robust security measures such as adversarial training, in which the AI is exposed to manipulated data to improve its resilience.
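
The mechanics of an adversarial input are easiest to see on a tiny linear detector. The sketch below is an assumption-laden toy: the weights, bias, feature values, and `epsilon` are made up, and the perturbation is a sign-based step in the style of the fast gradient sign method, which for a linear model simply moves each feature against the sign of its weight.

```python
def score(weights, bias, features):
    """Linear detector: a positive score means the sample is flagged as malicious."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def sign(value):
    return (value > 0) - (value < 0)

def craft_adversarial(weights, features, epsilon):
    """Shift every feature by epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]

# Hypothetical trained detector and a malicious sample it currently catches.
weights = [2.0, -1.0, 0.5]
bias = -1.0
malicious = [1.0, 0.5, 1.0]

adversarial = craft_adversarial(weights, malicious, epsilon=0.4)

original_score = score(weights, bias, malicious)
adversarial_score = score(weights, bias, adversarial)
print(f"Original flagged: {original_score > 0}")
print(f"Adversarial flagged: {adversarial_score > 0}")

# Adversarial training would add the perturbed sample, still labeled malicious,
# to the training set before the detector is retrained.
augmented_training_set = [(malicious, 1), (adversarial, 1)]
```

A small change to each feature is enough to push the sample under the detection threshold. Feeding such perturbed samples back into training with their correct labels is the core of adversarial training, although real models and attacks are considerably more complex than this linear example.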

Data Privacy Concerns

AI is data-hungry, and in security testing the datasets involved are often very sensitive, such as user credentials, financial information, or other personally identifiable information (PII). Because AI depends on this data, privacy protection depends on how the data is handled, especially when it is stored in the cloud or shared with third-party vendors.

As a mitigation, organizations need to enforce robust data protection policies on their AI systems, ensuring that they meet privacy requirements such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Read: AI Compliance for Software.

Complexity and Skill Gaps

Although effective, AI-driven cybersecurity solutions are complicated to implement and need specialized skills to manage. Many companies do not have the skills to implement and manage AI systems properly. In addition, it can be hard to deploy AI for security use cases when certain types of legacy systems are still in place.

Organizations need to overcome these hurdles by investing in cybersecurity training for the relevant employees so they can effectively execute AI-driven strategies.

Real-World Examples of AI in Cybersecurity Testing

The impact of AI on cybersecurity testing can be seen in several real-world examples, where AI-powered solutions have enhanced security posture and mitigated cyber threats.

Example 1: Darktrace ActiveAI Security Platform

Darktrace is a cybersecurity company that offers solutions using artificial intelligence to identify and respond to cyber threats in real time. Darktrace’s AI platform learns what ‘normal’ looks like in a network. If the AI sees that something suspicious is going on, it raises an alert and may execute a response to contain the threat.

For instance, Darktrace’s AI observed a data exfiltration attack that was being carried out slowly and quietly over time as hackers were trying to steal sensitive information. The AI detected the unusual data transfers and disabled the hacked system before any further damage could be done.

Example 2: Microsoft’s Azure Sentinel

Azure Sentinel delivers intelligent security analytics and threat intelligence across the enterprise, providing a single solution for alert detection, threat visibility, proactive hunting (searching for active threats), and more. The service analyzes security data at scale and thereby helps organizations detect, investigate, and respond to threats in real time. Azure Sentinel uses AI to detect correlations across the full breadth of an organization’s infrastructure and help detect security incidents.

For instance, Azure Sentinel detected a phishing attack by applying its algorithms to email metadata and user behavior. It alerted the security team before any users clicked a link in the phishing campaign.

Conclusion

In 2025, AI is revolutionizing cybersecurity testing by automating vulnerability assessments, enhancing threat detection, and improving efficiency. However, AI introduces challenges such as adversarial attacks, ethical concerns, and the potential misuse by malicious actors.

Organizations must balance the benefits of AI with these risks while ensuring human oversight and adapting to evolving regulations. Those who effectively use AI will be better positioned to defend against the growing array of cyber threats.