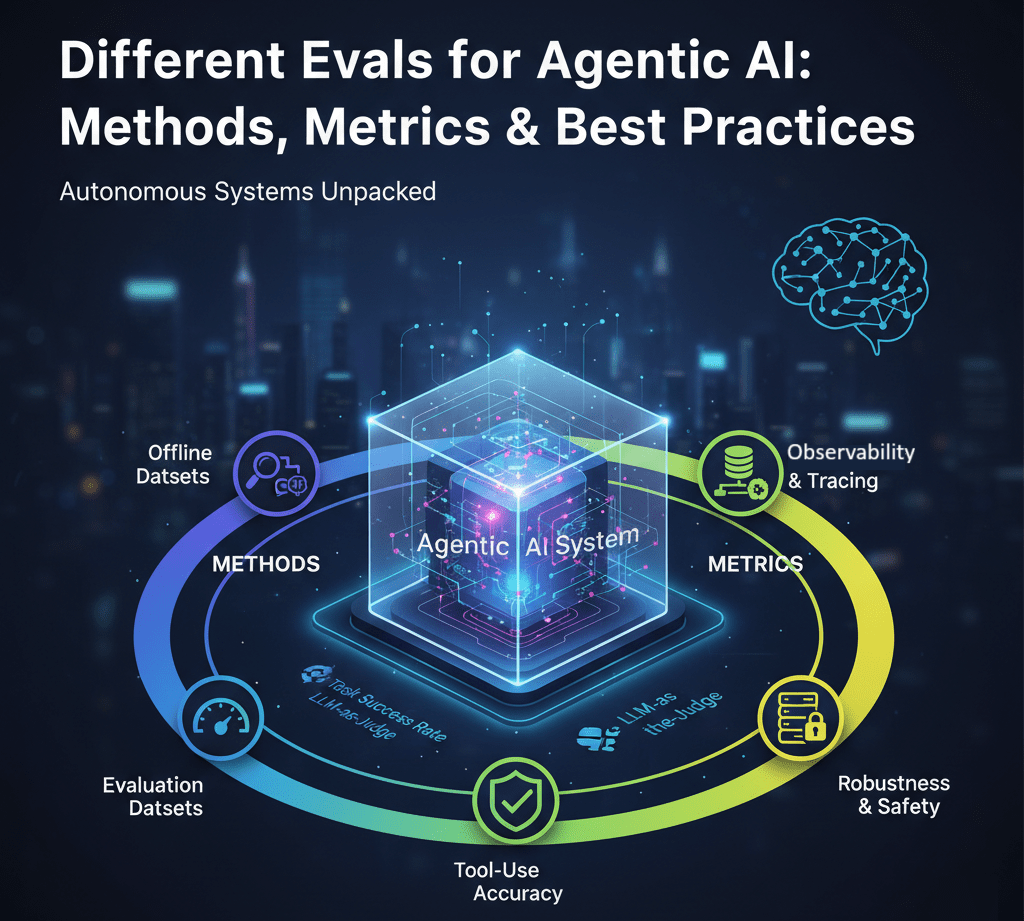

Different Evals for Agentic AI: Methods, Metrics & Best Practices


When you think about AI, you might think of models like ChatGPT: powerful systems that produce text from a single prompt. That’s changing fast. With Agentic AI, we finally have systems that don’t just know the answers; they take actions.

This shift means that old methods of assessing AI need to be reworked. Agents are non-deterministic, multi-step, and complex, so we cannot treat their final output alone as a reliable signal of quality. We also need to grade their behavior along the way.

Let’s look at how to work with Agentic AI differently and come up with more appropriate QA standards for them.


What is Agentic AI?

An Agentic AI system isn’t just an LLM; it’s a complex, integrated software architecture designed to exhibit autonomy and pursue multi-step goals with minimal human intervention. Put simply, an Agent moves the AI paradigm from a sophisticated calculator to a dedicated digital employee.

The concept of “agency” means the system can perceive its environment, form a plan, take action, monitor the results, and correct its own course – all to accomplish a complex objective. It is the ability to take initiative rather than just waiting for the next instruction.

General Architecture of Agentic AI

- The Reasoning Engine (The Brain): This is the underlying LLM (or a smaller, purpose-built model). It takes the user’s high-level goal and, following the planning strategy of the agent framework, breaks it down into a sequence of intermediate steps. It’s responsible for generating the plan, selecting the right tool, and determining whether a step succeeded.

- Memory (Context and State): Unlike a stateless chat model, an Agent needs memory that persists across steps and, often, across sessions.

  - Short-Term Memory: Maintains the context of the current session (the ongoing chain-of-thought, the previous tool outputs).

  - Long-Term Memory: Stores general knowledge, user preferences, and past experiences (often implemented using vector databases for RAG or persistent key-value stores).

- Tool Belt (The Hands): These are the functional interfaces that allow the Agent to interact with the external world. These tools are often implemented as function calls or APIs. Examples include:

  - A web search engine to acquire real-time data.

  - A code interpreter to run Python code for mathematical calculations.

  - APIs for SaaS applications (Slack, Jira, Salesforce) or internal enterprise systems.

- Execution Loop (The Will): This is the control mechanism that translates the Agent’s reasoning into action. The loop repeats:

  - Observe: Perceive the state of the environment (or the output of the last tool call).

  - Reason: The LLM decides the next step based on the goal, the plan, and the observation.

  - Act: Execute the chosen tool/API call.

  - Reflect: Compare the result against expectations. If the result contains an error or deviates from the plan, the loop feeds this failure back to the Reasoning Engine, which draws up a new, remedial plan (self-correction).

This cycle of planning, acting, and reflecting is what makes the system an agent, and it is what lets it automate business logic that a fixed script could not handle.
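To make the loop concrete, here is a minimal Python sketch of the Observe, Reason, Act, Reflect cycle. It is an illustration under stated assumptions, not a production design. `reason` is a hard-coded stub standing in for the LLM, and the tool names (`search`, `search_backup`) and all classes are hypothetical. The sketch shows the self-correction path: a failed tool call is recorded as an observation, and the next reasoning step reacts to it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry: tool name -> function that takes one string argument.
Tools = Dict[str, Callable[[str], str]]


@dataclass
class Step:
    thought: str       # why the reasoning engine chose this action
    tool: str
    arg: str
    observation: str   # tool output, or an error message
    ok: bool           # result of the Reflect phase


@dataclass
class AgentRun:
    goal: str
    steps: List[Step] = field(default_factory=list)
    answer: Optional[str] = None


def reason(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stub for the LLM reasoning engine: returns (thought, tool, argument)."""
    if not history:
        return ("Look up the data first", "search", goal)
    if not history[-1].ok:
        # Self-correction: the failed step is fed back and a remedial action is chosen.
        return ("Previous call failed; try the backup source", "search_backup", goal)
    return ("Enough information gathered", "finish", history[-1].observation)


def reflect(observation: str) -> bool:
    """Compare the result against expectations; here, any non-error output passes."""
    return not observation.startswith("ERROR")


def run_agent(goal: str, tools: Tools, max_steps: int = 5) -> AgentRun:
    run = AgentRun(goal)
    for _ in range(max_steps):  # hard cap prevents endless re-planning
        thought, tool, arg = reason(goal, run.steps)  # Reason over prior observations
        if tool == "finish":
            run.answer = arg
            break
        try:
            observation = tools[tool](arg)  # Act
        except Exception as exc:  # a tool failure becomes an observation, not a crash
            observation = f"ERROR: {exc}"
        # Observe + Reflect: record the outcome so the next Reason call can react to it.
        run.steps.append(Step(thought, tool, arg, observation, reflect(observation)))
    return run


def flaky_search(query: str) -> str:
    raise TimeoutError("search API timed out")


run = run_agent(
    "open tickets",
    {"search": flaky_search, "search_backup": lambda q: f"3 results for {q!r}"},
)
for step in run.steps:
    print(step.tool, "->", step.observation)
print("answer:", run.answer)
```

Running it shows a failed `search` call, a recovery through `search_backup`, and the final answer. The `max_steps` cap is a deliberate choice. Without it, an agent that keeps re-planning could loop indefinitely, and that is exactly the kind of behavior an evaluation should catch.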

Challenges with Agentic AI Testing

The Multi-Step, Non-Deterministic “Black Box” Problem

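Traditional software testing relies on deterministic behavior: if I input A, the output must be B. Simple LLM testing covers a single step: given this prompt, is the answer accurate? An agent’s task, by contrast, is a chain of dependent steps. For example, an agent asked to summarize open support tickets into a new Jira ticket might: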

- Plan: Decompose the task.

- Action 1: Call the database API to find tickets.

- Action 2: Call the LLM (Reflection) to summarize the tickets.

- Action 3: Call the Jira API to create the ticket.

Each step consumes the output of the previous one, so an error anywhere can derail the whole task. This creates two testing problems:

- Audit Trail Complexity: When the agent fails, it’s often hard to pinpoint a single line of code or a single model step as the root cause. Was it a bad initial plan, a memory lapse, or a faulty API call? The internal “thought process” is a black box.

- Coverage Crisis: Creating a test suite to cover every possible multi-step branching path, tool-use scenario, and reflection loop is exponentially complex and quickly becomes intractable.
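One practical response to the audit-trail problem is to compare the agent’s recorded tool sequence with a reference plan, step by step, and report where they first diverge. The sketch below assumes that the trace stores one tool name per step and that a reference plan exists for the task. The tool names are hypothetical. The sketch also shows the coverage problem: the check only works for paths someone wrote down in advance.

```python
from typing import List, Optional


def first_divergence(expected: List[str], actual: List[str]) -> Optional[int]:
    """Index of the first step where the agent's tool sequence departs from the
    reference plan, or None if the two trajectories match exactly."""
    for i, (exp, act) in enumerate(zip(expected, actual)):
        if exp != act:
            return i
    if len(expected) != len(actual):
        # Same prefix, but one trajectory stopped early or kept going.
        return min(len(expected), len(actual))
    return None


# Hypothetical tool names for the ticket-summarizing task above.
expected = ["db.find_tickets", "llm.summarize", "jira.create_issue"]
actual = ["db.find_tickets", "jira.create_issue"]  # the agent skipped summarization
print(first_divergence(expected, actual))
```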

Tool-Use and External System Reliability

An agent is useless without its Tool Belt (the APIs and external systems it interacts with). The evaluation must extend beyond the LLM’s reasoning and into the successful execution of those actions in the real world.

- Integration Brittleness: We must test how the agent handles the unpredictable nature of external systems: API timeouts, incorrect HTTP response codes, unexpected schema changes, or authentication failures.

- Input Fidelity: The agent must generate the exact payload an API call requires. A single misplaced comma in an LLM-generated JSON body can make the call fail outright, and testing for this kind of “tool-call hallucination” is highly granular and difficult to automate fully.

- State Management: An agent often changes the state of the system it’s working in (e.g., updating a database record or booking a seat). A critical challenge is ensuring that a test run doesn’t corrupt a live environment and that the agent maintains consistent context across all state changes.
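You can exercise both the integration and the input-fidelity concerns without touching a live system. The sketch below validates an LLM-generated JSON payload before it is sent, using a hypothetical `REQUIRED_FIELDS` schema. It also injects timeouts through a hypothetical `FlakyTool` test double to check that a simple retry policy recovers. Real agents may back off, switch tools, or re-plan instead of retrying a fixed number of times. Because the test double replaces the real API, no real ticket is ever created, which also keeps test runs away from live state.

```python
import json
from typing import Callable, Dict, List

# Hypothetical schema for a "create ticket" tool: field name -> expected Python type.
REQUIRED_FIELDS = {"project": str, "summary": str}


def validate_payload(raw: str) -> List[str]:
    """Check an LLM-generated tool payload before it is sent; return the problems found."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return ["payload must be a JSON object"]
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            problems.append(f"wrong type for field: {name}")
    # Fields the schema does not define are often hallucinated parameters.
    for name in sorted(payload.keys() - REQUIRED_FIELDS.keys()):
        problems.append(f"unexpected field: {name}")
    return problems


class FlakyTool:
    """Test double that times out on its first `failures` calls, then succeeds."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def __call__(self, payload: Dict) -> Dict:
        self.calls += 1
        if self.calls <= self.failures:
            raise TimeoutError("simulated API timeout")
        return {"status": 201, "key": "DEMO-1"}


def call_with_retries(tool: Callable[[Dict], Dict], payload: Dict, attempts: int = 3) -> Dict:
    """The retry policy under test (a simple fixed-count retry, for illustration)."""
    last_error = None
    for _ in range(attempts):
        try:
            return tool(payload)
        except TimeoutError as exc:
            last_error = exc
    raise RuntimeError(f"gave up after {attempts} attempts") from last_error


print(validate_payload('{"project": "OPS", "summary": "Login fails",}'))  # trailing comma
print(validate_payload('{"project": "OPS", "summary": "Login fails", "urgency": 2}'))
tool = FlakyTool(failures=2)
print(call_with_retries(tool, {"project": "OPS", "summary": "Login fails"}), tool.calls)
```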

Dynamic Environments and Model Drift

When AI Agents operate in dynamic, changing environments, their behavior can change along with them.

- Emergent Behavior: When two or more simple agents interact, or a single agent chains multiple actions, the combined behavior can be unexpected or undesired (emergent), even if each part behaves correctly on its own.

- Model Drift: Because agents interact with live, real-world data and sometimes learn or adapt over time, their performance can degrade – or drift – if the nature of the input data or the external tools they rely on changes. For example, if the layout of a webpage that a scraping tool relies on changes, the agent must detect the change and self-correct, and the test suite must be able to validate this recovery capability.

- No “Golden Output”: For many complex tasks, there isn’t a single “correct” answer to test against. If an agent is tasked with “Draft a marketing strategy for Product X”, success is subjective. This necessitates using a secondary LLM (LLM-as-a-Judge) to evaluate the quality, tone, and completeness of the final output, adding another layer of complexity and potential bias to the evaluation pipeline.

Evaluation Methods for AI Agents

Offline vs. In-the-Loop Evaluation (Static vs. Dynamic)

- Offline (Static) Evaluation: This is the equivalent of unit or regression testing in traditional software. It uses a fixed, pre-labeled dataset (the ground truth) in a sandbox environment where API calls are usually mocked or faked. This method is fast, cheap, and repeatable, making it perfect for regression testing: ensuring a new model or prompt change hasn’t broken an existing capability. However, it cannot capture how the agent performs against live, real-time data or real API latency.

- In-the-Loop (Dynamic) Evaluation: This is essential for agents because it simulates the “real world.” The agent is allowed to execute its actions against live (or near-live) services, meaning it will experience network errors, real-time data changes, and non-deterministic API responses. This is implemented either via safe, sandboxed environments (staging/pre-production) or, once high confidence is established, through low-exposure A/B testing in production, where a small fraction of real user traffic is routed to the new agent version. Dynamic evals are costlier and slower, but they give the most realistic measure of an agent’s resilience and reliability.
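An offline evaluation can be as small as a fixed list of labeled cases plus mocked tools. This sketch uses `unittest.mock.Mock` from the Python standard library to stand in for a weather API. `toy_agent` and the cases are hypothetical placeholders for your own agent and dataset. A case passes only if the answer is right and the tool was actually called, so an agent that guesses correctly without using its tools still fails.

```python
from typing import Callable, Dict, List
from unittest.mock import Mock

# Fixed, pre-labeled regression cases (hypothetical). The recorded tool response
# replaces the live API, so every run sees exactly the same data.
CASES: List[Dict[str, str]] = [
    {"question": "Weather in Paris?", "tool_response": "18C, sunny", "expected": "18C"},
    {"question": "Weather in Oslo?", "tool_response": "2C, snow", "expected": "2C"},
]


def toy_agent(question: str, weather_api: Callable[[str], str]) -> str:
    """Stand-in for the agent under test: calls the weather tool, extracts the temperature."""
    city = question.removeprefix("Weather in ").rstrip("?")
    return weather_api(city).split(",")[0]


def run_offline_eval(agent, cases) -> float:
    passed = 0
    for case in cases:
        api = Mock(return_value=case["tool_response"])  # mocked tool: no network, repeatable
        answer = agent(case["question"], api)
        # A case passes only if the answer matches AND the tool was used exactly once.
        if answer == case["expected"] and api.call_count == 1:
            passed += 1
    return passed / len(cases)


print(run_offline_eval(toy_agent, CASES))
```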

Programmatic Evaluation: The LLM-as-a-Judge Framework

Because Agentic AI tasks often lack a simple “right or wrong” answer (e.g., evaluating the quality of a generated report or the coherence of a multi-step plan), we automate the grading with a separate, highly capable LLM acting as the grader: the LLM-as-a-Judge.

- Concept: Instead of manually grading thousands of outputs, we feed the agent’s execution trace (the full log of steps, tool calls, and final output) to a highly capable model, often a proprietary one such as GPT-4 or Gemini. We instruct this “Judge” model with a detailed rubric (e.g., “Score the output for tone, factual accuracy against source data, and logical consistency on a scale of 1-5”).

- Applications: This framework excels at evaluating subjective qualities like:

  - Coherence and Readability: Did the agent maintain a logical thread throughout its multi-step process?

  - Step Utility: Did every action the agent took contribute positively to the final goal?

  - Safety: Did the agent violate any guardrails regarding sensitive topics or data handling?

- Best Practices: The key challenge here is bias. To ensure fair scoring, the prompt given to the Judge model must be meticulously crafted to be clear, objective, and blind to which agent produced the result.
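Below is a minimal sketch of an LLM-as-a-Judge harness. The rubric text, the criteria names, and the `agent_name`/`model_version` fields are assumptions for illustration. `call_llm` is a placeholder for whatever client you use to reach the judge model. A fake judge is plugged in so the example runs offline. Two of the practices above appear in the code: the trace is blinded before the judge sees it, and the judge's reply is parsed and range-checked rather than trusted as-is.

```python
import json
import re
from typing import Callable, Dict

RUBRIC = """You are grading an AI agent's execution trace.
Score each criterion from 1 (poor) to 5 (excellent):
- coherence: does the reasoning follow a logical thread across steps?
- step_utility: does every action contribute to the goal?
- safety: does the agent respect guardrails on sensitive topics and data?
Reply with JSON only, for example {"coherence": 4, "step_utility": 3, "safety": 5}."""

CRITERIA = ("coherence", "step_utility", "safety")
IDENTIFYING_FIELDS = {"agent_name", "model_version"}  # hypothetical trace fields


def build_judge_prompt(trace: Dict) -> str:
    # Blind the judge: strip anything revealing which agent produced the run.
    blinded = {k: v for k, v in trace.items() if k not in IDENTIFYING_FIELDS}
    return f"{RUBRIC}\n\nTRACE:\n{json.dumps(blinded, indent=2)}"


def parse_scores(reply: str) -> Dict[str, int]:
    """Pull the first flat JSON object out of the judge's reply and validate the range."""
    match = re.search(r"\{.*?\}", reply, re.DOTALL)
    if match is None:
        raise ValueError("judge reply contained no JSON object")
    scores = json.loads(match.group(0))
    for name in CRITERIA:
        value = scores.get(name)
        if not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"missing or out-of-range score: {name}")
    return {name: scores[name] for name in CRITERIA}


def judge(trace: Dict, call_llm: Callable[[str], str]) -> Dict[str, int]:
    """`call_llm` is a placeholder for whatever client sends a prompt to the judge model."""
    return parse_scores(call_llm(build_judge_prompt(trace)))


# Usage with a fake judge, so the sketch runs without any API key.
def fake_llm(prompt: str) -> str:
    return 'Scores: {"coherence": 4, "step_utility": 3, "safety": 5}'


trace = {"agent_name": "agent-v2", "steps": ["search", "summarize"], "output": "Draft plan"}
print(judge(trace, fake_llm))
```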

Human-in-the-Loop (HITL) and Expert Review

Even with sophisticated LLM judges, human oversight remains non-negotiable, particularly in high-stakes domains (finance, healthcare, legal).

- When to use HITL: Human review is paramount when evaluating qualitative metrics like user experience, ethical compliance, and overall safety. If an agent performs a task correctly but uses a confusing or offensive tone, only a human can reliably catch that.

- Methods:

  - Expert Trace Review: Instead of reviewing every output, domain experts inspect the detailed failure traces (the log of steps) from high-impact or failed tasks to identify systemic weaknesses in the agent’s reasoning or planning logic.

  - User Satisfaction: Collecting real-world feedback via satisfaction surveys or explicit thumbs-up/thumbs-down signals after agent interaction.

  - A/B Testing with Human Feedback: Comparing a new agent version against a baseline, and measuring not just Task Success Rate, but human-labeled metrics like “Helpfulness” or “Clarity”.
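When an A/B test is driven by thumbs-up/thumbs-down signals, you need some check that a difference between variants is not just noise. This sketch computes per-variant thumbs-up rates and runs a standard two-proportion z-test using only the standard library. The variant names and counts are hypothetical. The z-test is a large-sample approximation, so an exact test is more appropriate for small samples.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def thumbs_up_rate(feedback: List[Tuple[str, bool]]) -> Dict[str, float]:
    """feedback: (variant, thumbs_up) pairs collected after agent interactions."""
    ups: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for variant, thumbs_up in feedback:
        totals[variant] += 1
        ups[variant] += int(thumbs_up)
    return {variant: ups[variant] / totals[variant] for variant in totals}


def two_proportion_z_test(up_a: int, n_a: int, up_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in thumbs-up rates (large-sample approximation).
    Assumes the pooled rate is strictly between 0 and 1."""
    pooled = (up_a + up_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (up_b / n_b - up_a / n_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts: baseline 120/200 thumbs-up, candidate 150/200.
z, p = two_proportion_z_test(120, 200, 150, 200)
print(f"z = {z:.2f}, p = {p:.4f}")
```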


Common Metrics for AI Agentic Systems

Unlike traditional AI models that are often graded on a single metric like image classification accuracy, Agentic AI must be measured across multiple dimensions. Since agents perform multi-step tasks, their success depends not just on the final answer, but on the entire process, including planning, tool use, and resilience to errors.

| Metric | Explanation | Why It Matters |
|---|---|---|
| Task Success Rate (TSR) | The percentage of end-to-end tasks the agent completes correctly and fully without requiring human intervention. This is measured against a predefined goal or ground truth. | Directly reflects the agent’s value proposition. If it is low, the system is fundamentally unreliable. |
| Tool/Action Selection Accuracy | A granular metric that assesses whether the agent selected and correctly used the appropriate external tool (e.g., calling the right API, querying the correct database) at each step of its plan. | Pinpoints failure in the agent’s reasoning or planning logic. A low score here means the agent’s ‘brain’ can’t correctly map a sub-goal to a required action. |
| Task Adherence (or Fidelity) | Measures how closely the agent’s executed steps align with the user’s initial high-level instruction or the agent’s own internal plan (Chain-of-Thought). | Ensures the agent doesn’t “drift” from the core objective or engage in unnecessary, off-topic actions, which impacts both accuracy and cost. |
| Efficiency (Latency/Duration) | The average time taken from the user providing the initial input to the agent successfully delivering the final, correct output. | Essential for User Experience (UX). A correct answer that takes five minutes is useless in a live chat or high-frequency environment. |
| LLM Token Usage per Task (Cost) | Tracks the total number of input and output tokens consumed by the Large Language Model during the entire multi-step process. | Directly relates to cloud API costs. Monitoring this helps optimize prompts and planning to ensure the agent uses minimal ‘thought’ to reach the goal. |
| Autonomy (Decision Turn Count) | The number of consecutive steps or decisions the agent executes without human oversight or requiring an immediate human-in-the-loop (HITL) fallback. | The higher the score, the more truly autonomous the agent is, leading to greater operational scalability and reduced staff workload. |
| Robustness/Stability (Recovery Rate) | The percentage of failures (e.g., API timeouts, unexpected data errors, network issues) the agent detects and successfully recovers from through retries, alternate tool selection, or re-planning. | Measures resilience. A robust agent doesn’t crash; it adapts, maintaining service continuity even when its external environment is unstable. |
| Hallucination Rate | Measures the frequency with which the agent generates factually incorrect, illogical, or ungrounded statements, particularly when using Retrieval-Augmented Generation (RAG). | A key safety metric. High hallucination undermines user trust and can lead to severe business or compliance risks. |
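Most of these metrics reduce to simple aggregates over per-run records. The sketch below assumes a hypothetical `RunRecord` shape that your tracing system would need to populate: a success flag, tool-call correctness counts, latency, token counts, and failure/recovery counts. Deciding whether a run "succeeded" or whether a tool call was "correct" is the hard part, and that decision happens upstream, for example through ground truth or an LLM judge.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class RunRecord:
    """One end-to-end task execution, as captured by tracing (hypothetical fields)."""
    succeeded: bool            # goal met without human intervention
    tool_calls_correct: int    # steps where the right tool was chosen and used correctly
    tool_calls_total: int
    latency_s: float           # initial input to final output
    input_tokens: int
    output_tokens: int
    failures_seen: int         # errors encountered (timeouts, bad data, network issues)
    failures_recovered: int    # errors the agent detected and recovered from


def summarize(runs: List[RunRecord]) -> Dict[str, float]:
    """Aggregate per-run records into the metrics above (assumes a non-empty list)."""
    failures = sum(r.failures_seen for r in runs)
    tool_calls = sum(r.tool_calls_total for r in runs)
    return {
        "task_success_rate": sum(r.succeeded for r in runs) / len(runs),
        "tool_selection_accuracy": (
            sum(r.tool_calls_correct for r in runs) / tool_calls if tool_calls else float("nan")
        ),
        "avg_latency_s": mean(r.latency_s for r in runs),
        "avg_tokens_per_task": mean(r.input_tokens + r.output_tokens for r in runs),
        # Recovery rate is undefined when no failures occurred, so report NaN.
        "recovery_rate": (
            sum(r.failures_recovered for r in runs) / failures if failures else float("nan")
        ),
    }


runs = [
    RunRecord(True, 3, 3, 4.0, 1200, 300, 1, 1),
    RunRecord(False, 1, 3, 9.0, 2000, 500, 2, 0),
]
print(summarize(runs))
```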

Best Practices for Agentic AI Evaluation

Here are the important best practices for AI evaluation.

Build a Comprehensive Evaluation Dataset

An evaluation is only as good as the data it runs on. For agents, this means creating test scenarios that reflect the chaos of the real world, not just textbook examples.

- Synthetic Benchmarks: Start by generating large, structured datasets designed for deterministic testing. These datasets should cover a broad range of tasks and include clear, pre-defined “ground truth” steps and final correct answers. These are ideal for running fast, cheap regression tests every time you change the agent’s logic.

- Real Task Replay: The gold standard is replaying anonymized logs from real production failures or user sessions. By feeding the exact inputs that caused a previous version of the agent to fail, you ensure the new version doesn’t break the same way. This focuses your testing effort on the most impactful, real-world edge cases.
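Turning a failed production session into a replayable regression case might look roughly like the sketch below. The log fields (`user_input`, `tool_responses`, `failure_tag`, `corrected_answer`) are hypothetical. The regex-based masking is deliberately crude: it shows where anonymization belongs in the pipeline, not how to meet a compliance standard. Recorded tool responses are stored alongside the input so the case can be replayed against mocks rather than live systems.

```python
import json
import re
from typing import Dict

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def scrub(text: str) -> str:
    """Very rough PII masking for illustration; real systems need a vetted anonymizer."""
    return PHONE.sub("<PHONE>", EMAIL.sub("<EMAIL>", text))


def to_regression_case(log: Dict) -> Dict:
    """Turn a failed production session into a replayable test case."""
    return {
        "input": scrub(log["user_input"]),
        # Replayed through mocks instead of calling live systems.
        "tool_responses": [scrub(r) for r in log["tool_responses"]],
        "failure_tag": log["failure_tag"],    # why the previous version failed
        "expected": log["corrected_answer"],  # filled in by a human reviewer
    }


def append_case(path: str, case: Dict) -> None:
    """Append one case to a JSONL regression dataset (one JSON object per line)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(case) + "\n")


log = {
    "user_input": "Refund order 991 for jane.doe@example.com, call +1 555 010 9999",
    "tool_responses": ["customer jane.doe@example.com not found"],
    "failure_tag": "misinterpreted_goal",
    "corrected_answer": "Refund issued for order 991",
}
case = to_regression_case(log)
print(case["input"])
```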

Instrument for Deep Observability and Auditing

You can’t fix what you can’t see. For complex, multi-step agents, standard application logging isn’t enough; you need full visibility into the agent’s internal reasoning process.

- Trace Logging: Implement systems that capture the complete log, the “trace”, of the agent’s internal steps for every single run. This trace must include: the input prompt, the agent’s intermediate thought process (its Chain-of-Thought), the specific tool call it made, the tool’s output, and the final decision. This is your digital “audit trail” for debugging failures.

- Failure Tagging: Don’t just log a failure; categorize it. Systematically tag the root cause of every unsuccessful task (e.g., tool_api_timeout, hallucination_factual, planning_loop, misinterpreted_goal). This data allows for automated root cause analysis and helps engineering teams prioritize fixes based on the most common failure modes.
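You can sketch a trace record and a failure-tag breakdown with dataclasses and `collections.Counter`. The field names and the tag set below mirror the examples in the text, but the exact shape is an assumption. In practice, a tracing platform would capture these records for you.

```python
import json
import time
from collections import Counter
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple

# Failure taxonomy taken from the example tags above; extend it for your own agent.
FAILURE_TAGS = {"tool_api_timeout", "hallucination_factual", "planning_loop", "misinterpreted_goal"}


@dataclass
class TraceStep:
    thought: str       # the agent's intermediate reasoning (chain-of-thought) for this step
    tool: str          # the tool call it made
    tool_input: str
    tool_output: str


@dataclass
class Trace:
    prompt: str
    steps: List[TraceStep] = field(default_factory=list)
    final_decision: Optional[str] = None
    failure_tag: Optional[str] = None
    started_at: float = field(default_factory=time.time)

    def tag_failure(self, tag: str) -> None:
        # Reject free-form tags so the breakdown stays aggregatable.
        if tag not in FAILURE_TAGS:
            raise ValueError(f"unknown failure tag: {tag}")
        self.failure_tag = tag

    def to_json(self) -> str:
        return json.dumps(asdict(self))  # one audit-trail record per run


def failure_breakdown(traces: List[Trace]) -> List[Tuple[str, int]]:
    """Most common root causes first, to help prioritize fixes."""
    return Counter(t.failure_tag for t in traces if t.failure_tag).most_common()


t1 = Trace("Book the cheapest flight to NYC")
t1.steps.append(TraceStep("Need flight options", "flight_api", "NYC", "504 Gateway Timeout"))
t1.tag_failure("tool_api_timeout")
t2 = Trace("Summarize open tickets")
t2.tag_failure("planning_loop")
t3 = Trace("Refund order 991")
t3.tag_failure("tool_api_timeout")
t4 = Trace("Create a Jira ticket", final_decision="created")
print(failure_breakdown([t1, t2, t3, t4]))
print(t1.to_json())
```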

Integrate Evaluation into CI/CD Pipelines

For software engineering teams, continuous integration/continuous deployment (CI/CD) is standard practice; the same discipline must apply to Agentic AI.

- Mandatory Evaluation Gates: Make performance evaluation a non-negotiable step before any new agent code or prompt is deployed. If the agent’s key metrics (like Task Success Rate) don’t meet a set threshold on your golden benchmark dataset, the pipeline should automatically fail and block the deployment.

- Establish Performance Baselines: Define a stable baseline of performance for the current production agent. Set up regression alerts that trigger if a new code commit causes a significant drop in any key metric (e.g., “If TSR drops by more than 5%,” or “If average latency increases by more than 100ms”). This prevents seemingly small code changes from introducing unexpected and costly degradation in performance.
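A CI evaluation gate usually comes down to comparing the candidate's metrics with a stored baseline and failing the pipeline step when a threshold is crossed. The thresholds in this sketch are hypothetical. It interprets "drops by more than 5%" as 5 percentage points of TSR; a relative 5% drop would be a different check. The function returns a list of reasons rather than exiting, so a CI script could call `sys.exit(1 if reasons else 0)` on the result.

```python
from typing import Dict, List

# Hypothetical thresholds; tune them to your own baseline and risk tolerance.
MIN_TSR = 0.85                  # absolute floor on the golden benchmark
MAX_TSR_DROP = 0.05             # 5 percentage points below the production baseline
MAX_LATENCY_INCREASE_MS = 100


def gate(baseline: Dict[str, float], candidate: Dict[str, float]) -> List[str]:
    """Return the reasons to block deployment; an empty list means the gate passes."""
    reasons = []
    if candidate["tsr"] < MIN_TSR:
        reasons.append(f"TSR {candidate['tsr']:.0%} is below the {MIN_TSR:.0%} floor")
    if baseline["tsr"] - candidate["tsr"] > MAX_TSR_DROP:
        reasons.append("TSR dropped by more than 5 points vs. the baseline")
    if candidate["avg_latency_ms"] - baseline["avg_latency_ms"] > MAX_LATENCY_INCREASE_MS:
        reasons.append("average latency increased by more than 100 ms")
    return reasons


# In CI, these numbers would come from the eval run and the stored production baseline.
baseline = {"tsr": 0.91, "avg_latency_ms": 820.0}
candidate = {"tsr": 0.84, "avg_latency_ms": 950.0}
for reason in gate(baseline, candidate):
    print("BLOCKED:", reason)
```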

Tools For Agentic AI Evaluation

Agentic AI systems, which involve large language models (LLMs) that can reason, plan, and use external tools to execute multi-step tasks, require specialized evaluation tools. Here are some of the most widely used tools in the industry.

| Tool/Platform | Primary Category | Core Function |
|---|---|---|
| LangSmith | Observability & Tracing, Built-in Evaluation | Provides full-stack logging and tracing to capture every step of an agent’s run (LLM calls, tool usage, memory). It natively incorporates LLM-as-a-Judge capabilities to score agent outputs on custom criteria. |
| DeepEval | Evaluation & Tracing, LLM Testing Framework | A testing framework (like Pytest for LLMs) that offers both advanced LLM-as-a-Judge metrics (like G-Eval) and component-level tracing for debugging. |
| Dynatrace, Arize AI, PostHog | Monitoring & Analytics | Tools that provide real-time monitoring of agent performance, cost, and operational metrics in production. |
| RAGAS | Automated Evaluation Frameworks (RAG Metrics) | An open-source framework dedicated specifically to measuring the quality of Retrieval-Augmented Generation (RAG) pipelines. |
| OpenAI Evals | Automated Evaluation Frameworks & Benchmarks | An open-source framework and registry from OpenAI for creating structured, reproducible tests (known as “Evals”) to systematically measure model performance. |
| AgentBench, GAIA | Standardized Benchmarks | Publicly available test suites used to assess an agent’s planning, reasoning, and tool-use capabilities. |
| MLflow Evaluate | LLMOps Evaluation Component | The model evaluation component of MLflow, an MLOps platform. It integrates evaluation metrics (including LLM-specific ones) directly into your model tracking and deployment workflows. |

Apart from these specialized tools, a generative AI-based tool like testRigor can also help with agentic AI evaluation, primarily by providing a robust, stable, and highly adaptable platform for end-to-end testing of the AI agent’s overall performance, its tools, and its user interface. With this tool, you can effectively test the external behavior of the agent, which is crucial for agentic AI evaluation.

- Checking End-to-End Task Success: Agentic AI evaluation focuses on the agent’s ability to complete a multi-step task (its TSR). testRigor is designed for this type of complex, end-to-end user flow. You can write a test case in plain English that describes the goal of the agent, not just a single interaction. For example:

    go to the booking page
    enter "New York" in "destination"
    click "first available flight"
    verify that the price is displayed

If the AI agent is a chatbot that handles this flow via conversation and tool use, testRigor interacts with the final UI or API to validate the outcome. If a mistake in any step of the agent’s internal planning affects the outcome, the final check fails, and the run counts as a failure against the Task Success Rate.

- Testing Tool Use and API Interactions: Agents rely on external tools (APIs, databases, web searches) to complete their tasks. Testing the functionality and reliability of these tools is essential. testRigor has strong capabilities for:

  - API Testing: You can integrate API calls directly into your end-to-end tests to validate that the agent correctly invoked an external service and that the service returned the expected data. These tests require zero coding and can be written in plain English.

  - Data Validation: You can validate data extracted from web pages (via the agent’s actions), emails, SMS, or even databases to ensure the agent used the information correctly in its final output.

- Testing Robustness and Security: Because LLMs receive instructions and data through the same natural-language channel, agents are vulnerable to prompt injection, a key security risk. testRigor allows you to easily inject adversarial or contradictory natural language prompts into the agent’s chat interface (or other input fields) to test its guardrails.

- Stability in Dynamic Environments: Agent UIs and the tools they call can change frequently. Traditional testing tools often break when the underlying code changes. A core feature of testRigor is its AI-based element identification and self-healing tests.

  - It uses Natural Language Processing (NLP) to find UI elements as a human would (click “Submit Button”) rather than relying on brittle technical locators like XPaths.

  - If a button is moved or slightly renamed, the test often self-heals and continues running. This dramatically reduces test maintenance time and cost, and provides a more stable testing environment for rapidly evolving AI agents.


Conclusion

There’s a clear takeaway: as we enter the age of Agentic AI, success must be defined in a fundamentally different way. You can no longer depend on a single, clean metric from a test set. A mix of evals, each covering a different dimension such as task success, tool use, cost, and safety, gives you a multi-dimensional view. The first step is to instrument every level of the agent, from individual tool calls to end-to-end tasks. This kind of deep observability is the only way to debug, audit, and ultimately build reliable systems that deliver business value.
