Different Software Testing Types

“If an end user perceives bad performance from your website, her next click will likely be on your-competition.com.”― Ian Molyneaux

You require thorough software testing to ensure that your user does not click on your competitors’ website/app and stays with you as a loyal customer. But before we look at the different types of software testing, let’s first understand what software testing means.

Software Testing: Definition

Software testing is a systematic process that aims to ensure the quality of a software application by identifying discrepancies between its actual performance and expected outcomes. Testers evaluate the software’s functionality, speed, security, and user-friendliness by using manual techniques and automated tools.

The primary objective is to detect flaws, build confidence that no critical errors remain, and ensure the software aligns with its design goals and functions consistently. This process involves identifying errors ranging from programming bugs and overlooked features to minor glitches. Whether the software is for mobile devices or web browsers, testing ensures that users’ experiences match expectations.

Types of Software Testing

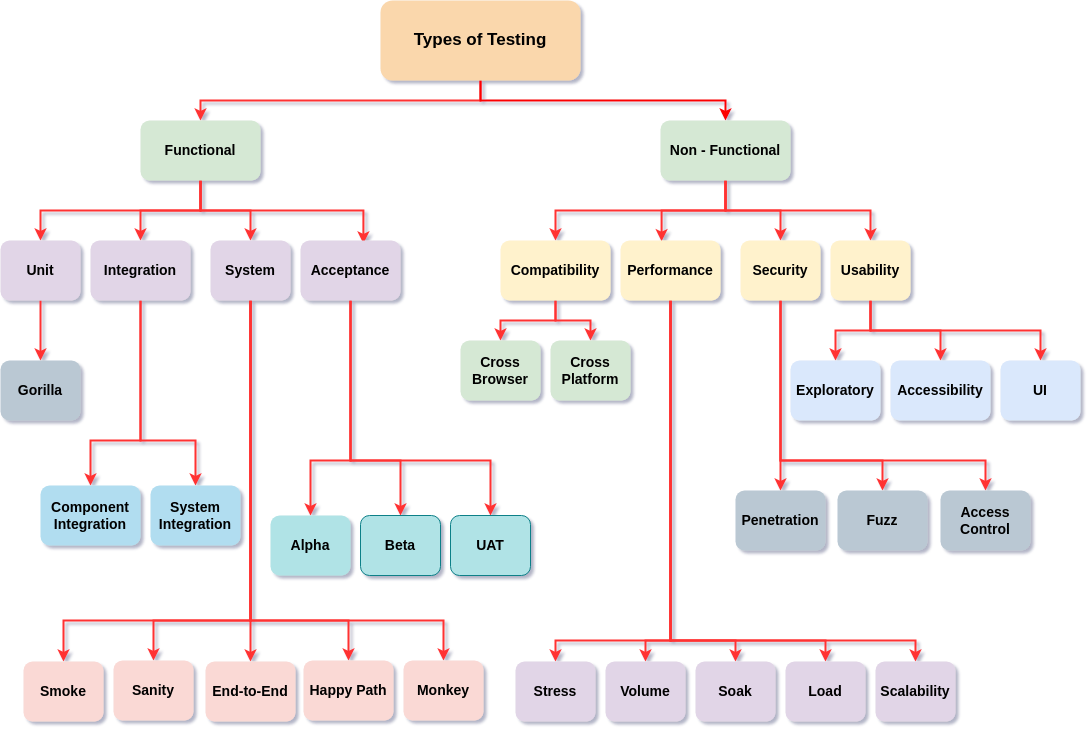

At a high level, software testing can be broadly categorized into –

- Functional Testing

- Non-Functional Testing

So, let’s go through each one and see how they are further classified.

Functional Testing

Functional testing is a form of software testing aimed at ensuring that a software system operates in line with its specified requirements and expectations. This testing method ensures that the system functions as intended and fulfills the functional requirements outlined by stakeholders.

It delves into the actions of the software system, examining how it executes its tasks. Carried out from a user’s viewpoint, functional testing evaluates specific features by supplying inputs and verifying the corresponding outputs.

Functional testing can be further classified into –

- Unit Testing

- Integration Testing

- System Testing

- Acceptance Testing

Unit Testing

It is a software testing method where a software application’s smallest testable components or “units” are individually and independently tested to ensure they function as intended and meet their design specifications. It’s aimed at ensuring that individual parts of the source code are working right, often before they’re integrated with other components.
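As a minimal sketch of what a unit test looks like in practice, the Python snippet below checks one small function in isolation. The function `apply_discount` and its rules are hypothetical, invented only for illustration.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_apply_discount() -> None:
    # Typical value
    assert apply_discount(200.0, 25) == 150.0
    # Boundary values
    assert apply_discount(80.0, 0) == 80.0
    assert apply_discount(80.0, 100) == 0.0
    # Invalid input must be rejected
    try:
        apply_discount(80.0, 120)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")


test_apply_discount()
```

The test touches no database, network, or other module, which is what keeps it a unit test rather than an integration test.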

Gorilla Testing

It involves exhaustive, intensive, and repeated testing of a particular module or function within an application to ensure its robustness and reliability. The name “gorilla” indicates the depth and thoroughness with which the specific unit is tested, akin to beating it aggressively like a gorilla.

Advantages – Detects hidden defects, increases reliability, is simple to perform, and covers the targeted module comprehensively.

Example – Imagine a word processing software application with a feature that saves a document when a user presses the “Save” button. In gorilla testing:

- A tester might consecutively click the “Save” button hundreds or thousands of times to ensure the software doesn’t crash or show any unexpected behavior.

- The tester might also try saving documents of varying sizes and formats repetitively to see if there’s any memory leak or slow down over time.
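The repeated-save scenario above can be sketched in a few lines of Python. The `save_document` function is a hypothetical stand-in for the application’s real save routine; a real gorilla test would drive the actual “Save” feature instead.

```python
import os
import tempfile


def save_document(path: str, text: str) -> int:
    """Hypothetical 'Save' action: write the text and return the bytes written."""
    data = text.encode("utf-8")
    with open(path, "wb") as handle:
        handle.write(data)
    return len(data)


def gorilla_test_save(repetitions: int = 1000) -> int:
    """Hammer the save action repeatedly and verify the file after every call."""
    failures = 0
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "draft.txt")
        for i in range(repetitions):
            # Vary the document size a little on each round.
            text = "lorem ipsum " * (i % 50 + 1)
            written = save_document(path, text)
            if os.path.getsize(path) != written:
                failures += 1
    return failures


print("failed saves:", gorilla_test_save())
```

Any non-zero failure count, or a steady slowdown as the loop progresses, would point at a robustness problem in the save feature.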

Integration Testing

Integration testing is a level of software testing in which individual units or components of an application are combined and tested as a group. Its main objective is to verify that different modules or services work together as expected and to detect defects in the interactions between them.

It ensures that integrated units work cohesively and checks the data communication among these modules. This type of testing is crucial for systems that rely on interconnected modules, as individual modules might function perfectly in isolation but fail when integrated.

Advantages – Detects interface issues, reduces system integration failures, validates data communication, and supports modular development.

Example – Consider an online bookstore application with modules like User Account, Search, Shopping Cart, and Payment. Unit testing checks each of these modules individually, whereas integration testing checks them in combination, for example User Account with Search, or Shopping Cart with Payment.
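To make the bookstore example concrete, here is a small Python sketch that tests the Shopping Cart and Payment modules together. Both classes and the `checkout` function are hypothetical simplifications, not part of any real bookstore system.

```python
class ShoppingCart:
    """Hypothetical cart module."""

    def __init__(self) -> None:
        self.items = {}   # title -> (price, quantity)

    def add(self, title: str, price: float, quantity: int = 1) -> None:
        self.items[title] = (price, quantity)

    def total(self) -> float:
        return round(sum(p * q for p, q in self.items.values()), 2)


class PaymentService:
    """Hypothetical payment module with a fixed account balance."""

    def __init__(self, balance: float) -> None:
        self.balance = balance

    def charge(self, amount: float) -> bool:
        if amount <= 0 or amount > self.balance:
            return False
        self.balance = round(self.balance - amount, 2)
        return True


def checkout(cart: ShoppingCart, payments: PaymentService) -> bool:
    """The integration point: the cart total is handed to the payment module."""
    paid = payments.charge(cart.total())
    if paid:
        cart.items.clear()
    return paid


cart = ShoppingCart()
cart.add("Book A", 30.00)
cart.add("Book B", 45.50, quantity=2)
payments = PaymentService(balance=200.00)

assert checkout(cart, payments) is True
assert payments.balance == 79.00   # 200.00 - (30.00 + 91.00)
assert cart.items == {}
```

Each class could pass its own unit tests and the checkout could still fail, for instance if the cart reported its total in a different unit than the payment module expects. That interaction is what the integration test targets.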

Component Integration Testing

The application’s individual components (or units) are combined and tested. This testing specifically targets the interfaces and interactions between these components.

System Integration Testing

System Integration Testing, or SIT, is a phase in which individual software modules or applications are integrated and tested as a complete system. It focuses on verifying the interactions between different systems or subsystems, ensuring they work cohesively. The primary goal of SIT is to validate that interconnected systems cohesively share and process data, ensuring that the combined system functions as intended.

This testing is crucial for systems that depend on multiple subsystems or external systems, ensuring there are no interface issues between them.

System Testing

System testing focuses on testing the entire software application in an environment that closely mirrors the real world or production environment. It evaluates the application’s functional and non-functional requirements to ensure the complete system behaves as designed. The main goal of system testing is to validate the end-to-end functioning of a software system and ensure it meets the specified requirements. It aims to find defects that might arise when the software runs in its actual environment, considering all its features and functionalities.

Smoke and Sanity Testing

Smoke and sanity testing are both types of preliminary testing, often used to decide whether a software build is stable enough to undergo further, more detailed testing. Let’s discuss them one by one.

Smoke Testing

Smoke Testing, sometimes referred to as “Build Verification Testing”, is a type of software testing where the significant functionalities of an application are tested to determine if they work correctly. It’s like a “first impression” test for a new software build, determining if it’s stable enough for further testing. The primary objective is to ensure that the critical functionalities of an application are working as expected. It helps in detecting significant issues in the initial phases of software development.

Advantages – Early detection of defects, quick feedback, build verification

Example – For a web-based email application, Smoke testing will check if the user can create an account, send or receive email, etc.
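A smoke suite for the email example might look roughly like the sketch below. `FakeEmailApp` is a hypothetical in-memory stand-in for a real build; an actual smoke test would call the deployed application instead.

```python
class FakeEmailApp:
    """Hypothetical in-memory stand-in for the email application's build."""

    def __init__(self) -> None:
        self.accounts = {}

    def create_account(self, address: str) -> bool:
        self.accounts[address] = []
        return True

    def send(self, sender: str, recipient: str, body: str) -> bool:
        if sender not in self.accounts or recipient not in self.accounts:
            return False
        self.accounts[recipient].append((sender, body))
        return True

    def inbox(self, address: str) -> list:
        return self.accounts.get(address, [])


def run_smoke_suite(app: FakeEmailApp) -> dict:
    """Run a few broad, shallow checks on the most critical features."""
    results = {}
    results["create_account"] = (
        app.create_account("a@example.com") and app.create_account("b@example.com")
    )
    results["send_email"] = app.send("a@example.com", "b@example.com", "hello")
    results["receive_email"] = app.inbox("b@example.com") == [("a@example.com", "hello")]
    return results


results = run_smoke_suite(FakeEmailApp())
build_is_stable = all(results.values())
print(results, "->", "accept build" if build_is_stable else "reject build")
```

The suite is deliberately shallow: it only answers whether the build is worth testing further.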

Sanity Testing

Sanity Testing is done after receiving a software build, with minor changes in code or functionality, to ascertain that the bugs have been fixed and that these changes introduce no further issues. The main objective is determining whether the specific functionality or the bug fix works as expected. It’s a narrow regression test focusing on one or a few functionalities.

Advantages – In addition to the benefits of smoke testing, sanity testing narrows the focus to a particular feature or fix.

Example – In the same email application, if there was a previous bug that emails with attachments weren’t being sent, a sanity test after the supposed bug fix would involve sending emails with various attachments to ensure the specific issue was resolved.
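The attachment fix described above could be checked with a narrow test like this one. The `send_email` function is hypothetical and only mimics the fixed behavior.

```python
def send_email(to: str, body: str, attachments=None) -> dict:
    """Hypothetical send routine after the fix; the earlier bug dropped attachments."""
    return {
        "to": to,
        "body": body,
        "attachments": list(attachments or []),
        "status": "sent",
    }


def sanity_check_attachment_fix() -> bool:
    """Exercise only the repaired behavior: emails with zero, one, or several attachments."""
    cases = [[], ["report.pdf"], ["a.png", "b.png", "notes.txt"]]
    for files in cases:
        message = send_email("user@example.com", "see attached", files)
        if message["status"] != "sent" or message["attachments"] != files:
            return False
    return True


print("attachment fix verified:", sanity_check_attachment_fix())
```

Unlike the smoke suite, this check ignores account creation and other features and concentrates on the one area that changed.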

End-to-End Testing

End-to-end (E2E) testing is a comprehensive software testing methodology that evaluates an application’s behavior flow from start to finish. It ensures that the entire data flow process and interactions between integrated components, systems, and databases work seamlessly, simulating real-world scenarios.

Advantages – Exercises real-world scenarios, helps ensure data integrity, validates integrations, and reduces future costs.

Example – Consider an e-commerce platform and a test scenario with the following steps:

- A new user registers, with their details traversing from the front-end UI, being validated by the Business Logic Layer, and finally stored in the database via the Data Access Layer. Post-registration, the user browses products.

- The items displayed are fetched from the database, passing through the data access and business logic layers to the front end.

- The user then adds a product to their cart and checks out, triggering inventory checks and payment processing through all layers.

- Upon successful purchase, the database logs the transaction, updating product quantities.

- Finally, the user leaves feedback captured on the UI, processed by the business logic, and stored in the database.

Happy Path Testing

Happy Path Testing, or Positive Path Testing, involves testing a system with expected inputs and conditions to achieve a known outcome. It tests the application by inputting only valid data and ensuring it behaves as expected without encountering exceptions or errors.

Advantages – A quick indication of the system’s health, the foundation for further testing, confirms positive flow.

Example – Consider an online login form where the user enters a correct username and password and clicks the “Login” button. The application successfully logs the user in and redirects them to the dashboard.

In this scenario, Happy Path Testing would only consider the situation where a user provides the correct username and password and expects a successful login. It wouldn’t test for incorrect passwords, empty fields, or other potential error scenarios.
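In code, a happy path test for this login form can be as short as the sketch below. The `USERS` store, the `login` function, and the redirect paths are all hypothetical.

```python
# Hypothetical credential store; plain text is used only to keep the sketch short.
USERS = {"alice": "s3cret!"}


def login(username: str, password: str) -> str:
    """Return the page the user lands on after submitting the form."""
    if username in USERS and USERS[username] == password:
        return "/dashboard"
    return "/login?error=1"


def test_login_happy_path() -> None:
    # Only the valid-credentials flow is exercised here.
    assert login("alice", "s3cret!") == "/dashboard"


test_login_happy_path()
```

Wrong passwords and empty fields are left to separate negative tests.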

Adhoc Testing

Adhoc Testing, sometimes called “random testing” or “monkey testing”, tests the system by providing random inputs and observing its behavior without any predefined test cases or systematic approach. It aims to uncover system crashes or unpredictable behavior under unplanned conditions. It seeks to discover unknown issues in the software by simulating how a user might behave when interacting with the software without any specific goal or knowledge.

Advantages – Discover unexpected scenarios, extensive coverage, no pre-knowledge required, robustness check

Acceptance Testing

It is a formal phase of software testing where the client or end-user validates the system to ensure that it meets its specified requirements and determines whether it’s acceptable for delivery. This type of testing is typically the last phase before the software product goes live or is delivered to the customer to:

- Validate the complete functionality of the application against business requirements.

- Ensure the system is ready for release and satisfies user needs.

- Confirm that specified criteria are met, assuring the stakeholders that the product delivers the expected value.

Acceptance testing can be classified as Alpha, Beta, and User Acceptance Testing.

Alpha Testing

Alpha testing is one of the user acceptance testing phases where the software product is tested in-house, i.e., within the organization that developed it, before being released to external users. It is typically conducted after system testing and before beta testing. Alpha testing often involves both the testing and development teams operating in a controlled environment.

Advantages – Reduces post-release defects, helps identify and fix major bugs before the product reaches beta testers or the public, and gives developers immediate feedback, allowing for swift corrections.

Example – Consider a software company developing a new word processing application. During Alpha testing –

- A select group of internal testers is chosen to use the application as if they were the end users.

- They create documents, format text, insert images, save files, print documents, and use other application features.

- While doing so, they provide feedback regarding usability, performance, and potential bugs.

- The development team receives this feedback and works on resolving any identified issues before proceeding to the beta testing phase.

Beta Testing

Beta testing happens when a nearly finished product is released to a limited group of external users who are not a part of the development team for evaluation, feedback, and bug identification. It follows alpha testing and is conducted outside the development team, allowing real users to test the software in real-world conditions.

Advantages

- It helps in understanding the product’s market fit and potential areas of improvement.

- Catches and addresses potential issues before a full-scale release, thus avoiding costly post-release fixes.

- Direct feedback from actual users helps identify real-world issues and gauge user satisfaction.

Example – Consider a company developing a new mobile game. During beta testing, the following steps occur:

- The company releases the game to select users who signed up for the beta test.

- These users play the game on their devices, exploring features, completing levels, and using in-game purchases.

- They provide feedback on game mechanics, potential glitches, graphics quality, and enjoyment.

- Some users might report crashes or difficulties with certain levels on specific phone models.

- The company collects all this feedback, addresses the issues, and proceeds with the game’s final release, ensuring a smoother experience for the wider audience.

User Acceptance Testing

UAT, or User Acceptance Testing, is the final software testing phase before formally releasing the software. In this phase, the users test the software to ensure it meets their needs and requirements. It verifies that the solution works for the user and can handle required tasks in real-world scenarios according to specifications.

Advantages –

- Ensures the system meets business needs.

- It lets users become familiar with the new system before it goes live.

- Offers a chance to get feedback directly from end-users, ensuring their satisfaction.

Example – Suppose a bank is deploying a new online banking platform. Before launching it to all its customers, the bank invites a select group of employees and perhaps some long-term customers to use the platform. They’re asked to perform various tasks like transferring money, checking balances, and setting up recurring payments.

During this testing phase, one user identifies that the system doesn’t confirm setting up a recurring payment, leaving them unsure if the action was successful. The bank can rectify this issue based on the feedback before the platform’s wider release. This is a simplified representation of UAT in action.

Non-Functional Testing

Non-functional testing evaluates a software application’s non-functional attributes, such as performance, usability, and reliability, rather than specific behaviors. Instead of examining if a system performs its intended tasks, non-functional testing ensures that the software meets standards for user experience, operates efficiently under load, remains secure against potential threats, and functions reliably across different environments and devices.

Essentially, it’s about ensuring the software not only works correctly but also delivers a quality user experience in various real-world scenarios.

Non-functional testing can be classified into:

- Security testing

- Performance testing

- Usability testing

- Compatibility testing

Security Testing

Security testing is a type of software testing that identifies vulnerabilities, threats, and risks and verifies that the software system is protected against potential attacks. It’s designed to find weaknesses in the system’s defenses that might allow unauthorized access, data breaches, or other malicious activities.

Different types of security testing are:

- Penetration testing

- Fuzz testing

- Access control testing

Penetration Testing

Penetration testing, often called “pen testing” or “ethical hacking,” involves simulating cyberattacks on a software application, network, or computing environment to identify vulnerabilities that malicious actors could exploit. It is an in-depth form of security testing performed from the perspective of potential attackers.

Advantages – Proactive security, informed decision-making, regulatory compliance, and trust building.

Example – An e-commerce company decides to undergo penetration testing to ensure the security of its platform. A team of ethical hackers attempts to exploit potential vulnerabilities. They might use techniques like SQL injection to try to access the database or attempt to bypass authentication mechanisms to gain unauthorized access. By the end of the test, the company will have a clear picture of potential security lapses. It can work on fortifying its defenses before any actual attacker attempts to exploit them.
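The SQL injection technique mentioned above can be demonstrated safely against an in-memory SQLite database using Python’s standard `sqlite3` module. The table, the credentials, and both login functions are hypothetical; the point is only to contrast string-built SQL with a parameterized query.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 'wonderland')")
    return conn


def login_vulnerable(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Deliberately unsafe: user input is pasted straight into the SQL text.
    query = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None


def login_parameterized(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Placeholders keep the input as data, never as SQL.
    query = "SELECT 1 FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone() is not None


conn = make_db()
payload = "' OR '1'='1"   # classic injection string supplied as the password

print("vulnerable login bypassed:", login_vulnerable(conn, "alice", payload))
print("parameterized login bypassed:", login_parameterized(conn, "alice", payload))
```

A penetration tester would try payloads like this against the real login endpoint; the fix on the development side is usually the parameterized form.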

Fuzz Testing

Fuzz testing, often simply referred to as “fuzzing,” is a software testing technique that involves feeding a system with large amounts of random or malformed data to find vulnerabilities, particularly those related to crashes or exploitable conditions. The goal is to stress-test an application beyond typical user scenarios to identify weak points that might not surface during regular testing.

Advantages – Automated vulnerability discovery, proactive security, enhanced software stability, comprehensive input testing

Example – Imagine a web application that accepts image uploads. A fuzz tester might automatically send thousands of malformed image files to see if they cause the application to crash or behave unexpectedly. For instance, by introducing an error in the header of a PNG image, the fuzzer tests if the application can gracefully handle or reject such a file. If the malformed image causes the system to crash or, worse, allows arbitrary code execution, the developers know they have a severe vulnerability to address.
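A very small fuzzer for the PNG header case could be sketched as follows. The 8-byte PNG signature is real, but `parse_png_header` is a hypothetical, heavily simplified parser, and the mutation strategy is only one of many possible choices.

```python
import random

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"   # the standard 8-byte PNG file signature


class InvalidImage(Exception):
    """Raised when an upload is rejected cleanly."""


def parse_png_header(data: bytes) -> tuple:
    """Hypothetical, simplified parser: return (width, height) from the IHDR chunk."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise InvalidImage("not a PNG header")
    width = int.from_bytes(data[16:20], "big")
    height = int.from_bytes(data[20:24], "big")
    if width == 0 or height == 0:
        raise InvalidImage("zero-sized image")
    return width, height


def fuzz(iterations: int = 5000, seed: int = 0) -> int:
    """Mutate a valid header at random; count crashes other than clean rejections."""
    rng = random.Random(seed)
    valid = (
        PNG_SIGNATURE
        + (13).to_bytes(4, "big")      # IHDR chunk length
        + b"IHDR"
        + (640).to_bytes(4, "big")     # width
        + (480).to_bytes(4, "big")     # height
    )
    crashes = 0
    for _ in range(iterations):
        mutated = bytearray(valid)
        # Overwrite between one and four random bytes.
        for _ in range(rng.randint(1, 4)):
            mutated[rng.randrange(len(mutated))] = rng.randrange(256)
        # Sometimes truncate the file as well.
        cut = rng.randint(0, len(mutated))
        sample = bytes(mutated[:cut]) if rng.random() < 0.3 else bytes(mutated)
        try:
            parse_png_header(sample)
        except InvalidImage:
            pass            # graceful rejection is the desired behavior
        except Exception:
            crashes += 1    # anything else would be a bug worth reporting
    return crashes


print("unexpected crashes:", fuzz())
```

Production fuzzers are coverage-guided and far more sophisticated, but the loop is the same: mutate, feed, and watch for anything other than a clean rejection.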

Access Control Testing

It focuses on verifying and validating the effectiveness of mechanisms that restrict unauthorized access to resources, functions, or data within a system. It ensures that users (or systems) can perform only those actions they are permitted to and are restricted from those they’re not.

Advantages – Data protection, compliance, auditing and monitoring, trust building.

Example – Consider a company’s internal portal with different access levels for employees, managers, and administrators where:

- Employees can view their personal information and pay stubs but cannot access other employees’ data.

- A manager may have access to the data of all team members, along with certain managerial functionalities like approving time off.

- An administrator may have the right to add or remove user accounts and change system configurations.

Access control testing, in this scenario, would involve:

- Confirming that an employee cannot access another employee’s personal data or managerial functions.

- Checking that a manager can access team data but cannot perform administrative tasks.

- Ensuring that administrators have all the rights they need.

- Trying to bypass these controls to see if any vulnerability or loophole might allow unauthorized access.
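The checks listed above translate naturally into a table of expected outcomes. In this sketch the role names follow the example, while the permission names and the `is_allowed` function are hypothetical.

```python
PERMISSIONS = {
    "employee": {"view_own_profile", "view_own_pay_stub"},
    "manager": {"view_own_profile", "view_own_pay_stub", "view_team_data", "approve_time_off"},
    "administrator": {"view_own_profile", "view_own_pay_stub", "manage_accounts", "change_configuration"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unknown actions are refused."""
    return action in PERMISSIONS.get(role, set())


# (role, action, expected result) triples covering both allowed and forbidden cases.
EXPECTED = [
    ("employee", "view_own_pay_stub", True),
    ("employee", "view_team_data", False),
    ("employee", "approve_time_off", False),
    ("manager", "view_team_data", True),
    ("manager", "manage_accounts", False),
    ("administrator", "manage_accounts", True),
    ("administrator", "change_configuration", True),
    ("guest", "view_own_profile", False),   # a role that does not exist
]

violations = [(role, action) for role, action, expected in EXPECTED if is_allowed(role, action) != expected]
print("violations:", violations)
```

The negative cases matter at least as much as the positive ones, since access control failures are usually about something being allowed that should not be.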

Performance Testing

It focuses on determining how a system performs under a particular workload. Its main goal is not to find functional bugs but to identify performance bottlenecks in the system.

We can check the performance of an application through load, stress, soak (endurance), volume, scalability, and recovery testing. Let’s go through the most common ones one by one.

Load Testing

It is a subtype of performance testing that focuses on determining a system’s behavior under a specific expected load. This typically involves simulating many users interacting with the system simultaneously to observe how it responds when subjected to standard and peak load conditions.

Advantages – Prevent downtime, improve user experience, cost efficiency, informed scaling decisions, and risk reduction.

Example – Consider a popular online ticket booking platform that expects a surge in traffic due to a significant event or concert going on sale. Load testing for this platform might involve:

- Simulating the expected number of users trying to purchase tickets simultaneously as soon as they are released.

- Monitoring server health, database response times, and application responsiveness during this simulated load.

- Increasing the load gradually to determine the maximum number of users the platform can support without affecting performance or causing errors.

- Analyzing the results to identify areas of improvement, such as server scaling, database optimizations, or frontend enhancements.
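The steps above can be sketched with Python’s standard thread pool. Here `purchase_ticket` is a hypothetical handler whose short sleep stands in for real server work; a real load test would issue requests against the deployed platform, typically with a dedicated load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def purchase_ticket(user_id: int) -> float:
    """Hypothetical request handler; returns the observed latency in seconds."""
    started = time.perf_counter()
    time.sleep(0.01)   # stand-in for server-side work
    return time.perf_counter() - started


def run_load(concurrent_users: int) -> dict:
    """Fire one request per simulated user at once and summarize the latencies."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = sorted(pool.map(purchase_ticket, range(concurrent_users)))
    p95_index = max(0, int(len(latencies) * 0.95) - 1)   # approximate 95th percentile
    return {
        "users": concurrent_users,
        "requests": len(latencies),
        "p95_seconds": latencies[p95_index],
        "max_seconds": latencies[-1],
    }


# Increase the load gradually and watch how the latency figures move.
for users in (10, 50, 100):
    print(run_load(users))
```

Percentile latencies are usually more informative than averages here, because a small share of very slow requests can hide behind a healthy-looking mean.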

Stress Testing

Stress testing evaluates a system’s robustness, stability, and reliability under extreme conditions. It involves subjecting the software to conditions beyond its specifications to observe how it behaves and at what point it breaks or fails to recover. It helps to identify the system’s breaking points or failure conditions.

It ensures that the system fails gracefully and safely without compromising security or causing data loss and determines how the system recovers after the extreme conditions are normalized.

Advantages – System robustness, risk mitigation, resource optimization, graceful failures, insight into scalability.

Example – Imagine a banking application server designed to handle 1,000 simultaneous transactions. In stress testing, the server might be subjected to 5,000 concurrent transactions, well beyond its intended capacity, to observe its behavior. During the test:

- The response times of transactions might increase significantly.

- Some transactions might fail for various reasons, like database timeouts or system resource constraints.

- The server might eventually crash if pushed too hard.

The test would then help determine the following:

- How the server behaves as it approaches its limit.

- Whether any data gets corrupted or lost during the extreme load.

- If there are any security vulnerabilities exposed when the system is under stress.

- How the server recovers or needs to be recovered after the stress is removed or reduced.
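A scaled-down version of the banking scenario can be simulated in Python. `TransactionServer` is a hypothetical model with a fixed capacity; the sketch pushes five times that capacity at it and checks that the excess is refused cleanly rather than corrupting the committed count.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


class TransactionServer:
    """Hypothetical server that can hold at most `capacity` open transactions."""

    def __init__(self, capacity: int) -> None:
        self._slots = threading.BoundedSemaphore(capacity)
        self._lock = threading.Lock()
        self.committed = 0

    def begin(self) -> bool:
        # Refuse immediately when full instead of queueing or crashing.
        return self._slots.acquire(blocking=False)

    def commit(self) -> None:
        with self._lock:
            self.committed += 1
        self._slots.release()


def stress(capacity: int, clients: int) -> Counter:
    server = TransactionServer(capacity)
    all_started = threading.Barrier(clients)
    all_attempted = threading.Barrier(clients)

    def client(_: int) -> str:
        all_started.wait()      # fire every request at the same moment
        accepted = server.begin()
        all_attempted.wait()    # keep slots occupied until everyone has tried
        if accepted:
            server.commit()
            return "ok"
        return "rejected"

    with ThreadPoolExecutor(max_workers=clients) as pool:
        outcomes = Counter(pool.map(client, range(clients)))
    outcomes["committed"] = server.committed
    return outcomes


print(stress(capacity=10, clients=50))
```

The two barriers make the overload deterministic: every client attempts to start a transaction while all accepted transactions are still open, so exactly `capacity` requests succeed and the rest are rejected gracefully.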

Soak Testing

Soak testing, also known as endurance testing, involves subjecting a system to a continuous, expected production load for an extended period to discover how the system behaves. It’s primarily aimed at identifying performance issues that might surface with prolonged execution, such as memory leaks or system slowdowns.

Advantages – System reliability, optimized resource utilization, prevents downtime, enhanced user experience.

Example – Imagine a streaming service like Netflix that users often binge-watch shows on weekends. For soak testing, the application would be continuously run for 48 hours straight, simulating users watching multiple episodes back-to-back without breaks. This helps identify if the system can sustain prolonged activity without memory leaks, slowdowns, or other issues arising from extended use. If, after many hours, the application starts buffering excessively or crashes, it may indicate potential problems that need addressing.

Based on the results:

- Developers can identify if there are memory leaks in the system.

- Optimizations can be made to improve resource allocation and release.

- Fixes can be implemented to ensure that long-term viewers avoid hiccups or performance issues while using the service.
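Memory leaks of the kind mentioned above can be spotted by comparing memory use before and after a long run. The sketch below uses Python’s standard `tracemalloc` module; both request handlers are hypothetical, and the loop is compressed into thousands of iterations rather than the hours or days a real soak test would take.

```python
import tracemalloc

_history = []   # the deliberate bug: this list grows forever


def handle_request_leaky(payload: bytes) -> int:
    _history.append(payload)   # keeps a reference to every payload ever seen
    return len(payload)


def handle_request_clean(payload: bytes) -> int:
    return len(payload)


def soak(handler, iterations: int = 20000) -> int:
    """Return the growth in traced memory (bytes) across a long run."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    for _ in range(iterations):
        handler(bytes(1024))   # a fresh 1 KiB payload per simulated request
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


print("clean handler growth (bytes):", soak(handle_request_clean))
print("leaky handler growth (bytes):", soak(handle_request_leaky))
```

Growth that keeps climbing with the number of iterations, as with the leaky handler, is the signal a soak test looks for.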

Volume Testing

It involves subjecting the software to a large volume of data. It is conducted to analyze the system’s behavior under varying amounts of data, significantly beyond the anticipated amounts in real-life use cases. This test primarily focuses on the database and checks the response of the application when the database is subjected to a large volume of data.

Advantages – Increases reliability, provides performance insight, reduces risk, and guides optimization.

Example – Consider a banking application that typically handles a few thousand transactions daily. For volume testing, the system might be made to process several million transactions within a few hours to see how it copes. If the application starts to slow down or produce errors during this surge, it indicates areas that need optimization or improvement to handle large data volumes.
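As a rough illustration, the sketch below loads an in-memory SQLite database with increasing row counts and times the same query at each size. The table layout and the generated data are hypothetical, and the row counts are kept small so the example runs in seconds.

```python
import sqlite3
import time


def load_transactions(row_count: int) -> sqlite3.Connection:
    """Create a fresh database and bulk-insert synthetic transactions."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transactions (id INTEGER PRIMARY KEY, account INTEGER, amount REAL)"
    )
    rows = ((i, i % 1000, (i % 500) / 10) for i in range(row_count))
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def timed_account_total(conn: sqlite3.Connection, account: int) -> tuple:
    """Run one representative query and return (total, row count, seconds taken)."""
    started = time.perf_counter()
    total, count = conn.execute(
        "SELECT COALESCE(SUM(amount), 0), COUNT(*) FROM transactions WHERE account = ?",
        (account,),
    ).fetchone()
    return total, count, time.perf_counter() - started


for rows in (1_000, 100_000):
    conn = load_transactions(rows)
    total, count, seconds = timed_account_total(conn, account=7)
    print(f"{rows:>7} rows: {count} matching rows in {seconds:.4f}s")
    conn.close()
```

Because the `account` column has no index, query time grows with the table size, which is exactly the kind of finding a volume test is meant to surface.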

Scalability Testing

It is a subset of performance testing that evaluates a system’s capability to grow and manage increased load, whether by scaling up or out. This kind of testing determines at what point the system’s performance starts to degrade or fails to scale.

Advantages – Future preparedness, cost-effective, optimized performance, reduction of risks, ensures stability.

Example – Consider a streaming service currently supporting 10,000 concurrent viewers. With an anticipated release of a popular show, they expect a spike to 100,000 viewers. Scalability testing simulates this viewer increase and monitors whether the service can handle the load without lag or crashes. If the streaming remains smooth, the service is deemed scalable for the expected spike; if not, adjustments are required.

Usability Testing

Usability testing evaluates a product or system’s user interface (UI) and overall user experience (UX) by observing real users interacting with it. The goal is to identify usability problems, gather quantitative data on participants’ performance (like completion rates, error rates, and task time), and determine participants’ satisfaction with the product.

Usability testing can be categorized into Exploratory, Accessibility, and User Interface Testing.
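The quantitative measures mentioned above (completion rate, error rate, and task time) are simple to compute once sessions are recorded. The session records below are invented sample data, and the field names are hypothetical.

```python
from statistics import mean

# Hypothetical observations: one record per participant attempting the same task.
sessions = [
    {"participant": "P1", "completed": True, "errors": 0, "seconds": 42.0},
    {"participant": "P2", "completed": True, "errors": 2, "seconds": 75.5},
    {"participant": "P3", "completed": False, "errors": 4, "seconds": 120.0},
    {"participant": "P4", "completed": True, "errors": 1, "seconds": 58.5},
]


def summarize(records: list) -> dict:
    completed = [r for r in records if r["completed"]]
    return {
        "completion_rate": len(completed) / len(records),
        "errors_per_participant": mean(r["errors"] for r in records),
        # Task time is reported here for successful attempts only.
        "mean_seconds_when_completed": mean(r["seconds"] for r in completed),
    }


print(summarize(sessions))
```

Numbers like these complement, rather than replace, the qualitative observations gathered while watching participants use the product.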

Exploratory Testing

It is an informal and unscripted testing approach where testers actively explore the application, relying on their skills, experience, and intuition to find defects. Instead of following a predefined set of test cases, testers dynamically design and execute tests based on their discoveries during the testing process.

Advantages – Offers a fresh perspective on the application, can uncover critical defects, and is particularly useful in situations with limited documentation.

Example – Consider a new mobile app designed for photo editing. Instead of just following scripted tests (e.g., “apply filter X to photo Y”), a tester using exploratory testing might experiment with the app by taking a photo, using various editing tools in different combinations, trying to undo and redo edits, sharing the edited photo, and so on. During this exploration, the tester might discover that applying specific edits causes the app to crash or that a particular tool doesn’t work as intuitively as intended.

Accessibility Testing

Accessibility testing checks whether a digital product, like a software application or website, can be used by people with disabilities. This form of testing ensures that all potential users, regardless of their physical or cognitive abilities, can access and utilize the product effectively.

Advantages – Makes products usable by a wider audience, including people with disabilities; helps adhere to legal and regulatory requirements; and expands the potential user base and customer market.

Example – A company has developed a new e-learning platform. During accessibility testing, testers use screen readers to navigate the platform, ensuring that all the text content is readable and that all interactive elements, like buttons or links, are selectable and functional. They also verify that the platform can be navigated using only a keyboard (for those who can’t use a mouse) and that videos have captions or subtitles for users who are deaf or hard of hearing. Identifying and rectifying barriers makes the e-learning platform more accessible to a broader range of users.
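Some of these checks can be partly automated. The sketch below uses Python’s standard `html.parser` to flag a few common problems in a page’s markup. The three rules are illustrative assumptions, not a complete accessibility standard, and flagging every empty `alt` attribute is stricter than needed for purely decorative images.

```python
from html.parser import HTMLParser


class AccessibilityAudit(HTMLParser):
    """Collect a few simple accessibility issues while parsing HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and not (attributes.get("alt") or "").strip():
            self.issues.append("img without alt text")
        if tag == "video" and "controls" not in attributes:
            self.issues.append("video without keyboard-operable controls")
        if tag in ("a", "button") and attributes.get("tabindex") == "-1":
            self.issues.append(f"{tag} removed from keyboard tab order")


def audit(html: str) -> list:
    parser = AccessibilityAudit()
    parser.feed(html)
    parser.close()
    return parser.issues


page = """
<img src="logo.png" alt="Company logo">
<img src="chart.png">
<button tabindex="-1">Submit</button>
<video src="lesson.mp4" controls></video>
"""
print(audit(page))
```

Automated checks like this catch only a fraction of accessibility barriers, so manual testing with screen readers and keyboard-only navigation remains necessary.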

User Interface Testing

It is the process of testing the graphical interface of a software application, ensuring that it meets the specified design requirements. It primarily focuses on how users interact with the software, checking elements like buttons, menus, icons, text boxes, and other interface functionalities to ensure they work correctly and provide a positive user experience.

Advantages – Ensures the software or application is user-friendly and intuitive, and detects visual and functional interface issues before they reach the end user. A well-tested UI often leads to greater user satisfaction and retention.

Example – Suppose a company has developed a new mobile banking app. During UI testing, testers would validate that when a user taps the “Transfer Money” button, it opens the correct screen. They would also check design aspects, like ensuring that the text is readable, icons are appropriately sized and intuitive, and the overall look and feel match the brand’s design guidelines.

Testers might also resize the app on different devices to ensure the design remains consistent and functional. Any discrepancies or issues discovered during this process would be noted and rectified to ensure the end-user has a seamless experience.

Compatibility Testing

Compatibility testing ensures an application runs as expected across different environments, devices, operating systems, browsers, and network configurations. It assesses the software’s ability to function under various conditions and confirms that it performs and interacts consistently in each of them.

Cross-browser and cross-platform are two types of compatibility testing. Let’s explore each.

Cross-Browser Testing

It is software testing where an application or a website is tested across multiple browsers to ensure consistency and functionality. This testing verifies that the software behaves as expected when accessed through different browsers, ensuring that layout and features remain consistent.

Advantages – Ensures a consistent look and feel across all browsers, guarantees that users of less popular browsers still have a positive experience, and builds user trust and confidence as they experience consistent behavior irrespective of the browser used.

Example – Consider an online e-commerce store that introduces a new checkout feature. Cross-browser testing would involve checking the functionality and appearance of this checkout process on browsers such as Chrome, Firefox, Safari, Edge, and Opera. Suppose testing reveals that a particular “Apply Coupon” button doesn’t render correctly on Safari. This discrepancy is then resolved to ensure all users, regardless of their browser choice, have a seamless checkout experience.

Cross-Platform Testing

Cross-platform testing checks an application or software product across multiple operating systems or platforms to ensure its correct functionality, appearance, and behavior. This ensures that software provides a consistent experience whether accessed on Windows, macOS, Linux, iOS, Android, or any other platform.

Advantages – Consistency, wider reach, increased reliability, cost-effectiveness.

Example – Imagine a mobile application developed for Android and iOS platforms. Cross-platform testing would involve testing the app on different devices running various versions of Android and iOS. During the testing process, it might be observed that a specific feature works smoothly on Android devices but crashes on iPhones with older iOS versions. This inconsistency would be documented and resolved to ensure users on both platforms enjoy a harmonious experience with the app.
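Cross-platform testing usually starts from a matrix of platforms, versions, and features to cover. The sketch below builds such a matrix; the version numbers, the feature names, and the `run_feature` stub (which simulates the crash on an older iOS version described above) are all hypothetical.

```python
from itertools import product

# Hypothetical platform versions and features in scope for the test cycle.
PLATFORMS = {"Android": ["12", "13", "14"], "iOS": ["15", "16", "17"]}
FEATURES = ["login", "checkout", "push_notifications"]


def build_matrix() -> list:
    """Every (platform, version, feature) combination that needs a test run."""
    return [
        (platform, version, feature)
        for platform, versions in PLATFORMS.items()
        for version, feature in product(versions, FEATURES)
    ]


def run_feature(platform: str, version: str, feature: str) -> bool:
    """Stand-in for a real device run; simulates one platform-specific crash."""
    return not (platform == "iOS" and version == "15" and feature == "checkout")


matrix = build_matrix()
failures = [case for case in matrix if not run_feature(*case)]
print(f"{len(matrix)} combinations, failures: {failures}")
```

In a real setup, `run_feature` would launch the app on a physical device or emulator for the given platform and version.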

How Does testRigor Help?

Most testing types we discussed can be performed manually or through automation. Automation offers advantages such as time savings and more extensive coverage with fewer resources. However, a common drawback is that many automation tools are tailored to a specific type of testing.

Only a few tools support a broad range of testing methods, which is where testRigor distinguishes itself. Let’s look at it in detail.

- Browser and Platform testing – testRigor supports executing test scripts across multiple browsers and platforms, covering compatibility testing.

- System Testing – testRigor is designed to focus on system testing, ensuring it supports all forms of system testing.

- Usability Testing – testRigor supports visual testing and accessibility testing.

- Load Testing – Using testRigor, we can also perform load testing.

- Different types of testing – testRigor supports web and mobile browser testing, mobile app testing, desktop testing, API testing, etc.

A few other exciting features that testRigor offers are –

- Generative AI – Leveraging generative AI, testRigor can create an entire test case autonomously. The QA individual simply needs to provide the test case title or description.

- Parsed Plain Language – testRigor assists manual QA professionals in developing automation scripts using plain English, thus eliminating the reliance on programming languages.

- Image Recognition – testRigor processes text within images using OCR and recognizes buttons or texts through machine learning-driven image classification.

You can explore the extensive features supported by testRigor here.

Wrapping Up

Software testing has many types, each examining a different aspect of a software application. Together, they ensure the software works correctly and is fast, user-friendly, and secure. Companies want their software tested thoroughly. Given the competitive market, they also aim to release software quickly and update it often, which requires quick and efficient testing. Tools like testRigor help with this, reducing testing time and leading to faster releases with higher quality.
