How to use AI to test AI

These days, artificial intelligence is part of almost every application. It shows up as large language models (LLMs) like ChatGPT or as generative AI features such as summaries of reviews or content. Traditional test automation tends to struggle with these features because of AI’s dynamic nature. To cover all grounds when testing such applications, AI-powered test automation tools are going to be the way ahead.

Let’s understand more about why you need AI to test AI.

Challenges with Testing AI Features

The dynamic nature of AI can confuse your traditional test automation. Here’s why:

- Unpredictability of AI Behavior: AI systems, especially machine learning models, can behave unpredictably. They learn from data, and the way they respond to new or unseen inputs can change over time. This makes it hard to know exactly what to expect from them, which makes creating automated tests tricky. A test that passes today might fail tomorrow if the model has “learned” something new.

- Black-Box Nature of AI: Many AI systems are considered “black boxes,” meaning we can’t easily see how they make decisions. We might know the inputs and the outputs, but understanding the inner workings of the model is much harder. This makes it tough to know whether a test has covered all aspects of the system and whether the system is functioning as expected.

- Complexity of AI Systems: AI models, like large language models (LLMs) or image recognition tools, can be very complex. They don’t just follow a set of instructions like traditional software. Instead, they learn from large amounts of data and adjust their behavior based on patterns they detect. This complexity makes it harder to test every possible scenario, especially as the models evolve.

- Difficulty in Creating Test Data: For AI testing, you need lots of diverse data to cover all possible situations the AI could face. However, generating enough quality test data can be a challenge. AI systems may also require data that includes edge cases (rare or extreme situations), and it’s difficult to come up with test data that represents all possible outcomes.

- Bias and Fairness Testing: AI models can sometimes make biased decisions based on the data they’ve learned from, but detecting bias can be tricky. Testing for fairness in AI is complicated because you need to evaluate the AI’s behavior across different groups and scenarios.

- Performance and Accuracy Issues: AI models can be tested for things like speed, accuracy, and reliability. But measuring performance can be difficult because it’s not always clear what “success” looks like. For example, in a language model, what might be considered a “correct” answer can vary. So automated tests may not always catch these nuances.

- Integration with Existing Systems: AI systems don’t operate in isolation. They are often integrated into larger software applications. Testing how an AI system interacts with other parts of the software or the real world can add extra complexity. Automation needs to ensure that the AI features work not only by themselves but also within the full context of the application.

How does AI help test AI?

With AI-based testing tools, you can tackle the problem points that challenge traditional test automation systems.

Intelligent Test Case Generation

- Automated Scenario Creation: AI algorithms analyze requirements, user stories, and even the AI model’s behavior to automatically generate relevant and diverse test cases. This reduces the manual effort in creating comprehensive test suites.

- Risk-Based Prioritization: AI can prioritize test cases based on the potential risk and impact of failure, to make sure critical functionalities are tested thoroughly.

- Handling Variability: AI can generate test cases that account for the non-deterministic nature of AI, including variations in input data and expected output ranges.
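
To make the idea of handling variability concrete, here is a minimal Python sketch. It assumes a hypothetical `fake_summarizer` that stands in for a non-deterministic AI feature, and it checks properties that any acceptable output should satisfy instead of comparing against one exact expected string. The function names, the transforms, and the word limit are all illustrative assumptions, not part of any specific tool.

```python
import random

def generate_variants(prompt, rng, n=5):
    """Produce simple input variations (case, spacing, punctuation) of one prompt."""
    transforms = [
        str.lower,
        str.upper,
        str.title,
        lambda s: f"  {s}  ",
        lambda s: s.rstrip("?") + " ??",
    ]
    return [rng.choice(transforms)(prompt) for _ in range(n)]

def fake_summarizer(text, rng):
    """Hypothetical stand-in for an AI feature: returns a random-length prefix."""
    words = text.split()
    return " ".join(words[: rng.randint(3, 6)])

def summary_is_acceptable(summary, source, max_words=6):
    """Property checks: non-empty, bounded length, only words from the source."""
    words = summary.split()
    return 0 < len(words) <= max_words and set(words) <= set(source.split())

rng = random.Random(0)  # seeded so the run is repeatable
variants = generate_variants("what is Newton's third law?", rng)
results = [summary_is_acceptable(fake_summarizer(v, rng), v) for v in variants]
print(all(results))
```

The point of the design is that the assertion describes a range of valid outputs, so the test does not break when the output differs from run to run.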

Enhanced Test Automation and Execution

- Self-Healing Tests: AI-powered tools can identify changes in the AI application’s interface or underlying structure and automatically adjust test scripts. This minimizes test maintenance and reduces test failures due to minor UI updates. Read about: Self-healing Tests.

- Visual Validation for AI Outputs: For AI features involving visual elements (e.g., image recognition, object detection), AI-based visual testing tools can intelligently compare outputs against expected baselines, identifying subtle discrepancies that traditional methods might miss.

- Natural Language Processing (NLP) for Testing AI Interactions: For AI features involving natural language (e.g., chatbots, voice assistants), NLP-enabled testing tools can understand and validate the AI’s responses based on semantic meaning and context, rather than just exact string matching.

- Simulation of User Behavior: AI can simulate realistic and complex user interactions with AI features, including edge cases and unexpected inputs, to assess robustness and identify potential failure points.
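
The difference between exact string matching and meaning-based validation can be sketched in a few lines of Python. This example uses simple token overlap as a crude stand-in for semantic comparison; real NLP-enabled tools typically rely on far richer language understanding. The helper names and the 0.7 threshold are assumptions made for illustration only.

```python
import re

def tokens(text):
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap_score(expected, actual):
    """Fraction of the expected tokens that also appear in the actual text."""
    expected_tokens = tokens(expected)
    if not expected_tokens:
        return 0.0
    return len(expected_tokens & tokens(actual)) / len(expected_tokens)

def matches_meaning(expected, actual, threshold=0.7):
    """Assumed pass rule: enough of the expected wording is present."""
    return overlap_score(expected, actual) >= threshold

expected = "for every action there is an equal and opposite reaction"
actual = "Newton's third law: for every action, there is an equal and opposite reaction."
unrelated = "The weather is sunny today"

print(expected == actual)                    # exact match fails on harmless rewording
print(matches_meaning(expected, actual))     # overlap check accepts the response
print(matches_meaning(expected, unrelated))  # and still rejects an unrelated answer
```

An exact comparison fails here because of the extra prefix and punctuation, while the looser check passes the correct answer and rejects the unrelated one.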

Data-Driven Testing and Analysis

- Automated Test Data Generation: AI can generate synthetic test data that mimics real-world scenarios, including diverse and edge-case data, which is crucial for thoroughly testing AI models. Read: Test Data Generation Automation.

- Bias and Fairness Detection: AI-powered tools can analyze training and testing data, as well as model outputs, to identify potential biases related to factors like demographics, to make sure the AI feature behaves fairly across different user groups.

- Anomaly Detection in Test Results: AI algorithms can analyze large volumes of test results to identify anomalies and unexpected behavior patterns in the AI feature’s performance.

- Predictive Defect Analysis: By analyzing historical test data and AI model behavior, AI tools can predict potential areas of failure and help focus testing efforts.
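
As one example of what a bias check can look like, the Python sketch below compares the rate of positive outcomes across groups and reports the gap between the highest and lowest rate. The records are made-up sample data, and the group labels and helper names are hypothetical; a real fairness evaluation would use several metrics and much more data.

```python
from collections import defaultdict

def positive_rate_by_group(records):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(records):
    """Difference between the highest and lowest positive rate across groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())

# Made-up (group, model_decision) pairs for illustration only.
records = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(positive_rate_by_group(records))
print(parity_gap(records))
```

A test could then fail whenever the gap exceeds a limit your team agrees on, which turns a vague fairness goal into a check that runs on every build.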

Performance and Scalability Testing for AI

- Realistic Load Simulation: AI can simulate various user loads and traffic patterns to assess the performance and scalability of AI-powered features under different conditions.

- Resource Monitoring and Optimization: AI tools can monitor resource utilization (CPU, memory, etc.) during AI feature testing and identify potential bottlenecks or areas for optimization.
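
A basic load simulation can be sketched with Python's standard library alone. The example below sends concurrent requests to a hypothetical `fake_ai_endpoint` (a stub that just sleeps briefly) and collects per-request latencies. In a real test you would replace the stub with a call to your own AI feature; the concurrency level and request count are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_ai_endpoint(prompt):
    """Hypothetical stub for an AI feature; sleeps to imitate processing time."""
    time.sleep(0.01)
    return f"summary of: {prompt}"

def timed_call(prompt):
    """Call the endpoint once and return the elapsed time in seconds."""
    start = time.perf_counter()
    fake_ai_endpoint(prompt)
    return time.perf_counter() - start

def run_load(prompts, concurrency):
    """Issue all prompts with a fixed number of concurrent workers."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed_call, prompts))

def percentile(values, pct):
    """Simple nearest-rank style percentile over a list of numbers."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, int(len(ordered) * pct / 100))
    return ordered[index]

latencies = run_load([f"query {i}" for i in range(20)], concurrency=5)
print(f"p50={percentile(latencies, 50):.4f}s p95={percentile(latencies, 95):.4f}s")
```

Tracking percentiles rather than only the average helps surface the slow tail that users actually notice when the feature is under load.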

AI-based Tools to Test AI

AI-based testing tools have huge potential. Yet, there are very few tools on the market that help you test AI features. For an all-around testing solution that utilizes Gen AI, testRigor is your best bet. This tool harnesses the power of AI through natural language processing (NLP), generative AI, and machine learning (ML) to make test automation more accessible and efficient for everyone on your team.

You can put to use testRigor’s other AI-based features that help test the untestable, like graphs, images, chatbots, LLMs, Flutter apps, mainframes, and many more. This tool emulates a human tester, meaning it tries its best to look at test cases as a human would. This means plain English test steps and no complex XPaths or CSS selectors to identify UI elements on the screen. Here’s how this powerful tool can bring a sigh of relief to your AI feature testing.

- Test Creation in Natural Language: Validating something like a graph is tough enough for a human, let alone explaining it to a machine. Luckily, this tool lets you write test cases in plain English. Just write what you see on the screen, and testRigor will take care of the rest. Besides writing test cases manually in English, you can use the generative AI feature to create test cases in bulk from a comprehensive description or prompt. Read: All-Inclusive Guide to Test Case Creation in testRigor.

- AI Vision: testRigor utilizes AI vision capabilities to “see” and interpret the visual elements. This allows it to go beyond basic pixel comparisons. It can help you to verify and analyze images, graphs, diagrams, and interpret various patterns and outcomes in them. Read: Vision AI and how testRigor uses it.

- Self-healing of Tests: testRigor constantly tries to make sure that your test runs are not flaky and that you’re only bothered if there’s a real issue. The tool uses AI to heal tests in the event of basic UI changes, such as if the UI element’s label or position changes. This is a huge relief from a test maintenance standpoint. Read: AI-Based Self-Healing for Test Automation.

- Visual Regression Testing: You can use testRigor to perform visual regression testing by comparing the application with a baseline. This can come in handy, too, if your application’s components are meant to be static. Read: How to do visual testing using testRigor?

- Contextual Understanding: testRigor has another interesting capability – to allow testers to provide AI context at each step. Just by appending the “using ai” suffix to the command, you can easily test otherwise difficult scenarios. This is immensely helpful in graph testing as the AI is designed to understand the context of the graph within the application.

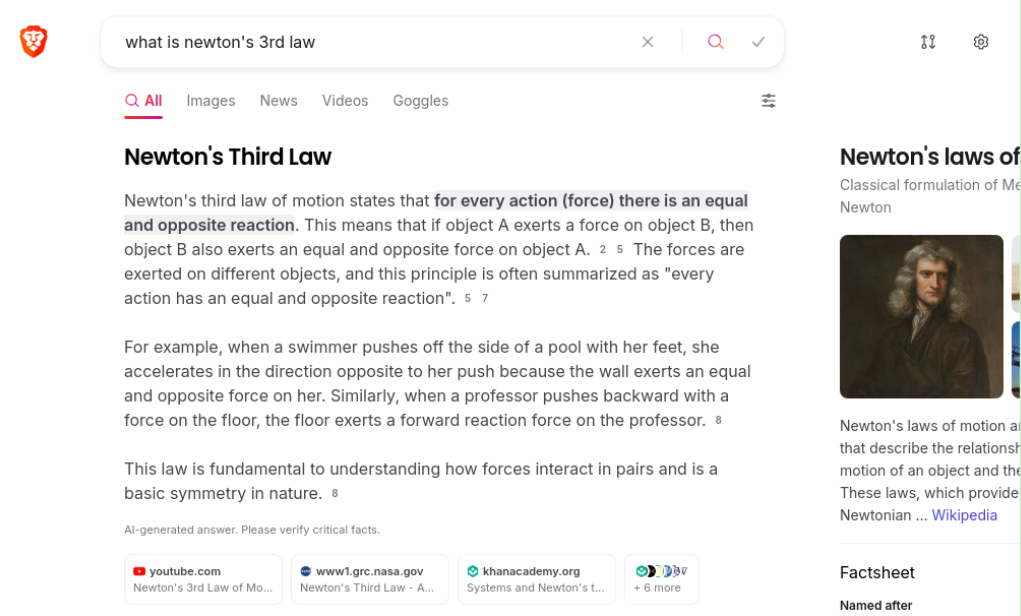

Let’s take a look at an example of testing generative AI-based summaries using testRigor’s AI features. In this example, we will query a search engine that offers a summary based on the content it finds.

Query that we will enter in the search engine: what is Newton’s third law?

This is how the search engine’s generative AI summary will look.

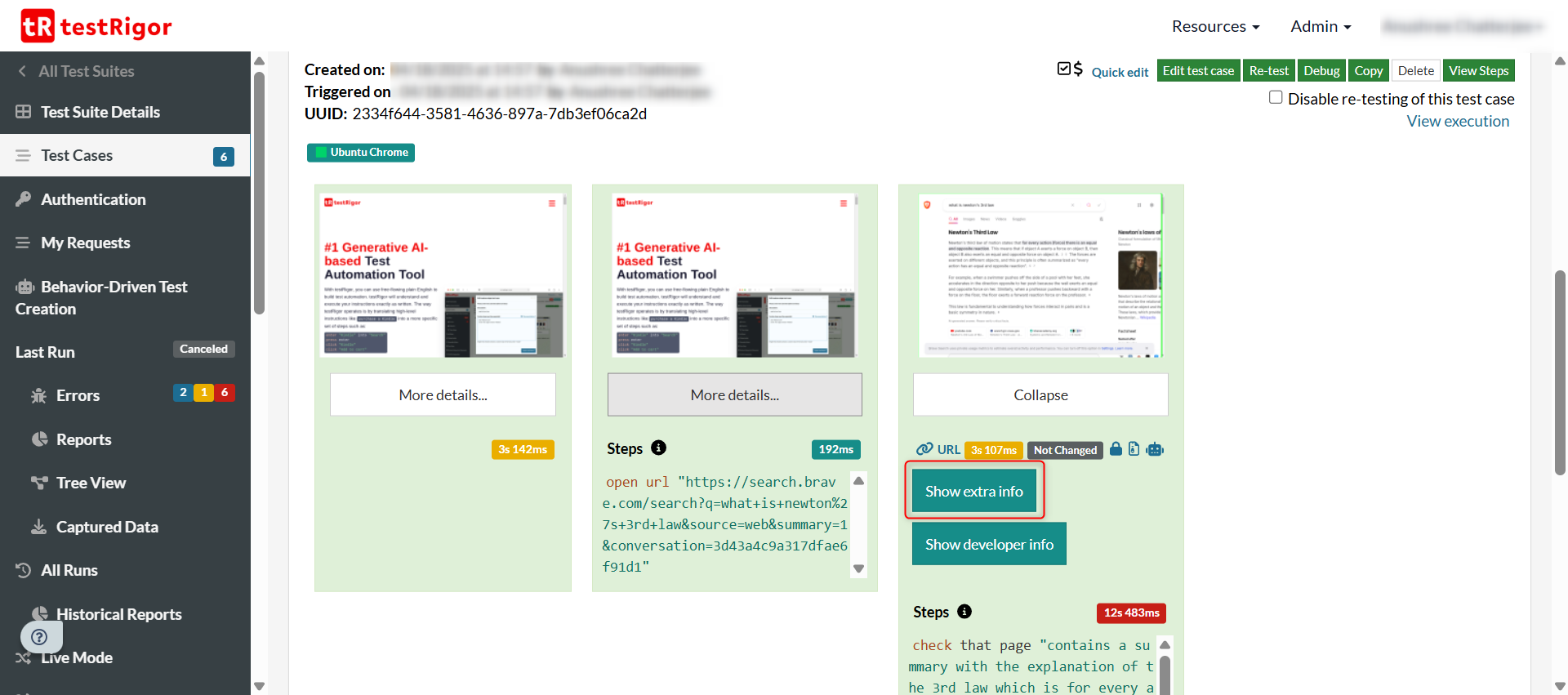

testRigor can do this verification for you with bare-minimum steps. Once you’ve opened the browser and entered the query string, a single step is all it takes to verify the outcome.

check that page “contains a generative AI-based summary with the explanation of the 3rd law which is for every action there is an equal and opposite reaction” using ai

This is testRigor’s context-based testing capability. As is the case with any prompt-based AI engine, you may need a few attempts before you find the prompt that gets you the desired results. But once that clicks, testRigor handles the rest.

testRigor captures screenshots of each and every step it executes, along with logs, errors, and more information.

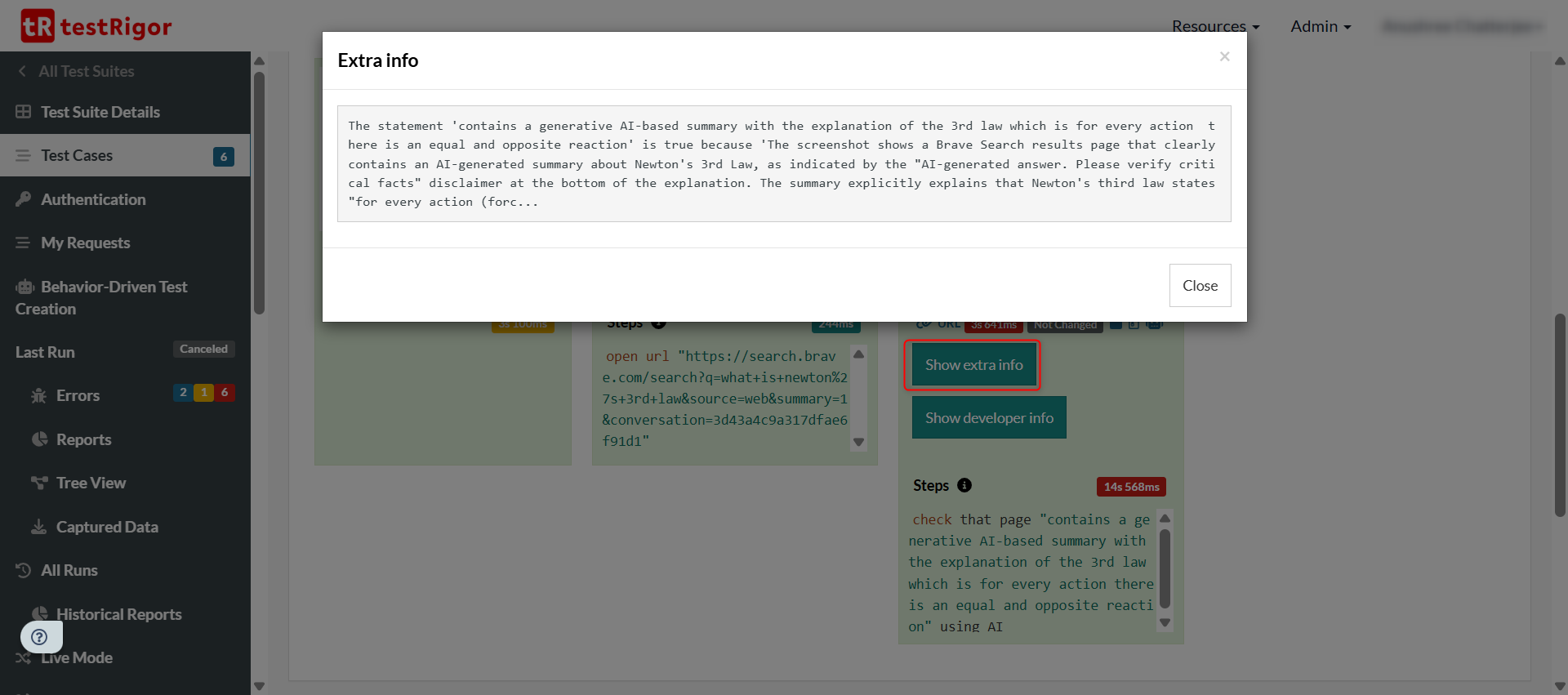

The best part is that there’s even a summary of what the AI engine found. Under the “Show extra info” section, for every step that you test with the “using ai” feature, you can see the AI engine’s explanation for passing or failing it. For example, in the above case, the explanation that testRigor provides is this:

The statement ‘contains a generative AI-based summary with the explanation of the 3rd law which is for every action there is an equal and opposite reaction’ is true because ‘The screenshot shows a Brave Search results page that clearly contains an AI-generated summary about Newton’s 3rd Law, as indicated by the “AI-generated answer. Please verify critical facts” disclaimer at the bottom of the explanation. The summary explicitly explains that Newton’s third law states “for every action (forc…

You can do a lot more with testRigor. Check out the complete list of features.

Read more about how testRigor tests different AI features.

Conclusion

While automating the testing of AI features is important for testing modern applications, it requires extra effort because AI systems are constantly learning, can be hard to predict, and often don’t follow traditional patterns of behavior. These challenges make it crucial to use the right tools and strategies to test AI effectively.
