What is Responsible AI?

Artificial Intelligence (AI), once the stuff of science fiction films, has quickly become an everyday reality. Today, it powers recommendation engines, self-driving cars, healthcare diagnostics, fraud detection systems, and even creative art. AI's capabilities are both disruptive and transformative, yet this power also introduces complex challenges and ethical implications.

This is where Responsible AI comes in. Responsible AI aims to ensure that artificial intelligence is developed, deployed, and governed in a manner aligned with human values, fairness, transparency, and accountability.

As AI increasingly shapes industries and societies, demand for ethical and fair systems has grown. The discussion has moved from “What is AI capable of?” to “What should AI do?” This shift highlights the importance of Responsible AI: a structured approach to ensuring that AI is created and deployed in ways that benefit humanity while mitigating harm.

Defining Responsible AI

Responsible AI is the practice of designing, developing, and deploying AI in a way that is ethical, transparent, accountable, and beneficial to humanity. It seeks to prevent bias, misuse, and unintended consequences by integrating ethical principles and governance mechanisms into the development of AI systems.

In plain language, Responsible AI means that the systems we build do what they are supposed to do, treat people fairly and lawfully, and respect social norms. AI systems must be more than accurate and efficient; they also need to uphold human dignity, privacy, and justice.

Responsible AI is not just a technical challenge; it is also a philosophical and social one. It draws on data science, software development, sociology, law, and human rights. Multidisciplinary cooperation and organized governance are key to its success.

Responsible AI is based on three dimensions:

- Technical Integrity: Accuracy, reliability, and sound engineering.

- Ethical Honesty: Commitment to fairness, inclusion, privacy, and non-discrimination.

- Social Responsibility: Aligning AI goals with societal well-being, sustainability, and justice.

Read: AI in Engineering – Ethical Implications.

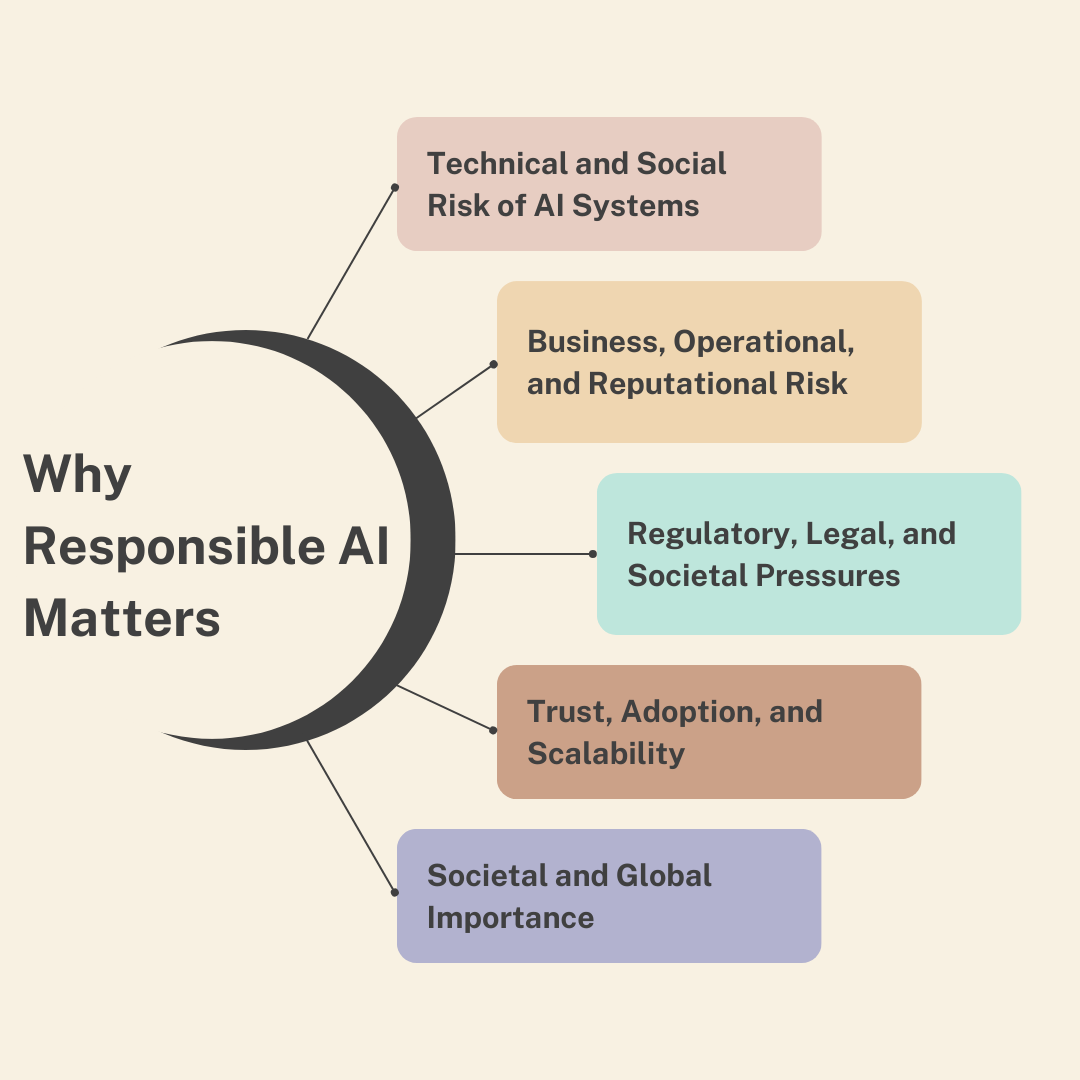

Why Responsible AI Matters

AI has demonstrated a tremendous ability to help us address some of our most challenging problems. But when AI systems are built or used carelessly, they can magnify social inequalities, obscure how decisions are made, and even cause physical or psychological harm. Think of biased hiring algorithms, facial recognition mistakes that lead to wrongful arrests, or chatbots that spread misinformation. These cases show how a lack of accountability in AI design can lead to devastating outcomes.

Technical and Social Risks of AI Systems

AI systems can propagate or amplify bias, generate incorrect predictions, and behave unexpectedly in the real world. In critical domains such as healthcare and finance, such errors can threaten lives and livelihoods. Algorithms can also marginalize people socially, violate privacy, and manipulate social media discourse. Responsible AI frameworks address these challenges by increasing model transparency, interpretability, and fairness.

Business, Operational, and Reputational Risk

For enterprises, the misuse or failure of AI carries significant operational and reputational costs. An AI system gone rogue can erode customer confidence, violate regulations, and invite litigation. Applying Responsible AI practices in business operations helps companies avoid reputational scandals, retain customer trust, and navigate complex international regulatory landscapes.

Regulatory, Legal, and Societal Pressures

International regulations and guidelines, such as the EU AI Act and the OECD AI Principles, call for transparency, risk management, and accountability in AI. By adopting Responsible AI principles early, companies can stay ahead of regulatory requirements and avoid compliance penalties while also building a reputation for ethical practice.

Trust, Adoption, and Scalability

AI deployment depends heavily on trust. Users, customers, and regulators need confidence that AI systems make reliable and fair decisions. Responsible AI builds that trust through transparent design decisions, clear explanations of how decisions are made, and respect for individual rights. This trust accelerates adoption and scalability by reducing stakeholder pushback.

Societal and Global Importance

AI influences critical sectors such as employment, healthcare, education, climate policy, and justice. Effective governance is essential to ensuring that AI serves society ethically. Developed responsibly, AI can be a force for good in the world, reducing inequality and promoting human-centered development.

Read: AI Compliance for Software.

Role of Testing in Responsible AI

Responsible AI is not just about writing ethical guidelines; it is also about verifying, through rigorous testing, that systems behave as intended in the real world. This is where modern test automation platforms play an essential role.

Tools like testRigor, a Gen AI-powered end-to-end test automation platform, help teams continuously validate AI-enabled applications from the user’s perspective. With testRigor’s plain English test cases, AI context, and Vision AI capabilities, product teams, QA engineers, and even non-technical stakeholders can collaboratively design and execute tests. By integrating such tools into the development lifecycle, organizations can turn Responsible AI principles into repeatable, testable, and auditable software behavior.

testRigor lets you test chatbots, LLMs, and other AI features for issues such as bias and hallucinations, all in plain English.

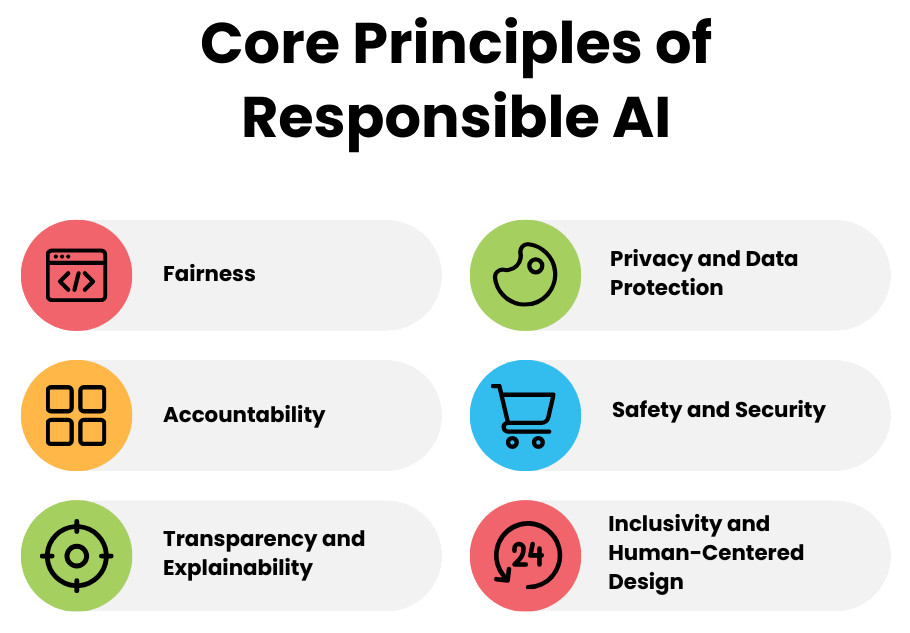

Core Principles of Responsible AI

Though interpretations vary slightly across organizations, several key principles consistently define Responsible AI:

Fairness

Fairness means that AI systems treat individuals and groups equitably. It addresses challenges such as algorithmic bias, discrimination, and disparate impact. Developers should evaluate the datasets and models they use and remove biases that could harm particular populations. Fair AI design accounts for diversity in race, gender, geography, and social background.

Example: A bank using AI for loan approvals must ensure that its model doesn’t unfairly reject applicants from minority backgrounds due to biased training data.
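One common way to screen a loan model for this kind of problem is to compare approval rates across groups. The sketch below computes a disparate impact ratio (the lowest group approval rate divided by the highest) and compares it with the "four-fifths" (0.8) rule of thumb from US employment-selection guidance. The group labels and counts are hypothetical, and a single ratio is a screening signal, not proof of fairness or unfairness.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no relative disparity to measure
    return min(rates.values()) / highest


# Hypothetical audit sample: 100 applicants from each of two groups.
decisions = ([("group_a", True)] * 60 + [("group_a", False)] * 40
             + [("group_b", True)] * 42 + [("group_b", False)] * 58)

ratio = disparate_impact_ratio(decisions)
print(f"disparate impact ratio: {ratio:.2f}")  # 0.42 / 0.60 = 0.70
print("flag for review" if ratio < 0.8 else "within threshold")
```

A ratio below 0.8 does not settle the question on its own; it tells the team where to look more closely at the training data and features.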

Accountability

Accountability means that people, not AI systems, remain responsible for what those systems do. Well-defined governance models assign responsibility at each stage of the AI lifecycle: data, design, deployment, and monitoring. Logging, audit trails, human oversight, and documented decision flows all strengthen accountability.

Example: In healthcare, AI can assist in diagnosis, but final decisions should rest with qualified medical professionals who can interpret results responsibly.
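Accountability in a case like this depends on being able to reconstruct who decided what. A minimal sketch of an append-only audit record, assuming a JSON Lines log file, might link each model output to the model version and the accountable reviewer. The field names, model identifier, and reviewer ID are hypothetical; a production audit trail would also need access controls and tamper protection.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version, features, model_output, reviewer, final_decision):
    """Build one audit-trail entry linking a model output to the accountable human."""
    # Fingerprint the inputs so the trail can later confirm which inputs were used
    # without copying them into the log.
    canonical = json.dumps(features, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "model_output": model_output,
        "reviewed_by": reviewer,
        "final_decision": final_decision,
        "overridden": final_decision != model_output,
    }


def append_to_audit_log(path, record):
    """Append the record as one JSON line; existing entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")


record = audit_record(
    model_version="triage-model-1.4",  # hypothetical identifier
    features={"age": 54, "symptom_score": 7},
    model_output="refer_to_specialist",
    reviewer="clinician_042",  # hypothetical reviewer ID
    final_decision="order_further_tests",
)
print(json.dumps(record, indent=2))
```

Recording the `overridden` flag makes it easy to audit how often clinicians disagree with the model, which is itself a useful quality signal.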

Transparency and Explainability

Transparency means disclosing how an AI model works, what data it uses, and how it reaches decisions. Explainability complements transparency by making the model's individual decisions interpretable to humans. Together they support accountability and give people the opportunity to challenge or dispute machine-made decisions, a key demand in regulated areas such as credit scoring and employment.

Example: In credit scoring, an applicant should be able to understand why they were denied a loan, whether due to their income level, credit history, or another factor.
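A simple way to generate such reasons, assuming a linear scoring model, is to rank each feature's contribution to the score relative to a baseline applicant and report the most negative ones. The weights, baseline, and feature names below are invented for illustration; real credit models and adverse-action notices involve considerably more than this.

```python
# Hypothetical linear scoring model: weights and baseline (an "average" applicant) are made up.
WEIGHTS = {
    "income_thousands": 0.04,
    "credit_history_years": 0.15,
    "missed_payments": -0.6,
    "debt_to_income": -3.0,
}
BASELINE = {
    "income_thousands": 55,
    "credit_history_years": 8,
    "missed_payments": 1,
    "debt_to_income": 0.35,
}
APPROVAL_THRESHOLD = 0.0


def contributions(applicant):
    """Per-feature effect on the score relative to the baseline applicant."""
    return {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}


def decide(applicant, top_n=2):
    """Return (approved, reasons), where reasons lists the most negative factors."""
    contrib = contributions(applicant)
    approved = sum(contrib.values()) >= APPROVAL_THRESHOLD
    negatives = sorted((value, feature) for feature, value in contrib.items() if value < 0)
    reasons = [] if approved else [feature for _, feature in negatives[:top_n]]
    return approved, reasons


applicant = {"income_thousands": 40, "credit_history_years": 3,
             "missed_payments": 4, "debt_to_income": 0.5}
print(decide(applicant))
# (False, ['missed_payments', 'credit_history_years'])
```

Because the model is linear, each contribution is exact; for non-linear models, techniques such as SHAP or counterfactual explanations play the same role.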

Privacy and Data Protection

Responsible AI protects individual privacy through compliant data collection, consent management, anonymization, and secure storage. Cybersecurity is equally critical: AI systems must be resilient to adversarial attacks and sensitive data breaches. Sound data governance models make it possible to hold AI accountable for how data is managed throughout its lifecycle.

Example: Facial recognition systems must obtain explicit consent and limit data storage to prevent misuse.
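In code, consent and storage limits can be enforced as a gate in front of any processing step. The sketch below, with a hypothetical 30-day retention period and record layout, drops records without consent or past retention and replaces identifiers with a keyed hash (HMAC-SHA256 from Python's standard library). Note that keyed hashing is pseudonymization, not anonymization: whoever holds the key can re-link the data, and regulations such as the GDPR still treat pseudonymized data as personal data.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

RETENTION_PERIOD = timedelta(days=30)  # assumed policy value, not a legal requirement


def pseudonymize(subject_id, secret_key):
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, subject_id.encode("utf-8"), hashlib.sha256).hexdigest()


def records_allowed_for_processing(records, secret_key, now):
    """Keep only consented, in-retention records, with identifiers pseudonymized."""
    allowed = []
    for record in records:
        if not record["consent_given"]:
            continue  # no explicit consent: never process
        if now - record["captured_at"] > RETENTION_PERIOD:
            continue  # past retention: should be deleted, not processed
        allowed.append({
            "subject": pseudonymize(record["subject_id"], secret_key),
            "captured_at": record["captured_at"],
        })
    return allowed


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
records = [
    {"subject_id": "alice", "consent_given": True, "captured_at": now - timedelta(days=2)},
    {"subject_id": "bob", "consent_given": False, "captured_at": now - timedelta(days=1)},
    {"subject_id": "carol", "consent_given": True, "captured_at": now - timedelta(days=90)},
]
# In practice the key would come from a secrets manager, never from source code.
kept = records_allowed_for_processing(records, secret_key=b"demo-key", now=now)
print(len(kept))  # 1: only alice's record passes both checks
```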

Safety and Security

AI systems need to perform robustly both in real-world conditions and in adversarial environments. Reliability ensures that a system performs consistently, while robustness ensures that it withstands tampering and unexpected inputs. Safety is about taking precautions so that AI does not harm people or the environment.

Example: Autonomous vehicles must undergo rigorous testing to prevent accidents due to sensor failure or malicious interference.
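One building block of that kind of safety engineering is refusing to act on sensor data that cannot be trusted. The sketch below, with hypothetical thresholds and a simplified action set, cross-checks redundant distance sensors and falls back to a fail-safe action when readings are missing, implausible, or inconsistent. Real vehicle software is far more complex; this only illustrates the fail-safe pattern.

```python
from statistics import median


def fused_obstacle_distance(readings_m, max_range_m=200.0, max_disagreement_m=2.0):
    """Combine redundant distance sensors; return None when the data can't be trusted."""
    # Drop missing or physically implausible readings (e.g. a failed or spoofed sensor).
    valid = [r for r in readings_m if r is not None and 0.0 <= r <= max_range_m]
    if len(valid) < 2:
        return None  # not enough independent confirmation
    if max(valid) - min(valid) > max_disagreement_m:
        return None  # sensors disagree too much to pick one safely
    return median(valid)


def plan_action(readings_m):
    """Map fused sensor data to a (hypothetical) high-level action."""
    distance = fused_obstacle_distance(readings_m)
    if distance is None:
        return "fail_safe_stop"  # degrade to a minimal-risk maneuver
    return "brake" if distance < 30.0 else "proceed"


print(plan_action([45.2, 45.0, 45.5]))   # proceed
print(plan_action([45.2, None, 350.0]))  # fail_safe_stop
```

The design choice here is to fail closed: when the system cannot confirm what it sees, it chooses the conservative action rather than guessing.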

Inclusivity and Human-centered Design

AI should augment human abilities rather than carelessly replace them. Human-centered design focuses on collaboration, understanding, accessibility, and dignity for a diverse range of users. Inclusion means that AI reflects as many human experiences as possible while minimizing exclusionary effects.

Read: How to Keep Human In The Loop (HITL) During Gen AI Testing?

Example: Voice assistants must be trained to recognize diverse accents and speech patterns to serve users globally.
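Whether a voice assistant serves users equally can be checked by slicing evaluation results by group instead of reporting a single overall accuracy. A minimal sketch, assuming labeled test results per accent group (the labels, counts, and 5-point gap threshold are hypothetical):

```python
from collections import defaultdict


def accuracy_by_group(results):
    """results: iterable of (group_label, was_recognized_correctly) pairs."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, ok in results:
        total[group] += 1
        correct[group] += int(ok)
    return {group: correct[group] / total[group] for group in total}


def underserved_groups(results, max_gap=0.05):
    """Groups whose accuracy trails the best-served group by more than max_gap."""
    accuracy = accuracy_by_group(results)
    best = max(accuracy.values())
    return sorted(group for group, acc in accuracy.items() if best - acc > max_gap)


# Hypothetical evaluation results for three accent groups.
results = ([("accent_a", True)] * 95 + [("accent_a", False)] * 5
           + [("accent_b", True)] * 93 + [("accent_b", False)] * 7
           + [("accent_c", True)] * 82 + [("accent_c", False)] * 18)
print(underserved_groups(results))  # ['accent_c']
```

An overall accuracy of 90% would hide the fact that one group is served noticeably worse than the others.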

Read: AI Agency vs. Autonomy: Understanding Key Differences.

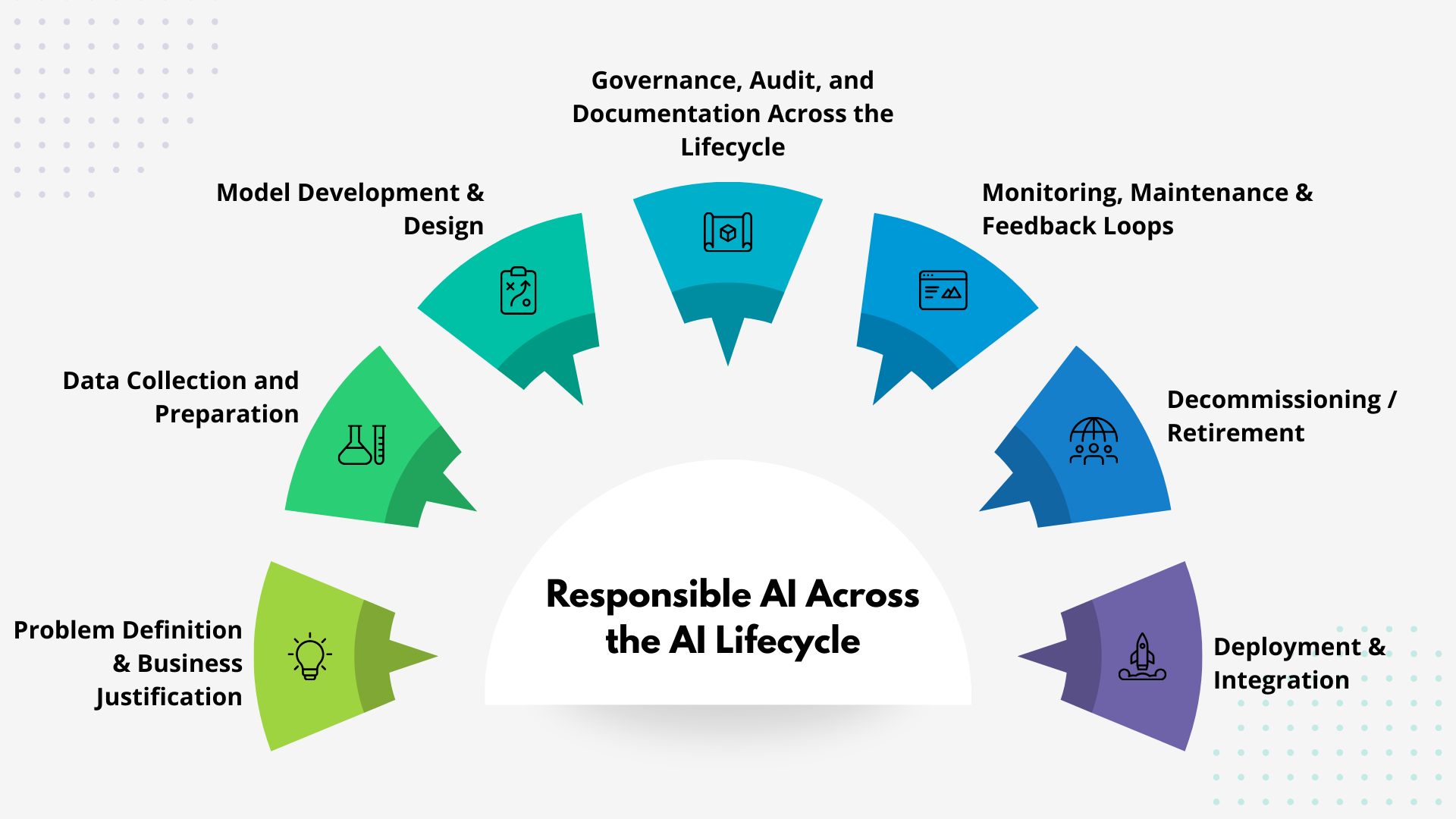

Responsible AI Across the AI Lifecycle

To build Responsible AI, you need to take a structured approach that includes ethical principles at every stage of the AI lifecycle. Here are the most important steps in this kind of framework:

Problem Definition & Business Justification

Responsible AI starts with framing the ethical issues, even before any data is collected. Organizations should ask: Does this use case serve a legitimate social or business purpose? Is it likely to cause harm, exacerbate inequity, or violate rights? Defining these ethical questions up front clarifies the focus and justification for applying AI.

Data Collection and Preparation

Bias often originates in the data. Fairness depends on the quality, diversity, and representativeness of datasets. Data governance systems should include tools for consent management, lineage tracking, labeling quality assessment, and bias detection. Document data sources and their intended use to ensure traceability and transparency.
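A basic representativeness check compares each group's share of the dataset with its share of a reference population. The sketch below assumes you have a group label per record and reference shares from a trusted source; the groups, shares, and 5-point tolerance are hypothetical.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, tolerance=0.05):
    """Groups whose share of the dataset falls short of a reference share by > tolerance."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)  # shortfall as a share of the data
    return gaps


# Hypothetical training set and reference population shares.
labels = ["urban"] * 800 + ["rural"] * 200
print(representation_gaps(labels, {"urban": 0.6, "rural": 0.4}))  # {'rural': 0.2}
```

Groups that are missing entirely show up with their full reference share as the gap, which is exactly the case that is easiest to overlook.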

Model Development & Design

During model development, design choices should account for fairness, interpretability, and robustness from the start. Models should be evaluated for bias across affected groups, their decisions should be explainable to the people they affect, and their behavior should be tested against unexpected and adversarial inputs before deployment.

Deployment & Integration

As AI systems move into production, the main risk becomes operational disruption. Deployment best practices include real-world validation, fail-safe mechanisms, and human-in-the-loop reviews. Integration into workflows should be transparent rather than opaque, and users should be trained to interpret model outputs responsibly.

Monitoring, Maintenance & Feedback Loops

Once deployed, AI systems need to be monitored and audited regularly for data drift and emerging bias, both of which can degrade accuracy and fairness. Responsible AI practice also calls for continuous improvement: user feedback and external audits should flow back into the system so that the model can be adjusted over time.
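Drift is often quantified with the Population Stability Index (PSI), which compares the distribution of a feature or model score in production with a baseline. A minimal sketch with equal-width bins follows. A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant, though thresholds should be set per use case. The sample data is synthetic.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a production sample of one feature."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against all-identical values

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]          # scores seen at validation time
production = [0.5 + i / 200 for i in range(100)]  # hypothetical shifted scores
print(round(population_stability_index(baseline, baseline), 6))  # 0.0
print(population_stability_index(baseline, production) > 0.25)   # True
```

Running a check like this on a schedule, per feature and per model score, turns "regularly audited for drift" into an alert that someone actually sees.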

Decommissioning / Retirement

Organized decommissioning is essential when an AI system becomes obsolete or starts behaving in harmful ways. Responsible AI therefore includes procedures for model shutdown, data archiving, and transition planning to minimize residual risk.

Governance, Audit, and Documentation Across the Lifecycle

Each stage of the lifecycle must be well documented: data provenance, design rationale, model testing, and governance decisions. Auditability builds confidence and openness, and it helps demonstrate regulatory compliance during an external audit or a crisis.
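One widely used documentation format is the model card, which records a model's intended use, data, evaluation results, and limitations. The structure below is a hypothetical minimal version serialized to JSON so it can be versioned alongside the model; the field names and all values, including the metrics, are illustrative placeholders rather than real results.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical model-card structure; real templates are richer."""
    name: str
    version: str
    intended_use: str
    owner: str
    data_sources: list[str] = field(default_factory=list)
    evaluation: dict[str, float] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize with sorted keys so diffs between versions stay readable."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


card = ModelCard(
    name="loan-approval-scorer",
    version="2.1.0",
    intended_use="Pre-screening consumer loan applications; final decision by a credit officer.",
    owner="credit-risk-ml-team",
    data_sources=["applications_2019_2023 (internal, consented)"],
    evaluation={"auc": 0.81, "disparate_impact_ratio": 0.86},  # placeholder values
    known_limitations=["Not validated for small-business loans"],
)
print(card.to_json())
```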

Read: Impact of AI on Engineering: What the Future Holds for Engineers.

Implementation of Responsible AI

Responsible AI becomes real only when high-level principles are translated into day-to-day practices that employees can follow. It requires culture, tools, processes, and governance to work in tandem.

- Organizational Culture and Governance: It begins with cultural accountability. Ethical AI must be an integral part of executive leadership's corporate strategy. AI governance boards, ethics champions, and policy frameworks oversee implementation and accountability from the earliest stages. Transparency, equality, and inclusion should be part of the organization's DNA.

- Tools and Processes: Technical tools are enablers of Responsible AI. Bias detection libraries, explainability dashboards, differential privacy systems, and model interpretability frameworks make ethical requirements implementable, while standardized checklists and review methodologies codify how they are used.

- Integration into AI Lifecycle: Responsible AI should not be an afterthought. Incorporating ethical reviews at every stage, from design through monitoring, ensures structured oversight instead of ad hoc fixes.

- Metrics and Monitoring: Ethical performance can be tracked continuously with quantifiable metrics (e.g., fairness, explainability, and privacy compliance scores). These metrics help detect drift and measure progress toward the organization's principles over time.

- External Alignment and Transparency: Public transparency reports, external audits, and stakeholder communication create public accountability. Conforming to international standards and guidelines (ISO/IEC, IEEE, OECD) shows that an organization follows recognized global best practices.

- Continuous Improvement and Iteration: AI and ethics are not static, so Responsible AI must be iterative. Policies should be updated and refined regularly, always taking into account feedback from users, regulators, and affected communities.

- Challenges and Trade-offs: Striking the right balance between business agility and ethical rigor is difficult. For example, greater interpretability can come at the cost of performance, and stronger privacy protections can reduce the richness of the data. Responsible AI relies on context-aware ethical judgment to navigate these trade-offs.
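The Metrics and Monitoring practice above can be made concrete as an automated release gate that blocks deployment when ethical metrics miss their targets. In this sketch the metric names and thresholds are hypothetical, and a missing metric fails the gate rather than passing silently.

```python
# Hypothetical thresholds; each organization would set its own.
THRESHOLDS = {
    "disparate_impact_ratio": ("min", 0.8),
    "population_stability_index": ("max", 0.25),
    "pii_leak_rate": ("max", 0.0),
}


def release_gate(metrics):
    """Return a list of threshold violations; an empty list means the gate passes."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not reported")  # missing evidence fails closed
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
    return failures


print(release_gate({"disparate_impact_ratio": 0.72, "population_stability_index": 0.04}))
# ['disparate_impact_ratio: 0.72 < 0.8', 'pii_leak_rate: not reported']
```

Wired into a CI pipeline, a non-empty result would stop the release and leave a readable record of why.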

Read: Will AI Replace Testers? Choose Calm Over Panic.

Challenges in Adopting Responsible AI

Despite growing awareness, putting Responsible AI into practice remains difficult:

- Bias in Data and Algorithms: Data-driven models tend to inherit the biases present in their training data. Cleaning and balancing datasets is difficult, especially with complex or historical data.

- Lack of Standardization: There is no single, universally adopted standard for measuring a company's Responsible AI practices. This lack of uniformity makes cross-border compliance messy.

- Trade-Off Between Performance and Transparency: There is often a trade-off between the predictive performance of AI models (e.g., deep neural networks) and their interpretability. Achieving both high performance and explainability remains challenging.

- Ethical Dilemmas: The use of AI in surveillance, the military, and the prediction of human behavior raises unresolved questions about consent, autonomy, and privacy.

- Regulatory and Legal Ambiguity: AI regulation is still largely being written. In many jurisdictions, it is not yet clear what constitutes compliant or ethical behavior in the use of AI.

- Organizational Culture: Creating AI responsibly demands a cultural shift within organizations, where ethics are treated as just as important as innovation and profit. This shift is generally slow and may meet resistance.

The Role of Explainable AI (XAI)

Explainable AI (XAI) is a crucial aspect of Responsible AI, as it makes the decisions of models interpretable to humans. XAI employs techniques such as LIME, SHAP, and counterfactual explanations to show why a model made a specific decision. Explainability supports:

- Accountability: Ensures that teams and stakeholders know how and why an AI system makes certain decisions.

- Debugging: Helps teams diagnose and correct biases, mistakes, or unexpected behaviors in the model.

- Regulation: Encourages compliance with strict legal and ethical rules in safety-critical areas such as healthcare, finance, and public safety.

By adding explainability, developers build trust and give stakeholders the power to question and improve how AI works.
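SHAP is based on Shapley values from cooperative game theory. For a model with only a few features, they can be computed exactly by brute force, which shows what the attributions mean: each feature's weighted average marginal contribution across all coalitions of the other features. In this simplified sketch, features absent from a coalition take a single baseline value (SHAP implementations typically average over background data instead). It is not the API of the `shap` library, and its cost grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attributions for model(x) relative to model(baseline).

    Features absent from a coalition take their baseline value. Cost grows as 2**n,
    so this brute-force version only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis


def toy_model(v):
    """Hypothetical model with an interaction term between features 0 and 1."""
    return 2 * v[0] + v[0] * v[1] - v[2]


x, baseline = [2.0, 3.0, 1.0], [0.0, 0.0, 0.0]
phis = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phis])  # [7.0, 3.0, -1.0]
print(round(sum(phis), 6), toy_model(x) - toy_model(baseline))  # 9.0 9.0
```

The interaction term's contribution (6) is split evenly between features 0 and 1, and the attributions add up to the difference between the prediction and the baseline prediction.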

Human Oversight and Ethical Governance

Responsible AI is not just a matter of building better algorithms; it is largely a governance challenge. Enterprises need governance models that include ethics boards to oversee AI-driven projects, transparent record-keeping, and teams of diverse stakeholders, including ethicists, domain experts, and policymakers. Human oversight remains necessary to ensure that AI tools supplement, rather than replace, human judgment.
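In practice, human oversight often starts with routing rules that decide which model outputs may be applied automatically and which require a person. A minimal sketch, assuming the model emits a calibrated confidence score and each case carries a high-impact flag (both assumptions, as is the 0.9 threshold):

```python
from dataclasses import dataclass


@dataclass
class ModelDecision:
    case_id: str
    label: str
    confidence: float  # model's own score in [0, 1]; assumed to be calibrated
    high_impact: bool  # e.g. denies a service or affects health or liberty


def route(decision, min_confidence=0.9):
    """Send uncertain or high-impact decisions to a person instead of auto-applying them."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto_apply"


queue = [
    ModelDecision("c1", "approve", 0.97, high_impact=False),
    ModelDecision("c2", "deny", 0.95, high_impact=True),
    ModelDecision("c3", "approve", 0.62, high_impact=False),
]
for d in queue:
    print(d.case_id, route(d))
# c1 auto_apply
# c2 human_review
# c3 human_review
```

High-impact cases go to a person regardless of confidence, because a confident model can still be confidently wrong.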

Read: AI Glossary: Top 20 AI Terms that You Should Know.

Responsible AI and Business Transformation

Ethical AI is fast becoming a competitive advantage for modern businesses. Companies that implement ethical frameworks can gain brand trust, regulatory readiness, improved business performance, and a stronger work culture. By building in safety and preserving public trust, organizations can innovate with AI responsibly.

Read: AI in Engineering: How AI is changing the software industry?

Conclusion

Responsible AI isn’t just something that would be nice to have; it’s a prerequisite for sustainable technological development. It helps ensure that as AI becomes more powerful, it stays aligned with human values, rights, and dignity. Adopting the values of transparency, fairness, and accountability helps make sure AI is used for good.

Making that promise a reality takes more than principles; it requires operational practices, automation, and tooling. When clear governance is paired with modern AI-assisted testing platforms such as testRigor, organizations can continuously verify that their AI systems behave as intended and in line with their values and obligations.

Ultimately, Responsible AI is the promise of technology that serves humanity rather than rules it. We owe it to ourselves, and to each other, to make that promise real.