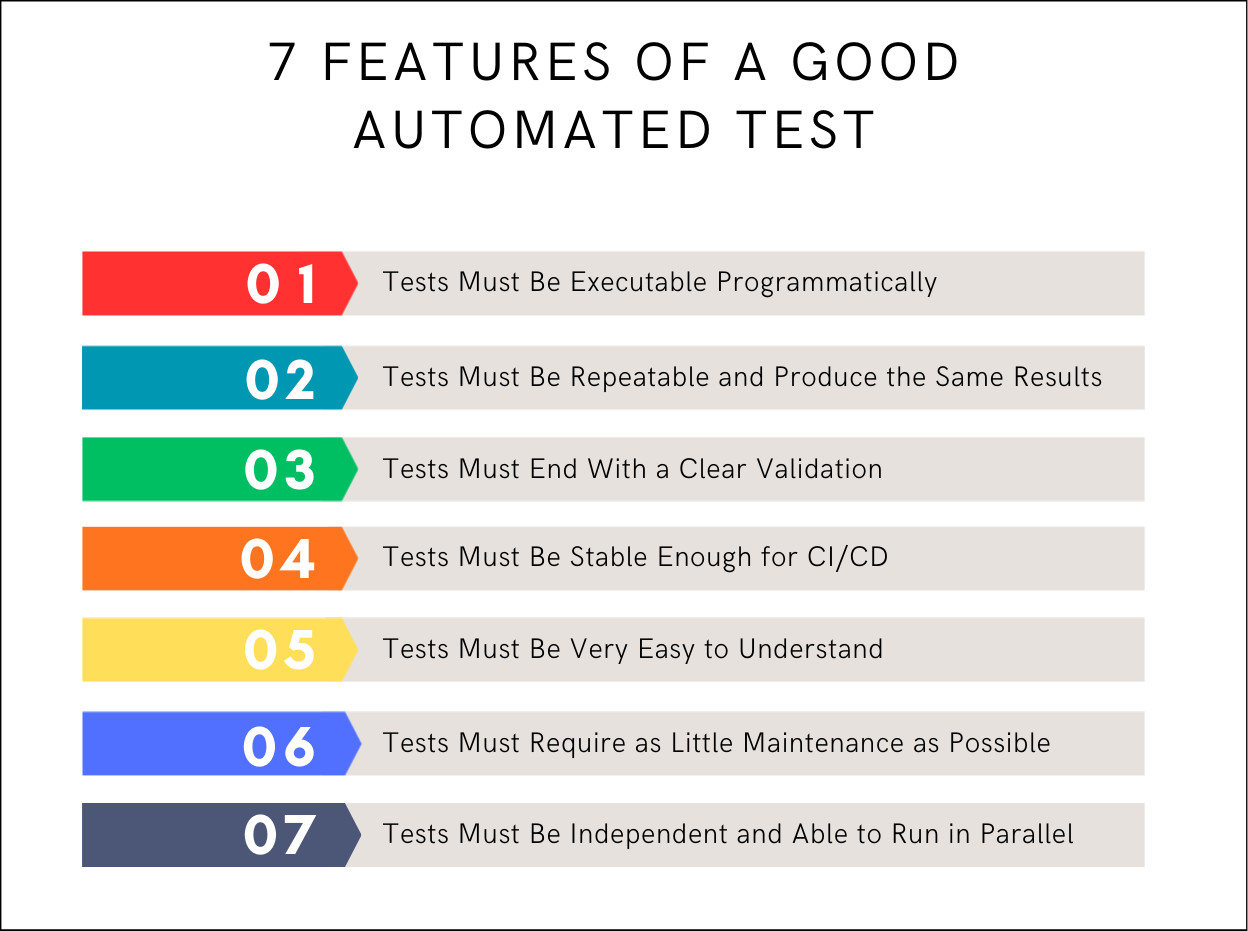

7 Features of a Good Automated Test


Automated testing is essential to software development in today’s landscape, where CI/CD practices are increasingly common and distributed architectures and continuous updates make frequent releases a necessity. Yet not all automated tests deliver equal value. Some become reliable quality gates; others become fragile scripts that slow down development, fail for no apparent reason, and need updating whenever the UI or workflow changes.

The cost-effectiveness of automation varies across industries and testing levels (UI, API, unit, integration, and end-to-end), depending largely on how the tests are implemented. Whether a team is building a web platform, a mobile app, a backend service, or a microservice, the same basic principles determine whether its automated tests are reliable, maintainable, and scalable.

This is where the seven features of a good automated test come in. They guide teams in designing automation that supports CI/CD, reduces flakiness, stays readable, survives product changes, and runs in parallel. With these traits in place, automated tests become a long-term asset rather than an ever-growing pile of test debt.


Understanding the 7 Principles

Here are the seven simple rules that define a good automated test; each is covered in more detail later. These rules help teams build automated tests that are understandable, easy to maintain, and scalable. A good automated test must:

- Be Executable Programmatically: It should be fully autonomous, running without any human intervention, both as part of a CI/CD pipeline and on a schedule.

- Be Repeatable and Produce Consistent Results: A test should not produce different results between runs unless the application under test has changed.

- End with a Clear Validation: Each test must explicitly assert the expected results so that its pass or fail outcome is unambiguous.

- Be Stable Enough to Run Reliably in CI/CD: Tests should run reliably in parallel, under varying timing conditions, and in headless or cloud environments.

- Be Very Easy to Understand: The purpose and flow should be clear to anybody who is viewing the report or reviewing the script.

- Require as Little Maintenance as Possible: Tests should not break when the UI changes slightly, the backend is altered a little, or they run in a different environment.

- Be Independent and Capable of Running in Parallel with All Other Tests: No test should rely on shared state, execution order, or artifacts created by other tests.

How these Principles Fit into Modern Testing

These seven features are universal. They are not bound to any specific automation framework, programming language, or testing technique; they apply wherever automated testing is used, regardless of technology stack or delivery mechanism.

These seven principles apply across all layers of testing, including:

- Unit tests

- API and service-level tests

- UI and functional tests

- Integration and contract tests

- Full end-to-end workflows

They are relevant for all kinds of software, from traditional applications to cutting-edge platforms:

- Web and cloud-based systems

- Mobile and desktop applications

- Distributed microservices

- AI-driven and event-based architectures

They are also relevant at all stages of the engineering lifecycle, including:

- Developer workflows during feature creation

- QA processes during regression and validation

- CI/CD pipelines that rely on fast, stable automation

- Release readiness checks before deployment

Whether tests are coded by hand, generated with low-code tools, or built with codeless automation, these principles determine how stable, maintainable, and valuable the test suite will be over time.

How these Qualities Shape Automation

These attributes determine the impact of any testing approach. They directly influence:

- How well tests hold up and stay stable while the code changes rapidly.

- How efficiently CI/CD pipelines run, with minimal flaky failures and no unnecessary slowdowns.

- How easily engineers can maintain the test suite, keeping tests simple to update rather than a maintenance burden.

- How quickly teams can diagnose failures, so that when things go wrong, problems can be traced, reproduced, and fixed.

- How well the automation scales, so that a test suite can grow without a proportional increase in execution and maintenance cost.

In other words, good automation rests on tests deliberately designed around these seven principles. Without these characteristics, tests become brittle: they break with every minor update and hinder your team’s progress. In the long run, they cost more money and take more time to maintain.

Mastering Test Automation: The Seven Principles Explained

Now let’s dive into these seven fundamentals of automation. They are the foundation of any reliable, scalable, and efficient automation approach. Knowing and consistently applying them is what separates excellent automation teams from those that are constantly fighting flaky tests and heavy maintenance overhead.

1. Tests Must Be Executable Programmatically

An ideal automated test requires no human intervention: no manual setup sequences, no pre-navigation through the user interface, no manual data population, and no environment manipulation outside the test itself. A test that is fully executable as a program is consistent, predictable, and fits into the automation ecosystem that modern teams rely on.

Programmatic execution ensures that:

- The test can run in any CI/CD pipeline or be added to any deployment pipeline.

- The test can be executed on a local machine or any cloud server with few or no code changes.

- The test is free from user-dependent variations.

- The test can be triggered automatically on commits, on a schedule, or as part of a deployment.

Without programmatic execution, automation becomes partial and unreliable.
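
As a minimal sketch in Python (the language and the standard-library `unittest` runner are assumptions; the article prescribes neither), a programmatically executable test creates its own preconditions in code and reports its outcome as a process exit code, which is what CI/CD systems check. The `create_user` function is a hypothetical stand-in for the application under test.

```python
import unittest


def create_user(store: dict, name: str) -> dict:
    """Hypothetical application function under test."""
    user = {"id": len(store) + 1, "name": name}
    store[user["id"]] = user
    return user


class CreateUserTest(unittest.TestCase):
    def setUp(self) -> None:
        # Preconditions are created in code: no manual data entry or UI pre-navigation.
        self.store = {}

    def test_new_user_is_stored(self) -> None:
        user = create_user(self.store, "alice")
        self.assertEqual(self.store[user["id"]]["name"], "alice")


def run_suite() -> bool:
    """Run the tests with no human input and report whether all of them passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CreateUserTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


def main() -> int:
    # Exit code 0 means success; CI/CD systems treat any non-zero code as a failure.
    return 0 if run_suite() else 1
```

A pipeline step could then invoke the file through a `sys.exit(main())` call placed under an `if __name__ == "__main__":` guard; the same command works locally, on a cloud runner, on every commit, or on a schedule.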

Why This Matters

- Programmatic execution helps eliminate human error.

- It makes fully automated CI/CD pipelines possible.

- It supports continuous feedback loops.

- It keeps test execution consistent across environments.

2. Tests Must be Repeatable and Produce the Same Results

Repeatability is also crucial to automation quality. A test that gives inconsistent results when executed multiple times under identical conditions is unreliable. A repeatable test yields the same result on every execution unless the application’s behavior has changed. That consistency builds trust, allowing teams to treat automation as a reliable source of truth.

Why This Matters

- Consistent behavior across runs keeps the test suite stable and avoids flaky failures.

- Consistent results build confidence in the automation, giving teams assurance that they can rely on it.

- Reliable test execution makes CI/CD pipelines more dependable and smoother-running.

- Stable results reduce debugging complexity, making failures easier to identify and resolve.

Causes of Poor Repeatability

- Fragile locators make tests fail unexpectedly when UI elements are moved or changed.

- Incorrect wait commands cause timing mismatches that produce erratic test results.

- Environmental differences create variations in behavior between local, staging, and CI environments.

- Shared test data creates conflicts between test executions when multiple test cases depend on the same data.

- When the application and the test fall out of sync due to timing issues, the test fails intermittently.

Reliable automation starts with predictable outcomes.
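
Two closely related sources of unpredictable outcomes are the system clock and unseeded randomness. A minimal Python sketch, assuming the code under test can accept the current time and a random generator as parameters (all function names here are hypothetical), shows how fixing both makes every run produce the same result:

```python
import random
from datetime import datetime, timezone
from typing import Tuple


def is_weekend_sale_active(now: datetime) -> bool:
    """Hypothetical business rule: the sale runs on Saturdays and Sundays."""
    return now.weekday() >= 5


def pick_test_coupon(rng: random.Random) -> str:
    """Hypothetical helper that picks a coupon code for a test scenario."""
    return rng.choice(["SAVE10", "SAVE20", "FREESHIP"])


def run_check() -> Tuple[bool, str]:
    """Hypothetical test body with its non-deterministic inputs pinned down."""
    # A fixed clock instead of datetime.now(): the outcome no longer depends on the day the test runs.
    fixed_now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)  # a Saturday
    # A seeded generator instead of the global random module: the same "random" choice every run.
    rng = random.Random(1234)
    return is_weekend_sale_active(fixed_now), pick_test_coupon(rng)
```

Calling `run_check()` twice yields identical tuples, whereas a version using `datetime.now()` and `random.choice()` would pass or fail depending on when it ran.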

3. Tests Must End with a Clear Validation

A test without validation is unfinished. Automation isn’t just about performing actions; it’s about checking results. Clear validation gives the test a clear goal and checks whether the expected behavior actually happened. Without it, a test might pass even when the app isn’t working correctly, which means you could miss bugs and feel more confident than you should. Every test must end with a clear assertion that confirms whether the expected behavior occurred.

Why This Matters

- A clear validation states unambiguously whether the result is good or bad, leaving no confusion about the test outcome.

- Proper assert statements remove false positives, making sure no test can pass without meeting the expected criteria.

- Strong validations make sure that the test really checks and reflects the business logic that was meant to be tested.

- Well-designed checks improve defect detection, making it easier to catch issues early and accurately.

Examples of Validations

- UI validations, such as checking that text is present, absent, or changed.

- API response validations, including schema and value matching.

- Database validations that confirm operations took effect.

- Validations of the notifications shown to the user.

Meaningful validations are what give a test case its purpose.
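
For the API case, a validation can check both the shape of a response and the business values in it. This is a minimal Python sketch in the bare-`assert` style used by pytest; the order response and its fields (`id`, `status`, `total`) are hypothetical:

```python
def validate_order_response(response: dict) -> None:
    """Assert the schema and the values of a hypothetical order API response."""
    expected_types = {"id": int, "status": str, "total": float}
    for field_name, expected_type in expected_types.items():
        # Schema check: the field exists and has the expected type.
        assert field_name in response, f"missing field: {field_name}"
        assert isinstance(response[field_name], expected_type), (
            f"{field_name} should be {expected_type.__name__}, "
            f"got {type(response[field_name]).__name__}"
        )
    # Value checks: the business outcome the test is actually about.
    assert response["status"] == "CONFIRMED", f"unexpected status: {response['status']}"
    assert response["total"] > 0, "order total must be positive"
```

Because every check is an explicit assertion, a response with the wrong status or a mistyped field fails the test instead of letting it pass silently.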

4. Tests Must be Stable Enough for CI/CD

CI/CD pipelines reveal gaps in automation. In a pipeline, tests run faster, headless, in parallel, and on machines with limited resources. A good automated test should handle these conditions without failing at random. It must behave the same way no matter how fast it runs, what environment it runs in, or how many users or parallel runs share the system at once.

Why This Matters

- Stable tests keep the pipeline from getting blocked, so development and release workflows are not interrupted.

- Reliable automation enables a faster feedback loop, helping the team detect and fix issues quickly.

- Consistent test behavior makes multiple deployments per day possible, bringing continuous delivery within reach.

- Greater automation stability builds the team’s confidence, encouraging wider adoption of and reliance on test results.

Common CI/CD Failure Points

- Scripts with hardcoded sleep timings are very likely to fail in CI/CD, where timing differs from local runs.

- Network latency can cause failures. AI-based techniques can help tests execute successfully even when latency is present.

- Without unique test data, test cases can fail because another test may have used the same data and changed its preconditions.
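
The usual replacement for a hardcoded sleep is a condition-based wait: poll for the state the test needs and give up only after a timeout. Selenium’s `WebDriverWait` and Playwright’s auto-waiting follow this idea; the framework-neutral `wait_until` helper below is a hypothetical Python sketch, not any library’s API.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0, interval: float = 0.1) -> None:
    """Hypothetical helper: poll `condition` until it returns True or `timeout` seconds pass.

    Unlike a fixed time.sleep(5), it returns as soon as the application is ready
    and still tolerates slower CI machines, up to the timeout.
    """
    deadline = time.monotonic() + timeout  # monotonic clock is immune to system clock changes
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

In a UI test, `condition` would typically check that an element is visible or that a request has completed; a fast machine finishes early, while a slow one simply polls a few more times.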

5. Tests Must be Very Easy to Understand

Readable tests minimize maintenance, speed up debugging, foster collaboration, and future-proof the automation. When a test is clear, teams can update, debug, and extend it without fear of breaking it or misunderstanding its behavior. Its purpose should be instantly clear to anyone, whether QA, developers, product managers, or someone new to the team.

Why This Matters

- Easy-to-understand tests help new team members get up to speed quickly. Understanding the existing test cases is usually the most painful part of onboarding.

- When there are cross-team dependencies, easy-to-understand scripts help every team follow the flow.

- Transparently written tests are easy to maintain and update, even by someone other than the author.

- A convoluted test case invites multiple interpretations, which can lead to incorrect conclusions about the test result.

Traits of Readable Tests

A properly written test case has a clear name, plain language rather than complicated and confusing logic, and an intuitive structure with obvious validations. If someone needs ten minutes to interpret a test, it’s not a good test. Read: How to Write Test Cases from a User Story.
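
The Arrange-Act-Assert layout is one widely used way to get that intuitive structure. In this Python sketch (the `Cart` class is hypothetical), the test name states the expected behavior and each phase is visually separated:

```python
import unittest
from dataclasses import dataclass, field


@dataclass
class Cart:
    """Hypothetical shopping cart used only to illustrate test readability."""
    lines: list = field(default_factory=list)

    def add(self, price: float, quantity: int = 1) -> None:
        self.lines.append((price, quantity))

    def total(self) -> float:
        return sum(price * quantity for price, quantity in self.lines)


class CartTotalTest(unittest.TestCase):
    def test_total_is_sum_of_price_times_quantity_for_each_line(self) -> None:
        # Arrange: a cart with two known lines.
        cart = Cart()
        cart.add(price=10.0, quantity=2)
        cart.add(price=5.0)

        # Act
        total = cart.total()

        # Assert: one obvious expectation, stated in business terms.
        self.assertEqual(total, 25.0)
```

Anyone reading a failure report for this test learns from the name alone what behavior broke, without opening the code.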

6. Tests Must Require as Little Maintenance as Possible

Automation scales only when maintenance is kept to a minimum. High-maintenance tests quickly drain engineering capacity and limit ROI. When test maintenance is minimal, the team can focus on increasing coverage, quality, and productivity rather than constantly fixing what’s broken. A good test is resilient: it survives minor UI or behavioral changes without breaking.

Why This Matters

- A test with very little maintenance reduces the overall cost of ownership.

- Reliable tests prevent automation decay, which further reduces the cost of maintaining automation.

- Once tests become stable, the team can focus on expanding coverage.

- With stable tests, development and releases become faster.

Strategies to Lower Maintenance

- Traditional automation tools depend on fragile web element locators, which change frequently. AI-based tools like testRigor use their own method of identifying elements, which keeps test cases stable. Read: Decrease Test Maintenance Time by 99.5% with testRigor.

- It’s always good practice to use unique test data for every test case; this reduces false failures caused by data issues and, in turn, makes tests more stable.

- It’s good practice to avoid test chaining; if chaining is unavoidable, use more robust exception-handling logic.

- If UI validation is not required, prefer API automation, since API tests have a much lower rate of spurious failures.
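
Generating unique test data takes only a few lines of code. A minimal Python sketch using the standard `uuid` module; the `new_test_user` helper, its field names, and the reserved `example.test` domain are illustrative assumptions:

```python
import uuid


def unique_suffix() -> str:
    # 12 hex characters from a random UUID: collisions across test runs are vanishingly unlikely.
    return uuid.uuid4().hex[:12]


def new_test_user(prefix: str = "qa") -> dict:
    """Hypothetical factory returning fresh user data so no two tests share records."""
    suffix = unique_suffix()
    return {
        "username": f"{prefix}_{suffix}",
        "email": f"{prefix}+{suffix}@example.test",
    }
```

Each test that calls `new_test_user()` gets its own account, so one test changing that account cannot break another test’s preconditions.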

7. Tests Must be Independent and Able to Run in Parallel

Test independence is essential for scalability. Each test must create its own data, clean up after itself, avoid relying on execution order, and refrain from sharing state with other tests. When tests operate in complete isolation, they remain reliable regardless of how or when they run. This independence allows them to execute in parallel, dramatically reducing total pipeline execution time.

Why This Matters

- Test independence enables fast pipelines by allowing parallel execution without conflicts.

- Isolated tests eliminate cascading failures, preventing one test’s issues from affecting others.

- Independent execution improves overall reliability, ensuring consistent behavior across environments.

- Self-contained tests support large-scale suites, making it possible to expand coverage without increasing instability.

Common Violations of Independence

- Shared user accounts create conflicts between tests and lead to unpredictable outcomes.

- Reusing state between tests causes dependencies that break isolation and reduce reliability.

- Tests that depend on others to prepare data become fragile and fail when the expected sequence is disrupted.

- Global variables and static states introduce hidden coupling, making tests interfere with one another.
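
Test runners such as pytest (with the pytest-xdist plugin) can distribute tests across parallel workers, but that only pays off when tests are isolated. In the framework-free Python sketch below, built on a hypothetical `InMemoryAccounts` service, two tests both use an account named "alice" yet cannot interfere, because each creates its own state; a thread pool stands in for a parallel runner.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


class InMemoryAccounts:
    """Hypothetical stand-in for an account service; each test builds its own instance."""

    def __init__(self) -> None:
        self.balances = {}

    def open(self, name: str, deposit: int) -> None:
        self.balances[name] = deposit

    def withdraw(self, name: str, amount: int) -> None:
        if amount > self.balances[name]:
            raise ValueError("insufficient funds")
        self.balances[name] -= amount


def test_withdraw_reduces_balance() -> None:
    accounts = InMemoryAccounts()  # own state, created by this test
    accounts.open("alice", deposit=100)
    accounts.withdraw("alice", 30)
    assert accounts.balances["alice"] == 70


def test_overdraft_is_rejected() -> None:
    accounts = InMemoryAccounts()  # never reuses another test's account
    accounts.open("alice", deposit=10)
    try:
        accounts.withdraw("alice", 50)
    except ValueError:
        return
    raise AssertionError("overdraft should have been rejected")


def run_in_parallel(tests: Sequence[Callable[[], None]]) -> None:
    # No shared state, so neither order nor concurrency affects the outcome.
    with ThreadPoolExecutor(max_workers=max(1, len(tests))) as pool:
        futures = [pool.submit(test) for test in tests]
        for future in futures:
            future.result()  # re-raises any assertion failure from a worker thread
```

Had both tests shared a single `InMemoryAccounts` instance, the second `open("alice", ...)` would overwrite the first test’s balance, and the result would depend on which thread ran first.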

How to Achieve It as Easily as Possible

With testRigor, you can write tests in plain English from the end user’s perspective, which simplifies your work significantly and helps with all of the features above:

- The test can be executed programmatically. As a test automation system, testRigor naturally supports this; moreover, it empowers people who are not engineers to create test automation.

- The test is repeatable: if the application remains unchanged, the test result remains the same. testRigor also makes it trivial to generate unique data.

- The test ends with a validation. Validations are made easy in testRigor, too.

- The test is stable enough to be used in CI/CD. A unique feature of testRigor is that tests are as stable as if you executed them manually. Moreover, testRigor is built for CI/CD and integrates easily with Jenkins, CircleCI, GitHub Actions, GitLab CI, or any other CI system.

- The test is very easy to understand. It can’t get simpler, as tests are written in plain English. Read: All-Inclusive Guide to Test Case Creation in testRigor.

- The test requires as little maintenance as possible. Tests in testRigor only need to change when your executable specification changes.

- The test is independent and can run in parallel with all other tests. This is built into testRigor: all tests run in parallel by default.

Wrapping Up

A well-designed automated test is more than a script; it is a long-term quality asset that consistently supports rapid releases and evolving software. By following these seven principles, teams create tests that remain stable, scalable, and easy to maintain even as applications grow in complexity. When paired with modern tools like testRigor, these principles become even easier to achieve, enabling organizations to confidently accelerate delivery without sacrificing quality.
