Flaky Tests – How to Get Rid of Them

|

|

Automated testing has established itself as a fundamental part of contemporary software development. It allows teams to verify code, catch regressions early, and speed up release cycles, all in their CI/CD pipeline. And yet, out of the countless technical and process hurdles teams have to overcome on a daily basis, flaky tests are still one of the most enduring and annoying problems.

Flaky tests, tests that randomly pass or fail without any change in the code, are a known source of headache for undermining confidence, slowing development, and causing noise in automation. As teams grow their automated testing efforts, the question becomes inevitable:

Can you really get rid of flaky tests?

We will explore this question from first principles: what flaky tests are, why they occur, whether they can ever be fully eliminated, and practical strategies that help teams mitigate their impact.

| Key Takeaways: |

|---|

|

What are Flaky Tests?

A flaky test is an automated test that demonstrates its implementation inconsistently, failing some number of times in a row against the same code and environment with no changes. Now it can go through in one run, fail the next, and pass again soon after. A test that cannot be trusted can hardly be used. This means that flaky tests act to reduce confidence in the overall automated test suite. With time, they can slow progress by getting teams to re-run tests or simply let failures pass.

- You run the test once — it passes.

- You run it again immediately — it fails.

- You run it a third time — it might pass again.

This discrepancy is counter to the core premise of automated testing: same inputs should result in the same outputs. In a well-behaved test suite, when you don’t change the code, a test should pass consistently or fail consistently. A flaky test would violate this and add a random number to the result. This randomness makes it impossible to distinguish actual defects from testing noise, resulting in wasteful time and a loss of automation confidence.

Read: Decrease Test Maintenance Time by 99.5% with testRigor.

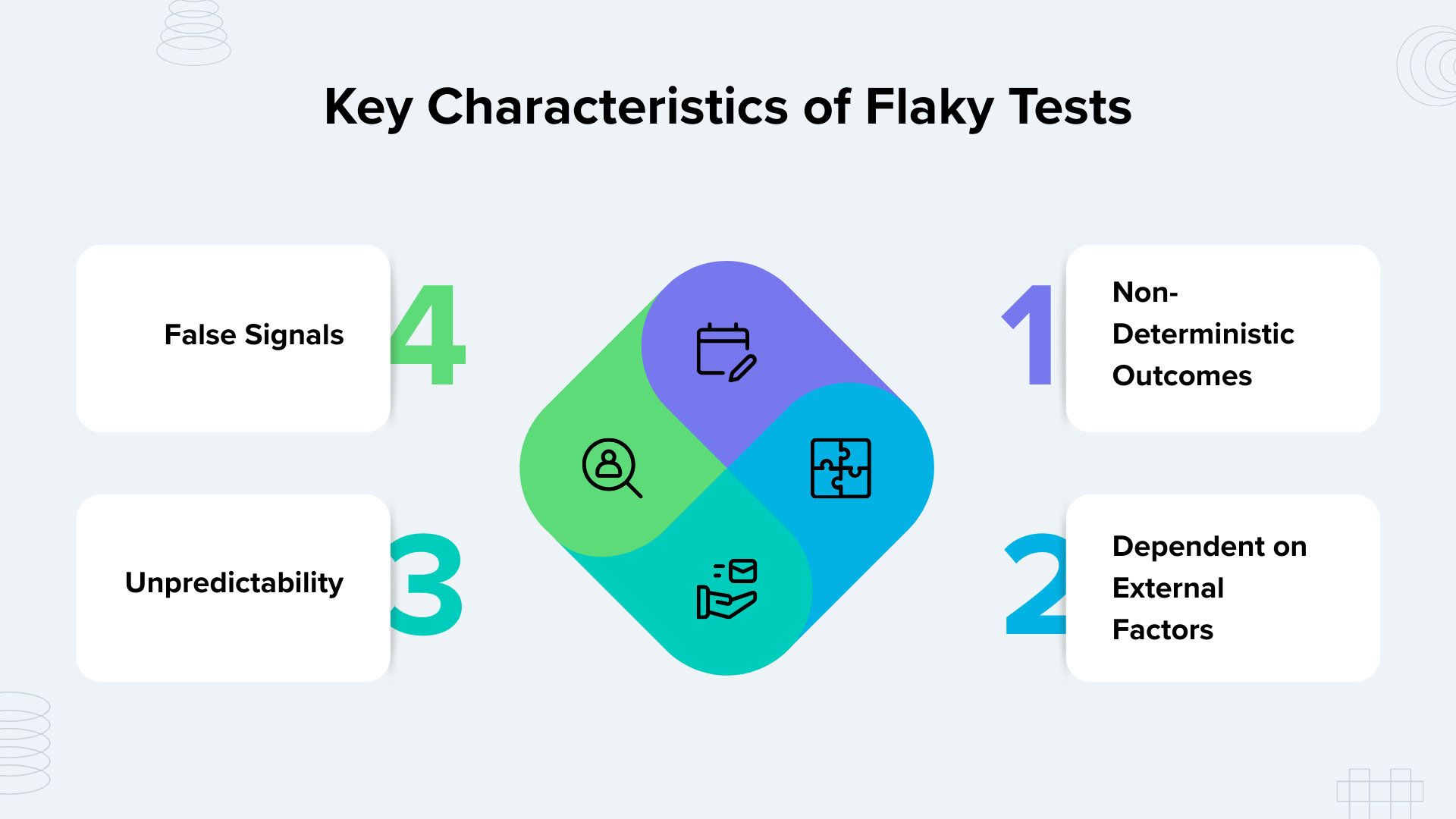

Key Characteristics of Flaky Tests

Flaky tests share these common characteristics, which help explain why we can’t trust their outcomes. These features explain why and how these tests behave in perpetuating different behavior across several executions. Having an awareness of these behaviors allows teams to identify flakiness early on and correct it before performance and confidence in the development process are affected.

- Non-deterministic Outcomes: The same test may yield different results in several runs, even if the tested code or environment remains unchanged.

- Dependent on External Factors: Flakiness often arises due to timing, non-deterministic environments, shared state between tests or flaky third-party services.

- False Signals: These tests cause non-defect-related failures that mislead a team down the path of chasing ghosts.

- Unpredictability: Their random behavior makes root-cause analysis difficult and significantly increases the time required to debug failures.

Flaky tests can affect tests at any layer of the test suite: unit, integration, UI, performance or end-to-end. They can often be found in tests that involve working with the real systems, because timing, network reliability, and external dependencies contribute to instability more than mocks or stubs.

Read: AI at testRigor.

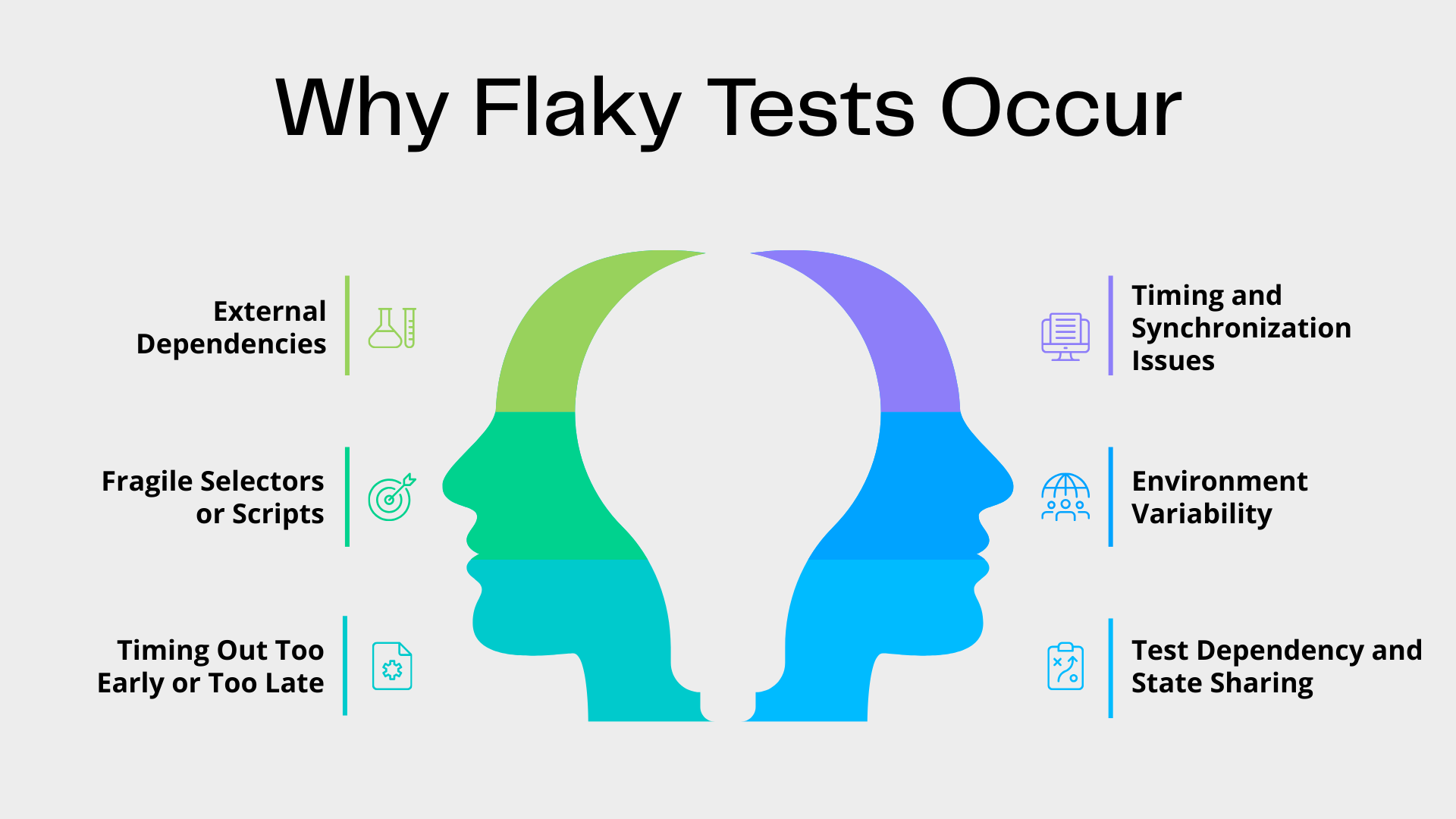

Why Flaky Tests Occur

We can’t discuss flaky tests without addressing why they crop up in the first place. Although flaky tests could have a variety of triggers, most load-related reasons fall into a few general categories. By identifying these root causes, you can more easily prevent flakiness, rather than fixing the symptoms as they come up after your tests fail.

Timing and Synchronization Issues

When tests interact with asynchronous operations such as UI rendering, network responses, or file I/O, tests that make assumptions about timing will break intermittently. Without proper synchronization or waiting mechanisms, tests may execute assertions before the system reaches the expected state. For example, a test that checks if a button exists before it actually loads can fail due to a slow network response or slower hardware performance.

Environment Variability

Various types of test environments, such as desktops, CI machines, VMs, and cloud machines, can be some sources of non-determinism. It could also be that there’s network latency, CPU load or even discrepancies between the test machines. These differences cause the tests to not run consistently in different environments, even if the application code is identical.

Test Dependency and State Sharing

Tests that reuse shared state, like databases, files, or global variables, might conflict with each other. When it’s not the case, a test result will affect another. So, there are hidden dependencies that cause test outcomes to become order dependent. Because of this, tests can pass or fail because they are run in an execution sequence rather than because the application is acting a certain way.

External Dependencies

Any tests that rely on external APIs or services are fundamentally fragile. If the third-party service is down or slow, this will cause the test to fail even though your application logic is fine. When these failures happen, they add noise to test results and reduce our confidence in the dependability of automated tests.

Fragile Selectors or Scripts

In the case of UI tests, if they are built using fragile selectors or deprecated locators, then when the UI slightly changes (for example, a minor layout change or dynamic attributes), it will cause flaky results, and nothing has actually changed as far as application functionality. That makes people solve rather generic scenarios, and the general test maintainability overhead grows for that change.

Timing Out Too Early or Too Late

Tests with hard timeouts that are neither adaptive nor context-aware can frequently fail if the system under test takes slightly longer to respond. Slow environments, temporary load spikes, or background processes can push response times just beyond the configured limit. As a result, tests fail intermittently even though the application is functioning correctly.

Read: A Comprehensive Guide to Locators in Selenium.

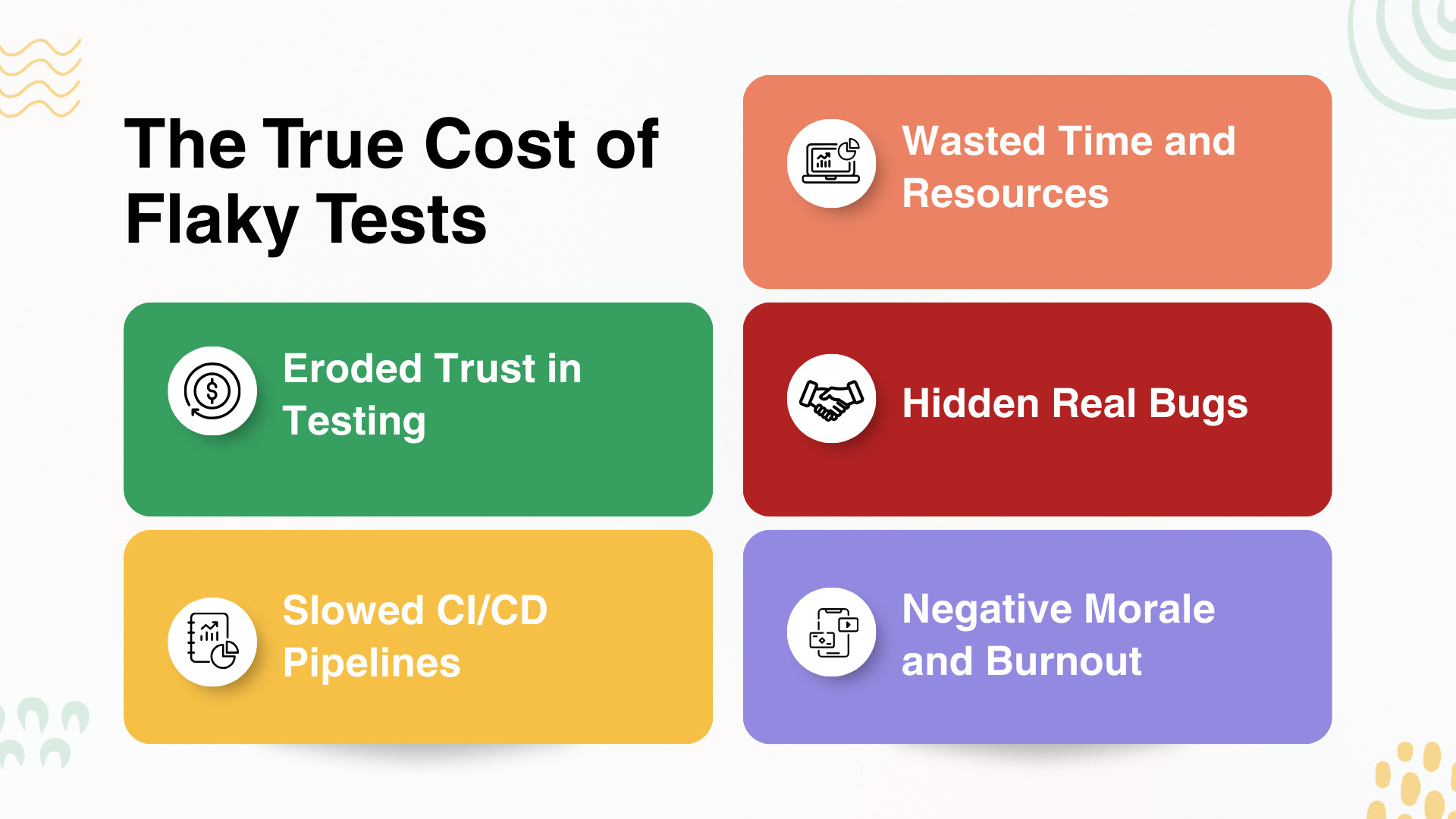

The True Cost of Flaky Tests

Flaky tests may seem a minor annoyance at first, but their effect is actually quite damaging. They slowly but surely devalue the entire process of testing and erode engineering discipline over time. What starts out as an occasional annoyance can turn into a chronic issue that hurts quality, delivery speed, and team morale.

- Eroded Trust in Testing: When a team cannot trust test outcomes, developers start ignoring failing tests or rerunning them until they “turn green.” This undermines the core purpose of automation: confidence in quality.

- Slowed CI/CD Pipelines: Each flaky failure typically triggers manual investigation, re-runs, or even paused pipelines. This directly increases cycle times and reduces delivery velocity.

- Wasted Time and Resources: Teams spend precious hours diagnosing false alarms that are not real failures. This wasted engineering effort could be spent on building new features or improving product quality.

- Hidden Real Bugs: Perhaps the most dangerous effect is that flaky failures may be dismissed as “just another flake.” This can mask real defects that slip into production unnoticed.

- Negative Morale and Burnout: Constantly fighting flaky tests can demoralize teams, leading to frustration and burnout among QA engineers and developers alike.

Read: How to Write Stable Locators.

Can Flaky Tests be Completely Eliminated?

Expectations about test flakiness would need to be realistic. On the other hand, in modern distributed, asynchronous, and infrastructure-dependent software systems, achieving absolute zero flakiness is a rather theoretical goal. There will always be some traces of non-determinism, so complete removal is not practical.

The real goal isn’t perfection, but practical elimination, driving flakiness down to a level where it no longer impedes release velocity, undermines trust, constitutes anything more than a commensurate burden, or warps how engineering is done. Flakiness only becomes truly dangerous when there’s a culture of toleration, normalisation or disregard rather than control.

Read: AI-Based Self-Healing for Test Automation.

The Myth of “Just Retry the Test”

The most frequently used technique that teams have developed is automatic retries, where a failed test is run one or two more times before it is allowed to fail our build. While this can minimize short-term noise, it generally hides the problem instead of solving it. Eventually, re-tries become flaky and teach teams that test failures are merely negotiable, not actionable.

- Retries mask real defects by allowing unstable tests to pass without addressing the root cause.

- Retries reduce accountability for test quality because failures are no longer treated as signals that require investigation.

- Retries increase CI execution time, slowing feedback loops and delaying releases.

- Retries make failures harder to interpret by obscuring whether a test passed due to stability or sheer repetition.

Retries should be used sparingly and temporarily, not as a permanent solution.

Read: How to reference elements by UI position using testRigor?

Flaky Tests as a Test Design Problem

Flakiness is typically due to poor test design rather than the underlying infrastructure being unreliable. Tests tend to get that way when they try to do too much, rely on specific implementation details, mix orchestration with verification, are based on capturing exact timing, or read further than is intended.

Well-designed tests, by contrast, focus on outcomes rather than mechanics. They validate observable behavior instead of internal structure, operating at the right level of abstraction. When tests are designed this way, flakiness tends to decrease naturally rather than requiring workarounds.

Read: How to use AI effectively in QA.

Test Pyramid Imbalance and Flakiness

Teams that have a lot of UI or end-to-end-heavy test suites tend to be much flakier. These tests have more things in motion, more contextual dependencies, and they are typically in environments that are noisier. Partially as a consequence, failures commonly originate outside the system under test, which makes them difficult to diagnose and reproduce. This instability eventually dampens feedback loops and erodes trust in test findings.

- Deterministic unit tests verify core logic quickly and reliably with minimal external dependencies.

- Isolated integration tests validate interactions between components while keeping the environment controlled.

- Contract validation ensures services agree on expectations without requiring full system execution.

- A focused set of end-to-end scenarios confirms critical user journeys without overloading the test suite.

Read: Test Automation Pyramid Done Right.

Organizational Factors that Enable Flakiness

Flakiness is more than a technical problem; it’s often the outgrowth of organizational habits and incentives. Teams’ ownership arrangement, how they respond to failure, and the value placed on test automation actively affect the stability of the test. And when an organization tolerates poor practices, flaky tests become widespread in a hurry.

- Test ownership is unclear, leaving no one accountable for fixing or improving unstable tests.

- Failures are ignored, allowing flaky behavior to persist without investigation or resolution.

- Automation is treated as a checkbox, leading to superficial coverage instead of reliable validation.

- Speed is prioritized over stability, encouraging shortcuts that introduce long-term test fragility.

High-performing teams treat automation as production software. Tests have clear owners and are reviewed, refactored, and maintained with the same discipline as application code.

Why Traditional Automation Tools Struggle

Traditional automation tools often struggle with flakiness, not because teams misuse them, but because of the patterns these tools encourage by design. They push testers closer to implementation details and away from intent-driven validation. Over time, this increases complexity and amplifies instability in test suites.

- Forcing low-level scripting ties tests directly to UI structure and technical mechanics rather than user behavior.

- Relying on brittle selectors causes tests to break with minor UI or DOM changes that do not affect functionality.

- Requiring manual synchronization logic introduces timing assumptions that are difficult to maintain and easy to get wrong.

- Encouraging overengineering in tests leads to excessive conditional logic and branching that increases fragility.

The more logic embedded in tests, the more opportunities exist for instability.

How AI-Driven Automation Reduces Flakiness

Modern automation is shifting away from scripting how to test toward describing what should happen. This intent-based approach reduces coupling, improves resilience, and aligns tests more closely with real user behavior. This shift lays the foundation for AI-driven automation.

AI-powered automation decreases flakiness by moving testing from hard-coded scripts to contextual knowledge. These tools don’t depend on specific technical instructions, but they read intentions and watch the application as a human tester would. This results in much less instability in modern, rapidly changing systems.

- Abstracting away fragile selectors so tests are not tightly coupled to the DOM structure or UI implementation details.

- Handling synchronization automatically by waiting for meaningful application states rather than fixed time delays.

- Adapting to UI changes by recognizing elements visually or semantically, even when layouts or identifiers change.

- Minimizing timing assumptions by reacting to actual system readiness instead of predefined execution order.

Read: AI Context Explained: Why Context Matters in Artificial Intelligence.

How testRigor Helps Avoid Flaky Tests

testRigor aims to address flakiness by changing how tests are authored from low-level scripting to intent-based assertions on behaviour. It provides a radical separation between what the user does and how they do it, thus removing many of the structural causes of instability. It enables the creation of test suites that are stable enough for rapidly changing applications.

-

Plain-English, Intent-driven Test Creation: testRigor allows tests to be written in plain English, describing user actions and expected outcomes rather than technical steps. This keeps tests aligned with business behavior instead of code structure. As a result, tests are easier to read, maintain, and are far less sensitive to internal changes.

-

Context-based Element Identification: Elements in testRigor are identified using visible text and contextual understanding instead of brittle XPath or CSS selectors. This mirrors how real users perceive the application rather than how the DOM is structured. When UI layouts or underlying code change, tests remain stable because the user-facing behavior is unchanged.Read: testRigor Locators.

-

Built-in Smart Waiting and Synchronization: testRigor automatically handles synchronization by waiting for meaningful application states before performing actions. Test authors do not need to add explicit waits, sleeps, or timing logic. This eliminates a major source of flakiness caused by asynchronous behavior and variable load times.

-

Vision AI for Visual Understanding: Vision AI enables testRigor to validate what is actually rendered on the screen, not just what exists in the code. This is especially important for dynamic interfaces, canvas elements, and modern front-end frameworks. By verifying visible outcomes, tests stay accurate and resilient as UI implementations evolve.

Now, see a sample comparison between the script created using traditional automation tools and testRigor. The scenario that we validate below is a simple login.

Traditional Automation Scripting

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

driver = webdriver.Chrome()

wait = WebDriverWait(driver, 20)

try:

driver.get("https://example.com/login")

username = wait.until(

EC.visibility_of_element_located(

(By.XPATH, "//form[@id='loginForm']//input[@name='username' and @type='text']")

)

)

username.clear()

username.send_keys("[email protected]")

password = wait.until(

EC.visibility_of_element_located(

(By.XPATH, "//form[@id='loginForm']//input[@name='password' and @type='password']")

)

)

password.clear()

password.send_keys("SuperSecretPassword")

login_btn = wait.until(

EC.element_to_be_clickable(

(By.XPATH, "//form[@id='loginForm']//button[contains(@class,'btn') and normalize-space()='Login']")

)

)

login_btn.click()

welcome_text = wait.until(

EC.visibility_of_element_located(

(By.XPATH, "//header//div[contains(@class,'user-info')]//*[contains(normalize-space(),'Welcome')]")

)

)

assert "Welcome" in welcome_text.text

print("Login successful")

finally:

driver.quit()

testRigor Script

enter stored value value "email address" into "Username" enter stored value "password value" into "Password" click "Login" check that page contains "Welcome"

If you notice the testRigor script, there is no dependency on XPaths, this makes sure the script is not dependent on any DOM elements, ensuring the script won’t be brittle. Similarly, there are no custom waits. These capabilities ensure there is no flakiness in the script, and the stakeholders won’t see any false positive test reports.

Conclusion

Flaky tests are not just a technical nuisance but a signal of deeper issues in test design, tooling, and team discipline. While achieving absolute zero flakiness is unrealistic in modern, asynchronous systems, teams can reduce it to a negligible level by focusing on deterministic test design, clear ownership, and the right balance in their test strategy.

Treating test automation as production-quality software and refusing to normalize flaky behavior restores trust and speed in CI/CD pipelines. By adopting intent-driven, AI-powered approaches like testRigor, teams can move beyond brittle scripts and finally make flaky tests the exception rather than the rule.

| Achieve More Than 90% Test Automation | |

| Step by Step Walkthroughs and Help | |

| 14 Day Free Trial, Cancel Anytime |