Scalability Testing: Automating for Performance and Growth

Have you ever witnessed a store-wide sale at a popular outlet? Hundreds of people with long shopping lists are looking to avail themselves of discounts. The store attendants are perplexed and overburdened with the frenzy. The cash counters have long queues. Everyone within the store is under immense pressure.

Now imagine a site-wide sale on an online store like Amazon. Though you cannot see the customers in person, you can easily imagine everyone’s eagerness to complete their shopping at discounted rates. In online stores like Amazon, the role of the store attendants is played by the website and its resources, such as the checkout module, the search module, online payment, and the website’s overall load-handling capacity. Continuing with Amazon’s example, its sales events are a huge hit, with millions of products sold successfully. So, how do online stores prepare for them?

First, they use historical data and industry trends to predict the expected user load. Then, load testing tools are used to simulate thousands of virtual users concurrently browsing products, adding items to carts, and making purchases. This creates an environment that mimics the real-world traffic anticipated on the sale day (Prime Day, in Amazon’s case). During the simulated load, metrics such as response times, throughput, and resource usage are closely monitored. The test results help pinpoint weaknesses in the system. Finally, based on these results, Amazon can take steps to scale its infrastructure.

What essentially happened here is that Amazon performed scalability testing to make sure it was ready for the event ahead of time.

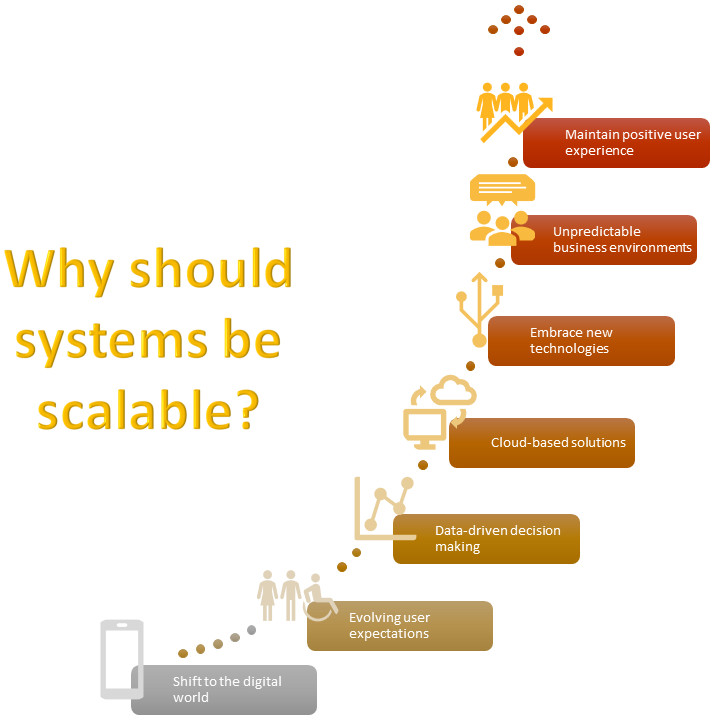

Why is scalability important?

In the era of the internet, mobile devices, the cloud, IoT, and AI, user expectations have risen. Rightly so, since it has become much easier to deliver a lot to users in a very short time. But for that to be possible, systems need to be scalable, which is why system scalability has become a necessity these days.

What is scalability testing?

Scalability testing is a type of non-functional testing that assesses how a system performs under varying loads. It’s essentially putting the software through its paces to see if it can handle an increase (or decrease) in user traffic, data volume, or overall workload without falling apart.

How is scalability testing different from load testing?

Scalability testing

Scalability testing is all about assessing how a system adjusts to varying loads by adding or removing resources (scaling up or down).

The key question it answers is: Can the system grow and still perform well?

It typically involves a gradual increase in load over time, which simulates how a system might experience growth in real-world scenarios. The primary concern is maintaining performance as the system scales. Metrics like response times, throughput, and resource usage are monitored to identify bottlenecks that could hinder future growth.

Load testing

Load testing is more concerned with determining the system’s maximum capacity under a specific load.

It essentially asks: How much load can the system handle before it breaks?

Load testing tools create scenarios that mimic real-world user traffic patterns, often focusing on peak usage periods. This helps identify breaking points and ensure the system can withstand anticipated stress. The emphasis is on identifying weaknesses that could cause crashes or performance degradation during periods of high traffic.

Scalability vs. load testing

Here’s an analogy to help understand the difference better:

- Think of scalability testing like testing a balloon. You gradually inflate the balloon (increasing load) with air (resources) to see how much it can expand (scale) before it pops (failure). You’re interested in how well it scales with added resources.

- Load testing is like squeezing a balloon. You apply pressure (load) to see how much it can take before it bursts (failure). You’re interested in the maximum pressure it can withstand.

In essence, scalability testing is about future-proofing your system for growth. On the other hand, load testing is about ensuring your system can handle current and anticipated peak loads.

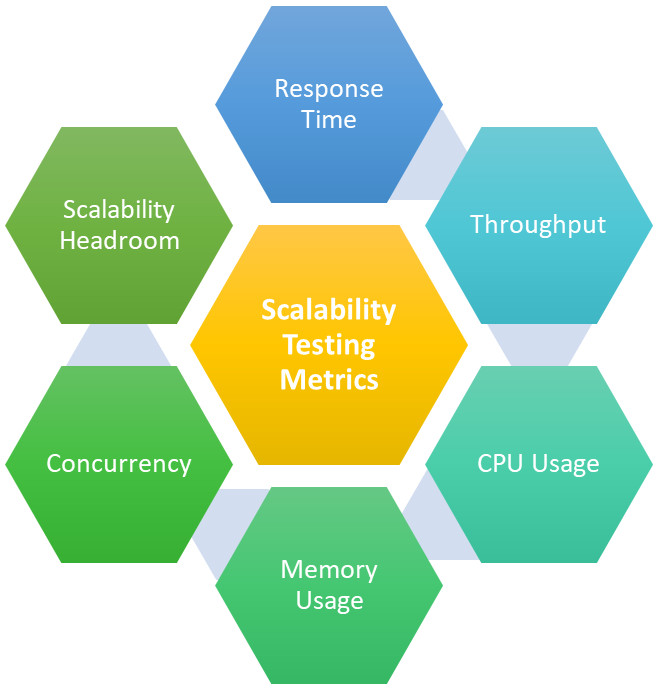

Metrics involved in scalability testing

Scalability testing measures a combination of performance metrics and resource utilization parameters to assess how well a system adapts to changing loads. Here’s a list of parameters:

- Response time: This is the time it takes for the system to respond to a user request after receiving it. Scalability testing tracks how response times change as the load increases. Ideally, they should stay within acceptable limits even under heavy traffic.

- Throughput: Throughput refers to the number of requests or transactions the system can process within a specific timeframe (e.g., transactions per second). Scalability testing measures how throughput scales with increasing load. The system should be able to handle a higher volume of requests without a significant drop in throughput.

- CPU usage: This indicates how busy the Central Processing Unit is in handling tasks. Scalability testing monitors CPU usage to identify if it becomes overloaded under heavy load, leading to performance degradation.

- Memory usage: This refers to the amount of memory the system is using. Scalability testing helps determine if the system runs out of memory under heavy load, causing crashes or slowdowns.

- Network bandwidth: This is the amount of data that can be transferred over the network in a given time. Scalability testing monitors network bandwidth usage to identify if it becomes saturated under heavy load, leading to slow data transfer or network congestion.

- Concurrency: This refers to the number of users that can interact with the system simultaneously. Scalability testing measures how the system performs with an increasing number of concurrent users.

- Scalability headroom: This is the buffer between the current load and the system’s capacity. Scalability testing helps determine how much headroom the system has before performance suffers.
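To make a few of these metrics concrete, here is a minimal Python sketch that turns raw per-request measurements into a run summary. It assumes you already have response times (in milliseconds) for successful requests, a count of failed requests, the test duration, and an estimated maximum capacity (`capacity_rps`) taken from an earlier run; those inputs and the names `summarize` and `RunSummary` are illustrative assumptions. It uses the nearest-rank definition of a percentile, which is only one of several conventions load testing tools use.

```python
import math
from dataclasses import dataclass


@dataclass
class RunSummary:
    p95_ms: float          # 95th percentile response time
    throughput_rps: float  # successful requests per second
    error_rate: float      # failed / total requests
    headroom_pct: float    # spare capacity relative to an assumed maximum


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms, failed, duration_s, capacity_rps):
    """Summarize one test run.

    capacity_rps is an assumed maximum throughput (e.g. from an earlier
    stress run); the run itself cannot measure it.
    """
    succeeded = len(latencies_ms)
    total = succeeded + failed
    throughput = succeeded / duration_s
    return RunSummary(
        p95_ms=percentile(latencies_ms, 95),
        throughput_rps=throughput,
        error_rate=failed / total if total else 0.0,
        headroom_pct=(capacity_rps - throughput) / capacity_rps * 100,
    )


# Hypothetical run: 20 successful requests, 2 failures, 10 seconds.
summary = summarize(list(range(10, 210, 10)), failed=2, duration_s=10, capacity_rps=8)
print(summary)
```

Tracking the same summary at each load level is what lets you see how these numbers change as the load grows.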

Types of scalability testing

There are two main ways to go about scalability testing, based on how resources are adjusted to handle the load:

Horizontal scalability testing

This type focuses on evaluating how the system performs when you add more resources to distribute the workload. Imagine adding more lanes to a highway (resources) to handle increased traffic (load).

- Approach: Simulates adding more servers or instances of the application to handle the growing load. This helps assess if the system can effectively distribute tasks across these additional resources and maintain performance.

- Example: Adding more web servers to an e-commerce website during the peak season to handle increased user traffic and purchase volume. Read: Why Companies Switch to testRigor for E-Commerce Testing?
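One common way to read a horizontal scalability test is scaling efficiency: the throughput measured with N instances divided by N times the single-instance throughput, so 1.0 means perfectly linear scaling. The Python sketch below computes it; the node counts and throughput figures are made-up inputs, not results from any real system.

```python
def scaling_efficiency(throughput_by_nodes):
    """Return {node_count: efficiency}, where
    efficiency(N) = X(N) / (N * X(1)).

    throughput_by_nodes maps the number of instances to the measured
    throughput (e.g. requests per second).
    """
    if 1 not in throughput_by_nodes:
        raise ValueError("a single-instance baseline (key 1) is required")
    baseline = throughput_by_nodes[1]
    return {
        nodes: throughput / (nodes * baseline)
        for nodes, throughput in sorted(throughput_by_nodes.items())
    }


# Hypothetical measurements from runs with 1, 2 and 4 web servers.
measured = {1: 500, 2: 950, 4: 1700}
for nodes, eff in scaling_efficiency(measured).items():
    print(f"{nodes} node(s): {eff:.0%} efficiency")
```

Efficiency that keeps falling as instances are added suggests the extra servers are contending for something shared, such as a database, which is exactly the kind of bottleneck this test aims to surface.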

Vertical scalability testing

This type concentrates on evaluating how the system performs when you upgrade existing resources to handle a higher load. Think of widening existing lanes on a highway (upgrading resources) to accommodate more traffic (load).

- Approach: Simulates upgrading hardware components like CPU, memory, or storage capacity on existing servers. This helps assess if these upgrades can improve the system’s capacity to handle a heavier load.

- Example: Upgrading the CPU and memory of a database server to handle a larger volume of data queries.

Horizontal vs. vertical scalability testing

Here’s a table summarizing the key differences:

| Type of Scalability Testing | Approach | Analogy | Example |
|---|---|---|---|
| Horizontal | Adding more resources | Adding more lanes to a highway | Adding more web servers |
| Vertical | Upgrading existing resources | Widening existing lanes on a highway | Upgrading CPU and memory of a server |

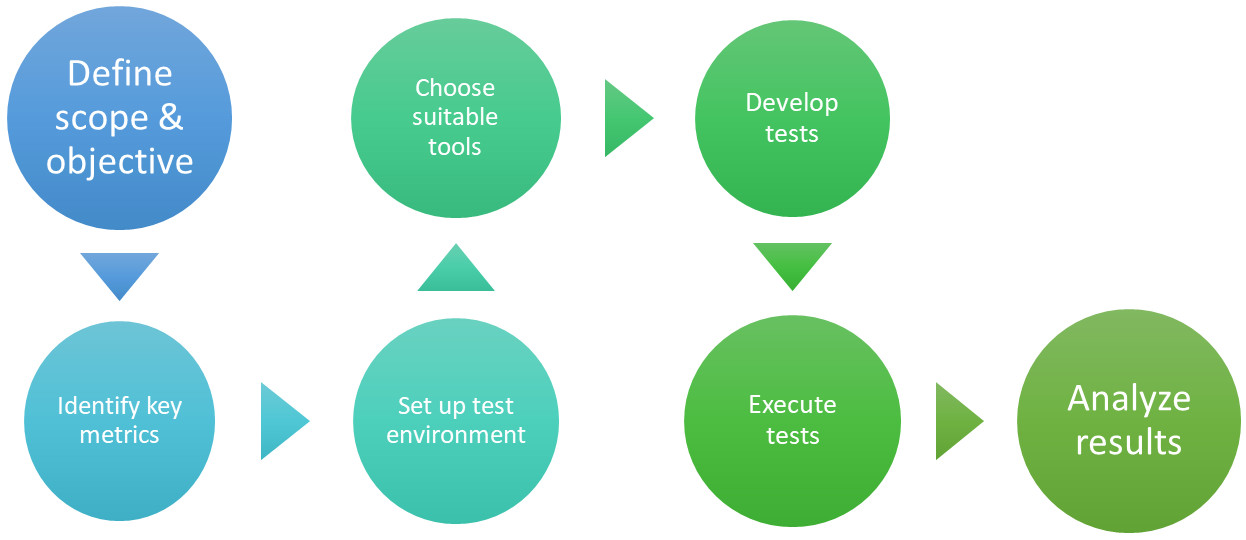

How to do scalability testing?

You can follow this approach to scalability testing and customize it further based on your organization’s policies and specific needs.

Define the scope and objectives

- Start by clearly defining the application or system under test and its expected usage patterns. Read: Test Planning – a Complete Guide.

- Determine what aspects of scalability you want to assess (horizontal, vertical, etc.).

- Set specific and measurable objectives for the testing, such as identifying performance bottlenecks or measuring the system’s capacity under load.

Plan the test environment

- Set up a testing environment that closely mirrors your production environment in terms of hardware, software, and network configuration. This ensures the test results are relevant to real-world scenarios.

- Provision enough resources to simulate various load levels during the testing process.

Choose the right load testing tool

- Several load testing tools are available, each with its own features and functionalities.

- Consider factors like budget, complexity of your system, and desired level of control when selecting a tool.

Design scalability test scenarios

- Develop realistic test scenarios that reflect typical user behavior and expected load patterns. This could involve simulating user logins, product searches, or purchase transactions. Read: Positive and Negative Testing: Key Scenarios, Differences, and Best Practices.

- Gradually increase the number of virtual users or workload in these scenarios to progressively stress the system.
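A gradual increase like this is usually expressed as a stepped ramp. Most load testing tools let you configure a ramp directly; the sketch below only shows the shape of such a plan in plain Python, using a hypothetical `step_ramp` helper and illustrative numbers.

```python
def step_ramp(start_users, step_users, max_users, step_duration_s):
    """Return (start_time_s, target_users) pairs for a stepped ramp-up.

    Each step holds its user count for step_duration_s seconds before the
    next increase, so any degradation can be tied to a specific load level.
    """
    if start_users <= 0 or step_users <= 0 or step_duration_s <= 0:
        raise ValueError("start, step and duration must be positive")
    schedule = []
    users, elapsed = start_users, 0
    while users <= max_users:
        schedule.append((elapsed, users))
        users += step_users
        elapsed += step_duration_s
    return schedule


# Hypothetical plan: start at 50 users, add 50 every 5 minutes, stop at 200.
for start, users in step_ramp(50, 50, 200, 300):
    print(f"t={start:>4}s -> {users} virtual users")
```

Holding each step for a while, rather than ramping continuously, makes it easier to attribute a slowdown to one particular user count.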

Configure and execute the tests

- Configure the chosen load testing tool according to your test scenarios and load parameters.

- Run the scalability tests and closely monitor the system’s performance metrics (response times, throughput, resource utilization) throughout the testing process.
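For intuition about what a load testing tool does while executing such a test, here is a deliberately tiny Python sketch: several concurrent virtual users each repeat a scenario, and every request’s response time or failure is recorded for later analysis. `fake_checkout` is a hypothetical placeholder for a real request, and Python threads are only a reasonable fit for I/O-bound calls such as HTTP requests. Dedicated tools like JMeter or LoadRunner also handle pacing, distribution across machines, and reporting, which this sketch leaves out.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_virtual_user(scenario, iterations):
    """Run one virtual user's iterations, timing each and counting failures."""
    latencies_ms, errors = [], 0
    for _ in range(iterations):
        start = time.perf_counter()
        try:
            scenario()
        except Exception:
            # Record the failure and keep going instead of aborting the run.
            errors += 1
            continue
        latencies_ms.append((time.perf_counter() - start) * 1000)
    return latencies_ms, errors


def run_load(scenario, users, iterations):
    """Run `users` virtual users concurrently and merge their results."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(run_virtual_user, scenario, iterations)
                   for _ in range(users)]
        results = [f.result() for f in futures]
    latencies = [ms for user_latencies, _ in results for ms in user_latencies]
    errors = sum(user_errors for _, user_errors in results)
    return latencies, errors


def fake_checkout():
    """Hypothetical stand-in for a real request to the system under test."""
    time.sleep(0.01)


latencies, errors = run_load(fake_checkout, users=5, iterations=3)
print(f"{len(latencies)} requests, {errors} errors, max {max(latencies):.1f} ms")
```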

Analyze the results and identify bottlenecks

- After the tests are complete, analyze the collected data to identify any performance bottlenecks or areas where the system struggles under load. Read: Test Reports – Everything You Need to Get Started.

- Look for trends in response times, throughput, and resource usage to pinpoint potential issues.
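Looking for trends often comes down to finding the load level at which things start to go wrong. A minimal Python sketch, assuming you have one summary row per load step; the two rules it applies (a response-time limit and throughput falling despite more users) are illustrative choices rather than a standard, and the results are hypothetical.

```python
def find_degradation_point(levels, p95_limit_ms):
    """Return (users, reason) for the first load level that degrades, else None.

    levels: (virtual_users, p95_ms, throughput_rps) tuples ordered by users.
    A level degrades if its p95 exceeds the limit or its throughput falls
    below the previous level's throughput despite more users.
    """
    previous_throughput = None
    for users, p95_ms, throughput in levels:
        if p95_ms > p95_limit_ms:
            return users, "p95 above limit"
        if previous_throughput is not None and throughput < previous_throughput:
            return users, "throughput dropped"
        previous_throughput = throughput
    return None


# Hypothetical results from a stepped ramp.
results = [(50, 120, 400), (100, 140, 780), (150, 180, 1100), (200, 260, 1050)]
print(find_degradation_point(results, p95_limit_ms=300))
```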

Make improvements and iterate

- Based on the test results, formulate a plan to address the identified bottlenecks. This might involve optimizing code, upgrading hardware, or implementing horizontal scaling strategies.

- Refine your test scenarios and iterate on the scalability testing process to continuously improve the system’s ability to scale effectively.

Automating scalability testing

Scalability testing also involves repetitive tasks that can be delegated to test automation. Automation lets you run more extensive tests with a larger number of virtual users, giving you a much better picture of the system’s behavior under heavy load. For faster and more consistent results, you can automate:

- Test case execution

- Test data management

- Test reporting and analysis

- Integration with CI/CD

- Test environment setup and teardown

With modular and reusable test components, you can keep the effort of setting up scalability tests low.
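In practice, modularity mostly means writing each user action once and composing journeys from those actions. The Python sketch below shows the idea with hypothetical step functions that only update a session dictionary; in a real suite, each step would drive the application or send a request.

```python
def login(session):
    session["user"] = session.get("username", "guest")


def search(session):
    session["searches"] = session.get("searches", 0) + 1


def add_to_cart(session):
    session.setdefault("cart", []).append(session.get("product_id", "sku-1"))


def checkout(session):
    if not session.get("cart"):
        raise ValueError("cannot check out with an empty cart")
    session["order_placed"] = True


# Journeys are just ordered lists of reusable steps.
BROWSE = [login, search]
PURCHASE = BROWSE + [add_to_cart, checkout]


def run_journey(steps, session):
    """Execute each step against a shared session dict and return it."""
    for step in steps:
        step(session)
    return session


print(run_journey(PURCHASE, {"username": "alice", "product_id": "sku-101"}))
```

If the checkout flow changes, only `checkout` needs updating, and every journey that uses it picks up the change.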

Writing test cases for scalability testing

When it comes to the test cases themselves, you can use the following approach to design them.

What to consider when creating scalability test cases?

Before diving into the writing part, consider these aspects.

- System Under Test (SUT): Clearly define the application or system you’re testing, including its functionalities and expected usage patterns.

- Scalability goals: Determine what aspects of scalability you want to assess (horizontal, vertical, or a combination). Are you focusing on handling more users, increased data volume, or overall workload?

- User scenarios: Identify realistic user actions and behaviors that will be simulated during the test. Consider typical user journeys and peak usage periods.

- Performance metrics: Define the key performance metrics you’ll monitor, such as response times, throughput, and resource utilization (CPU, memory, network).

- Load levels: Specify the different load levels you’ll simulate during the test. This could involve gradually increasing the number of concurrent users, data volume, or transaction rate.

- Pass/fail criteria: Establish clear criteria for determining whether a test case has passed or failed. This might involve setting acceptable thresholds for response times or resource usage under load.
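Writing load levels and pass/fail criteria down as data, rather than leaving them implicit, makes them easy to review and reuse. A minimal Python sketch with made-up thresholds and measurements; `PassFailCriteria` and `evaluate` are illustrative names, and suitable limits depend entirely on your own requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PassFailCriteria:
    max_p95_ms: float
    max_error_rate: float
    max_cpu_pct: float


# Hypothetical load levels (concurrent users) and thresholds.
LOAD_LEVELS = [100, 250, 500]
CRITERIA = PassFailCriteria(max_p95_ms=300, max_error_rate=0.01, max_cpu_pct=80)


def evaluate(measured, criteria):
    """Return a list of failure reasons; an empty list means the level passed."""
    failures = []
    if measured["p95_ms"] > criteria.max_p95_ms:
        failures.append(f"p95 {measured['p95_ms']} ms > {criteria.max_p95_ms} ms")
    if measured["error_rate"] > criteria.max_error_rate:
        failures.append(f"error rate {measured['error_rate']:.2%} > "
                        f"{criteria.max_error_rate:.2%}")
    if measured["cpu_pct"] > criteria.max_cpu_pct:
        failures.append(f"CPU {measured['cpu_pct']}% > {criteria.max_cpu_pct}%")
    return failures


# Hypothetical measurements for each load level.
measurements = {
    100: {"p95_ms": 180, "error_rate": 0.001, "cpu_pct": 45},
    250: {"p95_ms": 240, "error_rate": 0.004, "cpu_pct": 68},
    500: {"p95_ms": 410, "error_rate": 0.02, "cpu_pct": 93},
}
for users in LOAD_LEVELS:
    problems = evaluate(measurements[users], CRITERIA)
    print(users, "PASS" if not problems else "FAIL: " + "; ".join(problems))
```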

How to write scalability test cases?

Now that you’ve covered the prerequisites, let’s look at how to write scalability test cases:

- Start with user journeys: Map out the core user journeys within your application. Break these journeys down into specific steps a user would take (e.g., log in, search for a product, add to cart, check out). Read: UX Testing: What, Why, How, with Examples.

- Define test scenarios: Based on the user journeys, design test scenarios that simulate real-world user behavior under varying load conditions. These scenarios should represent typical and peak usage patterns.

- Structure your test cases: Use a clear and consistent format for your test cases. Read: How to Write Test Cases? (+ Detailed Examples). Test cases could include:

- Test case ID: Unique identifier for each test case.

- Description: Clear description of the user journey or functionality being tested.

- Pre-conditions: Any setup steps required before the test can run (e.g., user login, data population).

- Test steps: Detailed steps outlining the user actions simulated during the test.

- Pass/fail criteria: Define the conditions for successful test completion (e.g., acceptable response times, resource utilization levels).

- Expected results: Specify the anticipated outcomes of the test under normal and high load.

- Leverage data-driven testing: Utilize external data files (CSV, JSON) to store test data (usernames, passwords, product IDs) for your test cases. This allows for easy variation in test data during execution and avoids repetitive test script modification. Read: How to do data-driven testing in testRigor.

- Consider gradual load increase: Design your test scenarios to gradually increase the load on the system (e.g., start with a low number of concurrent users and progressively increase). This helps pinpoint the load level where performance starts to degrade.

- Incorporate error handling: Include steps to handle potential errors or unexpected behaviors that might occur during the test. This ensures the test continues execution even if it encounters issues.

- Maintain and update test cases: As your system evolves, regularly review and update your scalability test cases to reflect new functionalities or changes in user behavior.
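The test case structure and data-driven approach described above can be sketched in Python as a simple record plus a CSV file of per-user data. The field names mirror the list above; the test ID, values, and CSV columns are hypothetical examples.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class ScalabilityTestCase:
    test_id: str
    description: str
    preconditions: list
    steps: list
    pass_fail_criteria: dict
    expected_results: str


checkout_case = ScalabilityTestCase(
    test_id="SCAL-001",
    description="Checkout journey under increasing concurrent users",
    preconditions=["Test users exist", "Catalog contains test products"],
    steps=["Log in", "Search for a product", "Add it to the cart", "Check out"],
    pass_fail_criteria={"p95_ms": 300, "error_rate": 0.01},
    expected_results="p95 stays under 300 ms up to 500 concurrent users",
)

# Test data kept separate from the test logic. Inline here for the example;
# in practice it would be read from a file opened with newline="".
USERS_CSV = """username,product_id
alice,sku-101
bob,sku-202
"""


def load_test_data(csv_text):
    """Parse CSV test data into one dict per virtual user."""
    return list(csv.DictReader(io.StringIO(csv_text)))


for row in load_test_data(USERS_CSV):
    print(checkout_case.test_id, row["username"], row["product_id"])
```

Adding more virtual users then means adding rows to the data file, not editing the test itself.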

Challenges with automated scalability testing

Though automated scalability testing can be a great step toward achieving your QA goals, it still poses challenges that need to be addressed.

- Initial investment: Setting up the infrastructure and tools for automated scalability testing requires an upfront investment. This includes costs for load testing tools, possibly new hardware or cloud resources, and training for your team.

- Test script maintenance: Automated tests rely on scripts that define the test scenarios and load parameters. As your system evolves with new features or functionalities, these scripts need to be maintained and updated to reflect those changes. This ongoing maintenance can be time-consuming.

- Expertise required: Some level of scripting expertise or knowledge of the chosen automation tools is necessary to effectively create and maintain automated scalability tests. This might require additional training for your QA team or hiring personnel with the specific skillset.

- Complexity of test design: Designing effective scalability test scenarios that accurately represent real-world user behavior under varying load conditions can be complex. It requires a good understanding of the system, user journeys, and performance metrics.

- Data management: Scalability testing often involves simulating a large number of users, so managing test data such as user credentials and product information efficiently becomes crucial. Without proper data management strategies, tests can become cumbersome and prone to errors.

- Identifying the right level of automation: While extensive automation is desirable, it’s important to strike a balance. Over-reliance on automation can lead to brittle tests that break easily with minor changes.

- Simulating realistic load: Creating realistic load scenarios that accurately reflect real-world user behavior can be challenging. It’s crucial to consider factors like user think time, concurrent actions, and network conditions.

- Debugging automated tests: If an automated test fails, troubleshooting the root cause can be more challenging compared to manual testing.
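For the challenge of simulating realistic load, a useful rule of thumb from queueing theory is the interactive response time law, a form of Little’s law: N = X × (R + Z), where N is the number of concurrent users, X is throughput, R is response time, and Z is think time. It estimates how many virtual users you need to produce a target throughput once think time is included. The Python sketch below applies it and samples randomized think times; the exponential distribution and all figures are assumptions for illustration, and the law only holds for a system in steady state.

```python
import math
import random


def users_needed(target_rps, response_time_s, think_time_s):
    """Interactive response time law: N = X * (R + Z), rounded up."""
    return math.ceil(target_rps * (response_time_s + think_time_s))


def sample_think_time(mean_s, rng):
    """Randomized pause between user actions (exponential with mean mean_s)."""
    return rng.expovariate(1 / mean_s)


# Hypothetical target: 50 req/s with 0.5 s responses and 5.5 s average think time.
print(users_needed(50, 0.5, 5.5))  # 300 virtual users

rng = random.Random(42)  # seeded so runs are reproducible
pauses = [sample_think_time(5.5, rng) for _ in range(5)]
print([round(p, 2) for p in pauses])
```

Without think time, the same 50 requests per second would need only 25 users, each hammering the system nonstop, which is a far less realistic traffic pattern.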

Solutions to scalability testing challenges

You can use these solutions to deal with the problems faced with scalability testing:

- Modular and reusable test components: Focus on creating well-structured and modular test components that can be easily reused across different test scenarios. This minimizes maintenance effort when changes are required.

- Invest in training and skills development: Provide training opportunities for your team members to build their knowledge and expertise in test automation tools and best practices.

- Prioritize core functionalities: Start by automating critical test cases that focus on core functionalities of the SUT. Gradually expand automation coverage as your expertise and comfort level increase.

- Implement data-driven testing: Utilize data-driven testing techniques to separate test logic from test data. This allows for easier variation in user data during test execution and reduces the need for frequent script modifications.

- Focus on maintainability: Write clear, well-documented test scripts with proper commenting to enhance maintainability. Utilize version control systems to track changes and facilitate collaboration. Read: How to Write Maintainable Test Scripts: Tips and Tricks.

- Adopt an iterative approach: Start with a basic level of automation and gradually improve your test suite over time. Continuously evaluate the effectiveness of your automated tests and refine them as needed.

- Start small and scale up: Don’t try to automate everything at once. Begin by automating core test scenarios and gradually expand your automation coverage as you gain experience and confidence. Learn: What is Test Scalability?

- Evaluate costs vs. benefits: Carefully assess the cost of automation tools and potential training needs against the long-term benefits of faster testing cycles, improved consistency, and quicker feedback on scalability issues.

- Focus on reusability: When creating automated test scripts, prioritize modularity and reusability. Break down complex functionalities into smaller, reusable components. This simplifies maintenance as changes only need to be made to specific modules.

- Utilize CI/CD integration: Integrate your automated scalability tests into your CI/CD pipeline. This allows for the automatic execution of tests after every code commit or deployment, ensuring continuous monitoring of scalability throughout the development lifecycle.

- Log and monitor: Implement robust logging and monitoring mechanisms within your automated tests. Detailed logs can provide valuable insights into test failures, aiding in troubleshooting. Read: Understanding Test Monitoring and Test Control.
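Most CI/CD systems treat a non-zero exit code as a failed step, so one simple way to wire scalability tests into a pipeline is a small gate that reads the run’s summary and returns an exit code. A minimal Python sketch, assuming the load testing tool exports a JSON summary with `p95_ms` and `error_rate` fields (a hypothetical format; adapt the keys to what your tool actually produces).

```python
import json


def ci_gate(summary_json, max_p95_ms, max_error_rate):
    """Return a process exit code: 0 if the run meets the limits, 1 otherwise.

    summary_json is assumed to contain "p95_ms" and "error_rate" keys.
    """
    summary = json.loads(summary_json)
    problems = []
    if summary["p95_ms"] > max_p95_ms:
        problems.append(f"p95 {summary['p95_ms']} ms exceeds {max_p95_ms} ms")
    if summary["error_rate"] > max_error_rate:
        problems.append(f"error rate {summary['error_rate']} exceeds {max_error_rate}")
    for problem in problems:
        # Logged so the pipeline output explains why the stage failed.
        print("SCALABILITY GATE FAILED:", problem)
    return 1 if problems else 0


print(ci_gate('{"p95_ms": 250, "error_rate": 0.001}', max_p95_ms=300, max_error_rate=0.01))
```

In a pipeline job (Jenkins, GitLab CI, and so on), a small wrapper script would read the results file and call `sys.exit(ci_gate(...))`, so a run that breaches the limits fails the stage.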

Tips to improve automated scalability testing

Besides the solutions mentioned above, here are some more tips to help you better your scalability testing automation efforts:

- Leverage scalable testing tools: Many load testing tools offer built-in automation features. Look for tools that allow scripting test scenarios, parameterization of data, and scheduling automated test runs. Popular options include:

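- JMeter (open-source): Lets you build load tests as reusable test plans, extend them with scripting (for example, Groovy in JSR223 elements), and run them from the command line in non-GUI mode for automation.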

- LoadRunner (commercial): Provides a comprehensive solution for automated load and performance testing.

- Implement test automation frameworks: Consider using a dedicated test automation framework to automate the core user actions within your test scenarios. These frameworks can integrate with load testing tools for a cohesive testing process. A good pick for you could be testRigor, an AI-based tool that lets you test across multiple platforms and browsers. Being a cloud-based testing platform, it can scale with your testing needs. It uses generative AI to make test creation easier, so you can write test cases in plain English. It also drastically reduces your test maintenance costs. Along with a rich feature set, it integrates well with other tools and platforms that offer services like CI/CD, test case management, databases, and more.

- Utilize parameterization techniques: Leverage parameterization to create reusable test scripts that can be dynamically adjusted for different load levels. This avoids repetitive script modifications for each test run. For example, parameterize the number of virtual users or the data used in test cases to easily scale the load during automated testing.

- Utilize cloud-based resources: Cloud platforms like AWS or Azure offer scalable resources that can be easily provisioned and de-provisioned for automated testing. This eliminates the need to manage physical infrastructure for scalability testing.

- Utilize version control for test scripts: Maintain your automated test scripts and configurations in a version control system like Git. This facilitates tracking changes, collaboration, and rolling back to previous versions if necessary. Some test automation tools might also have built-in version control capabilities, eliminating the need to rely on other platforms for the same. Read: How to Do Version Controlling in Test Automation.
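As an illustration of the parameterization tip, the Python sketch below reads load parameters from command-line flags (falling back to an environment variable), so the same script can run a 50-user smoke test or a 500-user scalability run without edits. All flag names, the `LOAD_USERS` variable, and the defaults are hypothetical; load testing tools typically offer their own mechanism for the same purpose.

```python
import argparse
import os


def parse_load_args(argv=None):
    """Parse load parameters so one script can run at any load level.

    LOAD_USERS is a hypothetical environment variable a CI job could set.
    """
    parser = argparse.ArgumentParser(description="Scalability test runner (sketch)")
    parser.add_argument("--users", type=int,
                        default=int(os.environ.get("LOAD_USERS", "50")),
                        help="peak number of virtual users")
    parser.add_argument("--step", type=int, default=50,
                        help="users added at each ramp step")
    parser.add_argument("--step-duration", type=int, default=300,
                        help="seconds to hold each step")
    parser.add_argument("--data-file", default="users.csv",
                        help="CSV file with per-user test data")
    return parser.parse_args(argv)


args = parse_load_args(["--users", "500", "--step", "100"])
print(args.users, args.step, args.step_duration, args.data_file)
```

Keeping the values outside the script also means they can be versioned alongside it and changed per pipeline run.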

Final thoughts

Scalability is no longer a luxury – it’s a necessity for businesses to thrive in today’s fast-paced and data-driven world. It ensures they can handle growth, adapt to change, and deliver a positive user experience even under pressure.

Take scalability testing a step further and use automation. This can significantly enhance the efficiency and effectiveness of your testing process. By carefully planning, selecting the right tools, and implementing automation best practices, you can ensure your system is well-prepared to handle future growth and maintain optimal performance under varying loads.

FAQs

Scalability testing is important because it ensures that an application can handle growth in users, transactions, and data without performance degradation. It also helps identify potential bottlenecks, optimize resource utilization, and plan for future capacity needs.

Scalability testing can be integrated into a CI/CD pipeline using tools like Jenkins, GitLab CI, or Travis CI. Test scripts are executed automatically during the deployment process, and performance metrics are monitored and analyzed as part of the continuous integration and deployment workflow.

Analyze key metrics such as response times, throughput, and resource utilization to identify performance trends and bottlenecks. Compare results against predefined benchmarks to determine if the system meets scalability requirements. Use automated analysis tools to generate detailed reports and provide insights for optimization.
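Comparing against predefined benchmarks can be as simple as diffing each run against a stored baseline run. A minimal Python sketch with hypothetical metric names and a 10% tolerance chosen only for illustration.

```python
# Metrics where a higher value is worse; for everything else
# (e.g. throughput) a lower value is worse.
LOWER_IS_BETTER = {"p95_ms", "error_rate", "cpu_pct"}


def find_regressions(baseline, current, tolerance=0.10):
    """Return {metric: relative_change} for metrics that got worse by more
    than `tolerance` (0.10 = 10%) compared with the baseline run."""
    regressions = {}
    for name, base in baseline.items():
        if name not in current or base == 0:
            continue  # nothing meaningful to compare against
        change = (current[name] - base) / base
        worse = change > tolerance if name in LOWER_IS_BETTER else change < -tolerance
        if worse:
            regressions[name] = round(change, 3)
    return regressions


# Hypothetical benchmark run versus today's run.
baseline = {"p95_ms": 200, "throughput_rps": 1000, "error_rate": 0.01}
current = {"p95_ms": 250, "throughput_rps": 980, "error_rate": 0.0105}
print(find_regressions(baseline, current))
```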

Scalability testing provides insights into the system’s capacity limits and performance under increased loads. This information helps in making informed decisions about resource allocation, infrastructure upgrades, and architectural changes needed to support future growth.

Monitoring is critical for collecting real-time performance metrics and identifying issues during scalability testing. Automated monitoring tools track key metrics and generate alerts for anomalies, helping to ensure that the system performs well as it scales.
