What is Autonomous Testing?


Being autonomous means to operate independently without human intervention.

This sounds too good to be true for machines. Yet, there are examples of autonomous machines, such as novel autonomous vehicles or simple autonomous vacuum cleaners.

If we look at the evolution of the vacuum cleaner, traditional vacuum cleaners were operated by hand, requiring physical effort. Automated upright vacuum cleaners then introduced motorized cleaning heads, automating the cleaning process. This brings us to autonomous robot vacuum cleaners that can navigate through spaces, avoid obstacles, and clean floors independently.

Autonomy is also being applied to various industry processes, even software testing.

Some History

End-to-end regression testing, in particular web and mobile testing, has historically been done in three ways:

- Manual testing: Someone would write descriptions in “test cases” of what and how to test, usually in an Excel sheet. Then when a release is ready, a group of humans calling themselves “testers” would read these scripts and conduct the “test”. This usually would result in verifying that the functionality described in that “test case” works according to the specification in that “test case”.

- Automation testing: Similar to above, humans would write code to “automate” those “test cases”. So there is no need (supposedly) for humans during the “test” phase because it is now executed automatically. The prime examples of frameworks that humans use to write these cases are Selenium, Appium, Cucumber, etc.

- Record-and-play: Some companies went even further and created “recorders” that testers could use to record their actions and “kind of” produce a test. I say “kind of” because you’d most probably need to write a verification part yourself.

Although these techniques help with achieving test coverage and a low defect count, they didn’t move the needle much. Automation testing and record-and-play both run into the following issues when used for regression testing:

A challenge to create

For automation, you basically spend engineering time creating tests, which is expensive. Record and play costs almost as much, since you still need to create the validations yourself and need an expert who is familiar with the system. In both cases, the “test case” descriptions usually have to be written in advance.

Support nightmare

Many changes to the system under test will break these tests, and they might also have bugs. Basically, it is a law of diminishing returns—the more tests you have, the more time the QA team spends supporting them instead of doing something productive, including writing more tests.

Stability issues

There are stability issues of two types:

- In many cases, both automation and record-and-play tests rely on “XPath”—the precise location of elements. Any change in the structure will break it.

- The infrastructure is inherently unstable. Timeouts and browser/emulator crashes are normal. The tests usually don’t account for them and die at random moments, causing spurious test “failures”.
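
To make the second point concrete, here is a minimal sketch of a test runner that retries only when the infrastructure misbehaves and lets genuine failures through. The `InfrastructureError` class and the `run_with_retry` helper are hypothetical names invented for this illustration; they do not come from any particular framework.

```python
import time


class InfrastructureError(Exception):
    """Hypothetical marker for timeouts, browser crashes, and similar noise."""


def run_with_retry(test_fn, max_attempts=3, delay_seconds=0.0):
    """Run test_fn, retrying only when it raises InfrastructureError.

    An AssertionError (a genuine test failure) is not caught, so it
    propagates immediately instead of being hidden by retries.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return test_fn()
        except InfrastructureError:
            if attempt == max_attempts:
                raise  # still unstable after the last attempt
            time.sleep(delay_seconds)


# Simulated flaky test: the "browser" crashes twice, then the test passes.
calls = {"count": 0}


def flaky_login_test():
    calls["count"] += 1
    if calls["count"] < 3:
        raise InfrastructureError("browser crashed")
    return "passed"


result = run_with_retry(flaky_login_test)
print(result, "after", calls["count"], "attempts")
```

The design choice worth noting is the split between exception types: retrying on every error would also mask real defects.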

But, most importantly, it all pales in comparison with the amount of time it takes to manually test the software. This brings us to the next ‘it thing’ in software testing – autonomous testing.

What is autonomous testing?

Imagine if the entire software testing process did not require manual intervention. That is what autonomous testing aims to achieve. It goes beyond automation by introducing self-learning and decision-making capabilities. The machine will take care of all the steps involved in software testing, like creating test cases, executing them, and maintaining them. The machine here is software powered by Artificial Intelligence (AI) and Machine Learning (ML), taking software testing to the next level.

For example…

Imagine a web application. Traditionally, a tester would manually create test cases, execute them using automation tools, and analyze the results. In autonomous testing, the system could:

- Analyze the application’s code and UI to identify potential test cases.

- Generate test cases for different user scenarios, such as login, search, and purchase.

- Execute these test cases automatically, including data-driven testing with various inputs.

- If a test fails, the system might automatically retry the test, isolate the issue, or even suggest code changes to fix the problem.

- Continuously learn from test results to improve test case coverage and efficiency.

This makes autonomous testing highly beneficial to testers.

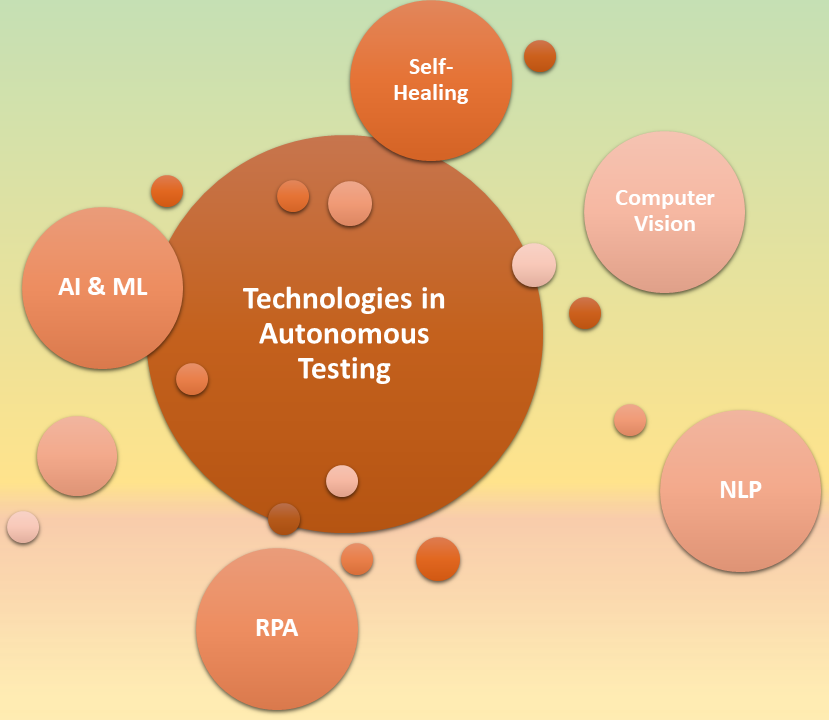

Common technologies used in autonomous testing

The following technologies are commonly employed to achieve autonomy in testing:

- Artificial Intelligence (AI): For learning from data and making decisions.

- Machine Learning (ML): This is used for pattern recognition and predictive analytics and to train models for test case generation, defect prediction, and test optimization.

- Natural Language Processing (NLP): This is used to understand and generate test cases based on requirements.

- Robotic Process Automation (RPA): For automating repetitive tasks.

- Continuous Integration/Continuous Deployment (CI/CD): This is for integrating and deploying code changes automatically.

- Computer vision: Used for image-based testing and UI element recognition. Read how to do visual testing.

- Self-healing algorithms: For maintaining and updating test scripts dynamically.

What aspects of software testing are made autonomous?

Autonomous testing takes every single aspect of software testing and tries to mimic how a human tester would go about it.

Test case creation

The manual approach: The first step would be to create test cases. A human would go through the user stories, requirements, and code (depending on the type of testing) to come up with suitable test cases.

The autonomous approach: Here’s how these systems do it:

- Data collection: These systems collect data from various sources, including application logs, user interactions, past test results, code repositories, user requirements, and issue trackers.

- Analysis: Using AI and ML algorithms, the collected data is analyzed to identify patterns, detect anomalies, and understand application behavior.

- Understanding requirements: NLP techniques are used to parse and understand requirements, user stories, and documentation.

- Modeling user behavior: AI models are trained on user interaction data to simulate real user behavior and generate realistic test cases.

- Automated script generation: Based on the analysis, the system automatically generates test scripts using test automation frameworks.

- Data-driven generation: The system creates test cases by combining different data sets to cover various input combinations.
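
As a rough illustration of the data-driven generation step, the sketch below builds one test case per combination of input values. The parameter names and values are made up for the example, and a real system would likely prune the combinations rather than run all of them.

```python
from itertools import product


def generate_cases(parameters):
    """Yield one test case (a dict) per combination of parameter values."""
    names = list(parameters)
    for values in product(*(parameters[name] for name in names)):
        yield dict(zip(names, values))


# Hypothetical inputs for a login scenario.
login_parameters = {
    "username": ["valid_user", "", "unknown_user"],
    "password": ["correct", "wrong"],
    "browser": ["chrome", "firefox"],
}

cases = list(generate_cases(login_parameters))
print(len(cases))  # 3 * 2 * 2 = 12 combinations
print(cases[0])
```

The full Cartesian product grows quickly as parameters are added, which is one reason pairwise or risk-based selection is often used instead.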

Test execution

The manual approach: A tester would set up the test environment and run every test case in it. Executing every test case manually is not only cumbersome but also time-consuming and prone to human error.

The autonomous approach: This is a way to reduce human effort drastically. The following techniques are used to make the process self-reliant.

- Parallel execution: Multiple tests can run simultaneously to accelerate the process. Read: Parallel Testing: A Quick Guide to Speed in Testing.

- Self-healing: The system can automatically adjust test scripts to adapt to changes in the application.

- Virtualization: Virtual environments are created to isolate tests and prevent interference.

- Environment management: The system can dynamically provision and manage test environments, ensuring consistency and scalability.

- Data preparation: Test data is generated or extracted from existing sources.

- Test orchestration: Tests are executed in a specific order or based on dependencies.
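
Test orchestration and parallel execution can be combined: if the dependencies between tests are known, tests can be grouped into batches where everything in a batch is safe to run at the same time. The sketch below assumes a hand-written dependency map with invented test names and uses the standard library's `graphlib` module (Python 3.9+) to compute the batches.

```python
from graphlib import TopologicalSorter


def execution_batches(dependencies):
    """Group tests into ordered batches.

    `dependencies` maps each test to the set of tests that must finish
    first. Tests in the same batch do not depend on each other, so they
    could be run in parallel.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


# Hypothetical dependency map for an online shop.
dependencies = {
    "test_login": set(),
    "test_search": {"test_login"},
    "test_profile": {"test_login"},
    "test_add_to_cart": {"test_search"},
    "test_checkout": {"test_add_to_cart"},
}

batches = execution_batches(dependencies)
for number, batch in enumerate(batches, start=1):
    print(number, batch)
```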

Test analysis

The manual approach: Imagine reviewing every test outcome after having laboriously executed them. Again, there would be a high chance of human errors.

The autonomous approach: Instead of the manual effort, allowing AI to analyze the test outcomes will take the burden off you. Some of the common activities that happen here are:

- Pattern recognition: ML models analyze test results to identify patterns and correlations that indicate potential defects.

- Anomaly detection: AI algorithms can detect anomalies in test outcomes, flagging them for further investigation.

- Defect prediction: AI can analyze test results to identify potential defects and prioritize them for investigation.

- Root cause analysis: By correlating test failures with code changes or system logs, the system can automatically identify the root cause of issues.
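
Anomaly detection does not have to start with a trained model. As a simple stand-in for the AI algorithms mentioned above, the sketch below flags tests whose latest run time is far from their historical average, using a z-score. The threshold of 3 standard deviations and the sample durations are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_anomalies(history, latest, threshold=3.0):
    """Return tests whose latest duration deviates strongly from history.

    `history` maps test name -> list of past durations in seconds.
    `latest` maps test name -> duration of the most recent run.
    """
    anomalies = []
    for name, durations in history.items():
        if name not in latest or len(durations) < 2:
            continue  # not enough data to judge
        sigma = stdev(durations)
        if sigma == 0:
            continue  # no variation in history; a z-score is undefined
        z_score = abs(latest[name] - mean(durations)) / sigma
        if z_score > threshold:
            anomalies.append(name)
    return anomalies


# Made-up durations: test_search suddenly takes three times as long.
history = {
    "test_login": [1.0, 1.1, 0.9, 1.0, 1.05],
    "test_search": [2.0, 2.1, 1.9, 2.0, 2.05],
}
latest = {"test_login": 1.02, "test_search": 6.5}

anomalies = find_anomalies(history, latest)
print(anomalies)
```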

Test maintenance and optimization

The manual approach: Based on the test analysis, you might need to update your test suites. This test maintenance tends to increase as your test cases increase, and doing it manually only leads to more errors.

The autonomous approach: Instead, leveraging AI to maintain and optimize your test suites is a good idea.

- Self-healing: AI can detect changes in the application and update test cases automatically to reflect these changes.

- Coverage analysis: The system analyzes code and test coverage to identify gaps and redundancies, optimizing the test suite for maximum efficiency.

- Prioritization: Based on risk assessment and historical data, AI prioritizes test cases that are most likely to uncover critical defects. Read: Risk-based Testing: A Strategic Approach to QA.

- Test reduction: Redundant or low-value tests are identified and eliminated to streamline the testing process.
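
The prioritization idea can be sketched with a very simple risk score: the historical failure rate of a test, plus a bonus when the test covers code that changed in the current release. The `HistoryRecord` class, the weight of 0.5, and the sample data are all hypothetical; a production system would presumably learn such weights from data rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class HistoryRecord:
    name: str
    runs: int
    failures: int
    covers_changed_code: bool


def risk_score(record, change_weight=0.5):
    """Failure rate plus a bonus for tests touching changed code.

    Tests with no history get the maximum failure rate (1.0) so that
    new, unproven tests run first.
    """
    failure_rate = record.failures / record.runs if record.runs else 1.0
    bonus = change_weight if record.covers_changed_code else 0.0
    return failure_rate + bonus


def prioritize(records):
    """Return records ordered from highest to lowest risk."""
    return sorted(records, key=risk_score, reverse=True)


records = [
    HistoryRecord("test_login", runs=100, failures=2, covers_changed_code=False),
    HistoryRecord("test_checkout", runs=100, failures=20, covers_changed_code=True),
    HistoryRecord("test_search", runs=50, failures=5, covers_changed_code=False),
    HistoryRecord("test_new_feature", runs=0, failures=0, covers_changed_code=True),
]

ordered_names = [record.name for record in prioritize(records)]
print(ordered_names)
```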

Test reporting

The manual approach: Test reporting is one of the tasks that might require extensive manual review if done by a human. The tester will have to go through various testing artifacts and then compile a report.

The autonomous approach: It is better to leave test reporting to AI. It can generate detailed reports and insights from test execution data, highlighting trends and areas for improvement. You’ll see the following being used:

- Automated Reporting: AI tools generate detailed reports on test execution, coverage, and defects, providing actionable insights in a clear and concise manner.

- Dashboards: Interactive dashboards offer real-time visibility into the testing process, highlighting key metrics and trends.

- Natural Language Generation (NLG): This technology converts complex data into human-readable reports, making it easier for stakeholders to understand the results.
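
To give a feel for what turning test data into readable text means, here is a deliberately simple, template-based sketch. Real NLG components typically rely on language models rather than fixed templates; the `summarize` function and the result format below are assumptions made for this example.

```python
def summarize(results):
    """Turn a mapping of test name -> 'passed'/'failed' into one sentence or two."""
    total = len(results)
    if total == 0:
        return "No tests were run."
    failed = sorted(name for name, status in results.items() if status == "failed")
    passed = total - len(failed)
    summary = f"{passed} of {total} tests passed ({100 * passed / total:.0f}%)."
    if failed:
        summary += " Failed: " + ", ".join(failed) + "."
    return summary


results = {
    "test_login": "passed",
    "test_search": "failed",
    "test_checkout": "passed",
    "test_profile": "passed",
}

report = summarize(results)
print(report)
```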

Stages of autonomous testing

Autonomous testing is a progressive journey, evolving from manual testing to complete self-sufficiency. Various factors, like the complexity of the application, available resources, and organizational goals, determine how far one goes. You can always figure out what level works best for you.

Here’s a condensed view of each stage and its characteristics.

Stage 1: Manual Testing

- Human-centric: All testing activities are performed manually by human testers.

- No automation: No automation tools or scripts are used.

Stage 2: Assisted Automation

- Human-assisted: Testers use automation tools to support test execution and analysis.

- Script-based: Test scripts are created and maintained manually.

Stage 3: Partial Automation

- Shared responsibility: Humans and AI collaborate on testing activities.

- AI assistance: AI agents assist in test case generation, execution, and analysis.

Stage 4: Integrated Automation

- AI-driven: AI takes over most testing activities, with human oversight.

- Self-healing: Tests can automatically adapt to changes in the application.

Stage 5: Intelligent Automation

- Autonomous testing: AI independently creates, executes, and analyzes tests.

- Continuous learning: The system learns from test results to improve over time.

Stage 6: Full Autonomy

- Self-sufficient: Testing is entirely automated without human intervention.

- Predictive analytics: The system can predict potential issues before they occur.

We are yet to reach this ultimate goal of fully autonomous testing. However, a few tools can help you achieve a high degree of autonomy in testing.

How is autonomous testing different from automated testing?

Automated testing enhances human capabilities, while autonomous testing aims to replace human intervention with intelligent machines. The two techniques differ significantly in their levels of independence and intelligence.

Here’s a tabular representation of the differences between the two.

| Feature | Automated Testing | Autonomous Testing |
|---|---|---|
| Definition | Involves using software tools to execute test cases and compare actual results with expected results | Leverages AI and ML to create, execute, and analyze test cases without human intervention |
| Focus | Primarily on test execution and verification | On the entire testing lifecycle, including test case generation, execution, analysis, and optimization |
| Test case creation | Manual | AI-generated |
| Test execution | Automated | Automated and self-healing |
| Test analysis | Manual | AI-driven analysis and optimization |
| Human involvement | Requires significant human intervention for test case creation, script maintenance, and result analysis | Minimal human oversight, primarily for defining test objectives and reviewing results |
| Intelligence level | Limited intelligence, relying on predefined scripts and rules | High level of intelligence, capable of learning, adapting, and making decisions independently |
| Decision making | Based on predefined scripts | Based on AI algorithms and learning |

How to transition to autonomous testing?

Before going to autonomy, you should have automated testing in place. This guide explains how to transition from manual to automated testing.

Once you have automated testing, you can move toward autonomous testing with the following steps:

- Select appropriate tools: Choose tools that support automation, AI, and integration with your development environment.

- Identify automation opportunities: Focus on repetitive and time-consuming test cases.

- Introduce AI and ML: Incorporate AI capabilities into your testing toolset. You can build AI models for test case generation, defect prediction, and test optimization. Use comprehensive data on test cases, execution results, and defects to train your models.

- Implement self-healing mechanisms: Enable test cases to adapt to changes in the application.

- Continuous learning: Ensure the system learns from test results and improves over time.

- Monitor and maintain: Regularly review and update the autonomous testing system.
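
Of these steps, self-healing is the easiest to picture in code. The sketch below keeps several alternative locators per element, tries them in order, and promotes whichever one worked so that later runs try it first. It is a toy model: the page is a plain dictionary rather than a real browser, and the locator strings are invented. It is not a description of how testRigor or any other tool implements self-healing.

```python
def self_healing_find(page, locators):
    """Find an element using the first locator that still matches.

    `page` is a stand-in for a real page: a dict mapping locator -> element.
    `locators` is an ordered list of alternatives; when a fallback matches,
    it is moved to the front of the list (the "healing" step).
    """
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            if locator != locators[0]:
                locators.remove(locator)
                locators.insert(0, locator)
            return element
    raise LookupError(f"No locator matched: {locators}")


# The button's id changed between releases, but its visible text did not.
page_before = {"id=submit": "<button>", "text=Sign in": "<button>"}
page_after = {"id=login-btn": "<button>", "text=Sign in": "<button>"}

sign_in_locators = ["id=submit", "text=Sign in"]

self_healing_find(page_before, sign_in_locators)  # matches on the id
element = self_healing_find(page_after, sign_in_locators)  # falls back to text
print(element, sign_in_locators)
```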

There’s an easier way to adopt autonomous testing: using testing tools that offer AI implementations like testRigor.

Autonomous testing with testRigor

If you are looking for autonomous testing, testRigor is the closest thing to it. This tool uses AI and ML to automate test creation, execution, and maintenance. Let’s see how.

testRigor lets you generate, record, or write test scripts in plain English, similar to how human testers would write manual test cases. You can introduce a level of autonomy into this process by using the tool’s generative AI capabilities to create tests: provide a thorough description of the software under test in the app description section, and the tool will create a series of test cases in plain English. You can then modify these test cases as required, add more tests to the suite manually, or use the record-and-playback feature.

Test runs in this tool are very stable. testRigor utilizes AI to handle any flakiness that may arise due to unstable environments. Since this tool does not require testers to mention implementation details of UI elements like XPaths and CSS selectors, any changes in them do not drastically affect the test run.

AI ensures that the element is found. With such stability, test maintenance is reduced to a bare minimum. testRigor also provides features like self-healing, which adapts tests to specification changes and makes test execution and maintenance easier.

The future of autonomous testing

While we’re making rapid strides in AI and automation, achieving complete autonomous testing remains a challenge. Current tools offer varying degrees of autonomy, excelling in specific areas like test case generation, self-healing, or test analysis.

Why is complete autonomy difficult?

You can attribute difficulty in achieving complete autonomy to the following reasons:

- Complex applications: Modern software systems are highly complex, with intricate interactions and edge cases that require human judgment.

- Contextual understanding: Truly understanding the application’s purpose and user needs often demands human interpretation.

- Ethical considerations: Autonomous systems need to be programmed with ethical guidelines to ensure unbiased and fair testing.

- Continuous evolution: Software is constantly changing, requiring adaptability and learning, which is still an evolving field for AI.

Despite these challenges, the industry is making significant progress. While we haven’t reached the pinnacle of autonomous testing yet, the tools and techniques available today offer substantial benefits and are steadily moving us closer to that goal.

Frequently Asked Questions (FAQs)

Aspects such as test case generation, test execution, test maintenance, defect detection and analysis, test optimization, and reporting and insights become autonomous.

AI contributes by learning from data, making predictions, generating test cases, adapting to changes, detecting defects, optimizing test coverage, and providing actionable insights.

Yes, autonomous testing tools can integrate with existing CI/CD pipelines, version control systems, and project management tools to support seamless testing throughout the development lifecycle.

While autonomous testing can benefit many types of applications, its effectiveness may vary depending on factors such as the complexity of the application, the availability of historical data, and the specific testing requirements.

Challenges include the initial setup and integration, the need for quality data for training AI models, potential resistance to change from team members, and the requirement for ongoing monitoring and adjustment of the testing process.
