When to Stop Testing: How Much Testing is Enough?

“Keep testing till you get 100% coverage.”

“Test till you don’t find any bugs.”

“Test until every single line of code has been executed under every possible condition.”

“Testing should continue until all stakeholders are completely satisfied with the software.”

You’ve probably heard statements like these, or even said them yourself. Quite often, we say or think them without much thought.

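The idea behind them is to define a finish line for testing. But when said without much thought, these statements can be misleading and give testing a bad reputation. Exhaustive testing is impossible for all but trivial programs, and even 100% code coverage doesn’t prove the absence of bugs.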

So how do we decide, objectively, when to stop testing? We’re constantly told when to start testing, so it’s only fair that we also know when to stop.

Let’s explore this idea in depth.

When to Start Testing?

You should start testing as soon as you have something to test!

In the simplest terms, testing should begin early in the development process, not just at the end. Modern approaches like Agile and shift-left testing emphasize this further.

Testing starts and continues throughout the software development lifecycle.

- Start Testing Early: Begin testing even when parts of the project are still being built. This is often called shift-left testing, meaning you move testing earlier in the process. This helps catch problems early before they grow into bigger issues.

- Test as You Build: As developers write code or create features, they can test small pieces to make sure they work correctly. These are typically unit tests, which check individual parts of the code. Popular approaches include test-driven development (TDD) and behavior-driven development (BDD).

- Test Features as They’re Completed: Once a feature or a module is done, test it to ensure it meets the requirements and works as expected. This is when you’ll do things like integration testing or functional testing.

- Continuous Testing: If you’re using automated tests, they should be run continuously as new changes are made. This gives immediate feedback to the team and helps them spot issues fast.

- Test with Real Users: Once the system is ready, conduct user testing to see how real users interact with the software and check for usability problems.

When to Stop Testing?

Now, we know that it’s good practice to keep testing throughout the development process. Yet, we need to know when to move on from a testing cycle. We’re essentially talking about the exit criteria of testing.

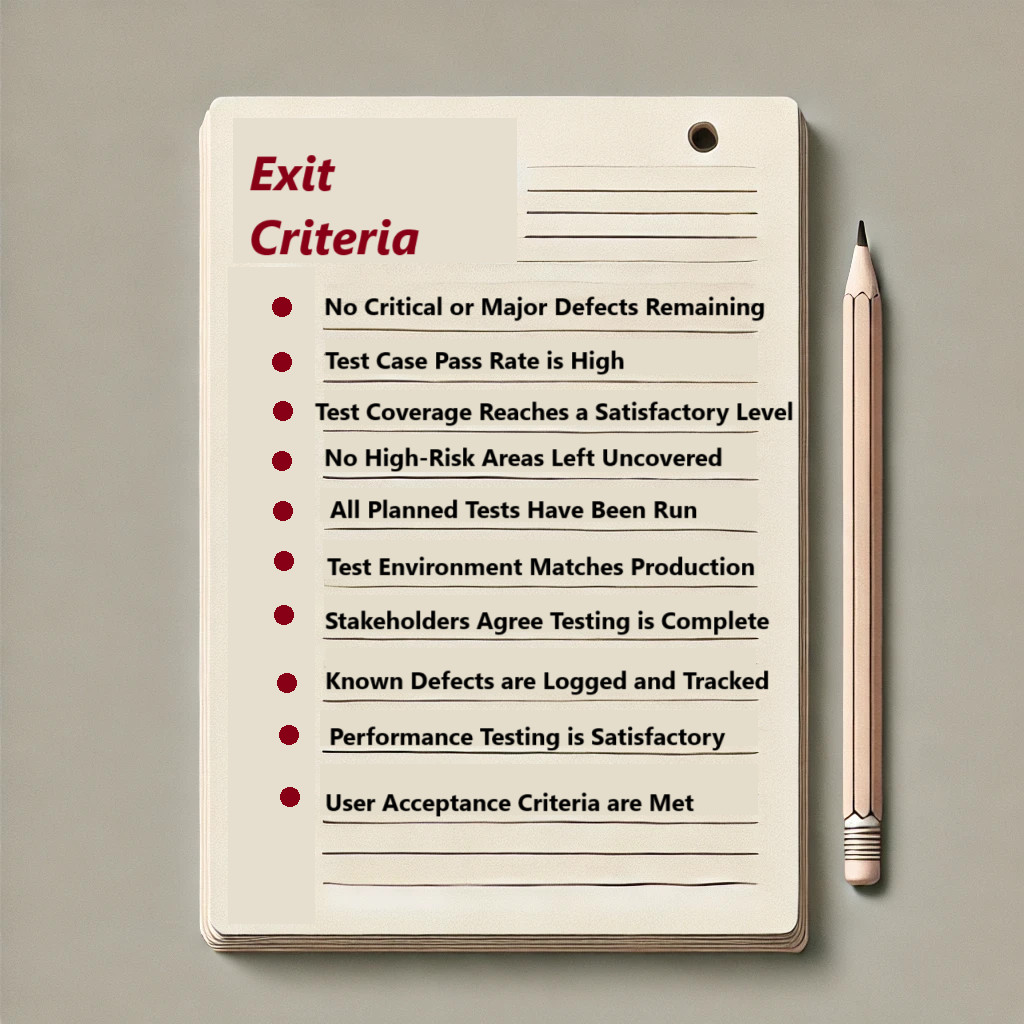

What are Test Exit Criteria?

Test exit criteria are the conditions that need to be met before you can stop testing a software application. Think of them as a checklist to make sure the software is ready for release. These criteria help teams know when they’ve tested enough and can move on to the next phase, like deployment.
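One way to make exit criteria explicit is to encode them as named checks over a test cycle’s metrics. The sketch below is a minimal illustration, not a standard: the `CycleMetrics` fields, the thresholds, and the `unmet_criteria` helper are all hypothetical and would be replaced by your own project’s criteria.

```python
from dataclasses import dataclass


@dataclass
class CycleMetrics:
    """Hypothetical snapshot of one test cycle's results."""
    critical_coverage: float    # fraction of critical features with tests (0.0-1.0)
    open_critical_defects: int  # unresolved blocker/critical bugs
    pass_rate: float            # fraction of executed tests that passed (0.0-1.0)
    stakeholder_signoff: bool   # business owners have approved the release


# Hypothetical thresholds; every team sets its own.
EXIT_CRITERIA = {
    "critical coverage >= 95%": lambda m: m.critical_coverage >= 0.95,
    "no open critical defects": lambda m: m.open_critical_defects == 0,
    "pass rate >= 98%": lambda m: m.pass_rate >= 0.98,
    "stakeholder sign-off": lambda m: m.stakeholder_signoff,
}


def unmet_criteria(metrics: CycleMetrics) -> list[str]:
    """Return the names of exit criteria that this cycle does not yet satisfy."""
    return [name for name, check in EXIT_CRITERIA.items() if not check(metrics)]


cycle = CycleMetrics(critical_coverage=0.97, open_critical_defects=1,
                     pass_rate=0.99, stakeholder_signoff=True)
print(unmet_criteria(cycle))  # ['no open critical defects']
```

An empty list means every criterion is met; anything else names exactly what still blocks the release.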

Here’s what typically goes into exit criteria.

Factors That Determine When to Stop Testing

While there isn’t a one-size-fits-all approach to determining whether you’ve tested enough, here are some common parameters that go into making this decision. You can use these to determine the exit criteria for your project.

Test Coverage

- What it means: Test coverage refers to how much of your software you have tested. It’s like making sure you’ve checked all parts of a car to see if they work before you drive it.

- Why it matters: The more areas of the software you test, the less likely you are to miss bugs. But you don’t need to test every single possible combination; focus on the most important parts.

- How it helps: If you’ve tested all the key features and functions that users rely on most, you can be more confident that the software is ready, even without testing everything. Keep in mind that coverage shows what was exercised, not that it behaves correctly.
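To see why focusing on the most important parts matters, it helps to measure coverage of critical features separately from overall coverage. This sketch assumes a hypothetical feature inventory that maps each feature to whether it is critical and how many tests cover it. Real coverage tools usually measure at the code level instead.

```python
# Hypothetical inventory: feature -> (is_critical, number of tests covering it)
FEATURES = {
    "login": (True, 12),
    "checkout": (True, 8),
    "refunds": (True, 0),
    "profile_theme": (False, 0),
    "search": (False, 5),
}


def feature_coverage(features, critical_only=False):
    """Return (fraction of selected features with >= 1 test, sorted uncovered names)."""
    selected = {
        name: tests
        for name, (critical, tests) in features.items()
        if critical or not critical_only
    }
    uncovered = sorted(name for name, tests in selected.items() if tests == 0)
    ratio = (len(selected) - len(uncovered)) / len(selected)
    return ratio, uncovered


overall, _ = feature_coverage(FEATURES)
critical, gaps = feature_coverage(FEATURES, critical_only=True)
print(f"overall {overall:.0%}, critical {critical:.0%}, gaps: {gaps}")
# overall 60%, critical 67%, gaps: ['refunds']
```

Here the uncovered `profile_theme` barely matters, but the untested `refunds` flow is a critical gap that should weigh on the exit decision regardless of the overall number.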

Defect Rate

- What it means: The defect rate is the number of bugs or issues found during testing, usually tracked per test cycle or per number of tests run. If bugs keep turning up, more testing may be needed. If they are rare and minor, it might be time to stop.

- Why it matters: If testing reveals fewer defects over time, it suggests the software is becoming more stable. If defects are still being found regularly, it indicates the software isn’t ready for release.

- How it helps: Monitoring the defect rate helps you judge when most issues have been resolved. A high rate means more testing is needed, while a low, declining rate suggests you may be close to done, as long as your tests are still exercising new and risky areas.
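Raw defect counts are hard to compare when cycles run different numbers of tests, so one approach is to normalize them (here, per 100 executed tests) and watch the trend. The sketch below uses made-up cycle data and a hypothetical `trending_down` rule (strictly decreasing over the last three cycles). Pick a normalization and window that fit your project.

```python
def defect_rates(cycles):
    """Defects found per 100 executed tests, one value per cycle."""
    return [100 * defects / executed for defects, executed in cycles]


def trending_down(rates, window=3):
    """True if the most recent `window` rates are strictly decreasing."""
    recent = rates[-window:]
    return len(recent) == window and all(a > b for a, b in zip(recent, recent[1:]))


# Hypothetical (defects_found, tests_executed) for four test cycles
cycles = [(40, 400), (25, 420), (12, 450), (4, 450)]
rates = defect_rates(cycles)
print([round(r, 1) for r in rates])  # [10.0, 6.0, 2.7, 0.9]
print(trending_down(rates))          # True
```

A falling rate only means something if the tests keep probing new and risky areas. Rerunning the same tests over and over will also produce a falling rate.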

Read about other testing metrics over here: Essential QA Metrics to Improve Your Software Testing.

Risk-Based Testing

- What it means: Risk-based testing involves focusing on the most critical or high-risk parts of the software. For example, if your software handles payments, you’d want to test that area more thoroughly because a bug in that feature could be very costly.

- Why it matters: Some parts of the software are more important than others. Prioritizing testing on these areas ensures that you’re testing what matters most.

- How it helps: By identifying and testing high-risk features more rigorously, you can stop testing once you’re confident that the core, high-risk parts are stable and secure.
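A common way to rank areas for risk-based testing is a simple risk score: the likelihood of failure multiplied by the impact of that failure. This is a sketch with hypothetical 1-5 ratings and a hypothetical cutoff of 12 for “high risk”. Teams calibrate both to their own context.

```python
# Hypothetical ratings on a 1-5 scale: (likelihood of failure, impact of failure)
AREAS = {
    "payments": (4, 5),
    "login": (3, 5),
    "search": (3, 2),
    "profile_theme": (2, 1),
}


def rank_by_risk(areas):
    """Return (area, score) pairs sorted by risk score = likelihood * impact, highest first."""
    scores = {name: likelihood * impact for name, (likelihood, impact) in areas.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def high_risk(areas, cutoff=12):
    """Names of areas whose risk score meets the (hypothetical) cutoff."""
    return [name for name, score in rank_by_risk(areas) if score >= cutoff]


print(rank_by_risk(AREAS))
# [('payments', 20), ('login', 15), ('search', 6), ('profile_theme', 2)]
print(high_risk(AREAS))  # ['payments', 'login']
```

The high-risk list is where you would expect the most thorough testing before agreeing to stop.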

Regression Testing

- What it means: Regression testing is checking if new changes or features have broken anything that used to work.

- Why it matters: After making changes to software, it’s important to make sure that previously working features aren’t negatively affected. If all critical features still work after changes, that indicates that testing might be sufficient.

- How it helps: If regression testing shows that nothing important is broken, you can be more confident that the changes didn’t break existing functionality, which supports a decision to stop testing.
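At its core, a regression check compares the current run against a known-good baseline. A test that passed before and fails now is a regression, while a test that was already failing is a known issue. The sketch assumes results are available as simple `{test_name: passed}` dictionaries, a hypothetical format chosen for illustration.

```python
def compare_runs(baseline, current):
    """Classify tests by comparing a baseline run with the current run.

    Both arguments map test names to True (passed) or False (failed).
    """
    regressions = sorted(
        name for name, passed in baseline.items()
        if passed and current.get(name) is False
    )
    fixed = sorted(
        name for name, passed in baseline.items()
        if not passed and current.get(name) is True
    )
    missing = sorted(set(baseline) - set(current))  # tests that did not run this time
    return {"regressions": regressions, "fixed": fixed, "missing": missing}


baseline = {"login": True, "checkout": True, "search": True, "export": False, "reports": True}
current = {"login": True, "checkout": False, "search": True, "export": True}
print(compare_runs(baseline, current))
# {'regressions': ['checkout'], 'fixed': ['export'], 'missing': ['reports']}
```

An empty `regressions` list, with no unexplained `missing` tests, is the signal described above.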

Release Deadlines

- What it means: Sometimes, you have to stop testing because you have a fixed deadline to release the software. This could be because of business needs, market pressures, or planned events.

- Why it matters: Even if testing isn’t 100% complete, at some point, the software has to be released. This means balancing the need for thorough testing with the need to meet deadlines.

- How it helps: If the deadline is approaching and enough testing has been done on the most important areas, you may need to stop testing. The key is managing expectations and focusing on high-priority issues.

Test Results Consistency

- What it means: If your tests produce consistent, repeatable results and most of them pass, that’s a good sign the software is stable.

- Why it matters: Consistent results show that the software isn’t introducing new issues with each round of testing. If you’re consistently finding fewer defects, it’s a sign that further testing may not yield much new information.

- How it helps: Once test results stabilize and the defects you find are minor or non-critical, it might be time to stop testing and release the product.
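One practical way to check consistency is to run the same build several times and look for tests whose outcome changes between runs. Those flaky tests make results unreliable whatever the pass rate is. A minimal sketch, assuming each run is recorded as a hypothetical `{test_name: passed}` dictionary:

```python
from collections import defaultdict


def classify_tests(runs):
    """Group tests by how they behaved across repeated runs of the same build.

    Returns sorted lists of tests that always passed, always failed, or flip-flopped.
    """
    outcomes = defaultdict(set)
    for run in runs:
        for name, passed in run.items():
            outcomes[name].add(passed)
    return {
        "stable_pass": sorted(n for n, seen in outcomes.items() if seen == {True}),
        "stable_fail": sorted(n for n, seen in outcomes.items() if seen == {False}),
        "flaky": sorted(n for n, seen in outcomes.items() if len(seen) > 1),
    }


runs = [
    {"login": True, "upload": True, "export": False},
    {"login": True, "upload": False, "export": False},
    {"login": True, "upload": True, "export": False},
]
print(classify_tests(runs))
# {'stable_pass': ['login'], 'stable_fail': ['export'], 'flaky': ['upload']}
```

Stable failures are real defects to fix. Flaky tests need attention before their passes can count as evidence of stability.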

Automation in Testing

- What it means: Automation refers to using tools and scripts to run repetitive tests, which speeds up the process and gives quicker feedback.

- Why it matters: Automated tests can quickly tell you if new changes cause issues in the software, so you don’t have to do everything manually.

- How it helps: If automated tests are running regularly and passing, this can help you determine when enough testing has been done. Automated tests provide fast feedback, so you can stop testing once they confirm stability.
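“Running regularly and passing” can be turned into a concrete rule, such as requiring several consecutive fully green runs of the automated suite. The sketch assumes a hypothetical history of failure counts per run, oldest first, and a threshold of three green runs. Neither comes from any particular CI tool.

```python
def green_streak(failure_counts):
    """Number of most recent consecutive runs that had zero failures."""
    streak = 0
    for failures in reversed(failure_counts):  # walk from the newest run backwards
        if failures:
            break
        streak += 1
    return streak


def looks_stable(failure_counts, required=3):
    """True if the latest `required` runs were all fully green (hypothetical rule)."""
    return green_streak(failure_counts) >= required


history = [5, 2, 0, 1, 0, 0, 0]  # failures per nightly run, oldest first
print(green_streak(history), looks_stable(history))  # 3 True
```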

Stakeholder Approval

- What it means: Stakeholders are the people who have a vested interest in the software, like business owners, project managers, or clients. These people need to agree that the software is ready to be released.

- Why it matters: Testing isn’t just about finding bugs – it’s also about meeting the needs of the business and the users. Stakeholders can provide valuable insights into whether the software is ready for release.

- How it helps: Once stakeholders are satisfied with the results of testing and feel confident that the software meets business requirements, you can stop testing.

Testing Resources

- What it means: Testing takes time, people, and tools. Sometimes, you may need to stop testing because you’ve reached the limit of available resources, whether it’s time, people, or budget.

- Why it matters: Testing is not unlimited. You have to prioritize where to focus testing efforts. If you’ve tested the most critical parts and exhausted resources, it may be time to stop.

- How it helps: When resources run out but the most important areas are covered, it’s a reasonable point to stop, provided the team understands and accepts the remaining risk.

Performance and Scalability

- What it means: Performance testing ensures the software runs smoothly even under heavy use, and scalability testing ensures it can handle more users or data in the future.

- Why it matters: If the software can handle the expected load and scale as needed, you’re less likely to encounter problems post-release.

- How it helps: Once performance and scalability tests pass with no major issues, that supports the decision that testing is sufficient.
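Performance results are often judged against a latency budget at a high percentile rather than the average, because averages hide slow outliers. This sketch uses the nearest-rank percentile method on made-up response times and a hypothetical 300 ms p95 budget. Load-testing tools may compute percentiles with interpolation and report slightly different numbers.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of a non-empty list; p is between 0 and 100."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def within_budget(samples, p=95, budget_ms=300):
    """True if the p-th percentile latency is at or under the budget."""
    return percentile(samples, p) <= budget_ms


latencies_ms = [120, 135, 150, 110, 480, 140, 125, 130, 145, 160]
print(sum(latencies_ms) / len(latencies_ms))  # 169.5
print(percentile(latencies_ms, 50), percentile(latencies_ms, 95))  # 135 480
print(within_budget(latencies_ms))  # False
```

The mean of 169.5 ms looks comfortable, but the p95 check catches the slow request that users would actually notice.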

When Testing Should be Stopped: Role of Automation

Automated testing speeds up the testing process, which also makes it easier to decide when testing is “enough”.

- Faster Feedback: When tests can be run quickly, you can identify problems early. If the automated tests pass consistently, it’s a strong signal that the software is stable, and you might not need to do further testing.

- Consistency in Testing: Consistency means that test results are reliable. Automation removes the false positives and false negatives caused by human mistakes, though flaky tests can still produce unreliable results. If automated tests consistently pass across cycles, it suggests that fewer bugs remain and the software is likely ready for release.

- Easier Regression Testing: When regression tests keep passing after each update, it suggests that the software is stable and that stopping is less likely to miss critical issues.

- Higher Test Coverage: If automation covers a high percentage of your codebase or features, including the critical areas, and all tests pass, that’s a strong sign you can stop testing.

- Good for Repetitive Testing: Some tests need to be run repeatedly, like performance tests or testing the same feature in different environments. Automation is perfect for running these tests over and over again without any extra work from humans.

- Easier Monitoring and Alerts: Automation can actively monitor the system for issues, so testers don’t have to manually check every result. Problems in monitored areas are flagged as soon as a check fails. If no issues turn up, it may be time to stop testing.

- Efficiency and Cost-Effectiveness: Automation makes testing more efficient and cost-effective. Once automated tests are written, they can be run as many times as needed at little extra cost beyond infrastructure and maintenance. They can also be scheduled to run outside working hours.

- Continuous Testing: It is easier to integrate automated test suites into CI/CD pipelines and run them whenever you want. This way, you can test every build or release quickly and gain confidence in your application’s health.
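In a CI/CD pipeline, test results usually decide whether a build can move forward. Many test runners (pytest’s `--junitxml` option, for example) can write JUnit-style XML reports, whose `<testsuite>` elements carry `tests`, `failures`, `errors`, and `skipped` counts. The sketch below reads such a report with Python’s standard `xml.etree.ElementTree` and applies a minimum pass rate. The `gate` function and the 98% threshold are hypothetical, and it assumes `tests` includes skipped tests, which can vary by tool.

```python
import xml.etree.ElementTree as ET


def pass_rate(junit_xml: str) -> float:
    """Fraction of executed (non-skipped) tests that passed, summed over all <testsuite> elements."""
    root = ET.fromstring(junit_xml)
    executed = passed = 0
    for suite in root.iter("testsuite"):  # also matches the root if it is a <testsuite>
        tests = int(suite.get("tests", 0))
        failures = int(suite.get("failures", 0))
        errors = int(suite.get("errors", 0))
        skipped = int(suite.get("skipped", 0))
        executed += tests - skipped
        passed += tests - skipped - failures - errors
    return passed / executed if executed else 0.0


def gate(junit_xml: str, min_pass_rate: float = 0.98) -> bool:
    """Hypothetical CI gate: True if the build's pass rate meets the threshold."""
    return pass_rate(junit_xml) >= min_pass_rate


report = """<testsuites>
  <testsuite name="api" tests="120" failures="1" errors="0" skipped="4"/>
  <testsuite name="ui" tests="80" failures="0" errors="1" skipped="0"/>
</testsuites>"""
print(round(pass_rate(report), 3), gate(report))  # 0.99 True
```

In a pipeline script you would typically exit with a non-zero status when `gate` returns False, for example `sys.exit(0 if gate(report) else 1)`, so the CI server marks the build as failed.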

Efficient Testing with AI-based Test Automation Tools

Test automation can help you decide when you’ve tested enough, but a poor test automation tool can undo your efforts. Opt for a tool that is easy to use, lets you write test cases quickly, needs minimal maintenance, and gives you reliable test runs. One of the top options for this kind of effortless test automation is testRigor. Read: Decrease Test Maintenance Time by 99.5% with testRigor.

With testRigor, you can create test cases in plain English. This is a huge help when you want to quickly write test cases covering many modules, and because creating tests is so simple, anyone on the team can do it. Automate a variety of scenarios, from QR code and CAPTCHA resolution to email and form testing, across different platforms and browsers. If you integrate testRigor with your CI/CD pipeline, you’ll have a system that can test as frequently and quickly as you release.

This generative AI-powered tool makes it easy to run and maintain your test cases. testRigor is an AI agent known for reliable test runs, so you can rest assured that your application is being tested thoroughly. This should give you the confidence to decide when to wrap up testing.

Conclusion

Deciding “how much testing is enough” is about balancing thoroughness with practicality. Focus on the areas that matter most, consider how many defects remain, look for consistency in test results, and prioritize high-risk features. If your test coverage, defect rate, and risk assessment all point the same way within your deadline, you’ll know when to stop testing and move forward with the release.
