How to use AI to improve QA engineering productivity?


QA engineering productivity is not measured by the total number of defects the team raises. A team can report a high defect count even when many of those defects are invalid or low-quality. Instead, QA productivity is generally evaluated using multiple factors that focus on quality and efficiency. In this article, we discuss how to measure QA productivity effectively, the factors that affect it, and a few strategies for increasing the team’s productivity.

Understanding Productivity

Generally, in the software industry, we can define productivity as the efficiency of the project delivery team in producing high-quality products or services within a given timeframe. This involves optimizing the use of resources like time, technology, and talent to deliver software that meets or exceeds customer expectations.

The key factors that affect productivity include modern development practices such as Agile and DevOps, advanced tools and technologies for coding and testing, and close collaboration across the team through every stage of the development cycle.

Productivity also depends on how well the team learns and adopts new technologies that can accelerate the development cycle, reduce defects, and improve performance.

Defining QA Engineering Productivity

For a QA team, productivity reflects the team’s efficiency in delivering high-quality software with close to zero defects within the given timeframe. It measures how well and how quickly a QA team can ensure software quality. That said, productivity is not simply the speed of testing; it is about balancing thorough defect detection and resolution with a smooth development process.

There are several factors that contribute to defining the QA team’s productivity:

Faster Delivery

- Efficiency: Finding and fixing bugs effectively, with minimal rework. This includes prioritizing critical issues and focusing on areas with high user impact.

- Meeting deadlines: Completing testing tasks within the allocated time frames without compromising quality.

Optimizing the Testing Process

- Automation: Using automation tools to handle repetitive tasks so that the testers can put more effort into testing new scenarios.

- Collaboration: Effective communication between teams helps identify and resolve issues early in the development cycle.

- Choosing the right tools: A team’s efficiency also depends on choosing testing tools that streamline the process.

Measuring Success

- KPIs: Key Performance Indicators (KPIs) such as defect escape rate, test coverage, and time to resolution can be used to measure the QA team’s productivity. Tracking these KPIs helps the team understand where it needs to improve.

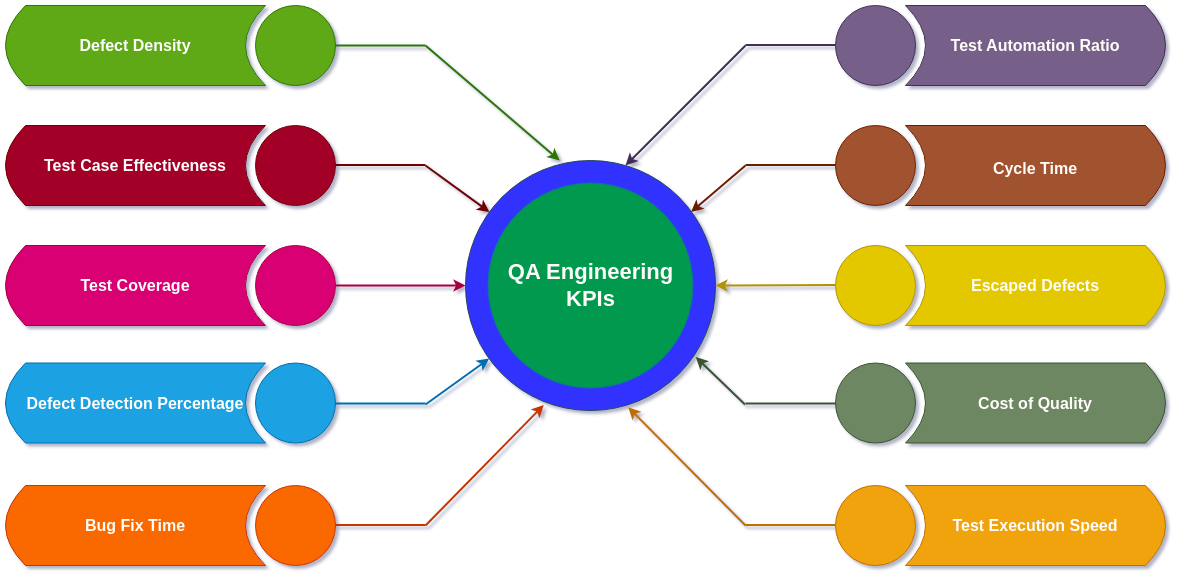

KPIs to Measure QA Engineering Productivity

KPIs play a major role in measuring the team’s productivity. Using them, top management can assess how productive the team is, which in turn reflects on the quality of the products. KPIs also help management understand where the team excels and where it needs to improve.

Let’s go through different KPIs commonly used to measure QA engineering productivity:

- Defect Density: Defect density is the number of defects per unit size of the software. Unit size can be defined in multiple ways, such as lines of code, number of function points, or number of files. Defect density is often used to compare the quality of different components in the same project. Lower defect density usually indicates higher application quality, while higher density may point to poorly written code.

Example: A new e-commerce platform has a defect density of 3 defects per 1,000 lines of code. This indicates relatively good quality, with room for improvement.
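As a minimal sketch, defect density per 1,000 lines of code (KLOC) can be computed as below. The function name, the module figures, and the choice of KLOC as the unit size are illustrative assumptions; function points or file counts would slot in the same way.

```python
def defect_density_per_kloc(defects: int, lines_of_code: int) -> float:
    """Return defects per 1,000 lines of code (one common choice of unit size)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects * 1000 / lines_of_code


# Hypothetical (defects, lines of code) per component, compared within one project.
modules = {"checkout": (36, 12_000), "catalog": (10, 8_000)}
for name, (defects, loc) in modules.items():
    print(f"{name}: {defect_density_per_kloc(defects, loc):.2f} defects/KLOC")
```

Normalizing by size is what makes components of different sizes comparable; raw defect counts would penalize the larger module.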

- Test Case Effectiveness: Test case effectiveness assesses how well test cases identify defects in the software. It is calculated as the ratio of the number of defects found during testing to the total number of test cases executed, expressed as a percentage. High test case effectiveness suggests that test cases are well designed and cover most application scenarios, while low effectiveness may indicate that the test cases are missing critical scenarios. The formula for calculating test case effectiveness is:

Test Case Effectiveness (%) = (Number of defects detected / Total number of test cases executed) × 100

Example: In a social media application, the test suite reaches a test case effectiveness of 70%, meaning 70 defects were detected for every 100 test cases executed. This suggests the test cases are effective at catching bugs, though there may still be room for improvement.
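A small sketch of the formula above follows; the function name is hypothetical. Because the formula divides defects by test cases, a single test case that uncovers several defects raises the score, so the value can exceed 100%.

```python
def case_effectiveness_pct(defects_detected: int, cases_executed: int) -> float:
    """Test Case Effectiveness (%) = defects detected / test cases executed x 100."""
    if cases_executed <= 0:
        raise ValueError("cases_executed must be positive")
    return 100 * defects_detected / cases_executed


# Hypothetical figures matching the 70% example.
print(case_effectiveness_pct(70, 100))
```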

- Test Coverage: This KPI shows how much of a module’s source code is exercised by the test cases, giving a clear picture of which parts of the code are hit by tests and which are not tested at all. Test coverage is generally expressed as a percentage. There are various types of test coverage, such as statement coverage, branch coverage, and path coverage, each focusing on a different aspect of how the code is tested.

Example: A project aiming for 90% test coverage achieves 85% after the initial testing phase. This highlights the need for additional test cases to ensure comprehensive coverage.
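Statement coverage reduces to set arithmetic once you know which lines are executable and which ran. In practice a coverage tool (for example, coverage.py for Python code) records that data; the line sets below are hypothetical and chosen to reproduce the 85% figure.

```python
def statement_coverage(executable: set[int], executed: set[int]) -> tuple[float, list[int]]:
    """Return (coverage %, sorted uncovered line numbers)."""
    if not executable:
        return 100.0, []
    covered = executable & executed
    uncovered = sorted(executable - executed)
    return 100 * len(covered) / len(executable), uncovered


executable_lines = set(range(1, 21))  # hypothetical: 20 executable statements
executed_lines = set(range(1, 18))    # hypothetical: statements 1-17 ran during tests
pct, missing = statement_coverage(executable_lines, executed_lines)
target = 90.0
print(f"coverage {pct:.1f}% (target {target}%), uncovered lines: {missing}")
```

The list of uncovered lines is often more actionable than the percentage, because it tells you where the additional test cases need to go.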

- Defect Detection Percentage (DDP): DDP compares the number of defects found internally before release with the total number of defects, including those discovered by users after launch. A high DDP signifies a robust internal testing process that prevents issues from reaching end users and ensures a smoother user experience. DDP is usually calculated with the formula:

DDP (%) = (Number of defects found during testing / Total number of defects found (during testing + after release)) × 100

Example: An e-learning platform boasts a DDP of 95%. This indicates a well-functioning internal testing process that catches most issues before users encounter them.
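A minimal sketch of the DDP formula; the function name and the 95/5 split are illustrative assumptions.

```python
def ddp_pct(found_in_testing: int, found_after_release: int) -> float:
    """DDP (%) = defects found in testing / all defects found x 100."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_in_testing / total


print(ddp_pct(95, 5))  # hypothetical counts for the 95% example
```

Note that DDP for a release keeps changing as users report new issues, so it is usually recomputed after a fixed observation window.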

- Bug Fix Time: This KPI measures the average time it takes to resolve a reported defect, reflecting the responsiveness and efficiency of both development and QA teams. Faster resolution times indicate a well-oiled collaboration process and minimize the window of vulnerability for potential security risks.

Example: The average bug fix time is two days for an e-commerce app. This represents an efficient bug-fixing process, ensuring issues are resolved quickly.
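Average bug fix time is the mean of (resolved - reported) over resolved defects. The sketch below assumes each defect is available as a pair of timestamps; the records are hypothetical.

```python
from datetime import datetime, timedelta


def mean_fix_time(defects: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - reported) across resolved defects."""
    if not defects:
        raise ValueError("no resolved defects")
    total = sum((resolved - reported for reported, resolved in defects), timedelta())
    return total / len(defects)


sample = [
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 2, 9)),  # fixed in 1 day
    (datetime(2024, 5, 3, 9), datetime(2024, 5, 6, 9)),  # fixed in 3 days
]
print(mean_fix_time(sample))
```

A mean is sensitive to a few long-running defects, so some teams also track the median alongside it.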

- Test Automation Ratio: Manual testing can be time-consuming. The test automation ratio measures the percentage of tests that are automated out of all tests, automated and manual. Higher automation rates can improve efficiency and consistency in testing, freeing up QA resources for exploratory testing and complex scenarios. However, it’s crucial to remember that automation shouldn’t replace manual testing entirely.

Example: A team achieves a 60% test automation ratio. This lets the team focus manual testing on complex scenarios while repetitive tasks are automated for efficiency.
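A one-function sketch of the ratio; the function name and the 300/200 split are hypothetical.

```python
def automation_ratio_pct(automated: int, manual: int) -> float:
    """Share of all tests (automated + manual) that are automated, in percent."""
    total = automated + manual
    if total == 0:
        raise ValueError("no tests recorded")
    return 100 * automated / total


print(automation_ratio_pct(300, 200))  # hypothetical suite for the 60% example
```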

- Cycle Time: Cycle time measures the time from the initial planning stages to final delivery of the software product. Shorter cycle times indicate a more productive and efficient development and QA process, allowing for faster iteration and quicker time-to-market.

Example: A development cycle for a new mobile game is reduced from 4 weeks to 3 weeks. This signifies an improvement in efficiency, allowing for faster time-to-market.
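The 4-week to 3-week improvement is a 25% reduction. A minimal sketch, assuming cycle time is measured in calendar days between a planning-start date and a delivery date (both dates below are hypothetical):

```python
from datetime import date


def cycle_time_days(planning_start: date, delivered: date) -> int:
    """Calendar days from the start of planning to delivery."""
    return (delivered - planning_start).days


def reduction_pct(before: float, after: float) -> float:
    return 100 * (before - after) / before


old = cycle_time_days(date(2024, 1, 1), date(2024, 1, 29))  # 28 days (4 weeks)
new = cycle_time_days(date(2024, 3, 1), date(2024, 3, 22))  # 21 days (3 weeks)
print(f"cycle time cut by {reduction_pct(old, new):.0f}%")
```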

- Escaped Defects: This KPI measures the number of issues end users find after the software is released. Analyzing these defects helps identify weaknesses in the testing process and implement preventive measures to minimize future occurrences.

Example: A productivity app has fewer escaped defects reported by users than in previous releases. This indicates a robust QA process that minimizes issues reaching end users.
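"Fewer escaped defects" only makes sense as a trend, so a sketch groups production-reported defects by release. The record fields (`release`, `found_in`) are hypothetical; a real tracker would expose its own fields.

```python
from collections import Counter


def escaped_by_release(defects: list[dict]) -> Counter:
    """Count defects reported from production, grouped by release."""
    return Counter(d["release"] for d in defects if d["found_in"] == "production")


defects = [
    {"release": "1.0", "found_in": "production"},
    {"release": "1.0", "found_in": "production"},
    {"release": "1.0", "found_in": "qa"},
    {"release": "1.1", "found_in": "production"},
    {"release": "1.1", "found_in": "qa"},
]
escaped = escaped_by_release(defects)
print(dict(escaped))
```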

- Cost of Quality: Quality doesn’t come free. The cost of quality covers the expenses of preventing defects (through proactive measures such as training and process improvement), appraising the software for quality (through testing and code reviews), and failure (fixing defects found internally before release and externally after release). Tracking these costs helps QA teams optimize their processes for better ROI, delivering maximum value while minimizing rework and wasted resources. Read here: How to Save Budget on QA.

Example: By implementing more automation testing, a company reduces the cost of quality by 20%. This demonstrates how optimizing the QA process can lead to cost savings.
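The four cost-of-quality categories add up to one total, which makes a before/after comparison straightforward. The amounts below are hypothetical and chosen to show a 20% reduction.

```python
from dataclasses import dataclass


@dataclass
class CostOfQuality:
    prevention: float        # e.g. training, process improvement
    appraisal: float         # e.g. testing, code reviews
    internal_failure: float  # fixing defects found before release
    external_failure: float  # fixing defects found after release

    @property
    def total(self) -> float:
        return self.prevention + self.appraisal + self.internal_failure + self.external_failure


before = CostOfQuality(10_000, 40_000, 20_000, 30_000)
after = CostOfQuality(15_000, 35_000, 15_000, 15_000)
change = 100 * (before.total - after.total) / before.total
print(f"cost of quality reduced by {change:.0f}%")
```

Tracking the categories separately shows where savings came from; in this made-up case, prevention spend rose while failure costs fell.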

- Test Execution Speed: This KPI measures the rate at which test cases are executed. It’s particularly important in environments with frequent releases, where faster execution allows for quicker feedback and bug identification.

Example: An e-commerce platform that releases new features weekly uses a testing framework that executes its automated test suite in 30 minutes. This fast execution lets the team identify and fix bugs before new features are deployed, minimizing the risk of impacting the user experience.
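Execution speed is usually reported as test cases per minute. The sketch times a list of test callables with `time.perf_counter` and converts the result; the 1,200-test suite is a hypothetical figure paired with the 30-minute run from the example.

```python
import time
from typing import Callable


def execution_rate_per_minute(executed: int, seconds: float) -> float:
    """Execution speed as test cases per minute."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return executed * 60 / seconds


def run_suite(cases: list[Callable[[], None]]) -> tuple[int, float]:
    """Run each test callable in order; return (count, wall-clock seconds)."""
    start = time.perf_counter()
    for case in cases:
        case()
    return len(cases), time.perf_counter() - start


print(execution_rate_per_minute(1_200, 30 * 60))  # hypothetical 1,200 tests in 30 min
```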

How to Increase QA Engineering Productivity with AI

Integrating Artificial Intelligence (AI) into Quality Assurance (QA) processes can significantly enhance productivity by automating complex tasks, improving decision-making, and optimizing testing strategies. Here are several approaches for using AI to boost QA engineering productivity, each detailed with the specific role of AI:

AI-Powered Test Case Generation

AI-powered test case generation offers a compelling solution for QA teams. It reduces manual effort, ensures comprehensive test coverage, and ultimately leads to higher quality software delivered in a shorter time frame.

Purpose

- Reduced Manual Effort: Test case creation can be time-consuming. AI automates this process by analyzing system requirements and user behaviors, generating relevant test cases without manual scripting.

- Comprehensive Coverage: AI can identify complex scenarios and edge cases that human testers might overlook. This ensures your testing covers a broader range of functionalities, leading to more robust software.

Implementation

- Harnessing the Power of AI: AI algorithms are the brains behind this approach. They analyze sources like application data, user interactions, and historical test results to identify patterns and generate effective test cases.

- Accessible Tools: AI-powered test case generation is no longer a futuristic concept! Intelligent tools like testRigor integrate generative AI seamlessly into the testing process, making it accessible to QA teams of all sizes.

Benefits

- Uncover Hidden Defects: AI’s ability to analyze vast amounts of data helps unearth edge cases that might go unnoticed during manual testing. This leads to a more thorough testing process, minimizing the risk of bugs slipping through the cracks.

- Significant Time Savings: With AI automating test case creation, QA engineers can save valuable time. This allows them to focus on more strategic tasks like exploratory testing and analyzing complex test results.
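A minimal sketch of the generate-then-validate pattern behind AI test case generation follows. `complete` is a hypothetical callable standing in for whatever model client you use (it takes a prompt string and returns text); it is not testRigor’s API or any specific library’s. The important design choice is that model output is parsed and checked rather than trusted.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class GeneratedCase:
    title: str
    steps: list[str]
    expected: str


PROMPT_TEMPLATE = (
    "You are a QA engineer. Write test cases, including edge cases, for the "
    "requirement below. Reply only with a JSON list of objects that have the keys "
    '"title", "steps" (a list of strings) and "expected".\n\n'
    "Requirement: {requirement}"
)


def generate_test_cases(requirement: str, complete: Callable[[str], str]) -> list[GeneratedCase]:
    """Ask a model (via the hypothetical `complete`) for test cases; keep well-formed ones."""
    reply = complete(PROMPT_TEMPLATE.format(requirement=requirement))
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []  # unusable reply; a real pipeline might retry or log it
    if not isinstance(items, list):
        return []
    cases = []
    for item in items:
        # Skip malformed suggestions instead of trusting the model blindly.
        if not isinstance(item, dict) or not isinstance(item.get("steps"), list):
            continue
        if "title" not in item or "expected" not in item:
            continue
        cases.append(GeneratedCase(str(item["title"]), [str(s) for s in item["steps"]], str(item["expected"])))
    return cases


def stub_model(prompt: str) -> str:
    """Stand-in for a real model so the sketch runs offline."""
    return json.dumps([
        {"title": "Expired reset link", "steps": ["Request reset", "Open link after 24 hours"], "expected": "Link is rejected"},
        {"title": "Malformed entry without steps"},
    ])


cases = generate_test_cases("Users can reset their password by email", stub_model)
print([c.title for c in cases])
```

Generated cases still need human review before they enter the suite; the validation step only filters out structurally broken output.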

Intelligent Test Automation

Intelligent Test Automation (ITA) empowers QA teams to automate with confidence. Using AI minimizes manual intervention, increases test reliability, and allows teams to focus on strategic testing initiatives.

Purpose

- Boosting Automation Robustness: Traditional automation can become brittle when applications change. ITA uses machine learning (ML) models to recognize UI changes and automatically adjust automated tests so that they keep working.

Implementation

- Machine Learning to the Rescue: ITA employs ML models to analyze user interfaces (UI) and detect changes. Based on this analysis, the models can automatically adjust automated tests, minimizing the need for manual intervention.

- Tools for Intelligent Automation: Several tools use visual AI to analyze and validate UI elements, which simplifies implementing ITA.

Benefits

- Reduced Maintenance Overhead: By automatically adapting tests to UI changes, ITA significantly reduces the time and effort required to maintain automated test suites. This frees up QA resources for other critical tasks.

- Enhanced Reliability: ITA ensures automated tests remain reliable even when the application undergoes UI modifications. This leads to a more stable and dependable testing process.
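The "self-healing" idea can be sketched without a trained model: record several attributes of an element, and when the primary locator breaks, pick the candidate whose attributes are most similar. Here a weighted `difflib.SequenceMatcher` score stands in for the ML model, and the attribute weights, dict-shaped elements, and threshold are all hypothetical; this is not how any particular tool implements it.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical attribute weights; an ML-based tool would learn these from data.
ATTRIBUTE_WEIGHTS = {"id": 0.4, "text": 0.3, "name": 0.2, "tag": 0.1}


def similarity(a: str, b: str) -> float:
    """String similarity in [0, 1] based on matching subsequences."""
    return SequenceMatcher(None, a, b).ratio()


def heal_locator(fingerprint: dict, candidates: list[dict], threshold: float = 0.6) -> Optional[dict]:
    """Return the candidate element that best matches a recorded fingerprint.

    Returns None when nothing is similar enough, so the test fails loudly
    instead of silently interacting with the wrong element.
    """
    # Fast path: the original locator still works.
    for element in candidates:
        if fingerprint.get("id") and element.get("id") == fingerprint["id"]:
            return element
    keys = [k for k in ATTRIBUTE_WEIGHTS if fingerprint.get(k)]
    if not keys:
        return None
    weight_sum = sum(ATTRIBUTE_WEIGHTS[k] for k in keys)
    best, best_score = None, 0.0
    for element in candidates:
        score = sum(ATTRIBUTE_WEIGHTS[k] * similarity(fingerprint[k], element.get(k, "")) for k in keys) / weight_sum
        if score > best_score:
            best, best_score = element, score
    return best if best_score >= threshold else None


recorded = {"id": "submit-btn", "text": "Submit order", "tag": "button", "name": "submit"}
page = [
    {"id": "cancel-btn", "text": "Cancel", "tag": "button", "name": "cancel"},
    {"id": "submit-order-btn", "text": "Submit order", "tag": "button", "name": "submit"},
]
print(heal_locator(recorded, page))
```

The threshold is the key safety valve: set it too low and a healed test may click the wrong button and pass anyway.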

Predictive Analytics for Defect Management

Predictive analytics empowers QA teams to move from reactive defect management to a proactive approach. This leads to higher quality software, reduced development costs, and a more efficient testing process.

Purpose

- Proactive Defect Management: Instead of reacting to defects after they occur, predictive analytics allows you to anticipate them. By analyzing historical defect data and project trends, these models identify areas with a high likelihood of future defects.

Read for more information: Mastering Defect Management.

Implementation

- Harnessing the Power of Data: Predictive models are built on historical defect data. This data includes information like defect types, code sections affected, and project stages where defects were identified. Additionally, the models consider current project trends and potential risks.

- Prioritizing Efforts: Based on the predictions, you can prioritize testing efforts. By focusing resources on areas with a higher likelihood of defects, you can ensure comprehensive testing and minimize the risk of bugs slipping through the cracks.

Benefits

- Reduced Defect Rate: Proactively identifying potential issues allows for early intervention and bug prevention, which can significantly reduce the overall defect rate of the software.

- Optimized Resource Allocation: Predictive analytics allows teams to allocate resources more effectively. By focusing on areas with a higher risk of defects, QA teams can ensure optimal utilization of their time and expertise.
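To make "predictive model built on historical defect data" concrete, here is a deliberately small logistic-regression sketch trained with plain batch gradient descent. The two features (lines changed, defects in the previous release), the toy history, and the module names are hypothetical; in practice you would use a library such as scikit-learn and far more data. Modules are ranked by the model’s logit, which orders them the same way as the predicted probability.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with batch gradient descent on log-loss."""
    n_features, m = len(rows[0]), len(rows)
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(rows, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def risk_logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# Hypothetical history per module: [hundreds of lines changed, defects last release]
history = [[5.0, 4], [3.0, 3], [4.0, 5], [0.2, 0], [0.5, 1], [0.1, 0]]
had_defect = [1, 1, 1, 0, 0, 0]  # did the module ship a defect in the next release?
w, b = train_logistic(history, had_defect)

upcoming = {"checkout": [4.5, 3], "settings": [0.3, 0], "search": [1.5, 1]}
ranked = sorted(upcoming, key=lambda name: risk_logit(w, b, upcoming[name]), reverse=True)
for name in ranked:
    print(name, round(sigmoid(risk_logit(w, b, upcoming[name])), 3))
```

The ranking, not the exact probability, is what drives resource allocation: the highest-risk modules get tested first and most deeply.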

AI-Driven Test Prioritization

AI-driven test prioritization empowers QA teams to make smarter decisions about test execution order. By focusing on high-impact tests first, they can ensure early detection of critical defects and ultimately deliver high-quality software faster.

Purpose

- Early Defect Detection: Not all tests are created equal. AI prioritizes tests that are more likely to uncover critical defects early in the testing cycle. This allows for quicker bug fixes and minimizes the risk of defects lingering until later stages. Read this article for more information: Risk-based Testing: A Strategic Approach to QA.

Implementation

AI algorithms analyze various factors to determine test priority. These factors can include:

- Recent Code Changes: Tests associated with recently modified code sections have a higher chance of uncovering new defects and are prioritized for execution.

- Historical Defect Data: Test cases with a history of catching defects in similar situations are prioritized.

- Criticality of Application Components: Tests targeting critical functionalities of the application are prioritized to ensure core features are thoroughly tested early on.
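The three factors above can be combined into a single priority score. In this sketch a fixed weighted sum stands in for a learned model; the weights, the `SuiteEntry` fields, and the example suite are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    name: str
    covered_files: set[str]
    historical_fail_rate: float  # 0.0-1.0, from past runs
    criticality: float           # 0.0-1.0, assigned by the team


# Hypothetical weights; an AI-driven tool would tune these from data.
PRIORITY_WEIGHTS = {"change": 0.5, "history": 0.3, "criticality": 0.2}


def priority(entry: SuiteEntry, changed_files: set[str]) -> float:
    """Weighted score from recent changes, defect history, and criticality."""
    touches_change = 1.0 if entry.covered_files & changed_files else 0.0
    return (PRIORITY_WEIGHTS["change"] * touches_change
            + PRIORITY_WEIGHTS["history"] * entry.historical_fail_rate
            + PRIORITY_WEIGHTS["criticality"] * entry.criticality)


def prioritize(suite: list[SuiteEntry], changed_files: set[str]) -> list[SuiteEntry]:
    return sorted(suite, key=lambda e: priority(e, changed_files), reverse=True)


suite = [
    SuiteEntry("profile_page", {"profile.py"}, 0.05, 0.3),
    SuiteEntry("checkout_flow", {"cart.py", "payment.py"}, 0.20, 1.0),
    SuiteEntry("search_results", {"search.py"}, 0.40, 0.6),
]
order = prioritize(suite, changed_files={"payment.py"})
print([e.name for e in order])
```

Ordering changes execution sequence only; every test still runs, but the ones most likely to fail report first.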

Benefits

- Faster Bug Detection and Resolution: By prioritizing tests that are more likely to uncover critical defects, AI helps identify and fix bugs early in the development cycle. This saves time and reduces the overall effort required for testing.

- Reduced Regression Testing Burden: Catching defects early minimizes the need for extensive regression testing later in development. This frees up QA resources for other crucial testing activities.

Natural Language Processing (NLP) for Enhanced Test Coverage

NLP acts as a bridge between human-written requirements and actionable test cases. This leads to a more streamlined testing process, improved test coverage, and higher-quality software.

Purpose

- Closing the Gap: Traditional methods of analyzing requirements can miss nuances or leave room for misinterpretation. NLP helps bridge this gap, because NLP techniques can parse and interpret requirement documents written in natural language.

Implementation

- From Text to Testable Scenarios: NLP algorithms analyze requirement specifications, dissecting the language and identifying key functionalities. This information is then used to:

- Map Requirements to Test Cases: NLP can automatically create test cases that directly correspond to specific requirements in the document.

- Identify Untested Areas: NLP can pinpoint areas lacking coverage by analyzing the requirements and comparing them to existing test cases.
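Mapping requirements to test cases and flagging untested ones can be sketched with simple lexical matching. Token overlap (Jaccard similarity) after stop-word removal is used here as a stand-in for real NLP, which would use parsing or embeddings; the stop-word list, threshold, and IDs are hypothetical.

```python
import re

# Hypothetical stop-word list; real NLP pipelines use much richer preprocessing.
STOPWORDS = {"the", "a", "an", "can", "to", "via", "as", "of", "and", "user",
             "users", "should", "be", "is", "with", "on", "in", "by"}


def tokens(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def map_requirements(requirements: dict[str, str], tests: dict[str, str],
                     threshold: float = 0.3) -> tuple[dict[str, list[str]], list[str]]:
    """Return (requirement -> matching test IDs, requirements with no matching test)."""
    mapping = {}
    for req_id, req_text in requirements.items():
        req_tokens = tokens(req_text)
        mapping[req_id] = [t_id for t_id, t_text in tests.items()
                           if jaccard(req_tokens, tokens(t_text)) >= threshold]
    untested = [req_id for req_id, matched in mapping.items() if not matched]
    return mapping, untested


requirements = {
    "REQ-1": "User can reset password via email",
    "REQ-2": "User can export report as PDF",
}
tests = {"TC-7": "Reset password using email link"}
mapping, untested = map_requirements(requirements, tests)
print(mapping, untested)
```

Lexical matching misses paraphrases ("recover account" vs "reset password"), which is exactly the gap more capable NLP models aim to close.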

Benefits

- More Accurate Test Coverage: NLP ensures a more precise and comprehensive translation of requirements into testable scenarios. This minimizes the risk of missing critical functionalities during testing.

- Improved Efficiency: NLP automates some aspects of test case creation, freeing up QA resources to focus on more complex and exploratory testing activities.

AI-Based Continuous Feedback Loop

The AI-based continuous feedback loop transforms testing from a static process into a dynamic and ever-evolving one. This allows QA teams to stay ahead of the curve, optimize their testing efforts, and deliver high-quality software more efficiently. Read: What is Continuous Testing?

Purpose

- Evolving Testing Strategies: This feedback loop utilizes AI to analyze the results of each testing cycle. By uncovering patterns and anomalies in the data, the AI can identify areas where testing strategies can be improved for better effectiveness and efficiency.

Implementation

- AI Analyzes Test Outcomes: AI tools continuously ingest data from test results. This data might include details like passed and failed tests, defect types, and execution times. By analyzing this data, the AI can surface insights that humans might miss.

- Data-Driven Recommendations: Based on its analysis, the AI suggests changes to your testing approach. These recommendations could involve:

- Prioritizing tests based on real-time data on defect likelihood

- Identifying areas requiring additional test coverage

- Refining existing test cases for improved effectiveness
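A rule-based sketch of the analysis step: it turns raw run records into per-test recommendations (flaky, frequently failing, slow). An AI-based system would learn such patterns rather than hard-code them; the record keys and the 60-second threshold are hypothetical.

```python
from collections import defaultdict
from statistics import median


def analyze_runs(runs: list[dict], slow_seconds: float = 60.0) -> dict[str, list[str]]:
    """Turn test-run records (keys: test, commit, passed, seconds) into recommendations."""
    by_test = defaultdict(list)
    for run in runs:
        by_test[run["test"]].append(run)

    recommendations = {}
    for name, test_runs in by_test.items():
        notes = []
        # Same commit, different outcomes: the test itself is unreliable.
        outcomes = defaultdict(set)
        for run in test_runs:
            outcomes[run["commit"]].add(run["passed"])
        if any(len(seen) > 1 for seen in outcomes.values()):
            notes.append("flaky: stabilize before trusting its failures")
        fail_rate = sum(not run["passed"] for run in test_runs) / len(test_runs)
        if fail_rate > 0:
            notes.append(f"failure rate {fail_rate:.0%}: consider running it earlier")
        if median(run["seconds"] for run in test_runs) > slow_seconds:
            notes.append("slow: consider splitting or parallelizing")
        if notes:
            recommendations[name] = notes
    return recommendations


runs = [
    {"test": "login", "commit": "a1", "passed": True, "seconds": 5},
    {"test": "login", "commit": "a1", "passed": False, "seconds": 6},
    {"test": "search", "commit": "a1", "passed": True, "seconds": 120},
    {"test": "profile", "commit": "a1", "passed": True, "seconds": 3},
]
recs = analyze_runs(runs)
for name, notes in recs.items():
    print(name, notes)
```

Running this after every cycle and feeding the output back into prioritization is what closes the loop.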

Benefits

- Dynamically Adapting Testing Processes: The continuous feedback loop allows your testing strategy to adapt and evolve in real time based on collected data. This ensures your testing remains relevant and practical throughout the entire development lifecycle. Understand more about the Software Testing Life Cycle.

- Ongoing Improvement in Test Quality and Effectiveness: By constantly learning from each testing cycle, the AI helps to refine your testing approach, ultimately leading to higher quality testing and a more robust software product.

AI-Enhanced Performance and Load Testing

AI-enhanced performance and load testing empower QA teams to move beyond generic testing scenarios. By creating realistic user behavior simulations, AI helps identify and address potential performance issues before they impact real users. This leads to a more robust and scalable software product that can handle even the most demanding user scenarios.

Purpose

- Unveiling Real-World Performance: Traditional testing methods might not fully capture the nuances of user behavior. AI steps in to bridge this gap. It can generate highly realistic load and performance test scenarios that mimic user interactions by analyzing user behavior patterns.

Implementation

AI algorithms analyze historical user data to understand usage patterns and peak loads. This information is then used to:

- Generate Realistic Test Scenarios: AI can automatically create test scenarios that simulate real-world user behavior patterns, including login times, browsing behaviors, and concurrent user interactions.

- Dynamic Load Adjustment: AI doesn’t stop at creating scenarios. It can also analyze system responses during the test and dynamically adjust the load to simulate sudden surges or peak usage periods.
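The two steps above can be sketched as: derive an hourly load shape from historical access-log timestamps, scale it with a surge factor, and then step load up while p95 latency stays within an SLO. This rule-based loop stands in for AI-driven adjustment. `measure_p95_ms` is a hypothetical hook that would wrap a short run of your load-testing tool; here a simple function simulates it.

```python
import math
from collections import Counter
from datetime import datetime
from typing import Callable, Optional


def hourly_profile(timestamps: list[datetime]) -> list[int]:
    """Requests per hour of day (0-23) from historical access-log timestamps."""
    counts = Counter(ts.hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


def load_plan(profile: list[int], peak_users: int, surge: float = 1.5) -> list[int]:
    """Scale the observed shape so the busiest hour gets peak_users * surge users."""
    busiest = max(profile) or 1
    return [math.ceil(peak_users * surge * count / busiest) for count in profile]


def find_capacity(measure_p95_ms: Callable[[int], float], slo_ms: float,
                  start: int = 10, step: int = 10, max_users: int = 10_000) -> Optional[int]:
    """Raise concurrent users while p95 latency meets the SLO.

    Returns the highest user count that met the SLO, or None if even `start` failed.
    """
    users, last_ok = start, None
    while users <= max_users and measure_p95_ms(users) <= slo_ms:
        last_ok = users
        users += step
    return last_ok


logs = [datetime(2024, 6, 1, 9), datetime(2024, 6, 1, 9, 30),
        datetime(2024, 6, 2, 9, 15), datetime(2024, 6, 1, 14)]
profile = hourly_profile(logs)
plan = load_plan(profile, peak_users=100)
simulated_p95 = lambda users: 50 + 2 * users  # hypothetical latency model (ms)
print(plan[9], plan[14], find_capacity(simulated_p95, slo_ms=300))
```

Basing the plan on observed traffic keeps the test realistic, while the surge factor deliberately pushes beyond the historical peak.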

Benefits

- More Precise Performance Testing: AI ensures your performance testing accurately reflects real-world conditions. This helps identify potential bottlenecks and performance issues that traditional testing methods might miss.

- Meaningful Insights for Optimization: AI provides valuable insights into how the application will perform under pressure by simulating realistic user loads. This allows for targeted optimization efforts to ensure peak performance when it matters most.

Intelligent and Efficient Tools for QA

Intelligent testing tools equipped with AI capabilities are revolutionizing the world of QA. By simplifying the process, boosting automation, and leveraging AI for smarter testing, tools like testRigor empower QA teams to deliver high-quality results more efficiently.

Purpose

- Reduce Manual Workload: Forget spending hours coding or debugging intricate test scripts. Tools like testRigor leverage AI to simplify test creation. Write end-to-end tests quickly in plain English, focusing on functionalities rather than complex code syntax.

- Seamless Integration: Modern testing tools integrate seamlessly with your existing workflows. testRigor integrates with CI/CD pipelines, test management tools, and infrastructure providers, ensuring a smooth and efficient testing cycle.

- Automate Complexities: Don’t let intricate scenarios slow you down. testRigor allows you to automate complex interactions such as 2FA, QR code scans, CAPTCHAs, email/SMS workflows, table handling, phone calls, file uploads, audio/video elements, database interactions, and data-driven testing, all with plain English commands.

Implementation

- Plain English Power: With testRigor, you can write tests in a natural, human-readable language. This eliminates the need for extensive coding expertise, making test automation accessible to a broader range of team members, even those who are not QA specialists.

- AI-Driven Efficiency: Beyond simplifying test writing, AI also performs the following tasks:

- Automate Test Case Generation: AI can analyze user stories and requirements, suggest relevant test cases, automate repetitive tasks, and free your team to focus on higher-level testing strategies.

- Identify Potential Failure Areas: Leverage AI’s analytical power to pinpoint areas where failures might occur. This allows for proactive testing and minimizes the risk of critical bugs slipping through the cracks.

- Optimize Test Coverage: AI can analyze historical testing data and identify gaps in your testing strategy. Based on this analysis, it can suggest additional tests to ensure comprehensive coverage of all functionalities.

Benefits

- Streamlined Testing Process: Intelligent tools like testRigor simplify test creation, automate complex scenarios, and integrate seamlessly with existing workflows. This results in a more simplified and efficient testing process.

- Improved Team Collaboration: Plain English testing allows team members with varying technical backgrounds to contribute to test automation. This fosters collaboration and ensures everyone is aligned on testing objectives.

- Enhanced Test Quality: AI-driven tools help automate routine tasks, identify potential issues, and optimize test coverage. This ultimately leads to a higher quality of testing with greater efficiency.

- Faster Time to Market: Intelligent tools can significantly reduce testing time by streamlining testing and automating complex scenarios. This allows for faster release cycles and quicker time to market.

Conclusion

Leveraging AI to enhance QA engineering productivity represents a transformative shift in how software testing is approached and executed. Tools like testRigor use AI to automate complex tasks, ranging from test case generation to visual validation, thereby increasing the speed and accuracy of test processes.

By employing AI-driven test execution and optimization, QA teams can focus on high-impact areas, significantly reducing time-to-market while maintaining high-quality standards. Predictive analytics further enable preemptive identification of potential defects, allowing for more targeted and effective testing efforts. Moreover, natural language processing aids in refining requirements to ensure clarity and testability from the outset.

Frequently Asked Questions (FAQs)

Can testRigor handle CAPTCHAs?

Yes. With testRigor, you can handle image-to-text CAPTCHAs as well as Google reCAPTCHA v2 and v3. You can read this how-to guide, which explains the procedure step by step.

How does a traditional SDLC model affect QA productivity?

QA productivity in traditional SDLC models like Waterfall can be hampered by the model’s rigidity and the late stage at which testing is conducted. Issues discovered late in the process are more complex and costly to resolve, leading to inefficiencies and delays.
