ATDD and TDD: Key Dos and Don’ts for Successful Development


Acceptance Test-Driven Development (ATDD) and Test-Driven Development (TDD) are powerful methodologies that can significantly improve the quality and efficiency of software development. However, like any methodology, they require careful implementation to reap their full benefits.

This blog post will delve into the key dos and don’ts of ATDD and TDD, based on “Clean Code and Clean ATDD/TDD Cheat Sheet With Dos and Don’ts” by Urs Enzler.

Let’s get started.

Types of Test Methodologies

Software development methodologies have evolved over time to address different challenges and improve collaboration, quality, and efficiency. From Waterfall to Agile, and now DevOps and CI/CD, there are many ways to get the job done. But let’s talk about the test methodologies that are widely used to weave development and testing into the same fabric.

Here are the common types of methodologies that are used:

| Methodology | Explanation | Example |

|---|---|---|

| Test-Driven Development (TDD) | You write tests before writing the actual code. | Before writing a calculateTax() function, you first create tests to verify it calculates taxes correctly. |

| Acceptance Test-Driven Development (ATDD) | You write acceptance tests based on user requirements before writing any code. | Writing a test to verify that “a user can log in with a valid username and password.” |

| Behavior-Driven Development (BDD) | An extension of TDD focused on the behavior of the system, written in plain language. | “Given the cart is empty, When the user adds a product, Then the cart shows 1 item.” |

| Specification-Driven Development (SDD) | A methodology where specifications or documentation drive the development process. | Writing detailed specs for an API, including its inputs, outputs, and behavior. |

| Defect Driven Testing (DDT) | Tests are written to reproduce and fix bugs that have been found in the code. Once a test is written to reproduce the defect, the bug is fixed, and the test ensures it doesn’t reappear. | If a bug in user authentication was found and fixed, DDT would specifically test that area to ensure the issue is resolved and doesn’t recur. |

| Plain Old Unit Testing (POUTing) | It’s a straightforward approach to writing unit tests without relying on elaborate frameworks or techniques. | Testing a calculateTotal() function independently to verify it returns the correct sum. |

Read in-depth about these methodologies over here:

- Acceptance Test Driven Development (ATDD)

- What is BDD 2.0 (SDD)?

- What is Test Driven Development? TDD vs. BDD vs. SDD

- TDD vs BDD – What’s the Difference Between TDD and BDD?

- What is Behavior Driven Development (BDD)? Everything You Should Know

In the sections below, we will focus on how to get TDD and ATDD right.

What is TDD?

Test-Driven Development (TDD) is a programming technique where you write tests before writing the actual code. It ensures your code works as intended right from the start, which makes it easier to maintain, debug, and extend.

How TDD Works

TDD follows a simple, repeatable cycle called Red-Green-Refactor:

- Red: Write a Failing Test

  - Write a small test for a specific feature or function you plan to implement.

  - The test will fail initially because there’s no code yet to make it pass.

  - This step defines the expected behavior of your code.

  Example: You want to create a function that calculates the sum of two numbers:

      def test_add_numbers():
          assert add(2, 3) == 5  # This test will fail because `add` doesn't exist yet

- Green: Write Just Enough Code to Pass the Test

  - Write the minimum amount of code required to make the test pass.

  - Don’t worry about perfection at this stage – just get it working.

  - It ensures your code is functional.

  Example: Implement the add function:

      def add(a, b):
          return a + b

  Now the test will pass:

      assert add(2, 3) == 5

- Refactor: Improve the Code

  - Refactor the code to make it cleaner, more efficient, or more readable without changing its behavior.

  - Run the test again to ensure everything still works.

  - This step keeps your codebase maintainable.

  Example: If the add function had unnecessary complexity, simplify it while keeping the test green.
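To make the refactor step more concrete, here is a minimal sketch. The `total_price` function and its tests are hypothetical names chosen for illustration; they are not taken from the cheat sheet. The point is only that the tests stay unchanged and green while the implementation gets simpler.

```python
# Hypothetical example: `total_price` is an illustrative name, not from the source.

# Green-phase version: it works, but it is more complicated than necessary.
def total_price_before(prices):
    total = 0
    for index in range(len(prices)):
        total = total + prices[index]
    return total


# Refactored version: same behavior, expressed with the built-in sum().
def total_price(prices):
    return sum(prices)


# The tests are written once and are not touched during the refactoring.
def test_total_price_adds_all_prices():
    assert total_price([2, 3, 5]) == 10


def test_total_price_of_empty_list_is_zero():
    assert total_price([]) == 0
```

If a test turns red after a change like this, the change altered behavior and was not a pure refactoring.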

TDD Principles

Here are some principles to help you make the most out of TDD.

General TDD Principles

| | Principle | Explanation |

|---|---|---|

| ✅ | A Test Checks One Feature | A test checks exactly one feature of the testee. That means it tests everything included in this feature, but nothing more. This probably involves more than one call to the testee. This way, the tests serve as samples and documentation of the testee’s usage. |

| ✅ | Tiny Steps | Make tiny little steps. Add only a little code to the test before writing the required production code. Then repeat. Add only one Assert per step. |

| ✅ | Keep Tests Simple | Whenever a test gets complicated, check whether you can split the testee into several classes (Single Responsibility Principle). |

| ✅ | Prefer State Verification to Behaviour Verification | Use behavior verification only if there is no state to verify. Refactoring is easier due to less coupling to implementation. |

| ✅ | Test Domain-Specific Language (DSL) | Use test DSLs to simplify reading tests, builders to create test data using fluent APIs, and assertion helpers for concise assertions. |
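As one possible illustration of the test DSL principle, the sketch below uses a small test data builder with a fluent API. The `Order` and `OrderBuilder` classes are assumptions made up for this example; the idea is that the test names only the values it cares about and lets the builder supply defaults for the rest.

```python
from dataclasses import dataclass


# Hypothetical domain class used only for this illustration.
@dataclass
class Order:
    customer: str
    quantity: int
    unit_price: float

    def total(self):
        return self.quantity * self.unit_price


class OrderBuilder:
    """Fluent builder with defaults so each test states only what matters to it."""

    def __init__(self):
        self._customer = "any customer"
        self._quantity = 1
        self._unit_price = 10.0

    def with_quantity(self, quantity):
        self._quantity = quantity
        return self  # returning self enables method chaining

    def with_unit_price(self, unit_price):
        self._unit_price = unit_price
        return self

    def build(self):
        return Order(self._customer, self._quantity, self._unit_price)


def test_total_is_quantity_times_unit_price():
    order = OrderBuilder().with_quantity(3).with_unit_price(2.5).build()
    # State verification: check the resulting value, not how it was computed.
    assert order.total() == 7.5
```

The customer is irrelevant to this test, so it never appears in the test body.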

Red Bar Patterns

These patterns occur when tests are failing, typically in the “Red” phase of TDD. This phase helps identify the problem you’re solving and ensures your test setup is meaningful.

| | Pattern | Explanation |

|---|---|---|

| ✅ | One Step Test | Pick a test you are confident you can implement that maximizes the learning effect (e.g., impact on design). |

| ✅ | Partial Test | Write a test that does not fully check the required behavior, but brings you a step closer to it. Then use Extend Test below. |

| ✅ | Extend Test | Extend an existing test to better match real-world scenarios. |

| ✅ | Another Test | If you think of new tests, then write them on the TO DO list, and don’t lose focus on the current test. |

| ✅ | Learning Test | Write tests against external components to make sure they behave as expected. |
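A Learning Test can be as small as the sketch below, which pins down two behaviors of Python’s standard `json` module that your own code might rely on. The choice of `json` as the “external component” is just an assumption for the example; in practice you would target whichever library or service you depend on.

```python
import json


def test_json_dumps_sorts_keys_when_asked():
    # Learning test: confirms our assumption about key ordering and spacing.
    assert json.dumps({"b": 1, "a": 2}, sort_keys=True) == '{"a": 2, "b": 1}'


def test_json_round_trip_turns_tuples_into_lists():
    # JSON has no tuple type, so a round trip does not preserve tuples.
    assert json.loads(json.dumps((1, 2))) == [1, 2]
```

If an upgrade of the component changes one of these behaviors, the learning test fails before your own code does.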

Green Bar Patterns

These patterns occur when tests are passing, typically in the “Green” phase. This phase focuses on ensuring the implementation satisfies the test, often with minimal effort.

| | Pattern | Explanation |

|---|---|---|

| ✅ | Fake It (‘Til You Make It) | Return a constant to get the first test running. Refactor later. |

| ✅ | Triangulate – Drive Abstraction | Write a test with at least two sets of sample data, then generalize the implementation from them. |

| ✅ | Obvious Implementation | If the implementation is obvious, then just implement it and see if the test runs. If not, then step back and just get the test running and refactor it. |

| ✅ | One to Many – Drive Collection Operations | First, implement operation for a single element. Then, step to several elements (and no element). |
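The first two patterns in the table can be sketched together. The `square` function is a hypothetical example, not something from the cheat sheet: a constant is enough for the first test (Fake It), and a second example forces the real implementation (Triangulate).

```python
# Step 1 (Fake It): a hard-coded constant is enough for the first example.
def square_fake(n):
    return 9  # passes for n == 3 only


# Step 2 (Triangulate): the second example below cannot be satisfied
# by a constant, so the implementation has to generalize.
def square(n):
    return n * n


def test_square_of_three():
    assert square(3) == 9


def test_square_of_four():
    assert square(4) == 16
```

For something as simple as `square`, Obvious Implementation would be just as reasonable; faking and triangulating pay off when the general solution is not yet clear.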

TDD Process Smells

In Test-Driven Development (TDD), process smells are signs that something is wrong with how you’re applying the TDD methodology. They don’t necessarily break your code, but they indicate that you might not be following best practices, which can lead to poor-quality tests or code in the long run.

Here are some telltale signs of TDD process smells:

- Using Code Coverage as a Goal: Code coverage is useful for finding missing tests, but it is a poor target. Chasing a coverage number tends to produce tests that raise coverage without raising certainty.

- No Green Bar in the last ~10 Minutes: Take small steps to get feedback as fast and as frequently as possible.

- Not Running Test Before Writing Production Code: New production code is required only if the test fails. If the test unexpectedly passes, check that the test itself is correct.

- Not Spending Enough Time on Refactoring: Refactoring is an investment in the future. Readability, changeability, and extensibility will pay back.

- Skipping Something Too Easy to Test: Don’t assume. Check it. If it is easy, then the test is even easier.

- Skipping Something Too Hard to Test: Make it simpler. Otherwise, bugs will hide, and maintainability will suffer.

- Organizing Tests around Methods, Not Behaviour: These tests are brittle and refactoring killers. Test complete “mini” use cases in a way that reflects how the feature will be used in the real world. Do not test setters and getters in isolation. Test the scenario they are used in.
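The last smell is easier to see in code. In this sketch, the `Cart` class is a hypothetical testee; the tests are named after small use cases rather than after `add` or `item_count`, so they survive a change in how the cart stores its data.

```python
# Hypothetical testee used only for this illustration.
class Cart:
    def __init__(self):
        self._quantities = {}

    def add(self, product, quantity=1):
        self._quantities[product] = self._quantities.get(product, 0) + quantity

    def item_count(self):
        return sum(self._quantities.values())


# Each test is a "mini" use case named after what the user observes.
def test_new_cart_is_empty():
    assert Cart().item_count() == 0


def test_adding_the_same_product_twice_accumulates_quantity():
    cart = Cart()
    cart.add("book")
    cart.add("book", quantity=2)
    assert cart.item_count() == 3
```

Neither test looks at `_quantities`, so the internal dictionary could be replaced by a list without touching the tests.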

TDD and Unit Testing

In TDD, the tests you write are typically unit tests because they focus on testing small, isolated parts of the code. While unit testing checks if the code works, TDD ensures you think about how the code should behave before you write it. This leads to better design and fewer bugs.

Read in-depth about unit testing over here:

- Unit Testing: Best Practices for Efficient Code Validation

- Integration Tests vs Unit Tests: What Are They And Which One to Use?

Unit Testing Principles

| | Principle | Explanation |

|---|---|---|

| ✅ | Fast | Unit tests have to be fast in order to be executed often. Fast means well under a second per test. |

| ✅ | Isolated | Isolated testee: It is clear where the failure happened. Isolated test: No dependency between tests (they can run in random order). |

| ✅ | Repeatable | No assumed initial state, nothing left behind, no dependency on external services that might be unavailable (databases, file system …). |

| ✅ | Self-Validating | No manual test interpretation or intervention. Red or green! |

| ✅ | Timely | Tests are written at the right time (TDD, DDT, POUTing) |
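One common way to keep a test Repeatable and Isolated is to pass in anything that varies between runs, such as the current time. The `greeting` function below is a hypothetical example; it assumes the caller supplies the clock reading instead of the function calling `datetime.now()` itself.

```python
from datetime import datetime


def greeting(now):
    """Return a greeting for the given time; the caller supplies the clock reading."""
    return "Good morning" if now.hour < 12 else "Good afternoon"


# The tests give the same result at any time of day and in any order.
def test_greeting_before_noon():
    assert greeting(datetime(2024, 1, 1, 9, 0)) == "Good morning"


def test_greeting_after_noon():
    assert greeting(datetime(2024, 1, 1, 15, 0)) == "Good afternoon"
```

Production code would call `greeting(datetime.now())`, which keeps the dependency on the real clock at the edge of the system.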

Faking in Unit Tests

Test doubles such as stubs, fakes, spies, and mocks are widely used in unit testing so that the testee can run in isolation. Here are some do’s and don’ts to manage them.

| Do’s ✅ | Don’ts ❌ |

|---|---|

| Isolation from environment: Use fakes to simulate all dependencies of the testee. | Mixing Stubbing and Expectation Declaration: Follow the AAA (arrange, act, assert) pattern when using fakes. Don’t mix setting up stubs (so that the testee can run) with expectations (on what the testee should do) in the same code block. |

| Faking Framework: Use a dynamic fake framework for fakes that show different behavior in different test scenarios (little behavior reuse). | Checking Fakes instead of Testee: Tests that do not check the testee but values returned by fakes. Normally, it is due to excessive fake usage. |

| Manually Written Fakes: Use manually written fakes when they can be used in several tests and their behavior changes only slightly between these scenarios (behavior reuse). | Excessive Fake Usage: If your test needs a lot of fakes or fake setup, then consider splitting the testee into several classes or providing an additional abstraction between your testee and its dependencies. |
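Here is a minimal sketch of keeping stubbing and expectations apart, using `unittest.mock` from the Python standard library as the dynamic fake framework. `SignupService`, its repository, and its mailer are hypothetical names invented for this example.

```python
from unittest.mock import Mock


# Hypothetical testee with two dependencies that are faked in the test.
class SignupService:
    def __init__(self, user_repository, mailer):
        self._users = user_repository
        self._mailer = mailer

    def sign_up(self, email):
        if self._users.exists(email):
            return False
        self._users.add(email)
        self._mailer.send_welcome(email)
        return True


def test_new_user_receives_welcome_mail():
    # Arrange: stubbing only, so that the testee can run.
    user_repository = Mock()
    user_repository.exists.return_value = False
    mailer = Mock()
    service = SignupService(user_repository, mailer)

    # Act
    result = service.sign_up("ada@example.com")

    # Assert: expectations live here, separate from the stub setup.
    assert result is True
    mailer.send_welcome.assert_called_once_with("ada@example.com")
```

The assertions check what the testee returned and did, not the value that the stubbed `exists` call was told to return.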

Unit Test Smells

Unit test smells tell you that something is wrong with the way you’ve implemented the unit tests. While the system might continue to work, you might observe some of the following signs:

- Test Not Testing Anything: A passing test that at first sight appears valid but does not test the testee.

- Test Needing Excessive Setup: A test that needs dozens of lines of code to set up its environment. This noise makes it difficult to see what is really tested.

- Too Large Test / Assertions for Multiple Scenarios: A valid test that is, however, too large. Reasons can be that this test checks for more than one feature or the testee does more than one thing (violation of the Single Responsibility Principle).

- Checking Internals: A test that directly accesses the internals (private/protected members) of the testee (Reflection). This is a refactoring killer.

- Test Only Running on Developer’s Machine: A test that is dependent on the development environment and fails elsewhere. Use continuous integration to catch them as soon as possible.

- Test Checking More than Necessary: A test that checks more than it is dedicated to. The test fails whenever something changes that it checks unnecessarily. Especially probable when fakes are involved or when checking for item orders in unordered collections.

- Irrelevant Information: The test contains information that is not relevant to understand it.

- Chatty Test: A test that fills the console with text – probably used once to check for something manually.

- Test Swallowing Exceptions: A test that catches exceptions and lets the test pass.

- Test Not Belonging in Host Test Fixture: A test that tests a completely different testee than all other tests in the fixture.

- Obsolete Test: A test that checks something no longer required in the system. It may even prevent the clean-up of production code because it is still referenced.

- Hidden Test Functionality: Test functionality is hidden in either the SetUp method, base class, or helper class. The test should be clear by looking at the test method only – no initialization or assertions somewhere else.

- Bloated Construction: The construction of dependencies and arguments used in calls to the testee makes the test hard to read. Extract the construction into helper methods that can be reused.

- Unclear Fail Reason: Split test or use assertion messages.

- Conditional Test Logic: Tests should not have any conditional test logic because it’s hard to read.

- Test Logic in Production Code: Tests depend on special logic in production code.

- Erratic Test: The test sometimes passes and sometimes fails due to leftovers or the environment.
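Some of these smells are easier to recognize in code. The sketch below shows Test Swallowing Exceptions next to a corrected version. The `parse_age` function is a hypothetical testee, and the example sticks to plain Python so it does not assume any particular test framework.

```python
# Hypothetical testee used only for this illustration.
def parse_age(text):
    age = int(text)  # raises ValueError for non-numeric input
    if age < 0:
        raise ValueError("age must not be negative")
    return age


# Smell: the except clause swallows every failure, so this test can never go red.
def test_parse_age_swallowing():
    try:
        assert parse_age("42") == 41  # wrong expectation, yet the test "passes"
    except Exception:
        pass


# Better: unexpected exceptions fail the test on their own.
def test_parse_age_returns_number():
    assert parse_age("42") == 42


# Better: an expected exception is asserted explicitly.
def test_parse_age_rejects_negative_values():
    try:
        parse_age("-1")
    except ValueError:
        return  # the expected outcome
    raise AssertionError("expected ValueError for a negative age")
```

If you use pytest, `pytest.raises(ValueError)` expresses the last test more compactly and without a try block.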

What is ATDD?

ATDD (Acceptance Test-Driven Development) is a software development approach where you write acceptance tests before you start coding. These tests define what the software should do from the user’s perspective to ensure it meets the needs of the business and stakeholders.

In simpler terms, it’s like agreeing on a checklist with everyone (developers, testers, and business people) to define what “success” looks like before building something.

How ATDD Works

- Collaborate to Define Acceptance Tests

  - Developers, testers, and business stakeholders work together to define acceptance tests. These are examples of how the software should behave in specific situations.

  - The tests are written in simple, human-readable language so everyone understands them.

- Write Tests First

  - These acceptance tests are written before any code is developed, often using tools like Cucumber or SpecFlow that support plain-language test definitions.

- Implement Code to Pass the Tests

  - Developers write the code to make these tests pass, ensuring the software meets the agreed-upon requirements.

- Verify the Tests Pass

  - Run the tests to confirm the functionality works as expected. If a test fails, the code is adjusted until all tests pass.
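The steps above can be sketched in plain Python for the login criterion mentioned earlier (“a user can log in with a valid username and password”). This is only an illustration under assumptions: `LoginService` is a hypothetical class, and a real team would more likely express the criterion in a tool such as Cucumber or SpecFlow. The Given/When/Then comments carry the wording agreed with the stakeholders.

```python
# Hypothetical production code, written only after the acceptance tests below.
class LoginService:
    def __init__(self):
        # Plain-text storage keeps the sketch short; do not do this in real code.
        self._passwords = {}

    def register(self, username, password):
        self._passwords[username] = password

    def log_in(self, username, password):
        return username in self._passwords and self._passwords[username] == password


def test_user_can_log_in_with_valid_credentials():
    # Given a registered user
    service = LoginService()
    service.register("ada", "s3cret")
    # When the user logs in with the correct password
    logged_in = service.log_in("ada", "s3cret")
    # Then the login succeeds
    assert logged_in


def test_user_cannot_log_in_with_wrong_password():
    # Given a registered user
    service = LoginService()
    service.register("ada", "s3cret")
    # When the user logs in with a wrong password
    logged_in = service.log_in("ada", "guess")
    # Then the login is rejected
    assert not logged_in
```

Both tests exist and fail before `LoginService` is written; the class is then filled in until they pass.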

Tips for Better ATDD

| | Tip | Explanation |

|---|---|---|

| ✅ | Use Acceptance Tests to Drive Your TDD Tests | Acceptance tests check for the required functionality. Let them guide your TDD. |

| ✅ | User Feature Test | An acceptance test is a test for a complete user feature from top to bottom that provides business value. |

| ✅ | Automated ATDD | Use automated Acceptance Test Driven Development for regression testing and executable specifications. |

| ✅ | Component Acceptance Tests | Write acceptance tests for individual components or subsystems so that these parts can be combined freely without losing test coverage. |

| ✅ | Simulate System Boundaries | Simulate system boundaries like the user interface, databases, file system, and external services to speed up your acceptance tests and to be able to check exceptional cases (e.g., a full hard disk). Use system tests to check the boundaries. |

| ❌ | Avoid Acceptance Test Spree | Do not write acceptance tests for every possibility. Write acceptance tests only for real scenarios. The exceptional and theoretical cases can be covered more easily with unit tests. |
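As a rough sketch of simulating a system boundary, the example below replaces the file system with two small fakes, one of which behaves like a full hard disk. All names (`export_report`, `InMemoryStorage`, `FullDiskStorage`) are hypothetical and exist only for this illustration.

```python
class InMemoryStorage:
    """Fake storage boundary that keeps files in a dictionary."""

    def __init__(self):
        self.files = {}

    def save(self, name, data):
        self.files[name] = data


class FullDiskStorage:
    """Fake storage boundary that behaves like a full hard disk."""

    def save(self, name, data):
        raise OSError(28, "No space left on device")


def export_report(storage, lines):
    """Save the report and return a user-facing status message."""
    try:
        storage.save("report.txt", "\n".join(lines))
    except OSError:
        return "Export failed: could not write the report"
    return "Export complete"


def test_report_is_exported():
    storage = InMemoryStorage()
    assert export_report(storage, ["a", "b"]) == "Export complete"
    assert storage.files["report.txt"] == "a\nb"


def test_user_is_told_when_the_disk_is_full():
    message = export_report(FullDiskStorage(), ["a", "b"])
    assert message == "Export failed: could not write the report"
```

A full disk is hard to provoke against a real file system, which is why this case is checked against a fake while separate system tests cover the real boundary.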

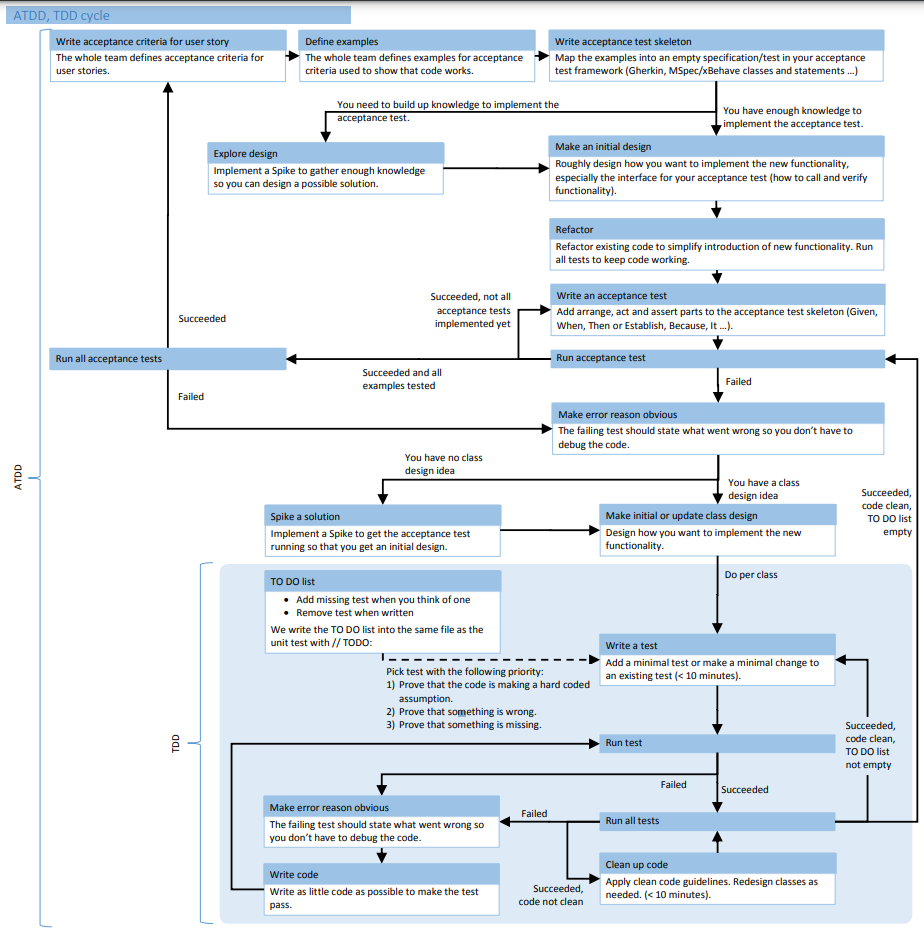

TDD and ATDD Cycle

The below flowchart is from Urs Enzler’s ‘Clean ATDD and TDD cheat sheet‘, and it shows how TDD and ATDD are linked.

Tools to Automate Testing

The tools you choose to automate testing play a huge role in the effectiveness of QA. You might already be using tools like JUnit, Jest, Cucumber, and Mockito, and you can opt for smarter alternatives. You can skip the hassle of depending on multiple tools to translate requirements into test cases and then into automation test scripts. AI-based tools like testRigor let you write specifications directly as test scripts. Here’s how this tool makes testing more efficient and suitable for Agile environments:

- Directly write acceptance tests as test scripts. testRigor uses generative AI and NLP to let you write plain English commands that it then runs. The system bypasses the need for implementation-based UI element recognition (XPaths or CSS). Simply write what you see on the screen and where you saw it. For example, you can mention clicking on a button that appears to the left of another button in this way – click on “Login” to the left of “Profile”. Apart from writing these test cases yourself, you can use the other features that testRigor offers to quickly create test cases, like the record-and-playback tool, auto-generating test cases using generative AI, and live mode.

- testRigor offers reduced test maintenance by not relying on UI element locators. The tool also uses AI to reduce flakiness and test execution time.

- Integrate with other platforms and services to build a broader testing ecosystem.

Thus, with testRigor, you can automate your acceptance testing across platforms and browsers in no time. All this while involving your entire team in the process. Here’s an exhaustive list of the tool’s features.

Conclusion

Embracing ATDD and TDD isn’t just about writing better code – it’s about building software that meets both technical and user expectations seamlessly. By following the above-mentioned tips and using test automation wherever possible, you can ensure that your software application is of top quality.
