What is Explainable AI (XAI)?


As smart as AI is, its behavior can be hard to make sense of at times. Maybe you asked Alexa to tell you a recipe for apple pie. But poor Alexa, diligent as she may be, misinterpreted the word “tell” and just brought up videos of apple pie, when you wanted her to read the recipe aloud while your hands were deep in flour. While this is a trivial case of AI doing something unexpected, you’ll see the same kind of behavior across industries that use AI, such as criminal justice, healthcare, and manufacturing.

We need some way to know why AI did what it did, especially when an instruction that makes sense to us somehow doesn’t make sense to the AI model. This brings us to Explainable AI, whose purpose is to make AI more transparent and trustworthy. Let’s learn more about it.

Key Takeaways:

- What XAI (Explainable AI) is and why you need it.

- How Explainable AI methods work.

- Which Explainable AI methods are popular today.

- How you can use XAI and AI agents in QA.

What is XAI?

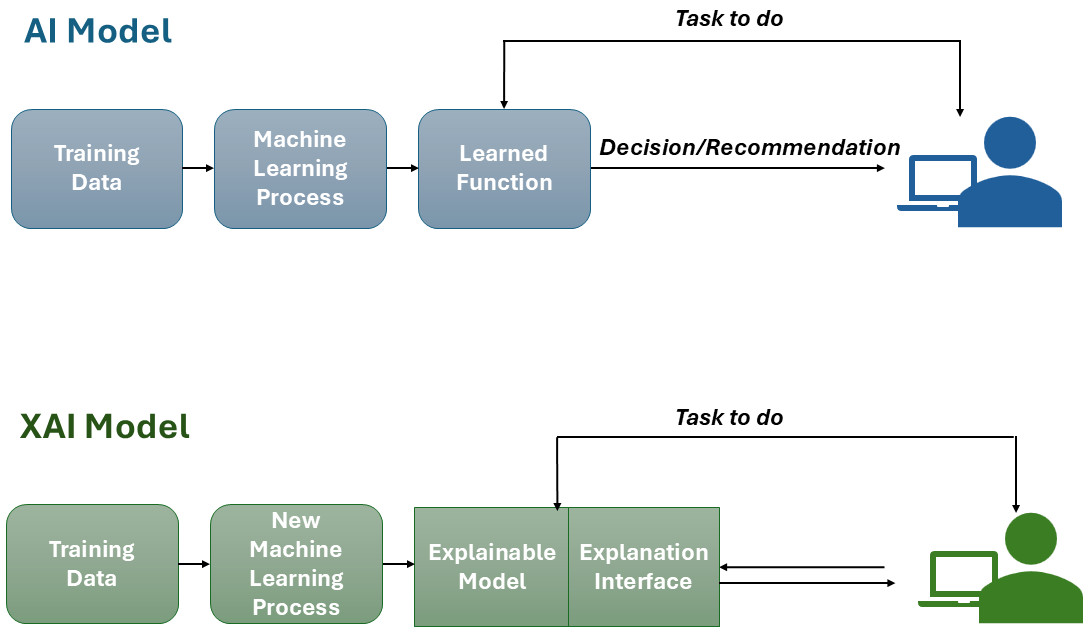

The problem with many powerful AI models today is that they’re like “black boxes.” They give you an answer, but you can’t see or understand how they got there. Explainable AI (XAI) is a way to make AI systems more transparent. It helps humans understand how AI makes decisions, in language or visuals that we can grasp. With XAI, the AI doesn’t just give you a result – it also tells you why it made that choice.

Let’s say an AI says, “You don’t qualify for a loan”.

- Without XAI: You just get rejected. No idea why.

- With XAI: It tells you, “Your credit score is too low, and your income is below our threshold.”

That way, you know the reasoning, and you might even be able to improve your chances next time.

Why do we Need Explainable AI?

There are many reasons you need to know why an AI did what it did:

- Trust: Explainable AI helps build trust by showing the reasoning behind a decision in a way people can understand. When we know why something happened, we’re more likely to accept it.

- Accountability: In high-stakes situations, like medical diagnoses or self-driving cars, AI mistakes can be serious. If something goes wrong, we need to be able to ask: What went wrong and why? XAI makes it possible to trace decisions, hold systems (and their creators) accountable, and fix errors.

- Fairness: AI can sometimes learn patterns from data that are biased or unfair. For example, if an AI system is biased against certain groups when hiring or giving out loans, it can quietly keep making unfair decisions. Explainable AI shines a light on those biases. It helps us spot when something’s off and take action to fix it.

- Learning and Improvement: Even developers and data scientists benefit from XAI. If they can see how an AI reaches its decisions, they can improve the model, correct errors, and make better decisions going forward. It’s like debugging a program: if you can’t see what’s going on inside, you can’t fix it.

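- Following the Rules: In many parts of the world, laws are starting to require that AI systems be transparent. For example, Europe’s GDPR gives people rights around significant decisions made solely by automated means, including access to “meaningful information about the logic involved,” which is often described as a “right to explanation.” In practice, this means that if a machine makes an important decision about you, you can ask how it was reached. Explainable AI helps companies stay compliant with these regulations.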

How do Explainable AI Methods Work?

- AI Makes a Decision: First, the AI does what it normally does: it looks at the input data and makes a decision. For example, you apply for a loan. The AI looks at your credit score, income, and spending habits and decides whether to approve or deny your application.

- XAI Looks Inside the AI’s Decision-Making: Now comes the magic of XAI. It tries to answer questions like:

- “Why was this decision made?”

- “What were the most important factors?”

- “Would the outcome change if some inputs were different?”

XAI tools and techniques analyze the decision and give you a breakdown in human-friendly terms.

- It Shows You What Mattered Most: XAI can show you which parts of the input influenced the decision the most. Let’s go back to the loan example. XAI might say: “Your income had a high impact on the decision, while your recent credit card usage had a moderate impact.” It’s like the AI giving you a report card: “Here’s what I looked at, and here’s how it affected the result.”
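To make the “report card” idea concrete, here is a minimal Python sketch. It assumes a hypothetical linear loan-scoring model: the feature names, weights, baseline applicant, and threshold below are invented for illustration. For a linear model, each feature’s contribution is simply its weight times how far the applicant’s value sits from the baseline, so the contributions add up to the score and can be ranked by size.

```python
# Hypothetical linear loan-scoring model: weights, baseline, and threshold
# are invented for illustration only.
WEIGHTS = {"income_k": 0.04, "credit_score": 0.01, "card_utilization": -2.0}
BASELINE = {"income_k": 50.0, "credit_score": 680.0, "card_utilization": 0.3}
THRESHOLD = 0.0  # score >= THRESHOLD means "approve"


def score(applicant: dict) -> float:
    """Score relative to the baseline applicant (the baseline scores 0)."""
    return sum(w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items())


def explain(applicant: dict) -> list[tuple[str, float]]:
    """Per-feature contributions, sorted by absolute impact (largest first)."""
    contributions = {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 30.0, "credit_score": 690.0, "card_utilization": 0.5}
decision = "approve" if score(applicant) >= THRESHOLD else "deny"
print(decision)
for feature, impact in explain(applicant):
    print(f"{feature}: {impact:+.2f}")
```

Here the low income drives the denial, card utilization hurts moderately, and the slightly above-baseline credit score helps a little. This direct read-out only works because the model is linear; black-box models need add-on methods such as the ones covered later.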

Two Ways XAI Can Work

- Built-in Explainability: Some AI models are simple enough to explain themselves (like decision trees or linear models). They are “transparent by design.”

- Add-on Explanations: For complex, black-box models (like deep learning), XAI adds a separate explanation layer after the decision is made.
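A decision tree is a good example of built-in explainability: the path a prediction takes through the tree is itself the explanation. The sketch below uses a hand-built, hypothetical tree (the thresholds are illustrative, not learned from data) and returns both a decision and the rules it followed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A decision-tree node: either an internal split or a leaf with a label."""
    feature: Optional[str] = None   # feature tested at an internal node
    threshold: float = 0.0          # go left if value < threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None     # set only on leaves


def predict_with_path(node: Node, x: dict) -> tuple[str, list[str]]:
    """Return the prediction plus the human-readable rules that led to it."""
    path = []
    while node.label is None:
        value = x[node.feature]
        if value < node.threshold:
            path.append(f"{node.feature} = {value} < {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} >= {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical hand-built tree; thresholds are illustrative, not learned.
tree = Node(
    feature="credit_score", threshold=650,
    left=Node(label="deny"),
    right=Node(
        feature="income", threshold=30_000,
        left=Node(label="deny"),
        right=Node(label="approve"),
    ),
)

label, rules = predict_with_path(tree, {"credit_score": 700, "income": 25_000})
print(label)  # deny
print(" AND ".join(rules))
```

No separate explanation step is needed: the rules collected along the way (a good credit score, but income below the threshold) are exactly why the model said “deny.”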

Popular Explainable AI Methods

You might be wondering how XAI actually produces these explanations. The methods below act like translators: they take the AI’s complex math and turn it into insights that make sense to humans.

LIME (Local Interpretable Model-agnostic Explanations)

Think of LIME like a detective who zooms in on one decision at a time and figures out what influenced it the most.

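- How it works: LIME slightly changes your input (like tweaking your income or age) many times and records how the prediction changes. It then fits a simple, interpretable model, typically a linear one, to those nearby samples, giving more weight to the samples closest to your original input. That simple model tells you which inputs made the biggest difference.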

- Why it’s useful: It gives you a simple explanation for one decision, even from a complex model.

- Example: LIME might say, “The loan was denied mainly because your income is below $30,000 and your credit score is under 650.”
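The LIME recipe can be sketched from scratch in plain Python. This is a simplified illustration of the idea, not the `lime` package’s API: `black_box` is a hypothetical opaque model, perturbations are Gaussian offsets scaled per feature, proximity weights use an exponential kernel, and `solve` is a small helper for the weighted least-squares fit. The resulting coefficients play the role of LIME’s local explanation.

```python
import math
import random


def black_box(income_k: float, credit: float) -> float:
    """Hypothetical opaque model returning an approval probability."""
    z = 0.3 * (income_k - 35) + 0.01 * (credit - 640)
    return 1 / (1 + math.exp(-z))


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small linear system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(instance, scales, n_samples=2000, kernel_width=1.0, seed=0):
    """LIME-style local surrogate: weighted linear fit on perturbed samples.

    Returns one coefficient per feature (probability change per scale unit).
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb each feature by a standardized offset times its scale.
        z = [rng.gauss(0, 1) for _ in instance]
        point = [v + dz * s for v, dz, s in zip(instance, z, scales)]
        rows.append([1.0] + z)  # intercept + standardized offsets
        targets.append(black_box(*point))
        dist2 = sum(dz * dz for dz in z)
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # proximity kernel
    k = len(rows[0])
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights)) for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept


applicant = [28.0, 660.0]  # income in $k, credit score
coefs = lime_explain(applicant, scales=[5.0, 20.0])
for name, c in zip(["income_k", "credit_score"], coefs):
    print(f"{name}: {c:+.3f}")
```

Income gets the larger coefficient, meaning that near this applicant, small changes in income move the approval probability far more than small changes in credit score. The real LIME implementation adds options such as feature selection and discretization that this sketch omits.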

SHAP (SHapley Additive exPlanations)

SHAP is like a team coach who figures out how much each player (input) contributed to the win (or loss).

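- How it works: It assigns a “score” to each feature (like income, age, etc.) based on how much it helped or hurt the decision. The scores come from Shapley values in cooperative game theory, which split a shared “payout” fairly among the players, and they add up exactly to the difference between this prediction and the model’s baseline (average) prediction.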

- Why it’s useful: It gives a clear, math-backed explanation of every factor’s role in the decision.

- Example: SHAP might say, “Your high savings helped your approval, but your debt-to-income ratio lowered your chances.”
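Shapley values can be computed exactly by brute force when there are only a few features. The sketch below does that in plain Python rather than through the `shap` library; the loan model is hypothetical, and it treats a feature as “missing” by replacing it with a single baseline value, which is one of several possible conventions. Because every coalition of features is enumerated, the cost grows as 2^n, which is why practical tools rely on approximations or model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x: dict, baseline: dict) -> dict:
    """Exact Shapley values; features outside a coalition take baseline values."""
    features = list(x)
    n = len(features)

    def value(coalition) -> float:
        # Evaluate the model with only the coalition's features "switched on".
        point = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return model(point)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude f.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def loan_model(p: dict) -> float:
    """Hypothetical loan score with an interaction term (illustrative only)."""
    return 0.5 * p["savings"] - 0.8 * p["dti"] + 0.2 * p["savings"] * p["dti"]


applicant = {"savings": 3.0, "dti": 2.0}
baseline = {"savings": 1.0, "dti": 1.0}  # e.g. an "average applicant"
phi = shapley_values(loan_model, applicant, baseline)
print(phi)
print(sum(phi.values()), loan_model(applicant) - loan_model(baseline))
```

With this model and baseline, savings receives about +1.6 and the debt-to-income ratio (`dti`) about -0.4, and the two add up to the 1.2 gap between the applicant’s score and the baseline score. That additivity is what makes SHAP scores “balanced.”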

Saliency Maps (for images)

Imagine using a heat map to show which parts of an image the AI was “looking at” the most.

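- How it works: It highlights the pixels in an image that most influenced the AI’s prediction, typically by measuring how sensitive the model’s output is to each pixel (the gradient of the output with respect to that pixel).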

- Why it’s useful: You can see what part of a medical scan or photo led to a certain diagnosis or label.

- Example: A saliency map might show that the AI focused on a shadowy spot in a lung X-ray when predicting a disease.
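A basic gradient saliency map measures how much the model’s score changes when each pixel changes. Deep-learning frameworks compute that gradient with automatic differentiation; to stay dependency-free, the sketch below estimates it with central finite differences on a hypothetical 3×3 “image” and a made-up linear-plus-sigmoid scorer.

```python
import math


def model_score(image: list[list[float]]) -> float:
    """Hypothetical tiny 'classifier' over a 3x3 grayscale image."""
    weights = [
        [0.1, 0.0, 0.1],
        [0.0, 2.0, 0.3],
        [0.1, 0.0, 0.1],
    ]
    z = sum(w * p for wr, pr in zip(weights, image) for w, p in zip(wr, pr)) - 1.0
    return 1 / (1 + math.exp(-z))


def saliency_map(f, image, eps=1e-4):
    """|d score / d pixel| for each pixel, estimated with central differences."""
    sal = []
    for i, row in enumerate(image):
        sal_row = []
        for j, _ in enumerate(row):
            up = [r[:] for r in image]
            down = [r[:] for r in image]
            up[i][j] += eps
            down[i][j] -= eps
            sal_row.append(abs(f(up) - f(down)) / (2 * eps))
        sal.append(sal_row)
    return sal


image = [[0.2, 0.1, 0.2], [0.1, 0.9, 0.4], [0.2, 0.1, 0.2]]
sal = saliency_map(model_score, image)
for row in sal:
    print(" ".join(f"{v:.3f}" for v in row))
```

The center pixel, which carries the largest weight, lights up most, while pixels the model ignores get zero saliency. Rendered as a heat map over the original image, this grid is the saliency map.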

Counterfactual Explanations

These are like “what if” scenarios.

- How it works: It shows what minimal changes would lead to a different decision.

- Why it’s useful: It helps people understand what they can change to get a better outcome.

- Example: “You would have been approved for the loan if your income were $2,000 higher.”
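A counterfactual can be found by searching for the smallest change that flips the decision. This minimal sketch assumes a hypothetical rule-based loan model and searches a single actionable feature in fixed steps; dedicated counterfactual methods typically search over several features at once and add constraints so the suggested changes stay realistic.

```python
from typing import Callable, Optional


def approve(applicant: dict) -> bool:
    """Hypothetical rule-based loan model (thresholds invented for illustration)."""
    return applicant["income"] + 100 * (applicant["credit_score"] - 600) >= 50_000


def counterfactual_increase(
    model: Callable[[dict], bool],
    applicant: dict,
    feature: str,
    step: int,
    max_steps: int = 1000,
) -> Optional[int]:
    """Smallest increase to `feature` (in multiples of `step`) that flips a denial."""
    if model(applicant):
        return 0  # already approved; no change needed
    for k in range(1, max_steps + 1):
        candidate = dict(applicant)
        candidate[feature] += k * step
        if model(candidate):
            return k * step
    return None  # no counterfactual found within the search range


applicant = {"income": 43_000, "credit_score": 650}
delta = counterfactual_increase(approve, applicant, "income", step=500)
print(f"You would have been approved if your income were ${delta:,} higher.")
```

With these invented numbers, the search reproduces the example above: the applicant would be approved with $2,000 more income. Searching `credit_score` in steps of 1 instead would suggest a 20-point increase.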

Feature Importance Plots

These are simple and visual.

- How it works: It ranks which features (like income, age, or experience) were most important across many predictions.

- Why it’s useful: You get a high-level overview of what the model cares about most.

- Example: For a hiring model, a plot might show:

- Years of experience (most important)

- Education level

- Number of projects completed
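One common, model-agnostic way to compute the numbers behind a feature importance plot is permutation importance: shuffle one feature across all rows and measure how much the model’s accuracy drops. The sketch below uses a hypothetical hiring model and synthetic candidates, and prints the sorted scores a bar chart would display instead of drawing the chart.

```python
import random


def hiring_model(c: dict) -> int:
    """Hypothetical screening model: 1 = shortlist. Ignores 'projects' entirely."""
    return int(c["experience"] * 2 + c["education"] >= 10)


def accuracy(model, rows, labels) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, features, n_repeats=10, seed=0):
    """Mean accuracy drop when one feature's column is shuffled across rows."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    scores = {}
    for f in features:
        drops = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)
            shuffled = [{**r, f: v} for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        scores[f] = sum(drops) / n_repeats
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


# Synthetic candidates; labels come from the model itself, so base accuracy is 1.0.
data_rng = random.Random(42)
rows = [
    {"experience": data_rng.randint(0, 8), "education": data_rng.randint(0, 4),
     "projects": data_rng.randint(0, 20)}
    for _ in range(300)
]
labels = [hiring_model(r) for r in rows]
importance = permutation_importance(
    hiring_model, rows, labels, ["experience", "education", "projects"])
for feature, drop in importance.items():
    print(f"{feature}: {drop:.3f}")
```

Because this model never looks at the number of projects, shuffling that column changes nothing and its importance is exactly zero. Other tools derive importance differently, for example from tree split statistics or averaged SHAP values, so rankings can differ between methods.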

XAI and QA

You might have already seen AI at work in QA: generating test cases from plain English or from user stories and requirements, locating UI elements smartly, executing tests more reliably to avoid flaky runs, generating realistic test data, providing intelligent analytics, and more. While all this sounds great in theory, in practice you might find it hard to figure out why a test automation tool did one thing when you expected it to do something else entirely. This again brings us to the need for ways to explain the thought process of these AI and ML models.

While many test automation tools now offer AI capabilities, very few explain their AI engine’s decisions. One tool that does try to explain its AI engine’s behavior is testRigor.

testRigor is a generative AI-based test automation tool that simplifies test creation, execution, and maintenance. You can write test cases in plain English to test functionality, visuals, end-to-end scenarios, and even APIs, and these tests can run across platforms and browsers. You can also test many modern elements that traditional test automation tools might struggle to automate, such as graphs, images, chatbots, LLMs, Flutter apps, and mainframes. To automate these, testRigor relies on AI features of its own, and that is where the explainability of its AI engine comes into the picture.

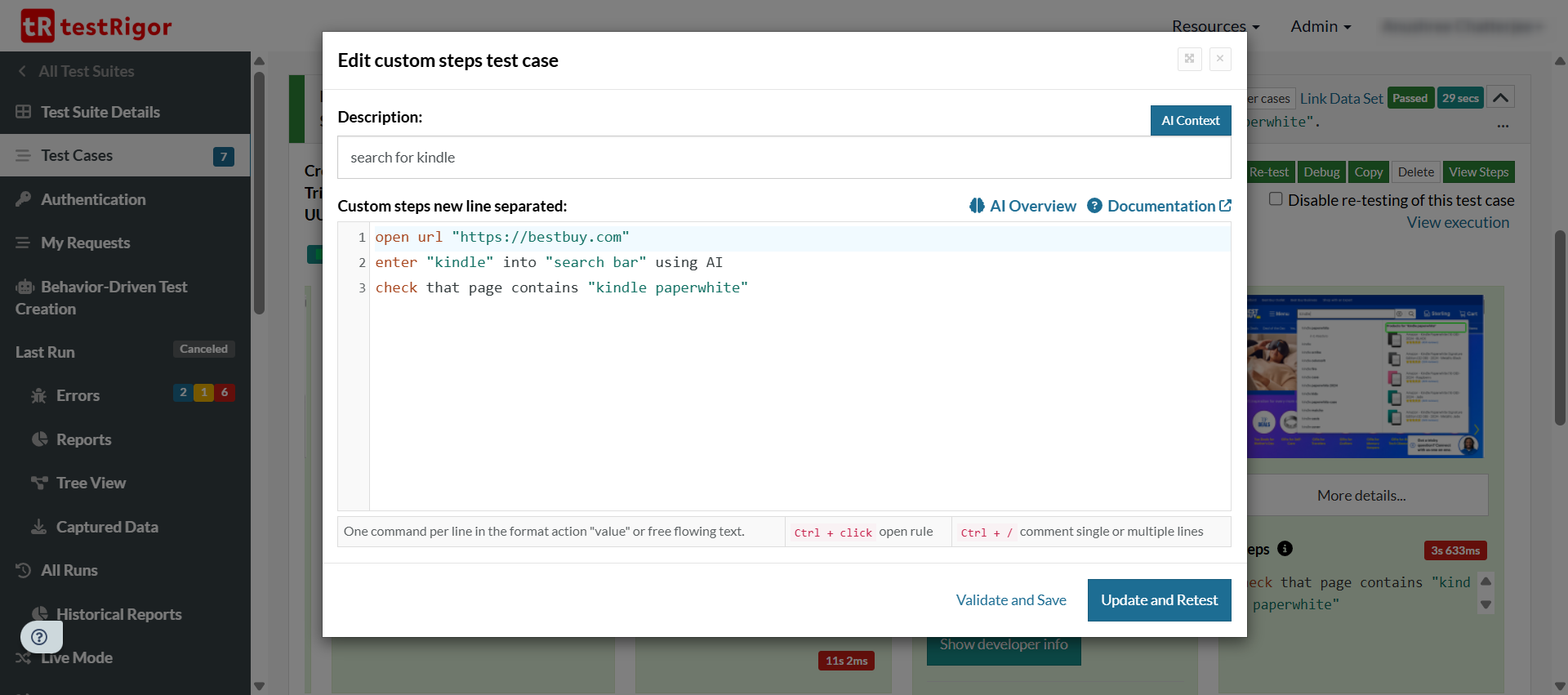

Let’s consider an example: testRigor can understand context when you append “using ai” to a command. For example, if you write this command:

enter “kindle” into “search bar” using ai

testRigor will go through the web page, find the search bar, and enter the word “kindle” into it.

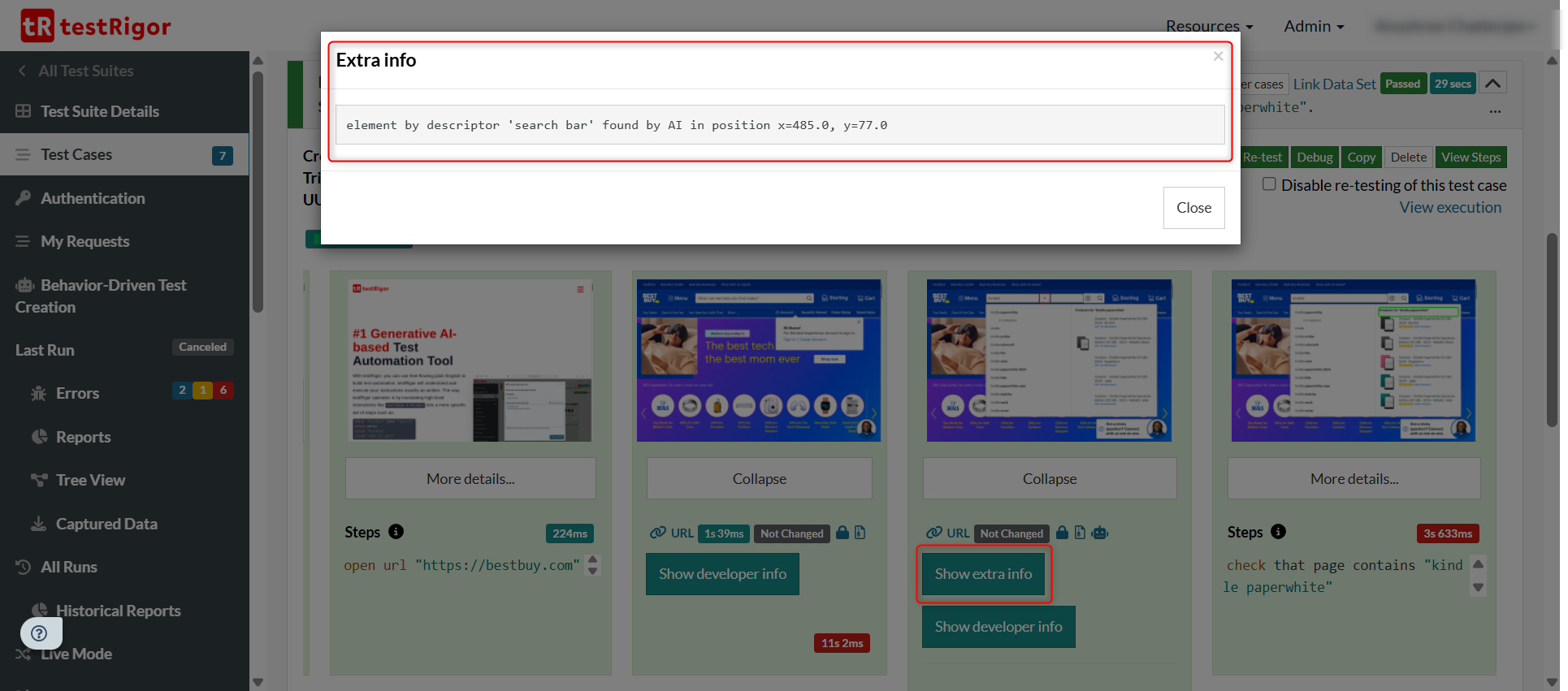

But what did the AI engine do when it encountered that command? To help understand this, testRigor offers a simple overview of what the AI engine did at that step. Here’s a preview of this.

This is a simple demonstration of how explainability can help with the “black box” behavior of AI models. Here’s another example that shows how explainability can help all stakeholders trust AI more.

Conclusion

XAI is a necessary step forward in a world where AI increasingly drives decision-making. It helps developers, users, and businesses trust AI and fix it when something goes wrong.

While XAI has made significant strides, the field is still maturing. The future points towards more intrinsically interpretable models, real-time and actionable explanations, standardized evaluation, and a deeper understanding of how humans interact with and trust AI systems through explanations. The ultimate goal is to create AI that is not only powerful but also transparent, accountable, and aligned with human values.

