Test Manager Cheat Sheet

A test manager shoulders the responsibility of contributing to QA operations in an organization. They work closely with development teams, QA teams, and project managers to coordinate testing efforts and ensure that the software is thoroughly tested before it is released to users. Every test manager needs to be well-versed in the processes and concepts of the QA world, so we have created an easy-to-digest compilation that will come in handy if you are looking to brush up on your QA vocabulary.

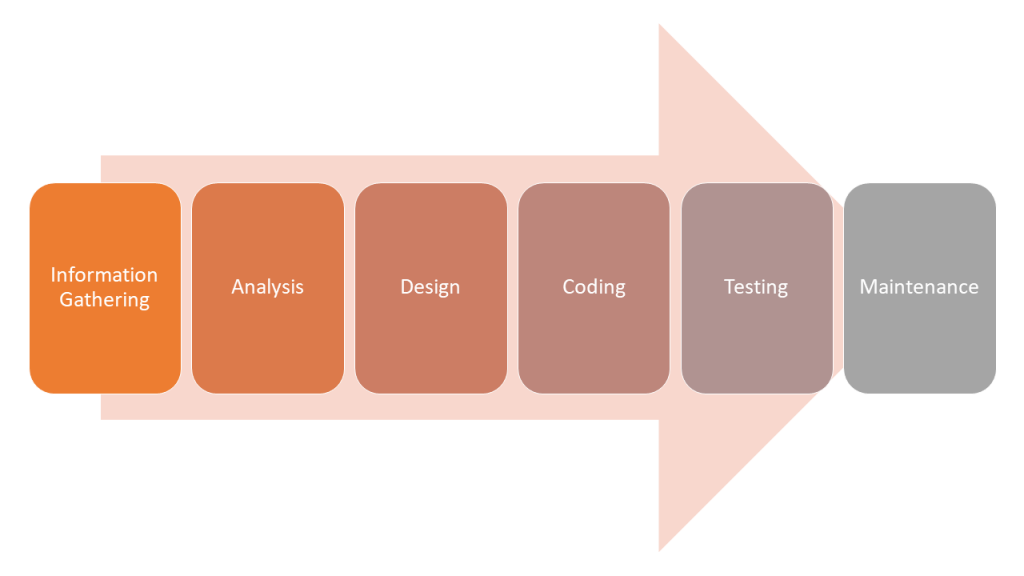

Software development lifecycle (SDLC)

Explore the SDLC further in our comprehensive blog article – What is SDLC? The Blueprint of Software Success

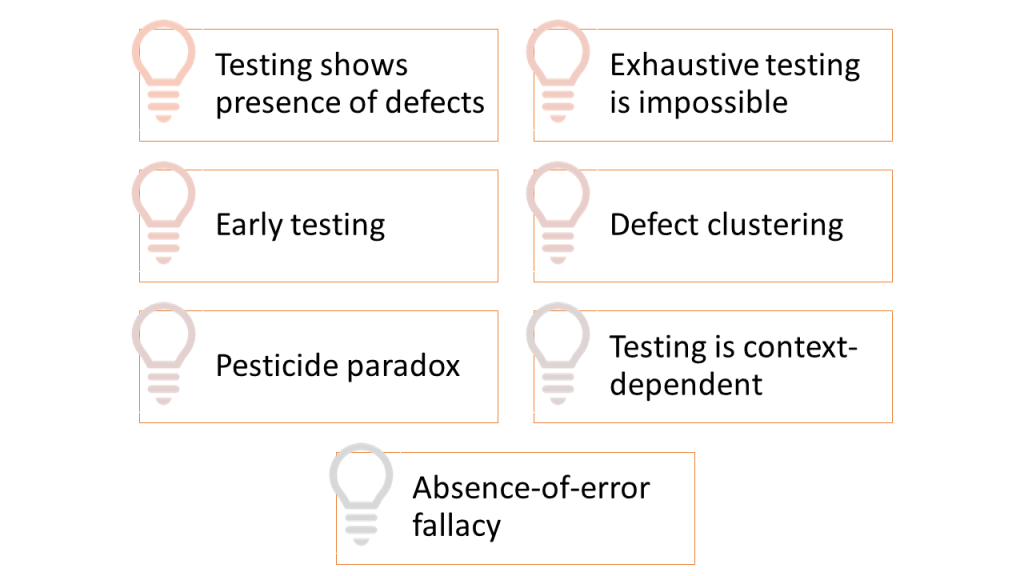

Principles of testing

| Principle | Description |
|---|---|
| Testing shows the presence of defects | Testing can unearth defects but cannot guarantee a defect-free product |
| Exhaustive testing is impossible | It is impractical to test every combination of inputs and conditions. Instead, focus on the critical areas and workflows that matter most to the business |
| Early testing | Defects found early in the cycle are easier and less costly to fix |
| Defect clustering | A small number of modules or components typically contain the majority of defects. Focusing testing efforts on these areas can yield significant defect detection |
| Pesticide paradox | If the same tests are repeated over time, they become less effective at finding new defects, so tests need regular review and updating |
| Testing is context-dependent | The testing approach and techniques used should be tailored to the specific context of the project, including its goals, requirements, and constraints |
| Absence-of-errors fallacy | Finding and fixing defects does not by itself guarantee success. Software with no known defects can still fail if it does not meet users' needs and expectations |
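Defect clustering is easy to check against your own defect log. The sketch below assumes a hypothetical log in which each entry simply records the module where a defect was found (the module names and counts are made up for illustration); it reports the modules with the most defects and the share of all defects they account for.

```python
from collections import Counter

# Hypothetical defect log: each entry is the module in which one defect was found.
defects = (
    ["checkout"] * 18 + ["payments"] * 12 + ["search"] * 4
    + ["profile"] * 3 + ["settings"] * 2 + ["help"] * 1
)

def top_modules_share(defect_modules, top_n):
    """Return the top_n modules by defect count and the fraction of all defects they hold."""
    counts = Counter(defect_modules)
    top = counts.most_common(top_n)
    share = sum(count for _, count in top) / len(defect_modules)
    return top, share

top, share = top_modules_share(defects, top_n=2)
print(top)             # [('checkout', 18), ('payments', 12)]
print(f"{share:.0%}")  # 75%
```

Here two of six modules hold three quarters of the defects, which is the kind of signal that justifies concentrating test effort on them.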

Why do we test?

We test the product to:

- Identify defects in the product

- Make sure that the product meets customer requirements

- Ensure that the product is fit for its intended purpose

QA vs. QC

| QA | QC |

|---|---|


Find out more about the difference between QA and QC in our detailed blog post – Quality Assurance vs. Quality Control: Know The Difference.

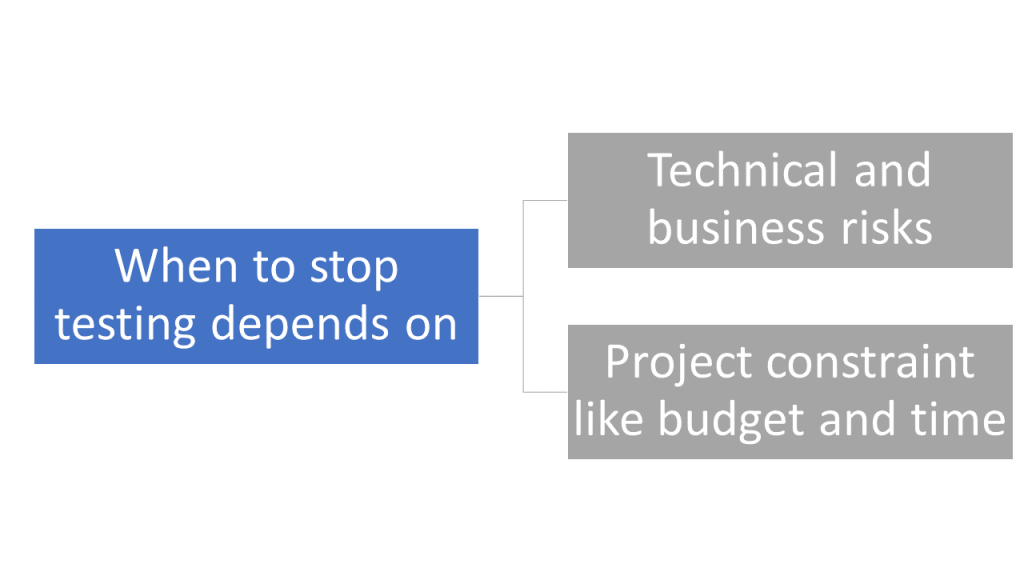

How much testing is enough?

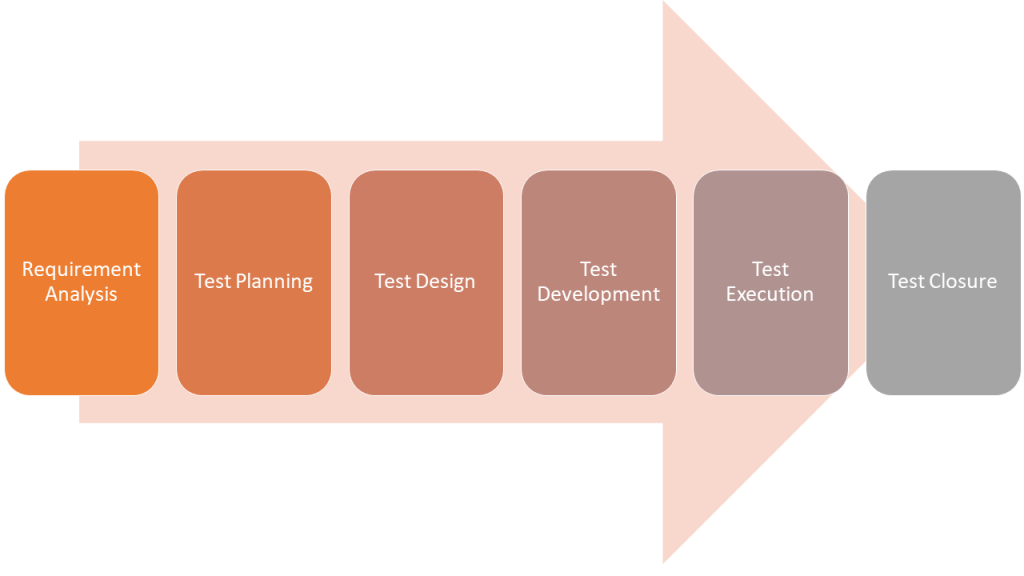

Software testing lifecycle (STLC)

- Requirement Analysis

- Test Planning

- Test Design

- Test Development

- Test Execution

- Test Closure

Levels of testing

- Unit tests

- Integration tests

- End-to-end tests

Read about STLC in detail: STLC guide.

Types of testing

Functional testing

- System testing

- Acceptance testing

- Smoke testing

- Regression testing

- UI testing

- UX testing

- Cross-platform testing

- Usability testing

Non-functional testing

- Performance testing

- Load testing

- Stress testing

- Security testing

- Accessibility testing

- Globalization testing

Static testing

- Involves reviewing or analyzing software artifacts, such as requirements, design documents, and code, without executing them, to identify defects, inconsistencies, and potential issues

- Common techniques include:

  - Code reviews

  - Design reviews

  - Peer reviews

  - Walkthroughs

Dynamic testing

- Involves executing the software to assess how it behaves at runtime and to identify defects

- Common techniques include:

  - System testing

  - Acceptance testing

  - Performance testing

  - Security testing

  - Usability testing

  - Load testing

  - Regression testing

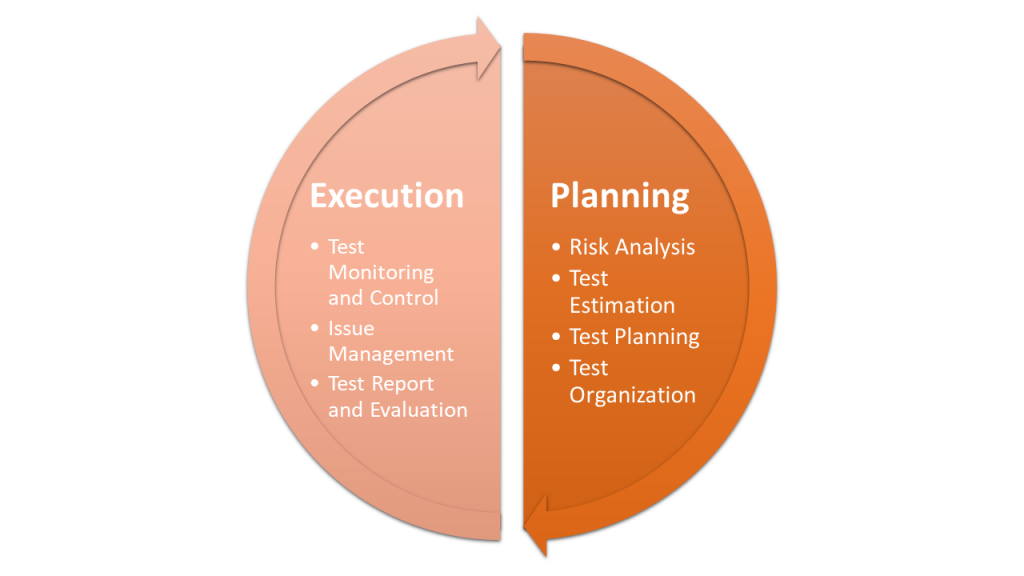

Test management phases

- Risk Analysis

- Test Estimation

- Test Planning

- Test Organization

- Test Monitoring and Control

- Issue Management

- Test Report and Evaluation

Test Strategy

- A strategic overview of how testing will be conducted that sets the direction for the testing effort

- Covers the types of testing to be performed, the tools to be used, and the scope of testing

- May be part of a test plan or a stand-alone document

- Differs from a test plan, which is more detailed and also covers the test cases to be used, the testing schedule, milestones, resource allocation, the test environment, and the defect reporting process

- Types of test strategies include:

  - Analytical

  - Model-based

  - Methodical

  - Compliance-based

  - Regression-averse

  - Consultative

  - Reactive

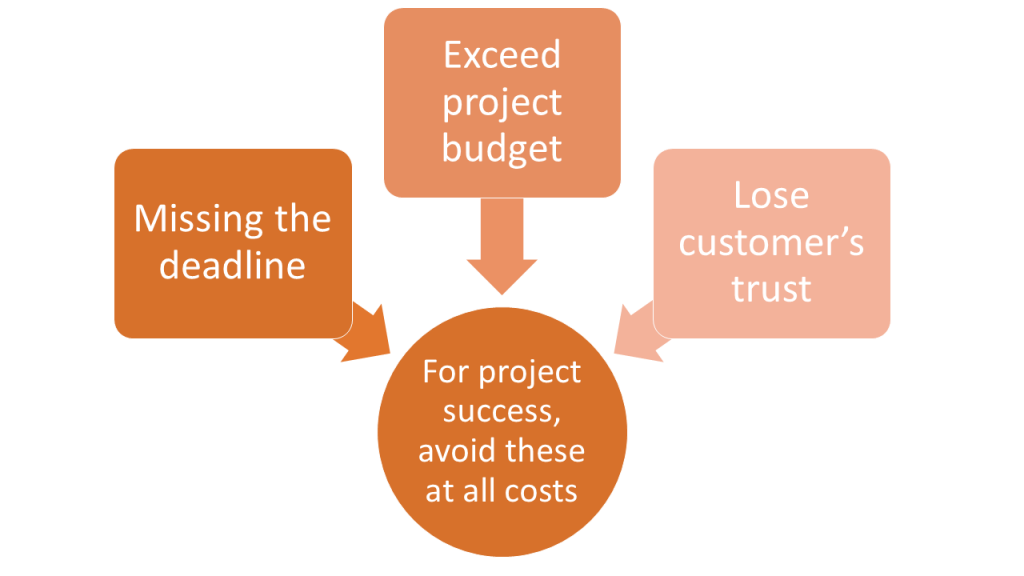

Dealing with Risks

Risks can occur in:

- The project

  - Risks that can impact project progress

- The product

  - Risks that could lead to the product not satisfying the requirements, customers, or stakeholders

Failures can occur due to:

- Defects in the system

- Environmental conditions

- Malicious activities

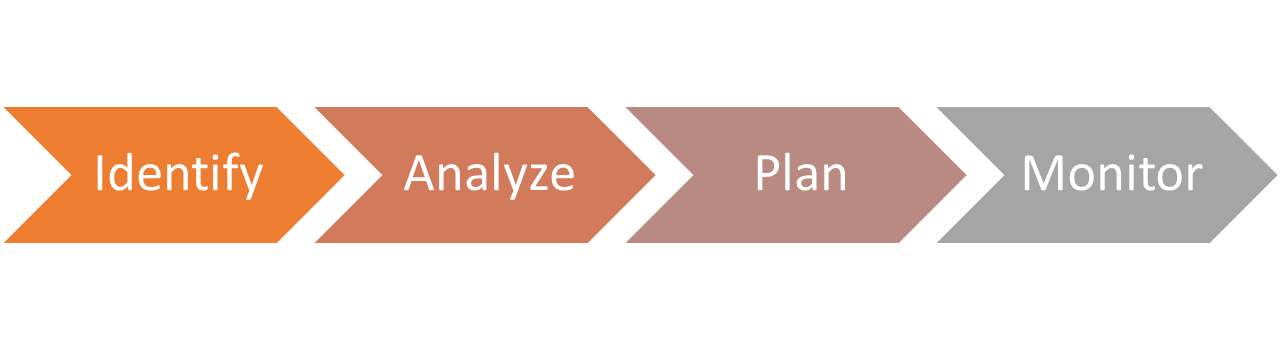

Steps to manage risks

Risk mitigation strategies

These strategies aim to address risks before they negatively impact the testing process and project outcomes. They are as follows:

- Risk avoidance: Avoiding the activities that give rise to the risk. This measure is usually reserved for high-stakes risks

- Risk acceptance: Allowing low-stakes risks, which are usually foreseen during the planning stage, to remain because their impact is acceptable

- Risk reduction or control: Making changes in the plan to avoid or minimize the risk and its consequences

- Risk transfer: Delegating the risk handling to another party

Test Reporting

Types of test reports

- Test incident report

- Test summary report

- Test cycle report

- Traceability matrix
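A traceability matrix maps each requirement to the test cases that cover it, which makes gaps in coverage visible at a glance. The following is a minimal sketch, assuming requirements and test cases are identified by hypothetical IDs such as `REQ-1` and `TC-101`; in practice this data would come from your test management tool.

```python
# Hypothetical requirement IDs and the test cases that claim to cover them.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
    "TC-103": ["REQ-4"],
}

def build_traceability_matrix(reqs, tests):
    """Map each requirement to the list of test cases that cover it."""
    matrix = {req: [] for req in reqs}
    for tc_id, covered in tests.items():
        for req in covered:
            if req in matrix:  # ignore references to unknown requirements
                matrix[req].append(tc_id)
    return matrix

matrix = build_traceability_matrix(requirements, test_cases)
for req, tcs in matrix.items():
    print(f"{req}: {', '.join(tcs) if tcs else 'NOT COVERED'}")

uncovered = [req for req, tcs in matrix.items() if not tcs]
print("Uncovered:", uncovered)  # Uncovered: ['REQ-3']
```

Any requirement listed as uncovered is a candidate for new test cases before the report is shared.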

Entry and exit criteria

- Entry Criteria: These are conditions that must be fulfilled before testing activities can commence

- Exit Criteria: These are the conditions that need to be met before testing can be considered complete and the software can progress to the next phase or be released
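Exit criteria work best when they are measurable, so they can be checked mechanically at the end of each cycle. The sketch below assumes three hypothetical criteria (all planned tests executed, a pass rate of at least 95%, and no open critical defects); the actual criteria and thresholds should come from your own test plan.

```python
from dataclasses import dataclass

@dataclass
class CycleStatus:
    executed: int       # test cases run in this cycle
    passed: int         # test cases that passed
    planned: int        # test cases planned for this cycle
    open_critical: int  # unresolved critical/blocker defects

# Hypothetical exit criteria; real thresholds come from the test plan.
MIN_EXECUTION_RATE = 1.0  # every planned test must be executed
MIN_PASS_RATE = 0.95      # at least 95% of executed tests pass
MAX_OPEN_CRITICAL = 0     # no open critical defects

def unmet_exit_criteria(s: CycleStatus) -> list[str]:
    """Return a description of each exit criterion that is not yet met."""
    unmet = []
    if s.planned and s.executed / s.planned < MIN_EXECUTION_RATE:
        unmet.append(f"only {s.executed}/{s.planned} planned tests executed")
    if s.executed and s.passed / s.executed < MIN_PASS_RATE:
        unmet.append(f"pass rate {s.passed / s.executed:.1%} is below {MIN_PASS_RATE:.0%}")
    if s.open_critical > MAX_OPEN_CRITICAL:
        unmet.append(f"{s.open_critical} critical defect(s) still open")
    return unmet

status = CycleStatus(executed=200, passed=194, planned=200, open_critical=1)
print(unmet_exit_criteria(status) or "Exit criteria met")
```

Returning the list of unmet criteria, rather than a single yes/no, gives you the exact reasons to include in the test report.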

Pointers to create a good test report

Responsibilities of a test manager

Test Planning and Strategy Development

A Test Manager is responsible for developing comprehensive test strategies and detailed test plans that align with project goals and ensure full coverage of the software under test. This includes defining test objectives, selecting testing methodologies, and setting up timelines.

Resource Management

They efficiently allocate resources, ensuring the right team members with the appropriate skills are available to handle the required testing tasks. This includes managing workloads, scheduling, and optimizing resource use.

Test Run Monitoring and Reporting

Test Managers oversee test execution, tracking progress, identifying issues, and ensuring that tests are completed as planned. They generate reports to track metrics, test results, and defects, providing insight into the software’s quality and readiness.

Stakeholder Communication and Management

They maintain regular communication with stakeholders, including development teams, product owners, and business leaders, to provide updates on test progress, risks, issues, and results. This ensures transparency and informed decision-making.

Risk Management

The Test Manager identifies, assesses, and mitigates risks throughout the testing process, ensuring that critical issues are addressed proactively. This includes creating risk-based testing strategies to prioritize high-risk areas.
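A common way to prioritize high-risk areas is to rate each area's likelihood of failure and the impact of that failure, then rank areas by the product of the two. The sketch below assumes hypothetical product areas and a 1-5 rating scale; the ratings themselves would come from risk analysis with stakeholders.

```python
# Hypothetical product areas with likelihood and impact ratings on a 1-5 scale.
areas = [
    {"area": "Payment processing", "likelihood": 4, "impact": 5},
    {"area": "User registration", "likelihood": 3, "impact": 4},
    {"area": "Report export", "likelihood": 2, "impact": 2},
    {"area": "Search", "likelihood": 3, "impact": 3},
]

def prioritize_by_risk(items):
    """Attach a risk score (likelihood x impact) and sort highest-risk first."""
    scored = [dict(item, score=item["likelihood"] * item["impact"]) for item in items]
    return sorted(scored, key=lambda item: item["score"], reverse=True)

for item in prioritize_by_risk(areas):
    print(f'{item["score"]:>2}  {item["area"]}')
```

Testing effort then follows the ranking: here payment processing (score 20) gets the deepest coverage, while report export (score 4) may only need a light check.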

Procedure Improvement

Test Managers are responsible for continuously improving testing processes and procedures to increase efficiency and effectiveness. This involves evaluating current practices, incorporating new tools, and implementing lessons learned from previous projects.

Training and Skill Development

A Test Manager ensures that the testing team is well-trained and stays updated with the latest tools, technologies, and methodologies. This includes organizing training sessions, mentoring, and providing opportunities for skill enhancement to improve team performance.

Test Metrics

- Defect Density

- Defect Removal Efficiency (DRE)

- Test Coverage

- Defect Age

- Priority

- Severity
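Several of the metrics listed above reduce to simple formulas, while priority and severity are classifications assigned to each defect. The sketch below uses common textbook definitions as assumptions: defect density per thousand lines of code (KLOC), DRE as the percentage of defects caught before release, test coverage expressed as requirements coverage, and defect age measured in days. Your organization may define these differently.

```python
from datetime import date

def defect_density(defects: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC); size can also be measured in function points."""
    return defects / size_kloc

def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Percentage of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    return 100 * found_before_release / total

def requirements_coverage(covered: int, total: int) -> float:
    """Percentage of requirements exercised by at least one test (one common form of test coverage)."""
    return 100 * covered / total

def defect_age_days(opened: date, closed: date) -> int:
    """Defect age measured as days between reporting and closure."""
    return (closed - opened).days

print(defect_density(30, 15))             # 2.0 defects per KLOC
print(defect_removal_efficiency(90, 10))  # 90.0
print(requirements_coverage(45, 50))      # 90.0
print(defect_age_days(date(2024, 3, 1), date(2024, 3, 15)))  # 14
```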

Explore the key test metrics that Test Managers rely on by reading this blog – Metrics for QA Manager.

Tips for choosing tools

Whether for test management, test automation, or issue tracking, the right frameworks and tools make your activities more effective.

When choosing these tools, consider:

- Ease of integration with existing systems

- Costs, including licenses and any additional skilled staff required

- Ease of learning and onboarding

- Suitability for the task (whether it offers the required functionalities)
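When several tools look viable, a weighted scoring matrix turns these considerations into a comparable number per tool. The sketch below is illustrative only: the tool names, weights, and 1-5 ratings are hypothetical, and each team should set weights that reflect its own priorities.

```python
# Hypothetical weights for the four criteria above (they sum to 1.0).
WEIGHTS = {
    "integration": 0.30,
    "cost": 0.25,            # rated so that 5 = cheapest overall
    "learning_curve": 0.20,  # rated so that 5 = easiest to learn
    "suitability": 0.25,
}

# Hypothetical ratings on a 1-5 scale (5 = best) gathered during a tool evaluation.
candidates = {
    "Tool A": {"integration": 4, "cost": 3, "learning_curve": 5, "suitability": 4},
    "Tool B": {"integration": 5, "cost": 2, "learning_curve": 3, "suitability": 5},
}

def weighted_score(ratings: dict[str, int]) -> float:
    """Combine per-criterion ratings into a single weighted score."""
    return sum(WEIGHTS[criterion] * rating for criterion, rating in ratings.items())

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

The score is a decision aid rather than a verdict; a tool that fails a must-have requirement should be excluded regardless of its total.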

Streamline automation testing using testRigor

In the hunt for a suitable automation testing tool, a test manager comes across many choices. Automation testing can seem like a double-edged sword with most tools in the market. Luckily, that is not the case with testRigor. This powerful test automation tool uses AI to make test creation, execution, and maintenance ultra-smooth and easy.

There are many exciting features of testRigor. Let’s go through a few of them.

- Cloud-hosted: testRigor eliminates the need for companies to invest in setting up and maintaining their own test automation infrastructure and device cloud. This translates to significant savings in time, effort, and cost. Once teams are signed in and subscribed, they can start testing immediately with almost no learning curve.

- Free from programming languages: With testRigor, you don't need to know a programming language, because test scripts are written in parsed plain English. This is a major advantage for manual testers, which is why testRigor is an automation testing tool built for manual testers: they can create and execute test scripts three times faster than with other tools. Also, any stakeholder can add or update the natural-language test scripts, which are easy to read and understand.

- AI-based self-healing: Using Vision AI and adaptation with specification changes for rules and single commands, testRigor can look on the screen for an alternative way of doing what was intended instead of failing. This will allow the test script to adapt quickly to new changes in your application. Read more about AI-based self-healing.

- Cross-device Testing: testRigor supports simultaneous execution of test scripts across multiple browsers and devices in different sessions. Learn more in Cross-platform Testing: Web and Mobile in One Test.

- A single tool for all testing needs: You can write test cases across platforms: web, mobile (hybrid, native), API, desktop apps and browsers using the same tool in plain English statements.

- Test AI features: This is the era of LLMs, and with testRigor you can even test LLM-based features such as chatbots, user sentiment (positive/negative), true-or-false statements, etc. Read: AI features testing and security testing the LLMs.

- Integrations: testRigor offers built-in integrations with almost all popular CI/CD tools like Jenkins and CircleCI, test management systems like Zephyr and TestRail, defect tracking solutions like Jira and Pivotal Tracker, infrastructure providers like AWS and Azure, and communication tools like Slack and Microsoft Teams.

Conclusion

The role of a Test Manager is vital in ensuring the success of a software development lifecycle through strategic planning, effective team management, and rigorous testing practices. By focusing on key areas such as test planning, resource allocation, risk management, and leveraging metrics for continuous improvement, a Test Manager can ensure high product quality and meet project timelines. Clear communication and collaboration with cross-functional teams are also critical to address issues early and optimize processes.

Staying updated with emerging technologies like AI and automation enhances testing efficiency and keeps the testing framework scalable. Ultimately, a knowledgeable and efficient Test Manager not only drives quality but also enables a proactive and high-performing QA culture.
